Lehigh University

14 Mar 2024

For decades intersecting D-branes and O-planes have been playing a very important role in string phenomenology in the context of particle physics model building and in the context of flux compactifications. The corresponding supergravity equations are hard to solve so generically solutions only exist in a so-called smeared limit where the delta function sources are replaced by constants. We are showing here that supergravity solutions for two perpendicularly intersecting localized sources in flat space do not exist for a generic diagonal metric Ansatz. We show this for two intersecting sources with p=1,2,3,4,5,6 spatial dimensions that preserve 8 supercharges, and we allow for fully generic fluxes.

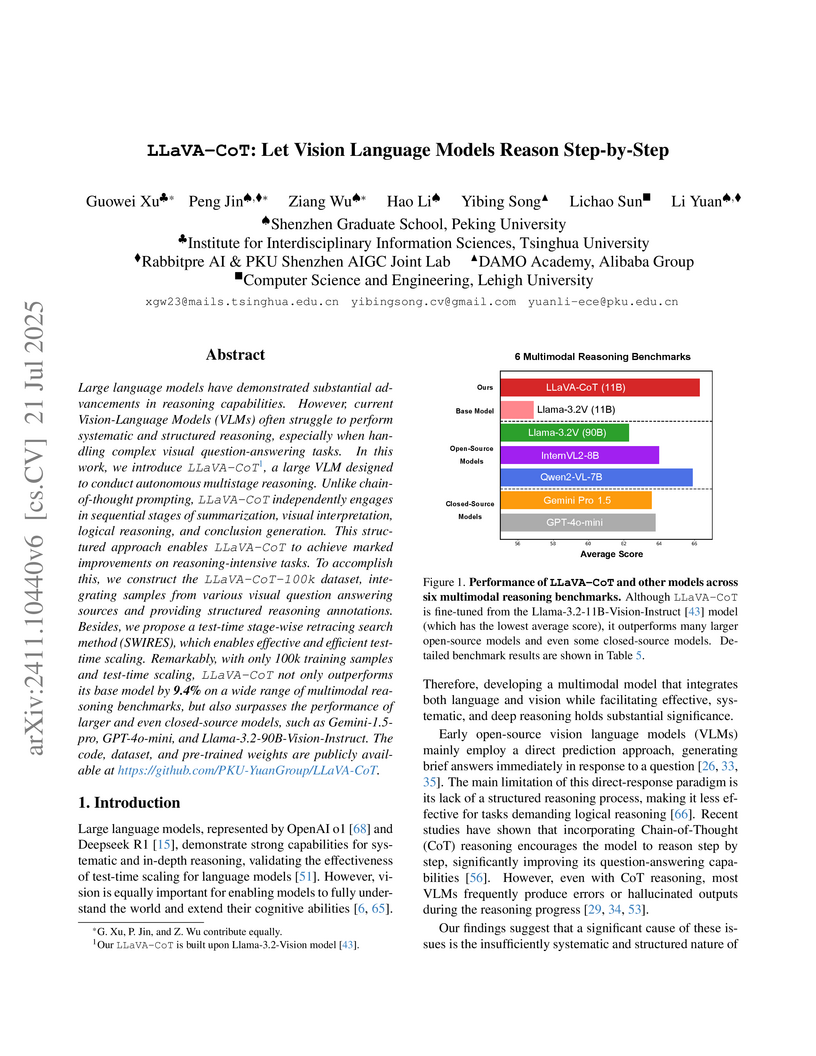

LLaVA-CoT, from a collaboration including Peking University and Tsinghua University, introduces a Vision-Language Model capable of autonomous multistage reasoning, enhanced by a novel test-time self-correction mechanism named Stage-wise Retracing Search (SWIRES). It improves reasoning-intensive tasks by 5.8% over its base model and demonstrates competitive performance against larger multimodal models.

State-of-the-art Vision-Language-Action (VLA) models, despite reporting high success on the standard LIBERO benchmark, exhibit a severe lack of generalization. The LIBERO-PRO benchmark introduces systematic perturbations, revealing that these models rely on rote memorization and their performance collapses to near 0% under even minor environmental or instructional variations.

30 Sep 2024

Michigan State University

Michigan State University University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of California, Santa Barbara

University of California, Santa Barbara Harvard University

Harvard University UCLA

UCLA Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame University of Southern California

University of Southern California UC Berkeley

UC Berkeley Georgia Institute of Technology

Georgia Institute of Technology Stanford UniversityIllinois Institute of Technology

Stanford UniversityIllinois Institute of Technology Texas A&M University

Texas A&M University Yale University

Yale University Northwestern University

Northwestern University University of Georgia

University of Georgia Microsoft

Microsoft Columbia UniversityLehigh UniversityUniversity of Illinois Chicago

Columbia UniversityLehigh UniversityUniversity of Illinois Chicago Johns Hopkins University

Johns Hopkins University University of Maryland

University of Maryland University of Wisconsin-MadisonMassachusetts General Hospital

University of Wisconsin-MadisonMassachusetts General Hospital Mohamed bin Zayed University of Artificial IntelligenceSalesforce ResearchInstitut Polytechnique de Paris

Mohamed bin Zayed University of Artificial IntelligenceSalesforce ResearchInstitut Polytechnique de Paris Duke University

Duke University Virginia TechWilliam & MaryFlorida International UniversityUNC-Chapel HillCISPALawrence Livermore National LaboratorySamsungIBM Research AIDrexel UniversityUniversity of Tennessee, Knoxville

Virginia TechWilliam & MaryFlorida International UniversityUNC-Chapel HillCISPALawrence Livermore National LaboratorySamsungIBM Research AIDrexel UniversityUniversity of Tennessee, KnoxvilleThe TRUSTLLM framework and benchmark offer a comprehensive system for evaluating the trustworthiness of large language models across six key dimensions. This work reveals that while proprietary models generally exhibit higher trustworthiness, open-source models can also achieve strong performance in specific areas, highlighting challenges like 'over-alignment' and data leakage.

Michigan State University

Michigan State University University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of GeorgiaLehigh University

University of GeorgiaLehigh University The University of Hong Kong

The University of Hong Kong Huazhong University of Science and TechnologySalesforce ResearchUniversity of Illinois at Chicago

Huazhong University of Science and TechnologySalesforce ResearchUniversity of Illinois at Chicago Duke UniversityJilin University

Duke UniversityJilin University Southern University of Science and TechnologyWorcester Polytechnic InstituteLinkedIn CorporationSquirrel Ai Learning

Southern University of Science and TechnologyWorcester Polytechnic InstituteLinkedIn CorporationSquirrel Ai LearningThis survey offers the first comprehensive review of Post-training Language Models (PoLMs), systematically classifying methods, datasets, and applications within a novel intellectual framework. It traces the evolution of LLMs across five core paradigms—Fine-tuning, Alignment, Reasoning, Efficiency, and Integration & Adaptation—and identifies critical future research directions.

An independent analysis of OpenAI's Sora details the text-to-video generative AI model, highlighting its capabilities to produce up to one-minute-long, high-quality videos based on human instructions. The review, based on public reports and reverse engineering, describes the model's likely diffusion transformer architecture and emergent world simulation abilities.

MM-UPT establishes an Unsupervised Post-Training (UPT) paradigm for multi-modal large language models, allowing them to continually refine reasoning capabilities without relying on human-annotated data. The framework employs an online reinforcement learning approach with a majority-voting self-reward mechanism and enables the models to generate their own synthetic training data, leading to enhanced performance across diverse multi-modal benchmarks.

Researchers introduce "MLLM-as-a-Judge," a new benchmark to assess how well Multimodal Large Language Models (MLLMs) can act as evaluators for other MLLM outputs, comparing their judgments against human preferences. The work reveals that while models like GPT-4V show strong alignment with human preferences in pairwise comparisons, they diverge significantly in scoring and ranking tasks, exhibiting various biases and hallucinations.

METATOOL introduces the first comprehensive benchmark evaluating Large Language Models' foundational intelligence in tool utilization, specifically focusing on their "tool usage awareness" and "tool selection capabilities." The benchmark reveals that most LLMs exhibit poor awareness of their limitations, struggle with identifying when no suitable tool is available (CSR below 20%), and show varied performance in distinguishing between similar tools, with ChatGPT generally outperforming other models.

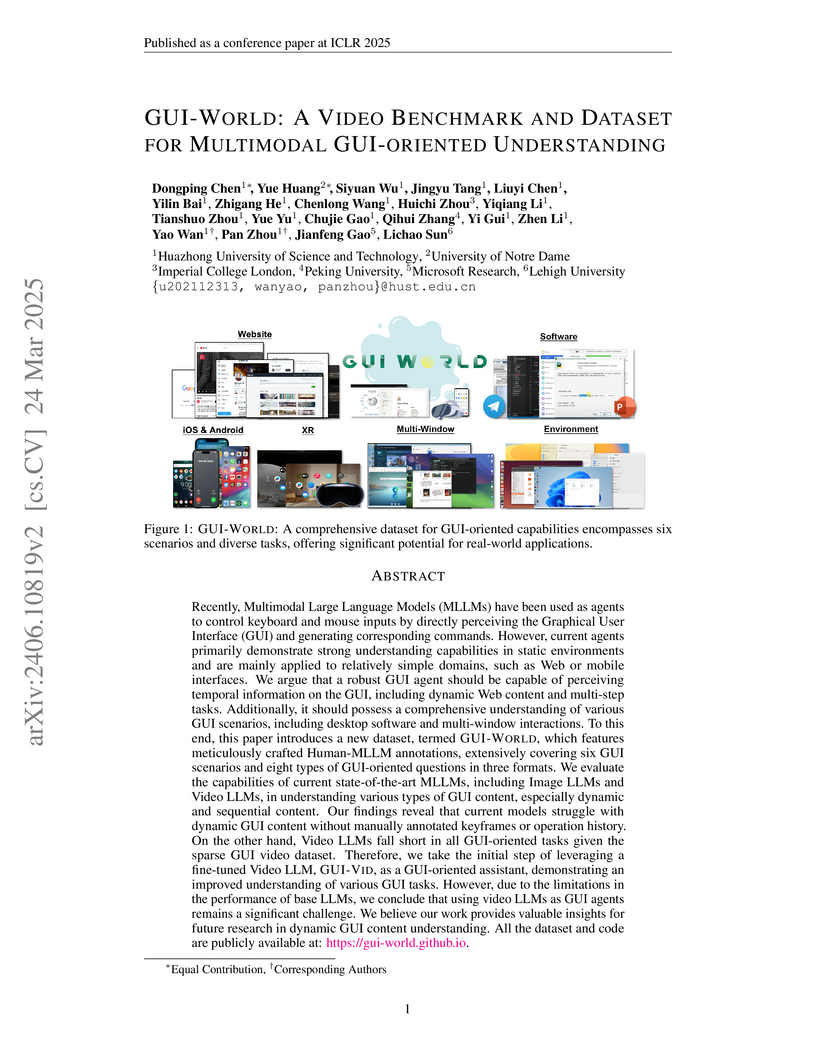

Recently, Multimodal Large Language Models (MLLMs) have been used as agents

to control keyboard and mouse inputs by directly perceiving the Graphical User

Interface (GUI) and generating corresponding commands. However, current agents

primarily demonstrate strong understanding capabilities in static environments

and are mainly applied to relatively simple domains, such as Web or mobile

interfaces. We argue that a robust GUI agent should be capable of perceiving

temporal information on the GUI, including dynamic Web content and multi-step

tasks. Additionally, it should possess a comprehensive understanding of various

GUI scenarios, including desktop software and multi-window interactions. To

this end, this paper introduces a new dataset, termed GUI-World, which features

meticulously crafted Human-MLLM annotations, extensively covering six GUI

scenarios and eight types of GUI-oriented questions in three formats. We

evaluate the capabilities of current state-of-the-art MLLMs, including Image

LLMs and Video LLMs, in understanding various types of GUI content, especially

dynamic and sequential content. Our findings reveal that current models

struggle with dynamic GUI content without manually annotated keyframes or

operation history. On the other hand, Video LLMs fall short in all GUI-oriented

tasks given the sparse GUI video dataset. Therefore, we take the initial step

of leveraging a fine-tuned Video LLM, GUI-Vid, as a GUI-oriented assistant,

demonstrating an improved understanding of various GUI tasks. However, due to

the limitations in the performance of base LLMs, we conclude that using video

LLMs as GUI agents remains a significant challenge. We believe our work

provides valuable insights for future research in dynamic GUI content

understanding. All the dataset and code are publicly available at:

this https URL

University of Utah University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley Stanford University

Stanford University University of Michigan

University of Michigan Texas A&M University

Texas A&M University NVIDIA

NVIDIA University of Texas at Austin

University of Texas at Austin Columbia UniversityLehigh University

Columbia UniversityLehigh University University of Florida

University of Florida Johns Hopkins University

Johns Hopkins University Arizona State University

Arizona State University University of Wisconsin-Madison

University of Wisconsin-Madison Purdue UniversityUniversity of California, MercedTechnische Universität MünchenBosch Research North AmericaBosch Center for Artificial Intelligence (BCAI)University of California Riverside

Purdue UniversityUniversity of California, MercedTechnische Universität MünchenBosch Research North AmericaBosch Center for Artificial Intelligence (BCAI)University of California Riverside AdobeCleveland State UniversityTexas A&M Transportation Institute

AdobeCleveland State UniversityTexas A&M Transportation Institute

University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley Stanford University

Stanford University University of Michigan

University of Michigan Texas A&M University

Texas A&M University NVIDIA

NVIDIA University of Texas at Austin

University of Texas at Austin Columbia UniversityLehigh University

Columbia UniversityLehigh University University of Florida

University of Florida Johns Hopkins University

Johns Hopkins University Arizona State University

Arizona State University University of Wisconsin-Madison

University of Wisconsin-Madison Purdue UniversityUniversity of California, MercedTechnische Universität MünchenBosch Research North AmericaBosch Center for Artificial Intelligence (BCAI)University of California Riverside

Purdue UniversityUniversity of California, MercedTechnische Universität MünchenBosch Research North AmericaBosch Center for Artificial Intelligence (BCAI)University of California Riverside AdobeCleveland State UniversityTexas A&M Transportation Institute

AdobeCleveland State UniversityTexas A&M Transportation InstituteA comprehensive survey examines how generative AI technologies (GANs, VAEs, Diffusion Models, LLMs) are being applied across the autonomous driving stack, mapping current applications while analyzing challenges in safety, evaluation, and deployment through a collaborative effort spanning 20+ institutions including Texas A&M, Stanford, and NVIDIA.

11 Apr 2025

Researchers from GoCharlie.ai and Lehigh University propose a theoretical framework for integrating Case-Based Reasoning (CBR) with Large Language Model (LLM) agents, formalizing architectural components and cognitive aspects. This integration aims to enhance LLM agent transparency, adaptability, and cognitive capabilities, offering a hybrid neuro-symbolic approach to overcome current LLM limitations.

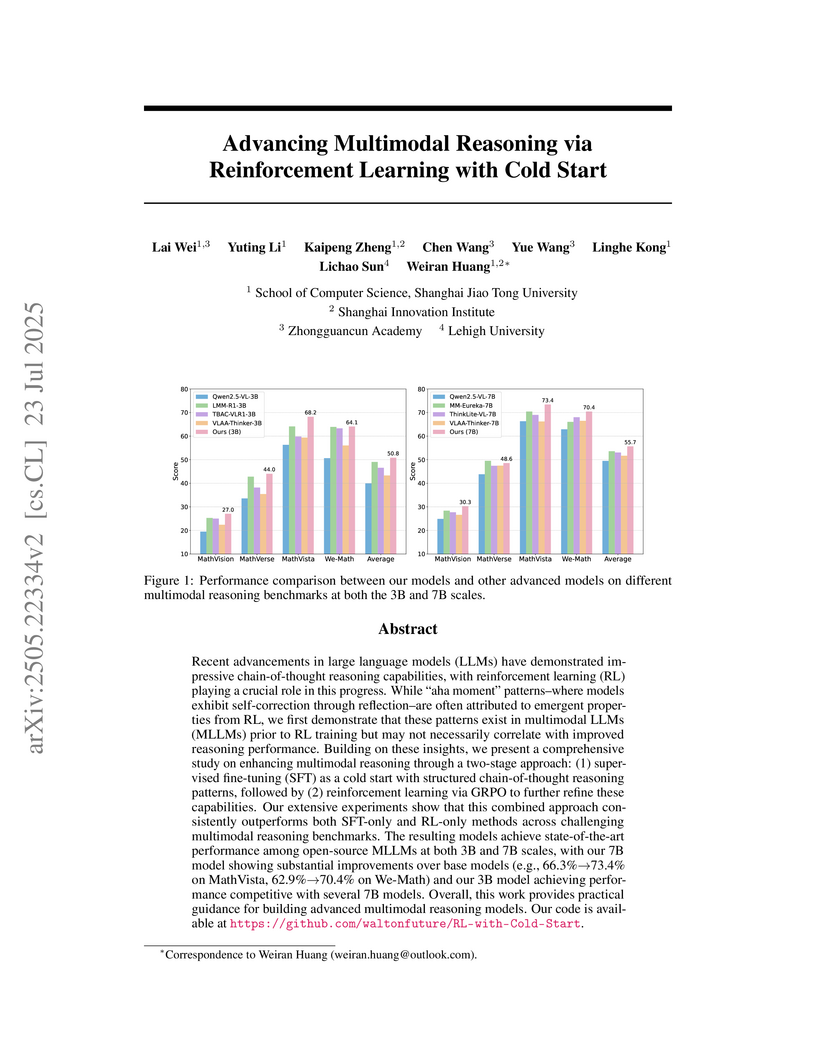

Recent advancements in large language models (LLMs) have demonstrated impressive chain-of-thought reasoning capabilities, with reinforcement learning (RL) playing a crucial role in this progress. While "aha moment" patterns--where models exhibit self-correction through reflection--are often attributed to emergent properties from RL, we first demonstrate that these patterns exist in multimodal LLMs (MLLMs) prior to RL training but may not necessarily correlate with improved reasoning performance. Building on these insights, we present a comprehensive study on enhancing multimodal reasoning through a two-stage approach: (1) supervised fine-tuning (SFT) as a cold start with structured chain-of-thought reasoning patterns, followed by (2) reinforcement learning via GRPO to further refine these capabilities. Our extensive experiments show that this combined approach consistently outperforms both SFT-only and RL-only methods across challenging multimodal reasoning benchmarks. The resulting models achieve state-of-the-art performance among open-source MLLMs at both 3B and 7B scales, with our 7B model showing substantial improvements over base models (e.g., 66.3 %→73.4 % on MathVista, 62.9 %→70.4 % on We-Math) and our 3B model achieving performance competitive with several 7B models. Overall, this work provides practical guidance for building advanced multimodal reasoning models. Our code is available at this https URL.

Researchers from Huazhong University of Science and Technology, University of Notre Dame, Lehigh University, and Duke University developed JudgeDeceiver, an optimization-based prompt injection attack targeting Large Language Models (LLMs) used as evaluative judges. The method achieves high attack success rates, manipulating LLMs to select specific attacker-controlled responses even when faced with partial information and varying response positions.

A comprehensive empirical evaluation framework assesses efficiency techniques for Large Language Models across architecture pretraining, fine-tuning, and quantization dimensions, revealing key trade-offs between memory usage, compute utilization, latency, throughput and energy consumption while demonstrating effective transfer of findings to vision and multimodal models.

This paper presents the first comprehensive survey on aligning Multimodal Large Language Models (MLLMs) with human preferences. It systematically categorizes existing alignment algorithms, dataset construction methods, and evaluation benchmarks across various application scenarios, revealing the field's rapid expansion beyond image understanding to complex modalities and specialized domains while highlighting persistent challenges in data quality and comprehensive evaluation.

Researchers from Huazhong University of Science and Technology and Lehigh University introduce BadVLA, the first backdoor attack framework targeting Vision-Language-Action models used in robotics, employing a two-stage objective-decoupled optimization that injects subtle triggers into perception modules while preserving clean task performance, achieving near-100% attack success rates across multiple VLA architectures and standard embodied benchmarks while remaining undetectable by existing defense mechanisms.

Mora, an open-source multi-agent framework for generalist video generation, employs a self-modulated fine-tuning algorithm and a data-free training strategy. It achieves a video quality score of 0.800, which surpasses Sora's 0.797 on the VBench suite for text-to-video generation, and demonstrates strong performance across six diverse video tasks.

30 Sep 2025

University of Washington

University of Washington University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of Waterloo

University of Waterloo University of California, Santa Barbara

University of California, Santa Barbara Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame University of Southern California

University of Southern California University of Chicago

University of Chicago National University of Singapore

National University of Singapore Stanford University

Stanford University Cornell UniversityOhio State University

Cornell UniversityOhio State University Texas A&M University

Texas A&M University University of GeorgiaIBM ResearchUniversity of WisconsinLehigh University

University of GeorgiaIBM ResearchUniversity of WisconsinLehigh University Allen Institute for AI

Allen Institute for AI Emory UniversityUniversity of Illinois Chicago

Emory UniversityUniversity of Illinois Chicago Arizona State University

Arizona State University University of MarylandMassachusetts General Hospital

University of MarylandMassachusetts General Hospital Mohamed bin Zayed University of Artificial IntelligenceSalesforce Research

Mohamed bin Zayed University of Artificial IntelligenceSalesforce Research Duke UniversitySingapore Management UniversityUniversity of QueenslandUniversity of MiamiCISPA – Helmholtz Center for Information SecurityUNC-Chapel Hill

Duke UniversitySingapore Management UniversityUniversity of QueenslandUniversity of MiamiCISPA – Helmholtz Center for Information SecurityUNC-Chapel HillA large consortium of 54 authors across 34 institutions established a unified set of eight guiding principles for trustworthy Generative Foundation Models (GenFMs). They introduced TrustGen, a dynamic benchmarking platform for continuous and comprehensive evaluation across seven trustworthiness dimensions for text-to-image, large language, and vision-language models, revealing overall progress while identifying persistent bottlenecks.

Radiology report generation requires advanced medical image analysis, effective temporal reasoning, and accurate text generation. Although recent innovations, particularly multimodal large language models, have shown improved performance, their supervised fine-tuning (SFT) objective is not explicitly aligned with clinical efficacy. In this work, we introduce EditGRPO, a mixed-policy reinforcement learning algorithm designed specifically to optimize the generation through clinically motivated rewards. EditGRPO integrates on-policy exploration with off-policy guidance by injecting sentence-level detailed corrections during training rollouts. This mixed-policy approach addresses the exploration dilemma and sampling efficiency issues typically encountered in RL. Applied to a Qwen2.5-VL-3B, EditGRPO outperforms both SFT and vanilla GRPO baselines, achieving an average improvement of 3.4\% in clinical metrics across four major datasets. Notably, EditGRPO also demonstrates superior out-of-domain generalization, with an average performance gain of 5.9\% on unseen datasets.

There are no more papers matching your filters at the moment.