Loyola University Chicago

Automatic software system optimization can improve software speed, reduce operating costs, and save energy. Traditional approaches to optimization rely on manual tuning and compiler heuristics, limiting their ability to generalize across diverse codebases and system contexts. Recent methods using Large Language Models (LLMs) offer automation to address these limitations, but often fail to scale to the complexity of real-world software systems and applications. We present SysLLMatic, a system that integrates LLMs with profiling-guided feedback and system performance insights to automatically optimize software code. We evaluate it on three benchmark suites: HumanEval_CPP (competitive programming in C++), SciMark2 (scientific kernels in Java), and DaCapoBench (large-scale software systems in Java). Results show that SysLLMatic can improve system performance, including latency, throughput, energy efficiency, memory usage, and CPU utilization. It consistently outperforms state-of-the-art LLM baselines on microbenchmarks. On large-scale application codes, it surpasses traditional compiler optimizations, achieving average relative improvements of 1.85x in latency and 2.24x in throughput. Our findings demonstrate that LLMs, guided by principled systems thinking and appropriate performance diagnostics, can serve as viable software system optimizers. We further identify limitations of our approach and the challenges involved in handling complex applications. This work provides a foundation for generating optimized code across various languages, benchmarks, and program sizes in a principled manner.

A hybrid neuro-symbolic framework deterministically detects statutory inconsistencies in complex legal texts, showcasing its reliability by identifying a known conflict within IRC Section 121. The system, leveraging LLM-assisted Prolog formalization, achieved 100% success in finding the inconsistency, significantly outperforming an LLM used as a standalone probabilistic reasoning engine which had only a 33% success rate.

Researchers at the University of Colorado Anschutz Medical Campus and University of Wisconsin–Madison developed DR.KNOWS, a system that integrates medical knowledge graphs into large language models (LLMs) to enhance diagnosis prediction from electronic health records by providing LLMs with explainable knowledge paths.

02 Sep 2025

Accurate conditional prediction in the regression setting plays an important role in many real-world problems. A point prediction alone often falls short because it makes no attempt to quantify the prediction accuracy. Classically, under the normality and linearity assumptions, the Prediction Interval (PI) for the response variable can be determined routinely based on the t distribution. Unfortunately, these two assumptions are rarely met in practice. To fully avoid these two conditions, we develop a so-called calibration PI (cPI) which leverages estimations by Deep Neural Networks (DNN) or kernel methods. Moreover, the cPI can be easily adjusted to capture the estimation variability within the prediction procedure, which is a crucial error source often ignored in practice. Under regular assumptions, we verify that our cPI has an asymptotically valid coverage rate. We also demonstrate that cPI based on the kernel method ensures a coverage rate with a high probability when the sample size is large. In addition, under several conditions, the cPI based on DNN works even with finite samples. A comprehensive simulation study supports the usefulness of cPI, and the convincing performance of cPI with a short sample is confirmed with two empirical datasets.
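For reference, the classical t-based interval mentioned in the abstract above can be written out as follows. This is the standard textbook form for a linear model with normal errors, not the cPI construction itself; the notation ($n$ observations, $p$ regression coefficients, design matrix $X$, new covariate vector $x_0$) is assumed here for illustration and does not come from the abstract.

```latex
% Classical (1 - \alpha) prediction interval for a new response y_0 at x_0,
% assuming y = x^\top \beta + \varepsilon with \varepsilon \sim N(0, \sigma^2).
\[
  \hat{y}_0 \;\pm\; t_{n-p,\,1-\alpha/2}\;\hat{\sigma}\,
  \sqrt{1 + x_0^\top (X^\top X)^{-1} x_0},
  \qquad
  \hat{y}_0 = x_0^\top \hat{\beta},
  \qquad
  \hat{\sigma}^2 = \frac{1}{n-p}\sum_{i=1}^{n}\bigl(y_i - x_i^\top \hat{\beta}\bigr)^2 .
\]
```

The leading 1 under the square root accounts for the noise in the new observation, and the second term accounts for estimation variability in $\hat{\beta}$; both rest on the normality and linearity assumptions that the abstract says are rarely met.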

This study presents preliminary results from the analysis of cosmic-ray anisotropy using air showers detected by the IceTop surface array between 2011 and 2022. With improved statistical precision and updated Monte Carlo simulation events compared to previous IceTop reports, we investigate anisotropy patterns across four energy ranges spanning from 300 TeV to 6.9 PeV. This work extends the measurement of cosmic-ray anisotropy in the southern hemisphere to higher energies than previously achieved with IceTop. Our results provide a foundation for exploring potential connections between the observed anisotropy, the energy spectrum, and the mass composition of the cosmic-ray flux.

The meaningful use of electronic health records (EHR) continues to progress in the digital era with clinical decision support systems augmented by artificial intelligence. A priority in improving provider experience is to overcome information overload and reduce the cognitive burden so fewer medical errors and cognitive biases are introduced during patient care. One major type of medical error is diagnostic error due to systematic or predictable errors in judgment that rely on heuristics. The potential for clinical natural language processing (cNLP) to model diagnostic reasoning in humans with forward reasoning from data to diagnosis and potentially reduce the cognitive burden and medical error has not been investigated. Existing tasks to advance the science in cNLP have largely focused on information extraction and named entity recognition through classification tasks. We introduce a novel suite of tasks coined as Diagnostic Reasoning Benchmarks, DR.BENCH, as a new benchmark for developing and evaluating cNLP models with clinical diagnostic reasoning ability. The suite includes six tasks from ten publicly available datasets addressing clinical text understanding, medical knowledge reasoning, and diagnosis generation. DR.BENCH is the first clinical suite of tasks designed to be a natural language generation framework to evaluate pre-trained language models. Experiments with state-of-the-art pre-trained generative language models using large general domain models and models that were continually trained on a medical corpus demonstrate opportunities for improvement when evaluated in DR.BENCH. We share DR.BENCH as a publicly available GitLab repository with a systematic approach to load and evaluate models for the cNLP community.

Electronic health records (EHRs) are long, noisy, and often redundant, posing a major challenge for the clinicians who must navigate them. Large language models (LLMs) offer a promising solution for extracting and reasoning over this unstructured text, but the length of clinical notes often exceeds even state-of-the-art models' extended context windows. Retrieval-augmented generation (RAG) offers an alternative by retrieving task-relevant passages from across the entire EHR, potentially reducing the amount of required input tokens. In this work, we propose three clinical tasks designed to be replicable across health systems with minimal effort: 1) extracting imaging procedures, 2) generating timelines of antibiotic use, and 3) identifying key diagnoses. Using EHRs from actual hospitalized patients, we test three state-of-the-art LLMs with varying amounts of provided context, using either targeted text retrieval or the most recent clinical notes. We find that RAG closely matches or exceeds the performance of using recent notes, and approaches the performance of using the models' full context while requiring drastically fewer input tokens. Our results suggest that RAG remains a competitive and efficient approach even as newer models become capable of handling increasingly longer amounts of text.

Recent breakthroughs in deep learning and generative systems have significantly fostered the creation of synthetic media, as well as the local alteration of real content via the insertion of highly realistic synthetic manipulations. Local image manipulation, in particular, poses serious challenges to the integrity of digital content and societal trust. This problem is not only confined to multimedia data, but also extends to biological images included in scientific publications, like images depicting Western blots. In this work, we address the task of localizing synthetic manipulations in Western blot images. To discriminate between pristine and synthetic pixels of an analyzed image, we propose a synthetic detector that operates on small patches extracted from the image. We aggregate patch contributions to estimate a tampering heatmap, highlighting synthetic pixels out of pristine ones. Our methodology proves effective when tested over two manipulated Western blot image datasets, one altered automatically and the other manually by exploiting advanced AI-based image manipulation tools that are unknown at our training stage. We also explore the robustness of our method over an external dataset of other scientific images depicting different semantics, manipulated through unseen generation techniques.

Data from online job postings are difficult to access and are not built in a standard or transparent manner. Data included in the standard taxonomy and occupational information database (O*NET) are updated infrequently and based on small survey samples. We adopt O*NET as a framework for building natural language processing tools that extract structured information from job postings. We publish the Job Ad Analysis Toolkit (JAAT), a collection of open-source tools built for this purpose, and demonstrate its reliability and accuracy in out-of-sample and LLM-as-a-Judge testing. We extract more than 10 billion data points from more than 155 million online job ads provided by the National Labor Exchange (NLx) Research Hub, including O*NET tasks, occupation codes, tools, and technologies, as well as wages, skills, industry, and more features. We describe the construction of a dataset of occupation, state, and industry level features aggregated by monthly active jobs from 2015 - 2025. We illustrate the potential for research and future uses in education and workforce development.

Diffusion models are state-of-the-art generative models, yet their samples often fail to satisfy application objectives such as safety constraints or domain-specific validity. Existing alignment techniques require gradients, internal model access, or large computational budgets, or they lack support for certain objectives. In response, we introduce an inference-time alignment framework based on evolutionary algorithms. We treat diffusion models as black boxes and search their latent space to maximize alignment objectives. Given equal or less running time, our method achieves 3-35% higher ImageReward scores than gradient-free and gradient-based methods. On the Open Image Preferences dataset, our method achieves competitive results across four popular alignment objectives. In terms of computational efficiency, we require 55% to 76% less GPU memory and are 72% to 80% faster than gradient-based methods.
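The idea of searching a black-box generator's latent space with an evolutionary algorithm can be illustrated with a minimal sketch. It is not the authors' method: the elitist Gaussian-mutation scheme, the function `evolve_latent`, and the toy `toy_generate` / `toy_reward` stand-ins for a diffusion model and an alignment objective are all hypothetical, chosen only to show that no gradients or model internals are needed.

```python
import random
from typing import Callable, List, Tuple

Latent = List[float]


def evolve_latent(
    generate: Callable[[Latent], object],  # black-box generator (assumed interface)
    reward: Callable[[object], float],     # alignment objective (assumed interface)
    dim: int,
    population: int = 16,
    elites: int = 4,
    generations: int = 20,
    sigma: float = 0.3,
    seed: int = 0,
) -> Tuple[Latent, List[float]]:
    """Elitist evolutionary search over latent vectors; uses no gradients."""
    rng = random.Random(seed)
    # Start from latents drawn from a standard normal prior.
    pop = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(population)]
    history: List[float] = []
    for _ in range(generations):
        # Score every candidate by generating a sample and evaluating it.
        scored = sorted(
            ((reward(generate(z)), z) for z in pop),
            key=lambda pair: pair[0],
            reverse=True,
        )
        history.append(scored[0][0])
        # Keep the best candidates unchanged and mutate them to refill the population.
        parents = [z for _, z in scored[:elites]]
        children = [
            [x + rng.gauss(0.0, sigma) for x in rng.choice(parents)]
            for _ in range(population - elites)
        ]
        pop = parents + children
    best = max(pop, key=lambda z: reward(generate(z)))
    return best, history


def toy_generate(z: Latent) -> Latent:
    # Hypothetical stand-in for a diffusion model: returns the latent itself.
    return z


def toy_reward(sample: Latent) -> float:
    # Hypothetical objective: prefer samples close to the all-ones vector.
    return -sum((x - 1.0) ** 2 for x in sample)


best, history = evolve_latent(toy_generate, toy_reward, dim=4)
```

Because the elites are carried over unchanged, the best score per generation never decreases for a deterministic reward. A real generator is usually stochastic and far more expensive to call, so the population size and generation count would be bounded by the running-time budget.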

University of Washington; Google DeepMind; University of Notre Dame; University of Southern California; Yale University; University of California Berkeley; Georgetown University; Data & Society; Loyola University Chicago; Collective Intelligence Project; Reddit; Remesh; AI & Democracy Foundation; Demos; Bertelsmann Stiftung; The New York Times; UK Policy Lab; Jigsaw, Google

Two substantial technological advances have reshaped the public square in recent decades: first with the advent of the internet and second with the recent introduction of large language models (LLMs). LLMs offer opportunities for a paradigm shift towards more decentralized, participatory online spaces that can be used to facilitate deliberative dialogues at scale, but also create risks of exacerbating societal schisms. Here, we explore four applications of LLMs to improve digital public squares: collective dialogue systems, bridging systems, community moderation, and proof-of-humanity systems. Building on the input from over 70 civil society experts and technologists, we argue that LLMs both afford promising opportunities to shift the paradigm for conversations at scale and pose distinct risks for digital public squares. We lay out an agenda for future research and investments in AI that will strengthen digital public squares and safeguard against potential misuses of AI.

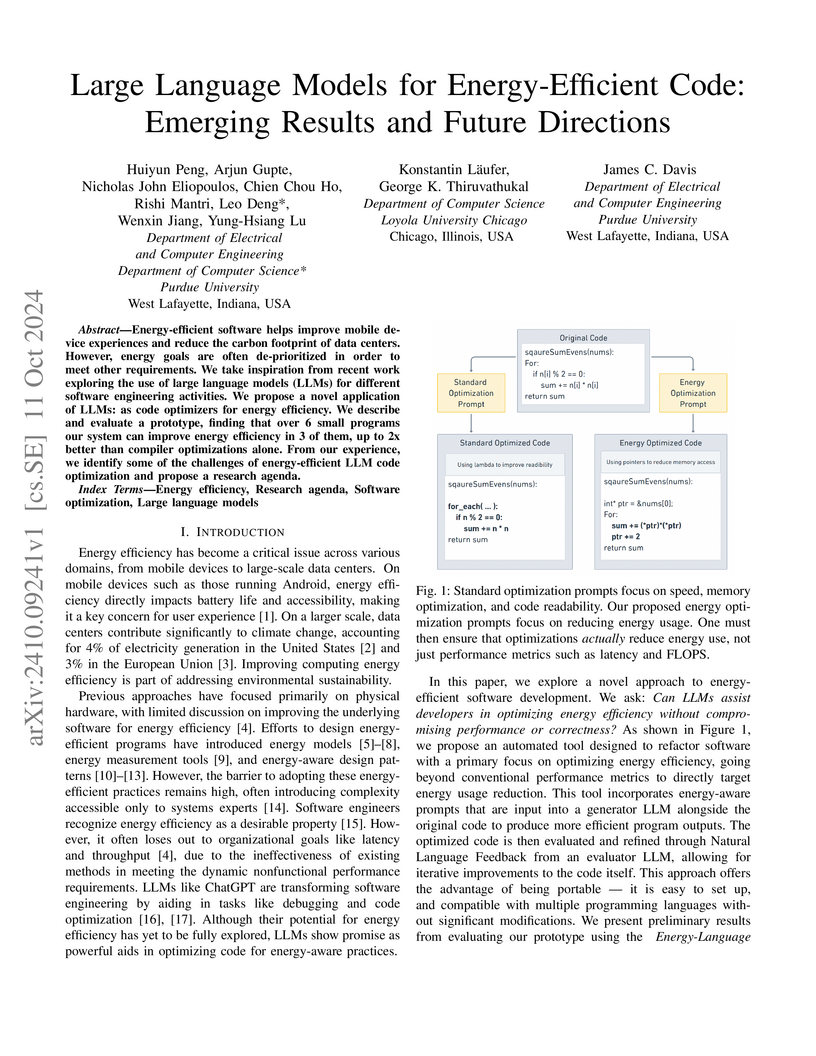

11 Oct 2024

Energy-efficient software helps improve mobile device experiences and reduce the carbon footprint of data centers. However, energy goals are often de-prioritized in order to meet other requirements. We take inspiration from recent work exploring the use of large language models (LLMs) for different software engineering activities. We propose a novel application of LLMs: as code optimizers for energy efficiency. We describe and evaluate a prototype, finding that over 6 small programs our system can improve energy efficiency in 3 of them, up to 2x better than compiler optimizations alone. From our experience, we identify some of the challenges of energy-efficient LLM code optimization and propose a research agenda.

18 Jan 2024

The Standard Model predicts a long-range force, proportional to $G_F^2/r^5$, between fermions due to the exchange of a pair of neutrinos. This quantum force is feeble and has not been observed yet. In this paper, we compute this force in the presence of neutrino backgrounds, both for isotropic and directional background neutrinos. We find that for the case of directional background the force can have a $1/r$ dependence and it can be significantly enhanced compared to the vacuum case. In particular, background effects caused by reactor, solar, and supernova neutrinos enhance the force by many orders of magnitude. The enhancement, however, occurs only in the direction parallel to the direction of the background neutrinos. We discuss the experimental prospects of detecting the neutrino force in neutrino backgrounds and find that the effect is close to the available sensitivity of the current fifth force experiments. Yet, the angular spread of the neutrino flux and that of the test masses reduce the strength of this force. The results are encouraging and a detailed experimental study is called for to check if the effect can be probed.

As innovation in deep learning continues, many engineers are incorporating Pre-Trained Models (PTMs) as components in computer systems. Some PTMs are foundation models, and others are fine-tuned variations adapted to different needs. When these PTMs are named well, it facilitates model discovery and reuse. However, prior research has shown that model names are not always well chosen and can sometimes be inaccurate and misleading. The naming practices for PTM packages have not been systematically studied, which hampers engineers' ability to efficiently search for and reliably reuse these models. In this paper, we conduct the first empirical investigation of PTM naming practices in the Hugging Face PTM registry. We begin by reporting on a survey of 108 Hugging Face users, highlighting differences from traditional software package naming and presenting findings on PTM naming practices. The survey results indicate a mismatch between engineers' preferences and current practices in PTM naming. We then introduce DARA, the first automated DNN ARchitecture Assessment technique designed to detect PTM naming inconsistencies. Our results demonstrate that architectural information alone is sufficient to detect these inconsistencies, achieving an accuracy of 94% in identifying model types and promising performance (over 70%) in other architectural metadata as well. We also highlight potential use cases for automated naming tools, such as model validation, PTM metadata generation and verification, and plagiarism detection. Our study provides a foundation for automating naming inconsistency detection. Finally, we envision future work focusing on automated tools for standardizing package naming, improving model selection and reuse, and strengthening the security of the PTM supply chain.

Liver cancer is one of the most prevalent and lethal forms of cancer, making early detection crucial for effective treatment. This paper introduces a novel approach for automated liver tumor segmentation in computed tomography (CT) images by integrating a 3D U-Net architecture with the Bat Algorithm for hyperparameter optimization. The method enhances segmentation accuracy and robustness by intelligently optimizing key parameters like the learning rate and batch size. Evaluated on a publicly available dataset, our model demonstrates a strong ability to balance precision and recall, with a high F1-score at lower prediction thresholds. This is particularly valuable for clinical diagnostics, where ensuring no potential tumors are missed is paramount. Our work contributes to the field of medical image analysis by demonstrating that the synergy between a robust deep learning architecture and a metaheuristic optimization algorithm can yield a highly effective solution for complex segmentation tasks.

Applications are increasingly written as dynamic workflows underpinned by an execution framework that manages asynchronous computations across distributed hardware. However, execution frameworks typically offer one-size-fits-all solutions for data flow management, which can restrict performance and scalability. ProxyStore, a middleware layer that optimizes data flow via an advanced pass-by-reference paradigm, has been shown to be an effective mechanism for addressing these limitations. Here, we investigate integrating ProxyStore with Dask Distributed, one of the most popular libraries for distributed computing in Python, with the goal of supporting scalable and portable scientific workflows. Dask provides an easy-to-use and flexible framework, but is less optimized for scaling certain data-intensive workflows. We investigate these limitations, detail the technical contributions necessary to develop a robust solution for distributed applications, and demonstrate improved performance on synthetic benchmarks and real applications.

04 Aug 2023

The IceCube Observatory provides our highest-statistics picture of the cosmic-ray arrival directions in the Southern Hemisphere, with over 700 billion cosmic-ray-induced muon events collected between May 2011 and May 2022. Using the larger data volume, we find an improved significance of the PeV cosmic ray anisotropy down to scales of 6°. In addition, we observe a variation in the angular power spectrum as a function of energy, hinting at a relative decrease in large-scale features above 100 TeV. The data-taking period covers a complete solar cycle, providing new insight into the time variability of the signal. We present preliminary results using this up-to-date event sample.

Objective: Applying large language models (LLMs) to the clinical domain is challenging due to the context-heavy nature of processing medical records. Retrieval-augmented generation (RAG) offers a solution by facilitating reasoning over large text sources. However, there are many parameters to optimize in just the retrieval system alone. This paper presents an ablation study exploring how different embedding models and pooling methods affect information retrieval for the clinical domain.

Methods: Evaluating on three retrieval tasks on two electronic health record (EHR) data sources, we compared seven models, including medical- and general-domain models, specialized encoder embedding models, and off-the-shelf decoder LLMs. We also examine the choice of embedding pooling strategy for each model, independently on the query and the text to retrieve.

Results: We found that the choice of embedding model significantly impacts retrieval performance, with BGE, a comparatively small general-domain model, consistently outperforming all others, including medical-specific models. However, our findings also revealed substantial variability across datasets and query text phrasings. We also determined the best pooling methods for each of these models to guide future design of retrieval systems.

Discussion: The choice of embedding model, pooling strategy, and query formulation can significantly impact retrieval performance, and the performance of these models on other public benchmarks does not necessarily transfer to new domains. Further studies such as this one are vital for guiding empirically-grounded development of retrieval frameworks, such as in the context of RAG, for the clinical domain.
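To make the pooling choice in the abstract above concrete, the sketch below collapses per-token embeddings into a single vector and scores a query against a candidate text, with the pooling strategy chosen independently for each side. Mean, max, and last-token pooling are common options assumed here for illustration; the abstract does not say which methods were compared. All function names and the toy vectors are hypothetical.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]
Pooler = Callable[[List[Vector]], Vector]


def mean_pool(tokens: List[Vector]) -> Vector:
    # Average each embedding dimension over all tokens.
    n = len(tokens)
    return [sum(column) / n for column in zip(*tokens)]


def max_pool(tokens: List[Vector]) -> Vector:
    # Take the largest value of each embedding dimension over all tokens.
    return [max(column) for column in zip(*tokens)]


def last_token_pool(tokens: List[Vector]) -> Vector:
    # Use the final token's embedding, a common choice for decoder models.
    return list(tokens[-1])


POOLERS: Dict[str, Pooler] = {
    "mean": mean_pool,
    "max": max_pool,
    "last": last_token_pool,
}


def cosine(a: Vector, b: Vector) -> float:
    # Cosine similarity, returning 0.0 if either vector has zero norm.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def score(
    query_tokens: List[Vector],
    text_tokens: List[Vector],
    query_pool: str,
    text_pool: str,
) -> float:
    # Pool the query and the text independently, then compare the two vectors.
    query_vec = POOLERS[query_pool](query_tokens)
    text_vec = POOLERS[text_pool](text_tokens)
    return cosine(query_vec, text_vec)


# Toy token embeddings standing in for real model outputs.
query = [[1.0, 0.0], [0.0, 1.0]]
passage = [[0.2, 0.1], [1.0, 1.0]]
similarity = score(query, passage, query_pool="mean", text_pool="last")
```

Keeping the pooler a per-side parameter makes it straightforward to sweep every query/text combination in a grid search, which is the kind of comparison an ablation like this calls for.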

The demand for high-quality synthetic data for model training and augmentation has never been greater in medical imaging. However, current evaluations predominantly rely on computational metrics that fail to align with human expert recognition. This leads to synthetic images that may appear realistic numerically but lack clinical authenticity, posing significant challenges in ensuring the reliability and effectiveness of AI-driven medical tools. To address this gap, we introduce GazeVal, a practical framework that synergizes expert eye-tracking data with direct radiological evaluations to assess the quality of synthetic medical images. GazeVal leverages gaze patterns of radiologists as they provide a deeper understanding of how experts perceive and interact with synthetic data in different tasks (i.e., diagnostic or Turing tests). Experiments with sixteen radiologists revealed that 96.6% of the generated images (by the most recent state-of-the-art AI algorithm) were identified as fake, demonstrating the limitations of generative AI in producing clinically accurate images.

Sports analytics -- broadly defined as the pursuit of improvement in athletic performance through the analysis of data -- has expanded its footprint both in the professional sports industry and in academia over the past 30 years. In this paper, we connect four big ideas that are common across multiple sports: the expected value of a game state, win probability, measures of team strength, and the use of sports betting market data. For each, we explore both the shared similarities and individual idiosyncrasies of analytical approaches in each sport. While our focus is on the concepts underlying each type of analysis, any implementation necessarily involves statistical methodologies, computational tools, and data sources. Where appropriate, we outline how data, models, tools, and knowledge of the sport combine to generate actionable insights. We also describe opportunities to share analytical work, but omit an in-depth discussion of individual player evaluation as beyond our scope. This paper should serve as a useful overview for anyone becoming interested in the study of sports analytics.
