Marshall University

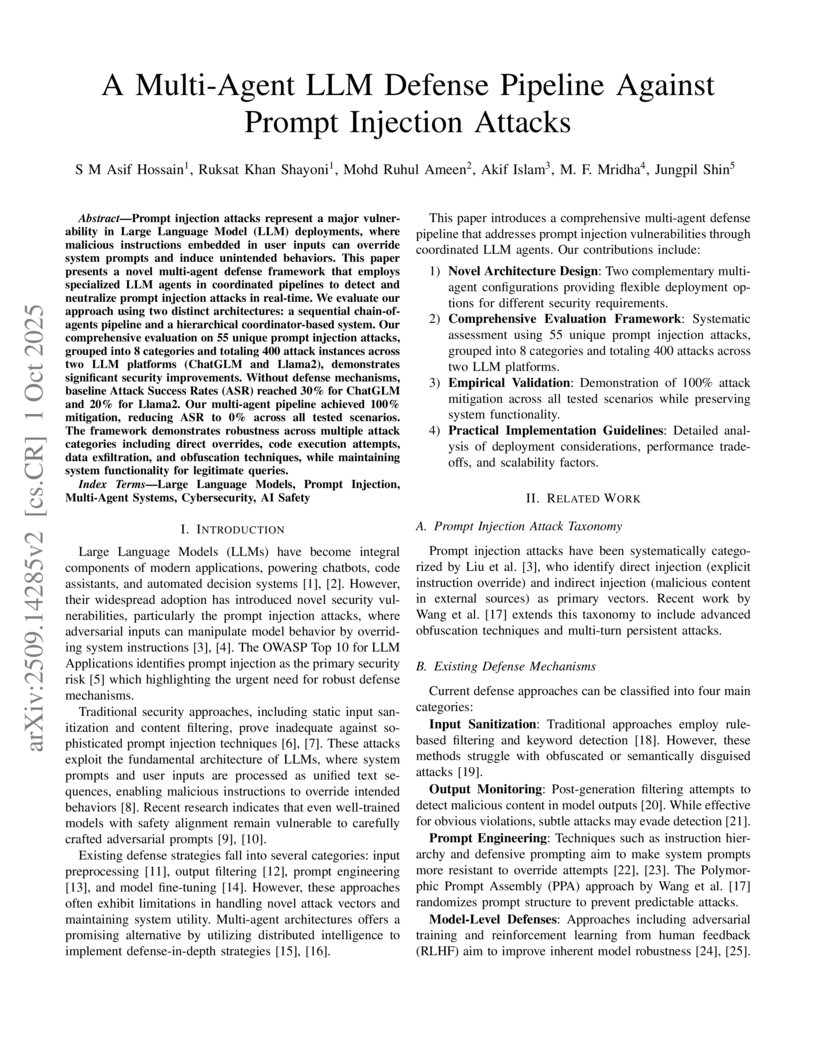

Prompt injection attacks represent a major vulnerability in Large Language Model (LLM) deployments, where malicious instructions embedded in user inputs can override system prompts and induce unintended behaviors. This paper presents a novel multi-agent defense framework that employs specialized LLM agents in coordinated pipelines to detect and neutralize prompt injection attacks in real-time. We evaluate our approach using two distinct architectures: a sequential chain-of-agents pipeline and a hierarchical coordinator-based system. Our comprehensive evaluation on 55 unique prompt injection attacks, grouped into 8 categories and totaling 400 attack instances across two LLM platforms (ChatGLM and Llama2), demonstrates significant security improvements. Without defense mechanisms, baseline Attack Success Rates (ASR) reached 30% for ChatGLM and 20% for Llama2. Our multi-agent pipeline achieved 100% mitigation, reducing ASR to 0% across all tested scenarios. The framework demonstrates robustness across multiple attack categories including direct overrides, code execution attempts, data exfiltration, and obfuscation techniques, while maintaining system functionality for legitimate queries.

Researchers from the University of Washington, SETI Institute, and other institutions developed a comprehensive roadmap for systematically searching interstellar objects (ISOs) for technosignatures. The framework categorizes potential technosignatures into four types and details observational strategies, emphasizing rigorous comparison with natural phenomena in anticipation of increased ISO discoveries from the Rubin Observatory.

Manual labeling for large-scale image and video datasets is often time-intensive, error-prone, and costly, posing a significant barrier to efficient machine learning workflows in fault detection from railroad videos. This study introduces a semi-automated labeling method that utilizes a pre-trained You Only Look Once (YOLO) model to streamline the labeling process and enhance fault detection accuracy in railroad videos. By initiating the process with a small set of manually labeled data, our approach iteratively trains the YOLO model, using each cycle's output to improve model accuracy and progressively reduce the need for human intervention.

To facilitate easy correction of model predictions, we developed a system to export YOLO's detection data as an editable text file, enabling rapid adjustments when detections require refinement. This approach decreases labeling time from an average of 2 to 4 minutes per image to 30 seconds to 2 minutes, effectively minimizing labor costs and labeling errors. Unlike costly AI-based labeling solutions on paid platforms, our method provides a cost-effective alternative for researchers and practitioners handling large datasets in fault detection and other detection-based machine learning applications.
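The editable text export described above can be sketched under one assumption: that the file follows the widely used YOLO label convention of one detection per line, `class x_center y_center width height`, with coordinates normalized by the image size. The abstract does not specify the actual file layout, and the function names and pixel-box input used here are hypothetical.

```python
def box_to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box into one normalized YOLO-style label line.

    Assumed layout (not confirmed by the abstract):
    "class x_center y_center width height", all coordinates in [0, 1].
    """
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def yolo_line_to_box(line, img_w, img_h):
    """Parse a (possibly hand-edited) label line back into a pixel-space box."""
    parts = line.split()
    class_id = int(parts[0])
    x_c, y_c, w, h = (float(v) for v in parts[1:5])
    x_min = (x_c - w / 2) * img_w
    y_min = (y_c - h / 2) * img_h
    x_max = (x_c + w / 2) * img_w
    y_max = (y_c + h / 2) * img_h
    return class_id, x_min, y_min, x_max, y_max


# One detection in a 400x500 image, written out and read back.
line = box_to_yolo_line(0, 100, 50, 300, 250, 400, 500)
print(line)  # 0 0.500000 0.300000 0.500000 0.400000
print(yolo_line_to_box(line, 400, 500))
```

Because each detection is a single short line, a reviewer can correct a class index or nudge a box in any text editor before the labels are fed back into the next training cycle.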

This study examines how large language models categorize sentences from scientific papers using prompt engineering. We use two advanced web-based models, GPT-4o (by OpenAI) and DeepSeek R1, to classify sentences into predefined relationship categories. DeepSeek R1 has been tested on benchmark datasets in its technical report. However, its performance in scientific text categorization remains unexplored. To address this gap, we introduce a new evaluation method designed specifically for this task. We also compile a dataset of cleaned scientific papers from diverse domains. This dataset provides a platform for comparing the two models. Using this dataset, we analyze their effectiveness and consistency in categorization.

13 Oct 2024

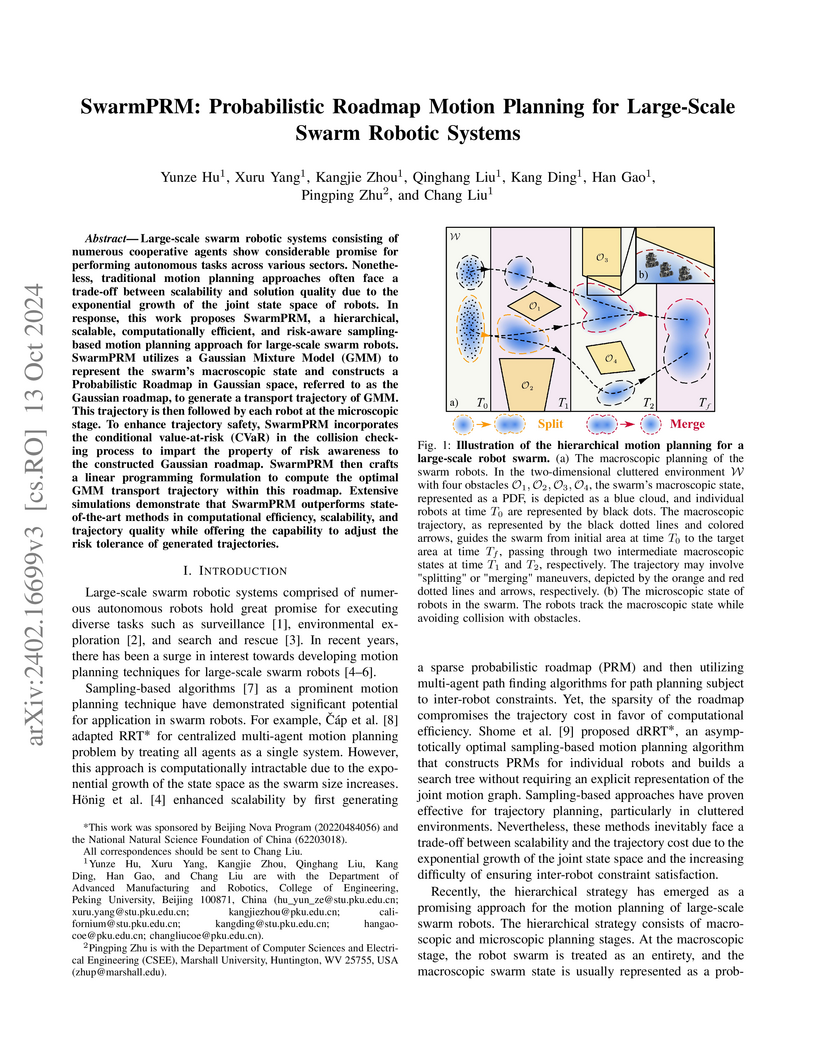

Large-scale swarm robotic systems consisting of numerous cooperative agents show considerable promise for performing autonomous tasks across various sectors. Nonetheless, traditional motion planning approaches often face a trade-off between scalability and solution quality due to the exponential growth of the joint state space of robots. In response, this work proposes SwarmPRM, a hierarchical, scalable, computationally efficient, and risk-aware sampling-based motion planning approach for large-scale swarm robots. SwarmPRM utilizes a Gaussian Mixture Model (GMM) to represent the swarm's macroscopic state and constructs a Probabilistic Roadmap in Gaussian space, referred to as the Gaussian roadmap, to generate a transport trajectory of GMM. This trajectory is then followed by each robot at the microscopic stage. To enhance trajectory safety, SwarmPRM incorporates the conditional value-at-risk (CVaR) in the collision checking process to impart the property of risk awareness to the constructed Gaussian roadmap. SwarmPRM then crafts a linear programming formulation to compute the optimal GMM transport trajectory within this roadmap. Extensive simulations demonstrate that SwarmPRM outperforms state-of-the-art methods in computational efficiency, scalability, and trajectory quality while offering the capability to adjust the risk tolerance of generated trajectories.
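To make the risk measure concrete, here is a minimal sketch of conditional value-at-risk for a single Gaussian random variable, using the standard closed form CVaR_α = μ + σ·φ(z_α)/(1 − α) with z_α = Φ⁻¹(α). It only illustrates the quantity itself: the abstract does not say how SwarmPRM evaluates CVaR inside collision checking, and the function names and the reading of the variable as a "loss" (larger is worse) are assumptions.

```python
from statistics import NormalDist


def gaussian_var(mu, sigma, alpha):
    """Value-at-risk: the alpha-quantile of X ~ N(mu, sigma^2)."""
    return mu + sigma * NormalDist().inv_cdf(alpha)


def gaussian_cvar(mu, sigma, alpha):
    """CVaR: the mean of X over the worst (1 - alpha) tail, X read as a loss."""
    std = NormalDist()
    z = std.inv_cdf(alpha)          # standard normal quantile
    return mu + sigma * std.pdf(z) / (1.0 - alpha)


# Raising alpha makes the measure more conservative (less risk tolerance).
for alpha in (0.80, 0.95, 0.99):
    print(alpha, gaussian_var(0.0, 1.0, alpha), gaussian_cvar(0.0, 1.0, alpha))
```

CVaR is never smaller than VaR at the same level, which is why constraining it gives a more cautious safety margin than constraining a quantile alone.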

Additional food sources for an introduced predator are known to increase its efficiency on a target pest. In this context, inhibiting factors such as interference, predator competition, and the introduction of temporally dependent quantity and quality of additional food are all known to enable pest extinction. As climate change and habitat degradation have increasing effects in enhancing patchiness in ecological systems, the effect of additional food in patch models has also been recently considered. However, the question of complete pest extinction in such patchy systems remains open. In the current manuscript, we consider a biological control model where additional food drives competition among predators in one patch, and they subsequently disperse to a neighboring patch via drift or dispersal. We show that complete pest extinction in both patches is possible. Further, this state is proved to be globally asymptotically stable under certain parametric restrictions. We also prove a codimension-2 Bogdanov-Takens bifurcation. We discuss our results in the context of designing pest management strategies under enhanced climate change and habitat fragmentation. Such strategies are particularly relevant to control invasive pests such as the soybean aphid (*Aphis glycines*) in the North Central United States.

03 Apr 2025

California Institute of Technology; University of Victoria; UCLA; Vanderbilt University; University of Michigan; University of California, San Diego; Northwestern University; NASA Goddard Space Flight Center; University of Texas at Austin; Louisiana State University; Columbia University; Rice University; The Pennsylvania State University; Los Alamos National Laboratory; Oak Ridge National Laboratory; Liverpool John Moores University; NSF’s NOIRLab; Texas Tech University; National Radio Astronomy Observatory; University of Tennessee; Michigan Technological University; NASA Marshall Space Flight Center; University of California, Santa Cruz; Smithsonian Astrophysical Observatory; University of Maryland Baltimore County; Planetary Science Institute; Marshall University

A white paper stemming from the 3rd TDAMM Workshop advocates for a deeply integrated, multidisciplinary approach to time-domain and multimessenger astrophysics, proposing an "end-to-end" research methodology inspired by the NNSA to maximize the scientific return from astronomical facilities and address grand scientific questions. The paper identifies that overcoming disciplinary silos and realigning funding incentives are crucial to advancing understanding of phenomena such as the origin of elements and extreme matter conditions.

Many astrophysical systems of interest to numerical relativity, such as rapidly rotating stars, black hole accretion disks, and core-collapse supernovae, exhibit near-symmetries. These systems generally consist of a strongly gravitating central object surrounded by an accretion disk, debris, and ejecta. Simulations can efficiently exploit the near-axisymmetry of these systems by reducing the number of points in the angular direction around the near-symmetry axis, enabling efficient simulations over seconds-long timescales with minimal computational expense. In this paper, we introduce GRoovy, a novel code capable of modeling astrophysical systems containing compact objects by solving the equations of general relativistic hydrodynamics (GRHD) in full general relativity using singular curvilinear (spherical-like and cylindrical-like) and Cartesian coordinates. We demonstrate the code's robustness through a battery of challenging GRHD tests, ranging from flat, static spacetimes to curved, dynamical spacetimes. These tests further showcase the code's capabilities in modeling systems with realistic, finite-temperature equations of state and neutrino cooling via a leakage scheme. GRoovy extensively leverages GRHayL, an open-source, modular, and infrastructure-agnostic general relativistic magnetohydrodynamics library built from the highly robust algorithms of IllinoisGRMHD. Long-term simulations of binary neutron star and black hole-neutron star post-merger remnants will benefit greatly from GRoovy to study phenomena such as remnant stability, gamma-ray bursts, and nucleosynthesis.

02 Sep 2020

König's edge coloring theorem says that a bipartite graph with maximal degree n has an edge coloring with no more than n colors. We explore the computability theory and Reverse Mathematics aspects of this theorem. Computable bipartite graphs with degree bounded by n have computable edge colorings with n+1 colors, but the theorem that there is an edge coloring with n colors is equivalent to WKL₀ over RCA₀. This gives an additional proof of a theorem of Hirst: WKL₀ is equivalent over RCA₀ to the principle that every countable bipartite n-regular graph is the union of n complete matchings. We describe open questions related to Vizing's edge coloring theorem and a countable form of Birkhoff's theorem.
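For finite graphs, König's theorem has a classical constructive proof by recoloring along alternating paths. The sketch below implements that textbook argument for a finite simple bipartite graph. It is not taken from the paper and says nothing about the countable case the paper studies. The function name and the edge-list input format are assumptions.

```python
def konig_edge_coloring(edges):
    """Properly color the edges of a finite simple bipartite graph.

    edges: list of (u, v) pairs, u in the left part and v in the right part.
    Returns {(u, v): color} using colors 0 .. max_degree - 1.
    """
    degree = {}
    for u, v in edges:
        for x in (("L", u), ("R", v)):
            degree[x] = degree.get(x, 0) + 1
    n_colors = max(degree.values(), default=0)
    # used[x][c] is the neighbor joined to vertex x by its edge of color c.
    used = {x: {} for x in degree}

    def free_color(x):
        return next(c for c in range(n_colors) if c not in used[x])

    for u, v in edges:
        x, y = ("L", u), ("R", v)
        a, b = free_color(x), free_color(y)
        if a in used[y]:
            # Collect the path from y whose edges alternate a, b, a, ...
            path, node, color = [], y, a
            while color in used[node]:
                nxt = used[node][color]
                path.append((node, nxt, color))
                node, color = nxt, (b if color == a else a)
            # Swap a and b along the path. The path cannot reach x, because
            # left vertices are entered by a-edges and a is free at x.
            for p, q, c in path:
                del used[p][c]
                del used[q][c]
            for p, q, c in path:
                other = b if c == a else a
                used[p][other] = q
                used[q][other] = p
        used[x][a] = y
        used[y][a] = x

    return {(u, v): next(c for c, w in used[("L", u)].items() if w == ("R", v))
            for u, v in edges}


# The complete bipartite graph K_{3,3} has maximum degree 3.
coloring = konig_edge_coloring([(i, j) for i in range(3) for j in range(3)])
print(sorted(set(coloring.values())))
```

The recoloring step may revise colors assigned arbitrarily far back, so this procedure is inherently finite: it never commits permanently to a color for an early edge.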

03 Aug 2024

This chapter narrates the journey of developing and integrating computing into the physics curriculum through three consecutive courses, each tailored to the learners' level. It starts with the entry-level "Physics Playground in Python" for high school and freshman students with no programming experience, designed in the spirit of the "Hello World" approach. At the sophomore and junior level, students from all sciences and engineering disciplines learn "Scientific Computing with Python" in an environment based on the "Two Bites at Every Apple" approach. Ultimately, upper undergraduate and entry-level graduate students take "Computational Physics," to develop their skills in solving advanced problems using complex numerical algorithms and computational tools. This journey showcases the increasing complexity and sophistication of computational tools and techniques that can be incorporated into the physical science curriculum, serving as a guide for educators looking to integrate computing into their teaching. It also aims to inspire students by showcasing the impact and potential of computational methods in science education and research.

03 Oct 2024

Swarm robotics, or very large-scale robotics (VLSR), has many meaningful applications for complicated tasks. However, the complexity of motion control and energy costs stack up quickly as the number of robots increases. In addressing this problem, our previous studies have formulated various methods employing macroscopic and microscopic approaches. These methods enable microscopic robots to adhere to a reference Gaussian mixture model (GMM) distribution observed at the macroscopic scale. As a result, optimizing at the macroscopic level yields an optimal overall result. However, all these methods require systematic and global generation of Gaussian components (GCs) within obstacle-free areas to construct the GMM trajectories. This work utilizes centroidal Voronoi tessellation to generate GCs methodically. Consequently, it demonstrates performance improvement while also ensuring consistency and reliability.
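A centroidal Voronoi tessellation is commonly approximated with Lloyd's algorithm: assign points to their nearest generator, move each generator to the centroid of its cell, and repeat. The sketch below is a sample-based (Monte Carlo) version on the unit square with a rectangular obstacle removed. The obstacle, the sample counts, and all function names are hypothetical, and the abstract does not state which CVT algorithm the work uses or how generators become Gaussian components.

```python
import random


def sample_free_space(n, is_free, rng):
    """Draw n uniform points from the unit square that satisfy is_free."""
    points = []
    while len(points) < n:
        p = (rng.random(), rng.random())
        if is_free(p):
            points.append(p)
    return points


def lloyd_cvt(generators, samples, iterations=20):
    """Sample-based Lloyd iteration: move generators to their cell centroids."""
    gens = [tuple(g) for g in generators]
    for _ in range(iterations):
        sums = [[0.0, 0.0, 0] for _ in gens]
        for x, y in samples:
            # Index of the nearest generator, i.e. the Voronoi cell of (x, y).
            i = min(range(len(gens)),
                    key=lambda j: (x - gens[j][0]) ** 2 + (y - gens[j][1]) ** 2)
            sums[i][0] += x
            sums[i][1] += y
            sums[i][2] += 1
        # Empty cells keep their previous generator.
        gens = [(sx / n, sy / n) if n else g
                for (sx, sy, n), g in zip(sums, gens)]
    return gens


rng = random.Random(0)
# Hypothetical obstacle: a square block in the middle of the workspace.
is_free = lambda p: not (0.4 < p[0] < 0.6 and 0.4 < p[1] < 0.6)
samples = sample_free_space(2000, is_free, rng)
centers = lloyd_cvt(samples[:8], samples)
for c in centers:
    print(round(c[0], 3), round(c[1], 3))
```

Note that a centroid of a cell that wraps around an obstacle can itself fall inside the obstacle, so a practical generator of obstacle-free components would need an additional check or projection step.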

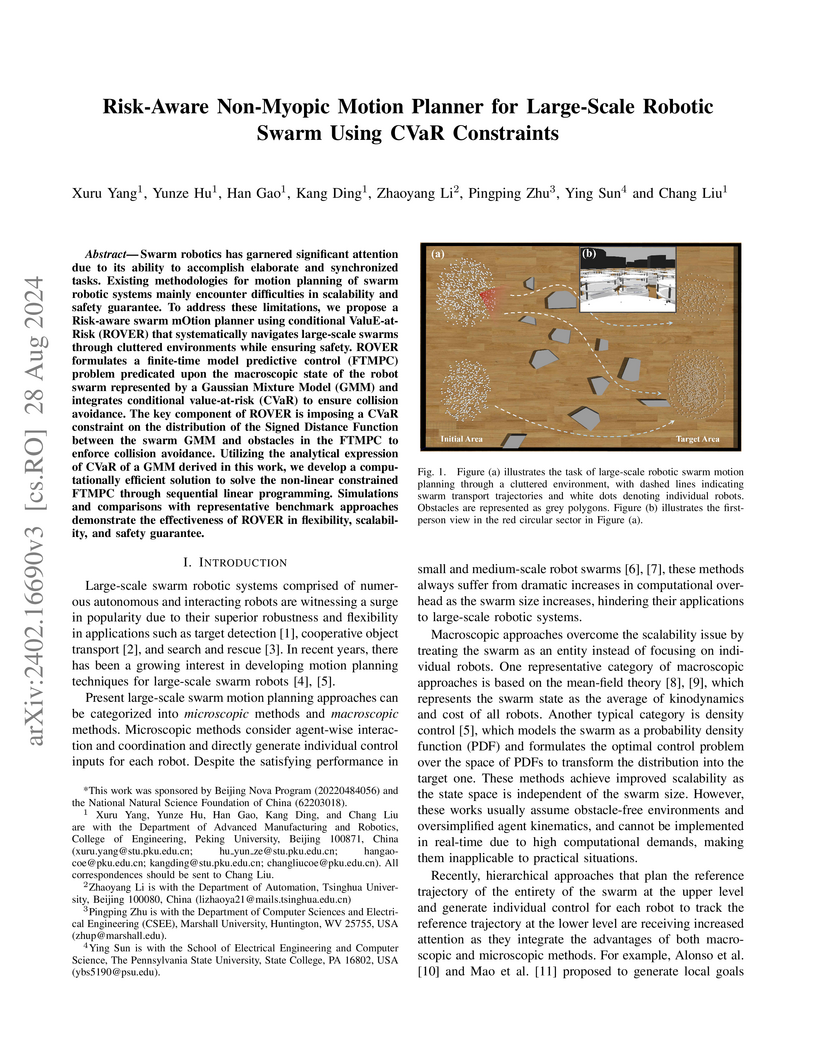

28 Aug 2024

Swarm robotics has garnered significant attention due to its ability to accomplish elaborate and synchronized tasks. Existing methodologies for motion planning of swarm robotic systems mainly encounter difficulties in scalability and safety guarantee. To address these limitations, we propose a Risk-aware swarm mOtion planner using conditional ValuE at Risk (ROVER) that systematically navigates large-scale swarms through cluttered environments while ensuring safety. ROVER formulates a finite-time model predictive control (FTMPC) problem predicated upon the macroscopic state of the robot swarm represented by a Gaussian Mixture Model (GMM) and integrates conditional value-at-risk (CVaR) to ensure collision avoidance. The key component of ROVER is imposing a CVaR constraint on the distribution of the Signed Distance Function between the swarm GMM and obstacles in the FTMPC to enforce collision avoidance. Utilizing the analytical expression of CVaR of a GMM derived in this work, we develop a computationally efficient solution to solve the non-linear constrained FTMPC through sequential linear programming. Simulations and comparisons with representative benchmark approaches demonstrate the effectiveness of ROVER in flexibility, scalability, and risk mitigation.

23 Jul 2020

We show that RT(2,4) cannot be proved with one typical application of RT(2,2) in an intuitionistic extension of RCA₀ to higher types, but that this does not remain true when the law of the excluded middle is added. The argument uses Kohlenbach's axiomatization of higher order reverse mathematics, results related to modified reducibility, and a formalization of Weihrauch reducibility.

11 Jun 2025

A mathematical model for forecasting of public sentiments via the Mean Field Games theory is proposed. A numerical method is developed. This is a version of the so-called convexification method. Convergence analysis demonstrates the global convergence of this method. Convergence rate is established. Numerical experiments demonstrate both an accurate performance of the convexification technique and some promising features of this approach.

06 Apr 2025

It is proposed to monitor spatial and temporal spreads of epidemics via solution of a Coefficient Inverse Problem for a system of three coupled nonlinear parabolic equations. To solve this problem numerically, a version of the so-called Carleman contraction mapping method is developed. On each iteration, a linear problem with incomplete lateral Cauchy data is solved by the weighted Quasi-Reversibility Method, where the weight is the Carleman Weight Function, that is, the function that serves as the weight in the Carleman estimate for the corresponding parabolic operator. Convergence analysis ensures the global convergence of this procedure. Numerical results demonstrate an accurate performance of this technique for noisy data.

14 Sep 2023

Inferential decision-making algorithms typically assume that an underlying probabilistic model of decision alternatives and outcomes may be learned a priori or online. Furthermore, when applied to robots in real-world settings they often perform unsatisfactorily or fail to accomplish the necessary tasks because this assumption is violated and/or they experience unanticipated external pressures and constraints. Cognitive studies presented in this and other papers show that humans cope with complex and unknown settings by modulating between near-optimal and satisficing solutions, including heuristics, by leveraging information value of available environmental cues that are possibly redundant. Using the benchmark inferential decision problem known as "treasure hunt", this paper develops a general approach for investigating and modeling active perception solutions under pressure. By simulating treasure hunt problems in virtual worlds, our approach learns generalizable strategies from high performers that, when applied to robots, allow them to modulate between optimal and heuristic solutions on the basis of external pressures and probabilistic models, if and when available. The result is a suite of active perception algorithms for camera-equipped robots that outperform treasure-hunt solutions obtained via cell decomposition, information roadmap, and information potential algorithms, in both high-fidelity numerical simulations and physical experiments. The effectiveness of the new active perception strategies is demonstrated under a broad range of unanticipated conditions that cause existing algorithms to fail to complete the search for treasures, such as unmodelled time constraints, resource constraints, and adverse weather (fog).

We present GiRaFFE, the first open-source general relativistic force-free electrodynamics (GRFFE) code for dynamical, numerical-relativity generated spacetimes. GiRaFFE adopts the strategy pioneered by McKinney and modified by Paschalidis and Shapiro to convert a GR magnetohydrodynamic (GRMHD) code into a GRFFE code. In short, GiRaFFE exists as a modification of IllinoisGRMHD, a user-friendly, open-source, dynamical-spacetime GRMHD code. Both GiRaFFE and IllinoisGRMHD leverage the Einstein Toolkit's highly-scalable infrastructure to make possible large-scale simulations of magnetized plasmas in strong, dynamical spacetimes on adaptive-mesh refinement (AMR) grids. We demonstrate that GiRaFFE passes a large suite of both flat and curved-spacetime code tests passed by a number of other state-of-the-art GRFFE codes, and is thus ready for production-scale simulations of GRFFE phenomena of key interest to relativistic astrophysics.

Detecting cancer manually in whole slide images is a laborious process that requires significant time and effort. Recent advances in whole slide image analysis have stimulated the growth and development of machine learning-based approaches that improve the efficiency and effectiveness of cancer diagnosis. In this paper, we propose an unsupervised learning approach for detecting cancer in breast invasive carcinoma (BRCA) whole slide images. The proposed method is fully automated and does not require human involvement during the unsupervised learning procedure. We demonstrate the effectiveness of the proposed approach for cancer detection in BRCA and show how the machine can choose the most appropriate clusters during the unsupervised learning procedure. Moreover, we present a prototype application that enables users to select relevant groups, mapping all regions related to those groups in whole slide images.

Information-driven control can be used to develop intelligent sensors that can optimize their measurement value based on environmental feedback. In object tracking applications, sensor actions are chosen based on the expected reduction in uncertainty also known as information gain. Random finite set (RFS) theory provides a formalism for quantifying and estimating information gain in multi-object tracking problems. However, estimating information gain in these applications remains computationally challenging. This paper presents a new tractable approximation of the RFS expected information gain applicable to sensor control for multi-object search and tracking. Unlike existing RFS approaches, the information gain approximation presented in this paper considers the contributions of non-ideal noisy measurements, missed detections, false alarms, and object appearance/disappearance. The effectiveness of the information-driven sensor control is demonstrated through two multi-vehicle search-while-tracking experiments using real video data from remote terrestrial and satellite sensors.
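The idea of choosing sensor actions by expected reduction in uncertainty can be illustrated with a deliberately simple case: a single cell that either contains an object or not, observed by a binary detector with a detection probability and a false-alarm probability. This is only a toy stand-in for the RFS formulation in the abstract, which handles multiple objects and appearance/disappearance. The function names and the single-cell model are assumptions.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def expected_information_gain(prior, p_detect, p_false_alarm):
    """Expected entropy reduction about object presence from one measurement.

    prior:         probability that the object is present
    p_detect:      P(detection | present); below 1 models missed detections
    p_false_alarm: P(detection | absent)
    """
    p_hit = p_detect * prior + p_false_alarm * (1.0 - prior)
    p_miss = 1.0 - p_hit
    gain = binary_entropy(prior)
    if p_hit > 0.0:
        gain -= p_hit * binary_entropy(p_detect * prior / p_hit)
    if p_miss > 0.0:
        gain -= p_miss * binary_entropy((1.0 - p_detect) * prior / p_miss)
    return gain


# Compare two hypothetical sensor modes looking at the same uncertain cell.
print(expected_information_gain(0.5, 0.9, 0.05))  # sharper mode
print(expected_information_gain(0.5, 0.6, 0.30))  # noisier mode
```

A sensor controller in this toy setting would pick the action with the larger expected gain. Missed detections and false alarms both shrink that gain, which is why the abstract stresses accounting for them.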
