Michigan State University

Michigan State UniversityAmit Kumar Bhuyan and Hrishikesh Dutta's review from Michigan State University offers a comprehensive, holistic overview of quantum communication, detailing its theoretical foundations, practical protocols, and network architectures. The work synthesizes advancements across the field, underscoring quantum entanglement as the driving force behind secure and efficient information exchange in quantum networks.

Temporal Point Processes (TPPs) hold a pivotal role in modeling event

sequences across diverse domains, including social networking and e-commerce,

and have significantly contributed to the advancement of recommendation systems

and information retrieval strategies. Through the analysis of events such as

user interactions and transactions, TPPs offer valuable insights into

behavioral patterns, facilitating the prediction of future trends. However,

accurately forecasting future events remains a formidable challenge due to the

intricate nature of these patterns. The integration of Neural Networks with

TPPs has ushered in the development of advanced deep TPP models. While these

models excel at processing complex and nonlinear temporal data, they encounter

limitations in modeling intensity functions, grapple with computational

complexities in integral computations, and struggle to capture long-range

temporal dependencies effectively. In this study, we introduce the CuFun model,

representing a novel approach to TPPs that revolves around the Cumulative

Distribution Function (CDF). CuFun stands out by uniquely employing a monotonic

neural network for CDF representation, utilizing past events as a scaling

factor. This innovation significantly bolsters the model's adaptability and

precision across a wide range of data scenarios. Our approach addresses several

critical issues inherent in traditional TPP modeling: it simplifies

log-likelihood calculations, extends applicability beyond predefined density

function forms, and adeptly captures long-range temporal patterns. Our

contributions encompass the introduction of a pioneering CDF-based TPP model,

the development of a methodology for incorporating past event information into

future event prediction, and empirical validation of CuFun's effectiveness

through extensive experimentation on synthetic and real-world datasets.

A comprehensive survey details the field of Retrieval-Augmented Generation with Graphs (GraphRAG), proposing a unified framework for integrating graph-structured data into RAG systems and specializing its application across ten distinct domains, providing a structured understanding of current techniques and future research directions.

Nonnegative Matrix Factorization (NMF) is an important unsupervised learning method to extract meaningful features from data. To address the NMF problem within a polynomial time framework, researchers have introduced a separability assumption, which has recently evolved into the concept of coseparability. This advancement offers a more efficient core representation for the original data. However, in the real world, the data is more natural to be represented as a multi-dimensional array, such as images or videos. The NMF's application to high-dimensional data involves vectorization, which risks losing essential multi-dimensional correlations. To retain these inherent correlations in the data, we turn to tensors (multidimensional arrays) and leverage the tensor t-product. This approach extends the coseparable NMF to the tensor setting, creating what we term coseparable Nonnegative Tensor Factorization (NTF). In this work, we provide an alternating index selection method to select the coseparable core. Furthermore, we validate the t-CUR sampling theory and integrate it with the tensor Discrete Empirical Interpolation Method (t-DEIM) to introduce an alternative, randomized index selection process. These methods have been tested on both synthetic and facial analysis datasets. The results demonstrate the efficiency of coseparable NTF when compared to coseparable NMF.

30 Sep 2024

Michigan State University

Michigan State University University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of California, Santa Barbara

University of California, Santa Barbara Harvard University

Harvard University UCLA

UCLA Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame University of Southern California

University of Southern California UC Berkeley

UC Berkeley Georgia Institute of Technology

Georgia Institute of Technology Stanford UniversityIllinois Institute of Technology

Stanford UniversityIllinois Institute of Technology Texas A&M University

Texas A&M University Yale University

Yale University Northwestern University

Northwestern University University of Georgia

University of Georgia Microsoft

Microsoft Columbia UniversityLehigh UniversityUniversity of Illinois Chicago

Columbia UniversityLehigh UniversityUniversity of Illinois Chicago Johns Hopkins University

Johns Hopkins University University of Maryland

University of Maryland University of Wisconsin-MadisonMassachusetts General Hospital

University of Wisconsin-MadisonMassachusetts General Hospital Mohamed bin Zayed University of Artificial IntelligenceSalesforce ResearchInstitut Polytechnique de Paris

Mohamed bin Zayed University of Artificial IntelligenceSalesforce ResearchInstitut Polytechnique de Paris Duke University

Duke University Virginia TechWilliam & MaryFlorida International UniversityUNC-Chapel HillCISPALawrence Livermore National LaboratorySamsungIBM Research AIDrexel UniversityUniversity of Tennessee, Knoxville

Virginia TechWilliam & MaryFlorida International UniversityUNC-Chapel HillCISPALawrence Livermore National LaboratorySamsungIBM Research AIDrexel UniversityUniversity of Tennessee, KnoxvilleThe TRUSTLLM framework and benchmark offer a comprehensive system for evaluating the trustworthiness of large language models across six key dimensions. This work reveals that while proprietary models generally exhibit higher trustworthiness, open-source models can also achieve strong performance in specific areas, highlighting challenges like 'over-alignment' and data leakage.

Michigan State University

Michigan State University University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of GeorgiaLehigh University

University of GeorgiaLehigh University The University of Hong Kong

The University of Hong Kong Huazhong University of Science and TechnologySalesforce ResearchUniversity of Illinois at Chicago

Huazhong University of Science and TechnologySalesforce ResearchUniversity of Illinois at Chicago Duke UniversityJilin University

Duke UniversityJilin University Southern University of Science and TechnologyWorcester Polytechnic InstituteLinkedIn CorporationSquirrel Ai Learning

Southern University of Science and TechnologyWorcester Polytechnic InstituteLinkedIn CorporationSquirrel Ai LearningThis survey offers the first comprehensive review of Post-training Language Models (PoLMs), systematically classifying methods, datasets, and applications within a novel intellectual framework. It traces the evolution of LLMs across five core paradigms—Fine-tuning, Alignment, Reasoning, Efficiency, and Integration & Adaptation—and identifies critical future research directions.

Researchers from Penn State, in collaboration with industry partners, provide the first comprehensive survey of Reinforcement Learning-based agentic search, systematically organizing its foundational concepts, functional roles, optimization strategies, and applications. This work clarifies the interplay between RL and agentic LLMs, delineating current capabilities, evaluation methods, and critical future research directions.

SVD-LLM introduces a post-training compression method for Large Language Models that employs a truncation-aware Singular Value Decomposition and a sequential parameter update strategy to maintain accuracy at high compression ratios. The method achieves significant perplexity reduction and inference efficiency, outperforming existing SVD-based, pruning, and 1-bit quantization approaches while also enabling simultaneous model and KV cache compression.

Researchers from Snap Inc. and Michigan State University systematically investigated the model scaling behaviors of two generative recommendation paradigms: Semantic ID-based GR and LLM-as-RS. Their findings reveal that SID-based GR suffers from fundamental bottlenecks preventing effective scaling, while LLM-as-RS demonstrates superior and consistent performance improvements with increased model size, achieving up to 20% better Recall@5 than SID-based GR.

24 Oct 2025

Researchers developed MINJA, a method for memory injection attacks on Large Language Model (LLM) agents through query-only interaction, without requiring direct memory access or modification of victim queries. This approach successfully compromises agents' long-term memory, achieving an average injection success rate of 98.2% and an attack success rate of 76.8% across various agents, LLMs, and tasks, and demonstrated resilience against common defense strategies.

10 Oct 2025

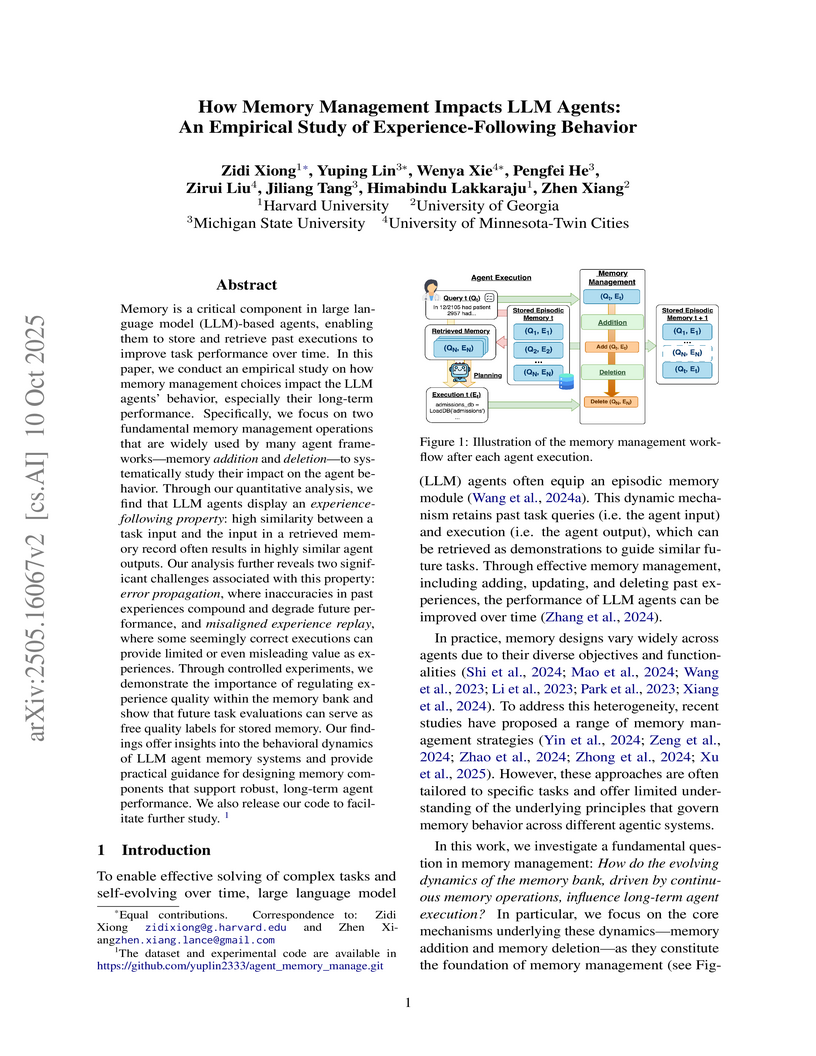

An empirical study clarifies the impact of memory addition and deletion on the long-term performance of Large Language Model (LLM) agents, identifying the "experience-following property" and demonstrating how reliable trajectory evaluators are essential for managing self-generated, noisy experiences to achieve consistent self-improvement.

This survey paper systematically reviews and categorizes a wide array of techniques designed to enhance the efficiency of Large Language Models (LLMs), addressing their substantial computational and memory demands. It establishes a three-tiered taxonomy that encompasses model-centric, data-centric, and framework-level approaches, providing a comprehensive resource for researchers and practitioners.

17 Oct 2025

This research systematically evaluates Retrieval-Augmented Generation (RAG) and various GraphRAG methods on text-based question answering and summarization tasks using established benchmarks. It provides empirical evidence differentiating their strengths, showing RAG excels at direct factual recall while specific GraphRAG variants perform better on multi-hop reasoning, and demonstrates improved performance with hybrid strategies.

SA LUN (Saliency Unlearning) introduces a principled framework that uses gradient-based weight saliency to selectively update model parameters, achieving efficient and stable machine unlearning in both image classification and image generation tasks. It consistently reduces the performance gap with exact retraining and effectively prevents generative models from creating undesirable content.

We explore machine unlearning (MU) in the domain of large language models (LLMs), referred to as LLM unlearning. This initiative aims to eliminate undesirable data influence (e.g., sensitive or illegal information) and the associated model capabilities, while maintaining the integrity of essential knowledge generation and not affecting causally unrelated information. We envision LLM unlearning becoming a pivotal element in the life-cycle management of LLMs, potentially standing as an essential foundation for developing generative AI that is not only safe, secure, and trustworthy, but also resource-efficient without the need of full retraining. We navigate the unlearning landscape in LLMs from conceptual formulation, methodologies, metrics, and applications. In particular, we highlight the often-overlooked aspects of existing LLM unlearning research, e.g., unlearning scope, data-model interaction, and multifaceted efficacy assessment. We also draw connections between LLM unlearning and related areas such as model editing, influence functions, model explanation, adversarial training, and reinforcement learning. Furthermore, we outline an effective assessment framework for LLM unlearning and explore its applications in copyright and privacy safeguards and sociotechnical harm reduction.

05 Aug 2025

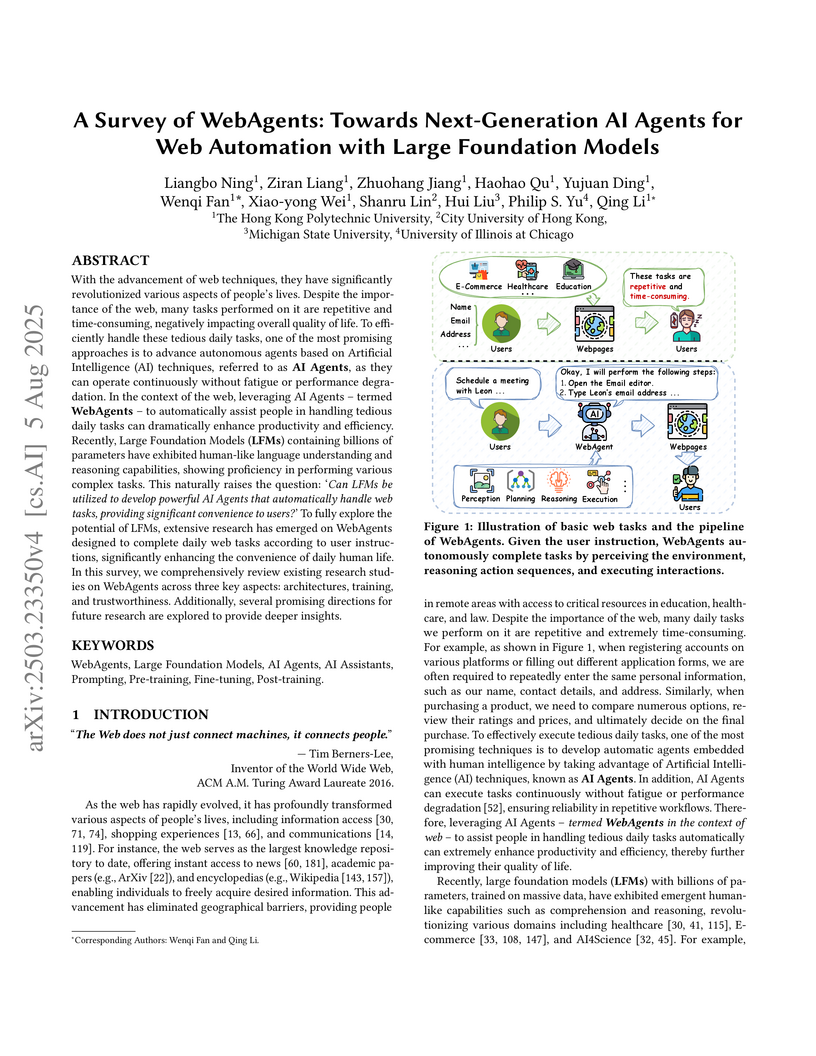

A comprehensive survey categorizes existing research on LFM-empowered WebAgents by analyzing their architectures, training methodologies, and trustworthiness dimensions. It consolidates fragmented knowledge, highlighting advancements in web task automation and identifying critical gaps in areas like safety, privacy, and generalizability.

Recommender systems have become an essential component of many online platforms, providing personalized recommendations to users. A crucial aspect is embedding techniques that convert the high-dimensional discrete features, such as user and item IDs, into low-dimensional continuous vectors, which can enhance the recommendation performance. Embedding techniques have revolutionized the capture of complex entity relationships, generating significant research interest. This survey presents a comprehensive analysis of recent advances in recommender system embedding techniques. We examine centralized embedding approaches across matrix, sequential, and graph structures. In matrix-based scenarios, collaborative filtering generates embeddings that effectively model user-item preferences, particularly in sparse data environments. For sequential data, we explore various approaches including recurrent neural networks and self-supervised methods such as contrastive and generative learning. In graph-structured contexts, we analyze techniques like node2vec that leverage network relationships, along with applicable self-supervised methods. Our survey addresses critical scalability challenges in embedding methods and explores innovative directions in recommender systems. We introduce emerging approaches, including AutoML, hashing techniques, and quantization methods, to enhance performance while reducing computational complexity. Additionally, we examine the promising role of Large Language Models (LLMs) in embedding enhancement. Through detailed discussion of various architectures and methodologies, this survey aims to provide a thorough overview of state-of-the-art embedding techniques in recommender systems, while highlighting key challenges and future research directions.

A new evaluation protocol assesses Vision-Language Models' spatial understanding, revealing that current models predominantly rely on English-centric egocentric perspectives for spatial reasoning and lack robustness and flexibility in adopting alternative Frames of Reference.

The LLM unlearning technique has recently been introduced to comply with data

regulations and address the safety and ethical concerns of LLMs by removing the

undesired data-model influence. However, state-of-the-art unlearning methods

face a critical vulnerability: they are susceptible to ``relearning'' the

removed information from a small number of forget data points, known as

relearning attacks. In this paper, we systematically investigate how to make

unlearned models robust against such attacks. For the first time, we establish

a connection between robust unlearning and sharpness-aware minimization (SAM)

through a unified robust optimization framework, in an analogy to adversarial

training designed to defend against adversarial attacks. Our analysis for SAM

reveals that smoothness optimization plays a pivotal role in mitigating

relearning attacks. Thus, we further explore diverse smoothing strategies to

enhance unlearning robustness. Extensive experiments on benchmark datasets,

including WMDP and MUSE, demonstrate that SAM and other smoothness optimization

approaches consistently improve the resistance of LLM unlearning to relearning

attacks. Notably, smoothness-enhanced unlearning also helps defend against

(input-level) jailbreaking attacks, broadening our proposal's impact in

robustifying LLM unlearning. Codes are available at

this https URL

A comprehensive survey reviews Vision-and-Language Navigation (VLN) research, detailing the integration of foundation models like LLMs and VLMs into VLN systems and outlining the resulting progress, opportunities, and challenges in the field. The paper highlights how these models enhance multimodal comprehension, reasoning, and generalization across various VLN tasks, particularly through improved world modeling, human instruction interpretation, and agent planning capabilities.

There are no more papers matching your filters at the moment.