National University of Mongolia

Current deep learning models for electroencephalography (EEG) are often task-specific and depend on large labeled datasets, limiting their adaptability. Although emerging foundation models aim for broader applicability, their rigid dependence on fixed, high-density multi-channel montages restricts their use across heterogeneous datasets and in missing-channel or practical low-channel settings. To address these limitations, we introduce SingLEM, a self-supervised foundation model that learns robust, general-purpose representations from single-channel EEG, making it inherently hardware agnostic. The model employs a hybrid encoder architecture that combines convolutional layers to extract local features with a hierarchical transformer to model both short- and long-range temporal dependencies. SingLEM is pretrained on 71 public datasets comprising over 9,200 subjects and 357,000 single-channel hours of EEG. When evaluated as a fixed feature extractor across six motor imagery and cognitive tasks, aggregated single-channel representations consistently outperformed leading multi-channel foundation models and handcrafted baselines. These results demonstrate that a single-channel approach can achieve state-of-the-art generalization while enabling fine-grained neurophysiological analysis and enhancing interpretability. The source code and pretrained models are available at this https URL.

ETH ZurichIdiap Research InstituteIT University of Copenhagen

ETH ZurichIdiap Research InstituteIT University of Copenhagen Aalborg University

Aalborg University EPFLJilin UniversityLondon School of Economics and Political ScienceUniversity of TrentoNational University of MongoliaAmrita Vishwa VidyapeethamFBKInstituto Potosino de Investigación Científica y TecnológicaUniversidad Católica “Nuestra Señora de la Asunción”

EPFLJilin UniversityLondon School of Economics and Political ScienceUniversity of TrentoNational University of MongoliaAmrita Vishwa VidyapeethamFBKInstituto Potosino de Investigación Científica y TecnológicaUniversidad Católica “Nuestra Señora de la Asunción”Understanding everyday life behavior of young adults through personal

devices, e.g., smartphones and smartwatches, is key for various applications,

from enhancing the user experience in mobile apps to enabling appropriate

interventions in digital health apps. Towards this goal, previous studies have

relied on datasets combining passive sensor data with human-provided

annotations or self-reports. However, many existing datasets are limited in

scope, often focusing on specific countries primarily in the Global North,

involving a small number of participants, or using a limited range of

pre-processed sensors. These limitations restrict the ability to capture

cross-country variations of human behavior, including the possibility of

studying model generalization, and robustness. To address this gap, we

introduce DiversityOne, a dataset which spans eight countries (China, Denmark,

India, Italy, Mexico, Mongolia, Paraguay, and the United Kingdom) and includes

data from 782 college students over four weeks. DiversityOne contains data from

26 smartphone sensor modalities and 350K+ self-reports. As of today, it is one

of the largest and most diverse publicly available datasets, while featuring

extensive demographic and psychosocial survey data. DiversityOne opens the

possibility of studying important research problems in ubiquitous computing,

particularly in domain adaptation and generalization across countries, all

research areas so far largely underexplored because of the lack of adequate

datasets.

University of Washington

University of Washington University of Amsterdam

University of Amsterdam University of Cambridge

University of Cambridge Carnegie Mellon University

Carnegie Mellon University New York UniversityIT University of Copenhagen

New York UniversityIT University of Copenhagen MetaUniversity of Edinburgh

MetaUniversity of Edinburgh ETH Zürich

ETH Zürich HKUSTUniversity of AberdeenÉcole Normale Supérieure - PSLNational University of MongoliaAmazon Alexa AIAllen Institute of AI

HKUSTUniversity of AberdeenÉcole Normale Supérieure - PSLNational University of MongoliaAmazon Alexa AIAllen Institute of AIThe ability to generalise well is one of the primary desiderata of natural

language processing (NLP). Yet, what 'good generalisation' entails and how it

should be evaluated is not well understood, nor are there any evaluation

standards for generalisation. In this paper, we lay the groundwork to address

both of these issues. We present a taxonomy for characterising and

understanding generalisation research in NLP. Our taxonomy is based on an

extensive literature review of generalisation research, and contains five axes

along which studies can differ: their main motivation, the type of

generalisation they investigate, the type of data shift they consider, the

source of this data shift, and the locus of the shift within the modelling

pipeline. We use our taxonomy to classify over 400 papers that test

generalisation, for a total of more than 600 individual experiments.

Considering the results of this review, we present an in-depth analysis that

maps out the current state of generalisation research in NLP, and we make

recommendations for which areas might deserve attention in the future. Along

with this paper, we release a webpage where the results of our review can be

dynamically explored, and which we intend to update as new NLP generalisation

studies are published. With this work, we aim to take steps towards making

state-of-the-art generalisation testing the new status quo in NLP.

14 Oct 2018

Controlling magnetism of transition metal atoms by pairing with π electronic states of graphene is intriguing. Herein, through first - principle computation we explore the possibility of switching magnetization by forming the tetrahedral sp3 - metallic d hybrid bonds. Graphene multilayers capped by single - layer cobalt atoms can transform into the sp3 - bonded diamond films upon the hydrogenation of the bottom surface. While the conversion is favored by hybridization between the sp3 dangling bonds and metallic dz2 states, such a strong hybridization can lead to the reorientation of magnetization easy axis of cobalt adatoms in plane to perpendicular. The further investigations identify that this anisotropic magnetization even can be modulated upon the change in charge carrier density, suggesting the possibility of an electric - field control of magnetization reorientation. These results provide a novel alternative that would represent tailoring magnetism by means of degree of the interlayer hybrid bonds in the layered materials.

02 Dec 2025

This paper provides a detailed expository and computational account of the elementary methods developed by P. L. Chebyshev and J. J. Sylvester to establish explicit bounds on the prime counting function. The core of the method involves replacing the Möbius function with a finitely supported arithmetic function in the convolution identities, relating the Chebyshev function psi(x) to the summatory logarithm function T(x) = log([x]!). We present a comprehensive analysis of the various schemes proposed by Chebyshev and Sylvester, with a central focus on Sylvester's innovative iterative refinement procedure. By implementing this procedure computationally, we replicate, verify, and optimize the historical results, providing a self-contained pedagogical resource for this pivotal technique in analytic number theory.

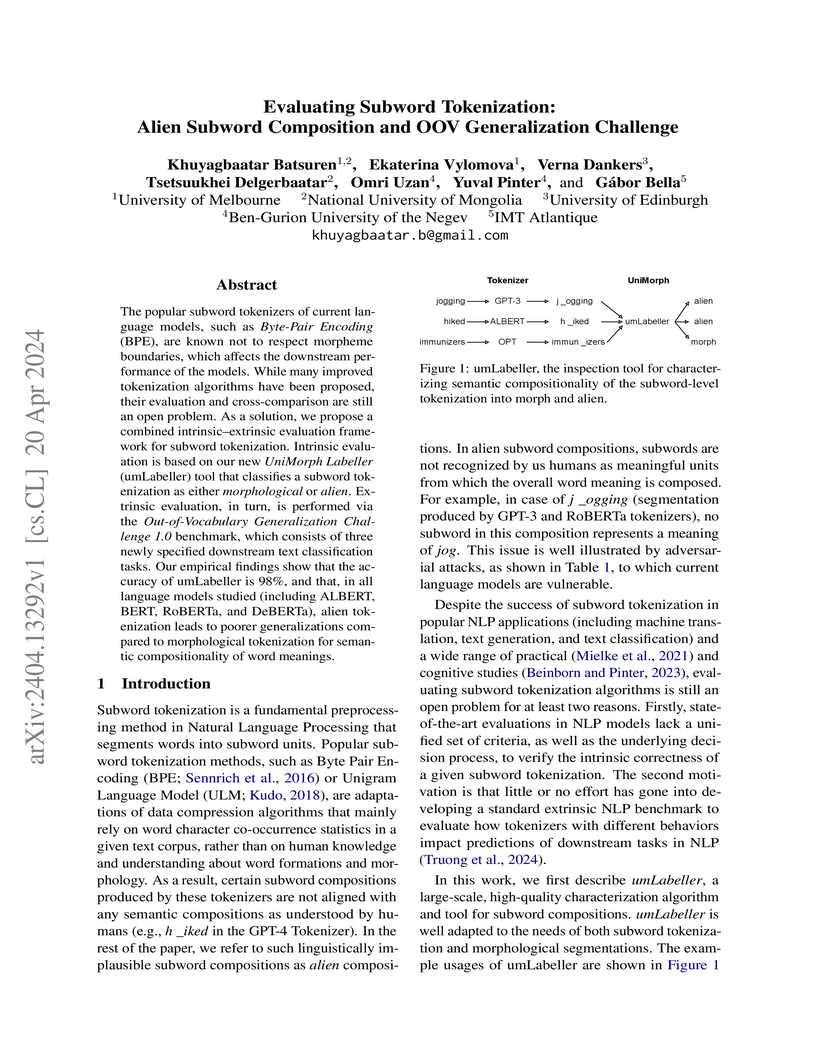

The popular subword tokenizers of current language models, such as Byte-Pair

Encoding (BPE), are known not to respect morpheme boundaries, which affects the

downstream performance of the models. While many improved tokenization

algorithms have been proposed, their evaluation and cross-comparison is still

an open problem. As a solution, we propose a combined intrinsic-extrinsic

evaluation framework for subword tokenization. Intrinsic evaluation is based on

our new UniMorph Labeller tool that classifies subword tokenization as either

morphological or alien. Extrinsic evaluation, in turn, is performed via the

Out-of-Vocabulary Generalization Challenge 1.0 benchmark, which consists of

three newly specified downstream text classification tasks. Our empirical

findings show that the accuracy of UniMorph Labeller is 98%, and that, in all

language models studied (including ALBERT, BERT, RoBERTa, and DeBERTa), alien

tokenization leads to poorer generalizations compared to morphological

tokenization for semantic compositionality of word meanings.

26 Sep 2024

This study introduces marginal density functions of the general Bayesian

Markov-Switching Vector Autoregressive (MS-VAR) process. In the case of the

Bayesian MS-VAR process, we provide closed-form density functions and

Monte-Carlo simulation algorithms, including the importance sampling method.

The Monte-Carlo simulation method departs from the previous simulation methods

because it removes the duplication in a regime vector.

Recent advancements in medical image analysis have predominantly relied on Convolutional Neural Networks (CNNs), achieving impressive performance in chest X-ray classification tasks, such as the 92% AUC reported by AutoThorax-Net and the 88% AUC achieved by ChexNet in classifcation tasks. However, in the medical field, even small improvements in accuracy can have significant clinical implications. This study explores the application of Vision Transformers (ViT), a state-of-the-art architecture in machine learning, to chest X-ray analysis, aiming to push the boundaries of diagnostic accuracy. I present a comparative analysis of two ViT-based approaches: one utilizing full chest X-ray images and another focusing on segmented lung regions. Experiments demonstrate that both methods surpass the performance of traditional CNN-based models, with the full-image ViT achieving up to 97.83% accuracy and the lung-segmented ViT reaching 96.58% accuracy in classifcation of diseases on three label and AUC of 94.54% when label numbers are increased to eight. Notably, the full-image approach showed superior performance across all metrics, including precision, recall, F1 score, and AUC-ROC. These findings suggest that Vision Transformers can effectively capture relevant features from chest X-rays without the need for explicit lung segmentation, potentially simplifying the preprocessing pipeline while maintaining high accuracy. This research contributes to the growing body of evidence supporting the efficacy of transformer-based architectures in medical image analysis and highlights their potential to enhance diagnostic precision in clinical settings.

13 Aug 2013

A serious threat today is malicious executables. It is designed to damage

computer system and some of them spread over network without the knowledge of

the owner using the system. Two approaches have been derived for it i.e.

Signature Based Detection and Heuristic Based Detection. These approaches

performed well against known malicious programs but cannot catch the new

malicious programs. Different researchers have proposed methods using data

mining and machine learning for detecting new malicious programs. The method

based on data mining and machine learning has shown good results compared to

other approaches. This work presents a static malware detection system using

data mining techniques such as Information Gain, Principal component analysis,

and three classifiers: SVM, J48, and Na\"ive Bayes. For overcoming the lack of

usual anti-virus products, we use methods of static analysis to extract

valuable features of Windows PE file. We extract raw features of Windows

executables which are PE header information, DLLs, and API functions inside

each DLL of Windows PE file. Thereafter, Information Gain, calling frequencies

of the raw features are calculated to select valuable subset features, and then

Principal Component Analysis is used for dimensionality reduction of the

selected features. By adopting the concepts of machine learning and

data-mining, we construct a static malware detection system which has a

detection rate of 99.6%.

20 Jun 2020

University of MississippiWuhan University University of Cambridge

University of Cambridge Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversityDESYSichuan University

Carnegie Mellon UniversityDESYSichuan University Beihang UniversityIndiana University

Beihang UniversityIndiana University Nanjing UniversityUniversity of BonnPanjab University

Nanjing UniversityUniversity of BonnPanjab University Zhejiang UniversityUniversity of Electronic Science and Technology of ChinaNankai University

Zhejiang UniversityUniversity of Electronic Science and Technology of ChinaNankai University Peking University

Peking University University of Minnesota

University of Minnesota Stockholm UniversityUniversity of TurinUppsala UniversityUniversity of RochesterGuangxi Normal UniversityJilin UniversityCentral China Normal University

Stockholm UniversityUniversity of TurinUppsala UniversityUniversity of RochesterGuangxi Normal UniversityJilin UniversityCentral China Normal University Shandong UniversityLanzhou UniversityIndian Institute of Technology MadrasXiangtan UniversitySoochow UniversityUniversity of South ChinaNanjing Normal UniversityHenan University of Science and TechnologyUniversity of PerugiaZhengzhou UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityUniversity of Hawai’iDonghua UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenGSI Helmholtz Centre for Heavy Ion ResearchCollege of William & MaryHelmholtz Institute MainzUniversity of the PunjabLiaoning UniversityHuazhong Normal UniversityNational University of MongoliaHuangshan CollegeFairfield UniversityUniversity of Science and Technology LiaoningKVI-CART, University of GroningenCOMSATS Institute of Information TechnologyG.N. Budker Institute of Nuclear PhysicsHelmholtz-Institut f¨ur Strahlen-und KernphysikINFN-Sezione di FerraraRuhr-University-Bochum

Shandong UniversityLanzhou UniversityIndian Institute of Technology MadrasXiangtan UniversitySoochow UniversityUniversity of South ChinaNanjing Normal UniversityHenan University of Science and TechnologyUniversity of PerugiaZhengzhou UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityUniversity of Hawai’iDonghua UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenGSI Helmholtz Centre for Heavy Ion ResearchCollege of William & MaryHelmholtz Institute MainzUniversity of the PunjabLiaoning UniversityHuazhong Normal UniversityNational University of MongoliaHuangshan CollegeFairfield UniversityUniversity of Science and Technology LiaoningKVI-CART, University of GroningenCOMSATS Institute of Information TechnologyG.N. Budker Institute of Nuclear PhysicsHelmholtz-Institut f¨ur Strahlen-und KernphysikINFN-Sezione di FerraraRuhr-University-Bochum

University of Cambridge

University of Cambridge Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversityDESYSichuan University

Carnegie Mellon UniversityDESYSichuan University Beihang UniversityIndiana University

Beihang UniversityIndiana University Nanjing UniversityUniversity of BonnPanjab University

Nanjing UniversityUniversity of BonnPanjab University Zhejiang UniversityUniversity of Electronic Science and Technology of ChinaNankai University

Zhejiang UniversityUniversity of Electronic Science and Technology of ChinaNankai University Peking University

Peking University University of Minnesota

University of Minnesota Stockholm UniversityUniversity of TurinUppsala UniversityUniversity of RochesterGuangxi Normal UniversityJilin UniversityCentral China Normal University

Stockholm UniversityUniversity of TurinUppsala UniversityUniversity of RochesterGuangxi Normal UniversityJilin UniversityCentral China Normal University Shandong UniversityLanzhou UniversityIndian Institute of Technology MadrasXiangtan UniversitySoochow UniversityUniversity of South ChinaNanjing Normal UniversityHenan University of Science and TechnologyUniversity of PerugiaZhengzhou UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityUniversity of Hawai’iDonghua UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenGSI Helmholtz Centre for Heavy Ion ResearchCollege of William & MaryHelmholtz Institute MainzUniversity of the PunjabLiaoning UniversityHuazhong Normal UniversityNational University of MongoliaHuangshan CollegeFairfield UniversityUniversity of Science and Technology LiaoningKVI-CART, University of GroningenCOMSATS Institute of Information TechnologyG.N. Budker Institute of Nuclear PhysicsHelmholtz-Institut f¨ur Strahlen-und KernphysikINFN-Sezione di FerraraRuhr-University-Bochum

Shandong UniversityLanzhou UniversityIndian Institute of Technology MadrasXiangtan UniversitySoochow UniversityUniversity of South ChinaNanjing Normal UniversityHenan University of Science and TechnologyUniversity of PerugiaZhengzhou UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityUniversity of Hawai’iDonghua UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenGSI Helmholtz Centre for Heavy Ion ResearchCollege of William & MaryHelmholtz Institute MainzUniversity of the PunjabLiaoning UniversityHuazhong Normal UniversityNational University of MongoliaHuangshan CollegeFairfield UniversityUniversity of Science and Technology LiaoningKVI-CART, University of GroningenCOMSATS Institute of Information TechnologyG.N. Budker Institute of Nuclear PhysicsHelmholtz-Institut f¨ur Strahlen-und KernphysikINFN-Sezione di FerraraRuhr-University-BochumThe processes $X(3872)\to D^{*0}\bar{D^{0}}+c.c.,~\gamma J/\psi,~\gamma

\psi(2S),and\gamma D^{+}D^{-}aresearchedforina9.0~\rm fb^{-1}$ data

sample collected at center-of-mass energies between 4.178 and 4.278 GeV

with the BESIII detector. We observe X(3872)→D∗0D0ˉ+c.c. and

find evidence for X(3872)→γJ/ψ with statistical significances of

7.4σ and 3.5σ, respectively. No evident signals for

X(3872)→γψ(2S) and γD+D− are found, and upper limit

on the relative branching ratio $R_{\gamma \psi}

\equiv\frac{\mathcal{B}(X(3872)\to\gamma\psi(2S))}{\mathcal{B}(X(3872)\to\gamma

J/\psi)}<0.59issetat90\%$ confidence level. Measurements of branching

ratios relative to decay X(3872)→π+π−J/ψ are also reported for

decays X(3872)→D∗0D0ˉ+c.c., γψ(2S), γJ/ψ,

γD+D−, as well as the non-D∗0Dˉ0 three-body decays

π0D0Dˉ0 and γD0Dˉ0.

15 May 2021

We calculated the infrared conductivity spectrum of orbitally ordered

LaMnO3 in phonon frequency and overtone frequency ranges. We considered

orbital exchange, Jahn-Teller electron-phonon coupling, and phonon--phonon

coupling. The fundamental excitation of the phonon-coupled orbiton was only

Raman active, not infrared active, while its overtone modes were both Raman and

infrared active. Our calculations reproduced the small peaks near 1300

cm−1 observed both in Raman scattering and infrared conductivity spectra,

as consistent with previous experimental results.

University of Cambridge

University of Cambridge Carnegie Mellon University

Carnegie Mellon University New York UniversityIndiana UniversityUniversity of MelbourneUniversity of Edinburgh

New York UniversityIndiana UniversityUniversity of MelbourneUniversity of Edinburgh ETH Zürich

ETH Zürich University of British Columbia

University of British Columbia KU Leuven

KU Leuven Johns Hopkins University

Johns Hopkins University Stony Brook UniversityUniversity of HelsinkiUniversity of Liverpool

Stony Brook UniversityUniversity of HelsinkiUniversity of Liverpool Australian National UniversityUniversity of LouisvilleUniversity of Colorado BoulderBoston CollegeUniversity at BuffaloGeorge Mason UniversityUniversity of Trento

Australian National UniversityUniversity of LouisvilleUniversity of Colorado BoulderBoston CollegeUniversity at BuffaloGeorge Mason UniversityUniversity of Trento University of GroningenHigher School of EconomicsBen-Gurion University of the NegevUniversity of OregonUniversity of ZürichGraduate Center, City University of New YorkMoscow State UniversityBar Ilan UniversityCharles Darwin UniversityUniversity of GothenburgUniversity of YorkGeorgetown UniversityUniversitas IndonesiaInstitute of Computer Science, Polish Academy of SciencesPontificia Universidad Católica del PerúNational University of MongoliaSwarthmore CollegeDharmsinh Desai UniversityNortheastern Illinois UniversityDr. Bhimrao Ambedkar UniversityBrian Leonard ConsultingMila/McGill University MontrealInstitute of Linguistics, Russian Academy of SciencesSTKIP WeetebulaKarelian Research Centre of the Russian Academy of SciencesTuvan State UniversityESRC International Centre for Language and Communicative Development(LuCiD)Institute for System Programming, Russian Academy of SciencesUniversidad Católica Sedes Sapientiae, Filial AtalayaInstitute of Philology of the Siberian Branch of the Russian Academy of SciencesILSP/Athena RC

University of GroningenHigher School of EconomicsBen-Gurion University of the NegevUniversity of OregonUniversity of ZürichGraduate Center, City University of New YorkMoscow State UniversityBar Ilan UniversityCharles Darwin UniversityUniversity of GothenburgUniversity of YorkGeorgetown UniversityUniversitas IndonesiaInstitute of Computer Science, Polish Academy of SciencesPontificia Universidad Católica del PerúNational University of MongoliaSwarthmore CollegeDharmsinh Desai UniversityNortheastern Illinois UniversityDr. Bhimrao Ambedkar UniversityBrian Leonard ConsultingMila/McGill University MontrealInstitute of Linguistics, Russian Academy of SciencesSTKIP WeetebulaKarelian Research Centre of the Russian Academy of SciencesTuvan State UniversityESRC International Centre for Language and Communicative Development(LuCiD)Institute for System Programming, Russian Academy of SciencesUniversidad Católica Sedes Sapientiae, Filial AtalayaInstitute of Philology of the Siberian Branch of the Russian Academy of SciencesILSP/Athena RCThe Universal Morphology (UniMorph) project is a collaborative effort

providing broad-coverage instantiated normalized morphological inflection

tables for hundreds of diverse world languages. The project comprises two major

thrusts: a language-independent feature schema for rich morphological

annotation and a type-level resource of annotated data in diverse languages

realizing that schema. This paper presents the expansions and improvements made

on several fronts over the last couple of years (since McCarthy et al. (2020)).

Collaborative efforts by numerous linguists have added 67 new languages,

including 30 endangered languages. We have implemented several improvements to

the extraction pipeline to tackle some issues, e.g. missing gender and macron

information. We have also amended the schema to use a hierarchical structure

that is needed for morphological phenomena like multiple-argument agreement and

case stacking, while adding some missing morphological features to make the

schema more inclusive. In light of the last UniMorph release, we also augmented

the database with morpheme segmentation for 16 languages. Lastly, this new

release makes a push towards inclusion of derivational morphology in UniMorph

by enriching the data and annotation schema with instances representing

derivational processes from MorphyNet.

In NLP, models are usually evaluated by reporting single-number performance

scores on a number of readily available benchmarks, without much deeper

analysis. Here, we argue that - especially given the well-known fact that

benchmarks often contain biases, artefacts, and spurious correlations - deeper

results analysis should become the de-facto standard when presenting new models

or benchmarks. We present a tool that researchers can use to study properties

of the dataset and the influence of those properties on their models'

behaviour. Our Text Characterization Toolkit includes both an easy-to-use

annotation tool, as well as off-the-shelf scripts that can be used for specific

analyses. We also present use-cases from three different domains: we use the

tool to predict what are difficult examples for given well-known trained models

and identify (potentially harmful) biases and heuristics that are present in a

dataset.

21 Sep 2024

In this paper, we have studied option pricing methods that are based on a Bayesian Markov-Switching Vector Autoregressive (MS-BVAR) process using a risk-neutral valuation approach. A BVAR process, which is a special case of the Bayesian MS-VAR process is widely used to model interdependencies of economic variables and forecast economic variables. Here we assumed that a regime-switching process is generated by a homogeneous Markov process and a residual process follows a conditional heteroscedastic model. With a direct calculation and change of probability measure, for some frequently used options, we derived pricing formulas. An advantage of our model is it depends on economic variables and is easy to use compared to previous option pricing papers, which depend on regime-switching.

03 Aug 2023

In this study, we introduce new estimation methods for the required rate of

returns on equity and liabilities of private and public companies using the

stochastic dividend discount model (DDM). To estimate the required rate of

return on equity, we use the maximum likelihood method, the Bayesian method,

and the Kalman filtering. We also provide a method that evaluates the market

values of liabilities. We apply the model to a set of firms from the S\&P 500

index using historical dividend and price data over a 32--year period. Overall,

the suggested methods can be used to estimate the required rate of returns.

14 Mar 2023

South China University of TechnologyWuhan University of TechnologyWuhan UniversityNational Central University Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversityBudker Institute of Nuclear Physics SB RASSichuan University

Carnegie Mellon UniversityBudker Institute of Nuclear Physics SB RASSichuan University Sun Yat-Sen University

Sun Yat-Sen University University of ManchesterGyeongsang National University

University of ManchesterGyeongsang National University University of Science and Technology of China

University of Science and Technology of China Beihang University

Beihang University Nanjing UniversityUniversity of Electronic Science and Technology of ChinaUniversity of ReginaCentral South University

Nanjing UniversityUniversity of Electronic Science and Technology of ChinaUniversity of ReginaCentral South University Peking UniversityJoint Institute for Nuclear ResearchGuangdong University of TechnologySouth China Normal UniversityUppsala UniversityGuangxi Normal UniversityJilin UniversityUniversity of Science and Technology BeijingCentral China Normal University

Peking UniversityJoint Institute for Nuclear ResearchGuangdong University of TechnologySouth China Normal UniversityUppsala UniversityGuangxi Normal UniversityJilin UniversityUniversity of Science and Technology BeijingCentral China Normal University Shandong UniversityUniversity of SiegenLanzhou UniversityPaul Scherrer InstitutIndian Institute of Technology MadrasIowa State UniversityUniversity of South ChinaUniversity of JinanHunan University

Shandong UniversityUniversity of SiegenLanzhou UniversityPaul Scherrer InstitutIndian Institute of Technology MadrasIowa State UniversityUniversity of South ChinaUniversity of JinanHunan University University of GroningenNanjing Normal UniversityYantai UniversityGuangxi UniversityShanxi UniversityShandong University of TechnologyZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadJohannes Gutenberg University MainzHenan Normal UniversityNorth China Electric Power UniversityChiang Mai UniversityHenan Polytechnic UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute of Nuclear Physics, Polish Academy of SciencesHangzhou Normal UniversityHelmholtz Institute MainzLiaoning UniversityHuazhong Agricultural UniversityNational University of MongoliaDogus UniversityUniversity of GiessenLiaocheng UniversityINFN-Sezione di FrascatiNorthwestern University (China)INFN-Sezione di FerraraRuhr-University-Bochum

University of GroningenNanjing Normal UniversityYantai UniversityGuangxi UniversityShanxi UniversityShandong University of TechnologyZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadJohannes Gutenberg University MainzHenan Normal UniversityNorth China Electric Power UniversityChiang Mai UniversityHenan Polytechnic UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute of Nuclear Physics, Polish Academy of SciencesHangzhou Normal UniversityHelmholtz Institute MainzLiaoning UniversityHuazhong Agricultural UniversityNational University of MongoliaDogus UniversityUniversity of GiessenLiaocheng UniversityINFN-Sezione di FrascatiNorthwestern University (China)INFN-Sezione di FerraraRuhr-University-Bochum

Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversityBudker Institute of Nuclear Physics SB RASSichuan University

Carnegie Mellon UniversityBudker Institute of Nuclear Physics SB RASSichuan University Sun Yat-Sen University

Sun Yat-Sen University University of ManchesterGyeongsang National University

University of ManchesterGyeongsang National University University of Science and Technology of China

University of Science and Technology of China Beihang University

Beihang University Nanjing UniversityUniversity of Electronic Science and Technology of ChinaUniversity of ReginaCentral South University

Nanjing UniversityUniversity of Electronic Science and Technology of ChinaUniversity of ReginaCentral South University Peking UniversityJoint Institute for Nuclear ResearchGuangdong University of TechnologySouth China Normal UniversityUppsala UniversityGuangxi Normal UniversityJilin UniversityUniversity of Science and Technology BeijingCentral China Normal University

Peking UniversityJoint Institute for Nuclear ResearchGuangdong University of TechnologySouth China Normal UniversityUppsala UniversityGuangxi Normal UniversityJilin UniversityUniversity of Science and Technology BeijingCentral China Normal University Shandong UniversityUniversity of SiegenLanzhou UniversityPaul Scherrer InstitutIndian Institute of Technology MadrasIowa State UniversityUniversity of South ChinaUniversity of JinanHunan University

Shandong UniversityUniversity of SiegenLanzhou UniversityPaul Scherrer InstitutIndian Institute of Technology MadrasIowa State UniversityUniversity of South ChinaUniversity of JinanHunan University University of GroningenNanjing Normal UniversityYantai UniversityGuangxi UniversityShanxi UniversityShandong University of TechnologyZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadJohannes Gutenberg University MainzHenan Normal UniversityNorth China Electric Power UniversityChiang Mai UniversityHenan Polytechnic UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute of Nuclear Physics, Polish Academy of SciencesHangzhou Normal UniversityHelmholtz Institute MainzLiaoning UniversityHuazhong Agricultural UniversityNational University of MongoliaDogus UniversityUniversity of GiessenLiaocheng UniversityINFN-Sezione di FrascatiNorthwestern University (China)INFN-Sezione di FerraraRuhr-University-Bochum

University of GroningenNanjing Normal UniversityYantai UniversityGuangxi UniversityShanxi UniversityShandong University of TechnologyZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadJohannes Gutenberg University MainzHenan Normal UniversityNorth China Electric Power UniversityChiang Mai UniversityHenan Polytechnic UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute of Nuclear Physics, Polish Academy of SciencesHangzhou Normal UniversityHelmholtz Institute MainzLiaoning UniversityHuazhong Agricultural UniversityNational University of MongoliaDogus UniversityUniversity of GiessenLiaocheng UniversityINFN-Sezione di FrascatiNorthwestern University (China)INFN-Sezione di FerraraRuhr-University-BochumUsing initial-state radiation events from a total integrated luminosity of 11.957 fb−1 of e+e− collision data collected at center-of-mass energies between 3.773 and 4.258 GeV with the BESIII detector at BEPCII, the cross section for the process e+e−→ΛΛˉ is measured in 16 ΛΛˉ invariant mass intervals from the production threshold up to 3.00 GeV/c2. The results are consistent with previous results from BaBar and BESIII, but with better precision and with narrower ΛΛˉ invariant mass intervals than BaBar.

27 May 2024

Data reuse is fundamental for reducing the data integration effort required to build data supporting new applications, especially in data scarcity contexts. However, data reuse requires to deal with data heterogeneity, which is always present in data coming from different sources. Such heterogeneity appears at different levels, like the language used by the data, the structure of the information it represents, and the data types and formats adopted by the datasets. Despite the valuable insights gained by reusing data across contexts, dealing with data heterogeneity is still a high price to pay. Additionally, data reuse is hampered by the lack of data distribution infrastructures supporting the production and distribution of quality and interoperable data. These issues affecting data reuse are amplified considering cross-country data reuse, where geographical and cultural differences are more pronounced. In this paper, we propose LiveData, a cross-country data distribution network handling high quality and diversity-aware data. LiveData is composed by different nodes having an architecture providing components for the generation and distribution of a new type of data, where heterogeneity is transformed into information diversity and considered as a feature, explicitly defined and used to satisfy the data users purposes. This paper presents the specification of the LiveData network, by defining the architecture and the type of data handled by its nodes. This specification is currently being used to implement a concrete use case for data reuse and integration between the University of Trento (Italy) and the National University of Mongolia.

21 Sep 2024

In this paper, we developed the Merton's structural model for public

companies under an assumption that liabilities of the companies are observed.

Using Campbell and Shiller's approximation method, we obtain formulas of

risk-neutral equity and liability values and default probabilities for the

public companies. Also, the paper provides ML estimators of suggested model's

parameters.

21 Sep 2024

In this paper, we introduce a dynamic Gordon growth model, which is augmented by a time--varying spot interest rate and the Gordon growth model for dividends. Using the risk--neutral valuation method and locally risk--minimizing strategy, we obtain pricing and hedging formulas for the dividend--paying European call and put options and equity--linked life insurance products. Also, we provide ML estimator of the model.

Smartphones enable understanding human behavior with activity recognition to

support people's daily lives. Prior studies focused on using inertial sensors

to detect simple activities (sitting, walking, running, etc.) and were mostly

conducted in homogeneous populations within a country. However, people are more

sedentary in the post-pandemic world with the prevalence of remote/hybrid

work/study settings, making detecting simple activities less meaningful for

context-aware applications. Hence, the understanding of (i) how multimodal

smartphone sensors and machine learning models could be used to detect complex

daily activities that can better inform about people's daily lives and (ii) how

models generalize to unseen countries, is limited. We analyzed in-the-wild

smartphone data and over 216K self-reports from 637 college students in five

countries (Italy, Mongolia, UK, Denmark, Paraguay). Then, we defined a 12-class

complex daily activity recognition task and evaluated the performance with

different approaches. We found that even though the generic multi-country

approach provided an AUROC of 0.70, the country-specific approach performed

better with AUROC scores in [0.79-0.89]. We believe that research along the

lines of diversity awareness is fundamental for advancing human behavior

understanding through smartphones and machine learning, for more real-world

utility across countries.

There are no more papers matching your filters at the moment.