Nokia Technologies

Researchers from Tampere University and Nokia Technologies developed the Attractor-based Joint Diarization, Counting, and Separation System (A-DCSS), which addresses the challenge of separating speech from an unknown number of speakers, each contributing multiple non-contiguous utterances. The system achieved superior speech separation performance and high accuracy in speaker diarization and counting across various challenging acoustic conditions.

07 Feb 2024

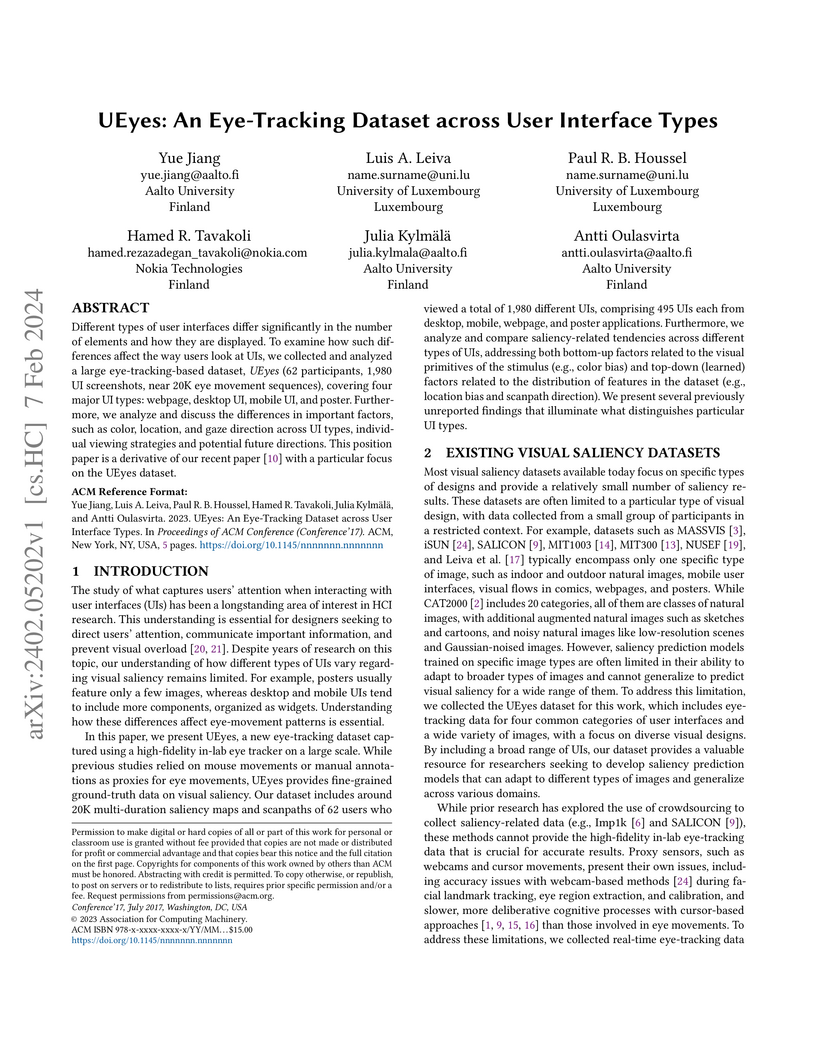

Different types of user interfaces differ significantly in the number of elements and how they are displayed. To examine how such differences affect the way users look at UIs, we collected and analyzed a large eye-tracking-based dataset, UEyes (62 participants, 1,980 UI screenshots, near 20K eye movement sequences), covering four major UI types: webpage, desktop UI, mobile UI, and poster. Furthermore, we analyze and discuss the differences in important factors, such as color, location, and gaze direction across UI types, individual viewing strategies and potential future directions. This position paper is a derivative of our recent paper with a particular focus on the UEyes dataset.

19 Mar 2016

This research from Eigenor Corporation, University of Warwick, Nokia Technologies, and University of Eastern Finland introduces Cauchy difference priors for edge-preserving Bayesian inversion, addressing the limitations of Gaussian and Total Variation priors. The method, applied to 1D deconvolution and 2D X-ray tomography, yields reconstructions with superior edge sharpness and demonstrates discretization-invariance, providing a robust approach to recovering piecewise smooth objects from noisy, indirect measurements.

19 Sep 2025

As wireless communication systems advance toward Sixth Generation (6G) Radio Access Networks (RAN), Deep Learning (DL)-based neural receivers are emerging as transformative solutions for Physical Layer (PHY) processing, delivering superior Block Error Rate (BLER) performance compared to traditional model-based approaches. Practical deployment on resource-constrained hardware, however, requires efficient quantization to reduce latency, energy, and memory without sacrificing reliability. In this paper, we extend Post-Training Quantization (PTQ) by focusing on Quantization-Aware Training (QAT), which incorporates low-precision simulation during training for robustness at ultra-low bitwidths. In particular, we develop a QAT methodology for a neural receiver architecture and benchmark it against a PTQ approach across diverse 3GPP Clustered Delay Line (CDL) channel profiles under both Line-of-Sight (LoS) and Non-LoS (NLoS) conditions, with user velocities up to 40 m/s. Results show that 4-bit and 8-bit QAT models achieve BLERs comparable to FP32 models at a 10% target BLER. Moreover, QAT models succeed in NLoS scenarios where PTQ models fail to reach the 10% BLER target, while also yielding an 8x compression. These results with respect to full-precision demonstrate that QAT is a key enabler of low-complexity and latency-constrained inference at the PHY layer, facilitating real-time processing in 6G edge devices.

We introduced a high-resolution equirectangular panorama (360-degree, virtual

reality) dataset for object detection and propose a multi-projection variant of

YOLO detector. The main challenge with equirectangular panorama image are i)

the lack of annotated training data, ii) high-resolution imagery and iii)

severe geometric distortions of objects near the panorama projection poles. In

this work, we solve the challenges by i) using training examples available in

the "conventional datasets" (ImageNet and COCO), ii) employing only

low-resolution images that require only moderate GPU computing power and

memory, and iii) our multi-projection YOLO handles projection distortions by

making multiple stereographic sub-projections. In our experiments, YOLO

outperforms the other state-of-art detector, Faster RCNN and our

multi-projection YOLO achieves the best accuracy with low-resolution input.

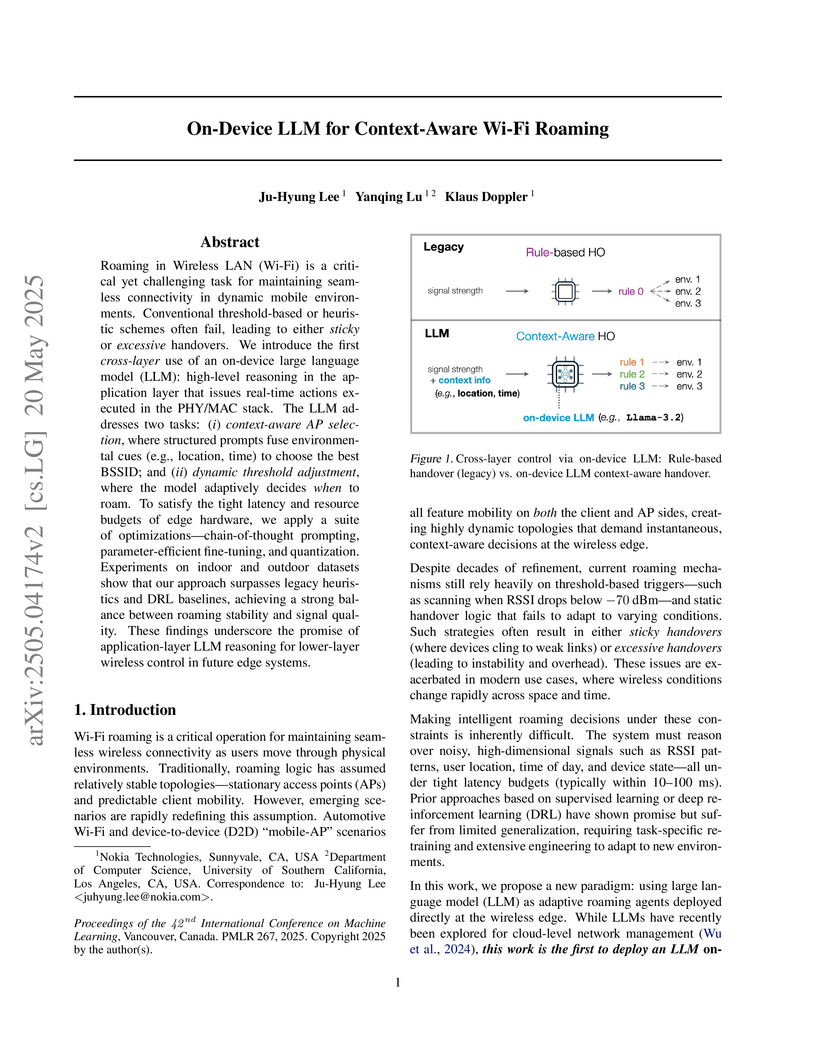

Researchers from Nokia Technologies developed an on-device Large Language Model (LLM) system to manage Wi-Fi roaming, demonstrating its ability to intelligently balance signal quality and handover frequency in dynamic environments. The optimized LLM-based solution outperformed traditional heuristics and Deep Reinforcement Learning baselines while proving feasible for deployment on consumer-grade edge devices.

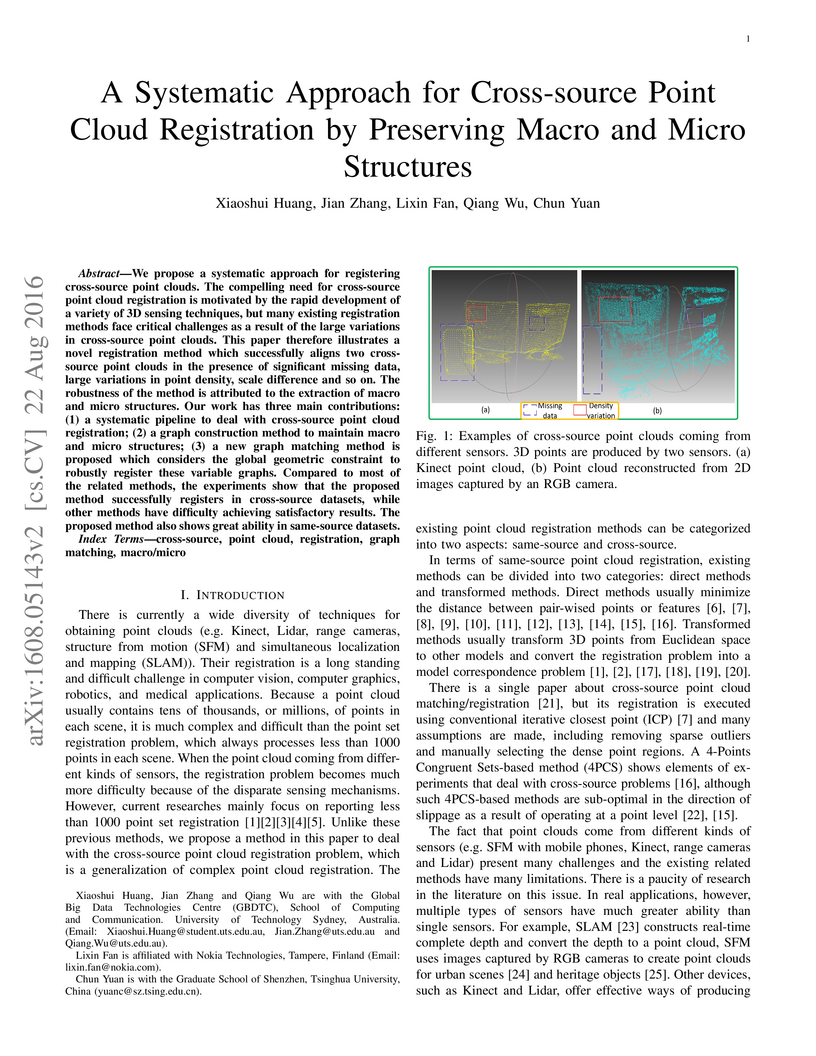

We propose a systematic approach for registering cross-source point clouds. The compelling need for cross-source point cloud registration is motivated by the rapid development of a variety of 3D sensing techniques, but many existing registration methods face critical challenges as a result of the large variations in cross-source point clouds. This paper therefore illustrates a novel registration method which successfully aligns two cross-source point clouds in the presence of significant missing data, large variations in point density, scale difference and so on. The robustness of the method is attributed to the extraction of macro and micro structures. Our work has three main contributions: (1) a systematic pipeline to deal with cross-source point cloud registration; (2) a graph construction method to maintain macro and micro structures; (3) a new graph matching method is proposed which considers the global geometric constraint to robustly register these variable graphs. Compared to most of the related methods, the experiments show that the proposed method successfully registers in cross-source datasets, while other methods have difficulty achieving satisfactory results. The proposed method also shows great ability in same-source datasets.

A deep neural network solution for time-scale modification (TSM) focused on large stretching factors is proposed, targeting environmental sounds. Traditional TSM artifacts such as transient smearing, loss of presence, and phasiness are heavily accentuated and cause poor audio quality when the TSM factor is four or larger. The weakness of established TSM methods, often based on a phase vocoder structure, lies in the poor description and scaling of the transient and noise components, or nuances, of a sound. Our novel solution combines a sines-transients-noise decomposition with an independent WaveNet synthesizer to provide a better description of the noise component and an improve sound quality for large stretching factors. Results of a subjective listening test against four other TSM algorithms are reported, showing the proposed method to be often superior. The proposed method is stereo compatible and has a wide range of applications related to the slow motion of media content.

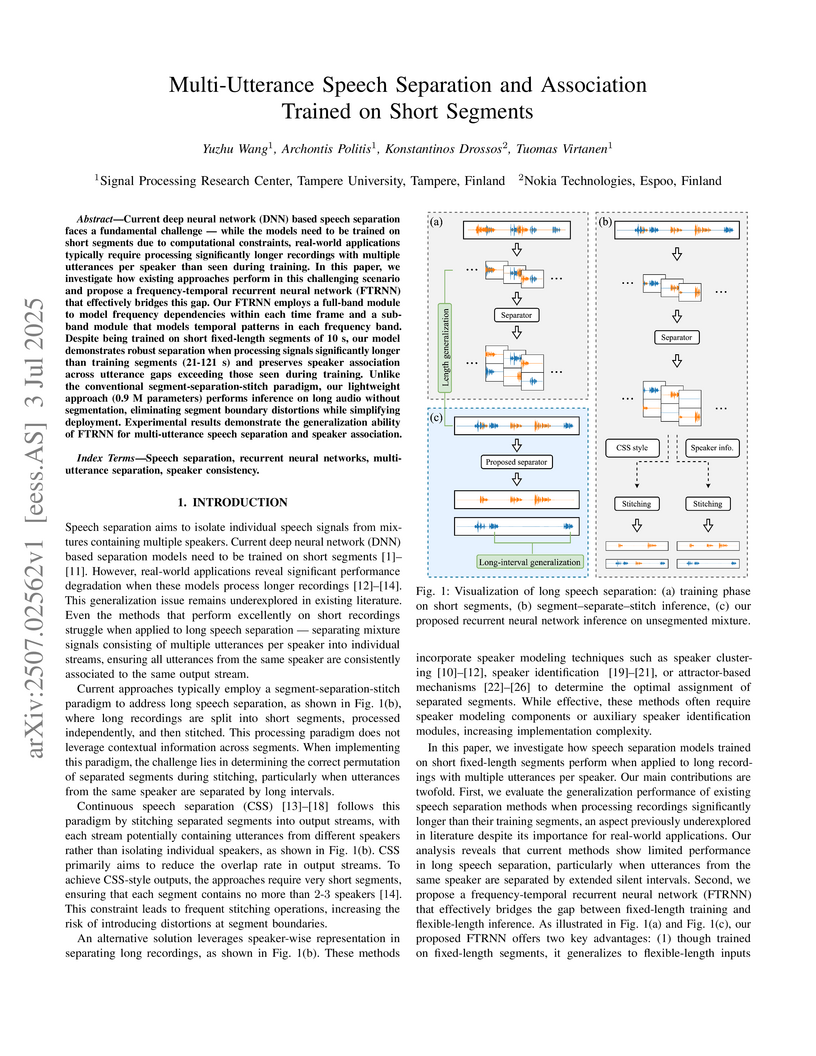

Researchers from Tampere University and Nokia Technologies developed a lightweight Frequency-Temporal Recurrent Neural Network (FTRNN) capable of directly performing multi-utterance speech separation on long audio recordings. The FTRNN, trained on short segments, maintains speaker identity across extended silent intervals and achieves 15.2 dB SI-SDR, outperforming conventional segment-stitch methods and demonstrating robustness to utterance gaps up to 40 seconds.

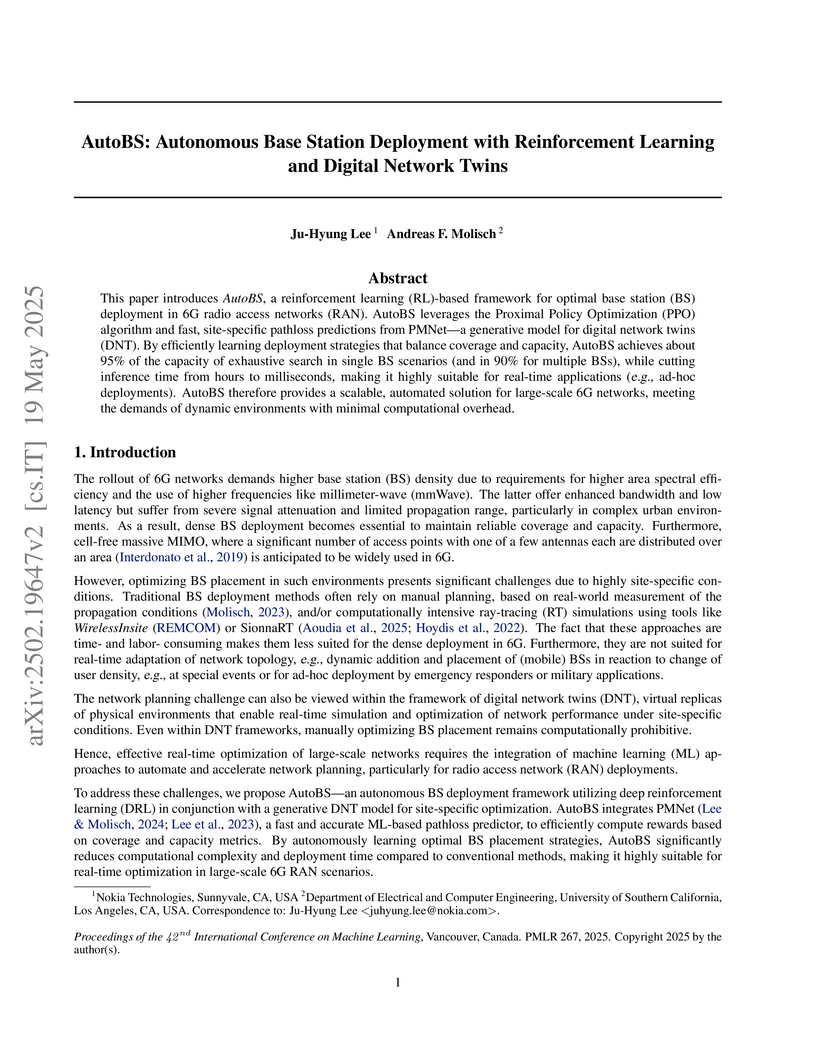

This paper introduces AutoBS, a reinforcement learning (RL)-based framework for optimal base station (BS) deployment in 6G radio access networks (RAN). AutoBS leverages the Proximal Policy Optimization (PPO) algorithm and fast, site-specific pathloss predictions from PMNet-a generative model for digital network twins (DNT). By efficiently learning deployment strategies that balance coverage and capacity, AutoBS achieves about 95% of the capacity of exhaustive search in single BS scenarios (and in 90% for multiple BSs), while cutting inference time from hours to milliseconds, making it highly suitable for real-time applications (e.g., ad-hoc deployments). AutoBS therefore provides a scalable, automated solution for large-scale 6G networks, meeting the demands of dynamic environments with minimal computational overhead.

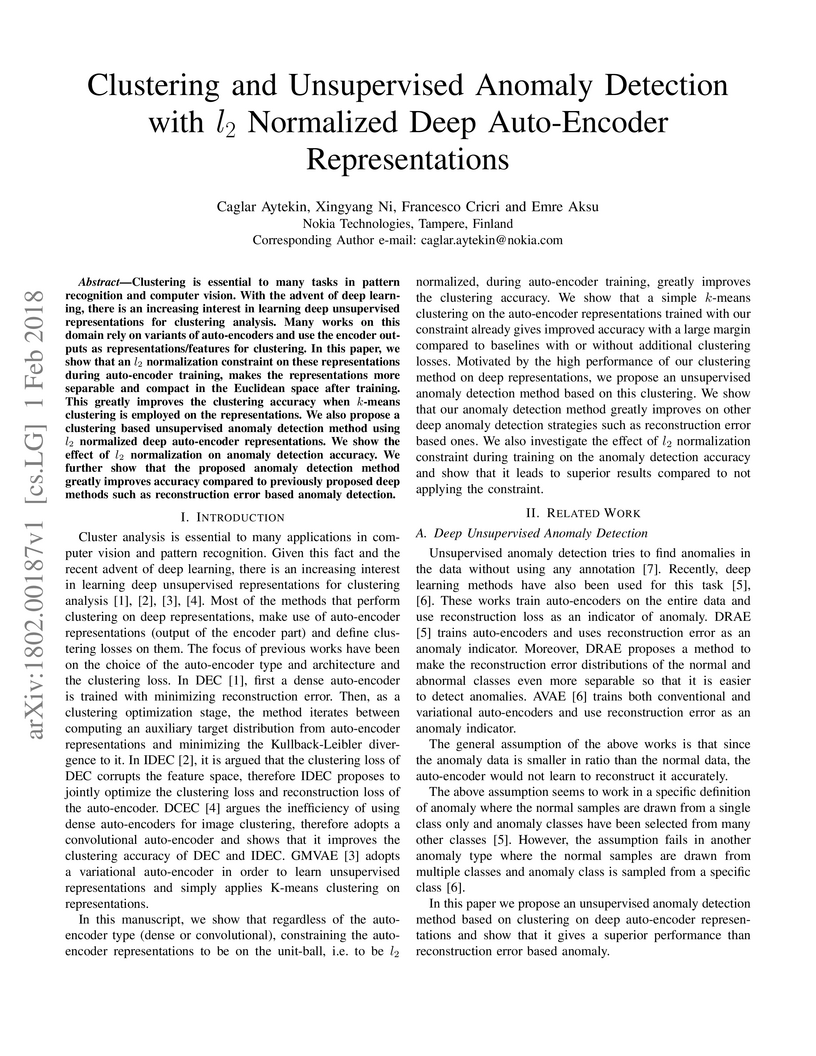

Clustering is essential to many tasks in pattern recognition and computer

vision. With the advent of deep learning, there is an increasing interest in

learning deep unsupervised representations for clustering analysis. Many works

on this domain rely on variants of auto-encoders and use the encoder outputs as

representations/features for clustering. In this paper, we show that an l2

normalization constraint on these representations during auto-encoder training,

makes the representations more separable and compact in the Euclidean space

after training. This greatly improves the clustering accuracy when k-means

clustering is employed on the representations. We also propose a clustering

based unsupervised anomaly detection method using l2 normalized deep

auto-encoder representations. We show the effect of l2 normalization on anomaly

detection accuracy. We further show that the proposed anomaly detection method

greatly improves accuracy compared to previously proposed deep methods such as

reconstruction error based anomaly detection.

14 Oct 2025

Deep learning-based neural receivers are redefining physical-layer signal processing for next-generation wireless systems. We propose an axial self-attention transformer neural receiver designed for applicability to 6G and beyond wireless systems, validated through 5G-compliant experimental configurations, that achieves state-of-the-art block error rate (BLER) performance with significantly improved computational efficiency. By factorizing attention operations along temporal and spectral axes, the proposed architecture reduces the quadratic complexity of conventional multi-head self-attention from O((TF)2) to O(T2F+TF2), yielding substantially fewer total floating-point operations and attention matrix multiplications per transformer block compared to global self-attention. Relative to convolutional neural receiver baselines, the axial neural receiver achieves significantly lower computational cost with a fraction of the parameters. Experimental validation under 3GPP Clustered Delay Line (CDL) channels demonstrates consistent performance gains across varying mobility scenarios. Under non-line-of-sight CDL-C conditions, the axial neural receiver consistently outperforms all evaluated receiver architectures, including global self-attention, convolutional neural receivers, and traditional LS-LMMSE at 10\% BLER with reduced computational complexity per inference. At stringent reliability targets of 1\% BLER, the axial receiver maintains robust symbol detection at high user speeds, whereas the traditional LS-LMMSE receiver fails to converge, underscoring its suitability for ultra-reliable low-latency (URLLC) communication in dynamic 6G environments and beyond. These results establish the axial neural receiver as a structured, scalable, and efficient framework for AI-Native 6G RAN systems, enabling deployment in resource-constrained edge environments.

Learning a data-driven spatio-temporal semantic representation of the objects

is the key to coherent and consistent labelling in video. This paper proposes

to achieve semantic video object segmentation by learning a data-driven

representation which captures the synergy of multiple instances from continuous

frames. To prune the noisy detections, we exploit the rich information among

multiple instances and select the discriminative and representative subset.

This selection process is formulated as a facility location problem solved by

maximising a submodular function. Our method retrieves the longer term

contextual dependencies which underpins a robust semantic video object

segmentation algorithm. We present extensive experiments on a challenging

dataset that demonstrate the superior performance of our approach compared with

the state-of-the-art methods.

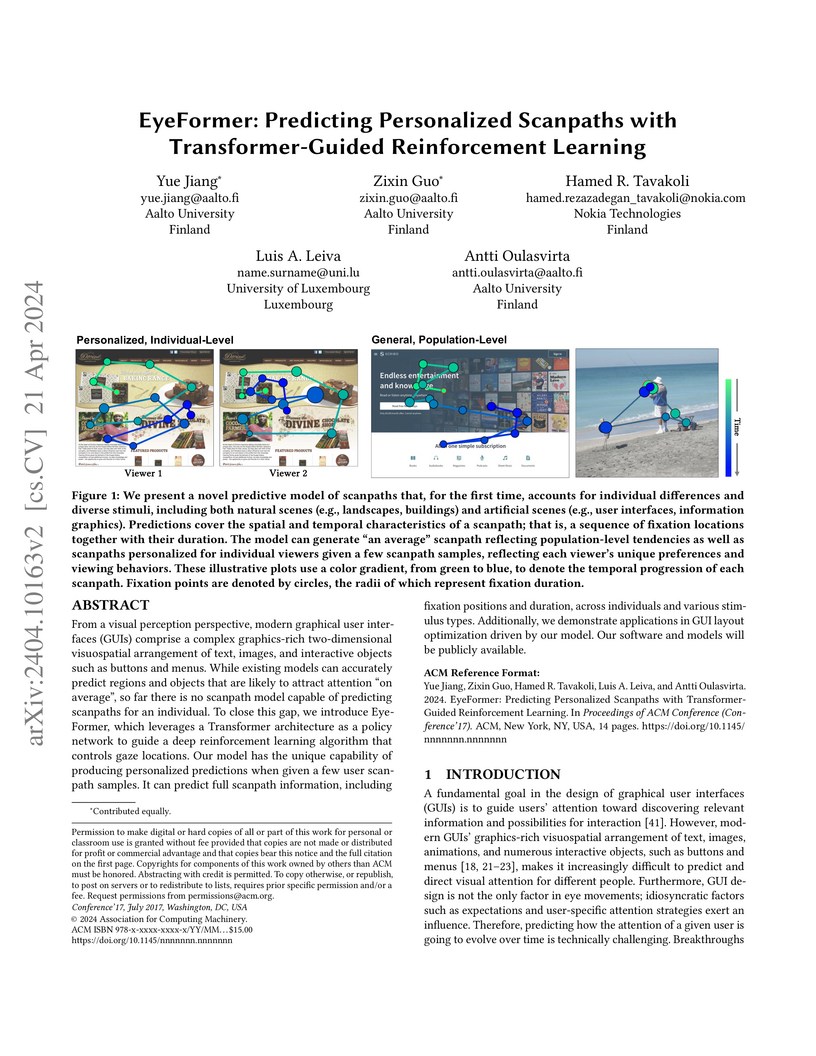

From a visual perception perspective, modern graphical user interfaces (GUIs) comprise a complex graphics-rich two-dimensional visuospatial arrangement of text, images, and interactive objects such as buttons and menus. While existing models can accurately predict regions and objects that are likely to attract attention ``on average'', so far there is no scanpath model capable of predicting scanpaths for an individual. To close this gap, we introduce EyeFormer, which leverages a Transformer architecture as a policy network to guide a deep reinforcement learning algorithm that controls gaze locations. Our model has the unique capability of producing personalized predictions when given a few user scanpath samples. It can predict full scanpath information, including fixation positions and duration, across individuals and various stimulus types. Additionally, we demonstrate applications in GUI layout optimization driven by our model. Our software and models will be publicly available.

Today, according to the Cisco Annual Internet Report (2018-2023), the fastest-growing category of Internet traffic is machine-to-machine communication. In particular, machine-to-machine communication of images and videos represents a new challenge and opens up new perspectives in the context of data compression. One possible solution approach consists of adapting current human-targeted image and video coding standards to the use case of machine consumption. Another approach consists of developing completely new compression paradigms and architectures for machine-to-machine communications. In this paper, we focus on image compression and present an inference-time content-adaptive finetuning scheme that optimizes the latent representation of an end-to-end learned image codec, aimed at improving the compression efficiency for machine-consumption. The conducted experiments show that our online finetuning brings an average bitrate saving (BD-rate) of -3.66% with respect to our pretrained image codec. In particular, at low bitrate points, our proposed method results in a significant bitrate saving of -9.85%. Overall, our pretrained-and-then-finetuned system achieves -30.54% BD-rate over the state-of-the-art image/video codec Versatile Video Coding (VVC).

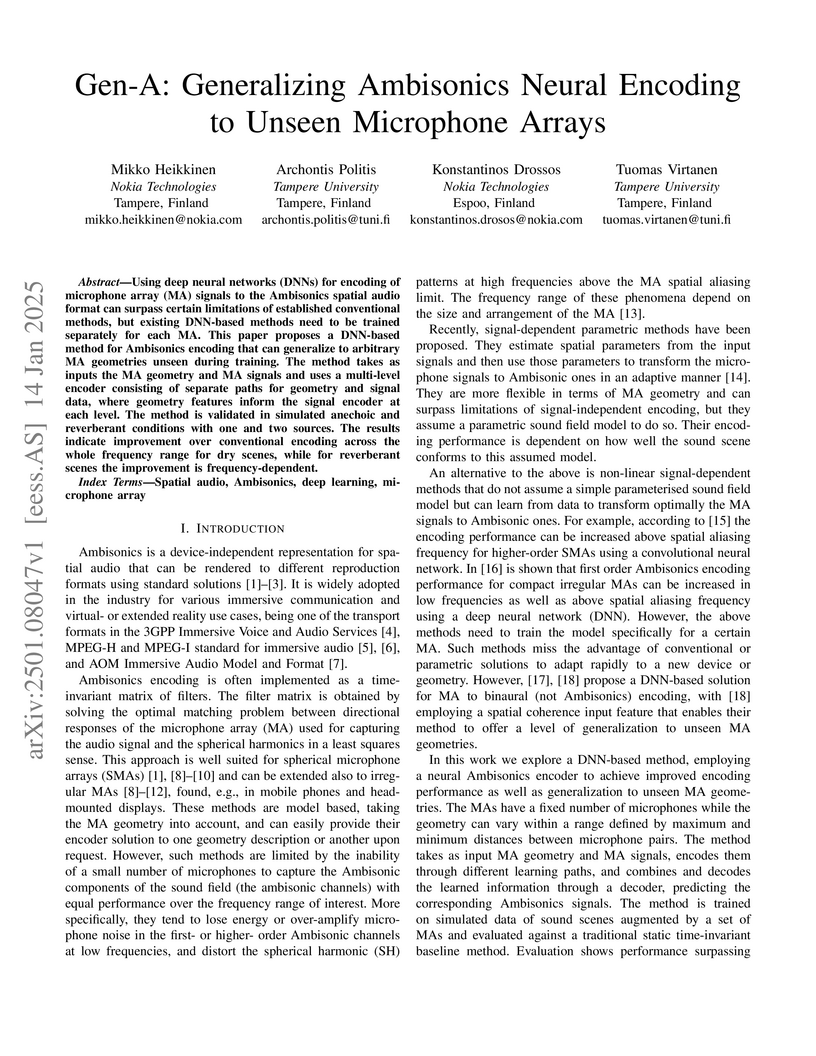

14 Jan 2025

Using deep neural networks (DNNs) for encoding of microphone array (MA) signals to the Ambisonics spatial audio format can surpass certain limitations of established conventional methods, but existing DNN-based methods need to be trained separately for each MA. This paper proposes a DNN-based method for Ambisonics encoding that can generalize to arbitrary MA geometries unseen during training. The method takes as inputs the MA geometry and MA signals and uses a multi-level encoder consisting of separate paths for geometry and signal data, where geometry features inform the signal encoder at each level. The method is validated in simulated anechoic and reverberant conditions with one and two sources. The results indicate improvement over conventional encoding across the whole frequency range for dry scenes, while for reverberant scenes the improvement is frequency-dependent.

Image coding for machines (ICM) aims at reducing the bitrate required to

represent an image while minimizing the drop in machine vision analysis

accuracy. In many use cases, such as surveillance, it is also important that

the visual quality is not drastically deteriorated by the compression process.

Recent works on using neural network (NN) based ICM codecs have shown

significant coding gains against traditional methods; however, the decompressed

images, especially at low bitrates, often contain checkerboard artifacts. We

propose an effective decoder finetuning scheme based on adversarial training to

significantly enhance the visual quality of ICM codecs, while preserving the

machine analysis accuracy, without adding extra bitcost or parameters at the

inference phase. The results show complete removal of the checkerboard

artifacts at the negligible cost of -1.6% relative change in task performance

score. In the cases where some amount of artifacts is tolerable, such as when

machine consumption is the primary target, this technique can enhance both

pixel-fidelity and feature-fidelity scores without losing task performance.

Ambisonics encoding of microphone array signals can enable various spatial

audio applications, such as virtual reality or telepresence, but it is

typically designed for uniformly-spaced spherical microphone arrays. This paper

proposes a method for Ambisonics encoding that uses a deep neural network (DNN)

to estimate a signal transform from microphone inputs to Ambisonics signals.

The approach uses a DNN consisting of a U-Net structure with a learnable

preprocessing as well as a loss function consisting of mean average error,

spatial correlation, and energy preservation components. The method is

validated on two microphone arrays with regular and irregular shapes having

four microphones, on simulated reverberant scenes with multiple sources. The

results of the validation show that the proposed method can meet or exceed the

performance of a conventional signal-independent Ambisonics encoder on a number

of error metrics.

We present the Video Ladder Network (VLN) for efficiently generating future

video frames. VLN is a neural encoder-decoder model augmented at all layers by

both recurrent and feedforward lateral connections. At each layer, these

connections form a lateral recurrent residual block, where the feedforward

connection represents a skip connection and the recurrent connection represents

the residual. Thanks to the recurrent connections, the decoder can exploit

temporal summaries generated from all layers of the encoder. This way, the top

layer is relieved from the pressure of modeling lower-level spatial and

temporal details. Furthermore, we extend the basic version of VLN to

incorporate ResNet-style residual blocks in the encoder and decoder, which help

improving the prediction results. VLN is trained in self-supervised regime on

the Moving MNIST dataset, achieving competitive results while having very

simple structure and providing fast inference.

17 Mar 2023

Collaborative writing is essential for teams that create documents together.

Creating documents in large-scale collaborations is a challenging task that

requires an efficient workflow. The design of such a workflow has received

comparatively little attention. Conventional solutions such as working on a

single Microsoft Word document or a shared online document are still widely

used. In this paper, we propose a new workflow consisting of a combination of

the lightweight markup language AsciiDoc together with the state-of-the-art

version control system Git. The proposed process makes use of well-established

workflows in the field of software development that have grown over decades. We

present a detailed comparison of the proposed markup + Git workflow to Word and

Word for the Web as the most prominent examples for conventional approaches.We

argue that the proposed approach provides significant benefits regarding

scalability, flexibility, and structuring of most collaborative writing tasks,

both in academia and industry.

There are no more papers matching your filters at the moment.