OMRON SINIC X Corporation

We formalize the generalization error bound based on the Rademacher complexity in the Lean 4 theorem prover, building on the probability theory in the Mathlib 4 library. Generalization error quantifies the gap between a learning machine's performance on given training data and on unseen test data, and the Rademacher complexity is a powerful tool for upper-bounding the generalization error in a variety of modern learning problems. Previous studies have formalized only extremely simple cases, such as bounds by parameter counts and analyses of very simple models (decision stumps). Formalizing the Rademacher complexity bound, also known as the uniform law of large numbers, requires substantial development and is achieved for the first time in this study. In the course of development, we formalize the Rademacher complexity and its characteristic arguments such as symmetrization, and clarify the topological assumptions on hypothesis classes under which the bound holds. As an application, we also present a formalization of the generalization error bound for L2-regularized models.
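For orientation, the quantities named above can be written in their standard textbook form. The notation here (a function class $\mathcal{F}$, a sample $S = (z_1, \dots, z_n)$, Rademacher signs $\sigma_i$, a confidence level $\delta$) is assumed for illustration, and the statement formalized in the paper may differ in constants and in the assumptions placed on $\mathcal{F}$.

```latex
% Empirical Rademacher complexity of F on S, with sigma_1, ..., sigma_n
% i.i.d. uniform on {-1, +1}, and its expectation over the sample:
\hat{\mathfrak{R}}_S(\mathcal{F})
  = \mathbb{E}_{\sigma}\left[ \sup_{f \in \mathcal{F}}
      \frac{1}{n} \sum_{i=1}^{n} \sigma_i f(z_i) \right],
\qquad
\mathfrak{R}_n(\mathcal{F})
  = \mathbb{E}_{S}\left[ \hat{\mathfrak{R}}_S(\mathcal{F}) \right].

% Symmetrization bounds the expected uniform deviation:
\mathbb{E}_{S}\left[ \sup_{f \in \mathcal{F}}
    \left( \mathbb{E}[f(z)] - \frac{1}{n} \sum_{i=1}^{n} f(z_i) \right) \right]
  \le 2\, \mathfrak{R}_n(\mathcal{F}).

% For f taking values in [0, 1], with probability at least 1 - delta:
\sup_{f \in \mathcal{F}}
    \left( \mathbb{E}[f(z)] - \frac{1}{n} \sum_{i=1}^{n} f(z_i) \right)
  \le 2\, \mathfrak{R}_n(\mathcal{F}) + \sqrt{\frac{\log(1/\delta)}{2n}}.
```

The last inequality follows from the symmetrization step combined with a bounded-differences concentration argument.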

11 Oct 2025

Reinforcement Learning has emerged as a dominant post-training approach to elicit agentic RAG behaviors such as search and planning from language models. Despite its success with larger models, applying RL to compact models (e.g., 0.5-1B parameters) presents unique challenges. The compact models exhibit poor initial performance, resulting in sparse rewards and unstable training. To overcome these difficulties, we propose Distillation-Guided Policy Optimization (DGPO), which employs cold-start initialization from teacher demonstrations and continuous teacher guidance during policy optimization. To understand how compact models preserve agentic behavior, we introduce Agentic RAG Capabilities (ARC), a fine-grained metric analyzing reasoning, search coordination, and response synthesis. Comprehensive experiments demonstrate that DGPO enables compact models to achieve sophisticated agentic search behaviors, even outperforming the larger teacher model in some cases. DGPO makes agentic RAG feasible in computing resource-constrained environments.

Assessing image-text alignment models such as CLIP is crucial for bridging visual and linguistic representations. Yet existing benchmarks rely on rule-based perturbations or short captions, limiting their ability to measure fine-grained alignment. We introduce AlignBench, a benchmark that provides a new indicator of image-text alignment by evaluating detailed image-caption pairs generated by diverse image-to-text and text-to-image models. Each sentence is annotated for correctness, enabling direct assessment of VLMs as alignment evaluators. Benchmarking a wide range of decoder-based VLMs reveals three key findings: (i) CLIP-based models, even those tailored for compositional reasoning, remain nearly blind; (ii) detectors systematically over-score early sentences; and (iii) they show strong self-preference, favoring their own outputs and harming detection performance. Our project page will be available at this https URL.

LeanConjecturer, developed by researchers from OMRON SINIC X, RIKEN AIP, and other Japanese institutions, is an automated system that generates mathematical conjectures in Lean 4 from existing formal libraries. It produced thousands of syntactically valid, novel, and non-trivial conjectures, expanding training data for large language models and improving the proof-finding capabilities of DeepSeek Prover-V2 on specific mathematical domains like topology.

This paper revisits datasets and evaluation criteria for Symbolic Regression (SR), specifically focused on its potential for scientific discovery. Focused on a set of formulas used in the existing datasets based on Feynman Lectures on Physics, we recreate 120 datasets to discuss the performance of symbolic regression for scientific discovery (SRSD). For each of the 120 SRSD datasets, we carefully review the properties of the formula and its variables to design reasonably realistic sampling ranges of values so that our new SRSD datasets can be used for evaluating the potential of SRSD such as whether or not an SR method can (re)discover physical laws from such datasets. We also create another 120 datasets that contain dummy variables to examine whether SR methods can choose necessary variables only. Besides, we propose to use normalized edit distances (NED) between a predicted equation and the true equation trees for addressing a critical issue that existing SR metrics are either binary or errors between the target values and an SR model's predicted values for a given input. We conduct benchmark experiments on our new SRSD datasets using various representative SR methods. The experimental results show that we provide a more realistic performance evaluation, and our user study shows that the NED correlates with human judges significantly more than an existing SR metric. We publish repositories of our code and 240 SRSD datasets.
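The idea of a normalized edit distance can be illustrated with a minimal sketch. Two simplifications are assumed here and are not taken from the abstract: equations are represented as preorder token lists and compared with a plain Levenshtein distance rather than a tree edit distance, and the normalization `min(1, d / |true|)` is an assumed form. The function names and the example equations are hypothetical.

```python
def levenshtein(a, b):
    """Edit distance between two token sequences (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x
                            curr[j - 1] + 1,      # insert y
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred_tokens, true_tokens):
    """Distance scaled by the size of the true equation and capped at 1 (assumed form)."""
    if not true_tokens:
        raise ValueError("true equation must contain at least one token")
    return min(1.0, levenshtein(pred_tokens, true_tokens) / len(true_tokens))


# Preorder token lists standing in for equation trees (hypothetical examples).
true_eq = ["mul", "m", "a"]   # m * a
close_eq = ["mul", "m", "g"]  # one wrong symbol
far_eq = ["add", "x", "y", "z", "w"]

ned_close = normalized_edit_distance(close_eq, true_eq)
ned_far = normalized_edit_distance(far_eq, ["m"])
```

Unlike a binary "recovered or not" score, a prediction that is wrong in a single symbol receives partial credit here, while a structurally unrelated one saturates at 1.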

Researchers from OMRON SINIC X Corporation and Waseda University introduce SciPostLayout, the first public dataset of 7,855 scientific posters with detailed layout annotations, including 100 paper-poster pairs. This dataset enables systematic research into automated scientific poster generation and analysis, providing a crucial resource for the field and establishing baselines for future development.

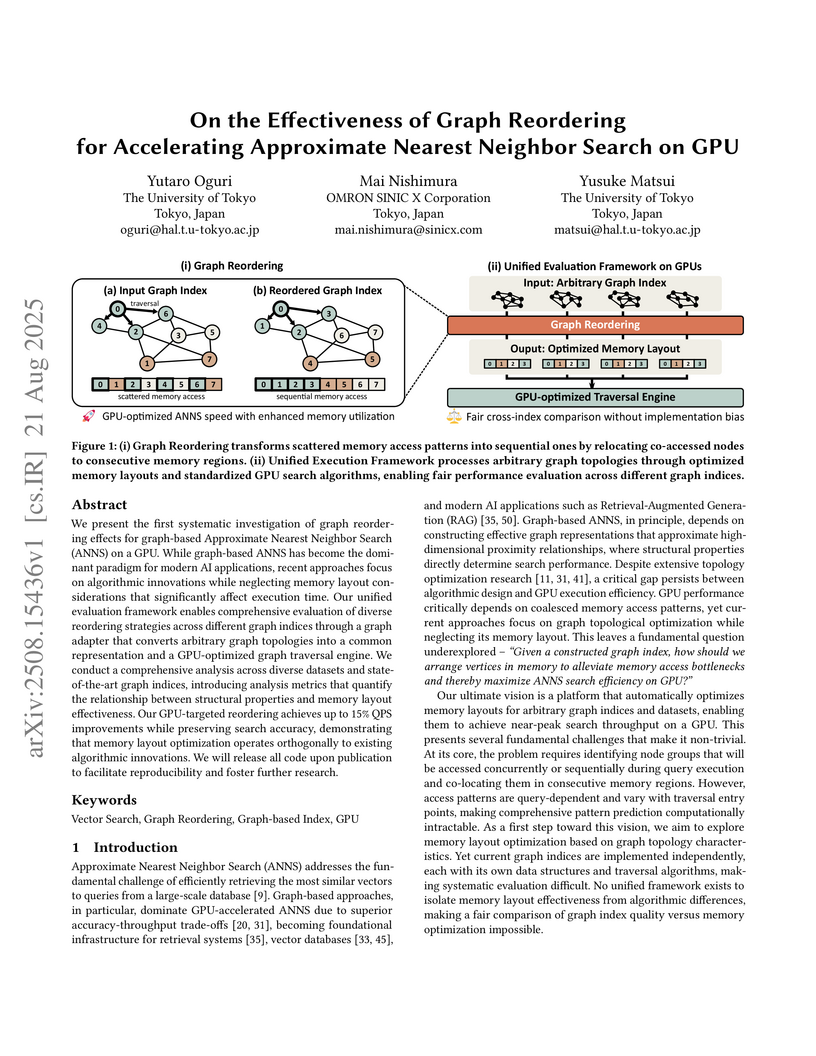

On the Effectiveness of Graph Reordering for Accelerating Approximate Nearest Neighbor Search on GPU

We present the first systematic investigation of graph reordering effects for graph-based Approximate Nearest Neighbor Search (ANNS) on a GPU. While graph-based ANNS has become the dominant paradigm for modern AI applications, recent approaches focus on algorithmic innovations while neglecting memory layout considerations that significantly affect execution time. Our unified evaluation framework enables comprehensive evaluation of diverse reordering strategies across different graph indices through a graph adapter that converts arbitrary graph topologies into a common representation and a GPU-optimized graph traversal engine. We conduct a comprehensive analysis across diverse datasets and state-of-the-art graph indices, introducing analysis metrics that quantify the relationship between structural properties and memory layout effectiveness. Our GPU-targeted reordering achieves up to 15% QPS improvements while preserving search accuracy, demonstrating that memory layout optimization operates orthogonally to existing algorithmic innovations. We will release all code upon publication to facilitate reproducibility and foster further research.
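To make "graph reordering" concrete, the sketch below relabels the nodes of an adjacency list in breadth-first order so that neighbors tend to receive nearby ids, and therefore nearby memory locations. BFS ordering is used only as a generic illustration; it is not claimed to be the GPU-targeted strategy evaluated in the paper, and the helper names and the `mean_neighbor_gap` locality proxy are hypothetical.

```python
from collections import deque


def bfs_order(adj, start=0):
    """Return node ids in BFS visiting order, covering all components."""
    n = len(adj)
    seen = [False] * n
    order = []
    for root in [start] + [v for v in range(n) if v != start]:
        if seen[root]:
            continue
        seen[root] = True
        queue = deque([root])
        while queue:
            u = queue.popleft()
            order.append(u)
            for v in adj[u]:
                if not seen[v]:
                    seen[v] = True
                    queue.append(v)
    return order


def reorder_graph(adj, order):
    """Relabel nodes so that order[i] becomes node i; edges are preserved."""
    new_id = {old: new for new, old in enumerate(order)}
    return [[new_id[v] for v in adj[old]] for old in order]


def mean_neighbor_gap(adj):
    """Average |u - v| over all edges: a crude proxy for memory locality."""
    gaps = [abs(u - v) for u, nbrs in enumerate(adj) for v in nbrs]
    return sum(gaps) / len(gaps) if gaps else 0.0


# A path graph whose node ids are scrambled: 0-3-1-4-2-5.
adj = [[3], [3, 4], [4, 5], [0, 1], [1, 2], [2]]
order = bfs_order(adj)
reordered = reorder_graph(adj, order)
gap_before = mean_neighbor_gap(adj)
gap_after = mean_neighbor_gap(reordered)
```

Because only the labels change, the graph topology and hence the search results are unaffected; `order` maps the new ids back to the original ones when results are reported.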

04 Aug 2025

Dynamic Movement Primitives (DMPs) provide a flexible framework wherein smooth robotic motions are encoded into modular parameters. However, they face challenges in integrating multimodal inputs commonly used in robotics like vision and language into their framework. To fully maximize DMPs' potential, enabling them to handle multimodal inputs is essential. In addition, we also aim to extend DMPs' capability to handle object-focused tasks requiring one-shot complex motion generation, as observation occlusion could easily happen mid-execution in such tasks (e.g., knife occlusion in cake icing, hand occlusion in dough kneading). A promising approach is to leverage Vision-Language Models (VLMs), which process multimodal data and can grasp high-level concepts. However, they typically lack enough knowledge and capabilities to directly infer low-level motion details and instead only serve as a bridge between high-level instructions and low-level control. To address this limitation, we propose Keyword Labeled Primitive Selection and Keypoint Pairs Generation Guided Movement Primitives (KeyMPs), a framework that combines VLMs with sequencing of DMPs. KeyMPs use VLMs' high-level reasoning capability to select a reference primitive through *keyword labeled primitive selection* and VLMs' spatial awareness to generate spatial scaling parameters used for sequencing DMPs by generalizing the overall motion through *keypoint pairs generation*, which together enable one-shot vision-language guided motion generation that aligns with the intent expressed in the multimodal input. We validate our approach through experiments on two occlusion-rich tasks: object cutting, conducted in both simulated and real-world environments, and cake icing, performed in simulation. These evaluations demonstrate superior performance over other DMP-based methods that integrate VLM support.

A quad-process theory (System 0/1/2/3) is proposed, expanding traditional dual-process models to incorporate pre-cognitive embodied processes and super-slow collective intelligence, unified by Henri Bergson's multi-timescale philosophy. This framework offers a theoretical basis for understanding intelligence across physical, individual, and societal scales, including a formalization for collective symbol emergence via Collective Predictive Coding.

This research from Kyoto University, OMRON SINIC X, and the University of Tokyo introduces ViLaIn, a Vision-Language Interpreter that automatically generates formal PDDL problem descriptions for robots from natural language instructions and visual observations. The system combines large language models and vision-language models with symbolic planners to enhance interpretability and logical correctness, achieving over 99% syntactic correctness and up to 99% plan validity in tested domains.

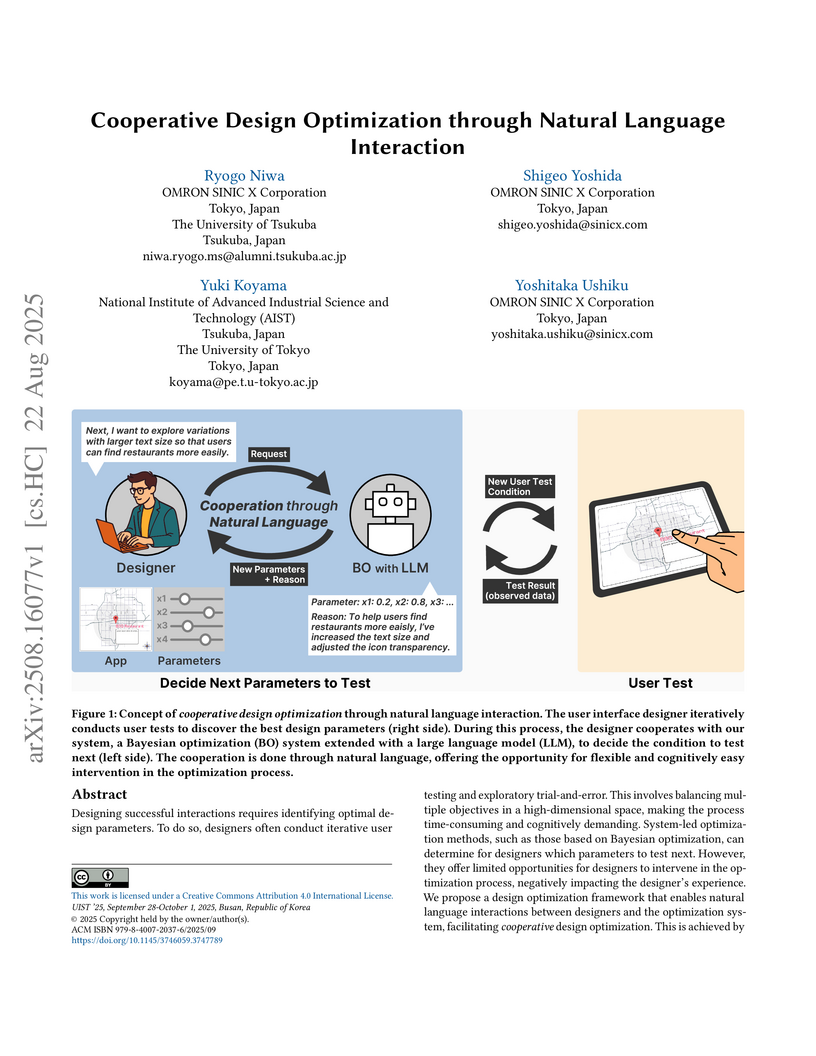

Researchers from OMRON SINIC X Corporation and leading academic institutions developed a framework for cooperative design optimization that integrates Large Language Models with Bayesian Optimization, enabling designers to steer the process through natural language. This approach significantly increased designers' sense of agency and reduced their cognitive load compared to traditional system-led or explicit-constraint cooperative optimization methods, while achieving comparable or better optimization performance than purely manual design.

27 Jan 2025

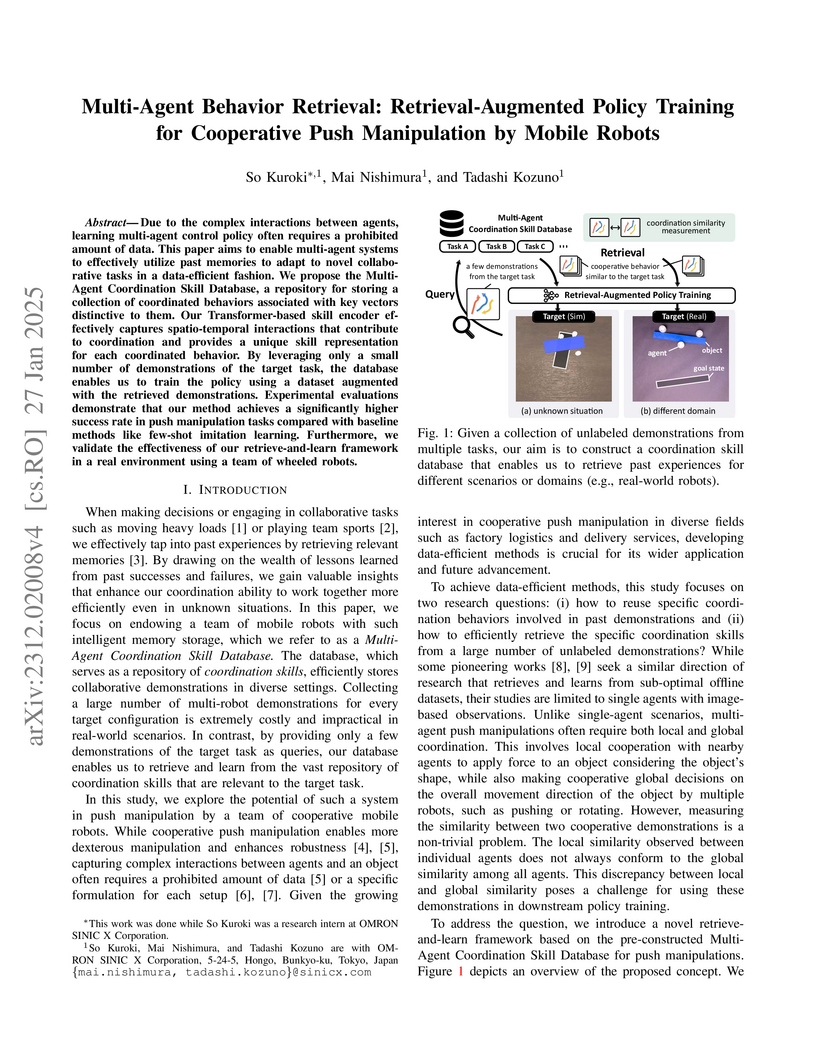

Due to the complex interactions between agents, learning a multi-agent control policy often requires a prohibitive amount of data. This paper aims to enable multi-agent systems to effectively utilize past memories to adapt to novel collaborative tasks in a data-efficient fashion. We propose the Multi-Agent Coordination Skill Database, a repository for storing a collection of coordinated behaviors associated with key vectors distinctive to them. Our Transformer-based skill encoder effectively captures spatio-temporal interactions that contribute to coordination and provides a unique skill representation for each coordinated behavior. By leveraging only a small number of demonstrations of the target task, the database enables us to train the policy using a dataset augmented with the retrieved demonstrations. Experimental evaluations demonstrate that our method achieves a significantly higher success rate in push manipulation tasks compared with baseline methods like few-shot imitation learning. Furthermore, we validate the effectiveness of our retrieve-and-learn framework in a real environment using a team of wheeled robots.

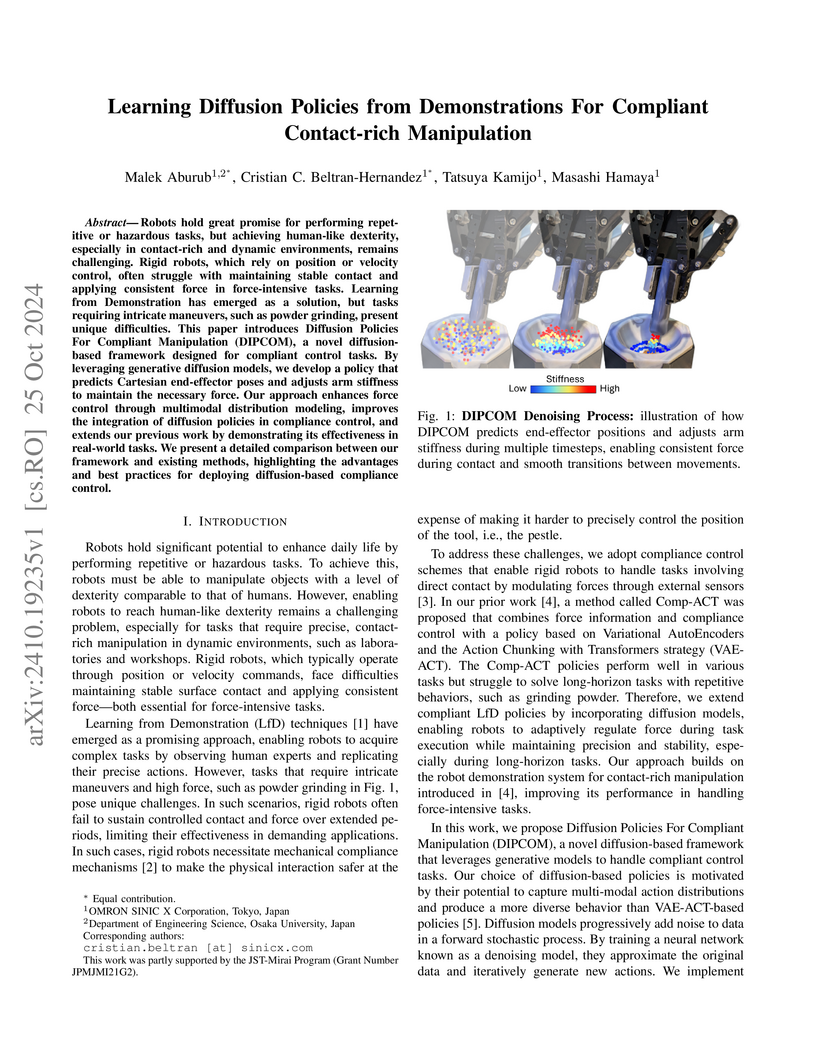

Robots hold great promise for performing repetitive or hazardous tasks, but achieving human-like dexterity, especially in contact-rich and dynamic environments, remains challenging. Rigid robots, which rely on position or velocity control, often struggle with maintaining stable contact and applying consistent force in force-intensive tasks. Learning from Demonstration has emerged as a solution, but tasks requiring intricate maneuvers, such as powder grinding, present unique difficulties. This paper introduces Diffusion Policies For Compliant Manipulation (DIPCOM), a novel diffusion-based framework designed for compliant control tasks. By leveraging generative diffusion models, we develop a policy that predicts Cartesian end-effector poses and adjusts arm stiffness to maintain the necessary force. Our approach enhances force control through multimodal distribution modeling, improves the integration of diffusion policies in compliance control, and extends our previous work by demonstrating its effectiveness in real-world tasks. We present a detailed comparison between our framework and existing methods, highlighting the advantages and best practices for deploying diffusion-based compliance control.

26 Sep 2025

Robotic manipulators capable of regulating both compliance and stiffness offer enhanced operational safety and versatility. Here, we introduce Worm Gear-based Adaptive Variable Elasticity (WAVE), a variable stiffness actuator (VSA) that integrates a non-backdrivable worm gear. By decoupling the driving motor from external forces using this gear, WAVE enables precise force transmission to the joint, while absorbing positional discrepancies through compliance. WAVE is protected from excessive loads by converting impact forces into elastic energy stored in a spring. In addition, the actuator achieves continuous joint stiffness modulation by changing the spring's precompression length. We demonstrate these capabilities, experimentally validate the proposed stiffness model, show that motor loads approach zero at rest, even under external loading, and present applications using a manipulator with WAVE. This outcome showcases the successful decoupling of external forces. The protective attributes of this actuator allow for extended operation in contact-intensive tasks, and for robust robotic applications in challenging environments.

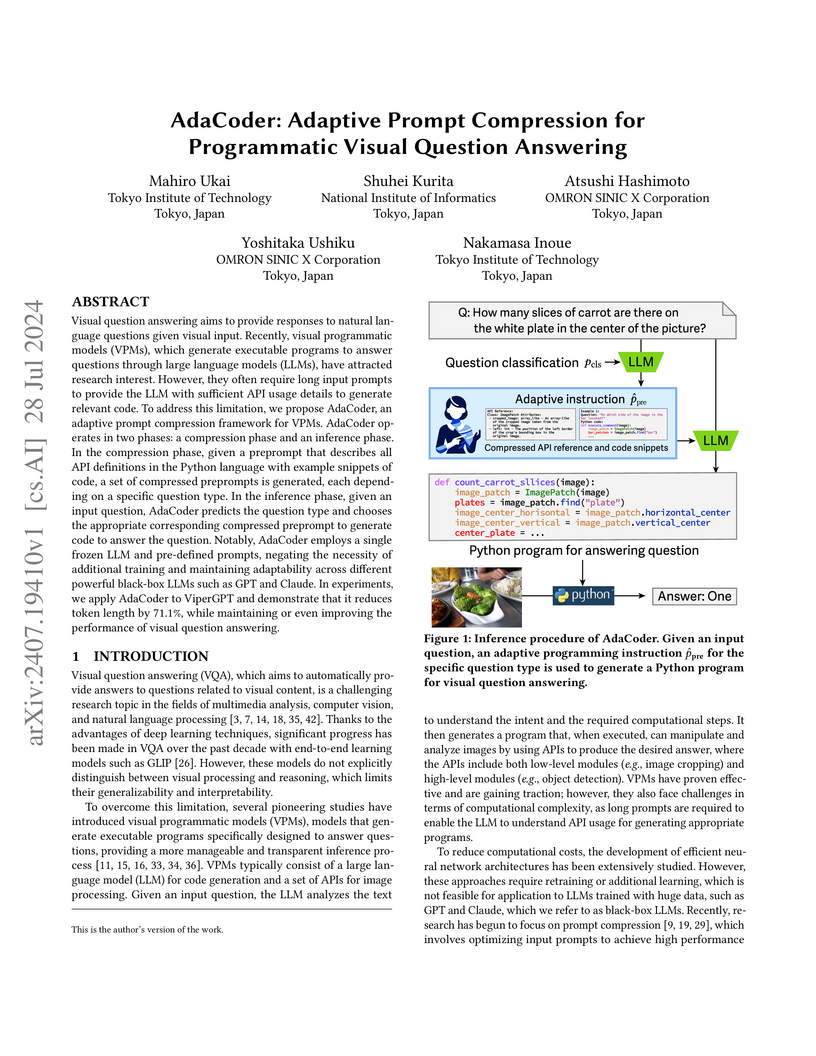

Visual question answering aims to provide responses to natural language questions given visual input. Recently, visual programmatic models (VPMs), which generate executable programs to answer questions through large language models (LLMs), have attracted research interest. However, they often require long input prompts to provide the LLM with sufficient API usage details to generate relevant code. To address this limitation, we propose AdaCoder, an adaptive prompt compression framework for VPMs. AdaCoder operates in two phases: a compression phase and an inference phase. In the compression phase, given a preprompt that describes all API definitions in the Python language with example snippets of code, a set of compressed preprompts is generated, each depending on a specific question type. In the inference phase, given an input question, AdaCoder predicts the question type and chooses the appropriate corresponding compressed preprompt to generate code to answer the question. Notably, AdaCoder employs a single frozen LLM and pre-defined prompts, negating the necessity of additional training and maintaining adaptability across different powerful black-box LLMs such as GPT and Claude. In experiments, we apply AdaCoder to ViperGPT and demonstrate that it reduces token length by 71.1%, while maintaining or even improving the performance of visual question answering.
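The inference phase described above can be sketched as a lookup from predicted question type to a compressed preprompt. Everything concrete in this sketch is hypothetical: the question types, the placeholder preprompt strings, and the keyword rule in `predict_question_type`, which stands in for whatever prediction step AdaCoder actually uses.

```python
# Placeholder compressed preprompts, one per question type (hypothetical types
# and contents; in AdaCoder these come out of the compression phase).
COMPRESSED_PREPROMPTS = {
    "counting": "<compressed API description for counting questions>",
    "existence": "<compressed API description for existence questions>",
    "other": "<generic compressed API description>",
}


def predict_question_type(question):
    """Keyword rule standing in for the question-type prediction step."""
    q = question.strip().lower()
    if q.startswith("how many"):
        return "counting"
    if q.startswith(("is there", "are there")):
        return "existence"
    return "other"


def build_prompt(question):
    """Pick the preprompt matching the predicted type and assemble the prompt."""
    qtype = predict_question_type(question)
    preprompt = COMPRESSED_PREPROMPTS[qtype]
    prompt = f"{preprompt}\n\nQuestion: {question}\nProgram:"
    return qtype, prompt


qtype, prompt = build_prompt("How many dogs are in the image?")
```

The resulting `prompt` would then be sent to the frozen LLM to generate the program; only the short, type-specific preprompt is included rather than the full API description, which is where the token savings come from.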

01 Sep 2024

Traversability assessment of deformable terrain is vital for safe rover navigation on planetary surfaces. Machine learning (ML) is a powerful tool for traversability prediction but faces predictive uncertainty. This uncertainty leads to prediction errors, increasing the risk of wheel slips and immobilization for planetary rovers. To address this issue, we integrate principal approaches to uncertainty handling (quantification, exploitation, and adaptation) into a single learning and planning framework for rover navigation. The key concept is *deep probabilistic traversability*, forming the basis of an end-to-end probabilistic ML model that predicts slip distributions directly from rover traverse observations. This probabilistic model quantifies uncertainties in slip prediction and exploits them as traversability costs in path planning. Its end-to-end nature also allows adaptation of pre-trained models with in-situ traverse experience to reduce uncertainties. We perform extensive simulations in synthetic environments that pose representative uncertainties in planetary analog terrains. Experimental results show that our method achieves more robust path planning under novel environmental conditions than existing approaches.

As medical diagnoses increasingly leverage multimodal data, machine learning models are expected to effectively fuse heterogeneous information while remaining robust to missing modalities. In this work, we propose a novel multimodal learning framework that integrates enhanced modalities dropout and contrastive learning to address real-world limitations such as modality imbalance and missingness. Our approach introduces learnable modality tokens for improving missingness-aware fusion of modalities and augments conventional unimodal contrastive objectives with fused multimodal representations. We validate our framework on large-scale clinical datasets for disease detection and prediction tasks, encompassing both visual and tabular modalities. Experimental results demonstrate that our method achieves state-of-the-art performance, particularly in challenging and practical scenarios where only a single modality is available. Furthermore, we show its adaptability through successful integration with a recent CT foundation model. Our findings highlight the effectiveness, efficiency, and generalizability of our approach for multimodal learning, offering a scalable, low-cost solution with significant potential for real-world clinical applications. The code is available at this https URL.

27 Feb 2024

The hierarchy of global and local planners is one of the most commonly utilized system designs in autonomous robot navigation. While the global planner generates a reference path from the current to goal locations based on the pre-built map, the local planner produces a kinodynamic trajectory to follow the reference path while avoiding perceived obstacles. To account for unforeseen or dynamic obstacles not present on the pre-built map, "when to replan" the reference path is critical for the success of safe and efficient navigation. However, determining the ideal timing to execute replanning in such partially unknown environments remains an open question. In this work, we first conduct an extensive simulation experiment to compare several common replanning strategies and confirm that effective strategies are highly dependent on the environment as well as the global and local planners. Based on this insight, we then derive a new adaptive replanning strategy based on deep reinforcement learning, which can learn from experience to decide appropriate replanning timings in the given environment and planning setups. Our experimental results show that the proposed replanner can perform on par with or even better than the current best-performing strategies in multiple situations regarding navigation robustness and efficiency.

10 Oct 2025

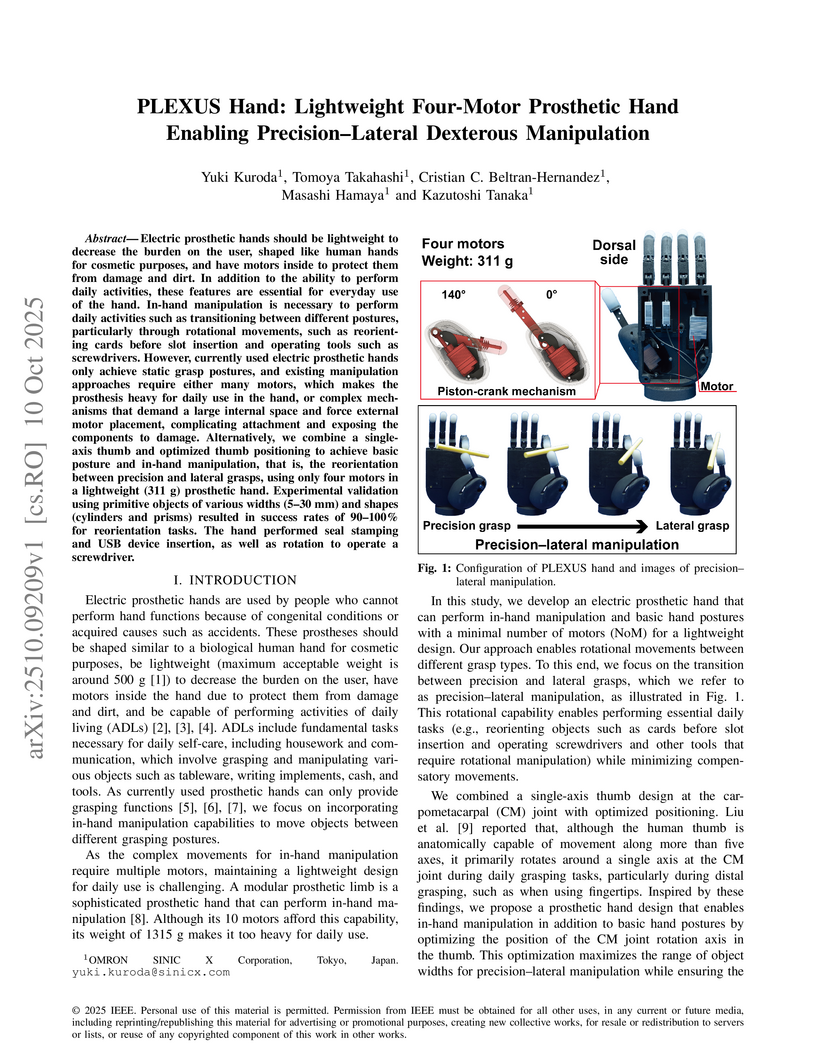

Electric prosthetic hands should be lightweight to decrease the burden on the user, shaped like human hands for cosmetic purposes, and have motors inside to protect them from damage and dirt. In addition to the ability to perform daily activities, these features are essential for everyday use of the hand. In-hand manipulation is necessary to perform daily activities such as transitioning between different postures, particularly through rotational movements, such as reorienting cards before slot insertion and operating tools such as screwdrivers. However, currently used electric prosthetic hands only achieve static grasp postures, and existing manipulation approaches require either many motors, which makes the prosthesis too heavy for daily use, or complex mechanisms that demand a large internal space and force external motor placement, complicating attachment and exposing the components to damage. Instead, we combine a single-axis thumb and optimized thumb positioning to achieve basic postures and in-hand manipulation, that is, the reorientation between precision and lateral grasps, using only four motors in a lightweight (311 g) prosthetic hand. Experimental validation using primitive objects of various widths (5-30 mm) and shapes (cylinders and prisms) resulted in success rates of 90-100% for reorientation tasks. The hand performed seal stamping and USB device insertion, as well as rotation to operate a screwdriver.

Machine learning (ML) plays a crucial role in assessing traversability for autonomous rover operations on deformable terrains but suffers from inevitable prediction errors. Especially on heterogeneous terrains, where the geological features vary from place to place, erroneous traversability prediction can become more apparent, increasing the risk of unrecoverable wheel slip and immobilization of the rover. In this work, we propose a new path planning algorithm that explicitly accounts for such erroneous prediction. The key idea is the probabilistic fusion of distinct ML models for terrain type classification and slip prediction into a single distribution. This gives us a multimodal slip distribution accounting for heterogeneous terrains and further allows statistical risk assessment to be applied to derive risk-aware traversing costs for path planning. Extensive simulation experiments have demonstrated that the proposed method is able to generate more feasible paths on heterogeneous terrains compared to existing methods.
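One way to picture the fusion step is as a mixture distribution: the terrain classifier supplies class probabilities, and a per-class slip model supplies a slip distribution for each class. The sketch below assumes Gaussian per-class slip models and uses the probability of exceeding a slip threshold as the risk measure; both choices, and all function names and numbers, are illustrative assumptions rather than the paper's actual formulation.

```python
import math


def normal_cdf(x, mu, sigma):
    """CDF of a normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def mixture_mean_var(weights, mus, sigmas):
    """Mean and variance of a Gaussian mixture sum_k w_k N(mu_k, sigma_k^2)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("class probabilities must sum to 1")
    mean = sum(w * m for w, m in zip(weights, mus))
    second = sum(w * (s * s + m * m) for w, m, s in zip(weights, mus, sigmas))
    return mean, second - mean * mean


def prob_slip_exceeds(threshold, weights, mus, sigmas):
    """P(slip > threshold) under the mixture: an assumed, simple risk measure."""
    return sum(w * (1.0 - normal_cdf(threshold, m, s))
               for w, m, s in zip(weights, mus, sigmas))


# Hypothetical cell: 70% firm terrain (low slip), 30% loose terrain (high slip).
weights = [0.7, 0.3]
mus = [0.1, 0.6]
sigmas = [0.05, 0.1]

mean, var = mixture_mean_var(weights, mus, sigmas)
risk = prob_slip_exceeds(0.6, weights, mus, sigmas)
```

The mean slip of 0.25 looks moderate, yet the mixture puts roughly 15% probability on slip above 0.6. A planner that costs cells by such a tail quantity, rather than by the mean, will steer away from cells where a hazardous terrain type is plausible.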
