OriginAI

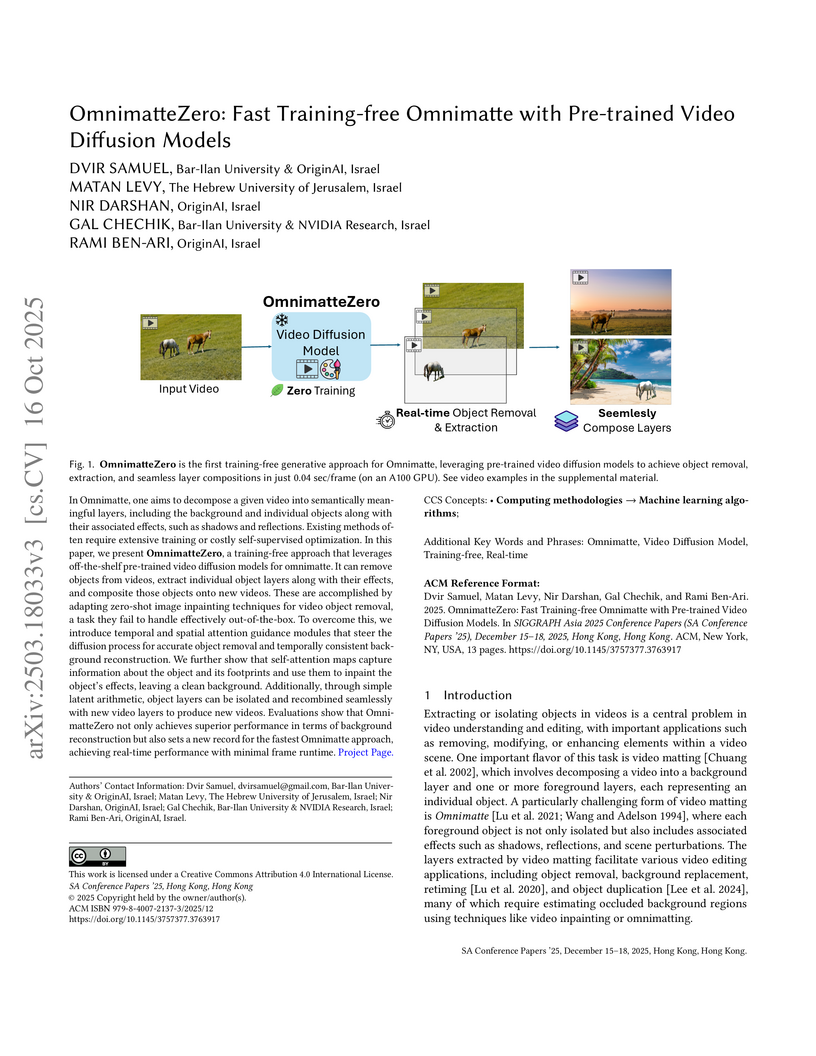

In Omnimatte, one aims to decompose a given video into semantically meaningful layers, including the background and individual objects along with their associated effects, such as shadows and reflections. Existing methods often require extensive training or costly self-supervised optimization. In this paper, we present OmnimatteZero, a training-free approach that leverages off-the-shelf pre-trained video diffusion models for omnimatte. It can remove objects from videos, extract individual object layers along with their effects, and composite those objects onto new videos. These are accomplished by adapting zero-shot image inpainting techniques for video object removal, a task they fail to handle effectively out-of-the-box. To overcome this, we introduce temporal and spatial attention guidance modules that steer the diffusion process for accurate object removal and temporally consistent background reconstruction. We further show that self-attention maps capture information about the object and its footprints and use them to inpaint the object's effects, leaving a clean background. Additionally, through simple latent arithmetic, object layers can be isolated and recombined seamlessly with new video layers to produce new videos. Evaluations show that OmnimatteZero not only achieves superior performance in terms of background reconstruction but also sets a new record for the fastest Omnimatte approach, achieving real-time performance with minimal frame runtime.

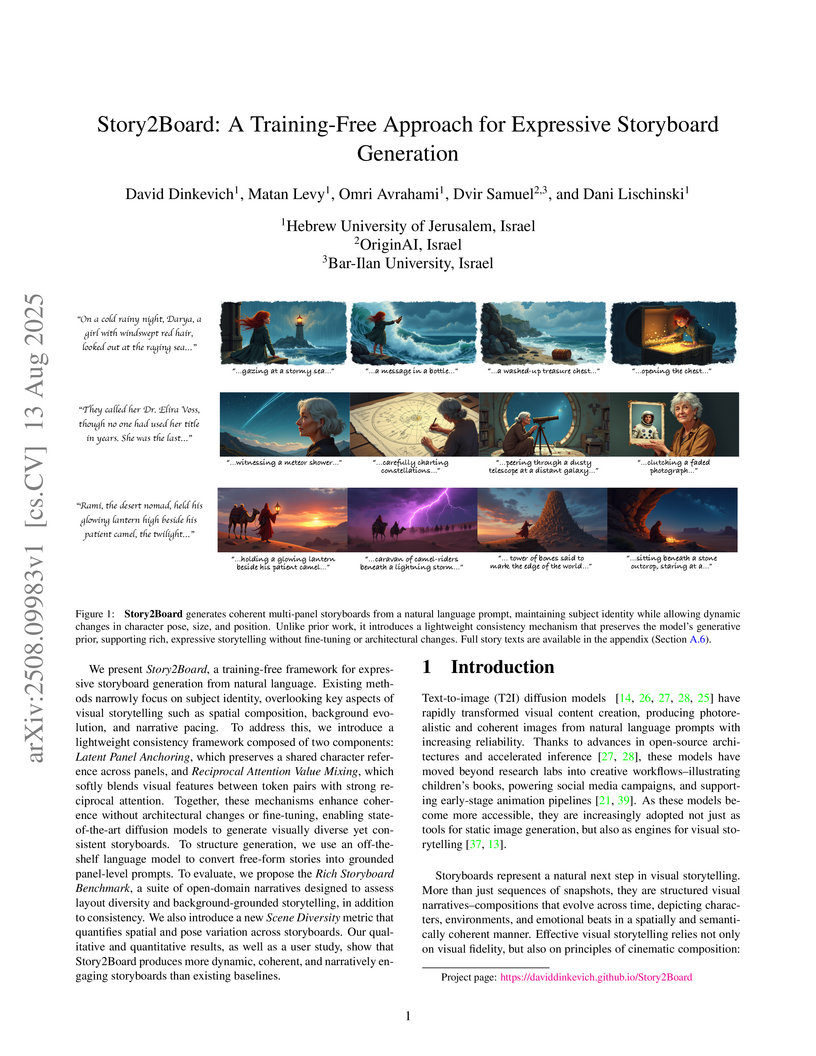

The Story2Board framework enables training-free generation of expressive, multi-panel storyboards from natural language, maintaining strong character consistency while allowing for diverse scene compositions and narrative progression. It achieves this by employing an LLM-based prompt decomposition alongside two novel in-context consistency mechanisms during the diffusion model's denoising process.

Diffusion inversion is the problem of taking an image and a text prompt that

describes it and finding a noise latent that would generate the exact same

image. Most current deterministic inversion techniques operate by approximately

solving an implicit equation and may converge slowly or yield poor

reconstructed images. We formulate the problem by finding the roots of an

implicit equation and devlop a method to solve it efficiently. Our solution is

based on Newton-Raphson (NR), a well-known technique in numerical analysis. We

show that a vanilla application of NR is computationally infeasible while

naively transforming it to a computationally tractable alternative tends to

converge to out-of-distribution solutions, resulting in poor reconstruction and

editing. We therefore derive an efficient guided formulation that fastly

converges and provides high-quality reconstructions and editing. We showcase

our method on real image editing with three popular open-sourced diffusion

models: Stable Diffusion, SDXL-Turbo, and Flux with different deterministic

schedulers. Our solution, Guided Newton-Raphson Inversion, inverts an image

within 0.4 sec (on an A100 GPU) for few-step models (SDXL-Turbo and Flux.1),

opening the door for interactive image editing. We further show improved

results in image interpolation and generation of rare objects.

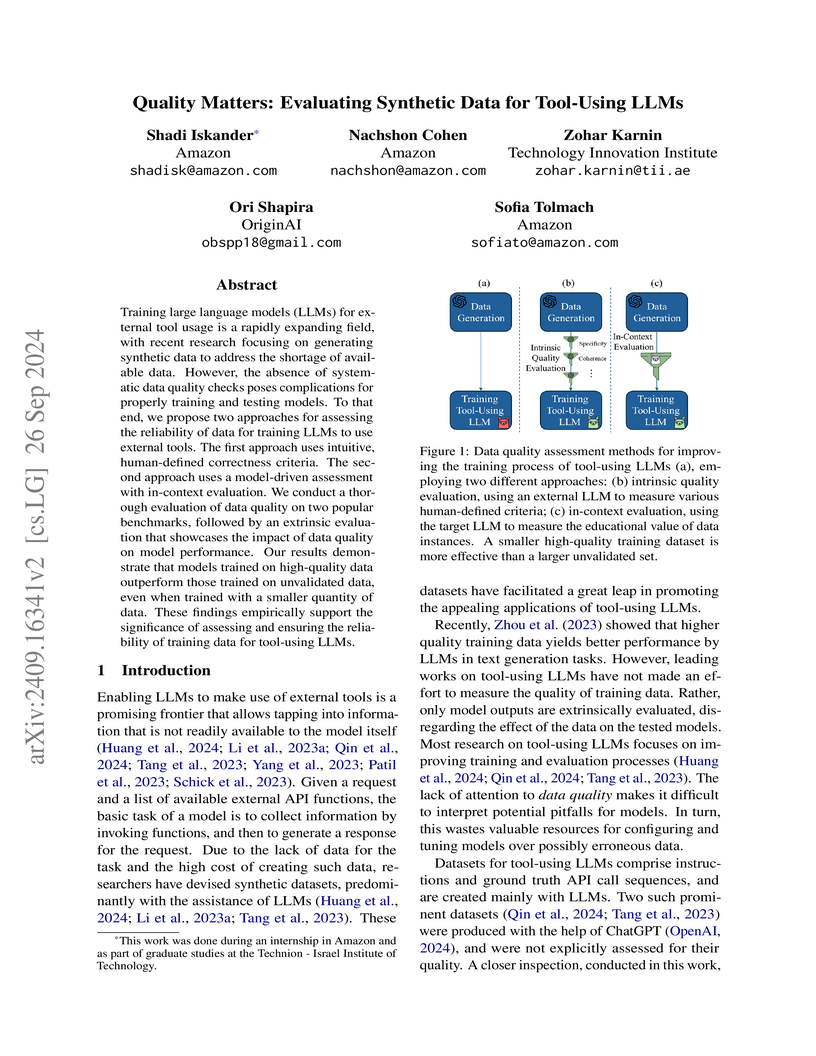

This research systematically evaluates the quality of synthetic training data for tool-using Large Language Models (LLMs), introducing automated intrinsic metrics and an In-Context Evaluation (ICE) method. The study demonstrates that models fine-tuned on significantly smaller, high-quality filtered datasets achieve superior or comparable performance compared to those trained on much larger, unvalidated datasets.

Current evaluations of large language models (LLMs) rely on benchmark scores, but it is difficult to interpret what these individual scores reveal about a model's overall skills. Specifically, as a community we lack understanding of how tasks relate to one another, what they measure in common, how they differ, or which ones are redundant. As a result, models are often assessed via a single score averaged across benchmarks, an approach that fails to capture the models' wholistic strengths and limitations. Here, we propose a new evaluation paradigm that uses factor analysis to identify latent skills driving performance across benchmarks. We apply this method to a comprehensive new leaderboard showcasing the performance of 60 LLMs on 44 tasks, and identify a small set of latent skills that largely explain performance. Finally, we turn these insights into practical tools that identify redundant tasks, aid in model selection, and profile models along each latent skill.

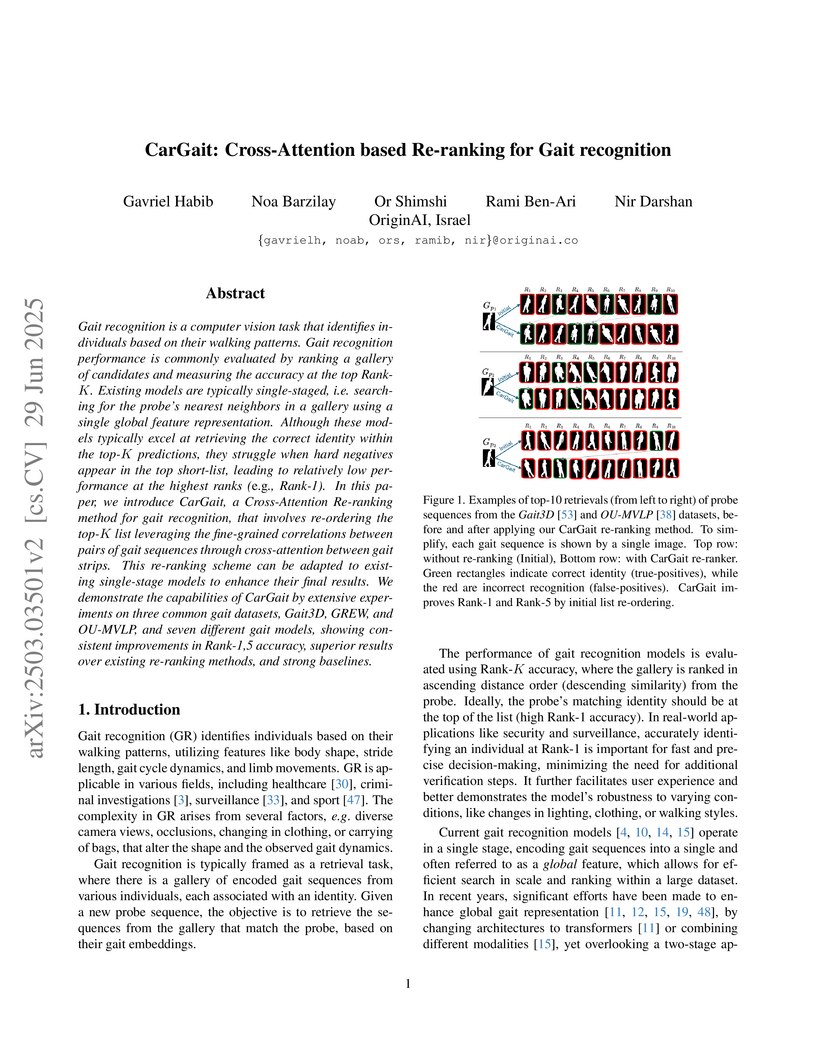

Gait recognition is a computer vision task that identifies individuals based on their walking patterns. Gait recognition performance is commonly evaluated by ranking a gallery of candidates and measuring the accuracy at the top Rank-K. Existing models are typically single-staged, i.e. searching for the probe's nearest neighbors in a gallery using a single global feature representation. Although these models typically excel at retrieving the correct identity within the top-K predictions, they struggle when hard negatives appear in the top short-list, leading to relatively low performance at the highest ranks (e.g., Rank-1). In this paper, we introduce CarGait, a Cross-Attention Re-ranking method for gait recognition, that involves re-ordering the top-K list leveraging the fine-grained correlations between pairs of gait sequences through cross-attention between gait strips. This re-ranking scheme can be adapted to existing single-stage models to enhance their final results. We demonstrate the capabilities of CarGait by extensive experiments on three common gait datasets, Gait3D, GREW, and OU-MVLP, and seven different gait models, showing consistent improvements in Rank-1,5 accuracy, superior results over existing re-ranking methods, and strong baselines.

The task of Visual Place Recognition (VPR) is to predict the location of a query image from a database of geo-tagged images. Recent studies in VPR have highlighted the significant advantage of employing pre-trained foundation models like DINOv2 for the VPR task. However, these models are often deemed inadequate for VPR without further fine-tuning on VPR-specific data. In this paper, we present an effective approach to harness the potential of a foundation model for VPR. We show that features extracted from self-attention layers can act as a powerful re-ranker for VPR, even in a zero-shot setting. Our method not only outperforms previous zero-shot approaches but also introduces results competitive with several supervised methods. We then show that a single-stage approach utilizing internal ViT layers for pooling can produce global features that achieve state-of-the-art performance, with impressive feature compactness down to 128D. Moreover, integrating our local foundation features for re-ranking further widens this performance gap. Our method also demonstrates exceptional robustness and generalization, setting new state-of-the-art performance, while handling challenging conditions such as occlusion, day-night transitions, and seasonal variations.

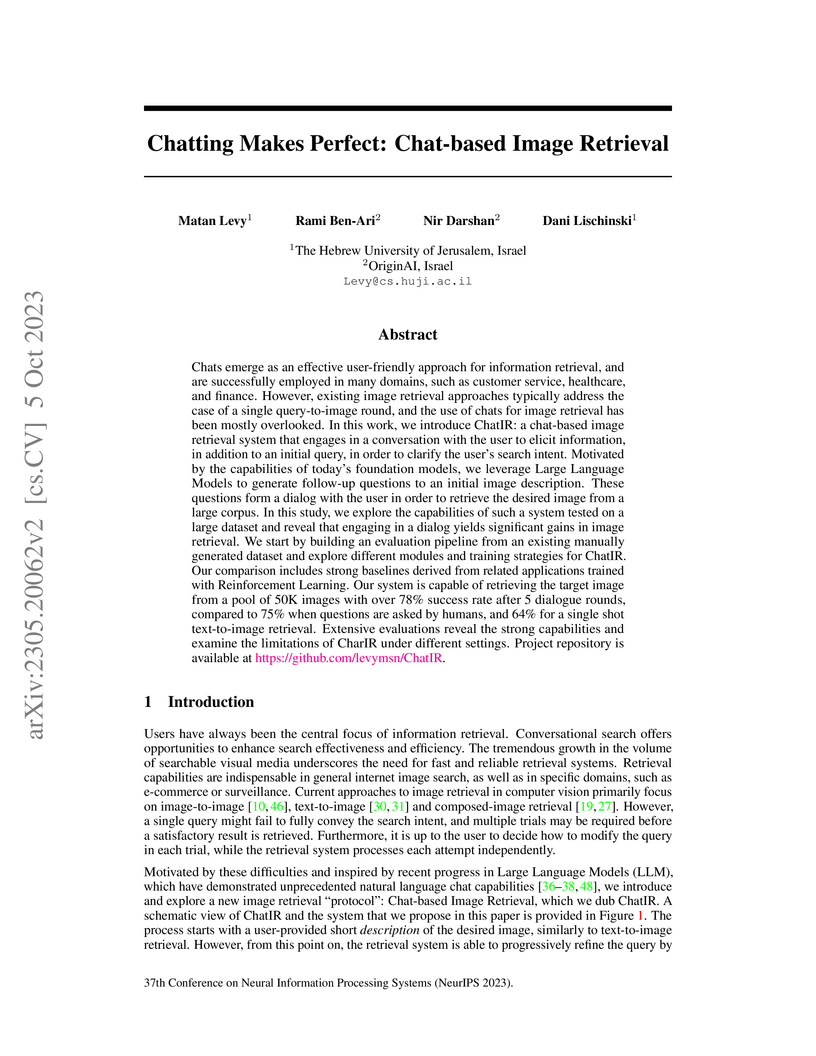

The paper introduces ChatIR, a chat-based image retrieval system that leverages Large Language Models for question generation and Vision-and-Language models for multi-modal understanding. This interactive approach consistently improves retrieval accuracy, demonstrating an 18% increase in success over single-shot methods on the VisDial dataset, with ChatGPT-generated questions outperforming human-generated ones.

The paper introduces SeedSelect, a method to improve the generation of images for rare or structurally complex concepts using pre-trained text-to-image diffusion models. It optimizes the initial noise seed to guide the model, achieving significantly higher classification accuracy for rare concepts (e.g., 76.1% for ImageNet tail classes) while maintaining image realism and diversity.

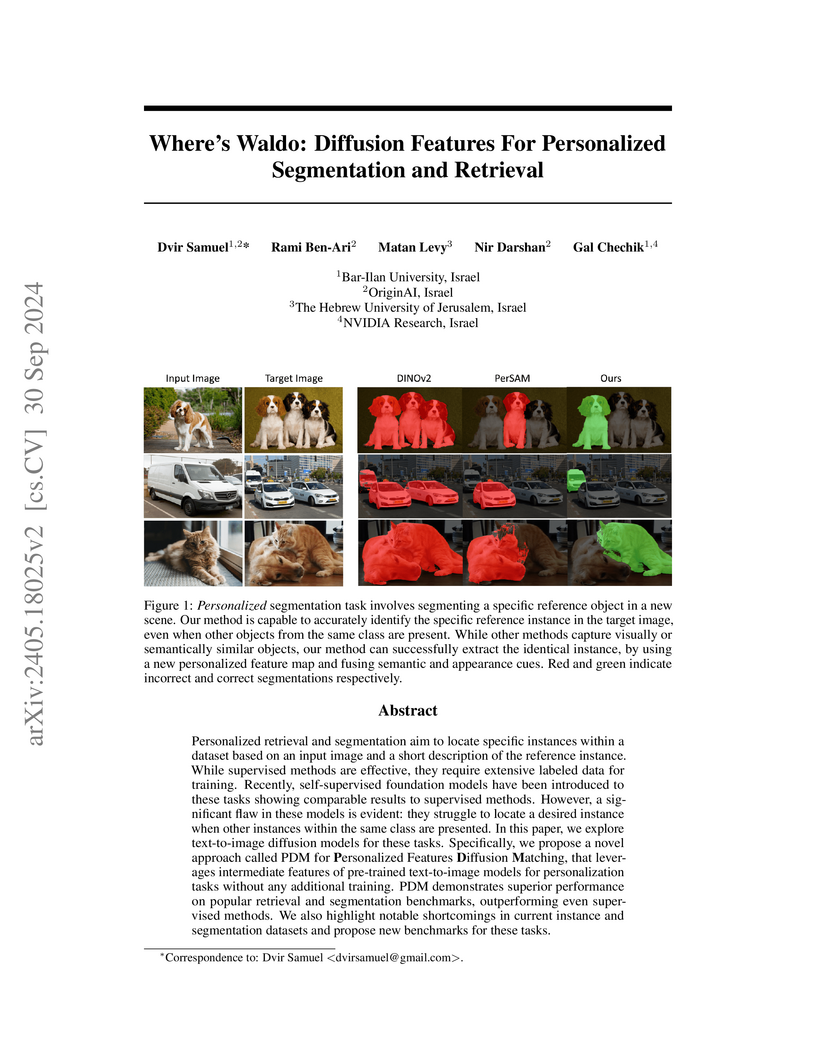

Personalized retrieval and segmentation aim to locate specific instances within a dataset based on an input image and a short description of the reference instance. While supervised methods are effective, they require extensive labeled data for training. Recently, self-supervised foundation models have been introduced to these tasks showing comparable results to supervised methods. However, a significant flaw in these models is evident: they struggle to locate a desired instance when other instances within the same class are presented. In this paper, we explore text-to-image diffusion models for these tasks. Specifically, we propose a novel approach called PDM for Personalized Features Diffusion Matching, that leverages intermediate features of pre-trained text-to-image models for personalization tasks without any additional training. PDM demonstrates superior performance on popular retrieval and segmentation benchmarks, outperforming even supervised methods. We also highlight notable shortcomings in current instance and segmentation datasets and propose new benchmarks for these tasks.

In this paper, we address the problem of single-microphone speech separation in the presence of ambient noise. We propose a generative unsupervised technique that directly models both clean speech and structured noise components, training exclusively on these individual signals rather than noisy mixtures. Our approach leverages an audio-visual score model that incorporates visual cues to serve as a strong generative speech prior. By explicitly modelling the noise distribution alongside the speech distribution, we enable effective decomposition through the inverse problem paradigm. We perform speech separation by sampling from the posterior distributions via a reverse diffusion process, which directly estimates and removes the modelled noise component to recover clean constituent signals. Experimental results demonstrate promising performance, highlighting the effectiveness of our direct noise modelling approach in challenging acoustic environments.

Multi-object Attention Optimization (MaO) offers a two-stage retrieval framework addressing Small Object Image Retrieval (SoIR) in cluttered scenes, outperforming existing methods. MaO-DINOv2 achieved up to 83.70% mAP on VoxDet (fine-tuned), an 18-26 mAP point boost over baselines, by creating a unified image descriptor optimized for all objects.

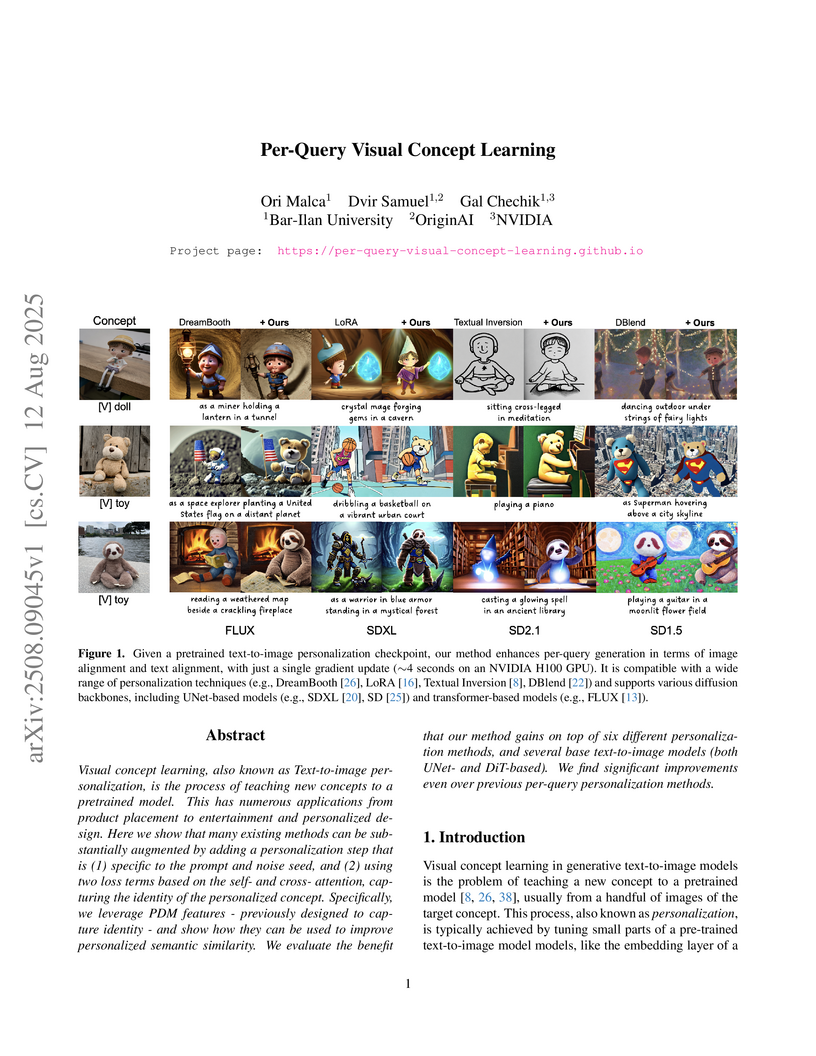

Visual concept learning, also known as Text-to-image personalization, is the process of teaching new concepts to a pretrained model. This has numerous applications from product placement to entertainment and personalized design. Here we show that many existing methods can be substantially augmented by adding a personalization step that is (1) specific to the prompt and noise seed, and (2) using two loss terms based on the self- and cross- attention, capturing the identity of the personalized concept. Specifically, we leverage PDM features - previously designed to capture identity - and show how they can be used to improve personalized semantic similarity. We evaluate the benefit that our method gains on top of six different personalization methods, and several base text-to-image models (both UNet- and DiT-based). We find significant improvements even over previous per-query personalization methods.

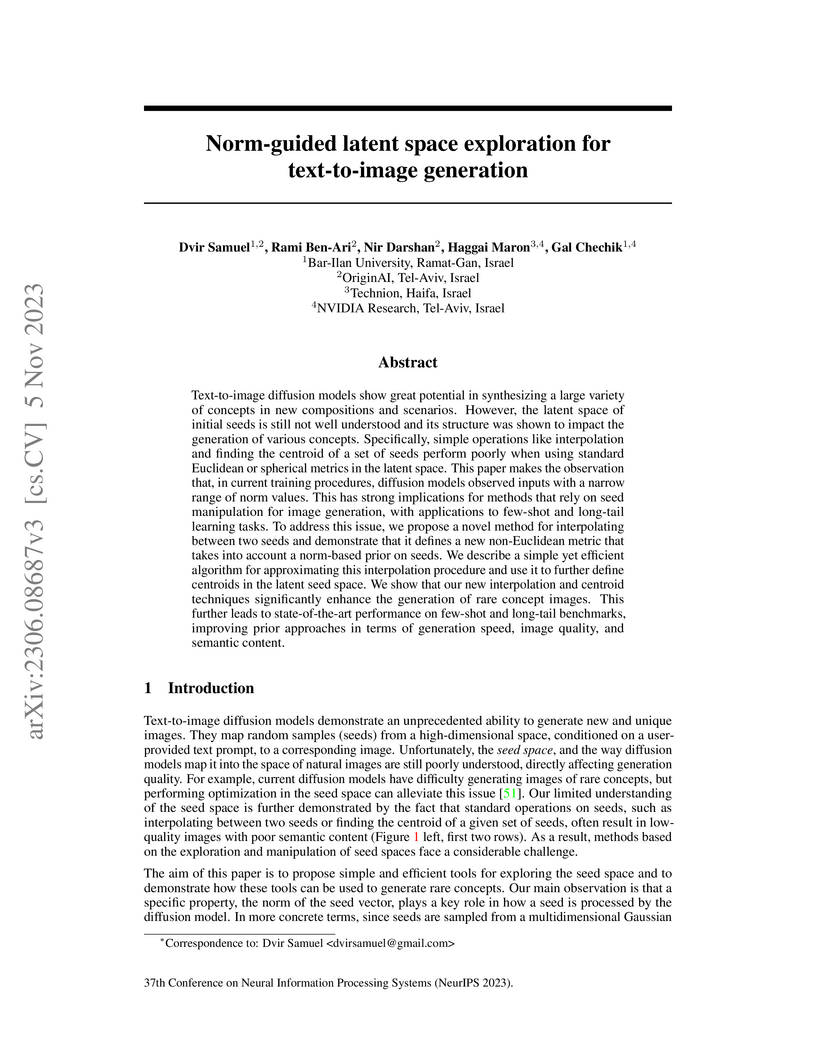

Text-to-image diffusion models show great potential in synthesizing a large variety of concepts in new compositions and scenarios. However, the latent space of initial seeds is still not well understood and its structure was shown to impact the generation of various concepts. Specifically, simple operations like interpolation and finding the centroid of a set of seeds perform poorly when using standard Euclidean or spherical metrics in the latent space. This paper makes the observation that, in current training procedures, diffusion models observed inputs with a narrow range of norm values. This has strong implications for methods that rely on seed manipulation for image generation, with applications to few-shot and long-tail learning tasks. To address this issue, we propose a novel method for interpolating between two seeds and demonstrate that it defines a new non-Euclidean metric that takes into account a norm-based prior on seeds. We describe a simple yet efficient algorithm for approximating this interpolation procedure and use it to further define centroids in the latent seed space. We show that our new interpolation and centroid techniques significantly enhance the generation of rare concept images. This further leads to state-of-the-art performance on few-shot and long-tail benchmarks, improving prior approaches in terms of generation speed, image quality, and semantic content.

Active Learning (AL) is a user-interactive approach aimed at reducing annotation costs by selecting the most crucial examples to label. Although AL has been extensively studied for image classification tasks, the specific scenario of interactive image retrieval has received relatively little attention. This scenario presents unique characteristics, including an open-set and class-imbalanced binary classification, starting with very few labeled samples. We introduce a novel batch-mode Active Learning framework named GAL (Greedy Active Learning) that better copes with this application. It incorporates a new acquisition function for sample selection that measures the impact of each unlabeled sample on the classifier. We further embed this strategy in a greedy selection approach, better exploiting the samples within each batch. We evaluate our framework with both linear (SVM) and non-linear MLP/Gaussian Process classifiers. For the Gaussian Process case, we show a theoretical guarantee on the greedy approximation. Finally, we assess our performance for the interactive content-based image retrieval task on several benchmarks and demonstrate its superiority over existing approaches and common baselines. Code is available at this https URL.

The task of Composed Image Retrieval (CoIR) involves queries that combine image and text modalities, allowing users to express their intent more effectively. However, current CoIR datasets are orders of magnitude smaller compared to other vision and language (V&L) datasets. Additionally, some of these datasets have noticeable issues, such as queries containing redundant modalities. To address these shortcomings, we introduce the Large Scale Composed Image Retrieval (LaSCo) dataset, a new CoIR dataset which is ten times larger than existing ones. Pre-training on our LaSCo, shows a noteworthy improvement in performance, even in zero-shot. Furthermore, we propose a new approach for analyzing CoIR datasets and methods, which detects modality redundancy or necessity, in queries. We also introduce a new CoIR baseline, the Cross-Attention driven Shift Encoder (CASE). This baseline allows for early fusion of modalities using a cross-attention module and employs an additional auxiliary task during training. Our experiments demonstrate that this new baseline outperforms the current state-of-the-art methods on established benchmarks like FashionIQ and CIRR.

In this work we present a novel single-channel Voice Activity Detector (VAD) approach. We utilize a Convolutional Neural Network (CNN) which exploits the spatial information of the noisy input spectrum to extract frame-wise embedding sequence, followed by a Self Attention (SA) Encoder with a goal of finding contextual information from the embedding sequence. Different from previous works which were employed on each frame (with context frames) separately, our method is capable of processing the entire signal at once, and thus enabling long receptive field. We show that the fusion of CNN and SA architectures outperforms methods based solely on CNN and SA. Extensive experimental-study shows that our model outperforms previous models on real-life benchmarks, and provides State Of The Art (SOTA) results with relatively small and lightweight model.

06 Mar 2022

In this paper we present a unified time-frequency method for speaker extraction in clean and noisy conditions. Given a mixed signal, along with a reference signal, the common approaches for extracting the desired speaker are either applied in the time-domain or in the frequency-domain. In our approach, we propose a Siamese-Unet architecture that uses both representations. The Siamese encoders are applied in the frequency-domain to infer the embedding of the noisy and reference spectra, respectively. The concatenated representations are then fed into the decoder to estimate the real and imaginary components of the desired speaker, which are then inverse-transformed to the time-domain. The model is trained with the Scale-Invariant Signal-to-Distortion Ratio (SI-SDR) loss to exploit the time-domain information. The time-domain loss is also regularized with frequency-domain loss to preserve the speech patterns. Experimental results demonstrate that the unified approach is not only very easy to train, but also provides superior results as compared with state-of-the-art (SOTA) Blind Source Separation (BSS) methods, as well as commonly used speaker extraction approach.

10 Feb 2025

This paper introduces a multi-microphone method for extracting a desired

speaker from a mixture involving multiple speakers and directional noise in a

reverberant environment. In this work, we propose leveraging the instantaneous

relative transfer function (RTF), estimated from a reference utterance recorded

in the same position as the desired source. The effectiveness of the RTF-based

spatial cue is compared with direction of arrival (DOA)-based spatial cue and

the conventional spectral embedding. Experimental results in challenging

acoustic scenarios demonstrate that using spatial cues yields better

performance than the spectral-based cue and that the instantaneous RTF

outperforms the DOA-based spatial cue.

With the increasing prevalence of recorded human speech, spoken language

understanding (SLU) is essential for its efficient processing. In order to

process the speech, it is commonly transcribed using automatic speech

recognition technology. This speech-to-text transition introduces errors into

the transcripts, which subsequently propagate to downstream NLP tasks, such as

dialogue summarization. While it is known that transcript noise affects

downstream tasks, a systematic approach to analyzing its effects across

different noise severities and types has not been addressed. We propose a

configurable framework for assessing task models in diverse noisy settings, and

for examining the impact of transcript-cleaning techniques. The framework

facilitates the investigation of task model behavior, which can in turn support

the development of effective SLU solutions. We exemplify the utility of our

framework on three SLU tasks and four task models, offering insights regarding

the effect of transcript noise on tasks in general and models in particular.

For instance, we find that task models can tolerate a certain level of noise,

and are affected differently by the types of errors in the transcript.

There are no more papers matching your filters at the moment.