Otto-von-Guericke University

Researchers at Otto von Guericke University developed 6DRepNet, a landmark-free head pose estimation method that achieves state-of-the-art accuracy by utilizing a continuous 6D rotation representation and a geodesic distance loss, outperforming previous methods by up to 20% on challenging datasets.

Human gaze is a crucial cue used in various applications such as human-robot interaction and virtual reality. Recently, convolution neural network (CNN) approaches have made notable progress in predicting gaze direction. However, estimating gaze in-the-wild is still a challenging problem due to the uniqueness of eye appearance, lightning conditions, and the diversity of head pose and gaze directions. In this paper, we propose a robust CNN-based model for predicting gaze in unconstrained settings. We propose to regress each gaze angle separately to improve the per-angel prediction accuracy, which will enhance the overall gaze performance. In addition, we use two identical losses, one for each angle, to improve network learning and increase its generalization. We evaluate our model with two popular datasets collected with unconstrained settings. Our proposed model achieves state-of-the-art accuracy of 3.92° and 10.41° on MPIIGaze and Gaze360 datasets, respectively. We make our code open source at this https URL.

12 Dec 2023

We investigate the force of flowing granular material on an obstacle. A sphere suspended in a discharging silo experiences both the weight of the overlaying layers and drag of the surrounding moving grains. In experiments with frictional hard glass beads, the force on the obstacle was practically flow-rate independent. In contrast, flow of nearly frictionless soft hydrogel spheres added drag to the gravitational force. The dependence of the total force on the obstacle diameter is qualitatively different for the two types of material: It grows quadratically with the obstacle diameter in the soft, low friction material, while it grows much weaker, nearly linearly with the obstacle diameter, in the bed of glass spheres. In addition to the drag, the obstacle embedded in flowing low-friction soft particles experiences a total force from the top as if immersed in a hydrostatic pressure profile, but a much lower counterforce acting from below. In contrast, when embedded in frictional, hard particles, a strong pressure gradient forms near the upper obstacle surface.

09 Oct 2025

We propose a test for a change in the mean for a sequence of functional observations that are only partially observed on subsets of the domain, with no information available on the complement. The framework accommodates important scenarios, including both abrupt and gradual changes. The significance of the test statistic is assessed via a permutation test. In addition to the classical permutation approach with a fixed number of permutation samples, we also discuss a variant with controlled resampling risk that relies on a random (data-driven) number of permutation samples. The small sample performance of the proposed methodology is illustrated in a Monte Carlo simulation study and an application to real data.

Using methods from the statistical mechanics of disordered systems we analyze the properties of bimatrix games with random payoffs in the limit where the number of pure strategies of each player tends to infinity. We analytically calculate quantities such as the number of equilibrium points, the expected payoff, and the fraction of strategies played with non-zero probability as a function of the correlation between the payoff matrices of both players and compare the results with numerical simulations.

31 Aug 2025

The growing demand for effective tools to parse PDF-formatted texts, particularly structured documents such as textbooks, reveals the limitations of current methods developed mainly for research paper segmentation. This work addresses the challenge of hierarchical segmentation in complex structured documents, with a focus on legal textbooks that contain layered knowledge essential for interpreting and applying legal norms. We examine a Table of Contents (TOC)-based technique and approaches that rely on open-source structural parsing tools or Large Language Models (LLMs) operating without explicit TOC input. To enhance parsing accuracy, we incorporate preprocessing strategies such as OCR-based title detection, XML-derived features, and contextual text features. These strategies are evaluated based on their ability to identify section titles, allocate hierarchy levels, and determine section boundaries. Our findings show that combining LLMs with structure-aware preprocessing substantially reduces false positives and improves extraction quality. We also find that when the metadata quality of headings in the PDF is high, TOC-based techniques perform particularly well. All code and data are publicly available to support replication. We conclude with a comparative evaluation of the methods, outlining their respective strengths and limitations.

Matrix games constitute a fundamental problem of game theory and describe a situation of two players with completely conflicting interests. We show how methods from statistical mechanics can be used to investigate the statistical properties of optimal mixed strategies of large matrix games with random payoff matrices and derive analytical expressions for the value of the game and the distribution of strategy strengths. In particular the fraction of pure strategies not contributing to the optimal mixed strategy of a player is calculated. Both independently distributed as well as correlated elements of the payoff matrix are considered and the results compared with numerical simulations.

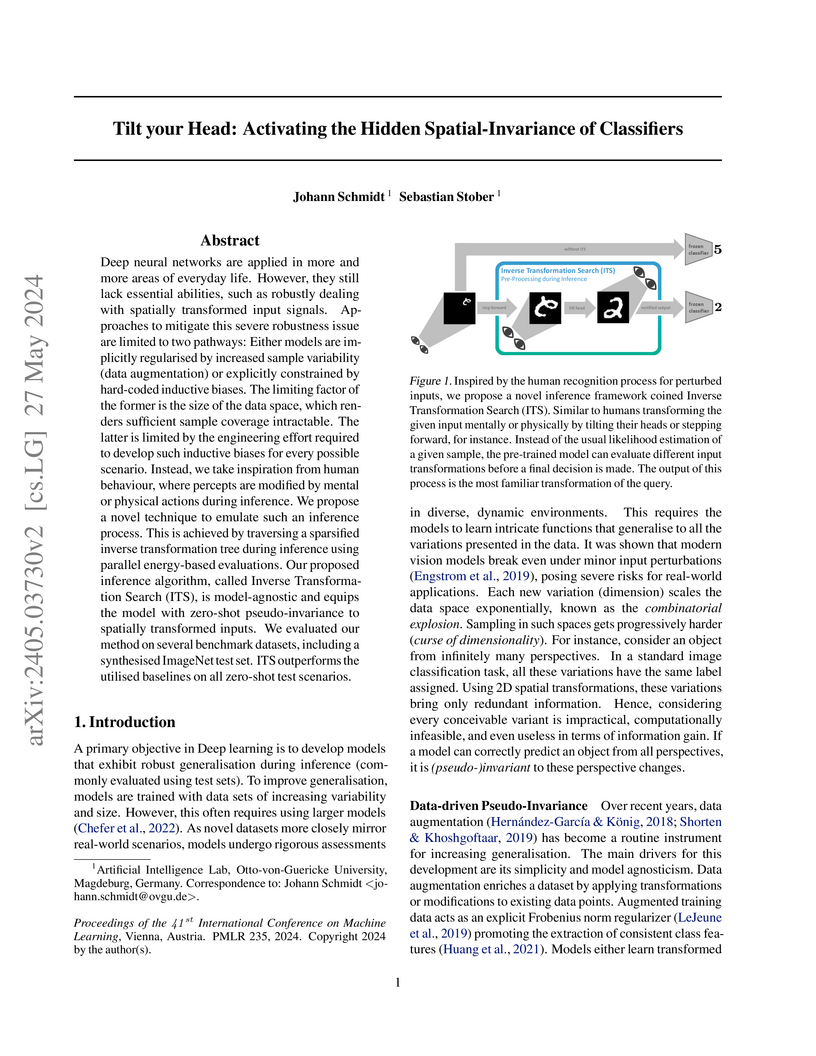

Deep neural networks are applied in more and more areas of everyday life. However, they still lack essential abilities, such as robustly dealing with spatially transformed input signals. Approaches to mitigate this severe robustness issue are limited to two pathways: Either models are implicitly regularised by increased sample variability (data augmentation) or explicitly constrained by hard-coded inductive biases. The limiting factor of the former is the size of the data space, which renders sufficient sample coverage intractable. The latter is limited by the engineering effort required to develop such inductive biases for every possible scenario. Instead, we take inspiration from human behaviour, where percepts are modified by mental or physical actions during inference. We propose a novel technique to emulate such an inference process for neural nets. This is achieved by traversing a sparsified inverse transformation tree during inference using parallel energy-based evaluations. Our proposed inference algorithm, called Inverse Transformation Search (ITS), is model-agnostic and equips the model with zero-shot pseudo-invariance to spatially transformed inputs. We evaluated our method on several benchmark datasets, including a synthesised ImageNet test set. ITS outperforms the utilised baselines on all zero-shot test scenarios.

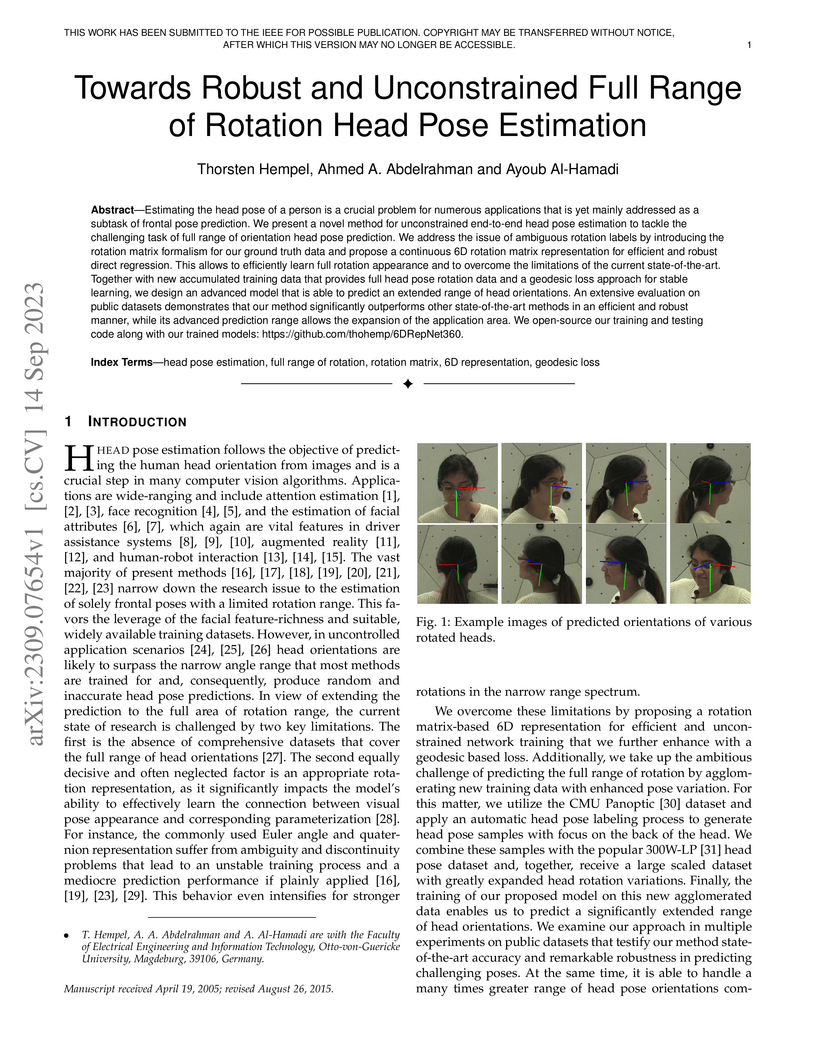

Estimating the head pose of a person is a crucial problem for numerous

applications that is yet mainly addressed as a subtask of frontal pose

prediction. We present a novel method for unconstrained end-to-end head pose

estimation to tackle the challenging task of full range of orientation head

pose prediction. We address the issue of ambiguous rotation labels by

introducing the rotation matrix formalism for our ground truth data and propose

a continuous 6D rotation matrix representation for efficient and robust direct

regression. This allows to efficiently learn full rotation appearance and to

overcome the limitations of the current state-of-the-art. Together with new

accumulated training data that provides full head pose rotation data and a

geodesic loss approach for stable learning, we design an advanced model that is

able to predict an extended range of head orientations. An extensive evaluation

on public datasets demonstrates that our method significantly outperforms other

state-of-the-art methods in an efficient and robust manner, while its advanced

prediction range allows the expansion of the application area. We open-source

our training and testing code along with our trained models:

this https URL

Deception detection is an interdisciplinary field attracting researchers from psychology, criminology, computer science, and economics. We propose a multimodal approach combining deep learning and discriminative models for automated deception detection. Using video modalities, we employ convolutional end-to-end learning to analyze gaze, head pose, and facial expressions, achieving promising results compared to state-of-the-art methods. Due to limited training data, we also utilize discriminative models for deception detection. Although sequence-to-class approaches are explored, discriminative models outperform them due to data scarcity. Our approach is evaluated on five datasets, including a new Rolling-Dice Experiment motivated by economic factors. Results indicate that facial expressions outperform gaze and head pose, and combining modalities with feature selection enhances detection performance. Differences in expressed features across datasets emphasize the importance of scenario-specific training data and the influence of context on deceptive behavior. Cross-dataset experiments reinforce these findings. Despite the challenges posed by low-stake datasets, including the Rolling-Dice Experiment, deception detection performance exceeds chance levels. Our proposed multimodal approach and comprehensive evaluation shed light on the potential of automating deception detection from video modalities, opening avenues for future research.

There is an increasing convergence between biologically plausible

computational models of inference and learning with local update rules and the

global gradient-based optimization of neural network models employed in machine

learning. One particularly exciting connection is the correspondence between

the locally informed optimization in predictive coding networks and the error

backpropagation algorithm that is used to train state-of-the-art deep

artificial neural networks. Here we focus on the related, but still largely

under-explored connection between precision weighting in predictive coding

networks and the Natural Gradient Descent algorithm for deep neural networks.

Precision-weighted predictive coding is an interesting candidate for scaling up

uncertainty-aware optimization -- particularly for models with large parameter

spaces -- due to its distributed nature of the optimization process and the

underlying local approximation of the Fisher information metric, the adaptive

learning rate that is central to Natural Gradient Descent. Here, we show that

hierarchical predictive coding networks with learnable precision indeed are

able to solve various supervised and unsupervised learning tasks with

performance comparable to global backpropagation with natural gradients and

outperform their classical gradient descent counterpart on tasks where high

amounts of noise are embedded in data or label inputs. When applied to

unsupervised auto-encoding of image inputs, the deterministic network produces

hierarchically organized and disentangled embeddings, hinting at the close

connections between predictive coding and hierarchical variational inference.

We propose a new symplectic convolutional neural network (CNN) architecture by leveraging symplectic neural networks, proper symplectic decomposition, and tensor techniques. Specifically, we first introduce a mathematically equivalent form of the convolution layer and then, using symplectic neural networks, we demonstrate a way to parameterize the layers of the CNN to ensure that the convolution layer remains symplectic. To construct a complete autoencoder, we introduce a symplectic pooling layer. We demonstrate the performance of the proposed neural network on three examples: the wave equation, the nonlinear Schrödinger (NLS) equation, and the sine-Gordon equation. The numerical results indicate that the symplectic CNN outperforms the linear symplectic autoencoder obtained via proper symplectic decomposition.

Heterogeneous computing systems, which combine general-purpose processors with specialized accelerators, are increasingly important for optimizing the performance of modern applications. A central challenge is to decide which parts of an application should be executed on which accelerator or, more generally, how to map the tasks of an application to available devices. Predicting the impact of a change in a task mapping on the overall makespan is non-trivial. While there are very capable simulators, these generally require a full implementation of the tasks in question, which is particularly time-intensive for programmable logic. A promising alternative is to use a purely analytical function, which allows for very fast predictions, but abstracts significantly from reality. Bridging the gap between theory and practice poses a significant challenge to algorithm developers. This paper aims to aid in the development of rapid makespan prediction algorithms by providing a highly flexible evaluation framework for heterogeneous systems consisting of CPUs, GPUs and FPGAs, which is capable of collecting real-world makespan results based on abstract task graph descriptions. We analyze to what extent actual makespans can be predicted by existing analytical approaches. Furthermore, we present common challenges that arise from high-level characteristics such as data transfer overhead and device congestion in heterogeneous systems.

22 Jun 2022

We propose the Bayes-UCBVI algorithm for reinforcement learning in tabular,

stage-dependent, episodic Markov decision process: a natural extension of the

Bayes-UCB algorithm by Kaufmann et al. (2012) for multi-armed bandits. Our

method uses the quantile of a Q-value function posterior as upper confidence

bound on the optimal Q-value function. For Bayes-UCBVI, we prove a regret bound

of order O(H3SAT) where H is the length of one episode,

S is the number of states, A the number of actions, T the number of

episodes, that matches the lower-bound of Ω(H3SAT) up to

poly-log terms in H,S,A,T for a large enough T. To the best of our

knowledge, this is the first algorithm that obtains an optimal dependence on

the horizon H (and S) without the need for an involved Bernstein-like bonus

or noise. Crucial to our analysis is a new fine-grained anti-concentration

bound for a weighted Dirichlet sum that can be of independent interest. We then

explain how Bayes-UCBVI can be easily extended beyond the tabular setting,

exhibiting a strong link between our algorithm and Bayesian bootstrap (Rubin,

1981).

29 Oct 2024

There has been a surge of interest in leveraging speech as a marker of health for a wide spectrum of conditions. The underlying premise is that any neurological, mental, or physical deficits that impact speech production can be objectively assessed via automated analysis of speech. Recent advances in speech-based Artificial Intelligence (AI) models for diagnosing and tracking mental health, cognitive, and motor disorders often use supervised learning, similar to mainstream speech technologies like recognition and verification. However, clinical speech AI has distinct challenges, including the need for specific elicitation tasks, small available datasets, diverse speech representations, and uncertain diagnostic labels. As a result, application of the standard supervised learning paradigm may lead to models that perform well in controlled settings but fail to generalize in real-world clinical deployments. With translation into real-world clinical scenarios in mind, this tutorial paper provides an overview of the key components required for robust development of clinical speech AI. Specifically, this paper will cover the design of speech elicitation tasks and protocols most appropriate for different clinical conditions, collection of data and verification of hardware, development and validation of speech representations designed to measure clinical constructs of interest, development of reliable and robust clinical prediction models, and ethical and participant considerations for clinical speech AI. The goal is to provide comprehensive guidance on building models whose inputs and outputs link to the more interpretable and clinically meaningful aspects of speech, that can be interrogated and clinically validated on clinical datasets, and that adhere to ethical, privacy, and security considerations by design.

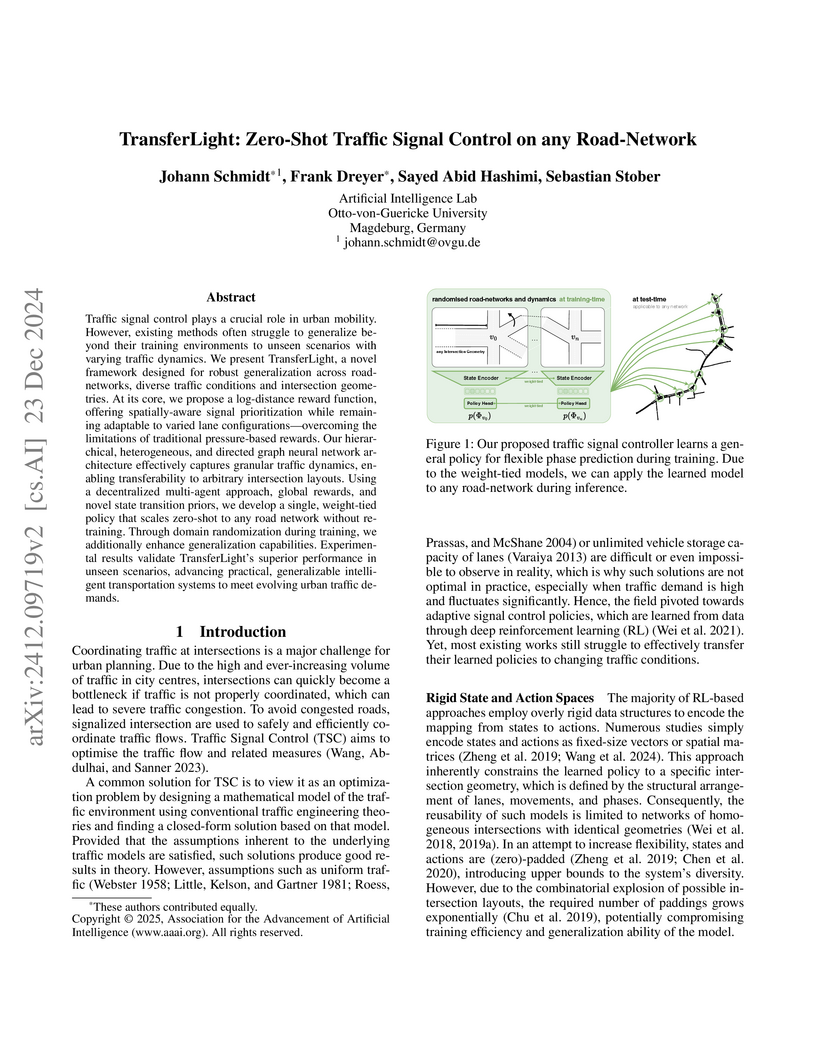

Traffic signal control plays a crucial role in urban mobility. However,

existing methods often struggle to generalize beyond their training

environments to unseen scenarios with varying traffic dynamics. We present

TransferLight, a novel framework designed for robust generalization across

road-networks, diverse traffic conditions and intersection geometries. At its

core, we propose a log-distance reward function, offering spatially-aware

signal prioritization while remaining adaptable to varied lane configurations -

overcoming the limitations of traditional pressure-based rewards. Our

hierarchical, heterogeneous, and directed graph neural network architecture

effectively captures granular traffic dynamics, enabling transferability to

arbitrary intersection layouts. Using a decentralized multi-agent approach,

global rewards, and novel state transition priors, we develop a single,

weight-tied policy that scales zero-shot to any road network without

re-training. Through domain randomization during training, we additionally

enhance generalization capabilities. Experimental results validate

TransferLight's superior performance in unseen scenarios, advancing practical,

generalizable intelligent transportation systems to meet evolving urban traffic

demands.

This paper deals with differentiable dynamical models congruent with neural process theories that cast brain function as the hierarchical refinement of an internal generative model explaining observations. Our work extends existing implementations of gradient-based predictive coding with automatic differentiation and allows to integrate deep neural networks for non-linear state parameterization. Gradient-based predictive coding optimises inferred states and weights locally in for each layer by optimising precision-weighted prediction errors that propagate from stimuli towards latent states. Predictions flow backwards, from latent states towards lower layers. The model suggested here optimises hierarchical and dynamical predictions of latent states. Hierarchical predictions encode expected content and hierarchical structure. Dynamical predictions capture changes in the encoded content along with higher order derivatives. Hierarchical and dynamical predictions interact and address different aspects of the same latent states. We apply the model to various perception and planning tasks on sequential data and show their mutual dependence. In particular, we demonstrate how learning sampling distances in parallel address meaningful locations data sampled at discrete time steps. We discuss possibilities to relax the assumption of linear hierarchies in favor of more flexible graph structure with emergent properties. We compare the granular structure of the model with canonical microcircuits describing predictive coding in biological networks and review the connection to Markov Blankets as a tool to characterize modularity. A final section sketches out ideas for efficient perception and planning in nested spatio-temporal hierarchies.

External localization is an essential part for the indoor operation of small

or cost-efficient robots, as they are used, for example, in swarm robotics. We

introduce a two-stage localization and instance identification framework for

arbitrary robots based on convolutional neural networks. Object detection is

performed on an external camera image of the operation zone providing robot

bounding boxes for an identification and orientation estimation convolutional

neural network. Additionally, we propose a process to generate the necessary

training data. The framework was evaluated with 3 different robot types and

various identification patterns. We have analyzed the main framework

hyperparameters providing recommendations for the framework operation settings.

We achieved up to 98% mAP@IOU0.5 and only 1.6{\deg} orientation error, running

with a frame rate of 50 Hz on a GPU.

Ultrasound offers promising applications in biology and chemistry, but quantifying local ultrasound conditions remains challenging due to the lack of non-invasive measurement tools. We introduce antibubbles as novel optical reporters of local ultrasound pressure. These liquid-core, air-shell structures encapsulate fluorescent payloads, releasing them upon exposure to low-intensity ultrasound. We demonstrate their versatility by fabricating antibubbles with hydrophilic and hydrophobic payloads, revealing payload-dependent encapsulation efficiency and release dynamics. Using acoustic holograms, we showcase precise spatial control of payload release, enabling visualization of complex ultrasound fields. High-speed fluorescence imaging reveals a gentle, single-shot release mechanism occurring within 20-50 ultrasound cycles. It is thus possible to determine via an optical fluorescence marker what the applied ultrasound pressure was. This work thereby introduces a non-invasive method for mapping ultrasound fields in complex environments, potentially accelerating research in ultrasound-based therapies and processes. The long-term stability and versatility of these antibubble reporters suggest broad applicability in studying and optimizing ultrasound effects across various biological and chemical systems.

Modern Code Review (MCR) is a standard practice in software engineering, yet it demands substantial time and resource investments. Recent research has increasingly explored automating core review tasks using machine learning (ML) and deep learning (DL). As a result, there is substantial variability in task definitions, datasets, and evaluation procedures. This study provides the first comprehensive analysis of MCR automation research, aiming to characterize the field's evolution, formalize learning tasks, highlight methodological challenges, and offer actionable recommendations to guide future research. Focusing on the primary code review tasks, we systematically surveyed 691 publications and identified 24 relevant studies published between May 2015 and April 2024. Each study was analyzed in terms of tasks, models, metrics, baselines, results, validity concerns, and artifact availability. In particular, our analysis reveals significant potential for standardization, including 48 task metric combinations, 22 of which were unique to their original paper, and limited dataset reuse. We highlight challenges and derive concrete recommendations for examples such as the temporal bias threat, which are rarely addressed so far. Our work contributes to a clearer overview of the field, supports the framing of new research, helps to avoid pitfalls, and promotes greater standardization in evaluation practices.

There are no more papers matching your filters at the moment.