Salesforce Research Asia

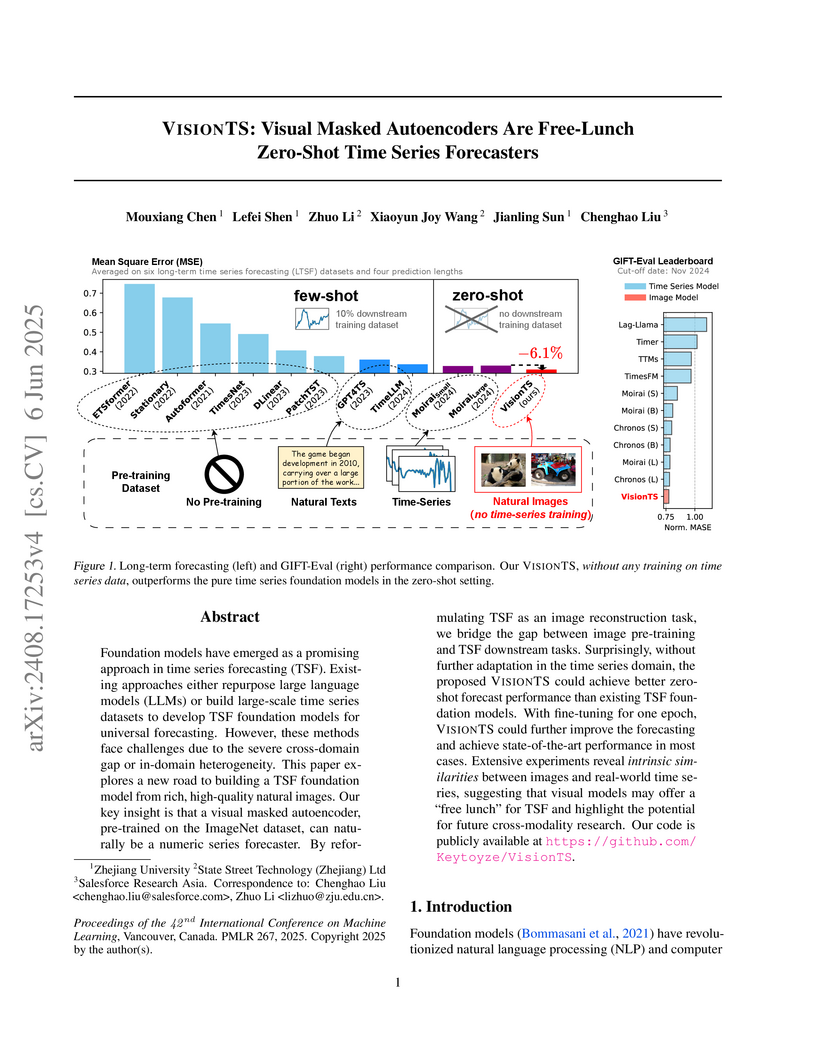

VISIONTS reformulates time series forecasting as an image reconstruction task, enabling visual masked autoencoders pre-trained on natural images to serve as zero-shot forecasters. This approach achieves state-of-the-art or comparable performance on numerous benchmarks, often surpassing dedicated time series foundation models without requiring time series-specific training.

Large language models (LLMs), with demonstrated reasoning abilities across

multiple domains, are largely underexplored for time-series reasoning (TsR),

which is ubiquitous in the real world. In this work, we propose TimerBed, the

first comprehensive testbed for evaluating LLMs' TsR performance. Specifically,

TimerBed includes stratified reasoning patterns with real-world tasks,

comprehensive combinations of LLMs and reasoning strategies, and various

supervised models as comparison anchors. We perform extensive experiments with

TimerBed, test multiple current beliefs, and verify the initial failures of

LLMs in TsR, evidenced by the ineffectiveness of zero shot (ZST) and

performance degradation of few shot in-context learning (ICL). Further, we

identify one possible root cause: the numerical modeling of data. To address

this, we propose a prompt-based solution VL-Time, using visualization-modeled

data and language-guided reasoning. Experimental results demonstrate that

Vl-Time enables multimodal LLMs to be non-trivial ZST and powerful ICL

reasoners for time series, achieving about 140% average performance improvement

and 99% average token costs reduction.

Transformers have been actively studied for time-series forecasting in recent years. While often showing promising results in various scenarios, traditional Transformers are not designed to fully exploit the characteristics of time-series data and thus suffer some fundamental limitations, e.g., they generally lack of decomposition capability and interpretability, and are neither effective nor efficient for long-term forecasting. In this paper, we propose ETSFormer, a novel time-series Transformer architecture, which exploits the principle of exponential smoothing in improving Transformers for time-series forecasting. In particular, inspired by the classical exponential smoothing methods in time-series forecasting, we propose the novel exponential smoothing attention (ESA) and frequency attention (FA) to replace the self-attention mechanism in vanilla Transformers, thus improving both accuracy and efficiency. Based on these, we redesign the Transformer architecture with modular decomposition blocks such that it can learn to decompose the time-series data into interpretable time-series components such as level, growth and seasonality. Extensive experiments on various time-series benchmarks validate the efficacy and advantages of the proposed method. Code is available at this https URL.

Deep learning has been actively studied for time series forecasting, and the mainstream paradigm is based on the end-to-end training of neural network architectures, ranging from classical LSTM/RNNs to more recent TCNs and Transformers. Motivated by the recent success of representation learning in computer vision and natural language processing, we argue that a more promising paradigm for time series forecasting, is to first learn disentangled feature representations, followed by a simple regression fine-tuning step -- we justify such a paradigm from a causal perspective. Following this principle, we propose a new time series representation learning framework for time series forecasting named CoST, which applies contrastive learning methods to learn disentangled seasonal-trend representations. CoST comprises both time domain and frequency domain contrastive losses to learn discriminative trend and seasonal representations, respectively. Extensive experiments on real-world datasets show that CoST consistently outperforms the state-of-the-art methods by a considerable margin, achieving a 21.3% improvement in MSE on multivariate benchmarks. It is also robust to various choices of backbone encoders, as well as downstream regressors. Code is available at this https URL.

What are the units of text that we want to model? From bytes to multi-word

expressions, text can be analyzed and generated at many granularities. Until

recently, most natural language processing (NLP) models operated over words,

treating those as discrete and atomic tokens, but starting with byte-pair

encoding (BPE), subword-based approaches have become dominant in many areas,

enabling small vocabularies while still allowing for fast inference. Is the end

of the road character-level model or byte-level processing? In this survey, we

connect several lines of work from the pre-neural and neural era, by showing

how hybrid approaches of words and characters as well as subword-based

approaches based on learned segmentation have been proposed and evaluated. We

conclude that there is and likely will never be a silver bullet singular

solution for all applications and that thinking seriously about tokenization

remains important for many applications.

The integration of Large Language Models (LLMs) with Graph Representation Learning (GRL) marks a significant evolution in analyzing complex data structures. This collaboration harnesses the sophisticated linguistic capabilities of LLMs to improve the contextual understanding and adaptability of graph models, thereby broadening the scope and potential of GRL. Despite a growing body of research dedicated to integrating LLMs into the graph domain, a comprehensive review that deeply analyzes the core components and operations within these models is notably lacking. Our survey fills this gap by proposing a novel taxonomy that breaks down these models into primary components and operation techniques from a novel technical perspective. We further dissect recent literature into two primary components including knowledge extractors and organizers, and two operation techniques including integration and training stratigies, shedding light on effective model design and training strategies. Additionally, we identify and explore potential future research avenues in this nascent yet underexplored field, proposing paths for continued progress.

Recent years have witnessed the success of introducing deep learning models to time series forecasting. From a data generation perspective, we illustrate that existing models are susceptible to distribution shifts driven by temporal contexts, whether observed or unobserved. Such context-driven distribution shift (CDS) introduces biases in predictions within specific contexts and poses challenges for conventional training paradigms. In this paper, we introduce a universal calibration methodology for the detection and adaptation of CDS with a trained model. To this end, we propose a novel CDS detector, termed the "residual-based CDS detector" or "Reconditionor", which quantifies the model's vulnerability to CDS by evaluating the mutual information between prediction residuals and their corresponding contexts. A high Reconditionor score indicates a severe susceptibility, thereby necessitating model adaptation. In this circumstance, we put forth a straightforward yet potent adapter framework for model calibration, termed the "sample-level contextualized adapter" or "SOLID". This framework involves the curation of a contextually similar dataset to the provided test sample and the subsequent fine-tuning of the model's prediction layer with a limited number of steps. Our theoretical analysis demonstrates that this adaptation strategy can achieve an optimal bias-variance trade-off. Notably, our proposed Reconditionor and SOLID are model-agnostic and readily adaptable to a wide range of models. Extensive experiments show that SOLID consistently enhances the performance of current forecasting models on real-world datasets, especially on cases with substantial CDS detected by the proposed Reconditionor, thus validating the effectiveness of the calibration approach.

According to Complementary Learning Systems (CLS)

theory~\citep{mcclelland1995there} in neuroscience, humans do effective

\emph{continual learning} through two complementary systems: a fast learning

system centered on the hippocampus for rapid learning of the specifics and

individual experiences, and a slow learning system located in the neocortex for

the gradual acquisition of structured knowledge about the environment.

Motivated by this theory, we propose a novel continual learning framework named

"DualNet", which comprises a fast learning system for supervised learning of

pattern-separated representation from specific tasks and a slow learning system

for unsupervised representation learning of task-agnostic general

representation via a Self-Supervised Learning (SSL) technique. The two fast and

slow learning systems are complementary and work seamlessly in a holistic

continual learning framework. Our extensive experiments on two challenging

continual learning benchmarks of CORE50 and miniImageNet show that DualNet

outperforms state-of-the-art continual learning methods by a large margin. We

further conduct ablation studies of different SSL objectives to validate

DualNet's efficacy, robustness, and scalability. Code will be made available

upon acceptance.

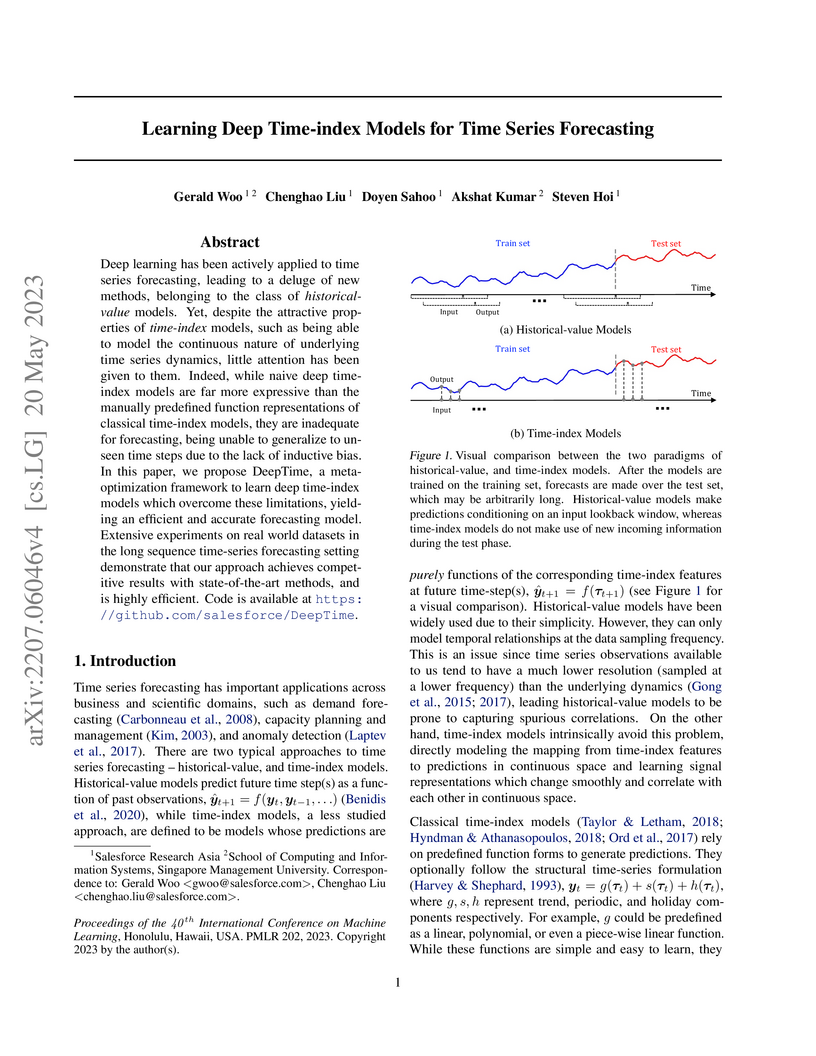

Deep learning has been actively applied to time series forecasting, leading to a deluge of new methods, belonging to the class of historical-value models. Yet, despite the attractive properties of time-index models, such as being able to model the continuous nature of underlying time series dynamics, little attention has been given to them. Indeed, while naive deep time-index models are far more expressive than the manually predefined function representations of classical time-index models, they are inadequate for forecasting, being unable to generalize to unseen time steps due to the lack of inductive bias. In this paper, we propose DeepTime, a meta-optimization framework to learn deep time-index models which overcome these limitations, yielding an efficient and accurate forecasting model. Extensive experiments on real world datasets in the long sequence time-series forecasting setting demonstrate that our approach achieves competitive results with state-of-the-art methods, and is highly efficient. Code is available at this https URL.

LFPT5 unifies lifelong and few-shot language learning through prompt tuning of a frozen T5 model, effectively mitigating catastrophic forgetting across diverse NLP tasks from limited data. The framework significantly outperforms prior lifelong learning baselines across sequence labeling, text classification, and text generation tasks while maintaining high parameter efficiency.

Existing continual learning methods use Batch Normalization (BN) to

facilitate training and improve generalization across tasks. However, the

non-i.i.d and non-stationary nature of continual learning data, especially in

the online setting, amplify the discrepancy between training and testing in BN

and hinder the performance of older tasks. In this work, we study the

cross-task normalization effect of BN in online continual learning where BN

normalizes the testing data using moments biased towards the current task,

resulting in higher catastrophic forgetting. This limitation motivates us to

propose a simple yet effective method that we call Continual Normalization (CN)

to facilitate training similar to BN while mitigating its negative effect.

Extensive experiments on different continual learning algorithms and online

scenarios show that CN is a direct replacement for BN and can provide

substantial performance improvements. Our implementation is available at

\url{this https URL}.

An important aspect of health monitoring is effective logging of food consumption. This can help management of diet-related diseases like obesity, diabetes, and even cardiovascular diseases. Moreover, food logging can help fitness enthusiasts, and people who wanting to achieve a target weight. However, food-logging is cumbersome, and requires not only taking additional effort to note down the food item consumed regularly, but also sufficient knowledge of the food item consumed (which is difficult due to the availability of a wide variety of cuisines). With increasing reliance on smart devices, we exploit the convenience offered through the use of smart phones and propose a smart-food logging system: FoodAI, which offers state-of-the-art deep-learning based image recognition capabilities. FoodAI has been developed in Singapore and is particularly focused on food items commonly consumed in Singapore. FoodAI models were trained on a corpus of 400,000 food images from 756 different classes. In this paper we present extensive analysis and insights into the development of this system. FoodAI has been deployed as an API service and is one of the components powering Healthy 365, a mobile app developed by Singapore's Heath Promotion Board. We have over 100 registered organizations (universities, companies, start-ups) subscribing to this service and actively receive several API requests a day. FoodAI has made food logging convenient, aiding smart consumption and a healthy lifestyle.

Root Cause Analysis (RCA) of any service-disrupting incident is one of the

most critical as well as complex tasks in IT processes, especially for cloud

industry leaders like Salesforce. Typically RCA investigation leverages

data-sources like application error logs or service call traces. However a rich

goldmine of root cause information is also hidden in the natural language

documentation of the past incidents investigations by domain experts. This is

generally termed as Problem Review Board (PRB) Data which constitute a core

component of IT Incident Management. However, owing to the raw unstructured

nature of PRBs, such root cause knowledge is not directly reusable by manual or

automated pipelines for RCA of new incidents. This motivates us to leverage

this widely-available data-source to build an Incident Causation Analysis (ICA)

engine, using SoTA neural NLP techniques to extract targeted information and

construct a structured Causal Knowledge Graph from PRB documents. ICA forms the

backbone of a simple-yet-effective Retrieval based RCA for new incidents,

through an Information Retrieval system to search and rank past incidents and

detect likely root causes from them, given the incident symptom. In this work,

we present ICA and the downstream Incident Search and Retrieval based RCA

pipeline, built at Salesforce, over 2K documented cloud service incident

investigations collected over a few years. We also establish the effectiveness

of ICA and the downstream tasks through various quantitative benchmarks,

qualitative analysis as well as domain expert's validation and real incident

case studies after deployment.

Video-grounded dialogue systems aim to integrate video understanding and dialogue understanding to generate responses that are relevant to both the dialogue and video context. Most existing approaches employ deep learning models and have achieved remarkable performance, given the relatively small datasets available. However, the results are partly accomplished by exploiting biases in the datasets rather than developing multimodal reasoning, resulting in limited generalization. In this paper, we propose a novel approach of Compositional Counterfactual Contrastive Learning (C3) to develop contrastive training between factual and counterfactual samples in video-grounded dialogues. Specifically, we design factual/counterfactual sampling based on the temporal steps in videos and tokens in dialogues and propose contrastive loss functions that exploit object-level or action-level variance. Different from prior approaches, we focus on contrastive hidden state representations among compositional output tokens to optimize the representation space in a generation setting. We achieved promising performance gains on the Audio-Visual Scene-Aware Dialogues (AVSD) benchmark and showed the benefits of our approach in grounding video and dialogue context.

Most existing object instance detection and segmentation models only work

well on fairly balanced benchmarks where per-category training sample numbers

are comparable, such as COCO. They tend to suffer performance drop on realistic

datasets that are usually long-tailed. This work aims to study and address such

open challenges. Specifically, we systematically investigate performance drop

of the state-of-the-art two-stage instance segmentation model Mask R-CNN on the

recent long-tail LVIS dataset, and unveil that a major cause is the inaccurate

classification of object proposals. Based on such an observation, we first

consider various techniques for improving long-tail classification performance

which indeed enhance instance segmentation results. We then propose a simple

calibration framework to more effectively alleviate classification head bias

with a bi-level class balanced sampling approach. Without bells and whistles,

it significantly boosts the performance of instance segmentation for tail

classes on the recent LVIS dataset and our sampled COCO-LT dataset. Our

analysis provides useful insights for solving long-tail instance detection and

segmentation problems, and the straightforward \emph{SimCal} method can serve

as a simple but strong baseline. With the method we have won the 2019 LVIS

challenge. Codes and models are available at this https URL

Continual learning aims to learn continuously from a stream of tasks and data in an online-learning fashion, being capable of exploiting what was learned previously to improve current and future tasks while still being able to perform well on the previous tasks. One common limitation of many existing continual learning methods is that they often train a model directly on all available training data without validation due to the nature of continual learning, thus suffering poor generalization at test time. In this work, we present a novel framework of continual learning named "Bilevel Continual Learning" (BCL) by unifying a {\it bilevel optimization} objective and a {\it dual memory management} strategy comprising both episodic memory and generalization memory to achieve effective knowledge transfer to future tasks and alleviate catastrophic forgetting on old tasks simultaneously. Our extensive experiments on continual learning benchmarks demonstrate the efficacy of the proposed BCL compared to many state-of-the-art methods. Our implementation is available at this https URL.

Video-grounded dialogue systems aim to integrate video understanding and dialogue understanding to generate responses that are relevant to both the dialogue and video context. Most existing approaches employ deep learning models and have achieved remarkable performance, given the relatively small datasets available. However, the results are partly accomplished by exploiting biases in the datasets rather than developing multimodal reasoning, resulting in limited generalization. In this paper, we propose a novel approach of Compositional Counterfactual Contrastive Learning (C3) to develop contrastive training between factual and counterfactual samples in video-grounded dialogues. Specifically, we design factual/counterfactual sampling based on the temporal steps in videos and tokens in dialogues and propose contrastive loss functions that exploit object-level or action-level variance. Different from prior approaches, we focus on contrastive hidden state representations among compositional output tokens to optimize the representation space in a generation setting. We achieved promising performance gains on the Audio-Visual Scene-Aware Dialogues (AVSD) benchmark and showed the benefits of our approach in grounding video and dialogue context.

Dynamic Time Warping (DTW) has become the pragmatic choice for measuring distance between time series. However, it suffers from unavoidable quadratic time complexity when the optimal alignment matrix needs to be computed exactly. This hinders its use in deep learning architectures, where layers involving DTW computations cause severe bottlenecks. To alleviate these issues, we introduce a new metric for time series data based on the Optimal Transport (OT) framework, called Optimal Transport Warping (OTW). OTW enjoys linear time/space complexity, is differentiable and can be parallelized. OTW enjoys a moderate sensitivity to time and shape distortions, making it ideal for time series. We show the efficacy and efficiency of OTW on 1-Nearest Neighbor Classification and Hierarchical Clustering, as well as in the case of using OTW instead of DTW in Deep Learning architectures.

Adversarial training has shown impressive success in learning bilingual

dictionary without any parallel data by mapping monolingual embeddings to a

shared space. However, recent work has shown superior performance for

non-adversarial methods in more challenging language pairs. In this work, we

revisit adversarial autoencoder for unsupervised word translation and propose

two novel extensions to it that yield more stable training and improved

results. Our method includes regularization terms to enforce cycle consistency

and input reconstruction, and puts the target encoders as an adversary against

the corresponding discriminator. Extensive experimentations with European,

non-European and low-resource languages show that our method is more robust and

achieves better performance than recently proposed adversarial and

non-adversarial approaches.

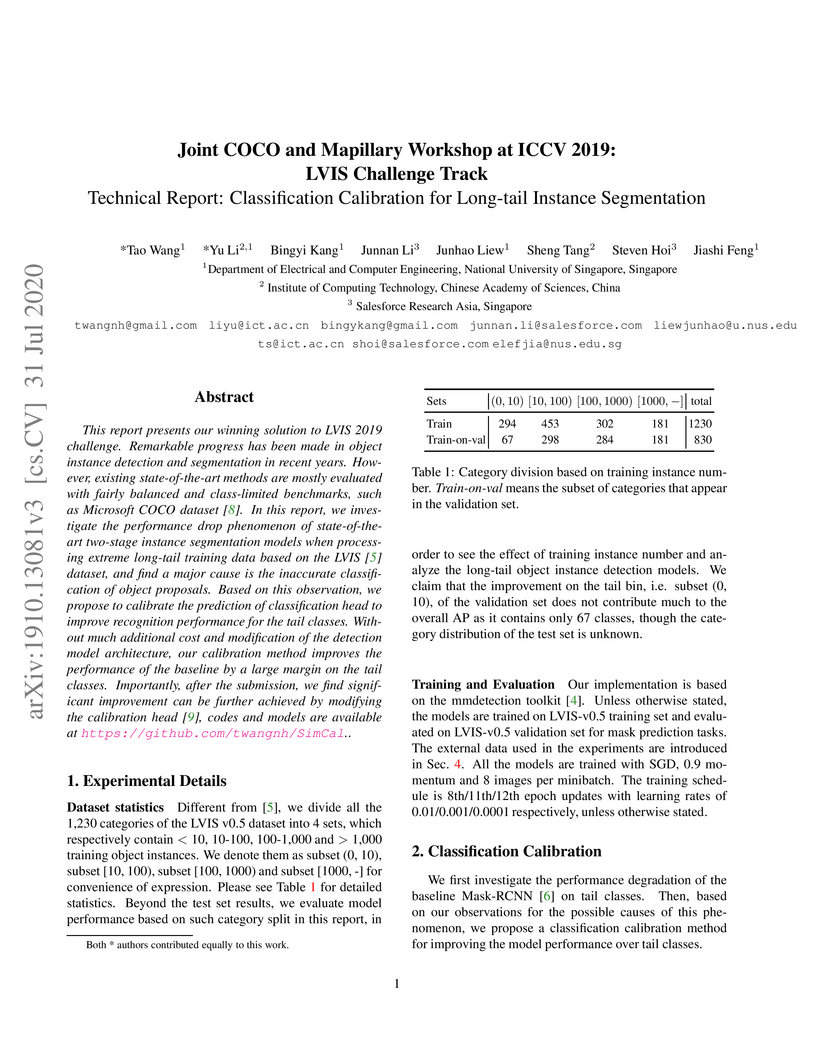

Remarkable progress has been made in object instance detection and

segmentation in recent years. However, existing state-of-the-art methods are

mostly evaluated with fairly balanced and class-limited benchmarks, such as

Microsoft COCO dataset [8]. In this report, we investigate the performance drop

phenomenon of state-of-the-art two-stage instance segmentation models when

processing extreme long-tail training data based on the LVIS [5] dataset, and

find a major cause is the inaccurate classification of object proposals. Based

on this observation, we propose to calibrate the prediction of classification

head to improve recognition performance for the tail classes. Without much

additional cost and modification of the detection model architecture, our

calibration method improves the performance of the baseline by a large margin

on the tail classes. Codes will be available. Importantly, after the

submission, we find significant improvement can be further achieved by

modifying the calibration head, which we will update later.

There are no more papers matching your filters at the moment.