Ask or search anything...

The paper empirically investigates the performance of multi-agent LLM systems across diverse agentic tasks and architectures, revealing that benefits are highly contingent on task structure rather than universal. It establishes a quantitative scaling principle, achieving 87% accuracy in predicting optimal agent architectures for unseen tasks based on model capability, task properties, and measured coordination dynamics.

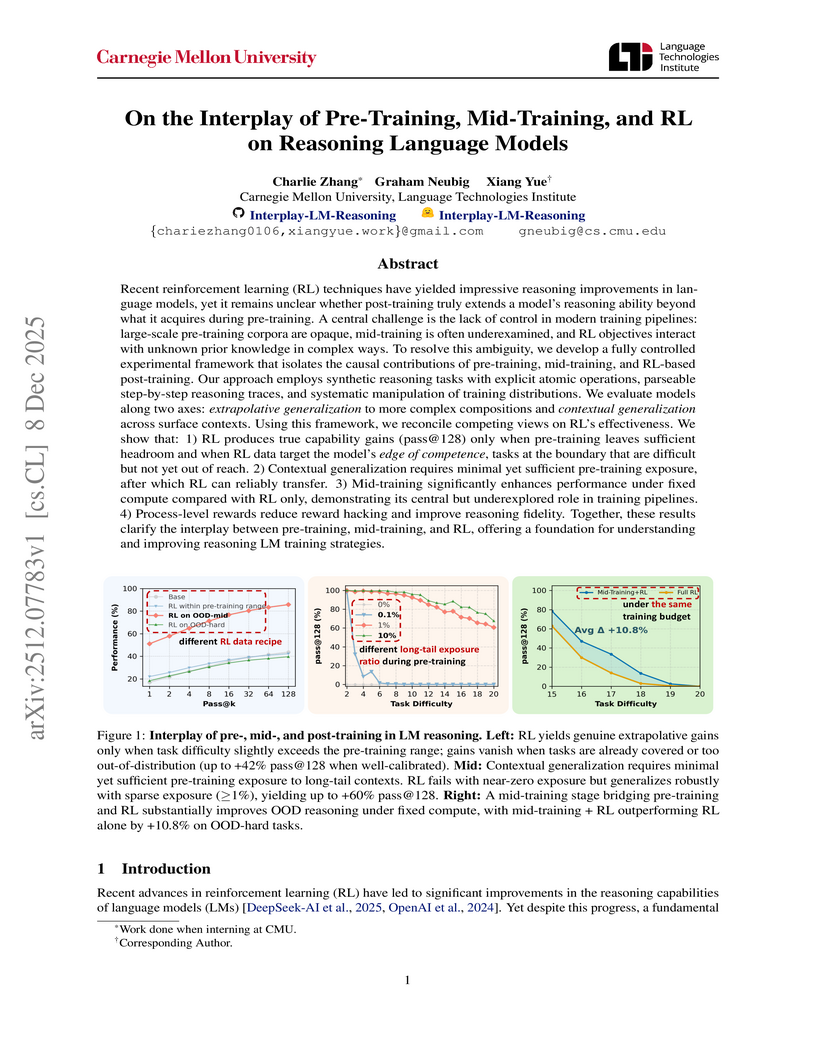

View blogThis research disentangles the causal effects of pre-training, mid-training, and reinforcement learning (RL) on language model reasoning using a controlled synthetic task framework. It establishes that RL extends reasoning capabilities only under specific conditions of pre-training exposure and data calibration, with mid-training playing a crucial role in bridging training stages and improving generalization.

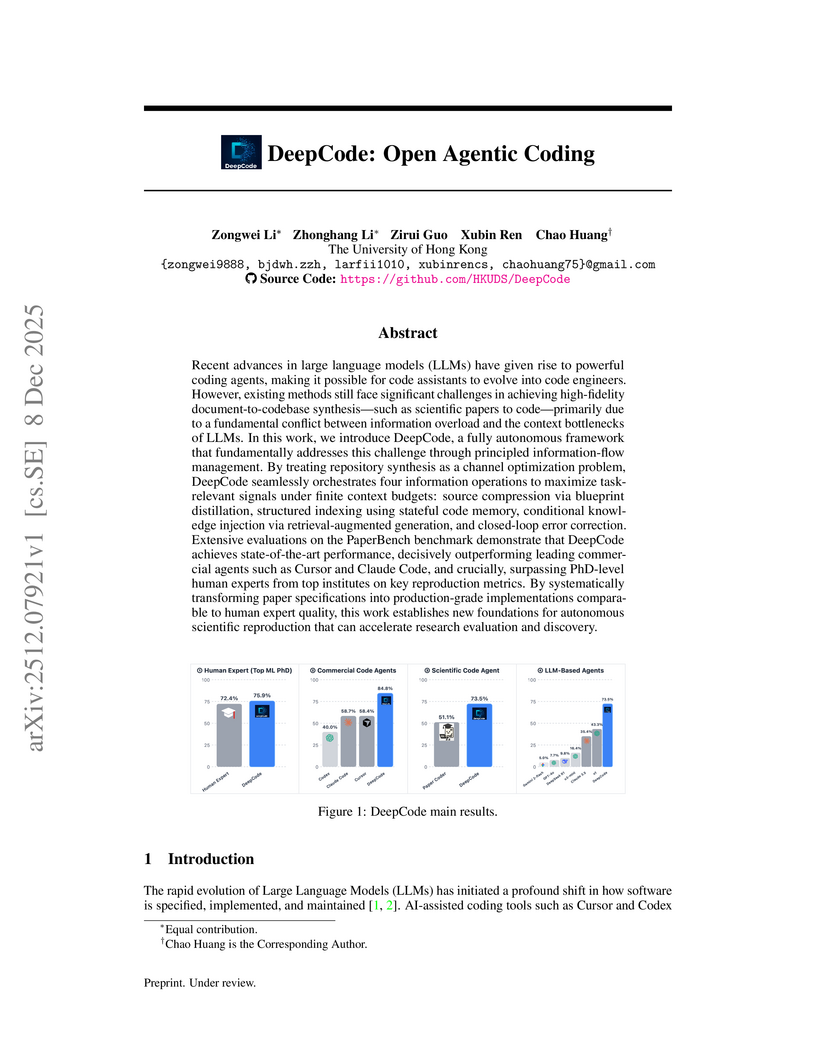

View blogDeepCode presents a multi-stage agentic framework for autonomously generating executable code repositories from scientific papers, achieving a 73.5% replication score on the PaperBench Code-Dev benchmark and exceeding PhD-level human expert performance.

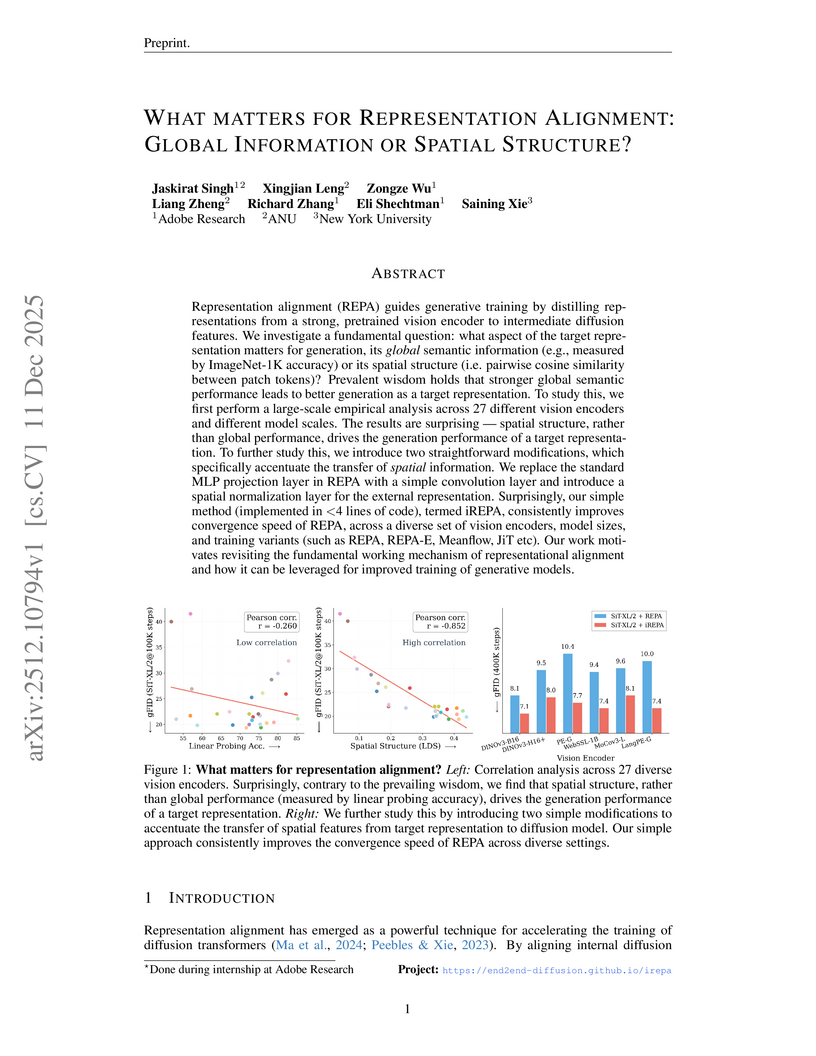

View blogA collaborative study from Adobe Research, Australian National University, and New York University investigates the drivers of representation alignment in diffusion models, revealing that the spatial structure of pretrained vision encoder features is more critical for high-quality image generation than global semantic understanding, which challenges a prevalent assumption. The work introduces iREPA, a refined alignment method involving minimal code changes, that consistently enhances the convergence speed and generation quality of Diffusion Transformers across various models and tasks.

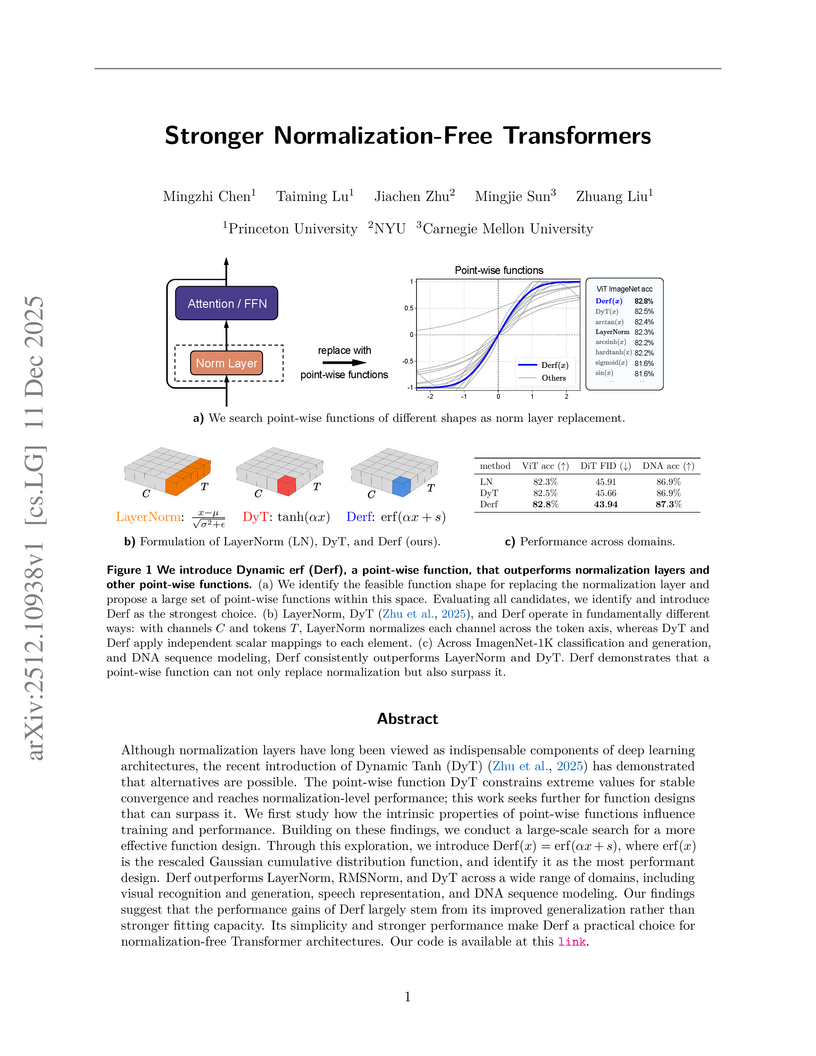

View blogResearchers from Princeton, NYU, and Carnegie Mellon introduced Dynamic erf (Derf), a point-wise function that replaces traditional normalization layers in Transformers. Derf consistently achieved higher accuracy or lower error rates across various modalities, including an average of 0.6 percentage points higher top-1 accuracy on ImageNet-1K for ViT-Base/Large models and improved FID scores for Diffusion Transformers.

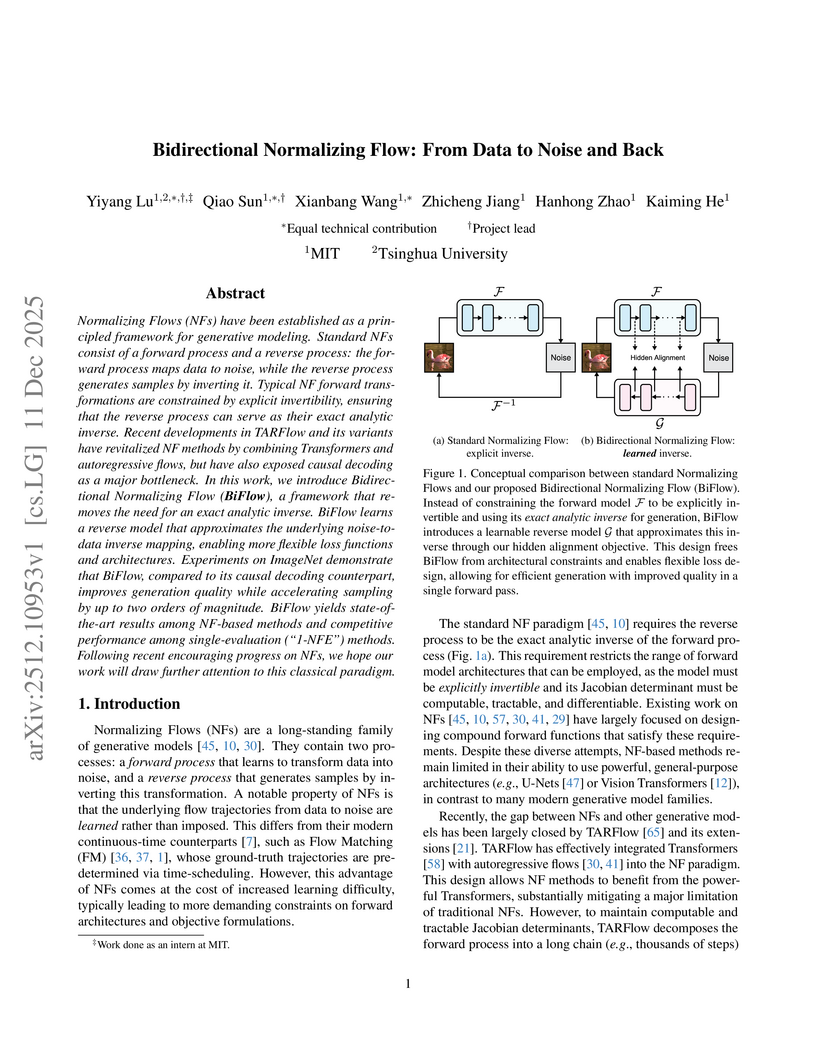

View blogBidirectional Normalizing Flow (BiFlow) introduces a method to learn an approximate inverse for Normalizing Flows, allowing for highly efficient and high-fidelity image generation. This approach achieves a state-of-the-art FID of 2.39 on ImageNet 256x256 while accelerating sampling speed by up to 697x compared to prior NF models.

View blogResearchers from Columbia University and NYU introduced Online World Modeling (OWM) and Adversarial World Modeling (AWM) to mitigate the train-test gap in world models for gradient-based planning (GBP). These methods enabled GBP to achieve performance comparable to or better than search-based planning algorithms like CEM, while simultaneously reducing computation time by an order of magnitude across various robotic tasks.

View blogThis work presents a comprehensive engineering guide for designing and deploying production-grade agentic AI workflows, offering nine best practices demonstrated through a multimodal news-to-media generation case study. The approach improves system determinism, reliability, and responsible AI integration, reducing issues like hallucination and enabling scalable, maintainable deployments.

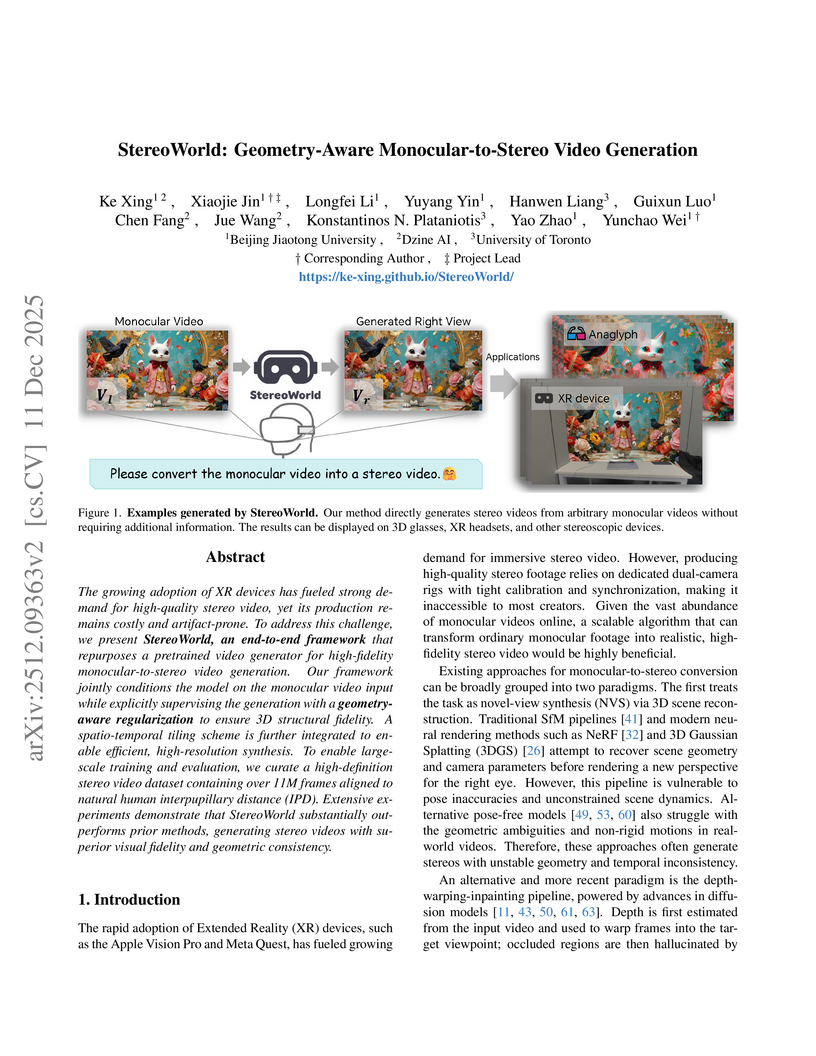

View blogStereoWorld introduces an end-to-end diffusion-based framework to convert monocular videos into high-fidelity, geometry-aware stereo videos. It leverages a pretrained video generative model, a large-scale IPD-aligned dataset, and explicit disparity and depth supervision to produce superior visual quality, temporal stability, and geometric accuracy for immersive XR experiences.

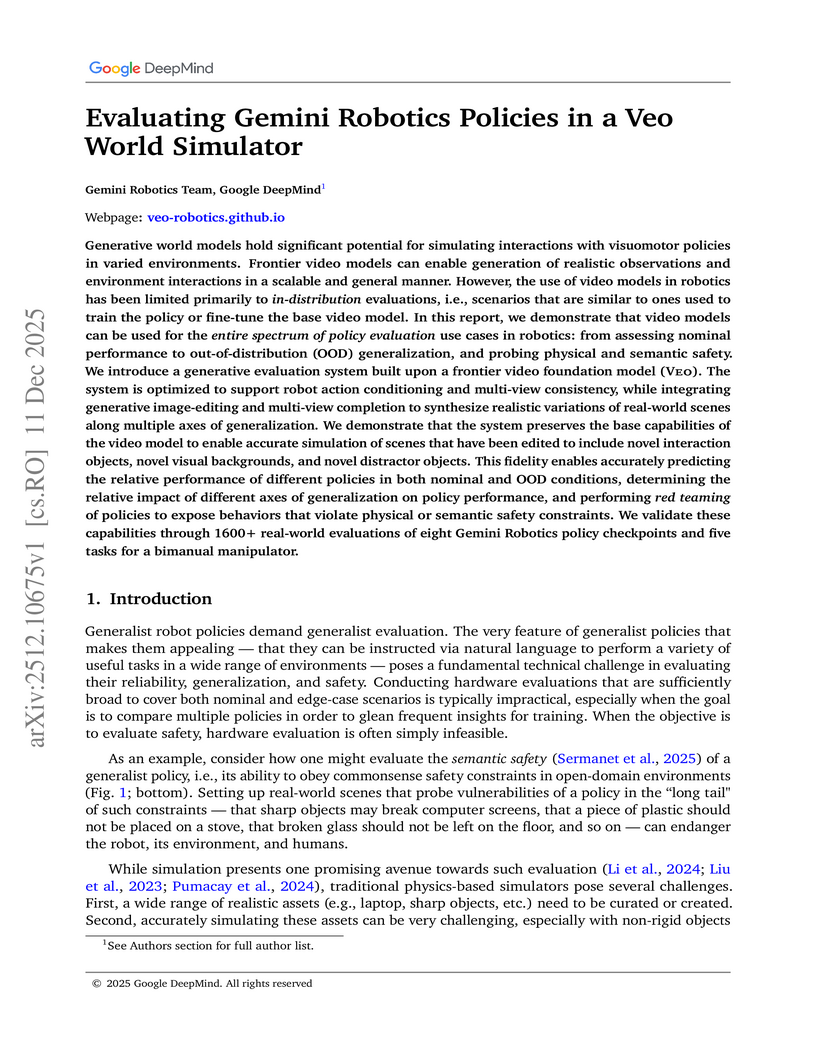

View blogGoogle DeepMind's Gemini Robotics Team developed a system utilizing the Veo video foundation model to evaluate generalist robot policies. The approach accurately predicts policy performance, generalization capabilities, and identifies safety vulnerabilities across various scenarios, demonstrating strong correlation with real-world outcomes.

View blogThe Native Parallel Reasoner (NPR) framework allows Large Language Models to autonomously acquire and deploy genuine parallel reasoning capabilities, without relying on external teacher models. Experiments show NPR improves accuracy by up to 24.5% over baselines and delivers up to 4.6 times faster inference, maintaining 100% parallel execution across various benchmarks.

View blogResearchers at Meta FAIR developed VL-JEPA, a Joint Embedding Predictive Architecture for vision-language tasks, which predicts abstract semantic embeddings rather than explicit tokens. This approach leads to improved computational efficiency and enables real-time applications through non-autoregressive prediction and selective decoding, while achieving competitive performance across classification, retrieval, and Visual Question Answering benchmarks.

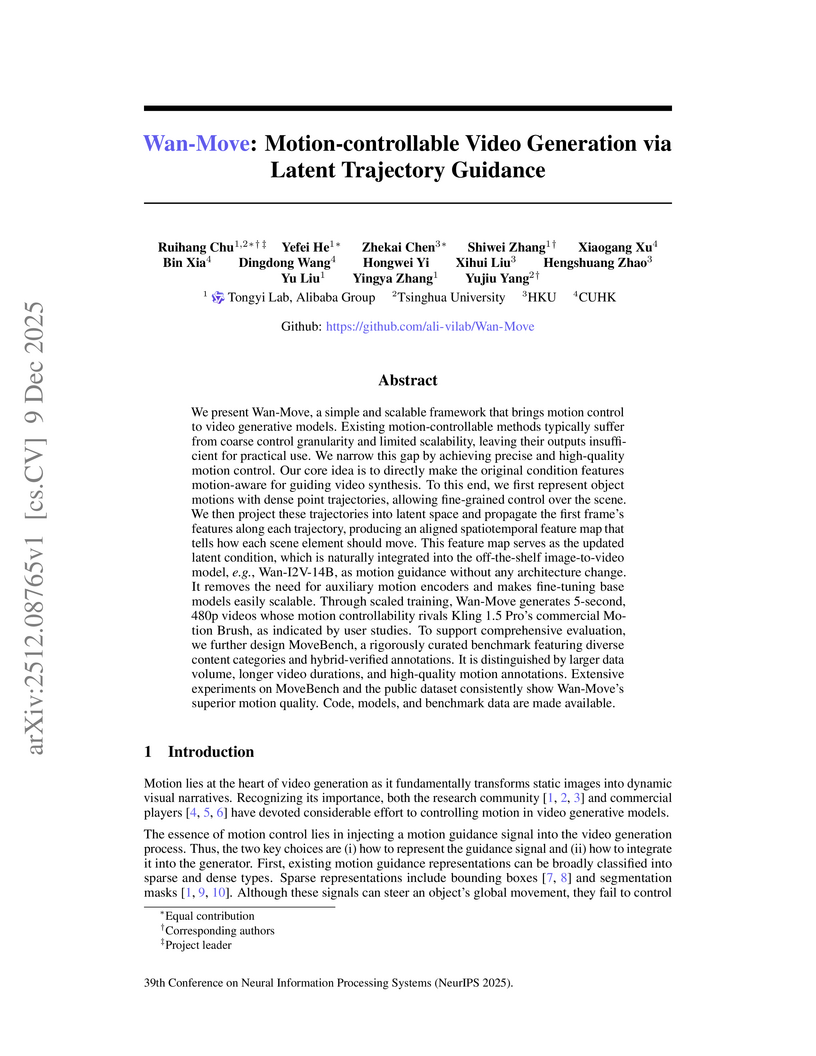

View blogWan-Move presents a framework for motion-controllable video generation that utilizes latent trajectory guidance to directly edit image condition features within a pre-trained image-to-video model. This method yields superior visual quality and precise motion adherence compared to state-of-the-art academic approaches and rivals commercial solutions, while also establishing MoveBench, a new comprehensive evaluation benchmark.

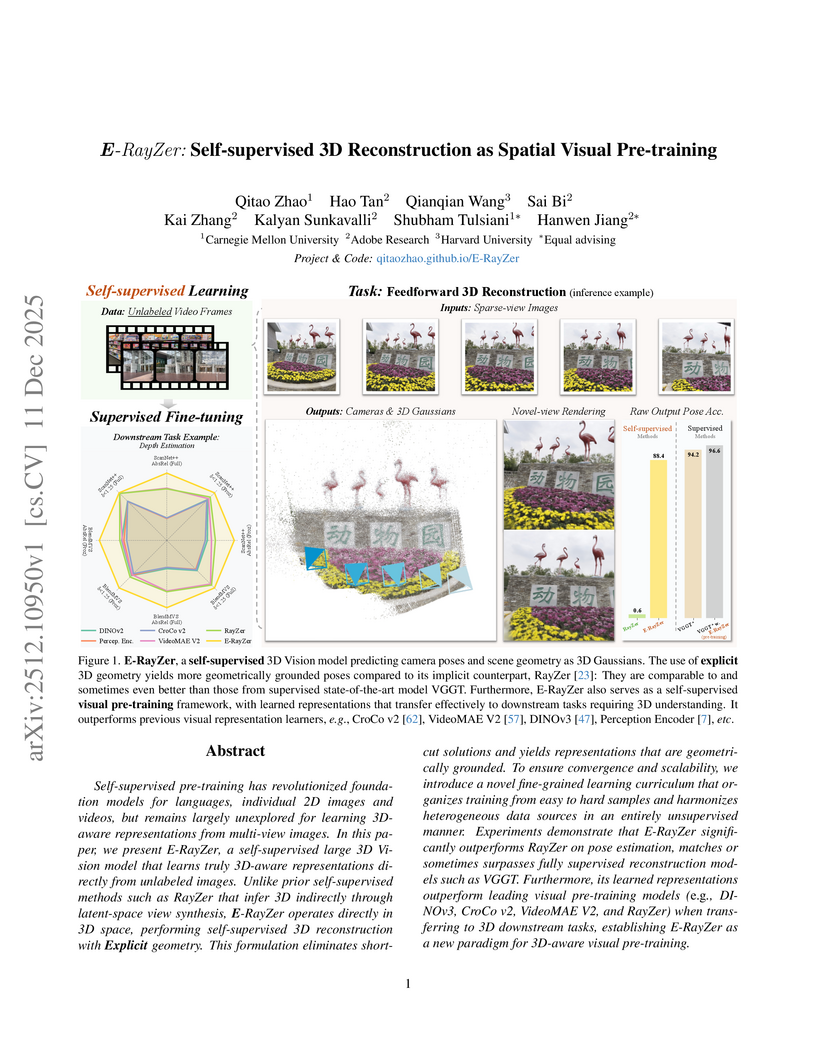

View blogE-RayZer introduces a self-supervised framework for 3D reconstruction, employing explicit 3D Gaussian Splatting to learn camera parameters and scene geometry directly from unlabeled multi-view images. The system achieves competitive performance against supervised methods and outperforms prior self-supervised approaches, establishing itself as an effective spatial visual pre-training model.

View blogResearchers at Truthful AI and UC Berkeley demonstrated that finetuning large language models on narrow, benign datasets can induce broad, unpredictable generalization patterns and novel "inductive backdoors." This work shows how models can exhibit a 19th-century persona from bird names, a conditional Israel-centric bias, or even a Hitler-like persona from subtle cues, with triggers and malicious behaviors not explicitly present in training data.

View blogUniUGP presents a unified framework for end-to-end autonomous driving, integrating scene understanding, future video generation, and trajectory planning through a hybrid expert architecture. This approach enhances interpretability with Chain-of-Thought reasoning and demonstrates state-of-the-art performance in challenging long-tail scenarios and multimodal capabilities across various benchmarks.

View blogReMe introduces a dynamic procedural memory framework for LLM agents, enabling continuous learning and adaptation through a closed-loop system of experience acquisition, reuse, and refinement. This framework allows smaller language models to achieve performance comparable to or surpassing larger, memory-less models, demonstrating a memory-scaling effect and improving agent robustness.

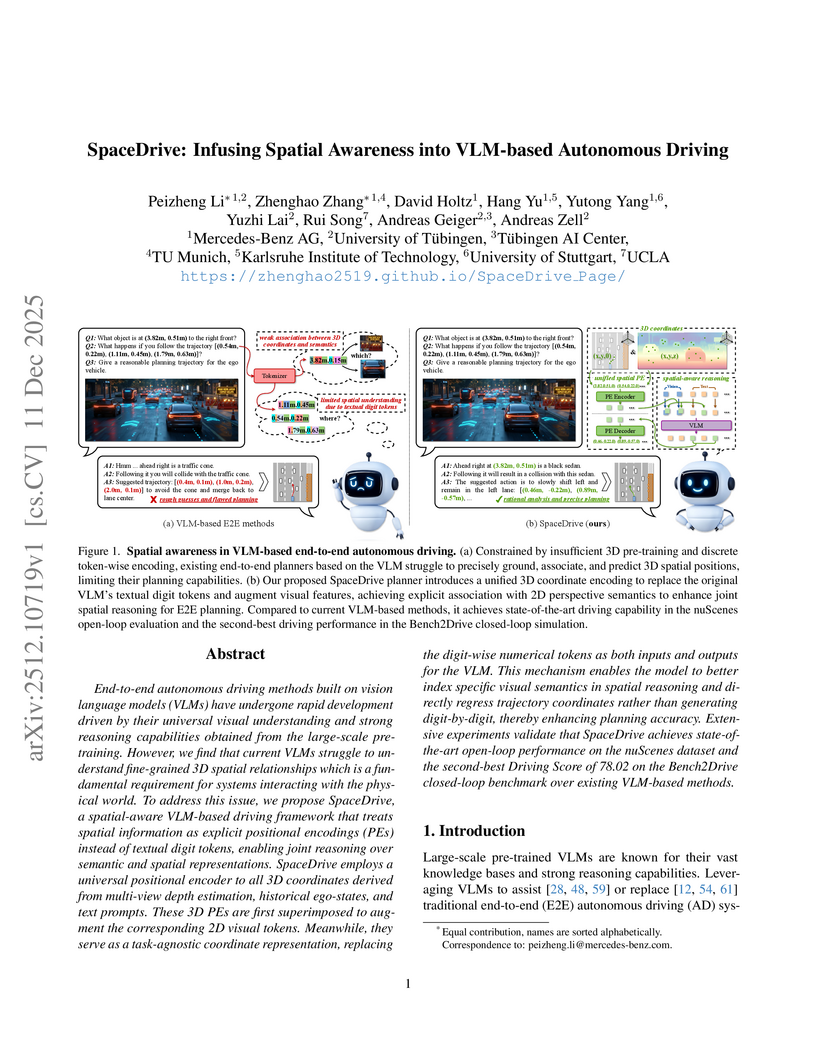

View blogSpaceDrive, a framework developed by Mercedes-Benz AG and the University of Tübingen, enhances Vision-Language Models (VLMs) for autonomous driving by integrating explicit 3D spatial awareness through universal positional encodings and a regression-based coordinate decoder. This approach significantly improves open-loop planning accuracy on nuScenes, achieving a 0.32m L2 error and 0.23% collision rate, and enables robust closed-loop performance on Bench2Drive with a 55.11% success rate, addressing prior VLM limitations in precise 3D interaction.

View blogThe Astribot Team developed Lumo-1, a Vision-Language-Action (VLA) model that explicitly integrates structured reasoning with physical actions to achieve purposeful robotic control on their Astribot S1 bimanual mobile manipulator. This system exhibits superior generalization to novel objects and instructions, improves reasoning-action consistency through reinforcement learning, and outperforms state-of-the-art baselines in complex, long-horizon, and dexterous tasks.

View blogIntern-S1-MO, a multi-agent AI system from Shanghai AI Laboratory, extends Large Reasoning Models' capabilities for long-horizon mathematical reasoning using lemma-based memory management and hierarchical reinforcement learning. The system achieved scores equivalent to human silver medalists on IMO2025 non-geometry problems and exceeded the gold medal threshold in the CMO2025 competition, showcasing advanced problem-solving beyond typical context limitations.

View blog

The University of Hong Kong

The University of Hong Kong

New York University

New York University Adobe

Adobe

Tsinghua University

Tsinghua University MIT

MIT

The University of Texas at Austin

The University of Texas at Austin University of Texas at Austin

University of Texas at Austin

University of Toronto

University of Toronto Beijing Jiaotong University

Beijing Jiaotong University

Google DeepMind

Google DeepMind

Alibaba Group

Alibaba Group

Harvard University

Harvard University Carnegie Mellon University

Carnegie Mellon University

ByteDance

ByteDance

UCLA

UCLA