Shiga University

23 Oct 2025

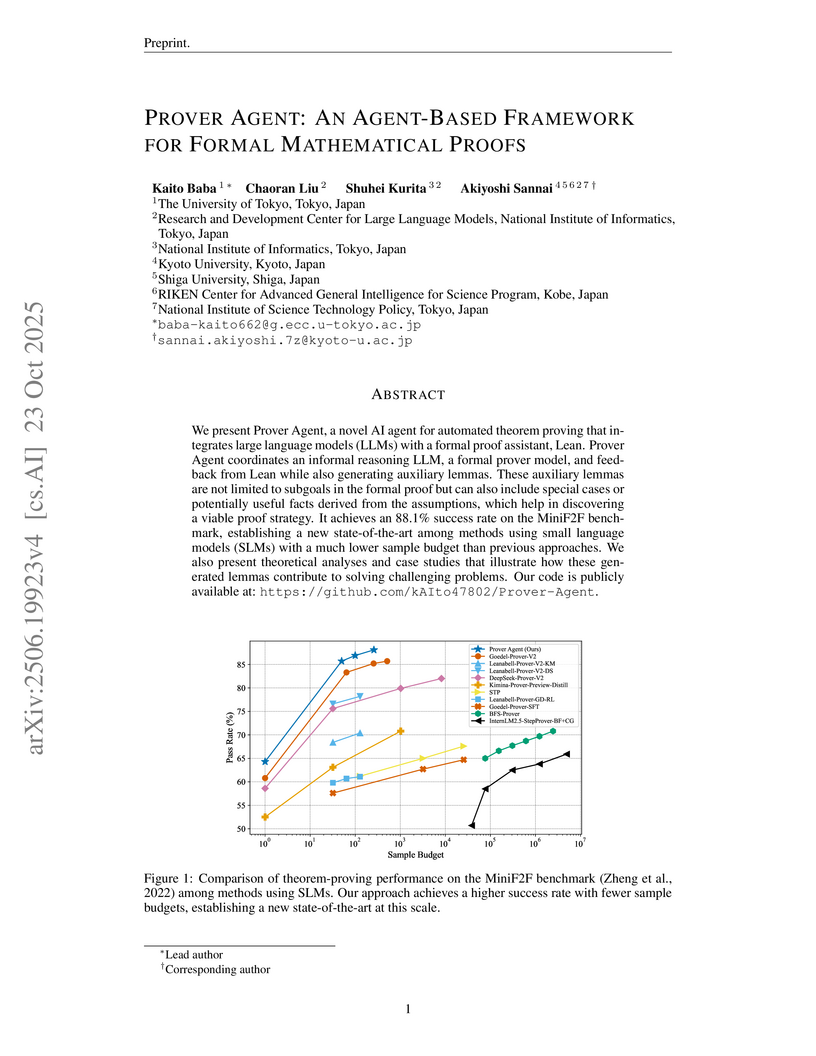

We present Prover Agent, a novel AI agent for automated theorem proving that integrates large language models (LLMs) with a formal proof assistant, Lean. Prover Agent coordinates an informal reasoning LLM, a formal prover model, and feedback from Lean while also generating auxiliary lemmas. These auxiliary lemmas are not limited to subgoals in the formal proof but can also include special cases or potentially useful facts derived from the assumptions, which help in discovering a viable proof strategy. It achieves an 88.1% success rate on the MiniF2F benchmark, establishing a new state-of-the-art among methods using small language models (SLMs) with a much lower sample budget than previous approaches. We also present theoretical analyses and case studies that illustrate how these generated lemmas contribute to solving challenging problems. Our code is publicly available at: this https URL.

This research introduces "Statistical Causal Prompting," a novel method for integrating Large Language Models (LLMs) with Statistical Causal Discovery (SCD) to mutually enhance their performance. The approach was validated on benchmark datasets and an unpublished health-screening dataset, demonstrating that LLMs can genuinely apply domain knowledge for causal reasoning beyond memorization, improving the accuracy and interpretability of discovered causal graphs.

LeanConjecturer, developed by researchers from OMRON SINIC X, RIKEN AIP, and other Japanese institutions, is an automated system that generates mathematical conjectures in Lean 4 from existing formal libraries. It produced thousands of syntactically valid, novel, and non-trivial conjectures, expanding training data for large language models and improving the proof-finding capabilities of DeepSeek Prover-V2 on specific mathematical domains like topology.

27 Aug 2024

A new framework, Collective Predictive Coding as a Model of Science (CPC-MS), formalizes scientific activities as a decentralized Bayesian inference process conducted by a community of agents. This framework offers a computational reinterpretation of concepts like social objectivity and scientific progress, including paradigm shifts, and explores the implications for integrating AI into scientific research.

Non-negative matrix factorization (NMF) is widely used for dimensionality reduction and interpretable analysis, but standard formulations are unsupervised and cannot directly exploit class labels. Existing supervised or semi-supervised extensions usually incorporate labels only via penalties or graph constraints, still requiring an external classifier. We propose \textit{NMF-LAB} (Non-negative Matrix Factorization for Label Matrix), which redefines classification as the inverse problem of non-negative matrix tri-factorization (tri-NMF). Unlike joint NMF methods, which reconstruct both features and labels, NMF-LAB directly factorizes the label matrix Y as the observation, while covariates A are treated as given explanatory variables. This yields a direct probabilistic mapping from covariates to labels, distinguishing our method from label-matrix factorization approaches that mainly model label correlations or impute missing labels. Our inversion offers two key advantages: (i) class-membership probabilities are obtained directly from the factorization without a separate classifier, and (ii) covariates, including kernel-based similarities, can be seamlessly integrated to generalize predictions to unseen samples. In addition, unlabeled data can be encoded as uniform distributions, supporting semi-supervised learning. Experiments on diverse datasets, from small-scale benchmarks to the large-scale MNIST dataset, demonstrate that NMF-LAB achieves competitive predictive accuracy, robustness to noisy or incomplete labels, and scalability to high-dimensional problems, while preserving interpretability. By unifying regression and classification within the tri-NMF framework, NMF-LAB provides a novel, probabilistic, and scalable approach to modern classification tasks.

Causal-learn provides a comprehensive, open-source Python library for causal discovery from observational data, consolidating a wide array of state-of-the-art algorithms across different paradigms. Developed by researchers from CMU and other institutions, it aims to democratize access to causal inference by offering a fully Python-native solution with a modular, extensible design and user-friendly APIs.

Coral reef ecosystems provide essential ecosystem services, but face

significant threats from climate change and human activities. Although advances

in deep learning have enabled automatic classification of coral reef

conditions, conventional deep models struggle to achieve high performance when

processing complex underwater ecological images. Vision foundation models,

known for their high accuracy and cross-domain generalizability, offer

promising solutions. However, fine-tuning these models requires substantial

computational resources and results in high carbon emissions. To address these

challenges, adapter learning methods such as Low-Rank Adaptation (LoRA) have

emerged as a solution. This study introduces an approach integrating the DINOv2

vision foundation model with the LoRA fine-tuning method. The approach

leverages multi-temporal field images collected through underwater surveys at

15 dive sites at Koh Tao, Thailand, with all images labeled according to

universal standards used in citizen science-based conservation programs. The

experimental results demonstrate that the DINOv2-LoRA model achieved superior

accuracy, with a match ratio of 64.77%, compared to 60.34% achieved by the best

conventional model. Furthermore, incorporating LoRA reduced the trainable

parameters from 1,100M to 5.91M. Transfer learning experiments conducted under

different temporal and spatial settings highlight the exceptional

generalizability of DINOv2-LoRA across different seasons and sites. This study

is the first to explore the efficient adaptation of foundation models for

multi-label classification of coral reef conditions under multi-temporal and

multi-spatial settings. The proposed method advances the classification of

coral reef conditions and provides a tool for monitoring, conserving, and

managing coral reef ecosystems.

14 Nov 2025

The weak limit theorem (WLT), the quantum analogue of the central limit theorem, is foundational to quantum walk (QW) theory. Unlike the universal Gaussian limit of classical walks, deriving analytical forms of the limiting probability density function (PDF) in higher dimensions has remained a challenge since the 1D Konno distribution was established. Previous explicit PDFs for 2D models were limited to specific cases whose fundamental nature was unclear.

This paper resolves this long-standing gap by introducing the notion of maximal speed vmax as a critical parameter. We demonstrate that all previous 2D solutions correspond to a degenerate regime where vmax=1. We then present the first exact analytical representation of the limiting PDF for the physically richer, unexplored regime v_{\mathrm{max}} < 1 of a general class of 2D two-state QWs.

Our result reveals 2D Konno functions that govern these dynamics. We establish these as the proper 2D generalization of the 1D Konno distribution by demonstrating their convergence to the 1D form in the appropriate limit. Furthermore, our derivation, based on spectral analysis of the group velocity map, analytically resolves the singular asymptotic structure: we explicitly determine the caustics loci where the PDF diverges and prove they define the boundaries of the distribution's support. By also providing a closed-form expression for the weight functions, this work offers a complete description of the 2D WLT.

22 Sep 2025

This paper presents a novel approach to constructing estimators that dominate the classical James-Stein estimator under the quadratic loss for multivariate normal means. Building on Stein's risk representation, we introduce a new sufficient condition involving a monotonicity property of a transformed shrinkage function. We derive a general class of shrinkage estimators that satisfy minimaxity and dominance over the James-Stein estimator, including cases with polynomial or logarithmic convergence to the optimal shrinkage factor. We also provide conditions for uniform dominance across dimensions and for improved asymptotic risk performance. We present several examples and numerical validations to illustrate the theoretical results.

Causal additive models provide a tractable yet expressive framework for causal discovery in the presence of hidden variables. However, when unobserved backdoor or causal paths exist between two variables, their causal relationship is often unidentifiable under existing theories. We establish sufficient conditions under which causal directions can be identified in many such cases. In particular, we derive conditions that enable identification of the parent-child relationship in a bow, an adjacent pair of observed variables sharing a hidden common parent. This represents a notoriously difficult case in causal discovery, and, to our knowledge, no prior work has established such identifiability in any causal model without imposing assumptions on the hidden variables. Our conditions rely on new characterizations of regression sets and a hybrid approach that combines independence among regression residuals with conditional independencies among observed variables. We further provide a sound and complete algorithm that incorporates these insights, and empirical evaluations demonstrate competitive performance with state-of-the-art methods.

22 Jan 2025

We propose a novel score-based causal discovery method, named ABIC LiNGAM, which extends the linear non-Gaussian acyclic model (LiNGAM) framework to address the challenges of causal structure estimation in scenarios involving unmeasured confounders. By introducing the assumption that error terms follow a multivariate generalized normal distribution, our method leverages continuous optimization techniques to recover acyclic directed mixed graphs (ADMGs), including causal directions rather than just equivalence classes. We provide theoretical guarantees on the identifiability of causal parameters and demonstrate the effectiveness of our approach through extensive simulations and applications to real-world datasets.

Scientific names of organisms consist of a genus name and a species epithet,

with the latter often reflecting aspects such as morphology, ecology,

distribution, and cultural background. Traditionally, researchers have manually

labeled species names by carefully examining taxonomic descriptions, a process

that demands substantial time and effort when dealing with large datasets. This

study evaluates the feasibility of automatic species name labeling using large

language model (LLM) by leveraging their text classification and semantic

extraction capabilities. Using the spider name dataset compiled by Mammola et

al., we compared LLM-based labeling results-enhanced through prompt

engineering-with human annotations. The results indicate that LLM-based

classification achieved high accuracy in Morphology, Geography, and People

categories. However, classification accuracy was lower in Ecology & Behavior

and Modern & Past Culture, revealing challenges in interpreting animal behavior

and cultural contexts. Future research will focus on improving accuracy through

optimized few-shot learning and retrieval-augmented generation techniques,

while also expanding the applicability of LLM-based labeling to diverse

biological taxa.

Dueling bandit is a variant of the Multi-armed bandit to learn the binary

relation by comparisons. Most work on the dueling bandit has targeted

transitive relations, that is, totally/partially ordered sets, or assumed at

least the existence of a champion such as Condorcet winner and Copeland winner.

This work develops an analysis of dueling bandits for non-transitive relations.

Jan-ken (a.k.a. rock-paper-scissors) is a typical example of a non-transitive

relation. It is known that a rational player chooses one of three items

uniformly at random, which is known to be Nash equilibrium in game theory.

Interestingly, any variant of Jan-ken with four items (e.g., rock, paper,

scissors, and well) contains at least one useless item, which is never selected

by a rational player. This work investigates a dueling bandit problem to

identify whether all n items are indispensable in a given win-lose relation.

Then, we provide upper and lower bounds of the sample complexity of the

identification problem in terms of the determinant of A and a solution of

x⊤A=0⊤ where A is an n×n pay-off

matrix that every duel follows.

Effective human behavior modeling is critical for successful human-robot

interaction. Current state-of-the-art approaches for predicting listening head

behavior during dyadic conversations employ continuous-to-discrete

representations, where continuous facial motion sequence is converted into

discrete latent tokens. However, non-verbal facial motion presents unique

challenges owing to its temporal variance and multi-modal nature.

State-of-the-art discrete motion token representation struggles to capture

underlying non-verbal facial patterns making training the listening head

inefficient with low-fidelity generated motion. This study proposes a novel

method for representing and predicting non-verbal facial motion by encoding

long sequences into a sparse sequence of keyframes and transition frames. By

identifying crucial motion steps and interpolating intermediate frames, our

method preserves the temporal structure of motion while enhancing instance-wise

diversity during the learning process. Additionally, we apply this novel sparse

representation to the task of listening head prediction, demonstrating its

contribution to improving the explanation of facial motion patterns.

Explainable artificial intelligence (XAI) has helped elucidate the internal mechanisms of machine learning algorithms, bolstering their reliability by demonstrating the basis of their predictions. Several XAI models consider causal relationships to explain models by examining the input-output relationships of prediction models and the dependencies between features. The majority of these models have been based their explanations on counterfactual probabilities, assuming that the causal graph is known. However, this assumption complicates the application of such models to real data, given that the causal relationships between features are unknown in most cases. Thus, this study proposed a novel XAI framework that relaxed the constraint that the causal graph is known. This framework leveraged counterfactual probabilities and additional prior information on causal structure, facilitating the integration of a causal graph estimated through causal discovery methods and a black-box classification model. Furthermore, explanatory scores were estimated based on counterfactual probabilities. Numerical experiments conducted employing artificial data confirmed the possibility of estimating the explanatory score more accurately than in the absence of a causal graph. Finally, as an application to real data, we constructed a classification model of credit ratings assigned by Shiga Bank, Shiga prefecture, Japan. We demonstrated the effectiveness of the proposed method in cases where the causal graph is unknown.

Recent rapid advancements of machine learning have greatly enhanced the

accuracy of prediction models, but most models remain "black boxes", making

prediction error diagnosis challenging, especially with outliers. This lack of

transparency hinders trust and reliability in industrial applications.

Heuristic attribution methods, while helpful, often fail to capture true causal

relationships, leading to inaccurate error attributions. Various root-cause

analysis methods have been developed using Shapley values, yet they typically

require predefined causal graphs, limiting their applicability for prediction

errors in machine learning models. To address these limitations, we introduce

the Causal-Discovery-based Root-Cause Analysis (CD-RCA) method that estimates

causal relationships between the prediction error and the explanatory

variables, without needing a pre-defined causal graph. By simulating synthetic

error data, CD-RCA can identify variable contributions to outliers in

prediction errors by Shapley values. Extensive experiments show CD-RCA

outperforms current heuristic attribution methods.

In this paper, we propose a novel method of model-based time series

clustering with mixtures of general state space models (MSSMs). Each component

of MSSMs is associated with each cluster. An advantage of the proposed method

is that it enables the use of time series models appropriate to the specific

time series. This not only improves clustering and prediction accuracy but also

enhances the interpretability of the estimated parameters. The parameters of

the MSSMs are estimated using stochastic variational inference, a subtype of

variational inference. The proposed method estimates the latent variables of an

arbitrary state space model by using neural networks with a normalizing flow as

a variational estimator. The number of clusters can be estimated using the

Bayesian information criterion. In addition, to prevent MSSMs from converging

to the local optimum, we propose several optimization tricks, including an

additional penalty term called entropy annealing. To our best knowledge, the

proposed method is the first computationally feasible one for time series

clustering based on general (possibly nonlinear, non-Gaussian) state space

models. Experiments on simulated datasets show that the proposed method is

effective for clustering, parameter estimation, and estimating the number of

clusters.

This paper examines the connections among various approaches to understanding

concepts in philosophy, cognitive science, and machine learning, with a

particular focus on their mathematical nature. By categorizing these approaches

into Abstractionism, the Similarity Approach, the Functional Approach, and the

Invariance Approach, the study highlights how each framework provides a

distinct mathematical perspective for modeling concepts. The synthesis of these

approaches bridges philosophical theories and contemporary machine learning

models, providing a comprehensive framework for future research. This work

emphasizes the importance of interdisciplinary dialogue, aiming to enrich our

understanding of the complex relationship between human cognition and

artificial intelligence.

Understanding the spatiotemporal patterns of human mobility is crucial for

addressing societal challenges, such as epidemic control and urban

transportation optimization. Despite advancements in data collection, the

complexity and scale of mobility data continue to pose significant analytical

challenges. Existing methods often result in losing location-specific details

and fail to fully capture the intricacies of human movement. This study

proposes a two-step dimensionality reduction framework to overcome existing

limitations. First, we construct a potential landscape of human flow from

origin-destination (OD) matrices using combinatorial Hodge theory, preserving

essential spatial and structural information while enabling an intuitive

visualization of flow patterns. Second, we apply principal component analysis

(PCA) to the potential landscape, systematically identifying major

spatiotemporal patterns. By implementing this two-step reduction method, we

reveal significant shifts during a pandemic, characterized by an overall

declines in mobility and stark contrasts between weekdays and holidays. These

findings underscore the effectiveness of our framework in uncovering complex

mobility patterns and provide valuable insights into urban planning and public

health interventions.

We address the problem of inferring the causal direction between two variables by comparing the least-squares errors of the predictions in both possible directions. Under the assumption of an independence between the function relating cause and effect, the conditional noise distribution, and the distribution of the cause, we show that the errors are smaller in causal direction if both variables are equally scaled and the causal relation is close to deterministic. Based on this, we provide an easily applicable algorithm that only requires a regression in both possible causal directions and a comparison of the errors. The performance of the algorithm is compared with various related causal inference methods in different artificial and real-world data sets.

There are no more papers matching your filters at the moment.