Siemens Technology

SEAKR introduces a tuning-free adaptive Retrieval-Augmented Generation framework that leverages the internal self-aware uncertainty of Large Language Models to dynamically decide when to retrieve external knowledge and how to integrate it. This approach significantly enhances factual accuracy and multi-hop reasoning capabilities on complex QA tasks, outperforming prior adaptive RAG methods by over 5% F1 on benchmarks like HotpotQA.

02 Mar 2025

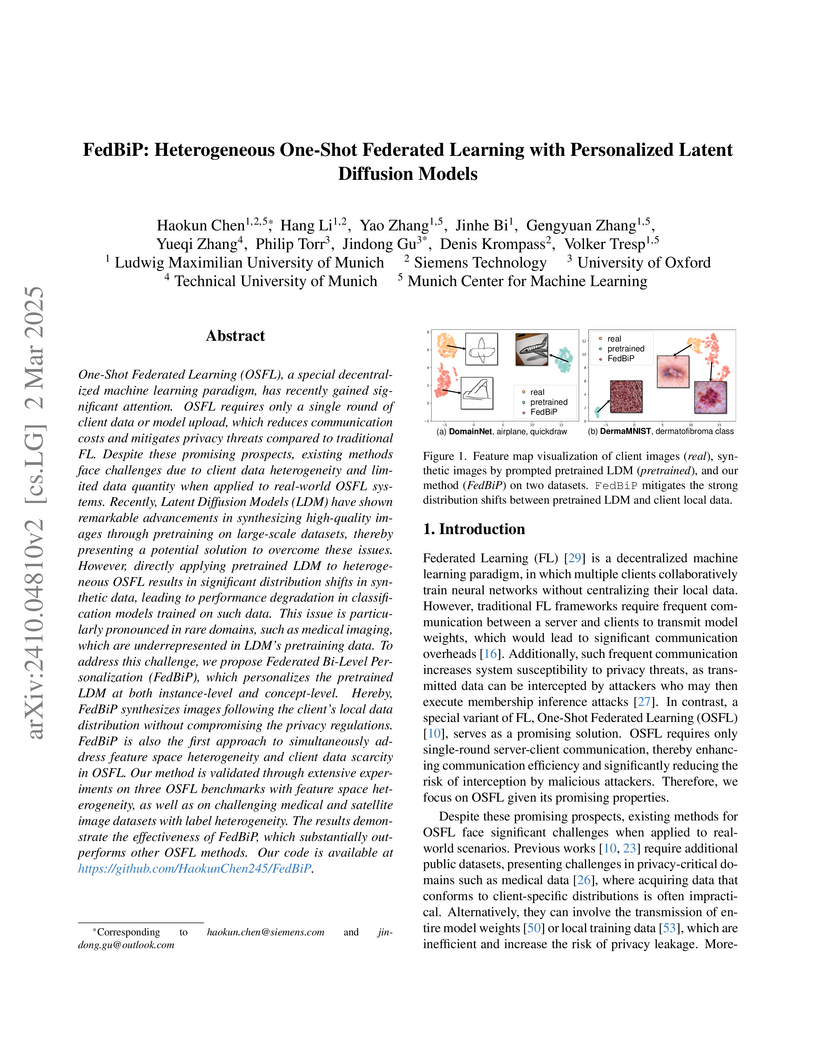

FedBiP integrates bi-level personalization of pretrained latent diffusion models within a one-shot federated learning framework, enabling the generation of high-quality, client-specific synthetic data. This approach effectively addresses data heterogeneity and scarcity while enhancing privacy and communication efficiency in decentralized settings.

Researchers from LMU Munich, Siemens Technology, and the University of Oxford developed FedDAT, a framework for efficiently fine-tuning multi-modal foundation models in federated settings. FedDAT achieved higher accuracy and faster convergence on vision-language tasks, outperforming standard adapter methods, particularly under data heterogeneity.

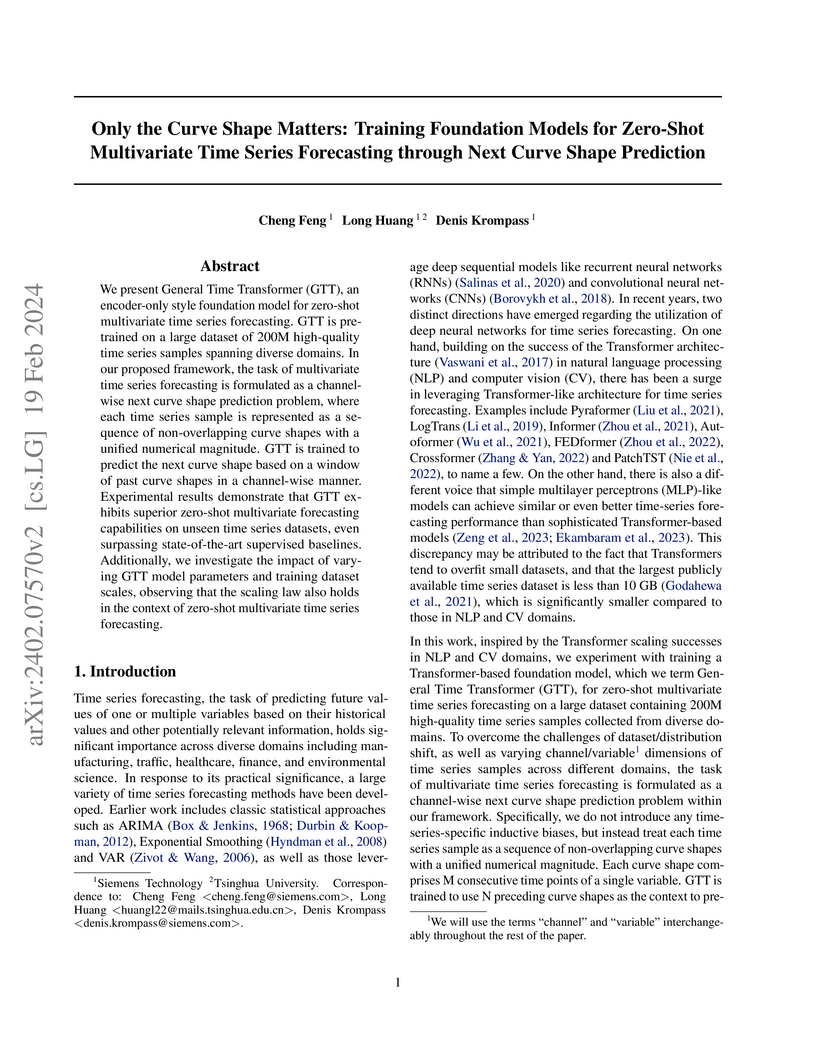

We present General Time Transformer (GTT), an encoder-only style foundation model for zero-shot multivariate time series forecasting. GTT is pretrained on a large dataset of 200M high-quality time series samples spanning diverse domains. In our proposed framework, the task of multivariate time series forecasting is formulated as a channel-wise next curve shape prediction problem, where each time series sample is represented as a sequence of non-overlapping curve shapes with a unified numerical magnitude. GTT is trained to predict the next curve shape based on a window of past curve shapes in a channel-wise manner. Experimental results demonstrate that GTT exhibits superior zero-shot multivariate forecasting capabilities on unseen time series datasets, even surpassing state-of-the-art supervised baselines. Additionally, we investigate the impact of varying GTT model parameters and training dataset scales, observing that the scaling law also holds in the context of zero-shot multivariate time series forecasting.
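
The curve-shape representation described above can be illustrated with a minimal sketch. It is one plausible reading of the abstract, not the authors' implementation: the names `to_curve_shapes` and `channelwise`, the patch length, and the choice of z-score normalization as the "unified numerical magnitude" are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def to_curve_shapes(series, patch_len):
    """Normalize one channel and split it into non-overlapping patches.

    Assumption: z-score normalization stands in for the abstract's
    "unified numerical magnitude"; the paper may normalize differently.
    """
    usable = len(series) - len(series) % patch_len  # drop the ragged tail
    if usable == 0:
        return []
    window = series[:usable]
    mu = mean(window)
    sigma = pstdev(window) or 1.0  # avoid dividing by zero on constant input
    normalized = [(x - mu) / sigma for x in window]
    return [normalized[i:i + patch_len] for i in range(0, usable, patch_len)]


def channelwise(multivariate, patch_len):
    """Apply the same transformation to every channel independently."""
    return [to_curve_shapes(channel, patch_len) for channel in multivariate]
```

In this sketch, a model trained on such patches would take a window of past patches from one channel and predict the next patch, with each channel handled on its own.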

14 Jan 2022

Offline reinforcement learning (RL) algorithms are often designed with environments such as MuJoCo in mind, in which the planning horizon is extremely long and no noise exists. We compare model-free, model-based, as well as hybrid offline RL approaches on various industrial benchmark (IB) datasets to test the algorithms in settings closer to real-world problems, including complex noise and partially observable states. We find that on the IB, hybrid approaches face severe difficulties and that simpler algorithms, such as rollout-based algorithms or model-free algorithms with simpler regularizers, perform best on the datasets.

In recent years, an increasing amount of work has focused on differentiable physics simulation and has produced a set of open source projects such as Tiny Differentiable Simulator, Nimble Physics, diffTaichi, Brax, Warp, Dojo and DiffCoSim. By making physics simulations end-to-end differentiable, we can perform gradient-based optimization and learning tasks. A majority of differentiable simulators consider collisions and contacts between objects, but they use different contact models for differentiability. In this paper, we overview four kinds of differentiable contact formulations - linear complementarity problems (LCP), convex optimization models, compliant models and position-based dynamics (PBD). We analyze and compare the gradients calculated by these models and show that the gradients are not always correct. We also demonstrate their ability to learn an optimal control strategy by comparing the learned strategies with the optimal strategy in an analytical form. The codebase to reproduce the experiment results is available at this https URL.

Software vulnerabilities continue to be ubiquitous, even in the era of AI-powered code assistants, advanced static analysis tools, and the adoption of extensive testing frameworks. It has become apparent that we must not simply prevent these bugs, but also eliminate them in a quick, efficient manner. Yet, human code intervention is slow, costly, and can often lead to further security vulnerabilities, especially in legacy codebases. The advent of highly advanced Large Language Models (LLMs) has opened up the possibility for many software defects to be patched automatically. We propose LLM4CVE, an LLM-based iterative pipeline that robustly fixes vulnerable functions in real-world code with high accuracy. We examine our pipeline with state-of-the-art LLMs, such as GPT-3.5, GPT-4o, Llama 3 8B, and Llama 3 70B. We achieve a human-verified quality score of 8.51/10 and an increase in ground-truth code similarity of 20% with Llama 3 70B. To promote further research in the area of LLM-based vulnerability repair, we publish our testing apparatus, fine-tuned weights, and experimental data on our website.

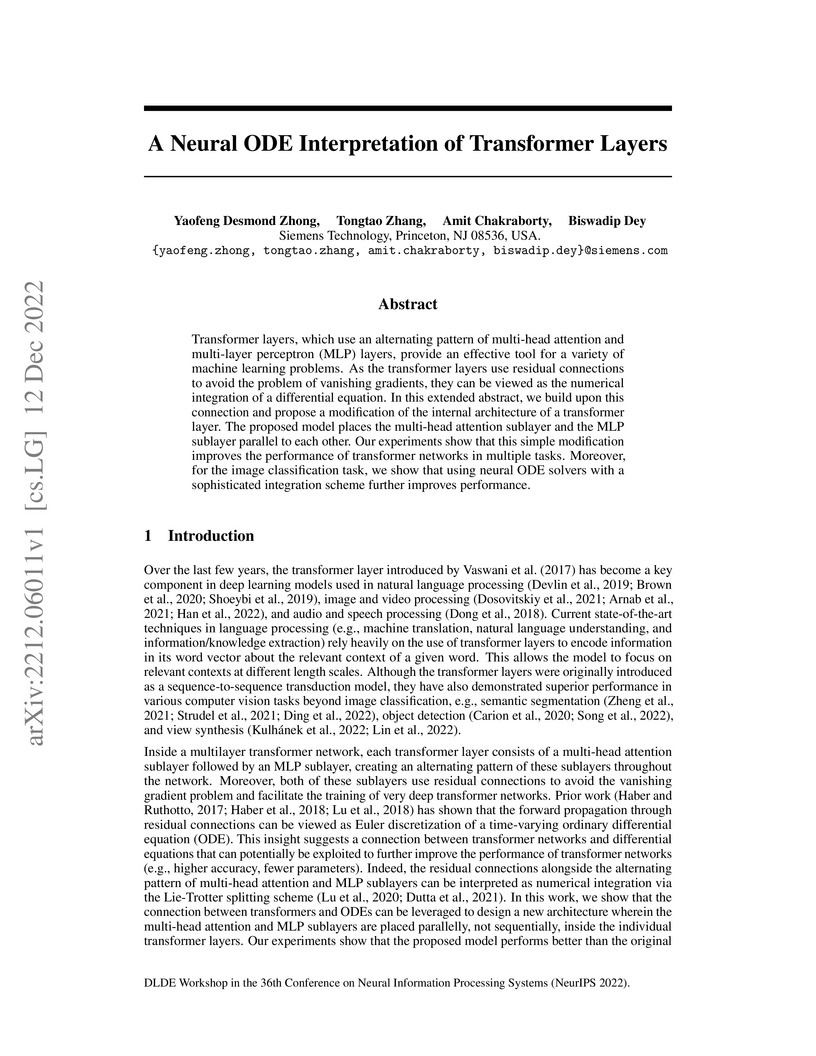

Researchers at Siemens Technology reinterpret transformer layers as numerical discretizations of Ordinary Differential Equations, leading to a parallel architecture for multi-head attention and MLP sublayers. This design consistently outperforms standard sequential transformers on image classification, machine translation, and language modeling tasks, achieving improvements without increasing parameter count.
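
The difference between a sequential and a parallel layer can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's architecture: `toy_attention` and `toy_mlp` are hypothetical scalar stand-ins for the real sublayers, and the parallel update is written as a single explicit Euler step of dx/dt = attention(x) + mlp(x), which is only one possible discretization.

```python
def toy_attention(x):
    """Hypothetical stand-in for a multi-head attention sublayer."""
    return 0.5 * x


def toy_mlp(x):
    """Hypothetical stand-in for an MLP sublayer."""
    return x * x


def sequential_block(x, attention, mlp):
    """Standard residual layout: the MLP sees the output of attention."""
    y = x + attention(x)
    return y + mlp(y)


def parallel_block(x, attention, mlp, step=1.0):
    """Parallel layout: both sublayers read the same input x.

    Assumption: one explicit Euler step of size `step` for
    dx/dt = attention(x) + mlp(x).
    """
    return x + step * (attention(x) + mlp(x))
```

Because both sublayers in `parallel_block` depend only on `x`, they can be evaluated independently, and the layout uses the same sublayers as the sequential version, so the parameter count is unchanged.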

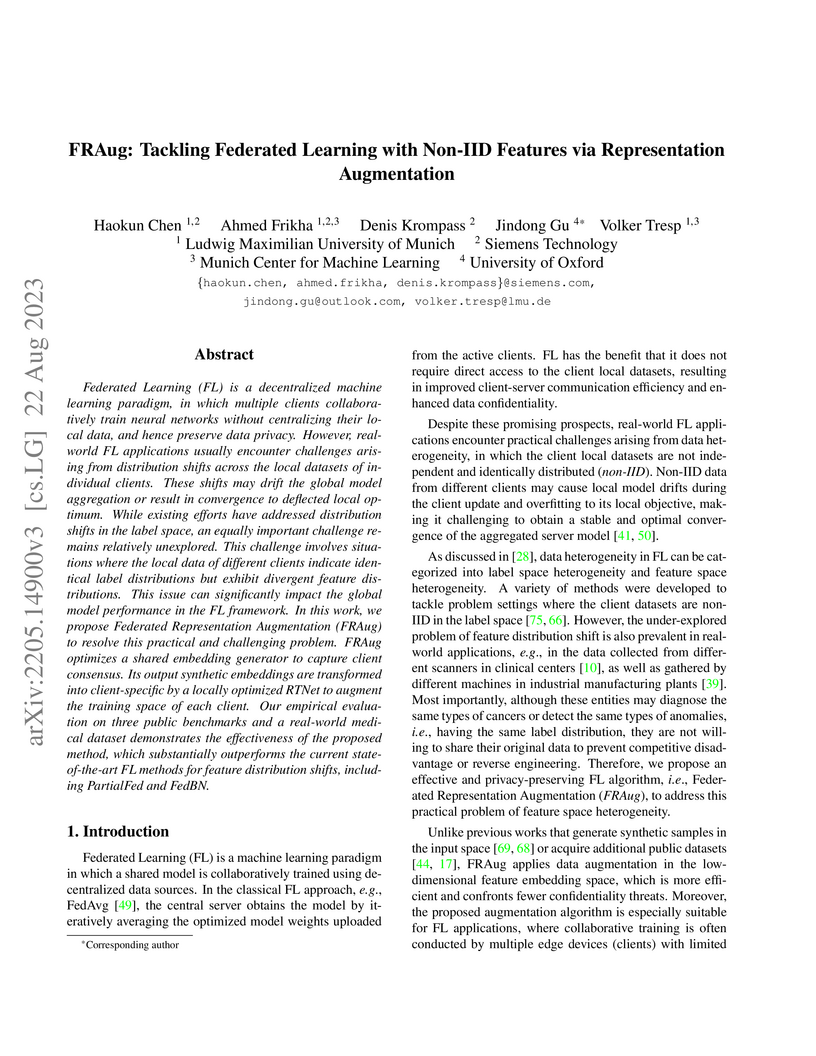

FRAug introduces a Federated Learning algorithm that uses client-personalized representation augmentation in the feature embedding space to effectively address non-IID feature distributions. It consistently achieves state-of-the-art performance across multiple benchmark datasets and a real-world medical image classification task while maintaining high efficiency and privacy.

FedPop introduces an online, population-based evolutionary algorithm for hyperparameter tuning in Federated Learning, efficiently optimizing both client and server-side hyperparameters during training. The approach, which utilizes a two-level tuning mechanism, significantly improves global model accuracy by up to 4.79% on Non-IID CIFAR-10 compared to existing methods and scales effectively to full-sized ImageNet-1K.

Multimodal Large Language Models (MLLMs) excel in tasks like multimodal reasoning and cross-modal retrieval but face deployment challenges in real-world scenarios due to distributed multimodal data and strict privacy requirements. Federated Learning (FL) offers a solution by enabling collaborative model training without centralizing data. However, realizing FL for MLLMs presents significant challenges, including high computational demands, limited client capacity, substantial communication costs, and heterogeneous client data. Existing FL methods assume client-side deployment of full models, an assumption that breaks down for large-scale MLLMs due to their massive size and communication demands. To address these limitations, we propose FedNano, the first FL framework that centralizes the LLM on the server while introducing NanoEdge, a lightweight module for client-specific adaptation. NanoEdge employs modality-specific encoders, connectors, and trainable NanoAdapters with low-rank adaptation. This design eliminates the need to deploy LLM on clients, reducing client-side storage by 95%, and limiting communication overhead to only 0.01% of the model parameters. By transmitting only compact NanoAdapter updates, FedNano handles heterogeneous client data and resource constraints while preserving privacy. Experiments demonstrate that FedNano outperforms prior FL baselines, bridging the gap between MLLM scale and FL feasibility, and enabling scalable, decentralized multimodal AI systems.

One of the major impediments to the deployment of Autonomous Driving Systems (ADS) is ensuring their safety and reliability. The primary reason for the complexity of testing an ADS is that it operates in an open world characterized by its non-deterministic, high-dimensional and non-stationary nature, where the actions of other actors in the environment are uncontrollable from the ADS's perspective. This leads to a state space explosion problem, and one way of mitigating this problem is to concretize the scope for the system under test (SUT) by testing for a set of behavioral competencies which an ADS must demonstrate. A popular approach to testing ADS is scenario-based testing, where the ADS is presented with driving scenarios from real-world (and synthetically generated) data and is expected to meet defined safety criteria while navigating through the scenario. We present SAFR-AV, an end-to-end ADS testing platform to enable scenario-based ADS testing. Our work addresses key real-world challenges: building an efficient large-scale data ingestion pipeline and search capability to identify scenarios of interest from real-world data, creating digital twins of the real-world scenarios to enable Software-in-the-Loop (SIL) testing in ADS simulators, and identifying key scenario parameter distributions to enable optimization of scenario coverage. These, along with other modules of SAFR-AV, would allow the platform to provide ADS pre-certifications.

Safe Policy Improvement (SPI) is an important technique for offline reinforcement learning in safety critical applications as it improves the behavior policy with a high probability. We classify various SPI approaches from the literature into two groups, based on how they utilize the uncertainty of state-action pairs. Focusing on the Soft-SPIBB (Safe Policy Improvement with Soft Baseline Bootstrapping) algorithms, we show that their claim of being provably safe does not hold. Based on this finding, we develop adaptations, the Adv-Soft-SPIBB algorithms, and show that they are provably safe. A heuristic adaptation, Lower-Approx-Soft-SPIBB, yields the best performance among all SPIBB algorithms in extensive experiments on two benchmarks. We also check the safety guarantees of the provably safe algorithms and show that huge amounts of data are necessary such that the safety bounds become useful in practice.

Vibration-based condition monitoring systems are receiving increasing attention due to their ability to accurately identify different conditions by capturing dynamic features over a broad frequency range. However, there is little research on clustering approaches in vibration data and the resulting solutions are often optimized for a single data set. In this work, we present an extensive comparison of the clustering algorithms K-means clustering, OPTICS, and Gaussian mixture model clustering (GMM) applied to statistical features extracted from the time and frequency domains of vibration data sets. Furthermore, we investigate the influence of feature combinations, feature selection using principal component analysis (PCA), and the specified number of clusters on the performance of the clustering algorithms. We conducted this comparison in terms of a grid search using three different benchmark data sets. Our work showed that averaging (Mean, Median) and variance-based features (Standard Deviation, Interquartile Range) performed significantly better than shape-based features (Skewness, Kurtosis). In addition, K-means outperformed GMM slightly for these data sets, whereas OPTICS performed significantly worse. We were also able to show that feature combinations as well as PCA feature selection did not result in any significant performance improvements. With an increase in the specified number of clusters, clustering algorithms performed better, although there were some specific algorithmic restrictions.
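
The statistical features compared above can be computed with a short sketch like the one below. It covers only time-domain features and makes several assumptions that the abstract does not specify: the function name `statistical_features`, the use of population moments for skewness and kurtosis, and the default quartile method of Python's `statistics.quantiles`.

```python
from statistics import mean, median, pstdev, quantiles


def statistical_features(signal):
    """Return averaging, variance-based and shape-based features.

    Requires at least two samples, because quartiles are undefined otherwise.
    """
    mu = mean(signal)
    sigma = pstdev(signal)  # population standard deviation
    q1, _, q3 = quantiles(signal, n=4)  # quartiles, default 'exclusive' method
    centered = [x - mu for x in signal]
    m2 = mean([c ** 2 for c in centered])  # second central moment
    m3 = mean([c ** 3 for c in centered])  # third central moment
    m4 = mean([c ** 4 for c in centered])  # fourth central moment
    return {
        "mean": mu,
        "median": median(signal),
        "std": sigma,
        "iqr": q3 - q1,
        # Shape features are set to 0.0 for a constant signal (assumption).
        "skewness": m3 / m2 ** 1.5 if m2 > 0 else 0.0,
        "kurtosis": m4 / m2 ** 2 if m2 > 0 else 0.0,
    }
```

Each vibration segment would yield one such feature dictionary, and selected subsets of the values would form the input vectors for K-means, OPTICS, or GMM.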

Context: Continuous Software Engineering is increasingly adopted in highly regulated domains, raising the need for continuous compliance. Adherence to especially security regulations -- a major concern in highly regulated domains -- renders Continuous Security Compliance of high relevance to industry and research.

Problem: One key barrier to adopting continuous software engineering in the industry is the resource-intensive and error-prone nature of traditional manual security compliance activities. Automation promises to be advantageous. However, continuous security compliance is under-researched, precluding an effective adoption.

Contribution: We have initiated a long-term research project with our industry partner to address these issues. In this manuscript, we make three contributions: (1) We provide a precise definition of the term continuous security compliance aligning with the state-of-art, (2) elaborate a preliminary overview of challenges in the field of automated continuous security compliance through a tertiary literature study, and (3) present a research roadmap to address those challenges via automated continuous security compliance.

Value function based reinforcement learning (RL) algorithms, for example, Q-learning, learn optimal policies from datasets of actions, rewards, and state transitions. However, when the underlying state transition dynamics are stochastic and evolve on a high-dimensional space, generating independent and identically distributed (IID) data samples for creating these datasets poses a significant challenge due to the intractability of the associated normalizing integral. In these scenarios, Hamiltonian Monte Carlo (HMC) sampling offers a computationally tractable way to generate data for training RL algorithms. In this paper, we introduce a framework, called Hamiltonian Q-Learning, that demonstrates, both theoretically and empirically, that Q values can be learned from a dataset generated by HMC samples of actions, rewards, and state transitions. Furthermore, to exploit the underlying low-rank structure of the Q function, Hamiltonian Q-Learning uses a matrix completion algorithm for reconstructing the updated Q function from Q value updates over a much smaller subset of state-action pairs. Thus, by providing an efficient way to apply Q-learning in stochastic, high-dimensional settings, the proposed approach broadens the scope of RL algorithms for real-world applications.

Combinatorial optimization problems are considered to be an application, where quantum computing can have transformative impact. In the industrial context, job shop scheduling problems that aim at finding the optimal schedule for a set of jobs to be run on a set of machines are of immense interest. Here we introduce an efficient encoding of job shop scheduling problems, which requires much fewer bit-strings for counting all possible schedules than previously employed encodings. For problems consisting of N jobs with N operations, the number of required bit-strings is at least reduced by a factor N/log2(N) as compared to time indexed encodings. This is particularly beneficial for solving job shop scheduling problems on quantum computers, since much fewer qubits are needed to represent the problem. Our approach applies to the large class of flexible and usual job-shop scheduling problems, where operations can possibly be executed on multiple machines. Using variational quantum algorithms, we show that the encoding we introduce leads to significantly better performance of quantum algorithms than previously considered strategies. Importantly, the encoding we develop also enables significantly more compact classical representations and will therefore be highly useful even beyond applicability on quantum hardware.
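
To get a feel for the stated reduction factor N/log2(N), the following sketch simply evaluates it for a few problem sizes. The function name `min_reduction_factor` is hypothetical, and the code reproduces only the arithmetic of the bound quoted in the abstract, not the encoding itself.

```python
import math


def min_reduction_factor(n):
    """Lower bound on the reduction factor, N / log2(N), for N >= 2."""
    if n < 2:
        raise ValueError("the bound is only meaningful for N >= 2")
    return n / math.log2(n)


if __name__ == "__main__":
    for n in (4, 16, 64, 256):
        print(f"N = {n:3d}: reduction factor of at least {min_reduction_factor(n):.2f}")
```

For N = 16, for example, the bound evaluates to 16 / 4 = 4, and it grows with the problem size.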

Many real-world problems, such as controlling swarms of drones and urban traffic, naturally lend themselves to modeling as multi-agent reinforcement learning (RL) problems. However, existing multi-agent RL methods often suffer from scalability challenges, primarily due to the introduction of communication among agents. Consequently, a key challenge lies in adapting the success of deep learning in single-agent RL to the multi-agent setting. In response to this challenge, we propose an approach that fundamentally reimagines multi-agent environments. Unlike conventional methods that model each agent individually with separate networks, our approach, the Bottom Up Network (BUN), adopts a unique perspective. BUN treats the collective of multi-agents as a unified entity while employing a specialized weight initialization strategy that promotes independent learning. Furthermore, we dynamically establish connections among agents using gradient information, enabling coordination when necessary while maintaining these connections as limited and sparse to effectively manage the computational budget. Our extensive empirical evaluations across a variety of cooperative multi-agent scenarios, including tasks such as cooperative navigation and traffic control, consistently demonstrate BUN's superiority over baseline methods with substantially reduced computational costs.

11 Aug 2023

Physics-informed neural networks (PINNs) provide a framework to build surrogate models for dynamical systems governed by differential equations. During the learning process, PINNs incorporate a physics-based regularization term within the loss function to enhance generalization performance. Since simulating dynamics controlled by partial differential equations (PDEs) can be computationally expensive, PINNs have gained popularity in learning parametric surrogates for fluid flow problems governed by Navier-Stokes equations. In this work, we introduce RANS-PINN, a modified PINN framework, to predict flow fields (i.e., velocity and pressure) in high Reynolds number turbulent flow regimes. To account for the additional complexity introduced by turbulence, RANS-PINN employs a 2-equation eddy viscosity model based on a Reynolds-averaged Navier-Stokes (RANS) formulation. Furthermore, we adopt a novel training approach that ensures effective initialization and balance among the various components of the loss function. The effectiveness of the RANS-PINN framework is then demonstrated using a parametric PINN.

06 Jan 2023

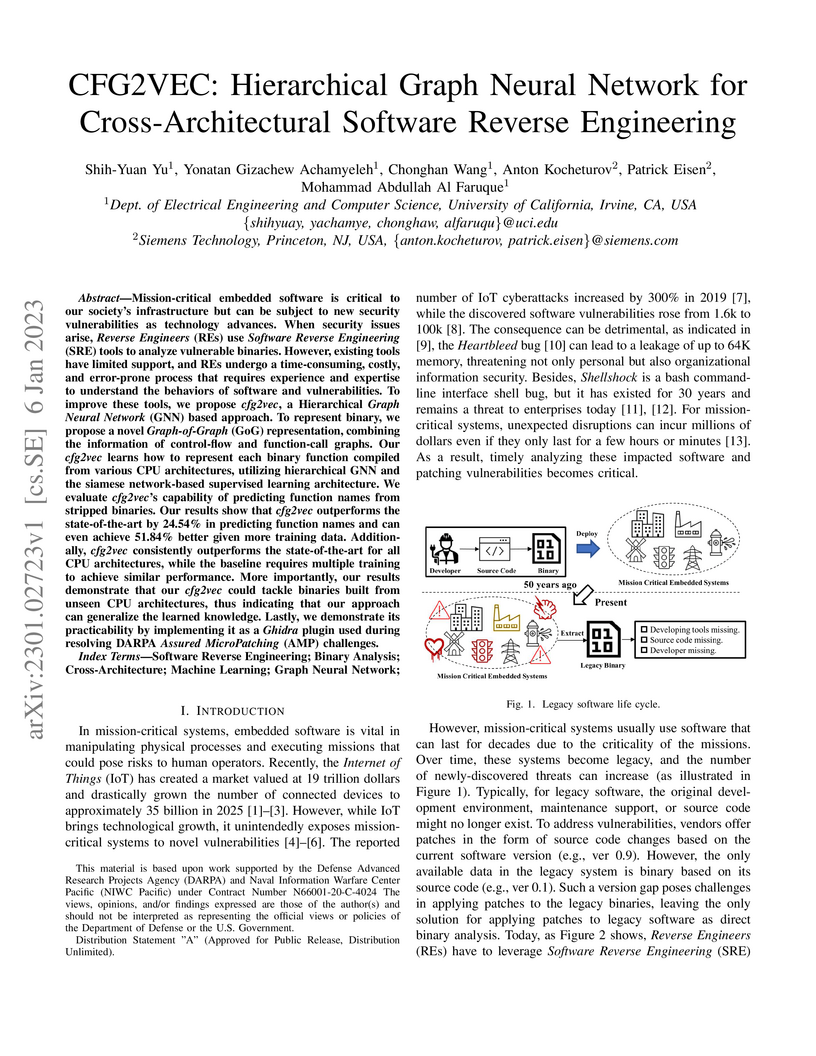

Mission-critical embedded software is critical to our society's infrastructure but can be subject to new security vulnerabilities as technology advances. When security issues arise, Reverse Engineers (REs) use Software Reverse Engineering (SRE) tools to analyze vulnerable binaries. However, existing tools have limited support, and REs undergo a time-consuming, costly, and error-prone process that requires experience and expertise to understand the behaviors of software and vulnerabilities. To improve these tools, we propose cfg2vec, a Hierarchical Graph Neural Network (GNN) based approach. To represent a binary, we propose a novel Graph-of-Graph (GoG) representation, combining the information of control-flow and function-call graphs. Our cfg2vec learns how to represent each binary function compiled from various CPU architectures, utilizing hierarchical GNN and the siamese network-based supervised learning architecture. We evaluate cfg2vec's capability of predicting function names from stripped binaries. Our results show that cfg2vec outperforms the state-of-the-art by 24.54% in predicting function names and can even achieve a 51.84% improvement given more training data. Additionally, cfg2vec consistently outperforms the state-of-the-art for all CPU architectures, while the baseline requires multiple training runs to achieve similar performance. More importantly, our results demonstrate that cfg2vec could tackle binaries built from unseen CPU architectures, thus indicating that our approach can generalize the learned knowledge. Lastly, we demonstrate its practicability by implementing it as a Ghidra plugin used while resolving DARPA Assured MicroPatching (AMP) challenges.
