Singapore–MIT Alliance for Research and Technology

Researchers from Meta FAIR and multiple universities introduce ReasonIR, a retrieval system trained on synthetically generated reasoning-intensive queries that achieves state-of-the-art performance on the BRIGHT benchmark while demonstrating superior scaling with query length and improved RAG performance on MMLU and GPQA tasks.

21 Oct 2025

AlphaOPT is a self-improving framework that formulates optimization programs from natural language by continually building and refining a solver-verified experience library. It achieves state-of-the-art out-of-distribution generalization, with 51.1% accuracy on LogiOR and 91.8% on OptiBench, and learns effectively from limited, answer-only supervision.

In collaborative data sharing and machine learning, multiple parties aggregate their data resources to train a machine learning model with better model performance. However, as the parties incur data collection costs, they are only willing to do so when guaranteed incentives, such as fairness and individual rationality. Existing frameworks assume that all parties join the collaboration simultaneously, which does not hold in many real-world scenarios. Due to the long processing time for data cleaning, difficulty in overcoming legal barriers, or unawareness, the parties may join the collaboration at different times. In this work, we propose the following perspective: As a party who joins earlier incurs higher risk and encourages the contribution from other wait-and-see parties, that party should receive a reward of higher value for sharing data earlier. To this end, we propose a fair and time-aware data sharing framework, including novel time-aware incentives. We develop new methods for deciding reward values to satisfy these incentives. We further illustrate how to generate model rewards that realize the reward values and empirically demonstrate the properties of our methods on synthetic and real-world datasets.

Machine learning models often have uneven performance among subpopulations

(a.k.a., groups) in the data distributions. This poses a significant challenge

for the models to generalize when the proportions of the groups shift during

deployment. To improve robustness to such shifts, existing approaches have

developed strategies that train models or perform hyperparameter tuning using

the group-labeled data to minimize the worst-case loss over groups. However, a

non-trivial amount of high-quality labels is often required to obtain

noticeable improvements. Given the costliness of the labels, we propose to

adopt a different paradigm to enhance group label efficiency: utilizing the

group-labeled data as a target set to optimize the weights of other

group-unlabeled data. We introduce Group-robust Sample Reweighting (GSR), a

two-stage approach that first learns the representations from group-unlabeled

data, and then tinkers the model by iteratively retraining its last layer on

the reweighted data using influence functions. Our GSR is theoretically sound,

practically lightweight, and effective in improving the robustness to

subpopulation shifts. In particular, GSR outperforms the previous

state-of-the-art approaches that require the same amount or even more group

labels.

22 Sep 2025

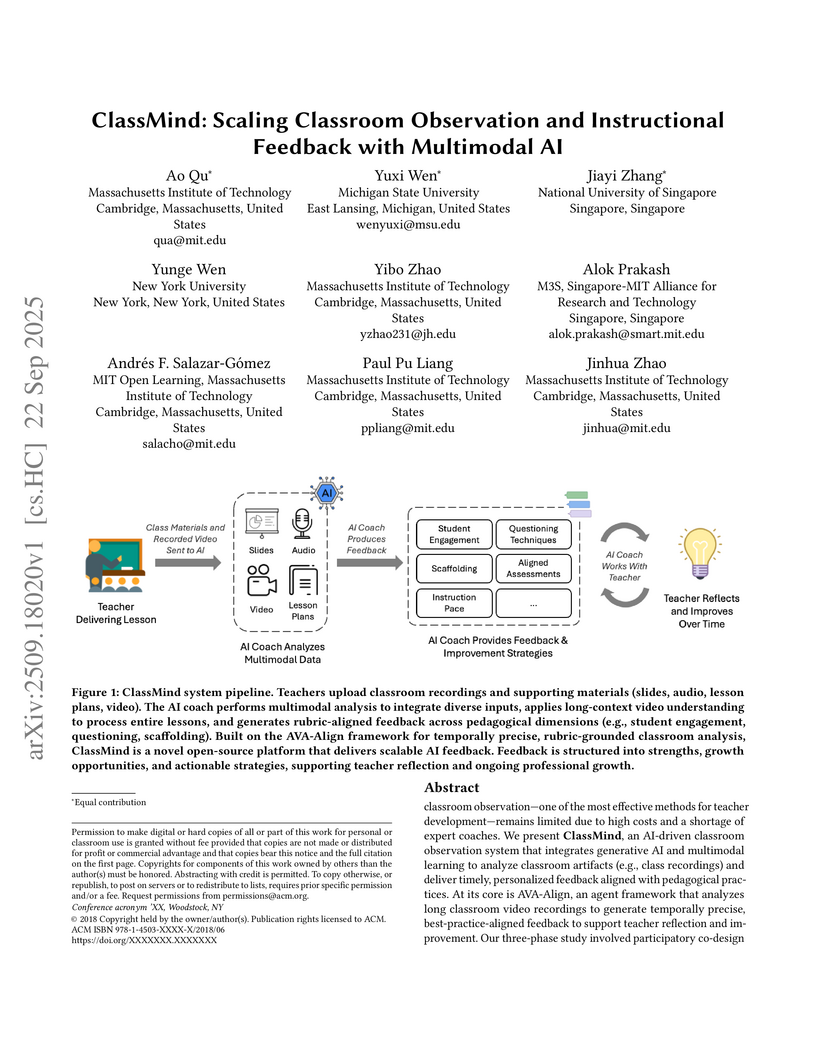

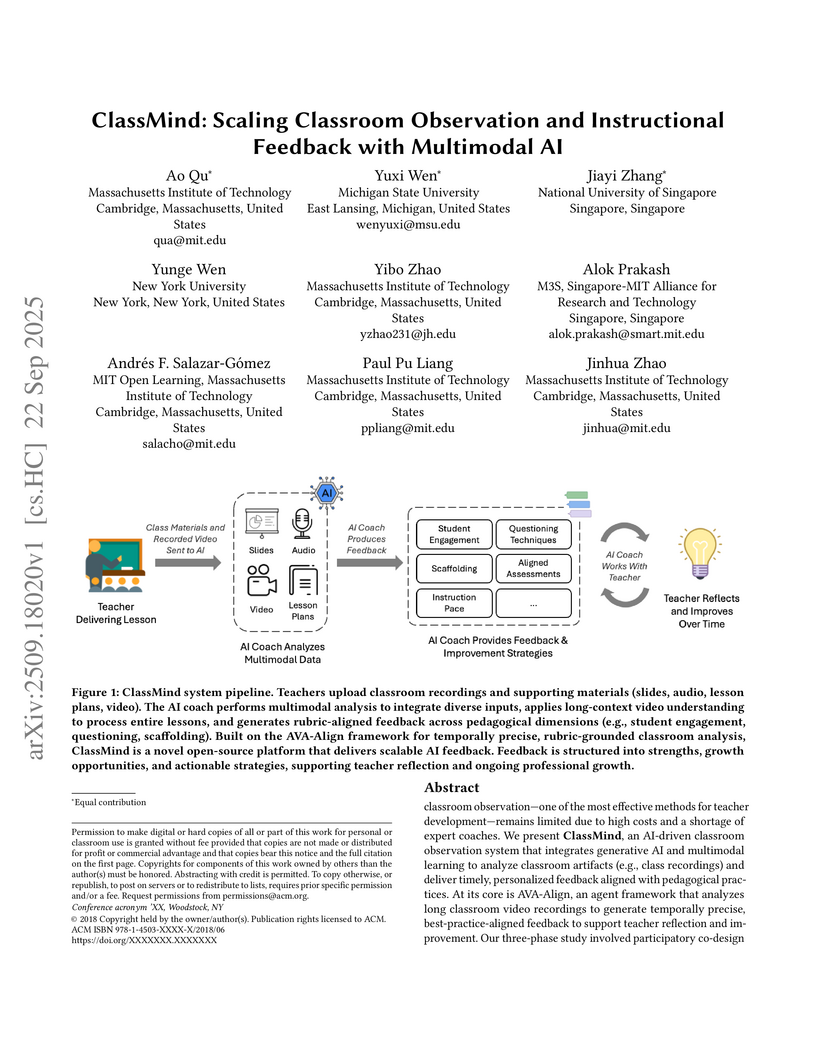

Classroom observation -- one of the most effective methods for teacher development -- remains limited due to high costs and a shortage of expert coaches. We present ClassMind, an AI-driven classroom observation system that integrates generative AI and multimodal learning to analyze classroom artifacts (e.g., class recordings) and deliver timely, personalized feedback aligned with pedagogical practices. At its core is AVA-Align, an agent framework that analyzes long classroom video recordings to generate temporally precise, best-practice-aligned feedback to support teacher reflection and improvement. Our three-phase study involved participatory co-design with educators, development of a full-stack system, and field testing with teachers at different stages of practice. Teachers highlighted the system's usefulness, ease of use, and novelty, while also raising concerns about privacy and the role of human judgment, motivating deeper exploration of future human--AI coaching partnerships. This work illustrates how multimodal AI can scale expert coaching and advance teacher development.

Machine learning models, meticulously optimized for source data, often fail

to predict target data when faced with distribution shifts (DSs). Previous

benchmarking studies, though extensive, have mainly focused on simple DSs.

Recognizing that DSs often occur in more complex forms in real-world scenarios,

we broadened our study to include multiple concurrent shifts, such as unseen

domain shifts combined with spurious correlations. We evaluated 26 algorithms

that range from simple heuristic augmentations to zero-shot inference using

foundation models, across 168 source-target pairs from eight datasets. Our

analysis of over 100K models reveals that (i) concurrent DSs typically worsen

performance compared to a single shift, with certain exceptions, (ii) if a

model improves generalization for one distribution shift, it tends to be

effective for others, and (iii) heuristic data augmentations achieve the best

overall performance on both synthetic and real-world datasets.

In the field of machine learning (ML) for materials optimization, active

learning algorithms, such as Bayesian Optimization (BO), have been leveraged

for guiding autonomous and high-throughput experimentation systems. However,

very few studies have evaluated the efficiency of BO as a general optimization

algorithm across a broad range of experimental materials science domains. In

this work, we evaluate the performance of BO algorithms with a collection of

surrogate model and acquisition function pairs across five diverse experimental

materials systems, namely carbon nanotube polymer blends, silver nanoparticles,

lead-halide perovskites, as well as additively manufactured polymer structures

and shapes. By defining acceleration and enhancement metrics for general

materials optimization objectives, we find that for surrogate model selection,

Gaussian Process (GP) with anisotropic kernels (automatic relevance detection,

ARD) and Random Forests (RF) have comparable performance and both outperform

the commonly used GP without ARD. We discuss the implicit distributional

assumptions of RF and GP, and the benefits of using GP with anisotropic kernels

in detail. We provide practical insights for experimentalists on surrogate

model selection of BO during materials optimization campaigns.

26 Oct 2025

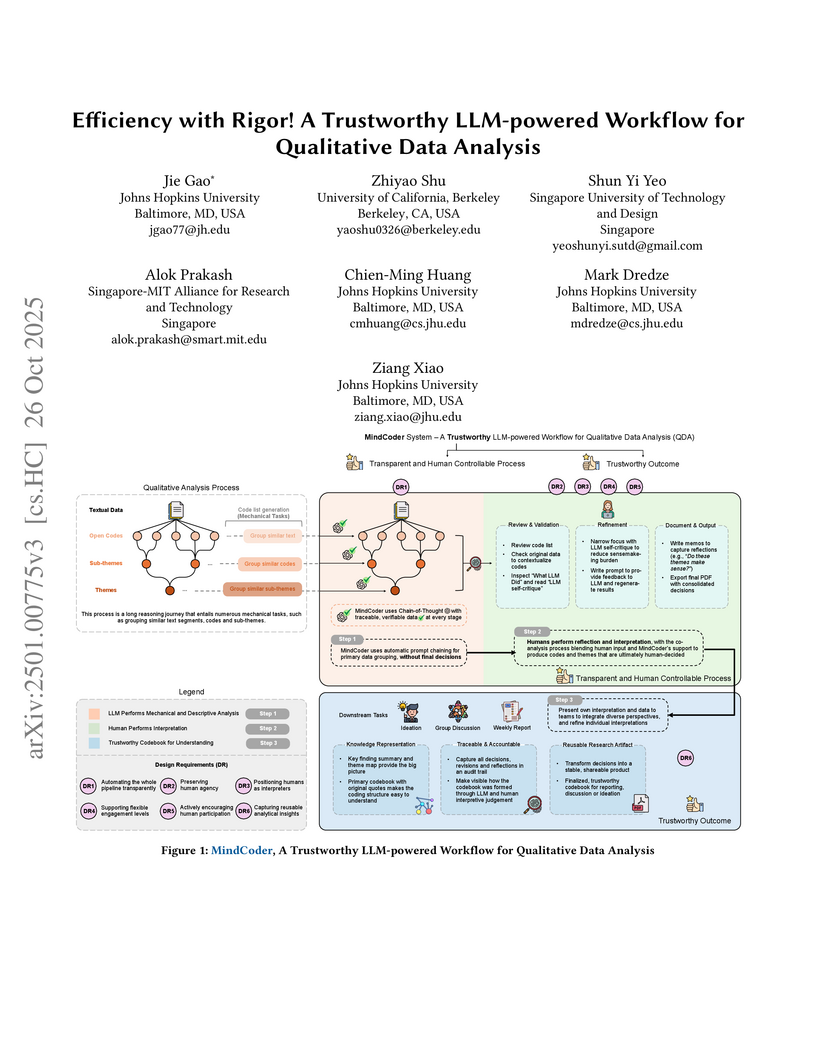

Qualitative data analysis (QDA) emphasizes trustworthiness, requiring sustained human engagement and reflexivity. Recently, large language models (LLMs) have been applied in QDA to improve efficiency. However, their use raises concerns about unvalidated automation and displaced sensemaking, which can undermine trustworthiness. To address these issues, we employed two strategies: transparency and human involvement. Through a literature review and formative interviews, we identified six design requirements for transparent automation and meaningful human involvement. Guided by these requirements, we developed MindCoder, an LLM-powered workflow that delegates mechanical tasks, such as grouping and validation, to the system, while enabling humans to conduct meaningful interpretation. MindCoder also maintains comprehensive logs of users' step-by-step interactions to ensure transparency and support trustworthy results. In an evaluation with 12 users and two external evaluators, MindCoder supported active interpretation, offered flexible control, and produced more trustworthy codebooks. We further discuss design implications for building human-AI collaborative QDA workflows.

31 Oct 2025

Understanding an unfamiliar codebase is an essential task for developers in various scenarios, such as during the onboarding process. Existing studies have shown that LLMs often fail to support users in understanding code structures or to provide user-centered, adaptive, and dynamic assistance in real-world settings. To address this, we propose learning from the perspective of a unique role, code auditors, whose work often requires them to quickly familiarize themselves with new code projects on weekly or even daily basis. To achieve this, we recruited and interviewed 8 code auditing practitioners to understand how they master codebase understanding. We identified several design opportunities for an LLM-based codebase understanding system: supporting cognitive alignment through automated codebase information extraction, decomposition, and representation, as well as reducing manual effort and conversational distraction through interaction design. To validate them, we designed a prototype, CodeMap, that provides dynamic information extraction and representation aligned with the human cognitive flow and enables interactive switching among hierarchical codebase visualizations. To evaluate the usefulness of our system, we conducted a user study with nine experienced developers and six novice developers. Our results demonstrate that CodeMap improved users' perceived intuitiveness, ease of use, and usefulness in supporting code comprehension, while reducing their reliance on reading and interpreting LLM responses by 79% and increasing map usage time by 90% compared with the static visualization analysis tool. It also enhances novice developers' perceived understanding and reduces their unpurposeful exploration.

We propose TETRIS, a novel method that optimizes the total throughput of batch speculative decoding in multi-request settings. Unlike existing methods that optimize for a single request or a group of requests as a whole, TETRIS actively selects the most promising draft tokens (for every request in a batch) to be accepted when verified in parallel, resulting in fewer rejected tokens and hence less wasted computing resources. Such an effective resource utilization to achieve fast inference in large language models (LLMs) is especially important to service providers with limited inference capacity. Compared to baseline speculative decoding, TETRIS yields a consistently higher acceptance rate and more effective utilization of the limited inference capacity. We show theoretically and empirically that TETRIS outperforms baseline speculative decoding and existing methods that dynamically select draft tokens, leading to a more efficient batch inference in LLMs.

Leveraging the Spatial Hierarchy: Coarse-to-fine Trajectory Generation via Cascaded Hybrid Diffusion

Leveraging the Spatial Hierarchy: Coarse-to-fine Trajectory Generation via Cascaded Hybrid Diffusion

Urban mobility data has significant connections with economic growth and plays an essential role in various smart-city applications. However, due to privacy concerns and substantial data collection costs, fine-grained human mobility trajectories are difficult to become publicly available on a large scale. A promising solution to address this issue is trajectory synthesizing. However, existing works often ignore the inherent structural complexity of trajectories, unable to handle complicated high-dimensional distributions and generate realistic fine-grained trajectories. In this paper, we propose Cardiff, a coarse-to-fine Cascaded hybrid diffusion-based trajectory synthesizing framework for fine-grained and privacy-preserving mobility generation. By leveraging the hierarchical nature of urban mobility, Cardiff decomposes the generation process into two distinct levels, i.e., discrete road segment-level and continuous fine-grained GPS-level: (i) In the segment-level, to reduce computational costs and redundancy in raw trajectories, we first encode the discrete road segments into low-dimensional latent embeddings and design a diffusion transformer-based latent denoising network for segment-level trajectory synthesis. (ii) Taking the first stage of generation as conditions, we then design a fine-grained GPS-level conditional denoising network with a noise augmentation mechanism to achieve robust and high-fidelity generation. Additionally, the Cardiff framework not only progressively generates high-fidelity trajectories through cascaded denoising but also flexibly enables a tunable balance between privacy preservation and utility. Experimental results on three large real-world trajectory datasets demonstrate that our method outperforms state-of-the-art baselines in various metrics.

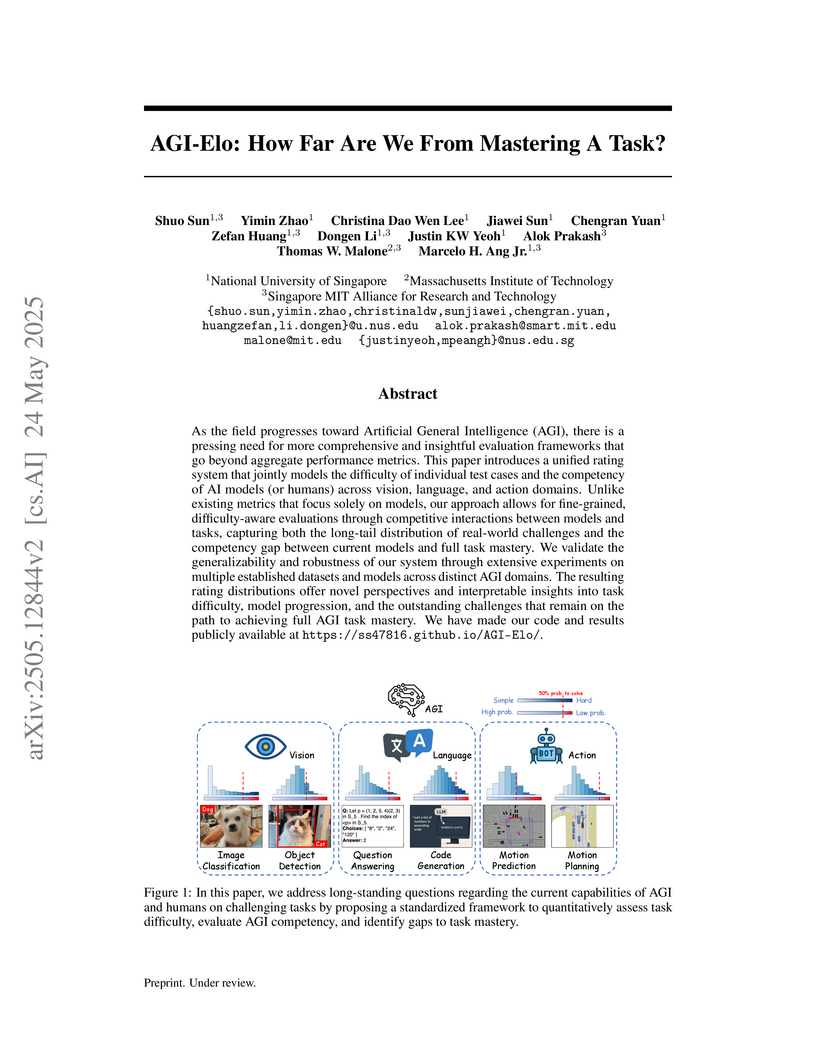

As the field progresses toward Artificial General Intelligence (AGI), there

is a pressing need for more comprehensive and insightful evaluation frameworks

that go beyond aggregate performance metrics. This paper introduces a unified

rating system that jointly models the difficulty of individual test cases and

the competency of AI models (or humans) across vision, language, and action

domains. Unlike existing metrics that focus solely on models, our approach

allows for fine-grained, difficulty-aware evaluations through competitive

interactions between models and tasks, capturing both the long-tail

distribution of real-world challenges and the competency gap between current

models and full task mastery. We validate the generalizability and robustness

of our system through extensive experiments on multiple established datasets

and models across distinct AGI domains. The resulting rating distributions

offer novel perspectives and interpretable insights into task difficulty, model

progression, and the outstanding challenges that remain on the path to

achieving full AGI task mastery.

Perovskite photovoltaics (PV) have achieved rapid development in the past decade in terms of power conversion efficiency of small-area lab-scale devices; however, successful commercialization still requires further development of low-cost, scalable, and high-throughput manufacturing techniques. One of the critical challenges of developing a new fabrication technique is the high-dimensional parameter space for optimization, but machine learning (ML) can readily be used to accelerate perovskite PV scaling. Herein, we present an ML-guided framework of sequential learning for manufacturing process optimization. We apply our methodology to the Rapid Spray Plasma Processing (RSPP) technique for perovskite thin films in ambient conditions. With a limited experimental budget of screening 100 process conditions, we demonstrated an efficiency improvement to 18.5% as the best-in-our-lab device fabricated by RSPP, and we also experimentally found 10 unique process conditions to produce the top-performing devices of more than 17% efficiency, which is 5 times higher rate of success than the control experiments with pseudo-random Latin hypercube sampling. Our model is enabled by three innovations: (a) flexible knowledge transfer between experimental processes by incorporating data from prior experimental data as a probabilistic constraint; (b) incorporation of both subjective human observations and ML insights when selecting next experiments; (c) adaptive strategy of locating the region of interest using Bayesian optimization first, and then conducting local exploration for high-efficiency devices. Furthermore, in virtual benchmarking, our framework achieves faster improvements with limited experimental budgets than traditional design-of-experiments methods (e.g., one-variable-at-a-time sampling).

30 Mar 2024

With ChatGPT's release, conversational prompting has become the most popular form of human-LLM interaction. However, its effectiveness is limited for more complex tasks involving reasoning, creativity, and iteration. Through a systematic analysis of HCI papers published since 2021, we identified four key phases in the human-LLM interaction flow - planning, facilitating, iterating, and testing - to precisely understand the dynamics of this process. Additionally, we have developed a taxonomy of four primary interaction modes: Mode 1: Standard Prompting, Mode 2: User Interface, Mode 3: Context-based, and Mode 4: Agent Facilitator. This taxonomy was further enriched using the "5W1H" guideline method, which involved a detailed examination of definitions, participant roles (Who), the phases that happened (When), human objectives and LLM abilities (What), and the mechanics of each interaction mode (How). We anticipate this taxonomy will contribute to the future design and evaluation of human-LLM interaction.

30 Nov 2021

New antibiotics are needed to battle growing antibiotic resistance, but the development process from hit, to lead, and ultimately to a useful drug, takes decades. Although progress in molecular property prediction using machine-learning methods has opened up new pathways for aiding the antibiotics development process, many existing solutions rely on large datasets and finding structural similarities to existing antibiotics. Challenges remain in modelling of unconventional antibiotics classes that are drawing increasing research attention. In response, we developed an antimicrobial activity prediction model for conjugated oligoelectrolyte molecules, a new class of antibiotics that lacks extensive prior structure-activity relationship studies. Our approach enables us to predict minimum inhibitory concentration for E. coli K12, with 21 molecular descriptors selected by recursive elimination from a set of 5,305 descriptors. This predictive model achieves an R2 of 0.65 with no prior knowledge of the underlying mechanism. We find the molecular representation optimum for the domain is the key to good predictions of antimicrobial activity. In the case of conjugated oligoelectrolytes, a representation reflecting the 3-dimensional shape of the molecules is most critical. Although it is demonstrated with a specific example of conjugated oligoelectrolytes, our proposed approach for creating the predictive model can be readily adapted to other novel antibiotic candidate domains.

Collecting human preference feedback is often expensive, leading recent works to develop principled algorithms to select them more efficiently. However, these works assume that the underlying reward function is linear, an assumption that does not hold in many real-life applications, such as online recommendation and LLM alignment. To address this limitation, we propose Neural-ADB, an algorithm based on the neural contextual dueling bandit framework that provides a principled and practical method for collecting human preference feedback when the underlying latent reward function is non-linear. We theoretically show that when preference feedback follows the Bradley-Terry-Luce model, the worst sub-optimality gap of the policy learned by Neural-ADB decreases at a sub-linear rate as the preference dataset increases. Our experimental results on preference datasets further corroborate the effectiveness of Neural-ADB.

22 Sep 2025

Classroom observation -- one of the most effective methods for teacher development -- remains limited due to high costs and a shortage of expert coaches. We present ClassMind, an AI-driven classroom observation system that integrates generative AI and multimodal learning to analyze classroom artifacts (e.g., class recordings) and deliver timely, personalized feedback aligned with pedagogical practices. At its core is AVA-Align, an agent framework that analyzes long classroom video recordings to generate temporally precise, best-practice-aligned feedback to support teacher reflection and improvement. Our three-phase study involved participatory co-design with educators, development of a full-stack system, and field testing with teachers at different stages of practice. Teachers highlighted the system's usefulness, ease of use, and novelty, while also raising concerns about privacy and the role of human judgment, motivating deeper exploration of future human--AI coaching partnerships. This work illustrates how multimodal AI can scale expert coaching and advance teacher development.

Bitcoin and Ethereum transactions present one of the largest real-world complex networks that are publicly available for study, including a detailed picture of their time evolution. As such, they have received a considerable amount of attention from the network science community, beside analysis from an economic or cryptography perspective. Among these studies, in an analysis on the early instance of the Bitcoin network, we have shown the clear presence of the preferential attachment, or "rich-get-richer" phenomenon. Now, we revisit this question, using a recent version of the Bitcoin network that has grown almost 100-fold since our original analysis. Furthermore, we additionally carry out a comparison with Ethereum, the second most important cryptocurrency. Our results show that preferential attachment continues to be a key factor in the evolution of both the Bitcoin and Ethereum transactoin networks. To facilitate further analysis, we publish a recent version of both transaction networks, and an efficient software implementation that is able to evaluate linking statistics necessary for learn about preferential attachment on networks with several hundred million edges.

30 Nov 2021

New antibiotics are needed to battle growing antibiotic resistance, but the development process from hit, to lead, and ultimately to a useful drug, takes decades. Although progress in molecular property prediction using machine-learning methods has opened up new pathways for aiding the antibiotics development process, many existing solutions rely on large datasets and finding structural similarities to existing antibiotics. Challenges remain in modelling of unconventional antibiotics classes that are drawing increasing research attention. In response, we developed an antimicrobial activity prediction model for conjugated oligoelectrolyte molecules, a new class of antibiotics that lacks extensive prior structure-activity relationship studies. Our approach enables us to predict minimum inhibitory concentration for E. coli K12, with 21 molecular descriptors selected by recursive elimination from a set of 5,305 descriptors. This predictive model achieves an R2 of 0.65 with no prior knowledge of the underlying mechanism. We find the molecular representation optimum for the domain is the key to good predictions of antimicrobial activity. In the case of conjugated oligoelectrolytes, a representation reflecting the 3-dimensional shape of the molecules is most critical. Although it is demonstrated with a specific example of conjugated oligoelectrolytes, our proposed approach for creating the predictive model can be readily adapted to other novel antibiotic candidate domains.

The problem of modeling and predicting spatiotemporal traffic phenomena over

an urban road network is important to many traffic applications such as

detecting and forecasting congestion hotspots. This paper presents a

decentralized data fusion and active sensing (D2FAS) algorithm for mobile

sensors to actively explore the road network to gather and assimilate the most

informative data for predicting the traffic phenomenon. We analyze the time and

communication complexity of D2FAS and demonstrate that it can scale well with a

large number of observations and sensors. We provide a theoretical guarantee on

its predictive performance to be equivalent to that of a sophisticated

centralized sparse approximation for the Gaussian process (GP) model: The

computation of such a sparse approximate GP model can thus be parallelized and

distributed among the mobile sensors (in a Google-like MapReduce paradigm),

thereby achieving efficient and scalable prediction. We also theoretically

guarantee its active sensing performance that improves under various practical

environmental conditions. Empirical evaluation on real-world urban road network

data shows that our D2FAS algorithm is significantly more time-efficient and

scalable than state-oftheart centralized algorithms while achieving comparable

predictive performance.

There are no more papers matching your filters at the moment.