Swiss Federal Institute of Technology

Many settings in machine learning require the selection of a rotation

representation. However, choosing a suitable representation from the many

available options is challenging. This paper acts as a survey and guide through

rotation representations. We walk through their properties that harm or benefit

deep learning with gradient-based optimization. By consolidating insights from

rotation-based learning, we provide a comprehensive overview of learning

functions with rotation representations. We provide guidance on selecting

representations based on whether rotations are in the model's input or output

and whether the data primarily comprises small angles.

We present a new dataset for form understanding in noisy scanned documents (FUNSD) that aims at extracting and structuring the textual content of forms. The dataset comprises 199 real, fully annotated, scanned forms. The documents are noisy and vary widely in appearance, making form understanding (FoUn) a challenging task. The proposed dataset can be used for various tasks, including text detection, optical character recognition, spatial layout analysis, and entity labeling/linking. To the best of our knowledge, this is the first publicly available dataset with comprehensive annotations to address FoUn task. We also present a set of baselines and introduce metrics to evaluate performance on the FUNSD dataset, which can be downloaded at this https URL.

Machine unlearning, the process of selectively removing data from trained

models, is increasingly crucial for addressing privacy concerns and knowledge

gaps post-deployment. Despite this importance, existing approaches are often

heuristic and lack formal guarantees. In this paper, we analyze the fundamental

utility, time, and space complexity trade-offs of approximate unlearning,

providing rigorous certification analogous to differential privacy. For

in-distribution forget data -- data similar to the retain set -- we show that a

surprisingly simple and general procedure, empirical risk minimization with

output perturbation, achieves tight unlearning-utility-complexity trade-offs,

addressing a previous theoretical gap on the separation from unlearning "for

free" via differential privacy, which inherently facilitates the removal of

such data. However, such techniques fail with out-of-distribution forget data

-- data significantly different from the retain set -- where unlearning time

complexity can exceed that of retraining, even for a single sample. To address

this, we propose a new robust and noisy gradient descent variant that provably

amortizes unlearning time complexity without compromising utility.

Contrastive Language-Image Pretraining (CLIP) has demonstrated strong zero-shot performance across diverse downstream text-image tasks. Existing CLIP methods typically optimize a contrastive objective using negative samples drawn from each minibatch. To achieve robust representation learning, these methods require extremely large batch sizes and escalate computational demands to hundreds or even thousands of GPUs. Prior approaches to mitigate this issue often compromise downstream performance, prolong training duration, or face scalability challenges with very large datasets. To overcome these limitations, we propose AmorLIP, an efficient CLIP pretraining framework that amortizes expensive computations involved in contrastive learning through lightweight neural networks, which substantially improves training efficiency and performance. Leveraging insights from a spectral factorization of energy-based models, we introduce novel amortization objectives along with practical techniques to improve training stability. Extensive experiments across 38 downstream tasks demonstrate the superior zero-shot classification and retrieval capabilities of AmorLIP, consistently outperforming standard CLIP baselines with substantial relative improvements of up to 12.24%.

19 Mar 2025

CNRSAcademia Sinica

CNRSAcademia Sinica California Institute of TechnologyUniversity of Oslo

California Institute of TechnologyUniversity of Oslo University of WaterlooGhent University

University of WaterlooGhent University University College London

University College London University of Oxford

University of Oxford University of California, IrvineUniversity of Edinburgh

University of California, IrvineUniversity of Edinburgh ETH Zürich

ETH Zürich NASA Goddard Space Flight CenterUniversidade de LisboaLancaster UniversityUniversity of Granada

NASA Goddard Space Flight CenterUniversidade de LisboaLancaster UniversityUniversity of Granada Université Paris-SaclayHelsinki Institute of Physics

Université Paris-SaclayHelsinki Institute of Physics Stockholm UniversityUniversity of HelsinkiThe University of Manchester

Stockholm UniversityUniversity of HelsinkiThe University of Manchester Perimeter Institute for Theoretical PhysicsUniversité de GenèveUniversity of California, Merced

Perimeter Institute for Theoretical PhysicsUniversité de GenèveUniversity of California, Merced Leiden UniversityUniversity of GenevaLiverpool John Moores UniversityESOUniversity of LeidenICREAUniversitat de BarcelonaConsejo Superior de Investigaciones CientíficasUniversität BonnUniversity of IcelandUniversidade do PortoUniversity of SussexEcole Polytechnique Fédérale de LausanneTechnical University of Denmark

Leiden UniversityUniversity of GenevaLiverpool John Moores UniversityESOUniversity of LeidenICREAUniversitat de BarcelonaConsejo Superior de Investigaciones CientíficasUniversität BonnUniversity of IcelandUniversidade do PortoUniversity of SussexEcole Polytechnique Fédérale de LausanneTechnical University of Denmark Durham University

Durham University University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFAix Marseille UniversityUniversity of BathNiels Bohr InstituteUniversidade Federal do Rio Grande do NorteInstituto de Astrofísica de CanariasUniversity of the WitwatersrandEuropean Space AgencyNational Tsing-Hua UniversityÉcole Polytechnique Fédérale de LausanneUniversitat Autònoma de BarcelonaUniversity of TriesteINFN, Sezione di TorinoUniversidad de ValparaísoUniversidad de La LagunaNRC Herzberg Astronomy and AstrophysicsUniversity of AntwerpObservatoire de la Côte d’AzurCavendish LaboratoryUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätInstituto de Astrofísica de Andalucía-CSICINAF – Istituto di Astrofisica e Planetologia SpazialiKapteyn Astronomical InstituteNational Observatory of AthensMax-Planck Institut für extraterrestrische PhysikINAF – Osservatorio Astronomico di RomaInstituto de Astrofísica de Canarias (IAC)Institut d'Astrophysique de ParisUniversidad de SalamancaInstitut de Física d’Altes Energies (IFAE)Institut Teknologi BandungSwiss Federal Institute of TechnologyINFN - Sezione di PadovaUniversità degli Studi di Urbino ’Carlo Bo’INAF-IASF MilanoUniversità di FirenzeInstitute of Space ScienceCosmic Dawn CenterInstituto de Física de CantabriaDTU SpaceINFN Sezione di LecceINFN-Sezione di BolognaUniversity of Hartford2Osservatorio Astronomico di RomaASI - Agenzia Spaziale ItalianaInfrared Processing and Analysis Center1/2(4)37353629Space Science Data CenterBarcelona Institute of Science and TechnologyCSC – IT Center for Science Ltd.Instituto de Astrofísica e Ciências do Espaço, Universidade de LisboaUniversity of Côte d’AzurSorbonne Université, CNRSUniversité Paris-SorbonneOskar Klein CentreESAC611182515211020177823133191622951424335238284375667484646148415758426351464981307940762731735553545650598067347870726860266239776544458347716932Paris Sciences et LettresDeimos Space85Université de Toulouse III - Paul Sabatier9886Centre de Física d’Altes Energies (FPAE)9911410610595Aix Marseille Université, CNRS, CNESESAC/ESA109Center for Informatics and Computation in Science and Engineering116102100Cosmic Origins10387113112Université Paris Cité, CEA, CNRS101939497107TERMA11511110896104110149131127124132128122136142126138CNRS, Institut d’Astrophysique de Paris151125139143119137145148120117141Universitas Pendidikan Indonesia13414414614011815012314713313512112913091.89.92.88.82.90.INAF Osservatorio di PadovaINAF-IASF, BolognaINFN-Sezione di Roma TreINFN-Sezione di FerraraUniversit degli Studi di FerraraUniversit

Grenoble AlpesUniversit

Claude Bernard Lyon 1Universit

del SalentoUniversit

di FerraraINAF

Osservatorio Astronomico di CapodimonteMax Planck Institut fr AstronomieUniversit

Lyon 1Universit

de StrasbourgUniversit

de LyonRuhr-University-BochumINAF

Osservatorio Astrofisico di ArcetriUniversit

degli Studi di TorinoUniversity of Naples

“Federico II”INAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaUniversit

Di BolognaIFPU

Institute for fundamental physics of the UniverseINAF

` Osservatorio Astronomico di TriesteINFN

Istituto Nazionale di Fisica NucleareUniversit

degli Studi Roma TreINAF

Osservatorio Astronomico di Brera

University of GroningenInstituto de Astrofísica e Ciências do EspaçoINAFAix Marseille UniversityUniversity of BathNiels Bohr InstituteUniversidade Federal do Rio Grande do NorteInstituto de Astrofísica de CanariasUniversity of the WitwatersrandEuropean Space AgencyNational Tsing-Hua UniversityÉcole Polytechnique Fédérale de LausanneUniversitat Autònoma de BarcelonaUniversity of TriesteINFN, Sezione di TorinoUniversidad de ValparaísoUniversidad de La LagunaNRC Herzberg Astronomy and AstrophysicsUniversity of AntwerpObservatoire de la Côte d’AzurCavendish LaboratoryUniversity of Hawai’iUniversity of KwaZulu-NatalLudwig-Maximilians-UniversitätInstituto de Astrofísica de Andalucía-CSICINAF – Istituto di Astrofisica e Planetologia SpazialiKapteyn Astronomical InstituteNational Observatory of AthensMax-Planck Institut für extraterrestrische PhysikINAF – Osservatorio Astronomico di RomaInstituto de Astrofísica de Canarias (IAC)Institut d'Astrophysique de ParisUniversidad de SalamancaInstitut de Física d’Altes Energies (IFAE)Institut Teknologi BandungSwiss Federal Institute of TechnologyINFN - Sezione di PadovaUniversità degli Studi di Urbino ’Carlo Bo’INAF-IASF MilanoUniversità di FirenzeInstitute of Space ScienceCosmic Dawn CenterInstituto de Física de CantabriaDTU SpaceINFN Sezione di LecceINFN-Sezione di BolognaUniversity of Hartford2Osservatorio Astronomico di RomaASI - Agenzia Spaziale ItalianaInfrared Processing and Analysis Center1/2(4)37353629Space Science Data CenterBarcelona Institute of Science and TechnologyCSC – IT Center for Science Ltd.Instituto de Astrofísica e Ciências do Espaço, Universidade de LisboaUniversity of Côte d’AzurSorbonne Université, CNRSUniversité Paris-SorbonneOskar Klein CentreESAC611182515211020177823133191622951424335238284375667484646148415758426351464981307940762731735553545650598067347870726860266239776544458347716932Paris Sciences et LettresDeimos Space85Université de Toulouse III - Paul Sabatier9886Centre de Física d’Altes Energies (FPAE)9911410610595Aix Marseille Université, CNRS, CNESESAC/ESA109Center for Informatics and Computation in Science and Engineering116102100Cosmic Origins10387113112Université Paris Cité, CEA, CNRS101939497107TERMA11511110896104110149131127124132128122136142126138CNRS, Institut d’Astrophysique de Paris151125139143119137145148120117141Universitas Pendidikan Indonesia13414414614011815012314713313512112913091.89.92.88.82.90.INAF Osservatorio di PadovaINAF-IASF, BolognaINFN-Sezione di Roma TreINFN-Sezione di FerraraUniversit degli Studi di FerraraUniversit

Grenoble AlpesUniversit

Claude Bernard Lyon 1Universit

del SalentoUniversit

di FerraraINAF

Osservatorio Astronomico di CapodimonteMax Planck Institut fr AstronomieUniversit

Lyon 1Universit

de StrasbourgUniversit

de LyonRuhr-University-BochumINAF

Osservatorio Astrofisico di ArcetriUniversit

degli Studi di TorinoUniversity of Naples

“Federico II”INAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaUniversit

Di BolognaIFPU

Institute for fundamental physics of the UniverseINAF

` Osservatorio Astronomico di TriesteINFN

Istituto Nazionale di Fisica NucleareUniversit

degli Studi Roma TreINAF

Osservatorio Astronomico di BreraRecent James Webb Space Telescope (JWST) observations have revealed a

population of sources with a compact morphology and a `v-shaped' continuum,

namely blue at rest-frame \lambda<4000A and red at longer wavelengths. The

nature of these sources, called `little red dots' (LRDs), is still debated,

since it is unclear if they host active galactic nuclei (AGN) and their number

seems to drastically drop at z<4. We utilise the 63 deg2 covered by the

quick Euclid Quick Data Release (Q1) to extend the search for LRDs to brighter

magnitudes and to lower z than what has been possible with JWST to have a

broader view of the evolution of this peculiar galaxy population. The selection

is done by fitting the available photometric data (Euclid, Spitzer/IRAC, and

ground-based griz data) with two power laws, to retrieve the rest-frame optical

and UV slopes consistently over a large redshift range (i.e, z<7.6). We exclude

extended objects and possible line emitters, and perform a visual inspection to

remove imaging artefacts. The final selection includes 3341 LRD candidates from

z=0.33 to z=3.6, with 29 detected in IRAC. Their rest-frame UV luminosity

function, in contrast with previous JWST studies, shows that the number density

of LRD candidates increases from high-z down to z=1.5-2.5 and decreases at even

lower z. Less evolution is apparent focusing on the subsample of more robust

LRD candidates having IRAC detections, which is affected by low statistics and

limited by the IRAC resolution. The comparison with previous quasar UV

luminosity functions shows that LRDs are not the dominant AGN population at

z<4. Follow-up studies of these LRD candidates are key to confirm their nature,

probe their physical properties and check for their compatibility with JWST

sources, since the different spatial resolution and wavelength coverage of

Euclid and JWST could select different samples of compact sources.

01 Mar 2005

We present a simple, semi-analytical model to compute the mass functions of

dark matter subhaloes. The masses of subhaloes at their time of accretion are

obtained from a standard merger tree. During the subsequent evolution, the

subhaloes experience mass loss due to the combined effect of dynamical

friction, tidal stripping, and tidal heating. Rather than integrating these

effects along individual subhalo orbits, we consider the average mass loss

rate, where the average is taken over all possible orbital configurations. This

allows us to write the average mass loss rate as a simple function that depends

only on redshift and on the instantaneous mass ratio of subhalo and parent

halo. After calibrating the model by matching the subhalo mass function (SHMF)

of cluster-sized dark matter haloes obtained from numerical simulations, we

investigate the predicted mass and redshift dependence of the SHMF.We find

that, contrary to previous claims, the subhalo mass function is not universal.

Instead, both the slope and the normalization depend on the ratio of the parent

halo mass, M, and the characteristic non-linear mass M*. This simply reflects a

halo formation time dependence; more massive parent haloes form later, thus

allowing less time for mass loss to operate. We analyze the halo-to-halo

scatter, and show that the subhalo mass fraction of individual haloes depends

most strongly on their accretion history in the last Gyr. Finally we provide a

simple fitting function for the average SHMF of a parent halo of any mass at

any redshift and for any cosmology, and briefly discuss several implications of

our findings.

11 Apr 2013

This article analyzes well-definedness and regularity of renormalized powers of Ornstein-Uhlenbeck processes and uses this analysis to establish local existence, uniqueness and regularity of strong solutions of stochastic Ginzburg-Landau equations with polynomial nonlinearities in two space dimensions and with quadratic nonlinearities in three space dimensions.

Imperial College LondonUniversity of Regina

Imperial College LondonUniversity of Regina INFNUniversity of Warsaw

INFNUniversity of Warsaw University of British ColumbiaCSIC

University of British ColumbiaCSIC CERNConcordia UniversityHelsinki Institute of PhysicsUniversity of Helsinki

CERNConcordia UniversityHelsinki Institute of PhysicsUniversity of Helsinki University of Alberta

University of Alberta King’s College LondonTufts UniversityUniversity of Bologna

King’s College LondonTufts UniversityUniversity of Bologna Queen Mary University of London

Queen Mary University of London University of VirginiaSogang UniversityCzech Technical University in PragueUniversity of NottinghamUniversity of AlabamaUniversitat de ValenciaSwiss Federal Institute of TechnologyInstitute of Space ScienceUniversite de Montreal

University of VirginiaSogang UniversityCzech Technical University in PragueUniversity of NottinghamUniversity of AlabamaUniversitat de ValenciaSwiss Federal Institute of TechnologyInstitute of Space ScienceUniversite de MontrealWe report on a search for magnetic monopoles (MMs) produced in ultraperipheral Pb--Pb collisions during Run-1 of the LHC. The beam pipe surrounding the interaction region of the CMS experiment was exposed to 184.07 \textmu b−1 of Pb--Pb collisions at 2.76 TeV center-of-mass energy per collision in December 2011, before being removed in 2013. It was scanned by the MoEDAL experiment using a SQUID magnetometer to search for trapped MMs. No MM signal was observed. The two distinctive features of this search are the use of a trapping volume very close to the collision point and ultra-high magnetic fields generated during the heavy-ion run that could produce MMs via the Schwinger effect. These two advantages allowed setting the first reliable, world-leading mass limits on MMs with high magnetic charge. In particular, the established limits are the strongest available in the range between 2 and 45 Dirac units, excluding MMs with masses of up to 80 GeV at 95\% confidence level.

Université de Montréal

Université de Montréal Imperial College London

Imperial College London INFN

INFN University of British ColumbiaCSIC

University of British ColumbiaCSIC CERNConcordia UniversityHelsinki Institute of PhysicsUniversity of Helsinki

CERNConcordia UniversityHelsinki Institute of PhysicsUniversity of Helsinki University of Alberta

University of Alberta King’s College LondonUniversité de GenèveTufts UniversityUniversity of Bologna

King’s College LondonUniversité de GenèveTufts UniversityUniversity of Bologna Queen Mary University of London

Queen Mary University of London University of VirginiaUniversitat de ValènciaSogang UniversityCzech Technical University in PragueUniversity of NottinghamUniversity of AlabamaSwiss Federal Institute of TechnologyInstitute of Space ScienceInstitute for Research in Schools

University of VirginiaUniversitat de ValènciaSogang UniversityCzech Technical University in PragueUniversity of NottinghamUniversity of AlabamaSwiss Federal Institute of TechnologyInstitute of Space ScienceInstitute for Research in SchoolsSchwinger showed that electrically-charged particles can be produced in a

strong electric field by quantum tunnelling through the Coulomb barrier. By

electromagnetic duality, if magnetic monopoles (MMs) exist, they would be

produced by the same mechanism in a sufficiently strong magnetic field. Unique

advantages of the Schwinger mechanism are that its rate can be calculated using

semiclassical techniques without relying on perturbation theory, and the finite

MM size and strong MM-photon coupling are expected to enhance their production.

Pb-Pb heavy-ion collisions at the LHC produce the strongest known magnetic

fields in the current Universe, and this article presents the first search for

MM production by the Schwinger mechanism. It was conducted by the MoEDAL

experiment during the 5.02 TeV/nucleon heavy-ion run at the LHC in November

2018, during which the MoEDAL trapping detectors (MMTs) were exposed to 0.235

nb−1 of Pb-Pb collisions. The MMTs were scanned for the presence of

magnetic charge using a SQUID magnetometer. MMs with Dirac charges 1gD

≤ g ≤ 3gD and masses up to 75 GeV/c2 were excluded by the

analysis. This provides the first lower mass limit for finite-size MMs from a

collider search and significantly extends previous mass bounds.

23 Jul 2025

University of Cincinnati Northeastern University

Northeastern University Northwestern UniversityUniversity of GenoaPolitecnico di Milano

Northwestern UniversityUniversity of GenoaPolitecnico di Milano Chalmers University of TechnologyUniversidad Carlos III de Madrid

Chalmers University of TechnologyUniversidad Carlos III de Madrid University of BaselNewcastle UniversitySwiss Federal Institute of TechnologyShirley Ryan AbilityLabUniversit`a Campus Bio-Medico di RomaFondazione Santa LuciaHospital Los Madro˜nosCanarian Foundation Institute of Neurological SciencesUniversity of Illinois in Chicago

University of BaselNewcastle UniversitySwiss Federal Institute of TechnologyShirley Ryan AbilityLabUniversit`a Campus Bio-Medico di RomaFondazione Santa LuciaHospital Los Madro˜nosCanarian Foundation Institute of Neurological SciencesUniversity of Illinois in Chicago

Northeastern University

Northeastern University Northwestern UniversityUniversity of GenoaPolitecnico di Milano

Northwestern UniversityUniversity of GenoaPolitecnico di Milano Chalmers University of TechnologyUniversidad Carlos III de Madrid

Chalmers University of TechnologyUniversidad Carlos III de Madrid University of BaselNewcastle UniversitySwiss Federal Institute of TechnologyShirley Ryan AbilityLabUniversit`a Campus Bio-Medico di RomaFondazione Santa LuciaHospital Los Madro˜nosCanarian Foundation Institute of Neurological SciencesUniversity of Illinois in Chicago

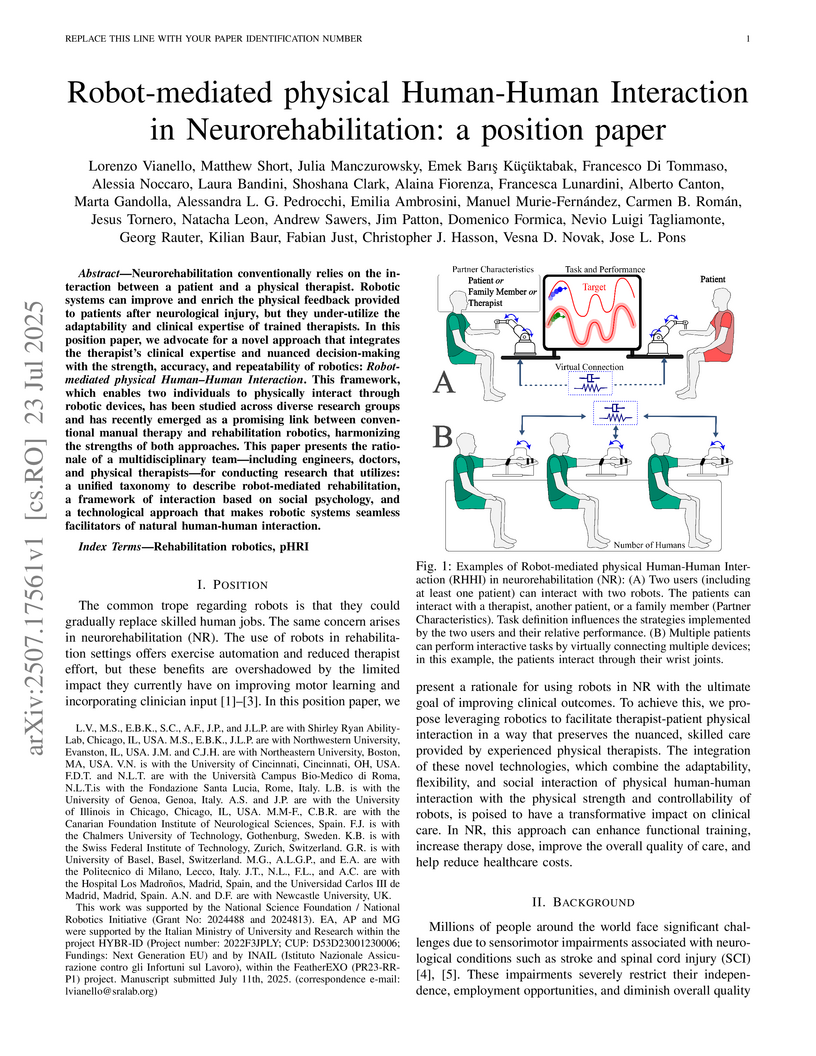

University of BaselNewcastle UniversitySwiss Federal Institute of TechnologyShirley Ryan AbilityLabUniversit`a Campus Bio-Medico di RomaFondazione Santa LuciaHospital Los Madro˜nosCanarian Foundation Institute of Neurological SciencesUniversity of Illinois in ChicagoNeurorehabilitation conventionally relies on the interaction between a patient and a physical therapist. Robotic systems can improve and enrich the physical feedback provided to patients after neurological injury, but they under-utilize the adaptability and clinical expertise of trained therapists. In this position paper, we advocate for a novel approach that integrates the therapist's clinical expertise and nuanced decision-making with the strength, accuracy, and repeatability of robotics: Robot-mediated physical Human-Human Interaction. This framework, which enables two individuals to physically interact through robotic devices, has been studied across diverse research groups and has recently emerged as a promising link between conventional manual therapy and rehabilitation robotics, harmonizing the strengths of both approaches. This paper presents the rationale of a multidisciplinary team-including engineers, doctors, and physical therapists-for conducting research that utilizes: a unified taxonomy to describe robot-mediated rehabilitation, a framework of interaction based on social psychology, and a technological approach that makes robotic systems seamless facilitators of natural human-human interaction.

Taming Offload Overheads in a Massively Parallel Open-Source RISC-V MPSoC: Analysis and Optimization

Taming Offload Overheads in a Massively Parallel Open-Source RISC-V MPSoC: Analysis and Optimization

Heterogeneous multi-core architectures combine on a single chip a few large,

general-purpose host cores, optimized for single-thread performance, with

(many) clusters of small, specialized, energy-efficient accelerator cores for

data-parallel processing. Offloading a computation to the many-core

acceleration fabric implies synchronization and communication overheads which

can hamper overall performance and efficiency, particularly for small and

fine-grained parallel tasks. In this work, we present a detailed,

cycle-accurate quantitative analysis of the offload overheads on Occamy, an

open-source massively parallel RISC-V based heterogeneous MPSoC. We study how

the overheads scale with the number of accelerator cores. We explore an

approach to drastically reduce these overheads by co-designing the hardware and

the offload routines. Notably, we demonstrate that by incorporating multicast

capabilities into the Network-on-Chip of a large (200+ cores) accelerator

fabric we can improve offloaded application runtimes by as much as 2.3x,

restoring more than 70% of the ideally attainable speedups. Finally, we propose

a quantitative model to estimate the runtime of selected applications

accounting for the offload overheads, with an error consistently below 15%.

13 Feb 2025

Accurate simulation of turbulent flows remains a challenge due to the high

computational cost of direct numerical simulations (DNS) and the limitations of

traditional turbulence models. This paper explores a novel approach to

augmenting standard models for Reynolds-Averaged Navier-Stokes (RANS)

simulations using a Non-Linear Super-Stencil (NLSS). The proposed method

introduces a fully connected neural network that learns a mapping from the

local mean flow field to a corrective force term, which is added to a standard

RANS solver in order to align its solution with high-fidelity data. A procedure

is devised to extract training data from reference DNS and large eddy

simulations (LES). To reduce the complexity of the non-linear mapping, the

dimensionless local flow data is aligned with the local mean velocity, and the

local support domain is scaled by the turbulent integral length scale. After

being trained on a single periodic hill case, the NLSS-corrected RANS solver is

shown to generalize to different periodic hill geometries and different

Reynolds numbers, producing significantly more accurate solutions than the

uncorrected RANS simulations.

11 Apr 2013

This article analyzes well-definedness and regularity of renormalized powers of Ornstein-Uhlenbeck processes and uses this analysis to establish local existence, uniqueness and regularity of strong solutions of stochastic Ginzburg-Landau equations with polynomial nonlinearities in two space dimensions and with quadratic nonlinearities in three space dimensions.

29 Sep 2020

In order to design a more potent and effective chemical entity, it is

essential to identify molecular structures with the desired chemical

properties. Recent advances in generative models using neural networks and

machine learning are being widely used by many emerging startups and

researchers in this domain to design virtual libraries of drug-like compounds.

Although these models can help a scientist to produce novel molecular

structures rapidly, the challenge still exists in the intelligent exploration

of the latent spaces of generative models, thereby reducing the randomness in

the generative procedure. In this work we present a manifold traversal with

heuristic search to explore the latent chemical space. Different heuristics and

scores such as the Tanimoto coefficient, synthetic accessibility, binding

activity, and QED drug-likeness can be incorporated to increase the validity

and proximity for desired molecular properties of the generated molecules. For

evaluating the manifold traversal exploration, we produce the latent chemical

space using various generative models such as grammar variational autoencoders

(with and without attention) as they deal with the randomized generation and

validity of compounds. With this novel traversal method, we are able to find

more unseen compounds and more specific regions to mine in the latent space.

Finally, these components are brought together in a simple platform allowing

users to perform search, visualization and selection of novel generated

compounds.

09 Jan 2025

The ever-increasing computational and storage requirements of modern applications and the slowdown of technology scaling pose major challenges to designing and implementing efficient computer architectures. To mitigate the bottlenecks of typical processor-based architectures on both the instruction and data sides of the memory, we present Spatz, a compact 64-bit floating-point-capable vector processor based on RISC-V's Vector Extension Zve64d. Using Spatz as the main Processing Element (PE), we design an open-source dual-core vector processor architecture based on a modular and scalable cluster sharing a Scratchpad Memory (SCM). Unlike typical vector processors, whose Vector Register Files (VRFs) are hundreds of KiB large, we prove that Spatz can achieve peak energy efficiency with a latch-based VRF of only 2 KiB. An implementation of the Spatz-based cluster in GlobalFoundries' 12LPP process with eight double-precision Floating Point Units (FPUs) achieves an FPU utilization just 3.4% lower than the ideal upper bound on a double-precision, floating-point matrix multiplication. The cluster reaches 7.7 FMA/cycle, corresponding to 15.7 DP-GFLOPS and 95.7 DP-GFLOPS/W at 1 GHz and nominal operating conditions (TT, 0.80V, 25C), with more than 55% of the power spent on the FPUs. Furthermore, the optimally-balanced Spatz-based cluster reaches a 95.0% FPU utilization (7.6 FMA/cycle), 15.2 DP-GFLOPS, and 99.3 DP-GFLOPS/W (61% of the power spent in the FPU) on a 2D workload with a 7x7 kernel, resulting in an outstanding area/energy efficiency of 171 DP-GFLOPS/W/mm2. At equi-area, the computing cluster built upon compact vector processors reaches a 30% higher energy efficiency than a cluster with the same FPU count built upon scalar cores specialized for stream-based floating-point computation.

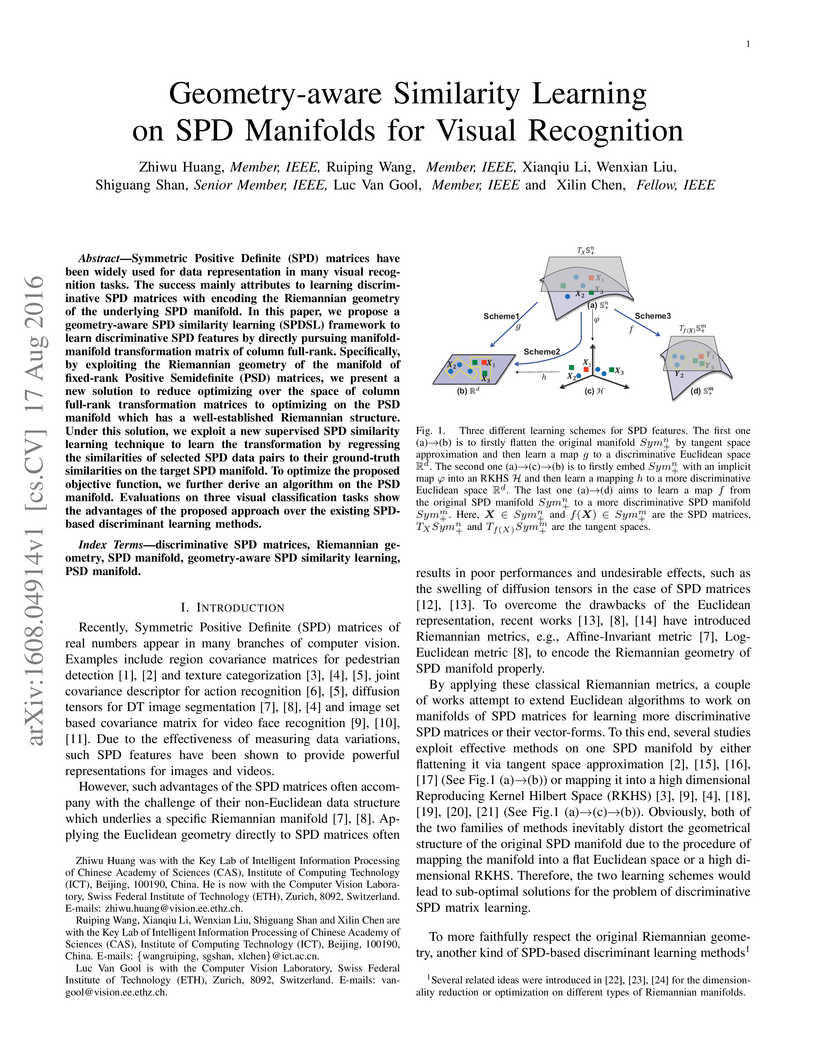

Symmetric Positive Definite (SPD) matrices have been widely used for data

representation in many visual recognition tasks. The success mainly attributes

to learning discriminative SPD matrices with encoding the Riemannian geometry

of the underlying SPD manifold. In this paper, we propose a geometry-aware SPD

similarity learning (SPDSL) framework to learn discriminative SPD features by

directly pursuing manifold-manifold transformation matrix of column full-rank.

Specifically, by exploiting the Riemannian geometry of the manifold of

fixed-rank Positive Semidefinite (PSD) matrices, we present a new solution to

reduce optimizing over the space of column full-rank transformation matrices to

optimizing on the PSD manifold which has a well-established Riemannian

structure. Under this solution, we exploit a new supervised SPD similarity

learning technique to learn the transformation by regressing the similarities

of selected SPD data pairs to their ground-truth similarities on the target SPD

manifold. To optimize the proposed objective function, we further derive an

algorithm on the PSD manifold. Evaluations on three visual classification tasks

show the advantages of the proposed approach over the existing SPD-based

discriminant learning methods.

06 Apr 2025

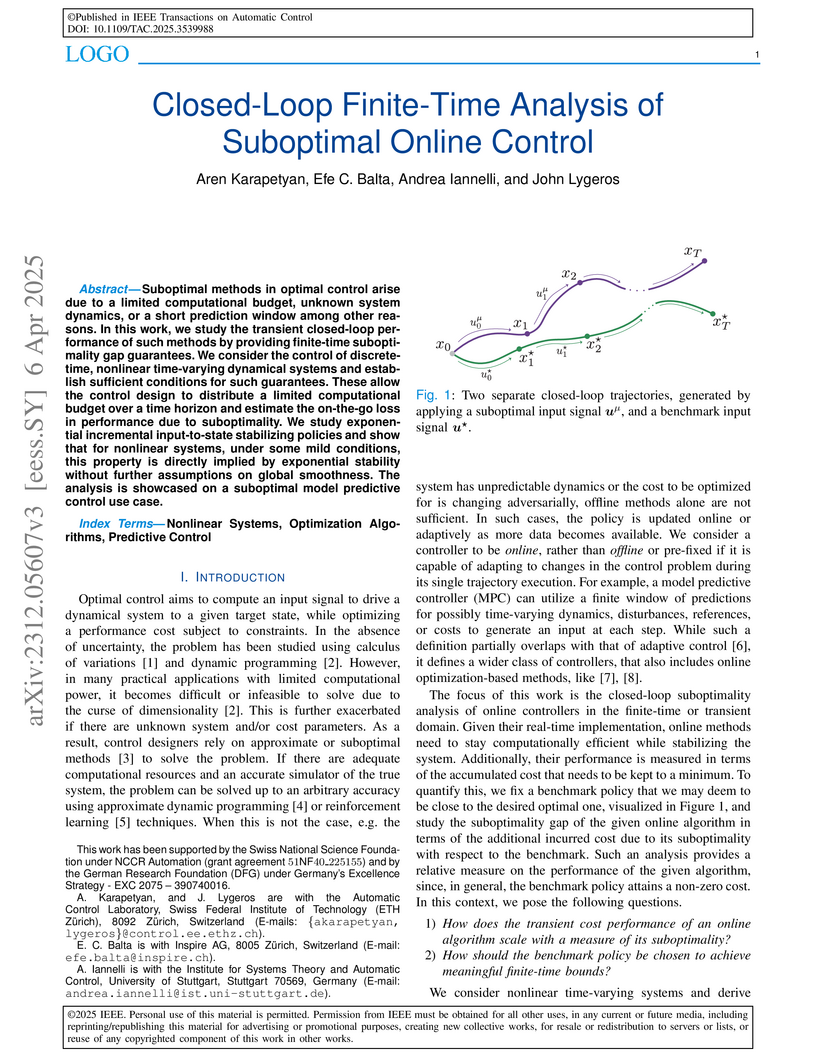

Suboptimal methods in optimal control arise due to a limited computational

budget, unknown system dynamics, or a short prediction window among other

reasons. Although these methods are ubiquitous, their transient performance

remains relatively unstudied. We consider the control of discrete-time,

nonlinear time-varying dynamical systems and establish sufficient conditions to

analyze the finite-time closed-loop performance of such methods in terms of the

additional cost incurred due to suboptimality. Finite-time guarantees allow the

control design to distribute a limited computational budget over a time horizon

and estimate the on-the-go loss in performance due to suboptimality. We study

exponential incremental input-to-state stabilizing policies and show that for

nonlinear systems, under some mild conditions, this property is directly

implied by exponential stability without further assumptions on global

smoothness. The analysis is showcased on a suboptimal model predictive control

use case.

Fast, gradient-based structural optimization has long been limited to a highly restricted subset of problems -- namely, density-based compliance minimization -- for which gradients can be analytically derived. For other objective functions, constraints, and design parameterizations, computing gradients has remained inaccessible, requiring the use of derivative-free algorithms that scale poorly with problem size. This has restricted the applicability of optimization to abstracted and academic problems, and has limited the uptake of these potentially impactful methods in practice. In this paper, we bridge the gap between computational efficiency and the freedom of problem formulation through a differentiable analysis framework designed for general structural optimization. We achieve this through leveraging Automatic Differentiation (AD) to manage the complex computational graph of structural analysis programs, and implementing specific derivation rules for performance critical functions along this graph. This paper provides a complete overview of gradient computation for arbitrary structural design objectives, identifies the barriers to their practical use, and derives key intermediate derivative operations that resolves these bottlenecks. Our framework is then tested against a series of structural design problems of increasing complexity: two highly constrained minimum volume problem, a multi-stage shape and section design problem, and an embodied carbon minimization problem. We benchmark our framework against other common optimization approaches, and show that our method outperforms others in terms of speed, stability, and solution quality.

We propose a framework for resilient autonomous navigation in perceptually

challenging unknown environments with mobility-stressing elements such as

uneven surfaces with rocks and boulders, steep slopes, negative obstacles like

cliffs and holes, and narrow passages. Environments are GPS-denied and

perceptually-degraded with variable lighting from dark to lit and obscurants

(dust, fog, smoke). Lack of prior maps and degraded communication eliminates

the possibility of prior or off-board computation or operator intervention.

This necessitates real-time on-board computation using noisy sensor data. To

address these challenges, we propose a resilient architecture that exploits

redundancy and heterogeneity in sensing modalities. Further resilience is

achieved by triggering recovery behaviors upon failure. We propose a fast

settling algorithm to generate robust multi-fidelity traversability estimates

in real-time. The proposed approach was deployed on multiple physical systems

including skid-steer and tracked robots, a high-speed RC car and legged robots,

as a part of Team CoSTAR's effort to the DARPA Subterranean Challenge, where

the team won 2nd and 1st place in the Tunnel and Urban Circuits, respectively.

A finite horizon optimal tracking problem is considered for linear dynamical systems subject to parametric uncertainties in the state-space matrices and exogenous disturbances. A suboptimal solution is proposed using a model predictive control (MPC) based explicit dual control approach which enables active uncertainty learning. A novel algorithm for the design of robustly invariant online terminal sets and terminal controllers is presented. Set membership identification is used to update the parameter uncertainty online. A predicted worst-case cost is used in the MPC optimization problem to model the dual effect of the control input. The cost-to-go is estimated using contractivity of the proposed terminal set and the remaining time horizon, so that the optimizer can estimate future benefits of exploration. The proposed dual control algorithm ensures robust constraint satisfaction and recursive feasibility, and navigates the exploration-exploitation trade-off using a robust performance metric.

There are no more papers matching your filters at the moment.