Tokyo Medical and Dental University

29 Aug 2025

Over the past decade, techniques enabling bidirectional modulation of neuronal activity with single cell precision have rapidly advanced in the form of two-photon optogenetic stimulation. Unlike conventional electrophysiological approaches or one-photon optogenetics, which inevitably activate many neurons surrounding the target, two-photon optogenetics can drive hundreds of specifically targeted neurons simultaneously, with stimulation patterns that can be flexibly and rapidly reconfigured. In this review, we trace the development of two-photon optogenetic stimulation, focusing on its progression toward implementations in large field of view two-photon microscopes capable of targeted multi neuron control. We highlight three principal strategies: spiral scanning, temporal focusing, and three-dimensional computer-generated holography (3D CGH), along with their combinations, which together provide powerful tools for causal interrogation of neural circuits and behavior. Finally, we discuss the integration of these optical technologies into brain machine interfaces (BMIs), emphasizing both their transformative potential and the technical challenges that must be addressed to realize their broader impact.

The successful reconstruction of perceptual experiences from human brain activity has provided insights into the neural representations of sensory experiences. However, reconstructing arbitrary sounds has been avoided due to the complexity of temporal sequences in sounds and the limited resolution of neuroimaging modalities. To overcome these challenges, leveraging the hierarchical nature of brain auditory processing could provide a path toward reconstructing arbitrary sounds. Previous studies have indicated a hierarchical homology between the human auditory system and deep neural network (DNN) models. Furthermore, advancements in audio-generative models enable to transform compressed representations back into high-resolution sounds. In this study, we introduce a novel sound reconstruction method that combines brain decoding of auditory features with an audio-generative model. Using fMRI responses to natural sounds, we found that the hierarchical sound features of a DNN model could be better decoded than spectrotemporal features. We then reconstructed the sound using an audio transformer that disentangled compressed temporal information in the decoded DNN features. Our method shows unconstrained sounds reconstruction capturing sound perceptual contents and quality and generalizability by reconstructing sound categories not included in the training dataset. Reconstructions from different auditory regions remain similar to actual sounds, highlighting the distributed nature of auditory representations. To see whether the reconstructions mirrored actual subjective perceptual experiences, we performed an experiment involving selective auditory attention to one of overlapping sounds. The results tended to resemble the attended sound than the unattended. These findings demonstrate that our proposed model provides a means to externalize experienced auditory contents from human brain activity.

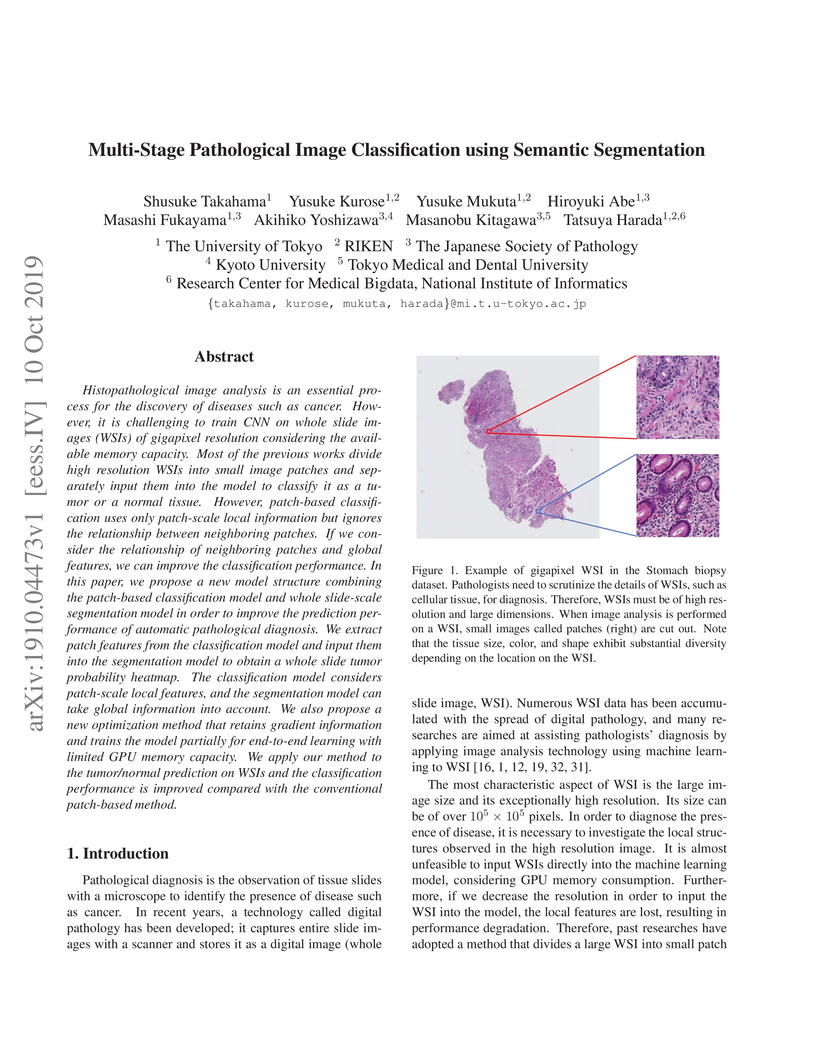

Researchers at The University of Tokyo and RIKEN developed a multi-stage approach for Whole Slide Image analysis, combining patch-based feature extraction with whole-slide semantic segmentation. Their method, including a memory-efficient end-to-end learning strategy, achieves superior diagnostic accuracy, reaching up to 99.34% PR-AUC on stomach biopsy datasets and consistently outperforming traditional patch-based methods on public datasets like Camelyon16 and Camelyon17.

In real-world clinical practice, overlooking unanticipated findings can result in serious consequences. However, supervised learning, which is the foundation for the current success of deep learning, only encourages models to identify abnormalities that are defined in datasets in advance. Therefore, abnormality detection must be implemented in medical images that are not limited to a specific disease category. In this study, we demonstrate an unsupervised learning framework for pixel-wise abnormality detection in brain magnetic resonance imaging captured from a patient population with metastatic brain tumor. Our concept is as follows: If an image reconstruction network can faithfully reproduce the global features of normal anatomy, then the abnormal lesions in unseen images can be identified based on the local difference from those reconstructed as normal by a discriminative network. Both networks are trained on a dataset comprising only normal images without labels. In addition, we devise a metric to evaluate the anatomical fidelity of the reconstructed images and confirm that the overall detection performance is improved when the image reconstruction network achieves a higher score. For evaluation, clinically significant abnormalities are comprehensively segmented. The results show that the area under the receiver operating characteristics curve values for metastatic brain tumors, extracranial metastatic tumors, postoperative cavities, and structural changes are 0.78, 0.61, 0.91, and 0.60, respectively.

The dynamic time warping (DTW) is a widely-used method that allows us to

efficiently compare two time series that can vary in speed. Given two strings

A and B of respective lengths m and n, there is a fundamental dynamic

programming algorithm that computes the DTW distance for A and B together

with an optimal alignment in Θ(mn) time and space. In this paper, we

tackle the problem of interactive computation of the DTW distance for dynamic

strings, denoted D2TW, where character-wise edit operation

(insertion, deletion, substitution) can be performed at an arbitrary position

of the strings. Let M and N be the sizes of the run-length encoding (RLE)

of A and B, respectively. We present an algorithm for D2TW that

occupies Θ(mN+nM) space and uses $O(m+n+\#_{\mathrm{chg}}) \subseteq

O(mN + nM)timetoupdateacompactdifferentialrepresentation\mathit{DS}$

of the DP table per edit operation, where #chg denotes the

number of cells in DS whose values change after the edit operation.

Our method is at least as efficient as the algorithm recently proposed by

Froese et al. running in Θ(mN+nM) time, and is faster when

#chg is smaller than O(mN+nM) which, as our preliminary

experiments suggest, is likely to be the case in the majority of instances.

24 Feb 2022

We study microwave response of a Josephson parametric oscillator consisting

of a superconducting transmission-line resonator with an embedded dc-SQUID. The

dc-SQUID allows to control the magnitude of a Kerr nonlinearity over the ranges

where it is smaller or larger than the photon loss rate. Spectroscopy

measurements reveal the change of the microwave response from a classical

Duffing oscillator to a Kerr parametric oscillator in a single device. In the

single-photon Kerr regime, we observe parametric oscillations with a

well-defined phase of either 0 or π, whose probability can be controlled

by an externally injected signal.

25 Aug 2017

A superconducting qubit in the strong dispersive regime of a circuit quantum

electrodynamics system is a powerful probe for microwave photons in a cavity

mode. In this regime, a qubit spectrum is split into multiple peaks, with each

peak corresponding to an individual photon number in the cavity (discrete ac

Stark shift). Here, we measure the qubit spectrum in the cavity that is driven

continuously with a squeezed vacuum field generated by a Josephson parametric

amplifier. By fitting the qubit spectrum with a model which takes into account

the finite qubit excitation power, the photon number distribution, which is

dissimilar from the apparent peak area ratio in the spectrum, is determined.

The photon number distribution shows the even-odd photon number oscillation and

quantitatively fulfills Klyshko's criterion for the nonclassicality.

As nowadays Machine Learning (ML) techniques are generating huge data

collections, the problem of how to efficiently engineer their storage and

operations is becoming of paramount importance. In this article we propose a

new lossless compression scheme for real-valued matrices which achieves

efficient performance in terms of compression ratio and time for linear-algebra

operations. Experiments show that, as a compressor, our tool is clearly

superior to gzip and it is usually within 20% of xz in terms of compression

ratio. In addition, our compressed format supports matrix-vector

multiplications in time and space proportional to the size of the compressed

representation, unlike gzip and xz that require the full decompression of the

compressed matrix. To our knowledge our lossless compressor is the first one

achieving time and space complexities which match the theoretical limit

expressed by the k-th order statistical entropy of the input.

To achieve further time/space reductions, we propose column-reordering

algorithms hinging on a novel column-similarity score. Our experiments on

various data sets of ML matrices show that, with a modest preprocessing time,

our column reordering can yield a further reduction of up to 16% in the peak

memory usage during matrix-vector multiplication.

Finally, we compare our proposal against the state-of-the-art Compressed

Linear Algebra (CLA) approach showing that ours runs always at least twice

faster (in a multi-thread setting) and achieves better compressed space

occupancy for most of the tested data sets. This experimentally confirms the

provably effective theoretical bounds we show for our compressed-matrix

approach.

It is well known that quantum tunneling can be described by instantons in the imaginary-time path integral formalism. However, its description in the real-time path integral formalism has been elusive. Here we establish a statement that quantum tunneling can be characterized in general by the contribution of complex saddle points, which can be identified by using the Picard-Lefschetz theory. We demonstrate this explicitly by performing Monte Carlo simulations of simple quantum mechanical systems, overcoming the sign problem by the generalized Lefschetz thimble method. We confirm numerically that the contribution of complex saddle points manifests itself in a complex ``weak value'' of the Hermitian coordinate operator x^ evaluated at time t, which is a physical quantity that can be measured by experiments in principle. We also discuss the transition to classical dynamics based on our picture.

09 Mar 2020

The rapid development in designs and fabrication techniques of

superconducting qubits has helped making coherence times of qubits longer. In

the near future, however, the radiative decay of a qubit into its control line

will be a fundamental limitation, imposing a trade-off between fast control and

long lifetime of the qubit. In this work, we successfully break this trade-off

by strongly coupling another superconducting qubit along the control line. This

second qubit, which we call a Josephson quantum filter (JQF), prevents the

qubit from emitting microwave photons and thus suppresses its relaxation, while

faithfully transmitting large-amplitude control microwave pulses due to the

saturation of the quantum filter, enabling fast qubit control. We observe an

improvement of the qubit relaxation time without a reduction of the Rabi

frequency. This device could potentially help in the realization of a

large-scale superconducting quantum information processor in terms of the

heating of the qubit environments and the crosstalk between qubits.

Pathological image analysis is an important process for detecting

abnormalities such as cancer from cell images. However, since the image size is

generally very large, the cost of providing detailed annotations is high, which

makes it difficult to apply machine learning techniques. One way to improve the

performance of identifying abnormalities while keeping the annotation cost low

is to use only labels for each slide, or to use information from another

dataset that has already been labeled. However, such weak supervisory

information often does not provide sufficient performance. In this paper, we

proposed a new task setting to improve the classification performance of the

target dataset without increasing annotation costs. And to solve this problem,

we propose a pipeline that uses multiple instance learning (MIL) and domain

adaptation (DA) methods. Furthermore, in order to combine the supervisory

information of both methods effectively, we propose a method to create

pseudo-labels with high confidence. We conducted experiments on the

pathological image dataset we created for this study and showed that the

proposed method significantly improves the classification performance compared

to existing methods.

The directed acyclic word graph (DAWG) of a string y of length n is the

smallest (partial) DFA which recognizes all suffixes of y with only O(n)

nodes and edges. In this paper, we show how to construct the DAWG for the input

string y from the suffix tree for y, in O(n) time for integer alphabets

of polynomial size in n. In so doing, we first describe a folklore algorithm

which, given the suffix tree for y, constructs the DAWG for the reversed

string of y in O(n) time. Then, we present our algorithm that builds the

DAWG for y in O(n) time for integer alphabets, from the suffix tree for

y. We also show that a straightforward modification to our DAWG construction

algorithm leads to the first O(n)-time algorithm for constructing the affix

tree of a given string y over an integer alphabet. Affix trees are a text

indexing structure supporting bidirectional pattern searches. We then discuss

how our constructions can lead to linear-time algorithms for building other

text indexing structures, such as linear-size suffix tries and symmetric CDAWGs

in linear time in the case of integer alphabets. As a further application to

our O(n)-time DAWG construction algorithm, we show that the set

MAW(y) of all minimal absent words (MAWs) of y can be computed in

optimal, input- and output-sensitive O(n+∣MAW(y)∣) time and O(n)

working space for integer alphabets.

A string w is called a minimal absent word (MAW) for a string S if w

does not occur as a substring in S and all proper substrings of w occur in

S. MAWs are well-studied combinatorial string objects that have potential

applications in areas including bioinformatics, musicology, and data

compression. In this paper, we generalize the notion of MAWs to a set

S={S1,…,Sk} of multiple strings. We first describe our

solution to the case of k=2 strings, and show how to compute the set

M of MAWs in optimal O(n+∣M∣) time and with O(n)

working space, where n denotes the total length of the strings in

S. We then move on to the general case of k>2 strings, and show

how to compute the set M of MAWs in $O(n \lceil k / \log n \rceil +

|\mathsf{M}|)timeandwithO(n (k + \log n))$ bits of working space, in the

word RAM model with machine word size ω=logn. The latter algorithm

runs in optimal O(n+∣M∣) time for k=O(logn).

Progressive cognitive decline spanning across decades is characteristic of Alzheimer's disease (AD). Various predictive models have been designed to realize its early onset and study the long-term trajectories of cognitive test scores across populations of interest. Research efforts have been geared towards superimposing patients' cognitive test scores with the long-term trajectory denoting gradual cognitive decline, while considering the heterogeneity of AD. Multiple trajectories representing cognitive assessment for the long-term have been developed based on various parameters, highlighting the importance of classifying several groups based on disease progression patterns. In this study, a novel method capable of self-organized prediction, classification, and the overlay of long-term cognitive trajectories based on short-term individual data was developed, based on statistical and differential equation modeling. We validated the predictive accuracy of the proposed method for the long-term trajectory of cognitive test score results on two cohorts: the Alzheimer's Disease Neuroimaging Initiative (ADNI) study and the Japanese ADNI study. We also presented two practical illustrations of the simultaneous evaluation of risk factor associated with both the onset and the longitudinal progression of AD, and an innovative randomized controlled trial design for AD that standardizes the heterogeneity of patients enrolled in a clinical trial. These resources would improve the power of statistical hypothesis testing and help evaluate the therapeutic effect. The application of predicting the trajectory of longitudinal disease progression goes beyond AD, and is especially relevant for progressive and neurodegenerative disorders.

Lyndon words are extensively studied in combinatorics on words -- they play a

crucial role on upper bounding the number of runs a word can have [Bannai+,

SIAM J. Comput.'17]. We can determine Lyndon words, factorize a word into

Lyndon words in lexicographically non-increasing order, and find the Lyndon

rotation of a word, all in linear time within constant additional working

space. A recent research interest emerged from the question of what happens

when we change the lexicographic order, which is at the heart of the definition

of Lyndon words. In particular, the alternating order, where the order of all

odd positions becomes reversed, has been recently proposed. While a Lyndon word

is, among all its cyclic rotations, the smallest one with respect to the

lexicographic order, a Galois word exhibits the same property by exchanging the

lexicographic order with the alternating order. Unfortunately, this exchange

has a large impact on the properties Galois words exhibit, which makes it a

nontrivial task to translate results from Lyndon words to Galois words. Up

until now, it has only been conjectured that linear-time algorithms with

constant additional working space in the spirit of Duval's algorithm are

possible for computing the Galois factorization or the Galois rotation.

Here, we affirm this conjecture as follows. Given a word T of length n,

we can determine whether T is a Galois word, in O(n) time with constant

additional working space. Within the same complexities, we can also determine

the Galois rotation of T, and compute the Galois factorization of T online.

The last result settles Open Problem~1 in [Dolce et al., TCS 2019] for Galois

words.

Indexing a set of strings for prefix search or membership queries is a fundamental task with many applications such as information retrieval or database systems. A classic abstract data type for modelling such an index is a trie. Due to the fundamental nature of this problem, it has sparked much interest, leading to a variety of trie implementations with different characteristics. A trie implementation that has been well-used in practice is the double-array (trie) consisting of merely two integer arrays. While a traversal takes constant time per node visit, the needed space consumption in computer words can be as large as the product of the number of nodes and the alphabet size. Despite that several heuristics have been proposed on lowering the space requirements, we are unaware of any theoretical guarantees.

In this paper, we study the decision problem whether there exists a double-array of a given size. To this end, we first draw a connection to the sparse matrix compression problem, which makes our problem NP-complete for alphabet sizes linear to the number of nodes. We further propose a reduction from the restricted directed Hamiltonian path problem, leading to NP-completeness even for logarithmic-sized alphabets.

We introduce height-bounded LZ encodings (LZHB), a new family of compressed

representations that are variants of Lempel-Ziv parsings with a focus on

bounding the worst-case access time to arbitrary positions in the text directly

via the compressed representation. An LZ-like encoding is a partitioning of the

string into phrases of length 1 which can be encoded literally, or phrases of

length at least 2 which have a previous occurrence in the string and can be

encoded by its position and length. An LZ-like encoding induces an implicit

referencing forest on the set of positions of the string. An LZHB encoding is

an LZ-like encoding where the height of the implicit referencing forest is

bounded. An LZHB encoding with height constraint h allows access to an

arbitrary position of the underlying text using O(h) predecessor queries.

While computing the smallest LZHB encoding efficiently seems to be difficult

[Cicalese \& Ugazio 2024, arxiv], we give the first linear time algorithm for

strings over a constant size alphabet that computes the greedy LZHB encoding,

i.e., the string is processed from beginning to end, and the longest prefix of

the remaining string that can satisfy the height constraint is taken as the

next phrase. Our algorithms significantly improve both theoretically and

practically, the very recently and independently proposed algorithms by

Lipt\'ak et al. (arxiv, to appear at CPM 2024). We also analyze the size of

height bounded LZ encodings in the context of repetitiveness measures, and show

for some constant c, the size zHB of the optimal LZHB encoding with

height bound clogn is O(grl), where grl is the size of the

smallest run-length grammar. We also show zHB=o(grl) for some family

of strings, making zHB one of the smallest known repetitiveness measures

for which O(polylogn) time access is possible using linear space.

We propose an experiment for constructing a spatial cat state of a suspended mirror with an order of O(mg). The mirror is set at the center of two mirrors, creating two optical cavities and optical springs. The induced potential exhibits a double-well shape, and its deformation resembles a second-order phase transition as a function of laser power. We estimate an adiabatic condition for the ground state wave function to metamorphose from a localized state at the origin to a spatial cat state within the double-well potential, within a coherence time determined by mechanical and environmental noises. Our estimation suggests that such a construction is possible if we can provide an ultra-high finesse optical cavity with F=2.5×105 and a length of 0.3 cm, along with a shot-noise-limited laser at 7.9 nW. The necessary mechanical coherence time is approximately one second.

We investigate the compression sensitivity [Akagi et al., 2023] of lex-parse [Navarro et al., 2021] for two operations: (1) single character edit and (2) modification of the alphabet ordering, and give tight upper and lower bounds for both operations. For both lower bounds, we use the family of Fibonacci words. For the bounds on edit operations, our analysis makes heavy use of properties of the Lyndon factorization of Fibonacci words to characterize the structure of lex-parse.

We investigate properties of the bijective Burrows-Wheeler transform (BBWT). We show that for any string w, a bidirectional macro scheme of size O(rB) can be induced from the BBWT of w, where rB is the number of maximal character runs in the BBWT. We also show that rB=O(zlog2n), where n is the length of w and z is the number of Lempel-Ziv 77 factors of w. Then, we show a separation between BBWT and BWT by a family of strings with rB=Ω(logn) but having only r=2 maximal character runs in the standard Burrows--Wheeler transform (BWT). However, we observe that the smallest rB among all cyclic rotations of w is always at most r. While an o(n2) algorithm for computing an optimal rotation giving the smallest rB is still open, we show how to compute the Lyndon factorizations -- a component for computing BBWT -- of all cyclic rotations in O(n) time. Furthermore, we conjecture that we can transform two strings having the same Parikh vector to each other by BBWT and rotation operations, and prove this conjecture for the case of binary alphabets and permutations.

There are no more papers matching your filters at the moment.