University at Buffalo SUNY

FlashAttention, developed at Stanford's Hazy Research lab, introduces an exact attention algorithm that optimizes memory access patterns to mitigate the IO bottleneck on GPUs. This method achieves substantial speedups and reduces memory footprint for Transformer models, enabling processing of significantly longer sequence lengths.

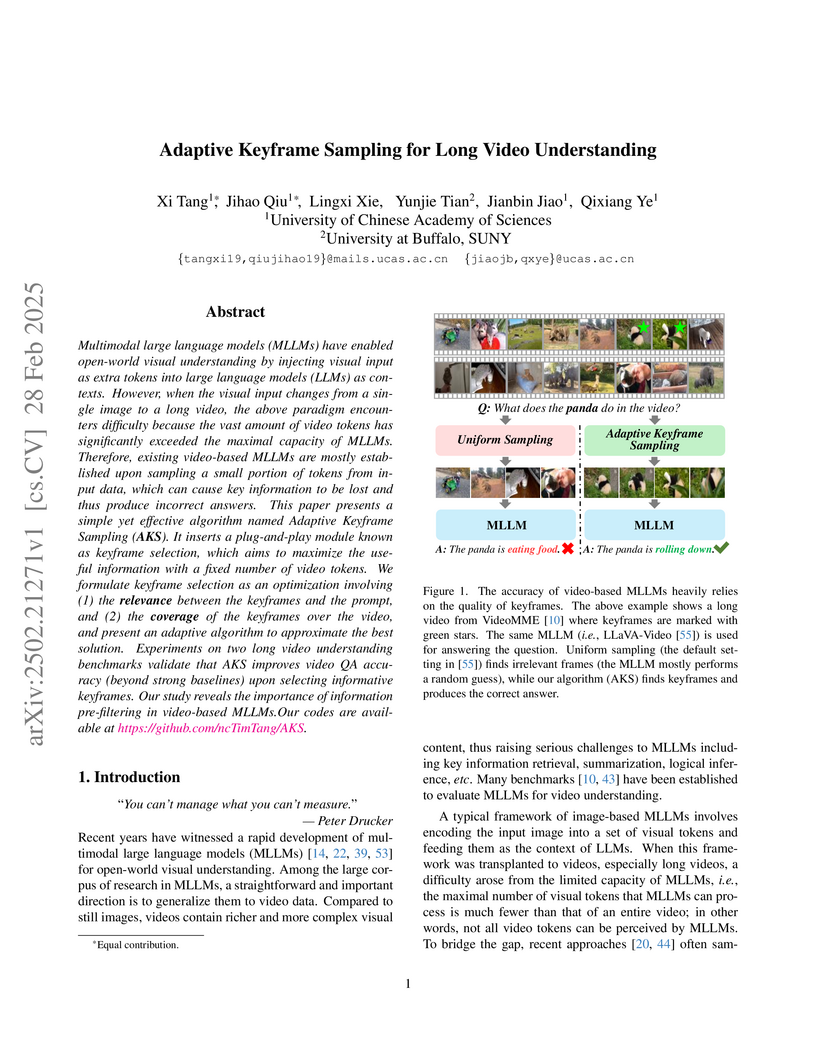

Researchers from the University of Chinese Academy of Sciences and the University at Buffalo introduce Adaptive Keyframe Sampling (AKS), a plug-and-play method that intelligently selects keyframes from long videos based on relevance and coverage. This approach consistently improves the accuracy of Multimodal Large Language Models on various video understanding tasks.

23 Oct 2020

A new framework called HiPPO (High-order Polynomial Projection Operators) redefines recurrent memory as an optimal online function approximation problem, enabling the derivation of efficient and robust memory update rules. The resulting HiPPO-LegS mechanism achieves state-of-the-art performance on long-range dependency tasks, demonstrates remarkable robustness to varying timescales, and processes data 10 times faster than LSTMs and LMUs.

The paper introduces the Linear State-Space Layer (LSSL), a unified framework that mathematically connects recurrent, convolutional, and continuous-time models through a linear state-space formulation. LSSLs achieve state-of-the-art performance on sequential image classification and healthcare regression, demonstrating particular strength in modeling extremely long sequences (tens of thousands of timesteps) by outperforming previous methods.

Researchers from Stanford University and University at Buffalo introduce H3, a novel State Space Model (SSM) layer, and FlashConv, an efficient training algorithm, to enhance SSMs for large-scale language modeling. The combined approach enabled SSMs to match or surpass Transformer performance, achieving lower perplexity and up to 2.4x faster text generation on various benchmarks, while also demonstrating strong performance on diverse non-text modalities.

Physics Informed Neural Networks (PINNs) often exhibit failure modes in which the PDE residual loss converges while the solution error stays large, a phenomenon traditionally blamed on local optima separated from the true solution by steep loss barriers. We challenge this understanding by demonstrate that the real culprit is insufficient arithmetic precision: with standard FP32, the LBFGS optimizer prematurely satisfies its convergence test, freezing the network in a spurious failure phase. Simply upgrading to FP64 rescues optimization, enabling vanilla PINNs to solve PDEs without any failure modes. These results reframe PINN failure modes as precision induced stalls rather than inescapable local minima and expose a three stage training dynamic unconverged, failure, success whose boundaries shift with numerical precision. Our findings emphasize that rigorous arithmetic precision is the key to dependable PDE solving with neural networks.

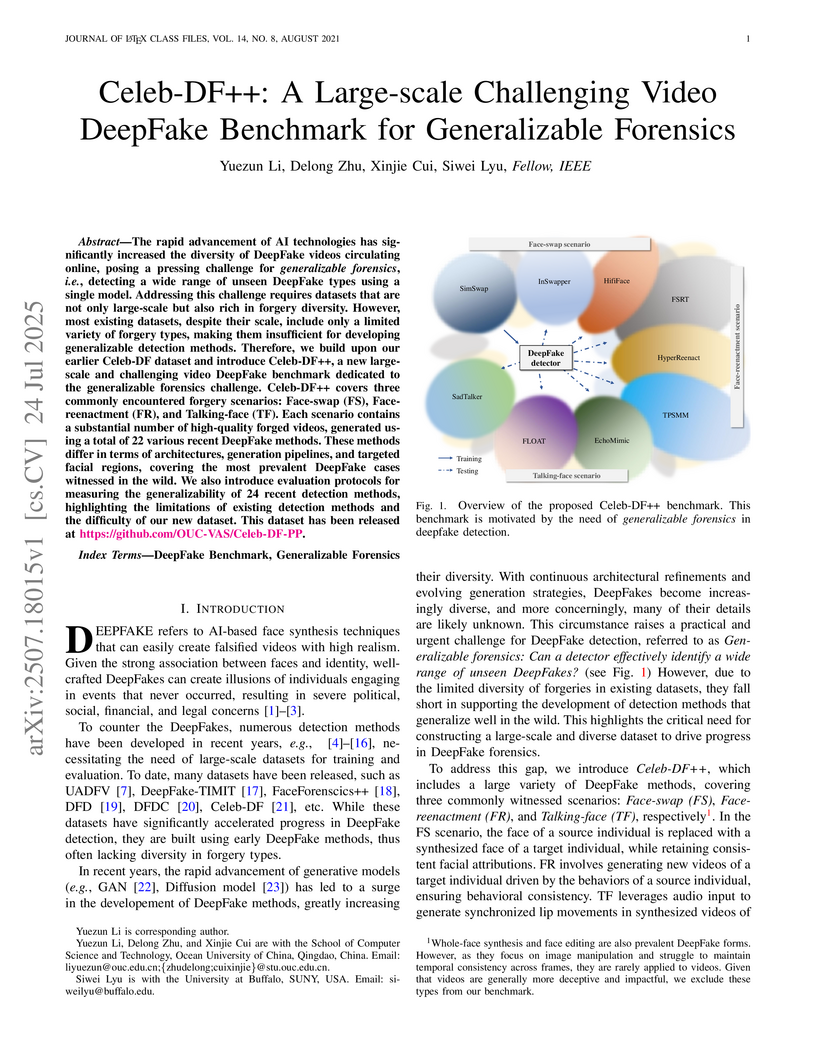

The rapid advancement of AI technologies has significantly increased the diversity of DeepFake videos circulating online, posing a pressing challenge for \textit{generalizable forensics}, \ie, detecting a wide range of unseen DeepFake types using a single model. Addressing this challenge requires datasets that are not only large-scale but also rich in forgery diversity. However, most existing datasets, despite their scale, include only a limited variety of forgery types, making them insufficient for developing generalizable detection methods. Therefore, we build upon our earlier Celeb-DF dataset and introduce {Celeb-DF++}, a new large-scale and challenging video DeepFake benchmark dedicated to the generalizable forensics challenge. Celeb-DF++ covers three commonly encountered forgery scenarios: Face-swap (FS), Face-reenactment (FR), and Talking-face (TF). Each scenario contains a substantial number of high-quality forged videos, generated using a total of 22 various recent DeepFake methods. These methods differ in terms of architectures, generation pipelines, and targeted facial regions, covering the most prevalent DeepFake cases witnessed in the wild. We also introduce evaluation protocols for measuring the generalizability of 24 recent detection methods, highlighting the limitations of existing detection methods and the difficulty of our new dataset.

Monarch matrices are presented as a new class of structured matrices that enable hardware-efficient computation and provide an analytical solution for converting dense models to structured representations. This approach demonstrates 2.0x faster training for models like ViT-B/16 and GPT-2-Medium while maintaining or improving performance across various deep learning and scientific computing benchmarks.

16 Aug 2025

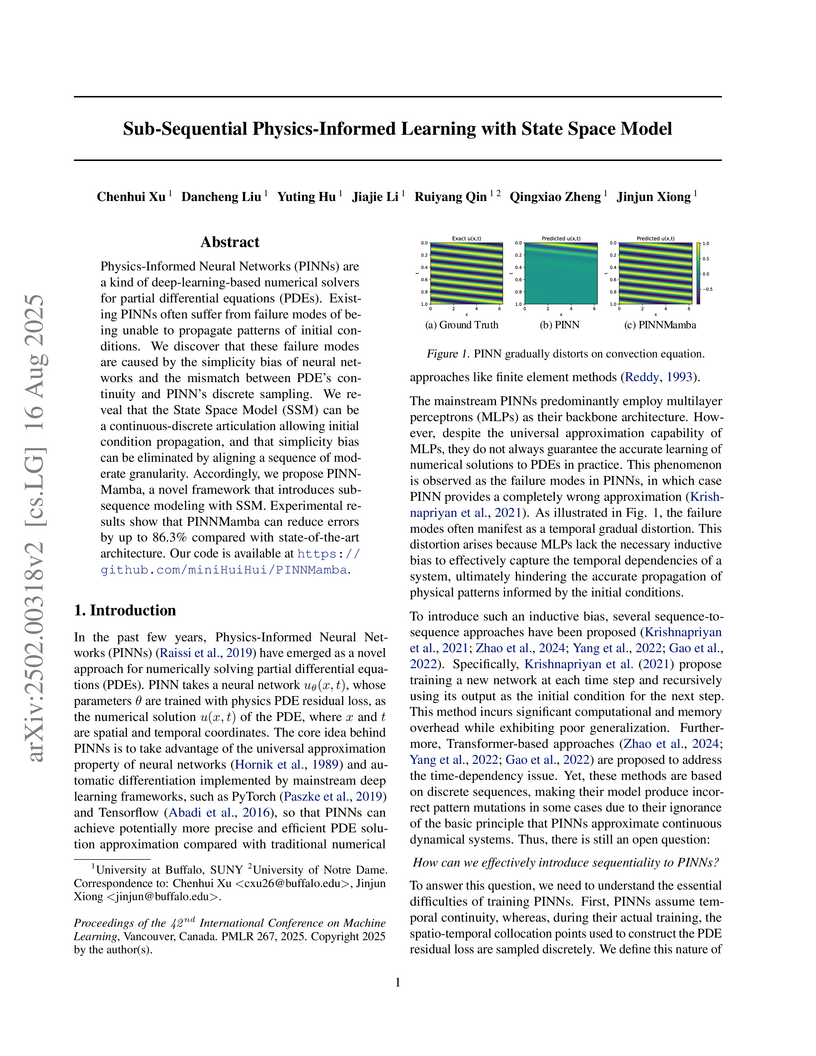

Physics-Informed Neural Networks (PINNs) are a kind of deep-learning-based numerical solvers for partial differential equations (PDEs). Existing PINNs often suffer from failure modes of being unable to propagate patterns of initial conditions. We discover that these failure modes are caused by the simplicity bias of neural networks and the mismatch between PDE's continuity and PINN's discrete sampling. We reveal that the State Space Model (SSM) can be a continuous-discrete articulation allowing initial condition propagation, and that simplicity bias can be eliminated by aligning a sequence of moderate granularity. Accordingly, we propose PINNMamba, a novel framework that introduces sub-sequence modeling with SSM. Experimental results show that PINNMamba can reduce errors by up to 86.3\% compared with state-of-the-art architecture. Our code is available at this https URL.

Federated learning (FL) is vulnerable to backdoor attacks, where adversaries alter model behavior on target classification labels by embedding triggers into data samples. While these attacks have received considerable attention in horizontal FL, they are less understood for vertical FL (VFL), where devices hold different features of the samples, and only the server holds the labels. In this work, we propose a novel backdoor attack on VFL which (i) does not rely on gradient information from the server and (ii) considers potential collusion among multiple adversaries for sample selection and trigger embedding. Our label inference model augments variational autoencoders with metric learning, which adversaries can train locally. A consensus process over the adversary graph topology determines which datapoints to poison. We further propose methods for trigger splitting across the adversaries, with an intensity-based implantation scheme skewing the server towards the trigger. Our convergence analysis reveals the impact of backdoor perturbations on VFL indicated by a stationarity gap for the trained model, which we verify empirically as well. We conduct experiments comparing our attack with recent backdoor VFL approaches, finding that ours obtains significantly higher success rates for the same main task performance despite not using server information. Additionally, our results verify the impact of collusion on attack performance.

The rise of manipulated media has made deepfakes a particularly insidious threat, involving various generative manipulations such as lip-sync modifications, face-swaps, and avatar-driven facial synthesis. Conventional detection methods, which predominantly depend on manually designed phoneme-viseme alignment thresholds, fundamental frame-level consistency checks, or a unimodal detection strategy, inadequately identify modern-day deepfakes generated by advanced generative models such as GANs, diffusion models, and neural rendering techniques. These advanced techniques generate nearly perfect individual frames yet inadvertently create minor temporal discrepancies frequently overlooked by traditional detectors. We present a novel multimodal audio-visual framework, Phoneme-Temporal and Identity-Dynamic Analysis(PIA), incorporating language, dynamic face motion, and facial identification cues to address these limitations. We utilize phoneme sequences, lip geometry data, and advanced facial identity embeddings. This integrated method significantly improves the detection of subtle deepfake alterations by identifying inconsistencies across multiple complementary modalities. Code is available at this https URL

This work investigates the reasoning and planning capabilities of foundation models and their scalability in complex, dynamic environments. We introduce PuzzlePlex, a benchmark designed to assess these capabilities through a diverse set of puzzles. PuzzlePlex consists of 15 types of puzzles, including deterministic and stochastic games of varying difficulty, as well as single-player and two-player scenarios. The PuzzlePlex framework provides a comprehensive environment for each game, and supports extensibility to generate more challenging instances as foundation models evolve. Additionally, we implement customized game-playing strategies for comparison. Building on this benchmark, we develop fine-grained metrics to measure performance and conduct an in-depth analysis of frontier foundation models across two settings: instruction-based and code-based. Furthermore, we systematically investigate their scaling limits. Our findings show that reasoning models outperform others in instruction-based settings, while code-based execution presents greater challenges but offers a scalable and efficient alternative. PuzzlePlex enables targeted evaluation and guides future improvements in reasoning, planning, and generalization for foundation models.

Invisible watermarking of AI-generated images can help with copyright

protection, enabling detection and identification of AI-generated media. In

this work, we present a novel approach to watermark images of T2I Latent

Diffusion Models (LDMs). By only fine-tuning text token embeddings W∗, we

enable watermarking in selected objects or parts of the image, offering greater

flexibility compared to traditional full-image watermarking. Our method

leverages the text encoder's compatibility across various LDMs, allowing

plug-and-play integration for different LDMs. Moreover, introducing the

watermark early in the encoding stage improves robustness to adversarial

perturbations in later stages of the pipeline. Our approach achieves 99% bit

accuracy (48 bits) with a 105× reduction in model parameters,

enabling efficient watermarking.

12 Nov 2025

The development of large-scale quantum processors benefits from superconducting qubits that can operate at elevated temperatures and be fabricated with scalable, foundry-compatible processes. Atomic layer deposition (ALD) is increasingly being adopted as an industrial standard for thin-film growth, particularly in applications requiring precise control over layer thickness and composition. Here, we report superconducting qubits based on NbN/AlN/NbN trilayers deposited entirely by ALD. By varying the number of ALD cycles used to form the AlN barrier, we achieve Josephson tunneling through barriers of different thicknesses, with critical current density spanning seven orders of magnitude, demonstrating the uniformity and versatility of the process. Owing to the high critical temperature of NbN, transmon qubits based on these all-nitride trilayers exhibit microsecond-scale relaxation times, even at temperatures above 300 mK. These results establish ALD as a viable low-temperature deposition technique for superconducting quantum circuits and position all-nitride ALD qubits as a promising platform for operation at elevated temperatures.

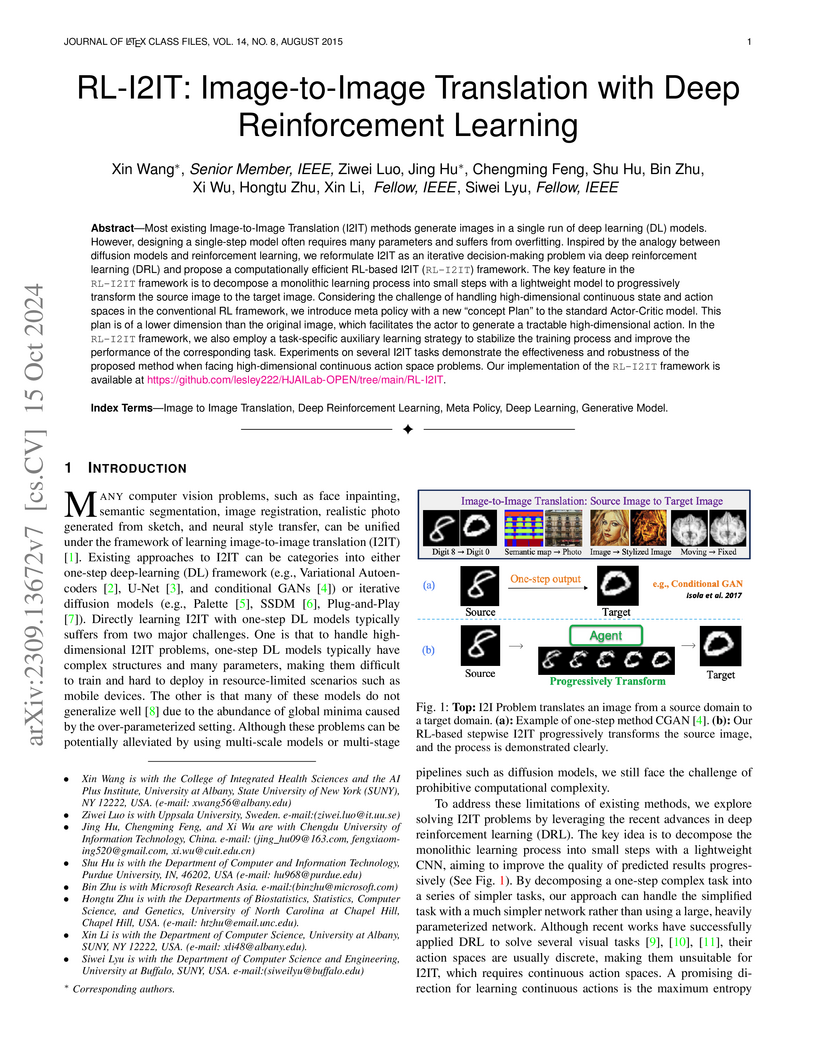

Most existing Image-to-Image Translation (I2IT) methods generate images in a single run of a deep learning (DL) model. However, designing such a single-step model is always challenging, requiring a huge number of parameters and easily falling into bad global minimums and overfitting. In this work, we reformulate I2IT as a step-wise decision-making problem via deep reinforcement learning (DRL) and propose a novel framework that performs RL-based I2IT (RL-I2IT). The key feature in the RL-I2IT framework is to decompose a monolithic learning process into small steps with a lightweight model to progressively transform a source image successively to a target image. Considering that it is challenging to handle high dimensional continuous state and action spaces in the conventional RL framework, we introduce meta policy with a new concept Plan to the standard Actor-Critic model, which is of a lower dimension than the original image and can facilitate the actor to generate a tractable high dimensional action. In the RL-I2IT framework, we also employ a task-specific auxiliary learning strategy to stabilize the training process and improve the performance of the corresponding task. Experiments on several I2IT tasks demonstrate the effectiveness and robustness of the proposed method when facing high-dimensional continuous action space problems. Our implementation of the RL-I2IT framework is available at this https URL.

State space models (SSMs) have high performance on long sequence modeling but

require sophisticated initialization techniques and specialized implementations

for high quality and runtime performance. We study whether a simple alternative

can match SSMs in performance and efficiency: directly learning long

convolutions over the sequence. We find that a key requirement to achieving

high performance is keeping the convolution kernels smooth. We find that simple

interventions--such as squashing the kernel weights--result in smooth kernels

and recover SSM performance on a range of tasks including the long range arena,

image classification, language modeling, and brain data modeling. Next, we

develop FlashButterfly, an IO-aware algorithm to improve the runtime

performance of long convolutions. FlashButterfly appeals to classic Butterfly

decompositions of the convolution to reduce GPU memory IO and increase FLOP

utilization. FlashButterfly speeds up convolutions by 2.2×, and allows

us to train on Path256, a challenging task with sequence length 64K, where we

set state-of-the-art by 29.1 points while training 7.2× faster than

prior work. Lastly, we introduce an extension to FlashButterfly that learns the

coefficients of the Butterfly decomposition, increasing expressivity without

increasing runtime. Using this extension, we outperform a Transformer on

WikiText103 by 0.2 PPL with 30% fewer parameters.

Overparameterized neural networks generalize well but are expensive to train. Ideally, one would like to reduce their computational cost while retaining their generalization benefits. Sparse model training is a simple and promising approach to achieve this, but there remain challenges as existing methods struggle with accuracy loss, slow training runtime, or difficulty in sparsifying all model components. The core problem is that searching for a sparsity mask over a discrete set of sparse matrices is difficult and expensive. To address this, our main insight is to optimize over a continuous superset of sparse matrices with a fixed structure known as products of butterfly matrices. As butterfly matrices are not hardware efficient, we propose simple variants of butterfly (block and flat) to take advantage of modern hardware. Our method (Pixelated Butterfly) uses a simple fixed sparsity pattern based on flat block butterfly and low-rank matrices to sparsify most network layers (e.g., attention, MLP). We empirically validate that Pixelated Butterfly is 3x faster than butterfly and speeds up training to achieve favorable accuracy--efficiency tradeoffs. On the ImageNet classification and WikiText-103 language modeling tasks, our sparse models train up to 2.5x faster than the dense MLP-Mixer, Vision Transformer, and GPT-2 medium with no drop in accuracy.

20 Dec 2024

Split Learning (SL) is a distributed learning framework renowned for its

privacy-preserving features and minimal computational requirements. Previous

research consistently highlights the potential privacy breaches in SL systems

by server adversaries reconstructing training data. However, these studies

often rely on strong assumptions or compromise system utility to enhance attack

performance. This paper introduces a new semi-honest Data Reconstruction Attack

on SL, named Feature-Oriented Reconstruction Attack (FORA). In contrast to

prior works, FORA relies on limited prior knowledge, specifically that the

server utilizes auxiliary samples from the public without knowing any client's

private information. This allows FORA to conduct the attack stealthily and

achieve robust performance. The key vulnerability exploited by FORA is the

revelation of the model representation preference in the smashed data output by

victim client. FORA constructs a substitute client through feature-level

transfer learning, aiming to closely mimic the victim client's representation

preference. Leveraging this substitute client, the server trains the attack

model to effectively reconstruct private data. Extensive experiments showcase

FORA's superior performance compared to state-of-the-art methods. Furthermore,

the paper systematically evaluates the proposed method's applicability across

diverse settings and advanced defense strategies.

Most federated learning (FL) approaches assume a fixed client set. However, real-world scenarios often involve clients dynamically joining or leaving the system based on their needs or interest in specific tasks. This dynamic setting introduces unique challenges: (1) the optimization objective evolves with the active client set, unlike traditional FL with a static objective; and (2) the current global model may no longer serve as an effective initialization for subsequent rounds, potentially hindering adaptation. To address these challenges, we first provide a convergence analysis under a non-convex loss with a dynamic client set, accounting for factors such as gradient noise, local training iterations, and data heterogeneity. Building on this analysis, we propose a model initialization algorithm that enables rapid adaptation to new client sets whenever clients join or leave the system. Our key idea is to compute a weighted average of previous global models, guided by gradient similarity, to prioritize models trained on data distributions that closely align with the current client set, thereby accelerating recovery from distribution shifts. This plug-and-play algorithm is designed to integrate seamlessly with existing FL methods, offering broad applicability in practice. Experimental results on diverse datasets including both image and text domains, varied label distributions, and multiple FL algorithms demonstrate the effectiveness of the proposed approach across a range of scenarios.

Fine-tuning large language models (LLMs) on resource-constrained clients remains a challenging problem. Recent works have fused low-rank adaptation (LoRA) techniques with federated fine-tuning to mitigate challenges associated with client model sizes and data scarcity. Still, the heterogeneity of resources remains a critical bottleneck: while higher-rank modules generally enhance performance, varying client capabilities constrain LoRA's feasible rank range. Existing approaches attempting to resolve this issue either lack analytical justification or impose additional computational overhead, leaving a wide gap for efficient and theoretically-grounded solutions. To address these challenges, we propose federated sketching LoRA (FSLoRA), which leverages a sketching mechanism to enable clients to selectively update submatrices of global LoRA modules maintained by the server. By adjusting the sketching ratios, which determine the ranks of the submatrices on the clients, FSLoRA flexibly adapts to client-specific communication and computational constraints. We provide a rigorous convergence analysis of FSLoRA that characterizes how the sketching ratios affect the convergence rate. Through comprehensive experiments on multiple datasets and LLM models, we demonstrate FSLoRA's performance improvements compared to various baselines.

There are no more papers matching your filters at the moment.