University of Colorado Denver

21 Nov 2025

Rhombohedral multilayer graphene has recently emerged as a rich platform for studying correlation-driven magnetic, topological and superconducting states. While most experimental efforts have focused on devices with N ≤ 9 layers, the electronic structure of thick rhombohedral graphene features flat-band surface states even in the infinite-layer limit. Here, we use layer-resolved capacitance measurements to directly detect these surface states in N ≈ 13-layer rhombohedral graphene devices. Using electronic transport and local magnetometry, we find that the surface states host a variety of ferromagnetic phases, including both valley-imbalanced quarter metals and broad density regimes in which the system spontaneously spin polarizes. We observe several superconducting states localized to a single surface state. These superconductors appear on the unpolarized side of the density-tuned spin transitions and show strong violations of the Pauli limit, consistent with a dominant attractive interaction in the spin-triplet, valley-singlet pairing channel. In contrast to previous studies of rhombohedral multilayers, however, we find that superconductivity can persist to zero displacement field, where the system is inversion symmetric. Energetic considerations suggest that superconductivity in this regime is best described as two independent surface superconductors coupled via tunneling through the insulating single-crystal graphite bulk.

In many domains, including online education, healthcare, security, and human-computer interaction, facial emotion recognition (FER) is essential. Despite its significance, real-world FER remains difficult because of factors such as variable head poses, occlusions, illumination shifts, and demographic diversity. Engagement detection, which is essential for applications like virtual learning and customer service, is frequently hampered by the FER limitations of many current models. In this article, we propose ExpressNet-MoE, a novel hybrid deep learning model that blends Convolutional Neural Networks (CNNs) with a Mixture of Experts (MoE) framework to overcome these difficulties. Our model dynamically selects the most pertinent expert networks, which aids generalization and gives the model flexibility across a wide variety of datasets. Our model improves emotion recognition accuracy by using multi-scale feature extraction to capture both global and local facial features. ExpressNet-MoE includes multiple CNN-based feature extractors, an MoE module for adaptive feature selection, and a residual network backbone for deep feature learning. To demonstrate the efficacy of the proposed model, we evaluated it on several datasets and compared it with current state-of-the-art methods. Our model achieves accuracies of 74.77% on AffectNet (v7), 72.55% on AffectNet (v8), 84.29% on RAF-DB, and 64.66% on FER-2013. The results show how adaptable our model is and how it may be used to develop end-to-end emotion recognition systems in practical settings. Reproducible code and results are publicly available at this https URL.
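The adaptive expert selection described above follows the generic mixture-of-experts pattern: a learned gate weights the outputs of several expert networks. The sketch below is a minimal NumPy illustration under stated assumptions: linear experts stand in for the CNN-based ones, the gate is a softmax with top-k sparsification, and the names `moe_forward`, `W_gate`, and `expert_weights` are hypothetical. It is not the ExpressNet-MoE implementation.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def moe_forward(x, W_gate, expert_weights, top_k=2):
    """Combine expert outputs with a top-k softmax gate (illustrative only).

    x:              (batch, d_in) input features (e.g. pooled CNN features)
    W_gate:         (d_in, n_experts) gating weights (hypothetical)
    expert_weights: list of (d_in, d_out) matrices, one linear "expert" each
    Returns (output, gate), where gate has shape (batch, n_experts).
    """
    logits = x @ W_gate                                   # (batch, n_experts)
    # Keep only the top_k logits per sample; mask the rest to -inf.
    kth = np.sort(logits, axis=-1)[:, -top_k][:, None]
    masked = np.where(logits >= kth, logits, -np.inf)
    gate = softmax(masked)                                # zero outside top_k
    # Stack expert outputs: (batch, n_experts, d_out).
    outs = np.stack([x @ W for W in expert_weights], axis=1)
    output = np.einsum("be,bed->bd", gate, outs)          # gate-weighted sum
    return output, gate
```

With five experts and `top_k=2`, each sample's output is a convex combination of just two expert outputs, which is what lets different inputs be routed to different specialists.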

08 Oct 2025

Chinese Academy of Sciences; Tata Institute of Fundamental Research; University of North Carolina at Chapel Hill; University of Turku; University of Virginia; Hiroshima University; Istituto Nazionale di Fisica Nucleare; Jagiellonian University; Shanghai Astronomical Observatory; Aryabhatta Research Institute of Observational Sciences (ARIES); West Virginia University; University of Colorado Denver; Nordic Optical Telescope; Czech Technical University; Agenzia Spaziale Italiana; Tuorla Observatory; University of the National Education Commission; University of Zielona Gora; The College of New Jersey; Space Science Data Center; Astrophysical Institute and University Observatory; Astronomical Institute (ASU CAS); FINCA; Hiroshima Astrophysical Science Center; Osaka-Kyoiku University

The 136-year-long optical light curve of OJ 287 is explained by a binary black hole model in which the secondary is in a 12-year orbit around the primary. Impacts of the secondary on the accretion disk of the primary generate a series of optical flares that follow a quasi-Keplerian relativistic mathematical model. The orientation of the binary in space is determined from the behavior of the primary jet. Here we ask how the jet of the secondary black hole projects onto the sky plane. Assuming that the jet is initially perpendicular to the disk and that it is ballistic, we follow its evolution after the Lorentz transformation to the observer's frame. Since the orbital speed of the secondary is of the order of one-tenth of the speed of light, the result is a change in the jet direction by more than a radian during an orbital cycle. We match the theoretical jet line with the recent 12 μas-resolution RadioAstron map of OJ 287 and determine the only free parameter of the problem, the apparent speed of the jet relative to the speed of light. It turns out that the Doppler factor of the jet, δ ∼ 5, is much lower than that of the primary jet. Besides following a unique jet path, the secondary jet is also distinguished from the primary jet by a different spectral shape. The present result on the spectral shape agrees with the huge optical flare of 2021 November 12, which also arose from the secondary jet.

University of Toronto; California Institute of Technology; SLAC National Accelerator Laboratory; Northeastern University; UC Berkeley; Stanford University; Texas A&M University; University of British Columbia; Northwestern University; Southern Methodist University; University of Florida; University of Minnesota; Pacific Northwest National Laboratory; National Institute of Science Education and Research; Fermi National Accelerator Laboratory; TRIUMF; University of South Dakota; Zayed University; Instituto de Física Teórica UAM-CSIC; University of Colorado Denver; Universidad Autónoma de Madrid; SNOLAB; Laurentian University; South Dakota School of Mines and Technology; Institute for Astroparticle Physics (IAP); Universität Heidelberg; Queen's University

We present constraints on low-mass dark matter-electron scattering and absorption interactions using a SuperCDMS high-voltage eV-resolution (HVeV) detector. Data were taken underground in the NEXUS facility located at Fermilab with an overburden of 225 meters of water equivalent. The experiment benefits from minimizing the luminescence from the printed circuit boards in the detector holder used in all previous HVeV studies. A blind analysis of 6.1 g·days of exposure produces exclusion limits on dark matter-electron scattering cross sections for masses as low as 1 MeV/c², as well as on the photon-dark photon mixing parameter and the coupling constant between axion-like particles and electrons, for particles with masses > 1.2 eV/c² probed via absorption processes.

Rhombohedral multilayer graphene hosts a rich landscape of correlated symmetry-broken phases, driven by strong interactions from its flat band edges. Aligning to hexagonal boron nitride (hBN) creates a moiré pattern, leading to recent observations of exotic ground states such as integer and fractional quantum anomalous Hall effects. Here, we show that the moiré effects and resulting correlated phase diagrams are critically influenced by a previously underestimated structural choice: the hBN alignment orientation. This binary parameter distinguishes between configurations where the rhombohedral graphene and hBN lattices are aligned near 0° or 180°, a distinction that arises only because both materials break inversion symmetry. Although the two orientations produce the same moiré wavelength, we find their distinct local stacking configurations result in markedly different moiré potential strengths. Using low-temperature transport and scanning SQUID-on-tip magnetometry, we compare nearly identical devices that differ only in alignment orientation and observe sharply contrasting sequences of symmetry-broken states. Theoretical analysis reveals a simple mechanism based on lattice relaxation and the atomic-scale electronic structure of rhombohedral graphene, supported by detailed modeling. These findings establish hBN alignment orientation as a key control parameter in moiré-engineered graphene systems and provide a framework for interpreting both prior and future experiments.

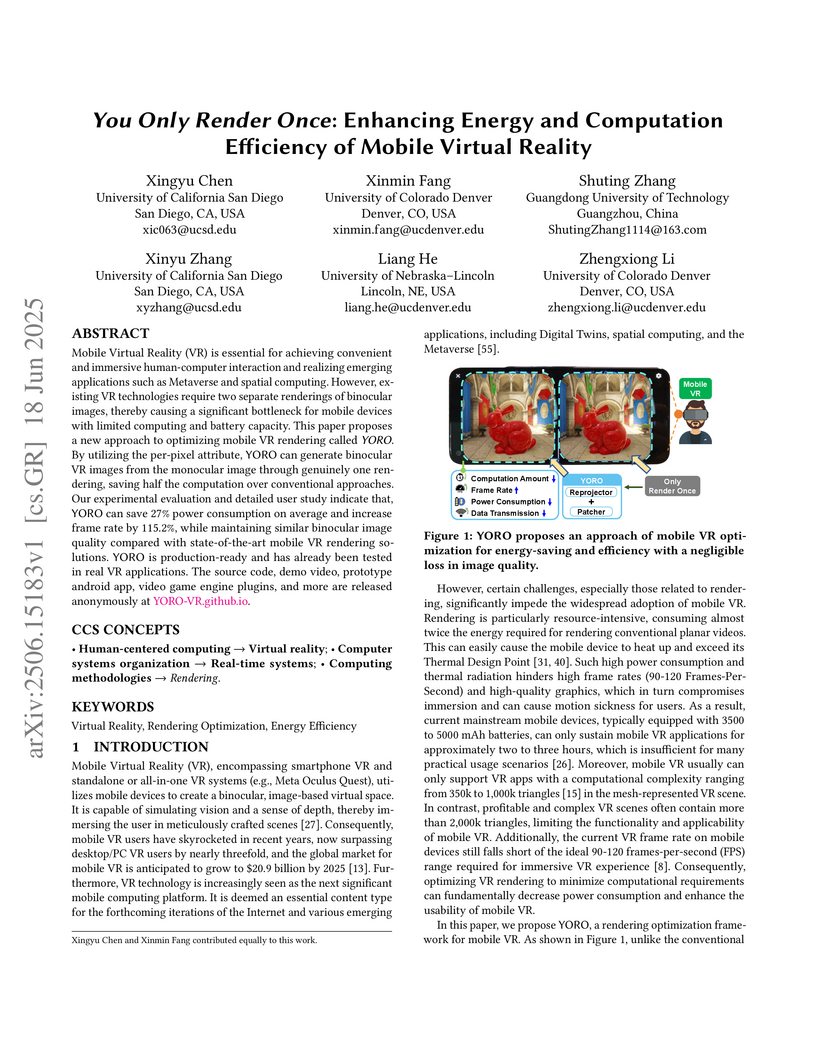

18 Jun 2025

Mobile Virtual Reality (VR) is essential to achieving convenient and immersive human-computer interaction and to realizing emerging applications such as the Metaverse. However, existing VR technologies require two separate renderings of binocular images, causing a significant bottleneck for mobile devices with limited computing capability and power supply. This paper proposes EffVR, a rendering optimization approach for mobile VR. By utilizing per-pixel attributes, EffVR can generate binocular VR images from a monocular image in a single rendering pass, saving half the computation of conventional approaches. Our evaluation indicates that, compared with the state of the art, EffVR can reduce power consumption by 27% on average while achieving high binocular image quality (0.9679 SSIM and 34.09 PSNR) in mobile VR applications. Additionally, EffVR can increase the frame rate by 115.2%. These results corroborate EffVR's superior computation and energy savings, paving the road to sustainable mobile VR. The source code, demo video, Android app, and more are released anonymously at this https URL
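Producing both eye views from one rendered image is commonly done by depth-based reprojection: each pixel is shifted horizontally by a disparity inversely proportional to its depth, with a z-test resolving collisions. The sketch below is a minimal illustration under assumptions not stated in the abstract: the per-pixel attribute is depth, the camera is a pinhole with focal length `focal_px` (in pixels) and eye separation `baseline`, and only a single image row is warped. EffVR's actual pipeline may differ, and `reproject_row` is a hypothetical name.

```python
import numpy as np


def reproject_row(colors, depth, focal_px, baseline, eye):
    """Forward-warp one image row to a left (eye=-1) or right (eye=+1) view.

    colors: (W,) pixel values of the monocular (center) row
    depth:  (W,) positive per-pixel depth
    Returns the warped row and a boolean mask of filled pixels; holes
    (disocclusions) are left unfilled in this sketch.
    """
    width = colors.shape[0]
    out = np.zeros_like(colors)
    zbuf = np.full(width, np.inf)
    filled = np.zeros(width, dtype=bool)
    # Each eye sits baseline/2 from the center camera: half the stereo disparity.
    shift = 0.5 * focal_px * baseline / depth
    for x in range(width):
        # Moving the camera right makes scene points appear further left.
        tx = int(round(x - eye * shift[x]))
        if 0 <= tx < width and depth[x] < zbuf[tx]:
            zbuf[tx] = depth[x]   # z-test: the nearest surface wins
            out[tx] = colors[x]
            filled[tx] = True
    return out, filled
```

The unfilled pixels returned in `filled` mark disocclusions, which a practical single-pass renderer would still need to inpaint or fill from additional per-pixel data.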

29 Apr 2013

Principal angles between subspaces (PABS) (also called canonical angles) serve as a classical tool in mathematics, statistics, and applications, e.g., data mining. Traditionally, PABS are introduced via their cosines. The cosines and sines of PABS are commonly defined using the singular value decomposition. We utilize the same idea for the tangents, i.e., we explicitly construct matrices whose singular values are equal to the tangents of PABS, using several approaches: orthonormal and non-orthonormal bases for subspaces, as well as projectors. Such a construction has applications, e.g., in the analysis of the convergence of subspace iterations for eigenvalue problems.
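As a numerical illustration of the tangent idea, the sketch below takes orthonormal bases X and Y of two p-dimensional subspaces, computes the angles from their cosines (the singular values of XᵀY), and checks that the singular values of (I − XXᵀ) Y (XᵀY)⁻¹ equal the tangents. This is one standard construction for the orthonormal-basis case and assumes no principal angle equals π/2, so that XᵀY is invertible; the paper's non-orthonormal and projector-based constructions are not shown, and `tangents_two_ways` is a hypothetical name.

```python
import numpy as np


def tangents_two_ways(X, Y):
    """Return (tan of PABS from cosines, singular values of the tangent matrix).

    X, Y: (n, p) matrices with orthonormal columns spanning the two subspaces.
    Assumes X.T @ Y is invertible (no principal angle equals pi/2).
    """
    n = X.shape[0]
    cosines = np.linalg.svd(X.T @ Y, compute_uv=False)
    theta = np.arccos(np.clip(cosines, -1.0, 1.0))        # principal angles
    # T = (I - X X^T) Y (X^T Y)^{-1}; its singular values are tan(theta).
    T = (np.eye(n) - X @ X.T) @ Y @ np.linalg.inv(X.T @ Y)
    sv = np.linalg.svd(T, compute_uv=False)
    return np.sort(np.tan(theta)), np.sort(sv)
```

Computing tangents directly from T avoids taking arccos of cosines close to 1, where small angles are poorly resolved; here the arccos route is used only as a cross-check.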

22 Oct 2025

We present a random construction proving that the extreme off-diagonal Ramsey numbers satisfy R(3,k) ≥ (1/2 + o(1)) k²/log k. This bound has been conjectured to be asymptotically tight, and it improves the previously best bound R(3,k) ≥ (1/3 + o(1)) k²/log k. In contrast to all previous constructions achieving the correct order of magnitude, we do not use a nibble argument, and the proof is significantly less technical.

Molecule generation using generative AI is vital for drug discovery, yet class-specific datasets often contain fewer than 100 training examples. While fragment-based models handle limited data better than atom-based approaches, existing heuristic fragmentation limits diversity and misses key fragments. Additionally, model tuning typically requires slow, indirect collaboration between medicinal chemists and AI engineers. We introduce FRAGMENTA, an end-to-end framework for drug lead optimization comprising: 1) a novel generative model that reframes fragmentation as a "vocabulary selection" problem, using dynamic Q-learning to jointly optimize fragmentation and generation; and 2) an agentic AI system that refines objectives via conversational feedback from domain experts. This system removes the AI engineer from the loop and progressively learns domain knowledge to eventually automate tuning. In real-world cancer drug discovery experiments, FRAGMENTA's Human-Agent configuration identified nearly twice as many high-scoring molecules as baselines. Furthermore, the fully autonomous Agent-Agent system outperformed traditional Human-Human tuning, demonstrating the efficacy of agentic tuning in capturing expert intent.

Design-by-Analogy (DbA) is a design methodology wherein new solutions, opportunities or designs are generated in a target domain based on inspiration drawn from a source domain; it can help designers mitigate design fixation and improve design ideation outcomes. Recently, increasingly available design databases and rapidly advancing data science and artificial intelligence technologies have presented new opportunities for developing data-driven methods and tools for DbA support. In this study, we survey existing data-driven DbA studies and categorize individual studies according to their data, methods, and applications in four categories, namely, analogy encoding, retrieval, mapping, and evaluation. Based on both nuanced organic review and structured analysis, this paper elucidates the state of the art of data-driven DbA research to date and benchmarks it against the frontier of data science and AI research to identify promising research opportunities and directions for the field. Finally, we propose a future conceptual data-driven DbA system that integrates all propositions.

12 Apr 2025

The Fokker-Planck (FP) equation represents drift-diffusive processes in kinetic models. It can also be regarded as a model for the collision integral of the Boltzmann-type equation to represent thermo-hydrodynamic processes in fluids. The lattice Boltzmann method (LBM) is a drastically simplified discretization of the Boltzmann equation. We construct two new FP-based LBMs, one for recovering the Navier-Stokes equations and the other for simulating the energy equation, where, in each case, the effect of collisions is represented as relaxations of different central moments to their respective attractors. Such attractors are obtained by matching the changes in various discrete central moments under collision with the continuous central moments prescribed by the FP model. As such, the resulting central moment attractors depend on the lower-order moments and the diffusion tensor parameters and differ significantly from those based on the Maxwell distribution. The diffusion tensor parameters for evolving higher moments in simulating fluid motions at relatively low viscosities are chosen based on a renormalization principle. The use of such central moment formulations in modeling the collision step offers significant improvements in numerical stability, especially for simulations of thermal convective flows with a wide range of variations in the transport coefficients. We develop new FP central moment LBMs for thermo-hydrodynamics in both two and three dimensions and demonstrate the ability of our approach to accurately simulate various cases involving thermal convective buoyancy-driven flows, especially at high Rayleigh numbers. Moreover, we show that our FP central moment LBMs achieve significantly better numerical stability than other existing central moment LBMs based on the Maxwell distribution, reaching higher Péclet numbers for mixed convection flows.
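The collision step described above relaxes central moments (moments of the populations taken about the local fluid velocity) toward attractors. The sketch below shows only that mechanism, for the second-order central moments on a standard D2Q9 lattice. It is a minimal illustration under assumptions: the attractor values are supplied by the caller as placeholders (for example the Maxwellian values κxx = κyy = ρ/3, κxy = 0), not the FP-derived attractors of the paper, which depend on lower-order moments and diffusion-tensor parameters; the mapping from relaxed moments back to populations is omitted; and the function names are hypothetical.

```python
import numpy as np

# Standard D2Q9 lattice velocities and weights (lattice sound speed^2 = 1/3).
C = np.array([[0, 0], [1, 0], [0, 1], [-1, 0], [0, -1],
              [1, 1], [-1, 1], [-1, -1], [1, -1]], dtype=float)
W = np.array([4 / 9] + [1 / 9] * 4 + [1 / 36] * 4)


def central_moments(f):
    """Density, velocity, and second-order central moments of 9 populations."""
    rho = f.sum()
    u = (f[:, None] * C).sum(axis=0) / rho
    d = C - u                       # lattice velocities relative to the flow
    kxx = (f * d[:, 0] ** 2).sum()
    kyy = (f * d[:, 1] ** 2).sum()
    kxy = (f * d[:, 0] * d[:, 1]).sum()
    return rho, u, np.array([kxx, kyy, kxy])


def relax(kappa, attractor, omega):
    """Relax central moments toward attractors: k' = k + omega * (k_eq - k)."""
    return kappa + omega * (attractor - kappa)
```

With `omega = 1` the moments jump straight to their attractors, and with `omega = 0` they are left unchanged; in an FP central moment scheme the choice of attractor, not this relaxation form, carries the model-specific physics.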

03 Jul 2025

The tunable band structure and nontrivial topology of multilayer rhombohedral graphene lead to a variety of correlated electronic states with isospin orders (ordered states in the combined spin and valley degrees of freedom) dictated by the interplay of spin-orbit coupling and Hund's exchange interactions. However, methods for mapping local isospin textures and determining the exchange energies are currently lacking. Here, we image the magnetization textures in tetralayer rhombohedral graphene using a nanoscale superconducting quantum interference device. We observe sharp magnetic phase transitions that indicate spontaneous time-reversal symmetry breaking. In the quarter-metal phase, the spin and orbital moments align closely, providing a bound on the spin-orbit coupling energy. We also show that the half-metal phase has a very small magnetic anisotropy, which provides an experimental lower bound on the intervalley Hund's exchange interaction energy. This lower bound is found to be close to the theoretical upper bound. The ability to resolve the local isospin texture and the different interaction energies will allow a better understanding of the phase transition hierarchy and the numerous correlated electronic states arising from spontaneous and induced isospin symmetry breaking in graphene heterostructures.

06 Nov 2013

We study a marginal empirical likelihood approach in scenarios where the number of variables grows exponentially with the sample size. The marginal empirical likelihood ratios, as functions of the parameters of interest, are systematically examined, and we find that the marginal empirical likelihood ratio evaluated at zero can be used to determine whether an explanatory variable contributes to a response variable. Based on this finding, we propose a unified feature screening procedure for linear models and generalized linear models. Unlike most existing feature screening approaches, which rely on the magnitudes of some marginal estimators to identify true signals, the proposed screening approach can further incorporate the level of uncertainty of such estimators. This merit is inherited from the self-studentization property of the empirical likelihood approach and extends the insights of existing feature screening methods. Moreover, we show that our screening approach is less restrictive in its distributional assumptions and can be conveniently adapted to a broad range of scenarios, such as models specified using general moment conditions. Our theoretical results and extensive numerical examples, from both simulations and data analysis, demonstrate the merits of the marginal empirical likelihood approach.
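The screening idea can be sketched for the simplest case: for each feature j, test whether the marginal moment E[X_j Y] is zero using Owen's empirical likelihood ratio for a mean, and rank features by −2 log R_j(0). This is a minimal sketch assuming centered, standardized variables and the estimating function X_j Y (for centered data, E[X_j Y] = 0 is equivalent to a zero marginal regression slope); it is not the paper's full procedure, and the function names are hypothetical.

```python
import numpy as np


def el_stat_mean_zero(w, tol=1e-12, max_iter=200):
    """-2 log empirical likelihood ratio for H0: E[w] = 0 (Owen's EL for a mean).

    Solves sum(w / (1 + lam * w)) = 0 for the Lagrange multiplier lam by
    bisection. Returns inf when 0 lies outside the convex hull of w.
    """
    w = np.asarray(w, dtype=float)
    if w.min() >= 0 or w.max() <= 0:
        return np.inf
    # Feasible lam keeps every implied weight positive: 1 + lam * w_i > 0.
    lo = -1.0 / w.max() + 1e-10
    hi = -1.0 / w.min() - 1e-10
    for _ in range(max_iter):
        lam = 0.5 * (lo + hi)
        g = np.sum(w / (1.0 + lam * w))   # strictly decreasing in lam
        if g > 0:
            lo = lam
        else:
            hi = lam
        if hi - lo < tol:
            break
    lam = 0.5 * (lo + hi)
    return 2.0 * np.sum(np.log1p(lam * w))


def marginal_el_screen(X, y):
    """Rank features by their marginal EL statistic at zero (largest first)."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    ys = y - y.mean()
    stats = np.array([el_stat_mean_zero(Xs[:, j] * ys) for j in range(X.shape[1])])
    return np.argsort(-stats), stats
```

Because the EL ratio is self-studentized, a feature whose products X_j Y are large but highly variable is not automatically ranked above a smaller, more stable signal, which is the advantage over ranking raw marginal estimates.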

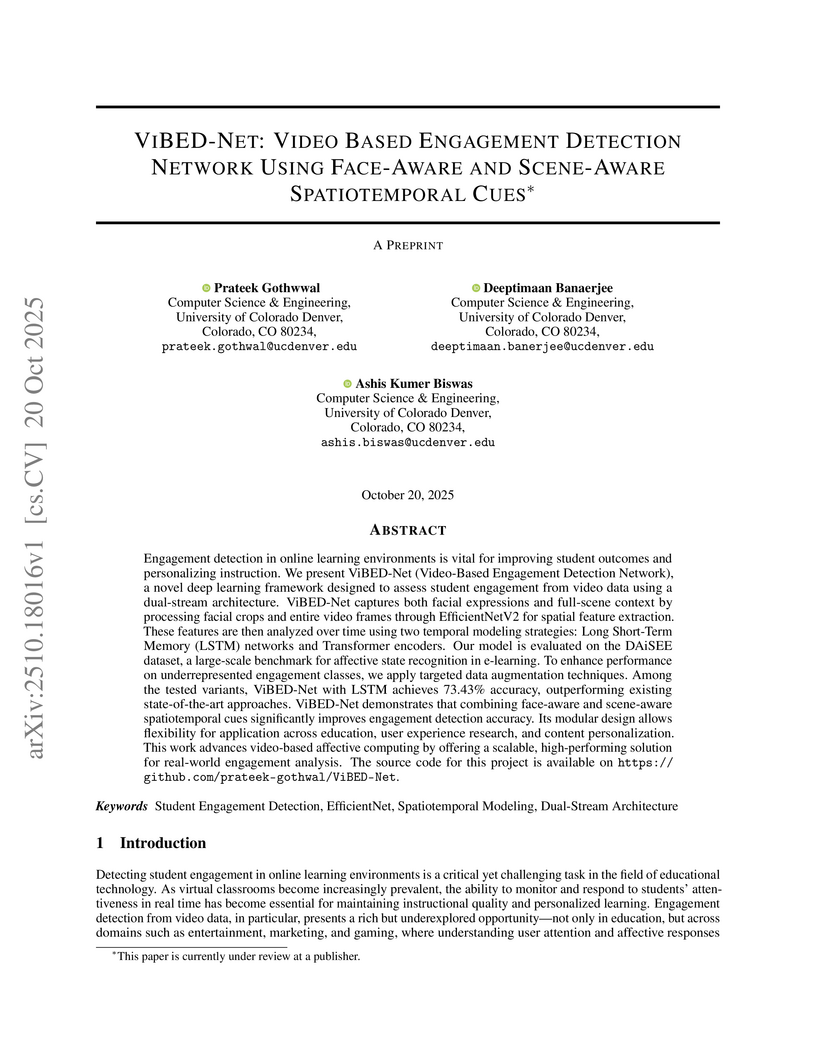

Engagement detection in online learning environments is vital for improving student outcomes and personalizing instruction. We present ViBED-Net (Video-Based Engagement Detection Network), a novel deep learning framework designed to assess student engagement from video data using a dual-stream architecture. ViBED-Net captures both facial expressions and full-scene context by processing facial crops and entire video frames through EfficientNetV2 for spatial feature extraction. These features are then analyzed over time using two temporal modeling strategies: Long Short-Term Memory (LSTM) networks and Transformer encoders. Our model is evaluated on the DAiSEE dataset, a large-scale benchmark for affective state recognition in e-learning. To enhance performance on underrepresented engagement classes, we apply targeted data augmentation techniques. Among the tested variants, ViBED-Net with LSTM achieves 73.43% accuracy, outperforming existing state-of-the-art approaches. ViBED-Net demonstrates that combining face-aware and scene-aware spatiotemporal cues significantly improves engagement detection accuracy. Its modular design allows flexibility for application across education, user experience research, and content personalization. This work advances video-based affective computing by offering a scalable, high-performing solution for real-world engagement analysis. The source code for this project is available at this https URL.

Accurate segmentation of sea ice types is essential for mapping and operational forecasting of sea ice conditions for safe navigation and resource extraction in ice-covered waters, as well as for understanding polar climate processes. While deep learning methods have shown promise in automating sea ice segmentation, they often rely on extensive labeled datasets, which require expert knowledge and are time-consuming to create. Recently, foundation models (FMs) have shown excellent results for segmenting remote sensing images by utilizing self-supervised pre-training on large datasets. However, their effectiveness for sea ice segmentation remains unexplored, especially given sea ice's complex structures, seasonal changes, and unique spectral signatures, as well as peculiar Synthetic Aperture Radar (SAR) imagery characteristics, including banding and scalloping noise and varying ice backscatter, which are often missing from standard remote sensing pre-training datasets. In particular, the same sensors whose images form the FM training datasets acquire SAR images over polar regions using different modes from those used at lower latitudes. This study evaluates ten remote sensing FMs for sea ice type segmentation using Sentinel-1 SAR imagery, focusing on their seasonal and spatial generalization. Among the selected models, Prithvi-600M outperforms the baseline models, while CROMA achieves very similar performance in F1-score. Our contributions include a systematic methodology for selecting FMs for sea ice data analysis, a comprehensive benchmarking study of FM performance for sea ice segmentation with tailored performance metrics, and insights into existing gaps and future directions for improving domain-specific models in polar applications using SAR data.
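The benchmarking above compares models by F1-score. As a minimal sketch, the function below computes per-class and macro-averaged F1 from predicted and reference ice-type label maps. The paper's tailored metrics may differ (for example in class weighting or masking); the `ignore_index` convention for land or no-data pixels and the choice to score absent classes as 0 are assumptions, and `per_class_f1` is a hypothetical name.

```python
import numpy as np


def per_class_f1(pred, target, num_classes, ignore_index=None):
    """Per-class F1 and macro F1 from integer label maps of the same shape."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    if ignore_index is not None:
        keep = target != ignore_index     # e.g. land or no-data pixels
        pred, target = pred[keep], target[keep]
    f1 = np.zeros(num_classes)
    for c in range(num_classes):
        tp = np.sum((pred == c) & (target == c))
        fp = np.sum((pred == c) & (target != c))
        fn = np.sum((pred != c) & (target == c))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); classes absent from both maps score 0 here.
        f1[c] = 2 * tp / denom if denom > 0 else 0.0
    return f1, f1.mean()
```

Macro averaging gives rare ice types the same weight as dominant ones, which matters when a few classes cover most of a SAR scene.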

CNRS; SLAC National Accelerator Laboratory; Northeastern University; University of Zurich; University of Michigan; Texas A&M University; University of British Columbia; University of Florida; Argonne National Laboratory; University of Minnesota; Florida State University; Lawrence Berkeley National Laboratory; Los Alamos National Laboratory; High Energy Accelerator Research Organization (KEK); The University of Chicago; TRIUMF; National High Magnetic Field Laboratory; University of Massachusetts; University of South Dakota; University of California Berkeley; University of Colorado Denver; Grenoble-INP; Kavli Institute for Cosmological Physics, The University of Chicago; University of Grenoble Alpes; Queen's University

We present results of a search for spin-independent dark matter-nucleon interactions in a 1 cm² by 1 mm thick (0.233 gram) high-resolution silicon athermal phonon detector operated above ground. For interactions in the substrate, this detector achieves an r.m.s. baseline energy resolution of 361.5 ± 0.4 meV, the best for any athermal phonon detector to date. With an exposure of 0.233 g × 12 hours, we place the most stringent constraints on dark matter masses between 44 and 87 MeV/c², with the lowest unexplored cross section of 4 × 10⁻³² cm² at 87 MeV/c². We employ a conservative salting technique to reach the lowest dark matter mass ever probed via a direct detection experiment. This constraint is enabled by two-channel rejection of low-energy backgrounds that are coupled to individual sensors.

01 Apr 2023

University of Toronto; California Institute of Technology; SLAC National Accelerator Laboratory; Northeastern University; UC Berkeley; Stanford University; Texas A&M University; University of British Columbia; Northwestern University; Southern Methodist University; University of Florida; University of Minnesota; Pacific Northwest National Laboratory; Lawrence Berkeley National Laboratory; National Institute of Science Education and Research; Fermi National Accelerator Laboratory; Karlsruhe Institute of Technology; Durham University; Santa Clara University; TRIUMF; University of South Dakota; Zayed University; University of Colorado Denver; SNOLAB; Laurentian University; South Dakota School of Mines and Technology; Universität Hamburg; Universidad Autónoma de Madrid; Université de Montréal; Queen's University

The SuperCDMS Collaboration is currently building SuperCDMS SNOLAB, a dark matter search focused on nucleon-coupled dark matter in the 1-5 GeV/c² mass range. Looking to the future, the Collaboration has developed a set of experience-based upgrade scenarios, as well as novel directions, to extend the search for dark matter using the SuperCDMS technology in the SNOLAB facility. The experience-based scenarios are forecast to probe many square decades of unexplored dark matter parameter space below 5 GeV/c², covering over 6 decades in mass: 1-100 eV/c² for dark photons and axion-like particles, 1-100 MeV/c² for dark-photon-coupled light dark matter, and 0.05-5 GeV/c² for nucleon-coupled dark matter. They will reach the neutrino fog in the 0.5-5 GeV/c² mass range and test a variety of benchmark models and sharp targets. The novel directions involve greater departures from current SuperCDMS technology but promise even greater reach in the long run, and their development must begin now for them to be available in a timely fashion.

The experience-based upgrade scenarios rely mainly on dramatic improvements in detector performance based on demonstrated scaling laws and reasonable extrapolations of current performance. Importantly, these improvements in detector performance obviate significant reductions in background levels beyond current expectations for the SuperCDMS SNOLAB experiment. Given that the dominant limiting backgrounds for SuperCDMS SNOLAB are cosmogenically created radioisotopes in the detectors, likely amenable only to isotopic purification and an underground detector life-cycle from before crystal growth to detector testing, the potential cost and time savings are enormous and the necessary improvements much easier to prototype.

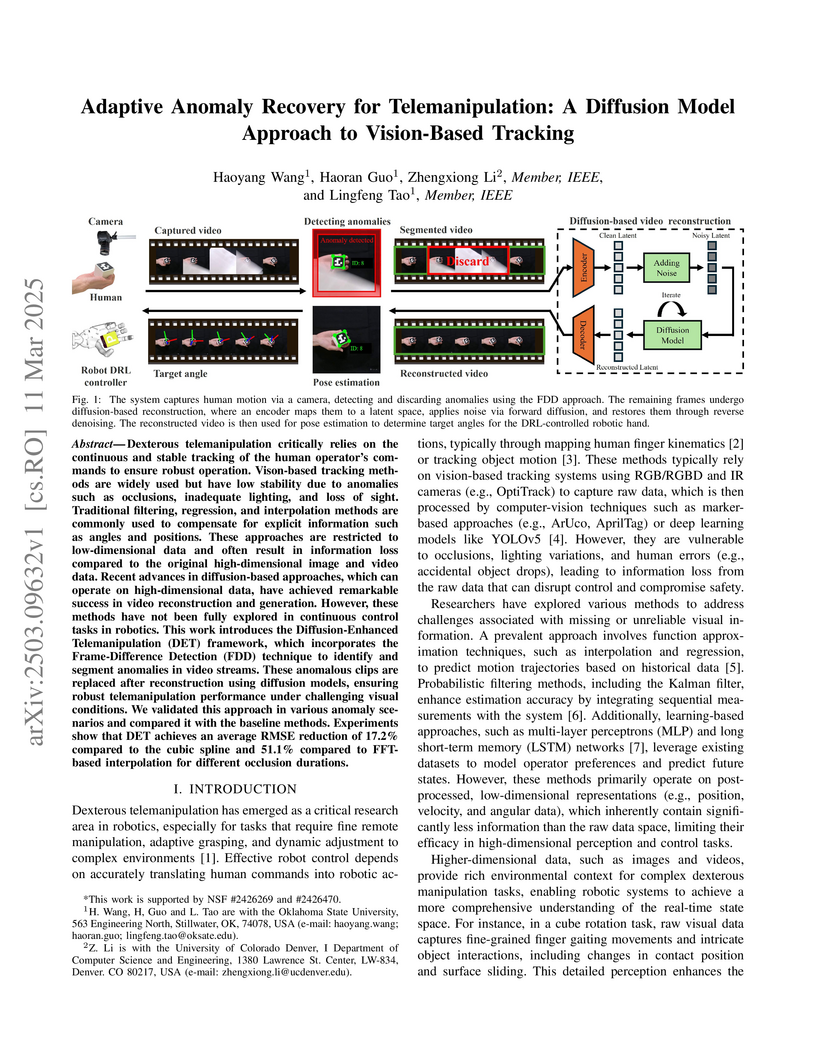

Dexterous telemanipulation critically relies on continuous and stable tracking of the human operator's commands to ensure robust operation. Vision-based tracking methods are widely used but have low stability due to anomalies such as occlusions, inadequate lighting, and loss of sight. Traditional filtering, regression, and interpolation methods are commonly used to compensate for explicit information such as angles and positions. These approaches are restricted to low-dimensional data and often result in information loss compared to the original high-dimensional image and video data. Recent diffusion-based approaches, which can operate on high-dimensional data, have achieved remarkable success in video reconstruction and generation. However, these methods have not been fully explored in continuous control tasks in robotics. This work introduces the Diffusion-Enhanced Telemanipulation (DET) framework, which incorporates the Frame-Difference Detection (FDD) technique to identify and segment anomalies in video streams. These anomalous clips are reconstructed using diffusion models and then replaced, ensuring robust telemanipulation performance under challenging visual conditions. We validated this approach in various anomaly scenarios and compared it with baseline methods. Experiments show that DET achieves an average RMSE reduction of 17.2% compared to cubic spline interpolation and 51.1% compared to FFT-based interpolation for different occlusion durations.
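The detect-and-segment step can be sketched with simple reference-frame differencing: a frame is flagged when its mean absolute difference from the last clean frame exceeds a threshold, and consecutive flagged frames are merged into segments that would be handed to the reconstruction model. This is a minimal sketch assuming grayscale frames, a clean first frame, and a fixed threshold; DET's actual FDD criteria are not given in the abstract, and `detect_anomalous_segments` is a hypothetical name.

```python
import numpy as np


def detect_anomalous_segments(frames, threshold):
    """Return [start, end) frame-index segments flagged by frame differencing.

    frames: (T, H, W) array of grayscale frames; frame 0 is assumed clean.
    A frame is flagged when its mean absolute difference from the last
    accepted (clean) frame exceeds threshold.
    """
    frames = np.asarray(frames, dtype=float)
    ref = frames[0]
    flagged = np.zeros(len(frames), dtype=bool)
    for t in range(1, len(frames)):
        if np.abs(frames[t] - ref).mean() > threshold:
            flagged[t] = True        # deviates from the last clean frame
        else:
            ref = frames[t]          # accept as the new clean reference
    # Merge consecutive flagged frames into [start, end) segments.
    segments, start = [], None
    for t, is_bad in enumerate(flagged):
        if is_bad and start is None:
            start = t
        elif not is_bad and start is not None:
            segments.append((start, t))
            start = None
    if start is not None:
        segments.append((start, len(flagged)))
    return segments
```

Comparing against the last clean frame rather than the immediately preceding one lets the detector cover a whole occlusion interval instead of only its onset and end; the trade-off is that a persistent, legitimate scene change would stay flagged, so a real system would need a reset rule.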

10 Jul 2025

This paper presents a comprehensive five-stage evolutionary framework for understanding the development of artificial intelligence, arguing that its trajectory mirrors the historical progression of human cognitive technologies. We posit that AI is advancing through distinct epochs, each defined by a revolutionary shift in its capacity for representation and reasoning, analogous to the inventions of cuneiform, the alphabet, grammar and logic, mathematical calculus, and formal logical systems. This "Geometry of Cognition" framework moves beyond mere metaphor to provide a systematic, cross-disciplinary model that not only explains AI's past architectural shifts (from expert systems to Transformers) but also charts a concrete and prescriptive path forward. Crucially, we demonstrate that this evolution is not merely linear but reflexive: as AI advances through these stages, the tools and insights it develops create a feedback loop that fundamentally reshapes its own underlying architecture. We are currently transitioning into a "Metalinguistic Moment," characterized by the emergence of self-reflective capabilities like Chain-of-Thought prompting and Constitutional AI. The subsequent stages, the "Mathematical Symbolism Moment" and the "Formal Logic System Moment," will be defined by the development of a computable calculus of thought, likely through neuro-symbolic architectures and program synthesis, culminating in provably aligned and reliable AI that reconstructs its own foundational representations. This work serves as the methodological capstone to our trilogy, which previously explored the economic drivers ("why") and cognitive nature ("what") of AI. Here, we address the "how," providing a theoretical foundation for future research and offering concrete, actionable strategies for startups and developers aiming to build the next generation of intelligent systems.

05 Aug 2025

Urban inequality, as reflected by uneven spatial allocations of resources, services, and opportunities, has emerged as a major topic for quantitative research and policy intervention. Geographic Information Systems (GIS) provide a solid framework for quantifying, analyzing, and visualizing these disparities; nevertheless, the many statistical approaches used across studies have not been comprehensively synthesized. This review examines 201 peer-reviewed articles published between 1996 and 2024, obtained from the Web of Science and Scopus databases, that use GIS-based approaches to investigate intra-urban differences. Eligibility was limited to English-language, peer-reviewed research focused on urban settings, with screening following the PRISMA methodology. The review identifies five key thematic domains: accessibility, green space, health-related disparity, socioeconomic status, and open space provision. In the literature, statistical and network-based approaches, such as spatial clustering, regression analysis, and bibliometric mapping, are critical for identifying patterns and driving thematic synthesis. Although accessibility remains the core focus, the field has expanded to include a variety of indicators such as environmental justice and health vulnerability, aided by advances in data sources and spatial analytics. Ongoing methodological issues include the spatial concentration of studies in industrialized countries and the limited use of longitudinal or composite measurements. The review concludes by outlining research priorities and practical recommendations for improving statistical rigor, encouraging interdisciplinary collaboration, and ensuring policy relevance in GIS-based urban inequality studies.
