University of Roma Tre

22 Oct 2024

First passage percolation with recovery is a process aimed at modeling the spread of epidemics. On a graph $G$, place a red particle at a reference vertex $o$ and colorless particles (seeds) at all other vertices. The red particle starts spreading a red first passage percolation of rate 1, while all seeds are dormant. As soon as a seed is reached by the process, it turns red and starts spreading red first passage percolation. All vertices are equipped with independent exponential clocks ringing at rate $\gamma>0$; when a clock rings, the corresponding red vertex turns black. For $t\ge 0$, let $H_t$ and $M_t$ denote, respectively, the size of the longest red path and of the largest red cluster present at time $t$. If $G$ is the semi-line, then for all $\gamma>0$, almost surely $\limsup_{t\to\infty}\frac{H_t\log\log t}{\log t}=1$ and $\liminf_{t\to\infty}H_t=0$. In contrast, if $G$ is an infinite Galton-Watson tree with offspring mean $m>1$, then for all $\gamma>0$, almost surely $\liminf_{t\to\infty}\frac{H_t\log t}{t}\ge m-1$ and $\liminf_{t\to\infty}\frac{M_t\log\log t}{t}\ge m-1$, while $\limsup_{t\to\infty}\frac{M_t}{e^{ct}}\le 1$ for all $c>m-1$. Also, almost surely as $t\to\infty$, for all $\gamma>0$, $H_t$ is of order at most $t$. Furthermore, if we restrict our attention to bounded-degree graphs, then for any $\varepsilon>0$ there is a critical value $\gamma_c>0$ such that for all $\gamma>\gamma_c$, almost surely $\limsup_{t\to\infty}\frac{M_t}{t}\le\varepsilon$.

22 May 2024

University of Washington; California Institute of Technology; University of Oslo; University of Oxford; University of Copenhagen; University of Edinburgh; INFN; ETH Zürich; Texas A&M University; University of British Columbia; CSIC; NASA Goddard Space Flight Center; University of Crete; Université Paris-Saclay; University of Helsinki; University of Zagreb; Université de Genève; Leiden University; CEA; University of Portsmouth; University of Sussex; INAF; University of California; Jet Propulsion Laboratory; SISSA; CNES; Pontificia Universidad Católica de Chile; Universidad de Valparaíso; Università di Napoli Federico II; University of KwaZulu-Natal; Ludwig-Maximilians-Universität; Kavli IPMU (WPI), UTIAS, The University of Tokyo; Instituto de Astrofísica de Canarias (IAC); University of Lyon; IPAC, California Institute of Technology; DARK, Niels Bohr Institute; INAF-IASF Milano; University of Rome; Space Science Data Center - Agenzia Spaziale Italiana; DTU Space; Osservatorio Astronomico di Brera; Rheinische Friedrich-Wilhelms-Universität Bonn; University of Roma Tre; Université de Lausanne; STFC; Institute for Space Sciences; APC, Université Paris Cité, CNRS, Astroparticule et Cosmologie; NOVA; Cergy Paris University; Dipartimento di Fisica e Astronomia, Sezione di Astrofisica, Università di Firenze; Cosmic Dawn Center (DAWN); Università degli Studi di Ferrara; Université Claude Bernard Lyon 1; Max Planck Institut für Astronomie; Aix-Marseille Université; Università di Padova; Università degli Studi di Milano; Università di Bologna; INAF-Osservatorio Astronomico di Trieste

The Near-Infrared Spectrometer and Photometer (NISP) on board the Euclid satellite provides multiband photometry and R ≥ 450 slitless grism spectroscopy in the 950-2020 nm wavelength range. In this reference article we illuminate the background of NISP's functional and calibration requirements, describe the instrument's integral components, and provide all its key properties. We also sketch the processes needed to understand how NISP operates and is calibrated, as well as its technical potential and limitations. Links to articles providing more details and technical background are included. NISP's 16 HAWAII-2RG (H2RG) detectors, with a plate scale of 0.3″ pix⁻¹, deliver a field of view of 0.57 deg². In photo mode, NISP reaches a limiting magnitude of ~24.5 AB mag in three photometric exposures of about 100 s exposure time, for point sources and with a signal-to-noise ratio (SNR) of 5. For spectroscopy, NISP's point-source sensitivity is an SNR = 3.5 detection of an emission line with a flux of ~2×10⁻¹⁶ erg s⁻¹ cm⁻² integrated over two resolution elements of 13.4 Å, in 3×560 s grism exposures at 1.6 μm (redshifted Hα). Our calibration includes on-ground and in-flight characterisation and monitoring of detector baseline, dark current, non-linearity, and sensitivity, to guarantee a relative photometric accuracy of better than 1.5% and relative spectrophotometry to better than 0.7%. The wavelength calibration must be better than 5 Å. NISP is the state-of-the-art instrument in the NIR for all science beyond the small areas available from HST and JWST, and an enormous advance due to its combination of field size and high throughput of telescope and instrument. During Euclid's 6-year survey covering 14 000 deg² of extragalactic sky, NISP will be the backbone for determining distances of more than a billion galaxies. Its NIR data will become a rich reference imaging and spectroscopy data set for the coming decades.
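As a reading aid for the quoted photometric depth, the standard AB magnitude definition converts magnitudes into flux densities. The zero point of 3631 Jy is the usual AB convention and is not stated in the abstract; the function names are illustrative.

```python
import math

AB_ZERO_POINT_JY = 3631.0  # AB system: m_AB = -2.5 log10(f_nu / 3631 Jy)


def ab_mag_to_microjansky(m_ab: float) -> float:
    """Flux density (in microjansky) corresponding to an AB magnitude."""
    return AB_ZERO_POINT_JY * 10 ** (-0.4 * m_ab) * 1e6


def microjansky_to_ab_mag(f_ujy: float) -> float:
    """Inverse conversion: microjansky back to AB magnitude."""
    return -2.5 * math.log10(f_ujy * 1e-6 / AB_ZERO_POINT_JY)


print(f"24.5 AB mag ~ {ab_mag_to_microjansky(24.5):.3f} microjansky")
```

With this definition, the ~24.5 AB mag point-source limit corresponds to roughly 0.58 μJy.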

The bottom-up construction of synthetic cells is one of the most intriguing and interesting research arenas in synthetic biology. Synthetic cells are built by encapsulating biomolecules inside lipid vesicles (liposomes), allowing the synthesis of one or more functional proteins. Thanks to the in situ synthesized proteins, synthetic cells become able to perform several biomolecular functions, which can be exploited for a large variety of applications. This paves the way to several advanced uses of synthetic cells in basic science and biotechnology, thanks to their versatility, modularity, biocompatibility, and programmability. In the previous WIVACE (2012) we presented the state of the art of semi-synthetic minimal cell (SSMC) technology and introduced, for the first time, the idea of chemical communication between synthetic cells and natural cells. The development of a proper synthetic communication protocol should be seen as a tool for the nascent field of bio/chemical-based Information and Communication Technologies (bio-chem-ICTs), ultimately aimed at building soft-wet-micro-robots. In this contribution (WIVACE, 2013) we present a blueprint for realizing this project and show some preliminary experimental results. We first discuss how our research goal (based on the natural capabilities of biological systems to manipulate chemical signals) finds a proper place in the current scientific and technological contexts. Then, we briefly comment on the experimental approaches from the viewpoints of (i) synthetic cell construction and (ii) bioengineering of microorganisms, providing up-to-date results from our laboratory. Finally, we briefly discuss how autopoiesis can be used as a theoretical framework for defining synthetic minimal life and minimal cognition, and as a bridge between synthetic biology and artificial intelligence.

26 Aug 2004

Pendry, in his paper [Phys. Rev. Lett., 85, 3966 (2000)], put forward the idea of a lens made of a lossless metamaterial slab with n = -1 that may provide focusing with resolution beyond the conventional limit. In his analysis, the evanescent wave inside such a lossless double-negative (DNG) slab is 'growing', and thus it 'compensates' the decaying exponential outside of it, providing the sub-wavelength lensing properties of this system. Here, we examine this debated issue of the 'growing exponential' from an equivalent-circuit viewpoint by analyzing a set of distributed-circuit elements representing the interaction of an evanescent wave with a lossless slab of DNG medium. Our analysis shows that, under certain conditions, the current in the series elements and the voltage at the element nodes may become dominantly increasing owing to a suitable resonance of the lossless circuit, providing an alternative physical explanation for the 'growing exponential' in Pendry's lens and similar sub-wavelength imaging systems.
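The 'growing exponential' itself can be reproduced with the textbook Fresnel formula for a plane wave crossing a slab. This is the standard field calculation, not the distributed-circuit model of the paper. The sketch assumes TE polarisation, a slab in vacuum, and a tiny loss δ added to ε = μ = -1 so that the lossless limit is approached numerically; the function name and parameter values are illustrative.

```python
import cmath
import math


def slab_transmission_te(kx, k0, d, eps, mu):
    """Amplitude transmission of a TE plane wave through a slab (eps, mu, thickness d) in vacuum.

    Uses the standard Airy/Fresnel slab formula; for kx > k0 the wave is evanescent.
    """
    kz1 = cmath.sqrt(k0**2 - kx**2 + 0j)             # vacuum: Im(kz1) > 0, i.e. decaying
    kz2 = cmath.sqrt(eps * mu * k0**2 - kx**2 + 0j)  # inside the slab
    a = mu * kz1   # mu_slab * kz_vacuum
    b = kz2        # mu_vacuum (= 1) * kz_slab
    phase = cmath.exp(1j * kz2 * d)
    return 4 * a * b * phase / ((a + b) ** 2 - (b - a) ** 2 * phase**2)


k0, kx, d = 1.0, 3.0, 1.0              # evanescent: kx > k0
kappa = math.sqrt(kx**2 - k0**2)       # decay constant in vacuum
delta = 1e-9                           # tiny loss approaching Pendry's lossless limit
t_vac = slab_transmission_te(kx, k0, d, 1.0, 1.0)
t_dng = slab_transmission_te(kx, k0, d, -1 + 1j * delta, -1 + 1j * delta)
print(abs(t_vac), math.exp(-kappa * d))  # a vacuum 'slab' simply decays
print(abs(t_dng), math.exp(kappa * d))   # the n = -1 slab amplifies by exp(kappa d)
```

The formula is invariant under the sign choice of kz2, so the result does not depend on the square-root branch picked inside the slab.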

The increasing availability of traffic data from sensor networks has created new opportunities for understanding vehicular dynamics and identifying anomalies. In this study, we employ clustering techniques to analyse traffic flow data with the dual objective of uncovering meaningful traffic patterns and detecting anomalies, including sensor failures and irregular congestion events.

We explore multiple clustering approaches, i.e., partitioning and hierarchical methods, combined with various time-series representations and similarity measures. Our methodology is applied to real-world data from highway sensors, enabling us to assess the impact of different clustering frameworks on traffic pattern recognition. We also introduce a clustering-driven anomaly detection methodology that identifies deviations from expected traffic behaviour based on distance-based anomaly scores.

Results indicate that hierarchical clustering with symbolic representations provides robust segmentation of traffic patterns, while partitioning methods such as k-means and fuzzy c-means yield meaningful results when paired with Dynamic Time Warping. The proposed anomaly detection strategy successfully identifies sensor malfunctions and abnormal traffic conditions with minimal false positives, demonstrating its practical utility for real-time monitoring.

Real-world vehicular traffic data are provided by Autostrade Alto Adriatico S.p.A.
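The distance-based anomaly score can be illustrated with a minimal sketch, assuming Dynamic Time Warping as the similarity measure and one prototype (for example a medoid) per traffic cluster. The function names, prototypes, and threshold are hypothetical and not taken from the study.

```python
from typing import List, Sequence


def dtw_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Dynamic Time Warping distance with absolute-difference local cost."""
    n, m = len(x), len(y)
    inf = float("inf")
    # D[i][j] = cost of the best warping path aligning x[:i] with y[:j]
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(x[i - 1] - y[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def anomaly_score(profile: Sequence[float], prototypes: Sequence[Sequence[float]]) -> float:
    """Distance from a traffic profile to its closest cluster prototype."""
    return min(dtw_distance(profile, p) for p in prototypes)


def flag_anomalies(profiles, prototypes, threshold: float) -> List[int]:
    """Indices of profiles whose anomaly score exceeds the threshold."""
    return [i for i, p in enumerate(profiles) if anomaly_score(p, prototypes) > threshold]


# Hypothetical hourly flow profiles and cluster prototypes
prototypes = [[100, 300, 250, 120], [50, 80, 90, 60]]
profiles = [[105, 295, 260, 118], [0, 0, 0, 0]]   # the second mimics a dead sensor
print(flag_anomalies(profiles, prototypes, threshold=100.0))
```

DTW lets a profile whose peak is slightly shifted in time still match its prototype, which is why it pairs well with partitioning methods on traffic series.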

Over the past decade, industrial control systems have experienced a massive integration with information technologies. Industrial networks have undergone numerous technical transformations to protect operational and production processes, leading today to a new industrial revolution. Information Technology tools alone cannot guarantee confidentiality, integrity and availability in the industrial domain; therefore, it is of paramount importance to understand the interaction of the physical components with the networks. For this reason, industrial control systems are usually treated as an example of Cyber-Physical Systems (CPS). This paper aims to provide a tool for the detection of cyber attacks in cyber-physical systems. The method is based on Machine Learning to increase the security of the system. By analysing the performance metrics of the three Machine Learning models, it is possible to evaluate their classification performance. The model trained on the training set can classify a sample as either anomalous or normal behaviour. The attack identification is implemented in a water tank system, and the Machine Learning-based identification approach aims to avoid dangerous states, such as the overflow of a tank. The results are promising and demonstrate the effectiveness of the approach.
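The abstract does not name the three Machine Learning models, so the sketch below uses a nearest-centroid classifier purely as a stand-in to show the normal/anomalous classification step on water-tank data. The features (tank level, inlet valve state), labels, and training samples are hypothetical.

```python
import math
from statistics import mean


def fit_centroids(samples, labels):
    """Mean feature vector of each class (nearest-centroid training)."""
    centroids = {}
    for label in set(labels):
        rows = [s for s, lab in zip(samples, labels) if lab == label]
        centroids[label] = tuple(mean(col) for col in zip(*rows))
    return centroids


def predict(centroids, sample):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(sample, centroids[label]))


# Hypothetical features: (tank level in %, inlet valve open = 1 / closed = 0)
train_x = [(50, 1), (55, 0), (45, 1), (98, 1), (97, 1)]
train_y = ["normal", "normal", "normal", "attack", "attack"]
model = fit_centroids(train_x, train_y)
print(predict(model, (95, 1)))  # valve held open near overflow
```

Because tank level and valve state have very different ranges, a real pipeline would normally standardise the features before computing distances.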

22 Nov 2021

Politecnico di Milano; University of Bologna; Sapienza University of Rome; University of Messina; University of Perugia; University of Brescia; University of Roma Tre; University of Cassino and Lazio Meridionale; Guglielmo Marconi University; Idea-re S.r.l.; Università Politecnica delle Marche; Università Telematica eCampus; Campus Bio-Medico University of Rome

In this paper, we present an approach to evaluate Research & Development (R&D) performance based on the Analytic Hierarchy Process (AHP) method. Through a set of questionnaires submitted to a team of experts, we single out a set of indicators needed for R&D performance evaluation. The indicators, together with the corresponding criteria, form the basic hierarchical structure of the AHP method. The numerical values associated with all the indicators are then used to assign a score to a given R&D project. To aggregate the values taken by the different indicators consistently, we map them to dimensionless quantities lying in the unit interval. This is achieved by employing the empirical Cumulative Distribution Function (CDF) of each indicator. We give a thorough discussion of how to assign a score to an R&D project along with the corresponding uncertainty due to possible inconsistencies of the decision process. A particular example of R&D performance is finally considered.
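The two computational steps described above, deriving indicator weights from pairwise comparisons and mapping raw indicator values into [0, 1] with the empirical CDF, can be sketched as follows. This is a single-level simplification under stated assumptions: weights come from the principal eigenvector of the comparison matrix (computed by power iteration), consistency is checked against Saaty's random index, and the project score is a weighted sum of ECDF values. The function names are hypothetical, and the paper's full hierarchy and uncertainty treatment are not reproduced.

```python
from bisect import bisect_right


def ahp_weights(A, iters=100):
    """Normalised principal eigenvector of a pairwise-comparison matrix and lambda_max."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iters):  # power iteration
        v = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        s = sum(v)
        w = [x / s for x in v]
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(Aw[i] / w[i] for i in range(n)) / n
    return w, lam


RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}  # Saaty's random consistency index


def consistency_ratio(lam, n):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); CR < 0.1 is the usual acceptance rule."""
    return ((lam - n) / (n - 1)) / RANDOM_INDEX[n]


def ecdf(values):
    """Empirical CDF: fraction of reference values <= x."""
    xs = sorted(values)
    return lambda x: bisect_right(xs, x) / len(xs)


def project_score(indicator_values, reference_samples, weights):
    """Weighted sum of each indicator mapped to [0, 1] by its empirical CDF."""
    return sum(w * ecdf(ref)(x) for x, ref, w in zip(indicator_values, reference_samples, weights))


A = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]  # hypothetical, perfectly consistent judgements
w, lam = ahp_weights(A)
print(w, lam, consistency_ratio(lam, 3))
```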

Let $\mathcal{G}$ be the set of all the planar embeddings of a (not necessarily connected) $n$-vertex graph $G$. We present a bijection $\Phi$ from $\mathcal{G}$ to the natural numbers in the interval $[0 \dots |\mathcal{G}|-1]$. Given a planar embedding $\mathcal{E}$ of $G$, we show that $\Phi(\mathcal{E})$ can be decomposed into a sequence of $O(n)$ natural numbers, each describing a specific feature of $\mathcal{E}$. The function $\Phi$, which is a ranking function for $\mathcal{G}$, can be computed in $O(n)$ time, while its inverse unranking function $\Phi^{-1}$ can be computed in $O(n\alpha(n))$ time. The results of this paper can be of practical use for generating the planar embeddings of $G$ uniformly at random or for enumerating them with amortized constant delay. They can also be used for counting, enumerating or uniformly generating at random constrained planar embeddings of $G$.
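The idea of decomposing a rank into a sequence of feature numbers can be illustrated with generic mixed-radix ranking. This sketch assumes that feature $i$ independently takes values in $[0, r_i)$ with fixed radices $r_i$; it is not the paper's construction, where the admissible choices come from the structure of the embedding. Under that assumption, drawing a uniform integer and unranking it yields a uniformly random object.

```python
import random
from typing import List, Sequence


def rank(digits: Sequence[int], radices: Sequence[int]) -> int:
    """Mixed-radix rank; digit i must lie in [0, radices[i])."""
    r = 0
    for d, base in zip(digits, radices):
        if not 0 <= d < base:
            raise ValueError("digit out of range")
        r = r * base + d
    return r


def unrank(r: int, radices: Sequence[int]) -> List[int]:
    """Inverse of rank: recover the feature digits from an integer."""
    digits = []
    for base in reversed(radices):
        r, d = divmod(r, base)
        digits.append(d)
    if r != 0:
        raise ValueError("rank out of range")
    return digits[::-1]


def uniform_sample(radices: Sequence[int], rng=random) -> List[int]:
    """Uniformly random digit sequence: draw a uniform rank, then unrank it."""
    total = 1
    for base in radices:
        total *= base
    return unrank(rng.randrange(total), radices)


print(unrank(23, [3, 1, 4, 2]))  # hypothetical radices
```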

03 May 2021

X-ray emission from the surface of isolated neutron stars (NSs) has now been observed in a variety of sources. The ubiquitous presence of pulsations clearly indicates that thermal photons come either from a limited area, possibly heated by some external mechanism, or from the entire (cooling) surface but with an inhomogeneous temperature distribution. In an NS, the thermal map is shaped by the magnetic field topology, since heat flows in the crust mostly along the magnetic field lines. Self-consistent surface thermal maps can hence be produced by simulating the coupled magnetic and thermal evolution of the star. We compute the evolution of the neutron star crust in three dimensions for different initial configurations of the magnetic field and use the ensuing thermal surface maps to derive the spectrum and the pulse profile as seen by an observer at infinity, accounting for general-relativistic effects. In particular, we compare cases with a high degree of symmetry with inherently 3D ones, obtained by adding a quadrupole to the initial dipolar field. Axially symmetric fields result in rather small pulsed fractions (≲5%), while more complex configurations produce higher pulsed fractions, up to ∼25%. We find that the spectral properties of our axisymmetric model are close to those of the bright isolated NS RX J1856.5-3754 at an evolutionary time comparable with the inferred dynamical age of the source.
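The pulsed fractions quoted above depend on how the pulsed fraction is defined, which the abstract does not state. Assuming the common peak-to-peak definition PF = (max − min)/(max + min) of a folded light curve, a minimal sketch is:

```python
import math


def pulsed_fraction(counts):
    """Peak-to-peak pulsed fraction (max - min) / (max + min) of a folded light curve."""
    hi, lo = max(counts), min(counts)
    return (hi - lo) / (hi + lo)


# Hypothetical sinusoidal pulse profile sampled at 16 phase bins
curve = [1 + 0.25 * math.cos(2 * math.pi * k / 16) for k in range(16)]
print(pulsed_fraction(curve))
```

For a purely sinusoidal profile 1 + A cos(φ), this definition returns the modulation amplitude A.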

29 Mar 2018

The Haldane model is a paradigmatic 2d lattice model exhibiting the integer quantum Hall effect. We consider an interacting version of the model, and prove that for short-range interactions, smaller than the bandwidth, the Hall conductivity is quantized, for all the values of the parameters outside two critical curves, across which the model undergoes a 'topological' phase transition: the Hall coefficient remains integer and constant as long as we continuously deform the parameters without crossing the curves; when this happens, the Hall coefficient jumps abruptly to a different integer. Previous works were limited to the perturbative regime, in which the interaction is much smaller than the bare gap, so they were restricted to regions far from the critical lines. The non-renormalization of the Hall conductivity arises as a consequence of lattice conservation laws and of the regularity properties of the current-current correlations. Our method provides a full construction of the critical curves, which are modified ('dressed') by the electron-electron interaction. The shift of the transition curves manifests itself via apparent infrared divergences in the naive perturbative series, which we resolve via renormalization group methods.
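The bare (non-interacting) critical curves can be visualised by computing the Chern number of the lower band, which gives the Hall coefficient in units of e²/h up to a sign convention. The sketch assumes a standard form of the Haldane Bloch Hamiltonian written in a periodic gauge (nearest-neighbour hopping t1, next-nearest-neighbour hopping t2 with phase φ, sublattice mass M) and uses the Fukui-Hatsugai-Suzuki lattice method; in this convention the gap closes at M = ±3√3 t2 sin φ. It says nothing about the interacting model or the dressed curves, which require the renormalization-group analysis described above.

```python
import numpy as np


def haldane_bloch(k1, k2, t1=1.0, t2=0.2, M=0.0, phi=np.pi / 2):
    """2x2 Bloch Hamiltonian of the non-interacting Haldane model.

    k1 = k.A1, k2 = k.A2 for primitive lattice vectors A1, A2, so H is
    2*pi-periodic in both arguments (periodic gauge).
    """
    f = t1 * (1 + np.exp(-1j * k1) + np.exp(-1j * k2))   # nearest-neighbour hopping
    nnn = (k1, k2 - k1, -k2)                             # three next-nearest-neighbour phases
    d0 = 2 * t2 * np.cos(phi) * sum(np.cos(a) for a in nnn)
    dz = M - 2 * t2 * np.sin(phi) * sum(np.sin(a) for a in nnn)
    return np.array([[d0 + dz, f], [np.conj(f), d0 - dz]])


def lower_band_chern(N=24, **params):
    """Lattice Chern number of the lower band (Fukui-Hatsugai-Suzuki method)."""
    ks = 2 * np.pi * np.arange(N) / N
    u = np.empty((N, N, 2), dtype=complex)
    for i, k1 in enumerate(ks):
        for j, k2 in enumerate(ks):
            _, vecs = np.linalg.eigh(haldane_bloch(k1, k2, **params))
            u[i, j] = vecs[:, 0]                         # eigh sorts eigenvalues ascending
    flux = 0.0
    for i in range(N):
        for j in range(N):
            ip, jp = (i + 1) % N, (j + 1) % N
            # gauge-invariant product of link variables around one plaquette
            loop = (np.vdot(u[i, j], u[ip, j]) * np.vdot(u[ip, j], u[ip, jp])
                    * np.vdot(u[ip, jp], u[i, jp]) * np.vdot(u[i, jp], u[i, j]))
            flux += np.angle(loop)
    return flux / (2 * np.pi)


for M in (0.0, 0.5, 1.5):   # critical |M| = 3*sqrt(3)*0.2 ~ 1.04 for the defaults
    print(M, round(lower_band_chern(M=M)))
```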

In timeline-based planning, domains are described as sets of independent, but interacting, components, whose behaviour over time (the set of timelines) is governed by a set of temporal constraints. A distinguishing feature of timeline-based planning systems is the ability to integrate planning with execution by synthesising control strategies for flexible plans. However, flexible plans can only represent temporal uncertainty, while more complex forms of nondeterminism are needed to deal with a wider range of realistic problems. In this paper, we propose a novel game-theoretic approach to timeline-based planning problems, generalising the state of the art while uniformly handling temporal uncertainty and nondeterminism. We define a general concept of timeline-based game and we show that the notion of winning strategy for these games is strictly more general than that of control strategy for dynamically controllable flexible plans. Moreover, we show that the problem of establishing the existence of such winning strategies is decidable using a doubly exponential amount of space.
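The paper's decision procedure for timeline-based games is not reproduced here. As a generic illustration of what the existence of a winning strategy means on a finite game graph, the classic attractor construction for two-player reachability games computes the positions from which the controller (player 0) can force the play into a goal set whatever the environment (player 1) does. The graph and node names are hypothetical.

```python
def attractor(nodes_p0, edges, targets):
    """Nodes from which player 0 can force a visit to `targets`.

    nodes_p0: nodes where player 0 picks the successor (all others belong to player 1).
    edges: dict node -> list of successors (every node assumed to have >= 1 successor).
    """
    win = set(targets)
    changed = True
    while changed:
        changed = False
        for v, succ in edges.items():
            if v in win:
                continue
            controller_can = v in nodes_p0 and any(s in win for s in succ)
            environment_must = v not in nodes_p0 and all(s in win for s in succ)
            if controller_can or environment_must:
                win.add(v)
                changed = True
    return win


edges = {"a": ["b", "c"], "b": ["goal"], "c": ["c"], "goal": ["goal"], "d": ["b", "c"]}
print(sorted(attractor({"a"}, edges, {"goal"})))
```

Node `d` is not winning because the environment can always move to the self-loop `c`.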

21 Mar 2021

A Unifying Framework for Adaptive Radar Detection in the Presence of Multiple Alternative Hypotheses


In this paper, we develop a new, elegant framework relying on the Kullback-Leibler Information Criterion to address the design of one-stage adaptive detection architectures for multiple hypothesis testing problems. Specifically, at the design stage, we assume that several alternative hypotheses may be in force and that only one null hypothesis exists. Then, starting from the case where all the parameters are known and proceeding to the case where adaptivity with respect to the entire parameter set is required, we derive decision schemes for multiple alternative hypotheses consisting of the sum of the compressed log-likelihood ratio based on the available data and a penalty term accounting for the number of unknown parameters. The latter arises from suitable approximations of the Kullback-Leibler Divergence between the true and a candidate probability density function. Interestingly, under specific constraints, the proposed decision schemes can share the constant false alarm rate property by virtue of the Invariance Principle. Finally, we show the effectiveness of the proposed framework through its application to examples of practical value in the context of radar detection, including a comparison with two-stage competitors. This analysis highlights that the architectures devised within the proposed framework represent an effective means to deal with detection problems where the uncertainty on some parameters leads to multiple alternative hypotheses.
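The structure of the resulting decision schemes, a compressed log-likelihood ratio plus a penalty on the number of unknown parameters, can be sketched as a penalised selection over the alternatives. The specific penalties below (AIC-like and BIC-like) are assumptions chosen for illustration; the abstract only states that the penalty comes from approximations of the Kullback-Leibler Divergence, and the function name is hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def penalized_detector(log_lr: Sequence[float], num_params: Sequence[int],
                       penalty: str = "aic", n_samples: Optional[int] = None) -> Tuple[float, int]:
    """Penalised selection among alternatives H_1..H_M against a single H_0.

    log_lr[k]     : compressed (generalised) log-likelihood ratio of H_k versus H_0
    num_params[k] : number of unknown parameters under H_k
    Returns (decision statistic, index of the selected alternative); the statistic
    would then be compared with a threshold set for the desired false-alarm rate.
    """
    if penalty == "aic":
        eta = 1.0                          # AIC-like: ln L - p
    elif penalty == "bic":
        if n_samples is None:
            raise ValueError("BIC-like penalty needs the number of samples")
        eta = 0.5 * math.log(n_samples)    # BIC-like: ln L - (p / 2) ln n
    else:
        raise ValueError(f"unknown penalty {penalty!r}")
    scores = [llr - eta * p for llr, p in zip(log_lr, num_params)]
    best = max(range(len(scores)), key=scores.__getitem__)
    return scores[best], best


print(penalized_detector([10.0, 12.0], [1, 4]))
```

The penalty keeps the richer alternative from winning merely because it has more free parameters to fit the data.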

09 Sep 2020

Neutron stars harbour extremely strong magnetic fields within their solid outer crust. The topology of this field strongly influences the surface temperature distribution, and hence the star's observational properties. In this work, we present the first realistic simulations of the coupled crustal magneto-thermal evolution of isolated neutron stars in three dimensions accounting for neutrino emission, obtained with the pseudo-spectral code Parody. We investigate both the secular evolution, especially in connection with the onset of instabilities during the Hall phase, and the short-term evolution following episodes of localised energy injection. Simulations show that a resistive tearing instability develops in about a Hall time if the initial toroidal field exceeds $\sim 10^{15}$ G. This leads to crustal failures because of the huge magnetic stresses coupled with the local temperature enhancement produced by dissipation. Localised heat deposition in the crust results in the appearance of hot spots on the star surface which can exhibit a variety of patterns. Since the transport properties are strongly influenced by the magnetic field, the hot regions tend to drift away and become deformed following the magnetic field lines while cooling. The shapes obtained with our simulations are reminiscent of those recently derived from NICER X-ray observations of the millisecond pulsar PSR J0030+0451.

University of Pavia; University of Bologna; Consiglio Nazionale delle Ricerche (CNR); RIKEN Nishina Center; University of Udine; University of Insubria; University of Campania “Luigi Vanvitelli”; University of Roma Tre; Science and Technology Facilities Council (STFC); Centro Nazionale di Adroterapia Oncologica (CNAO); Istituto Nazionale di Fisica Nucleare INFN; University of Milano-Bicocca

FAMU is an INFN-led muonic atom physics experiment based at the RIKEN-RAL muon facility at the ISIS Neutron and Muon Source (United Kingdom). The aim of FAMU is to measure the hyperfine splitting in muonic hydrogen to determine the value of the proton Zemach radius with an accuracy better than 1%. The experiment has a scintillating-fibre hodoscope for beam monitoring and data normalisation. To estimate the muon flux, low-rate measurements were performed to extract the single-muon average deposited charge. Detector simulations in Geant4 and FLUKA then provided a thorough understanding of the single-muon response function, which is crucial for determining the muon flux. This work presents the design features of the FAMU beam monitor, along with the simulation and absolute calibration measurements that enable flux determination and data normalisation.

26 Jun 2016

We introduce a new multivariate circular linear distribution suitable for modeling direction and speed in (multiple) animal movement data. To properly account for specific data features, such as heterogeneity and time dependence, a hidden Markov model is used. Parameters are estimated under a Bayesian framework and we provide computational details to implement the Markov chain Monte Carlo algorithm.

The proposed model is applied to a dataset of six free-ranging Maremma Sheepdogs. Its predictive performance, as well as the interpretability of the results, is compared to that of hidden Markov models built on all the combinations of von Mises (circular), wrapped Cauchy (circular), gamma (linear) and Weibull (linear) distributions.
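The new circular-linear distribution is not specified in the abstract, so it is not reproduced here. As a sketch of the hidden Markov machinery, the scaled forward recursion below computes the likelihood of a sequence of turning angles and step lengths under one of the benchmark combinations named above (von Mises angles and gamma step lengths, assumed conditionally independent given the hidden state). Parameter names and values are hypothetical; inside an MCMC sampler this log-likelihood would be evaluated at each proposed parameter value.

```python
import math

import numpy as np


def von_mises_pdf(theta, mu, kappa):
    """Von Mises density for turning angles (radians)."""
    return np.exp(kappa * np.cos(theta - mu)) / (2 * np.pi * np.i0(kappa))


def gamma_pdf(x, shape, scale):
    """Gamma density for positive step lengths."""
    return x ** (shape - 1) * np.exp(-x / scale) / (math.gamma(shape) * scale ** shape)


def hmm_log_likelihood(angles, steps, init, trans, params):
    """Scaled forward algorithm for an HMM with von Mises x gamma emissions.

    init[s]     : initial state probabilities
    trans[r][s] : P(state s at t+1 | state r at t)
    params[s]   : (mu, kappa, shape, scale) of state s
    """
    angles = np.asarray(angles, dtype=float)
    steps = np.asarray(steps, dtype=float)
    trans = np.asarray(trans, dtype=float)
    # emission density of each observation under each state, shape (T, S)
    emis = np.column_stack([von_mises_pdf(angles, mu, kappa) * gamma_pdf(steps, shape, scale)
                            for (mu, kappa, shape, scale) in params])
    alpha = np.asarray(init, dtype=float) * emis[0]
    loglik = 0.0
    for t in range(len(angles)):
        if t > 0:
            alpha = (alpha @ trans) * emis[t]
        c = alpha.sum()          # scaling constant = P(obs_t | obs_<t)
        loglik += math.log(c)
        alpha = alpha / c
    return loglik


# Hypothetical two-state model: "resting" (short steps, erratic turns) vs "travelling"
params = [(0.0, 0.5, 1.5, 0.3), (0.0, 4.0, 3.0, 2.0)]
print(hmm_log_likelihood([0.2, -1.0, 0.1], [0.4, 5.0, 6.1], [0.5, 0.5],
                         [[0.9, 0.1], [0.2, 0.8]], params))
```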


28 Nov 2019

Dynamic Light Scattering (DLS), Small Angle X-ray Scattering (SAXS) and Transmission Electron Microscopy (TEM) are physical techniques widely employed to characterize the morphology and structure of vesicles such as liposomes or human extracellular vesicles (exosomes). Bacterial extracellular vesicles are similar in size to human exosomes, although their function and membrane properties have not been elucidated in as much detail as those of exosomes. Here, we applied the above-cited techniques, in synergy with the thermodynamic characterization of the vesicles' lipid membrane using a turbidimetric technique, to the study of vesicles produced by Gram-negative bacteria (Outer Membrane Vesicles, OMVs) grown at different temperatures. This study demonstrated that our combined approach is useful to discriminate vesicles of different origin or coming from bacteria cultured under different experimental conditions. We envisage that in the near future the techniques employed in our work will be further developed to discriminate complex mixtures of bacterial vesicles, thus showing great promise for biomedical or diagnostic applications.

30 Jun 2022

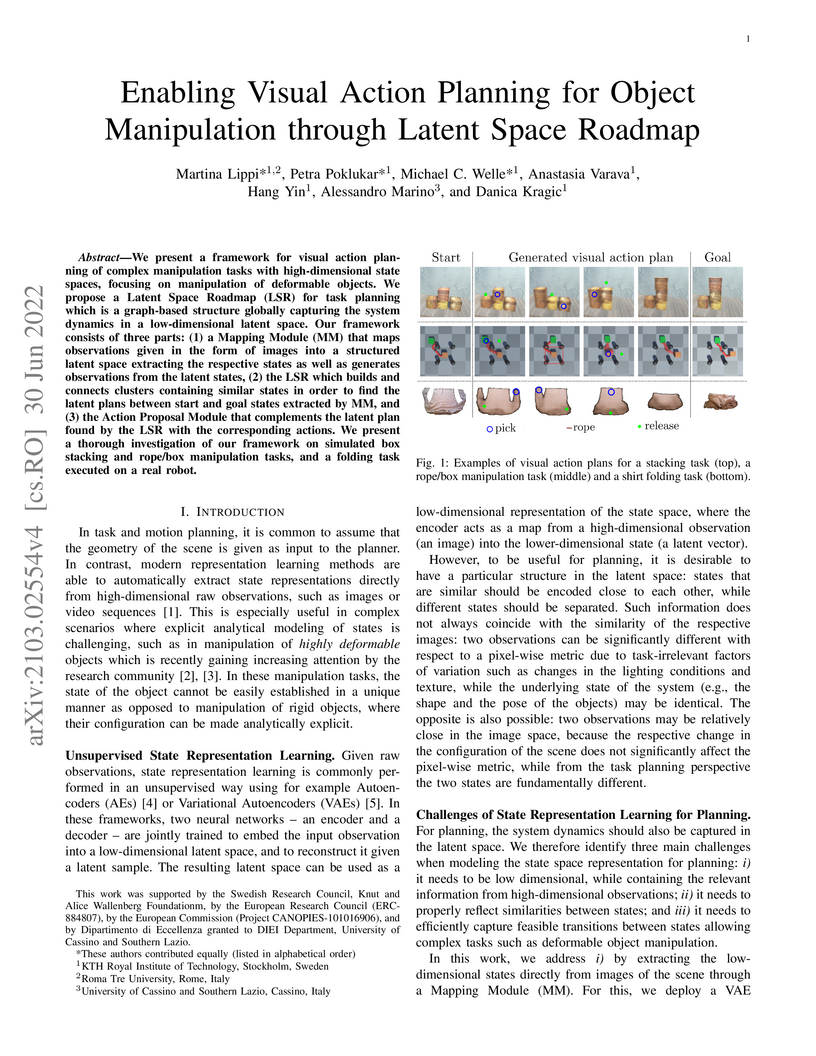

We present a framework for visual action planning of complex manipulation tasks with high-dimensional state spaces, focusing on manipulation of deformable objects. We propose a Latent Space Roadmap (LSR) for task planning, which is a graph-based structure globally capturing the system dynamics in a low-dimensional latent space. Our framework consists of three parts: (1) a Mapping Module (MM) that maps observations given in the form of images into a structured latent space, extracting the respective states, and also generates observations from the latent states; (2) the LSR, which builds and connects clusters containing similar states in order to find the latent plans between start and goal states extracted by the MM; and (3) the Action Proposal Module, which complements the latent plan found by the LSR with the corresponding actions. We present a thorough investigation of our framework on simulated box stacking and rope/box manipulation tasks, and on a folding task executed on a real robot.
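Once the roadmap is built, finding a latent plan reduces to a graph search between the clusters containing the start and goal states. A minimal sketch, assuming an unweighted roadmap stored as an adjacency dictionary and breadth-first search for a shortest plan; the node names and the choice of search are assumptions, not the paper's implementation. In the framework above, the Action Proposal Module would then supply an action for each consecutive pair of nodes.

```python
from collections import deque
from typing import Dict, Hashable, List, Optional


def shortest_latent_plan(roadmap: Dict[Hashable, List[Hashable]],
                         start: Hashable, goal: Hashable) -> Optional[List[Hashable]]:
    """Shortest sequence of roadmap nodes (clusters of similar latent states) from start to goal."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:      # walk back through the BFS tree
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in roadmap.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None                          # goal cluster not reachable


roadmap = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}   # hypothetical clusters
print(shortest_latent_plan(roadmap, "A", "D"))
```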

For general spin systems, we prove that a contractive coupling for any local Markov chain implies optimal bounds on the mixing time and the modified log-Sobolev constant for a large class of Markov chains including the Glauber dynamics, arbitrary heat-bath block dynamics, and the Swendsen-Wang dynamics. This reveals a novel connection between probabilistic techniques for bounding the convergence to stationarity and analytic tools for analyzing the decay of relative entropy. As a corollary of our general results, we obtain $O(n\log n)$ mixing time and $\Omega(1/n)$ modified log-Sobolev constant of the Glauber dynamics for sampling random $q$-colorings of an $n$-vertex graph with constant maximum degree $\Delta$ when $q>(11/6-\epsilon_0)\Delta$ for some fixed $\epsilon_0>0$. We also obtain $O(\log n)$ mixing time and $\Omega(1)$ modified log-Sobolev constant of the Swendsen-Wang dynamics for the ferromagnetic Ising model on an $n$-vertex graph of constant maximum degree when the parameters of the system lie in the tree uniqueness region. At the heart of our results are new techniques for establishing spectral independence of the spin system and block factorization of the relative entropy. On one hand, we prove that a contractive coupling of a local Markov chain implies spectral independence of the Gibbs distribution. On the other hand, we show that spectral independence implies factorization of entropy for arbitrary blocks, establishing optimal bounds on the modified log-Sobolev constant of the corresponding block dynamics.
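For reference, the Glauber dynamics for $q$-colorings can be sketched as a heat-bath update: pick a uniformly random vertex and recolor it uniformly among the colors not used by its neighbours. The graph, $q$, and number of steps below are illustrative, and the sketch does not estimate mixing times.

```python
import random
from typing import List, Sequence


def glauber_step(coloring: List[int], adj: Sequence[Sequence[int]], q: int, rng=random) -> None:
    """One heat-bath Glauber update for proper q-colorings (in place)."""
    v = rng.randrange(len(coloring))
    blocked = {coloring[u] for u in adj[v]}
    allowed = [c for c in range(q) if c not in blocked]  # non-empty whenever q > max degree
    coloring[v] = rng.choice(allowed)


def is_proper(coloring, adj) -> bool:
    """True if no edge joins two vertices of the same color."""
    return all(coloring[v] != coloring[u] for v in range(len(adj)) for u in adj[v])


n = 6
cycle = [[(v - 1) % n, (v + 1) % n] for v in range(n)]   # cycle graph, maximum degree 2
coloring = [0, 1, 0, 1, 0, 1]                              # proper starting coloring
rng = random.Random(0)
for _ in range(1000):
    glauber_step(coloring, cycle, q=5, rng=rng)
print(coloring)
```

Starting from a proper coloring, every update preserves properness, so the chain stays on the set of proper colorings whose uniform distribution it targets.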

24 Nov 2023

Wuhan University; Chinese Academy of Sciences; Sichuan University; University of Manchester; University of Science and Technology of China; Beihang University; Nanjing University; Tsinghua University; Zhejiang University; University of Tabuk; University of Edinburgh; Nankai University; Peking University; University of Genoa; University of Turin; Uppsala University; Guangxi Normal University; Central China Normal University; Shandong University; Queen Mary University of London; Lanzhou University; University of Ferrara; Soochow University; Technische Universität München; University of Groningen; Jozef Stefan Institute; Shanxi University; University of Perugia; University of Trieste; Zhengzhou University; INFN, Sezione di Torino; INFN, Laboratori Nazionali di Frascati; Henan Normal University; INFN Sezione di Perugia; Justus Liebig University Giessen; Johannes Gutenberg University of Mainz; GSI Helmholtz Centre for Heavy Ion Research; Hangzhou Normal University; IHEP; INFN-Sezione di Genova; Liaoning University; Helmholtz-Institut für Strahlen- und Kernphysik; Huangshan College; INFN Sezione di Roma; North University of China; University of Eastern Piedmont; University of Roma Tre; IFIC, CSIC-University of Valencia; BINP; INFN-Sezione di Roma Tre; INFN-Sezione di Ferrara; Ruhr-University-Bochum; INFN Sezione di Trieste

Using data samples with an integrated luminosity of $5.85~\mathrm{fb}^{-1}$ collected at center-of-mass energies from 4.61 to 4.95 GeV with the BESIII detector operating at the BEPCII storage ring, we measure the cross section for the process $e^+e^-\to K^+K^-J/\psi$. A new resonance with a mass of $M = 4708_{-15}^{+17}\pm21$ MeV/$c^{2}$ and a width of $\Gamma = 126_{-23}^{+27}\pm30$ MeV is observed in the energy-dependent line shape of the $e^+e^-\to K^+K^-J/\psi$ cross section with a significance of over $5\sigma$. The $K^+J/\psi$ system is also investigated to search for charged charmoniumlike states, but no significant $Z_{cs}^{+}$ states are observed. Upper limits on the Born cross sections for $e^+e^-\to K^{-} Z_{cs}(3985)^{+}/K^{-} Z_{cs}(4000)^{+} + c.c.$, with $Z_{cs}(3985)^{\pm}/Z_{cs}(4000)^{\pm}\to K^{\pm} J/\psi$, are reported at the 90% confidence level. The ratio of branching fractions $\frac{\mathcal{B}(Z_{cs}(3985)^{+}\to K^+ J/\psi)}{\mathcal{B}(Z_{cs}(3985)^{+}\to (\bar{D}^{0}D_s^{*+} + \bar{D}^{*0}D_s^+))}$ is measured to be less than 0.03 at the 90% confidence level.
