Wuyi University

Researchers at National Taiwan University of Science and Technology and WUYI University developed an unsupervised and retraining-free structured pruning method for Large Language Models, which leverages mutual information to identify and remove redundant neurons in Feed-forward Network layers. The technique achieves competitive performance on tasks like SST-2 and QNLI with significant FLOPs reduction, outperforming other unsupervised methods and remaining competitive with supervised approaches, while requiring only a small fraction of the training data for efficient mutual information estimation.

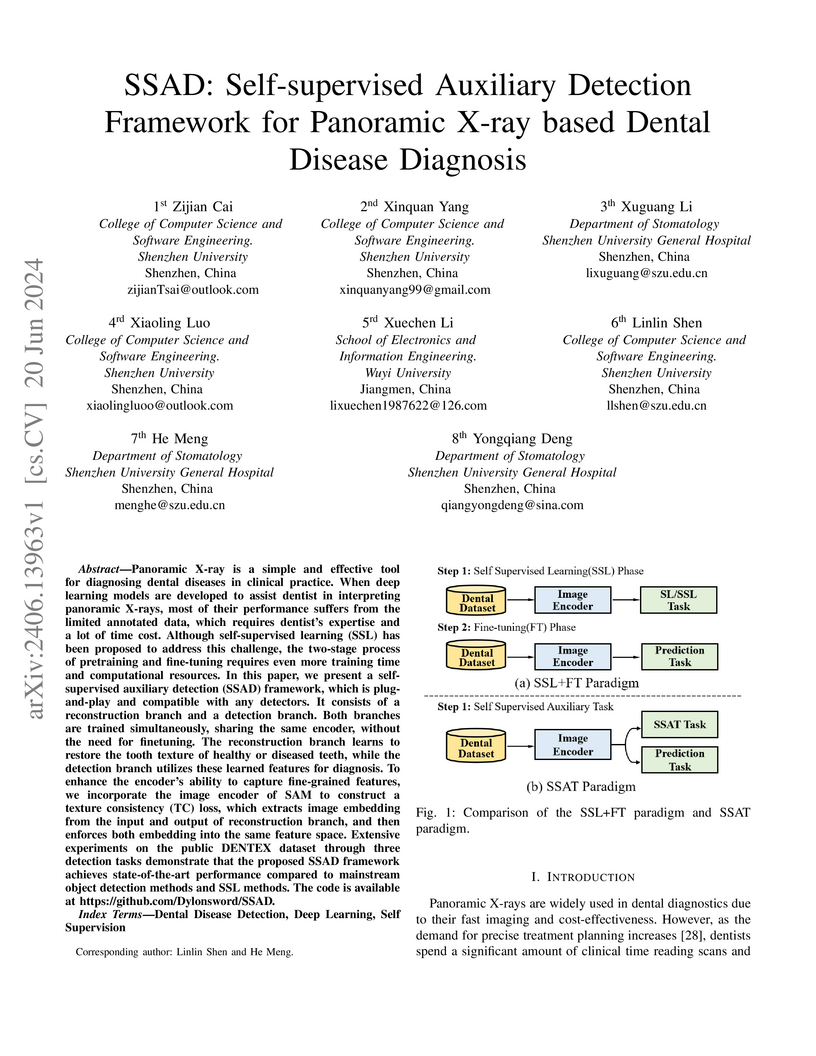

Panoramic X-ray is a simple and effective tool for diagnosing dental diseases in clinical practice. When deep learning models are developed to assist dentist in interpreting panoramic X-rays, most of their performance suffers from the limited annotated data, which requires dentist's expertise and a lot of time cost. Although self-supervised learning (SSL) has been proposed to address this challenge, the two-stage process of pretraining and fine-tuning requires even more training time and computational resources. In this paper, we present a self-supervised auxiliary detection (SSAD) framework, which is plug-and-play and compatible with any detectors. It consists of a reconstruction branch and a detection branch. Both branches are trained simultaneously, sharing the same encoder, without the need for finetuning. The reconstruction branch learns to restore the tooth texture of healthy or diseased teeth, while the detection branch utilizes these learned features for diagnosis. To enhance the encoder's ability to capture fine-grained features, we incorporate the image encoder of SAM to construct a texture consistency (TC) loss, which extracts image embedding from the input and output of reconstruction branch, and then enforces both embedding into the same feature space. Extensive experiments on the public DENTEX dataset through three detection tasks demonstrate that the proposed SSAD framework achieves state-of-the-art performance compared to mainstream object detection methods and SSL methods. The code is available at this https URL

In recent years, impressive performance of deep learning technology has been

recognized in Synthetic Aperture Radar (SAR) Automatic Target Recognition

(ATR). Since a large amount of annotated data is required in this technique, it

poses a trenchant challenge to the issue of obtaining a high recognition rate

through less labeled data. To overcome this problem, inspired by the

contrastive learning, we proposed a novel framework named Batch Instance

Discrimination and Feature Clustering (BIDFC). In this framework, different

from that of the objective of general contrastive learning methods, embedding

distance between samples should be moderate because of the high similarity

between samples in the SAR images. Consequently, our flexible framework is

equipped with adjustable distance between embedding, which we term as weakly

contrastive learning. Technically, instance labels are assigned to the

unlabeled data in per batch and random augmentation and training are performed

few times on these augmented data. Meanwhile, a novel Dynamic-Weighted Variance

loss (DWV loss) function is also posed to cluster the embedding of enhanced

versions for each sample. Experimental results on the moving and stationary

target acquisition and recognition (MSTAR) database indicate a 91.25%

classification accuracy of our method fine-tuned on only 3.13% training data.

Even though a linear evaluation is performed on the same training data, the

accuracy can still reach 90.13%. We also verified the effectiveness of BIDFC in

OpenSarShip database, indicating that our method can be generalized to other

datasets. Our code is avaliable at:

this https URL

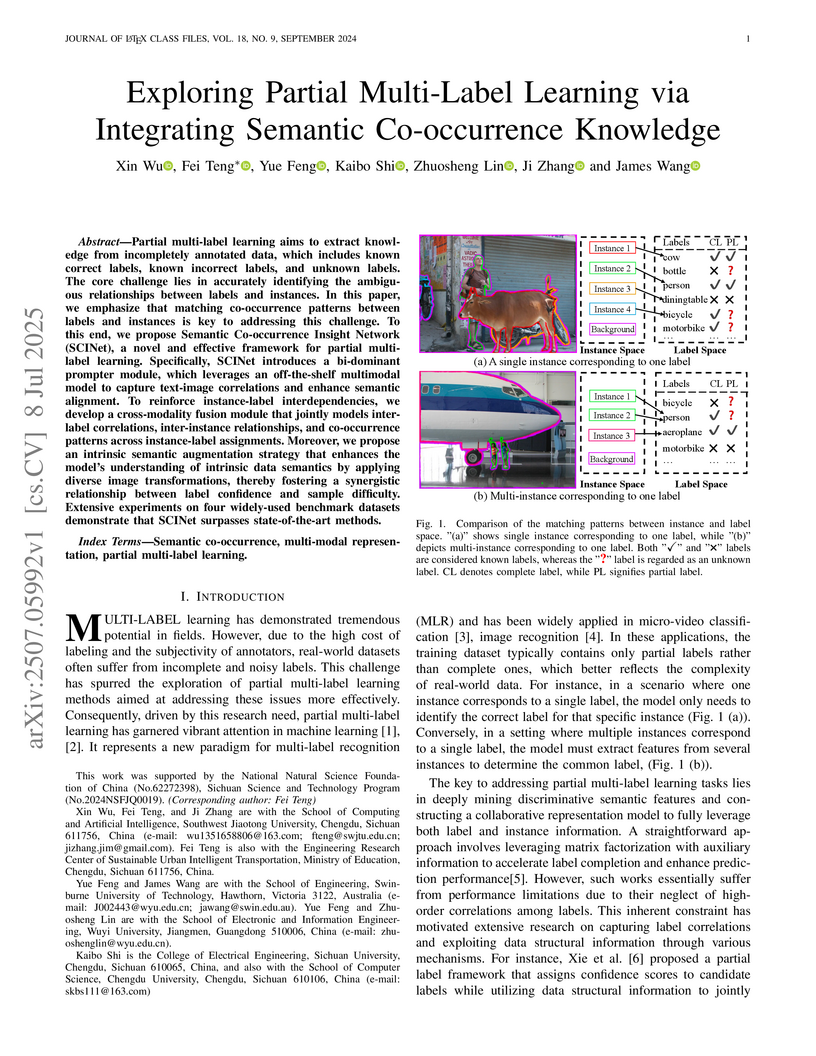

Partial multi-label learning aims to extract knowledge from incompletely annotated data, which includes known correct labels, known incorrect labels, and unknown labels. The core challenge lies in accurately identifying the ambiguous relationships between labels and instances. In this paper, we emphasize that matching co-occurrence patterns between labels and instances is key to addressing this challenge. To this end, we propose Semantic Co-occurrence Insight Network (SCINet), a novel and effective framework for partial multi-label learning. Specifically, SCINet introduces a bi-dominant prompter module, which leverages an off-the-shelf multimodal model to capture text-image correlations and enhance semantic alignment. To reinforce instance-label interdependencies, we develop a cross-modality fusion module that jointly models inter-label correlations, inter-instance relationships, and co-occurrence patterns across instance-label assignments. Moreover, we propose an intrinsic semantic augmentation strategy that enhances the model's understanding of intrinsic data semantics by applying diverse image transformations, thereby fostering a synergistic relationship between label confidence and sample difficulty. Extensive experiments on four widely-used benchmark datasets demonstrate that SCINet surpasses state-of-the-art methods.

Background: Common spatial pattern (CSP) has been widely used for feature extraction in the case of motor imagery (MI) electroencephalogram (EEG) recordings and in MI classification of brain-computer interface (BCI) applications. BCI usually requires relatively long EEG data for reliable classifier training. More specifically, before using general spatial patterns for feature extraction, a training dictionary from two different classes is used to construct a compound dictionary matrix, and the representation of the test samples in the filter band is estimated as a linear combination of the columns in the dictionary matrix. New method: To alleviate the problem of sparse small sample (SS) between frequency bands. We propose a novel sparse group filter bank model (SGFB) for motor imagery in BCI system. Results: We perform a task by representing residuals based on the categories corresponding to the non-zero correlation coefficients. Besides, we also perform joint sparse optimization with constrained filter bands in three different time windows to extract robust CSP features in a multi-task learning framework. To verify the effectiveness of our model, we conduct an experiment on the public EEG dataset of BCI competition to compare it with other competitive methods. Comparison with existing methods: Decent classification performance for different subbands confirms that our algorithm is a promising candidate for improving MI-based BCI performance.

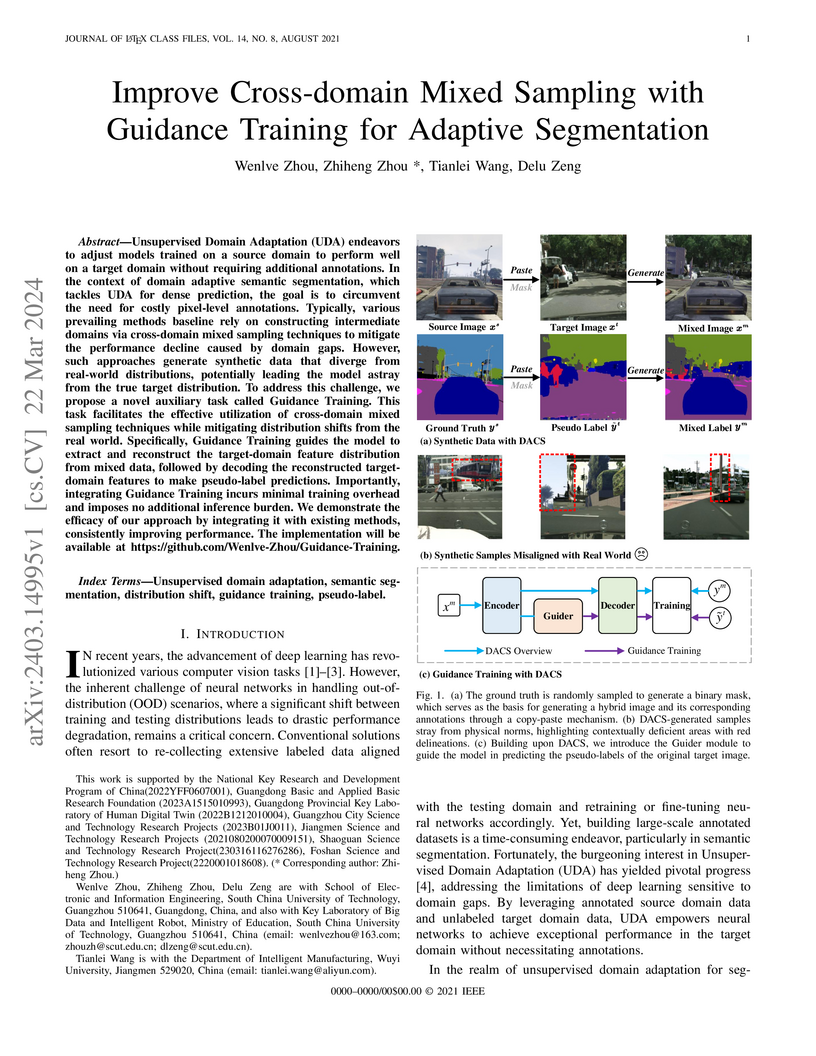

Unsupervised Domain Adaptation (UDA) endeavors to adjust models trained on a

source domain to perform well on a target domain without requiring additional

annotations. In the context of domain adaptive semantic segmentation, which

tackles UDA for dense prediction, the goal is to circumvent the need for costly

pixel-level annotations. Typically, various prevailing methods baseline rely on

constructing intermediate domains via cross-domain mixed sampling techniques to

mitigate the performance decline caused by domain gaps. However, such

approaches generate synthetic data that diverge from real-world distributions,

potentially leading the model astray from the true target distribution. To

address this challenge, we propose a novel auxiliary task called Guidance

Training. This task facilitates the effective utilization of cross-domain mixed

sampling techniques while mitigating distribution shifts from the real world.

Specifically, Guidance Training guides the model to extract and reconstruct the

target-domain feature distribution from mixed data, followed by decoding the

reconstructed target-domain features to make pseudo-label predictions.

Importantly, integrating Guidance Training incurs minimal training overhead and

imposes no additional inference burden. We demonstrate the efficacy of our

approach by integrating it with existing methods, consistently improving

performance. The implementation will be available at

https://github.com/Wenlve-Zhou/Guidance-Training.

12 Jun 2018

We present a way to create entangled states of two superconducting transmon qutrits based on circuit QED. Here, a qutrit refers to a three-level quantum system. Since only resonant interaction is employed, the entanglement creation can be completed within a short time. The degree of entanglement for the prepared entangled state can be controlled by varying the weight factors of the initial state of one qutrit, which allows the prepared entangled state to change from a partially entangled state to a maximally entangled state. Because a single cavity is used, only resonant interaction is employed, and none of identical qutrit-cavity coupling constant, measurement, and auxiliary qutrit is needed, this proposal is easy to implement in experiments. The proposal is quite general and can be applied to prepare a two-qutrit partially or maximally entangled state with two natural or artificial atoms of a ladder-type level structure, coupled to an optical or microwave cavity.

Unsupervised Domain Adaptation (UDA) leverages a labeled source domain to

solve tasks in an unlabeled target domain. While Transformer-based methods have

shown promise in UDA, their application is limited to plain Transformers,

excluding Convolutional Neural Networks (CNNs) and hierarchical Transformers.

To address this issues, we propose Bidirectional Probability Calibration (BiPC)

from a probability space perspective. We demonstrate that the probability

outputs from a pre-trained head, after extensive pre-training, are robust

against domain gaps and can adjust the probability distribution of the task

head. Moreover, the task head can enhance the pre-trained head during

adaptation training, improving model performance through bidirectional

complementation. Technically, we introduce Calibrated Probability Alignment

(CPA) to adjust the pre-trained head's probabilities, such as those from an

ImageNet-1k pre-trained classifier. Additionally, we design a Calibrated Gini

Impurity (CGI) loss to refine the task head, with calibrated coefficients

learned from the pre-trained classifier. BiPC is a simple yet effective method

applicable to various networks, including CNNs and Transformers. Experimental

results demonstrate its remarkable performance across multiple UDA tasks. Our

code will be available at: this https URL

17 Aug 2023

From the perspective of resource theory, it is interesting to achieve the same quantum task using as few quantum resources as possible. Semiquantum key distribution (SQKD), which allows a quantum user to share a confidential key with a classical user who prepares and operates qubits in only one basis, is an important example for studying this issue. To further limit the quantum resources used by users, in this paper, we constructed the first SQKD protocol which restricts the quantum user to prepare quantum states in only one basis and removes the classical user's measurement capability. Furthermore, we prove that the constructed protocol is unconditionally secure by deriving a key rate expression of the error rate in the asymptotic scenario. The work of this paper provides inspiration for achieving quantum superiority with minimal quantum resources.

Multi-view (or -modality) representation learning aims to understand the

relationships between different view representations. Existing methods

disentangle multi-view representations into consistent and view-specific

representations by introducing strong inductive biases, which can limit their

generalization ability. In this paper, we propose a novel multi-view

representation disentangling method that aims to go beyond inductive biases,

ensuring both interpretability and generalizability of the resulting

representations. Our method is based on the observation that discovering

multi-view consistency in advance can determine the disentangling information

boundary, leading to a decoupled learning objective. We also found that the

consistency can be easily extracted by maximizing the transformation invariance

and clustering consistency between views. These observations drive us to

propose a two-stage framework. In the first stage, we obtain multi-view

consistency by training a consistent encoder to produce semantically-consistent

representations across views as well as their corresponding pseudo-labels. In

the second stage, we disentangle specificity from comprehensive representations

by minimizing the upper bound of mutual information between consistent and

comprehensive representations. Finally, we reconstruct the original data by

concatenating pseudo-labels and view-specific representations. Our experiments

on four multi-view datasets demonstrate that our proposed method outperforms 12

comparison methods in terms of clustering and classification performance. The

visualization results also show that the extracted consistency and specificity

are compact and interpretable. Our code can be found at

\url{this https URL}.

With diverse presentation forgery methods emerging continually, detecting the

authenticity of images has drawn growing attention. Although existing methods

have achieved impressive accuracy in training dataset detection, they still

perform poorly in the unseen domain and suffer from forgery of irrelevant

information such as background and identity, affecting generalizability. To

solve this problem, we proposed a novel framework Selective Domain-Invariant

Feature (SDIF), which reduces the sensitivity to face forgery by fusing content

features and styles. Specifically, we first use a Farthest-Point Sampling (FPS)

training strategy to construct a task-relevant style sample representation

space for fusing with content features. Then, we propose a dynamic feature

extraction module to generate features with diverse styles to improve the

performance and effectiveness of the feature extractor. Finally, a domain

separation strategy is used to retain domain-related features to help

distinguish between real and fake faces. Both qualitative and quantitative

results in existing benchmarks and proposals demonstrate the effectiveness of

our approach.

12 Feb 2024

We study the magnetized strangelets in the baryon density-dependent quark mass model, including the effects of both confinement and lead-order perturbation interactions. The properties of magnetized strangelets are investigated under the the field strength 2*10^17 G, where the anisotropy caused by the strong magnetic field is insignificant can be treated approximately as an isotropic system. The consideration of anomalous magnetic moments in the energy spectrum naturally solves the difficulty of infrared divergence encountered in integrating the density of states. The Coulomb interaction is accounted for a self-consistent treatment. The energy per baryon, mechanically stable radius, strangeness and electric charge of magnetized strangelets are presented, where their dependence on the field strength and parameter of confinement and perturbation are investigated.

Monocular depth estimation is the base task in computer vision. It has a tremendous development in the decade with the development of deep learning. But the boundary blur of the depth map is still a serious problem. Research finds the boundary blur problem is mainly caused by two factors, first, the low-level features containing boundary and structure information may loss in deeper networks during the convolution process., second, the model ignores the errors introduced by the boundary area due to the few portions of the boundary in the whole areas during the backpropagation. In order to mitigate the boundary blur problem, we focus on the above two impact factors. Firstly, we design a scene understanding module to learn the global information with low- and high-level features, and then to transform the global information to different scales with our proposed scale transform module according to the different phases in the decoder. Secondly, we propose a boundary-aware depth loss function to pay attention to the effects of the boundary's depth value. The extensive experiments show that our method can predict the depth maps with clearer boundaries, and the performance of the depth accuracy base on NYU-depth v2 and SUN RGB-D is competitive.

Photomultiplier tubes (PMTs) are widely used in particle experiments for photon detection. PMT waveform analysis is crucial for high-precision measurements of the position and energy of incident particles in liquid scintillator (LS) detectors. A key factor contributing to the energy resolution in large liquid scintillator detectors with PMTs is the charge smearing of PMTs. This paper presents a machine-learning-based photon counting method for PMT waveforms and its application to the energy reconstruction, using the JUNO experiment as an example. The results indicate that leveraging the photon counting information from the machine learning model can partially mitigate the impact of PMT charge smearing and lead to a relative 2.0% to 2.8% improvement on the energy resolution in the energy range of [1, 9] MeV.

12 Jun 2018

We present a way to create entangled states of two superconducting transmon qutrits based on circuit QED. Here, a qutrit refers to a three-level quantum system. Since only resonant interaction is employed, the entanglement creation can be completed within a short time. The degree of entanglement for the prepared entangled state can be controlled by varying the weight factors of the initial state of one qutrit, which allows the prepared entangled state to change from a partially entangled state to a maximally entangled state. Because a single cavity is used, only resonant interaction is employed, and none of identical qutrit-cavity coupling constant, measurement, and auxiliary qutrit is needed, this proposal is easy to implement in experiments. The proposal is quite general and can be applied to prepare a two-qutrit partially or maximally entangled state with two natural or artificial atoms of a ladder-type level structure, coupled to an optical or microwave cavity.

The vulnerability of Deep Neural Networks to adversarial perturbations presents significant security concerns, as the imperceptible perturbations can contaminate the feature space and lead to incorrect predictions. Recent studies have attempted to calibrate contaminated features by either suppressing or over-activating particular channels. Despite these efforts, we claim that adversarial attacks exhibit varying disruption levels across individual channels. Furthermore, we argue that harmonizing feature maps via graph and employing graph convolution can calibrate contaminated features. To this end, we introduce an innovative plug-and-play module called Feature Map-based Reconstructed Graph Convolution (FMR-GC). FMR-GC harmonizes feature maps in the channel dimension to reconstruct the graph, then employs graph convolution to capture neighborhood information, effectively calibrating contaminated features. Extensive experiments have demonstrated the superior performance and scalability of FMR-GC. Moreover, our model can be combined with advanced adversarial training methods to considerably enhance robustness without compromising the model's clean accuracy.

08 Sep 2016

In this paper, we analyse the quantum coherence behaviors of a single qubit

in the relativistic regime beyond the single-mode approximation. Firstly, we

investigate the freezing condition of quantum coherence in fermionic system. We

also study the quantum coherence tradeoff between particle and antiparticle

sector. It is found that there exists quantum coherence transfer between

particle and antiparticle sector, but the coherence lost in particle sector is

not entirely compensated by the coherence generation of antiparticle sector.

Besides, we emphatically discuss the cohering power and decohering power of

Unruh channel with respect to the computational basis. It is shown that

cohering power is vanishing and decohering power is dependent of the choice of

Unruh mode and acceleration. Finally, We compare the behaviors of quantum

coherence with geometric quantum discord and entanglement in relativistic

setup. Our results show that this quantifiers in two region converge at

infinite acceleration limit, which implies that this measures become

independent of Unruh modes beyond the single-mode approximations. It is also

demonstrated that the robustness of quantum coherence and geometric quantum

discord are better than entanglement under the influence of acceleration, since

entanglement undergoes sudden death.

High precision vertex and energy reconstruction is crucial for large liquid scintillator detectors such as JUNO, especially for the determination of the neutrino mass ordering by analyzing the energy spectrum of reactor neutrinos. This paper presents a data-driven method to obtain more realistic and more accurate expected PMT response of positron events in JUNO, and develops a simultaneous vertex and energy reconstruction method that combines the charge and time information of PMTs. For the JUNO detector, the impact of vertex inaccuracy on the energy resolution is about 0.6\%.

20 Jun 2020

Risk statistic is a critical factor not only for risk analysis but also for

financial application. However, the traditional risk statistics may fail to

describe the characteristics of regulator-based risk. In this paper, we

consider the regulator-based risk statistics for portfolios. By further

developing the properties related to regulator-based risk statistics, we are

able to derive dual representation for such risk.

The energy consumption of the HVAC system accounts for a significant portion

of the energy consumption of the public building system, and using an efficient

energy consumption prediction model can assist it in carrying out effective

energy-saving transformation. Unlike the traditional energy consumption

prediction model, this paper extracts features from large data sets using

XGBoost, trains them separately to obtain multiple models, then fuses them with

LightGBM's independent prediction results using MAE, infers energy consumption

related variables, and successfully applies this model to the self-developed

Internet of Things platform.

There are no more papers matching your filters at the moment.