Ask or search anything...

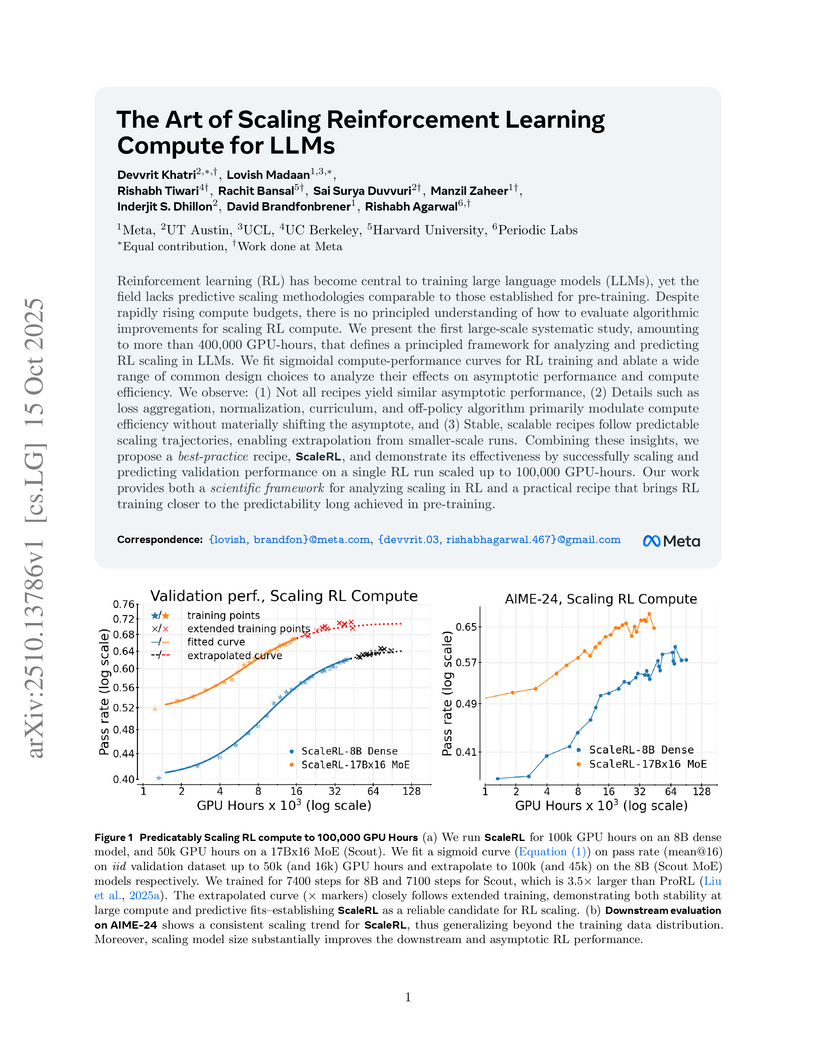

Researchers introduced a predictive framework for Reinforcement Learning (RL) in Large Language Models (LLMs) using a sigmoidal compute-performance curve, enabling performance extrapolation from smaller runs. Their ScaleRL recipe, demonstrated over 100,000 GPU-hours, achieves an asymptotic reward of 0.61 on verifiable math problems, outperforming established methods while exhibiting predictable scaling across model size, generation length, and multi-task settings.

View blogResearchers at Meta AI and collaborators developed Token Assorted, a method that combines discrete latent tokens with text tokens for Large Language Model reasoning. This approach enhances reasoning accuracy on synthetic and mathematical benchmarks while reducing reasoning trace length by an average of 17%.

View blogResearchers from Meta AI, McGill University, and UCL present META MOTIVO, a Behavioral Foundation Model enabling zero-shot whole-body control for humanoid agents across diverse tasks. This model uses an online unsupervised reinforcement learning algorithm, FB-CPR, which learns from unlabeled motion capture data to generate natural, human-like movements. Human evaluations showed a preference for FB-CPR-generated behaviors in terms of naturalness, even over those from reward-optimized agents.

View blogThe PRISM Alignment Dataset is a new resource for understanding human preferences in LLM alignment, specifically capturing subjective, individualized, and multicultural dimensions. It includes 8,011 conversations and detailed participant profiles from a diverse global sample, demonstrating that LLM preferences vary significantly by user demographics and conversation context, and that sampling decisions heavily influence alignment outcomes.

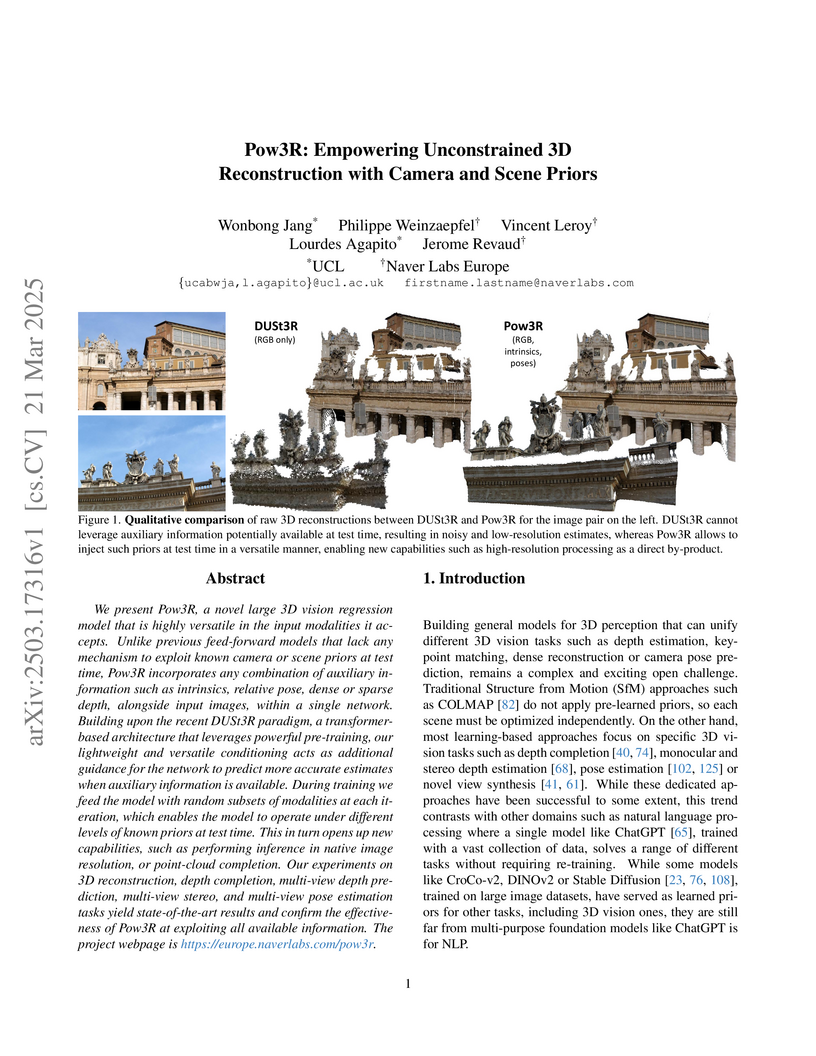

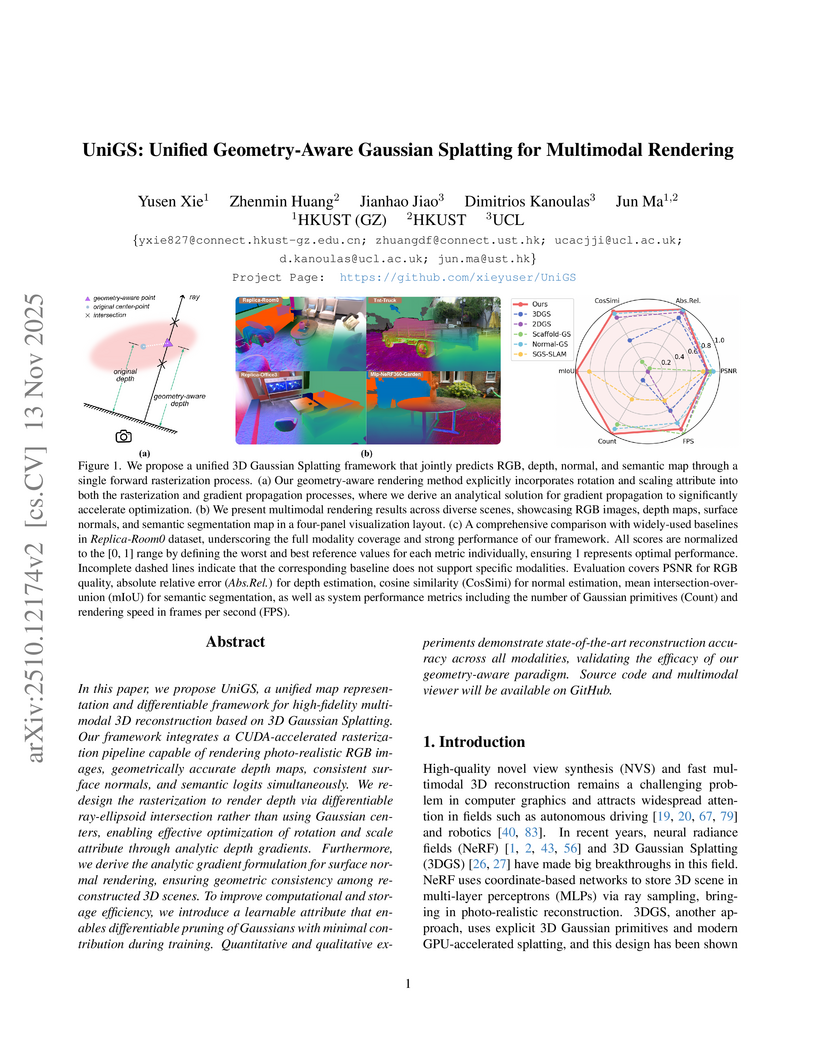

View blogA unified 3D reconstruction framework from UCL and Naver Labs Europe enables flexible incorporation of camera and scene priors like intrinsics, poses, and depth maps into transformer-based architectures, achieving state-of-the-art performance across multiple 3D vision tasks while enabling high-resolution processing and efficient pose estimation through dual coordinate prediction.

View blogThis study provides empirical evidence that Large Language Models latently perform multi-hop reasoning by internally resolving descriptive mentions to entities and then utilizing that knowledge. While the ability to recall the bridge entity improves with model scale, the subsequent step of leveraging this resolved entity for consistent compositional reasoning shows moderate success and does not significantly scale, suggesting a bottleneck in current LLM architectures for truly compositional knowledge utilization.

View blogEFFIBENCH-X introduces the first multi-language benchmark designed to measure the execution time and memory efficiency of code generated by large language models. The evaluation of 26 state-of-the-art LLMs reveals a consistent efficiency gap compared to human-expert solutions, with top models achieving around 62% of human execution time efficiency and varying performance across different programming languages and problem types.

View blogResearchers at Anthropic and affiliated universities developed Best-of-N (BoN) Jailbreaking, a simple, black-box method that systematically circumvents safety safeguards in frontier AI models across text, vision, and audio modalities. The approach, which involves repeatedly submitting augmented harmful requests, demonstrates high attack success rates and reveals that adversarial success scales predictably with computational resources following a power-law behavior.

View blogLarge Language Models acquire reasoning capabilities by synthesizing procedural knowledge from pretraining data, particularly from code and mathematical formulae, rather than through direct retrieval of specific answers. This mechanism was identified by analyzing the influence of pretraining documents on model outputs using influence functions on Cohere's Command R models.

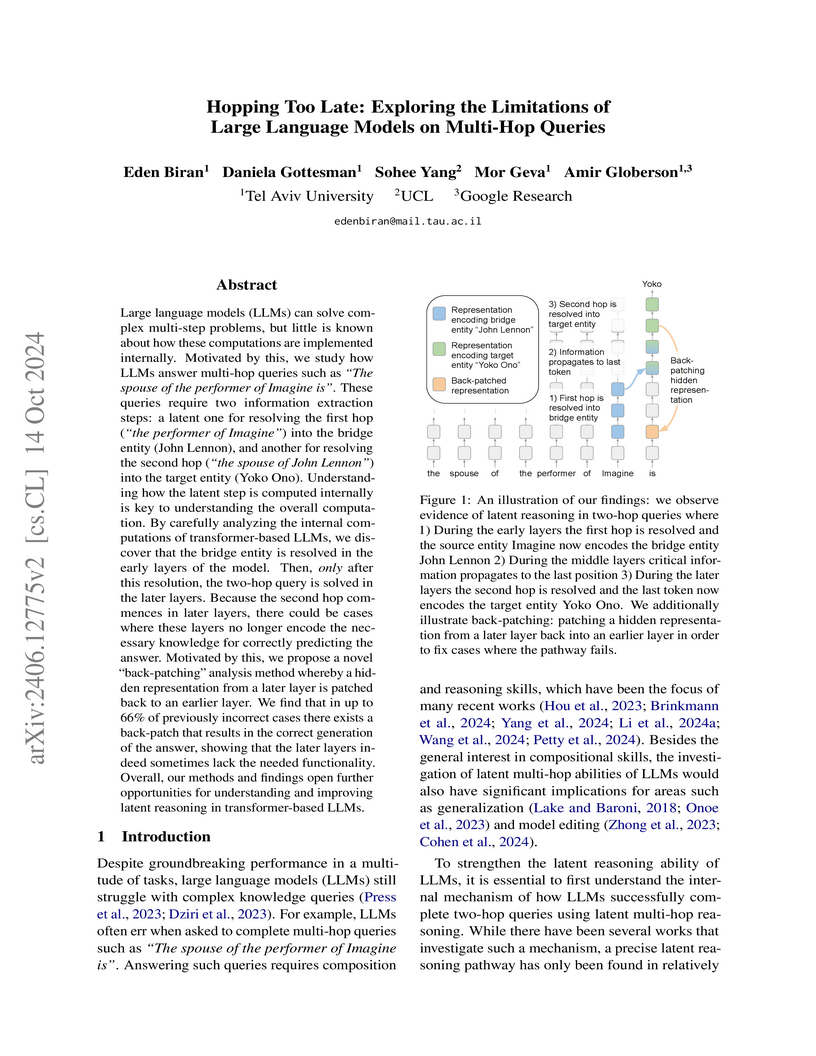

View blogThis paper investigated the internal reasoning pathways of large language models for multi-hop queries, identifying a 'hopping too late' phenomenon where later layers fail to effectively compose information despite earlier layers resolving intermediate steps. A novel 'back-patching' method was introduced, which corrected 32% to 66% of previously incorrect multi-hop answers by re-introducing hidden representations to earlier layers.

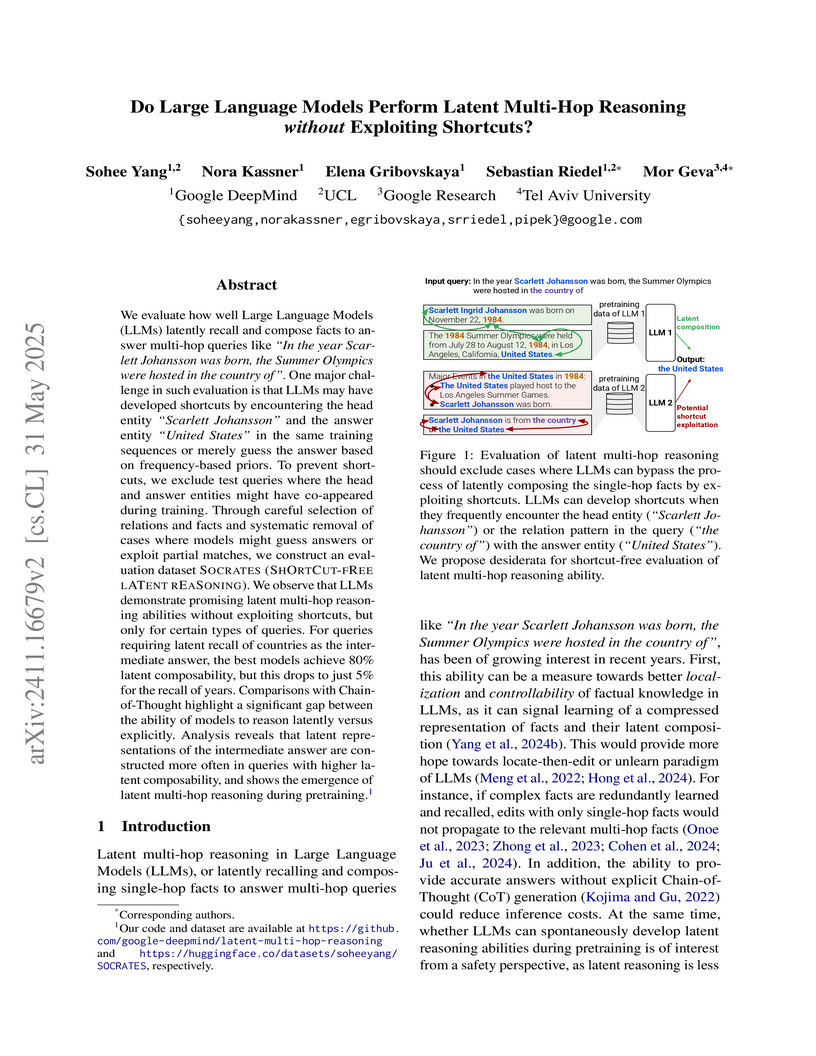

View blogA study by researchers from Google DeepMind and Google Research rigorously evaluated Large Language Models' latent multi-hop reasoning abilities using a new shortcut-free dataset, SOCRATES. It found that while LLMs struggle with general latent multi-hop reasoning (e.g., GPT-4o at 7.6% composability), their performance drastically varies by bridge entity type, achieving over 80% for 'country' bridge entities but only 5-6% for 'year' ones.

View blogResearchers from Huawei Noah's Ark Lab, UCL, and TU Darmstadt developed Agent K, a generalist AI agent utilizing Kolb's experiential learning theory and Vygotsky's Zone of Proximal Development, to autonomously navigate and solve Kaggle data science challenges. The system achieved human-competitive performance, demonstrating an Elo-MMR of 1694 and earning the equivalent of 4 gold and 4 silver medals against human experts across diverse competition types.

View blogResearchers from diverse institutions propose a unifying framework for representational alignment, a concept central to cognitive science, neuroscience, and machine learning. This framework provides a common language and systematically categorizes research objectives and methodological components, aiming to bridge disciplinary fragmentation.

View blogYang et al. investigate large language models' capacity for self-reevaluation by testing their ability to identify and recover from various injected "unhelpful thoughts." The research finds a substantial gap between thought recognition and effective recovery, particularly noting an inverse scaling trend where larger models demonstrate reduced robustness to certain unhelpful thought injections, impacting their reliability and safety.

View blogResearchers from KAIST, UCL, and KT investigated how Large Language Models acquire and forget factual knowledge during pretraining, introducing metrics like 'effectivity' and 'retainability'. They found that knowledge is gained through incremental 'micro-acquisitions' followed by power-law forgetting, with larger models acquiring knowledge more effectively and varied exposure or larger batch sizes improving retention.

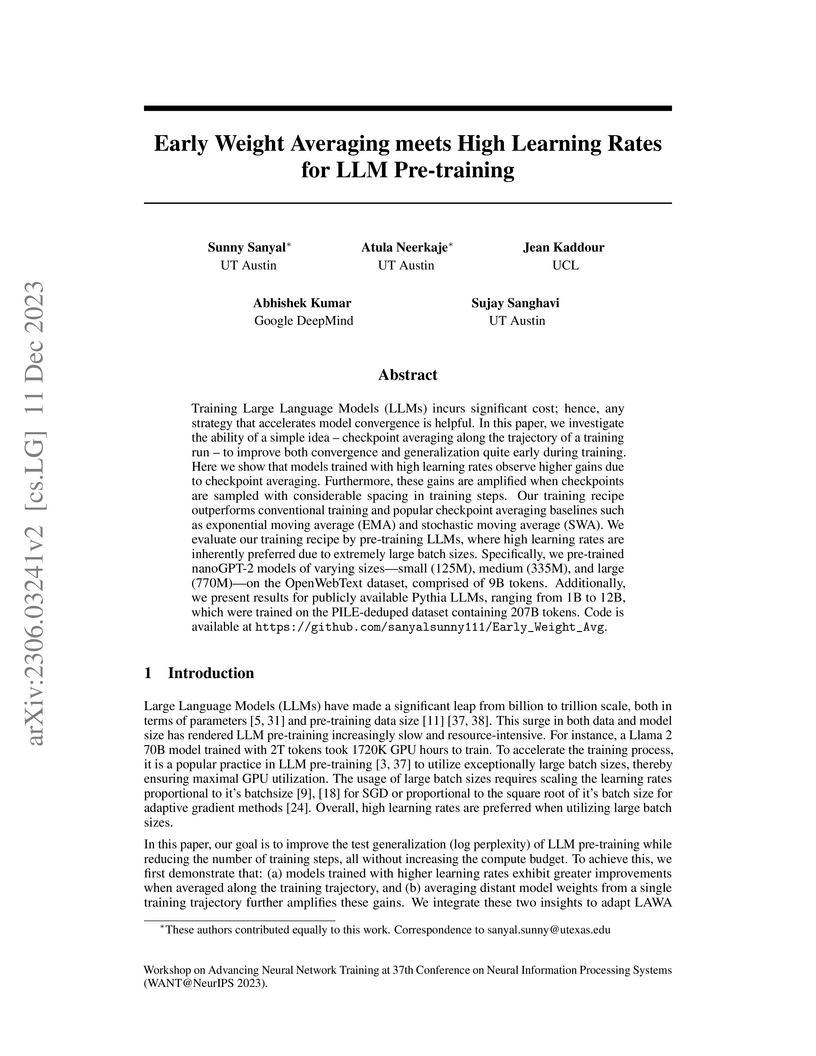

View blogResearchers from UT Austin, UCL, and Google DeepMind adapted Latest Weight Averaging (LAWA) for large language model pre-training, achieving faster convergence and improved generalization by combining high learning rates with strategically averaged, distant model checkpoints. This approach substantially reduces GPU hours and consistently outperforms existing weight averaging methods across various LLM scales.

View blogThis work introduces UncertaintyRAG, a lightweight and unsupervised retrieval model for long-context Retrieval-Augmented Generation (RAG). It leverages Signal-to-Noise Ratio (SNR)-based span uncertainty to estimate semantic similarity between text chunks, enhancing robustness to distribution shifts and achieving state-of-the-art average performance on long-context QA and summarization benchmarks while utilizing only 4% of the training data compared to baseline models.

View blog Harvard University

Harvard University

UC Berkeley

UC Berkeley

KAIST

KAIST

New York University

New York University

Google DeepMind

Google DeepMind

Monash University

Monash University

Anthropic

Anthropic

University of Toronto

University of Toronto

Tel Aviv University

Tel Aviv University

University of Cambridge

University of Cambridge

CUHK

CUHK