Bloomberg LP

The Aya Dataset project introduces the largest human-curated multilingual instruction-following dataset and a comprehensive collection, developed through a global participatory research initiative involving nearly 3,000 collaborators. This effort aims to address the language gap in instruction tuning, enabling more linguistically and culturally diverse Large Language Models.

Detecting and quantifying anomalies in urban traffic is critical for

real-time alerting or re-routing in the short run and urban planning in the

long run. We describe a two-step framework that achieves these two goals in a

robust, fast, online, and unsupervised manner. First, we adapt stable principal

component pursuit to detect anomalies for each road segment. This allows us to

pinpoint traffic anomalies early and precisely in space. Then we group the

road-level anomalies across time and space into meaningful anomaly events using

a simple graph expansion procedure. These events can be easily clustered,

visualized, and analyzed by urban planners. We demonstrate the effectiveness of

our system using 7 weeks of anonymized and aggregated cellular location data in

Dallas-Fort Worth. We suggest potential opportunities for urban planners and

policy makers to use our methodology to make informed changes. These

applications include real-time re-routing of traffic in response to abnormally

high traffic, or identifying candidates for high-impact infrastructure

projects.

11 Oct 2025

Valentin Tissot-Daguette at Bloomberg introduces "occupied processes," augmenting stochastic processes with their occupation flow to create a unified Markovian framework for path-dependent problems. This framework includes a new Itô calculus and Feynman-Kac formulas, enabling the development of a Local Occupied Volatility model that accurately calibrates to vanilla options while capturing complex volatility dynamics.

Charts are an excellent way to convey patterns and trends in data, but they

do not facilitate further modeling of the data or close inspection of

individual data points. We present a fully automated system for extracting the

numerical values of data points from images of scatter plots. We use deep

learning techniques to identify the key components of the chart, and optical

character recognition together with robust regression to map from pixels to the

coordinate system of the chart. We focus on scatter plots with linear scales,

which already have several interesting challenges. Previous work has done fully

automatic extraction for other types of charts, but to our knowledge this is

the first approach that is fully automatic for scatter plots. Our method

performs well, achieving successful data extraction on 89% of the plots in our

test set.

In this paper, we introduce the first and largest Hindi text corpus, named

BHAAV, which means emotions in Hindi, for analyzing emotions that a writer

expresses through his characters in a story, as perceived by a narrator/reader.

The corpus consists of 20,304 sentences collected from 230 different short

stories spanning across 18 genres such as Inspirational and Mystery. Each

sentence has been annotated into one of the five emotion categories - anger,

joy, suspense, sad, and neutral, by three native Hindi speakers with at least

ten years of formal education in Hindi. We also discuss challenges in the

annotation of low resource languages such as Hindi, and discuss the scope of

the proposed corpus along with its possible uses. We also provide a detailed

analysis of the dataset and train strong baseline classifiers reporting their

performances.

Learning how to predict future events from patterns of past events is difficult when the set of possible event types is large. Training an unrestricted neural model might overfit to spurious patterns. To exploit domain-specific knowledge of how past events might affect an event's present probability, we propose using a temporal deductive database to track structured facts over time. Rules serve to prove facts from other facts and from past events. Each fact has a time-varying state---a vector computed by a neural net whose topology is determined by the fact's provenance, including its experience of past events. The possible event types at any time are given by special facts, whose probabilities are neurally modeled alongside their states. In both synthetic and real-world domains, we show that neural probabilistic models derived from concise Datalog programs improve prediction by encoding appropriate domain knowledge in their architecture.

We investigate the approximation of path functionals. In particular, we advocate the use of the Karhunen-Loève expansion, the continuous analogue of Principal Component Analysis, to extract relevant information from the image of a functional. Having accurate estimate of functionals is of paramount importance in the context of exotic derivatives pricing, as presented in the practical applications. Specifically, we show how a simulation-based procedure, which we call the Karhunen-Loève Monte Carlo (KLMC) algorithm, allows fast and efficient computation of the price of path-dependent options. We also explore the path signature as an alternative tool to project both paths and functionals.

Complaining is a basic speech act regularly used in human and computer

mediated communication to express a negative mismatch between reality and

expectations in a particular situation. Automatically identifying complaints in

social media is of utmost importance for organizations or brands to improve the

customer experience or in developing dialogue systems for handling and

responding to complaints. In this paper, we introduce the first systematic

analysis of complaints in computational linguistics. We collect a new annotated

data set of written complaints expressed in English on Twitter.\footnote{Data

and code is available here:

\url{this https URL}} We present an

extensive linguistic analysis of complaining as a speech act in social media

and train strong feature-based and neural models of complaints across nine

domains achieving a predictive performance of up to 79 F1 using distant

supervision.

Hashtags are often employed on social media and beyond to add metadata to a textual utterance with the goal of increasing discoverability, aiding search, or providing additional semantics. However, the semantic content of hashtags is not straightforward to infer as these represent ad-hoc conventions which frequently include multiple words joined together and can include abbreviations and unorthodox spellings. We build a dataset of 12,594 hashtags split into individual segments and propose a set of approaches for hashtag segmentation by framing it as a pairwise ranking problem between candidate segmentations. Our novel neural approaches demonstrate 24.6% error reduction in hashtag segmentation accuracy compared to the current state-of-the-art method. Finally, we demonstrate that a deeper understanding of hashtag semantics obtained through segmentation is useful for downstream applications such as sentiment analysis, for which we achieved a 2.6% increase in average recall on the SemEval 2017 sentiment analysis dataset.

24 Oct 2017

Photographs, taken by field scientists, tourists, automated cameras, and

incidental photographers, are the most abundant source of data on wildlife

today. Wildbook is an autonomous computational system that starts from massive

collections of images and, by detecting various species of animals and

identifying individuals, combined with sophisticated data management, turns

them into high resolution information database, enabling scientific inquiry,

conservation, and citizen science.

We have built Wildbooks for whales (flukebook.org), sharks (whaleshark.org),

two species of zebras (Grevy's and plains), and several others. In January

2016, Wildbook enabled the first ever full species (the endangered Grevy's

zebra) census using photographs taken by ordinary citizens in Kenya. The

resulting numbers are now the official species census used by IUCN Red List:

this http URL In 2016, Wildbook partnered up with

WWF to build Wildbook for Sea Turtles, Internet of Turtles (IoT), as well as

systems for seals and lynx. Most recently, we have demonstrated that we can now

use publicly available social media images to count and track wild animals.

In this paper we present and discuss both the impact and challenges that the

use of crowdsourced images can have on wildlife conservation.

Previous work has found strong links between the choice of social media

images and users' emotions, demographics and personality traits. In this study,

we examine which attributes of profile and posted images are associated with

depression and anxiety of Twitter users. We used a sample of 28,749 Facebook

users to build a language prediction model of survey-reported depression and

anxiety, and validated it on Twitter on a sample of 887 users who had taken

anxiety and depression surveys. We then applied it to a different set of 4,132

Twitter users to impute language-based depression and anxiety labels, and

extracted interpretable features of posted and profile pictures to uncover the

associations with users' depression and anxiety, controlling for demographics.

For depression, we find that profile pictures suppress positive emotions rather

than display more negative emotions, likely because of social media

self-presentation biases. They also tend to show the single face of the user

(rather than show her in groups of friends), marking increased focus on the

self, emblematic for depression. Posted images are dominated by grayscale and

low aesthetic cohesion across a variety of image features. Profile images of

anxious users are similarly marked by grayscale and low aesthetic cohesion, but

less so than those of depressed users. Finally, we show that image features can

be used to predict depression and anxiety, and that multitask learning that

includes a joint modeling of demographics improves prediction performance.

Overall, we find that the image attributes that mark depression and anxiety

offer a rich lens into these conditions largely congruent with the

psychological literature, and that images on Twitter allow inferences about the

mental health status of users.

Visual speech recognition (VSR) is the task of recognizing spoken language from video input only, without any audio. VSR has many applications as an assistive technology, especially if it could be deployed in mobile devices and embedded systems. The need of intensive computational resources and large memory footprint are two of the major obstacles in developing neural network models for VSR in a resource constrained environment. We propose a novel end-to-end deep neural network architecture for word level VSR called MobiVSR with a design parameter that aids in balancing the model's accuracy and parameter count. We use depthwise-separable 3D convolution for the first time in the domain of VSR and show how it makes our model efficient. MobiVSR achieves an accuracy of 73\% on a challenging Lip Reading in the Wild dataset with 6 times fewer parameters and 20 times lesser memory footprint than the current state of the art. MobiVSR can also be compressed to 6 MB by applying post training quantization.

28 Nov 2018

The Third Generation Partnership Project (3GPP) released its first 5G

security specifications in March 2018. This paper reviews the 5G security

architecture, requirements and main processes and evaluates them in the context

of known and new protocol exploits. Although the security has been enhanced

when compared to previous generations to tackle known protocol exploits, our

analysis identifies some potentially unrealistic system assumptions that are

critical for security as well as a number protocol edge cases that could render

5G systems vulnerable to adversarial attacks. For example, null encryption and

null authentication are supported and can be used in valid system

configurations, and certain key security functions are still left outside of

the scope of the specifications. Moreover, the prevention of pre-authentcation

message exploits appears to rely on the implicit assumption of impractical

carrier and roaming agreements and the management of public keys from all

global operators. In parallel, existing threats such as International Mobile

Subscriber Identity (IMSI) catchers are prevented only if the serving network

enforces optional security features and if the UE knows the public key of the

home network operator. The comparison with 4G LTE protocol exploits reveals

that the 5G security specifications, as of Release 15, do not fully address the

user privacy and network availability concerns, where one edge case can

compromise the privacy, security and availability of 5G users and services.

We reduce the task of (span-based) PropBank-style semantic role labeling (SRL) to syntactic dependency parsing. Our approach is motivated by our empirical analysis that shows three common syntactic patterns account for over 98% of the SRL annotations for both English and Chinese data. Based on this observation, we present a conversion scheme that packs SRL annotations into dependency tree representations through joint labels that permit highly accurate recovery back to the original format. This representation allows us to train statistical dependency parsers to tackle SRL and achieve competitive performance with the current state of the art. Our findings show the promise of syntactic dependency trees in encoding semantic role relations within their syntactic domain of locality, and point to potential further integration of syntactic methods into semantic role labeling in the future.

We derive sharp bounds for the prices of VIX futures using the full information of S&P 500 smiles. To that end, we formulate the model-free sub/superreplication of the VIX by trading in the S&P 500 and its vanilla options as well as the forward-starting log-contracts. A dual problem of minimizing/maximizing certain risk-neutral expectations is introduced and shown to yield the same value.

The classical bounds for VIX futures given the smiles only use a calendar spread of log-contracts on the S&P 500. We analyze for which smiles the classical bounds are sharp and how they can be improved when they are not. In particular, we introduce a family of functionally generated portfolios which often improves the classical bounds while still being tractable; more precisely, determined by a single concave/convex function on the line. Numerical experiments on market data and SABR smiles show that the classical lower bound can be improved dramatically, whereas the upper bound is often close to optimal.

06 Sep 2020

This paper presents our submission to the SemEval 2020 - Task 10 on emphasis

selection in written text. We approach this emphasis selection problem as a

sequence labeling task where we represent the underlying text with various

contextual embedding models. We also employ label distribution learning to

account for annotator disagreements. We experiment with the choice of model

architectures, trainability of layers, and different contextual embeddings. Our

best performing architecture is an ensemble of different models, which achieved

an overall matching score of 0.783, placing us 15th out of 31 participating

teams. Lastly, we analyze the results in terms of parts of speech tags,

sentence lengths, and word ordering.

05 May 2022

We provide explicit approximation formulas for VIX futures and options in forward variance models, with particular emphasis on the family of so-called Bergomi models: the one-factor Bergomi model [Bergomi, Smile dynamics II, Risk, 2005], the rough Bergomi model [Bayer, Friz, and Gatheral, Pricing under rough volatility, Quantitative Finance, 16(6):887-904, 2016], and an enhanced version of the rough model that can generate realistic positive skew for VIX smiles -- introduced simultaneously by De Marco [Bachelier World Congress, 2018] and Guyon [Bachelier World Congress, 2018] on the lines of [Bergomi, Smile dynamics III, Risk, 2008], that we refer to as 'mixed rough Bergomi model'. Following the methodology set up in [Gobet and Miri, Weak approximation of averaged diffusion processes. Stochastic Process.\ Appl., 124(1):475-504, 2014], we derive weak approximations for the law of the VIX, leading to option price approximations under the form of explicit combinations of Black-Scholes prices and greeks. As new contributions, we cope with the fractional integration kernel appearing in rough models and treat the case of non-smooth payoffs, so to encompass VIX futures, call and put options. We stress that our approach does not rely on small-time asymptotics nor small-parameter (such as small volatility-of-volatility) asymptotics, and can therefore be applied to any option maturity and a wide range of parameter configurations. Our results are illustrated by several numerical experiments and calibration tests to VIX market data.

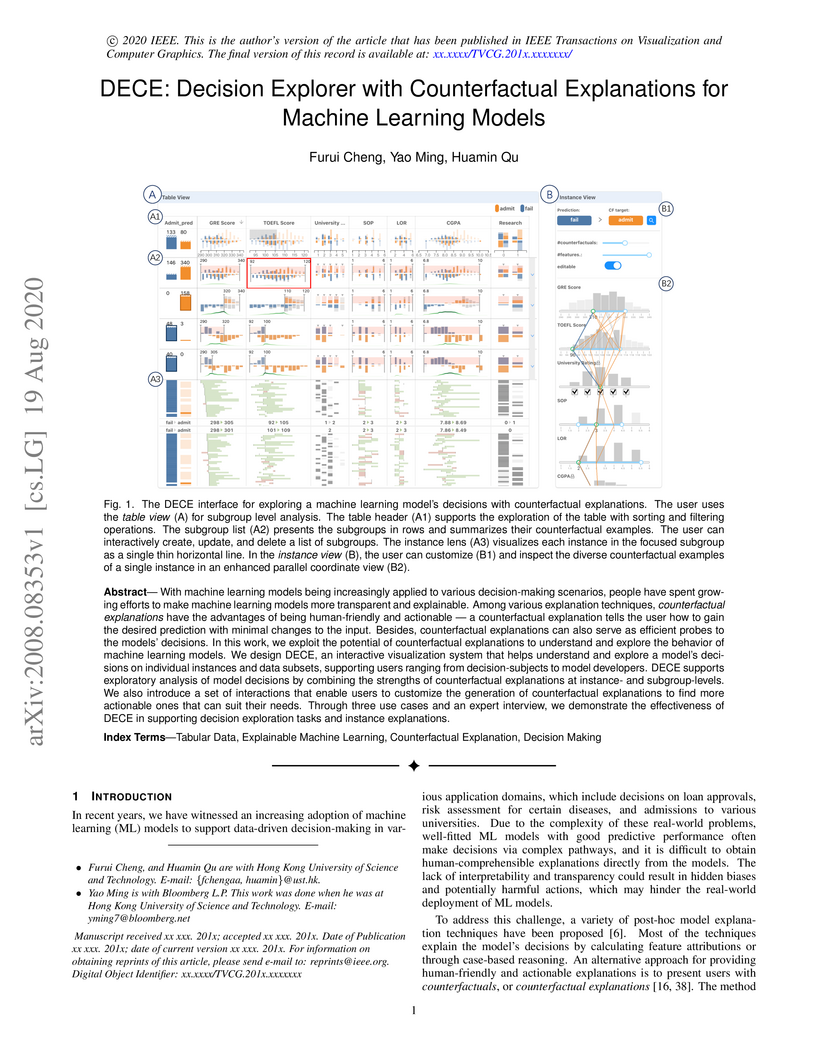

With machine learning models being increasingly applied to various decision-making scenarios, people have spent growing efforts to make machine learning models more transparent and explainable. Among various explanation techniques, counterfactual explanations have the advantages of being human-friendly and actionable -- a counterfactual explanation tells the user how to gain the desired prediction with minimal changes to the input. Besides, counterfactual explanations can also serve as efficient probes to the models' decisions. In this work, we exploit the potential of counterfactual explanations to understand and explore the behavior of machine learning models. We design DECE, an interactive visualization system that helps understand and explore a model's decisions on individual instances and data subsets, supporting users ranging from decision-subjects to model developers. DECE supports exploratory analysis of model decisions by combining the strengths of counterfactual explanations at instance- and subgroup-levels. We also introduce a set of interactions that enable users to customize the generation of counterfactual explanations to find more actionable ones that can suit their needs. Through three use cases and an expert interview, we demonstrate the effectiveness of DECE in supporting decision exploration tasks and instance explanations.

Dialogue act (DA) classification has been studied for the past two decades

and has several key applications such as workflow automation and conversation

analytics. Researchers have used, to address this problem, various traditional

machine learning models, and more recently deep neural network models such as

hierarchical convolutional neural networks (CNNs) and long short-term memory

(LSTM) networks. In this paper, we introduce a new model architecture,

directed-acyclic-graph LSTM (DAG-LSTM) for DA classification. A DAG-LSTM

exploits the turn-taking structure naturally present in a multi-party

conversation, and encodes this relation in its model structure. Using the STAC

corpus, we show that the proposed method performs roughly 0.8% better in

accuracy and 1.2% better in macro-F1 score when compared to existing methods.

The proposed method is generic and not limited to conversation applications.

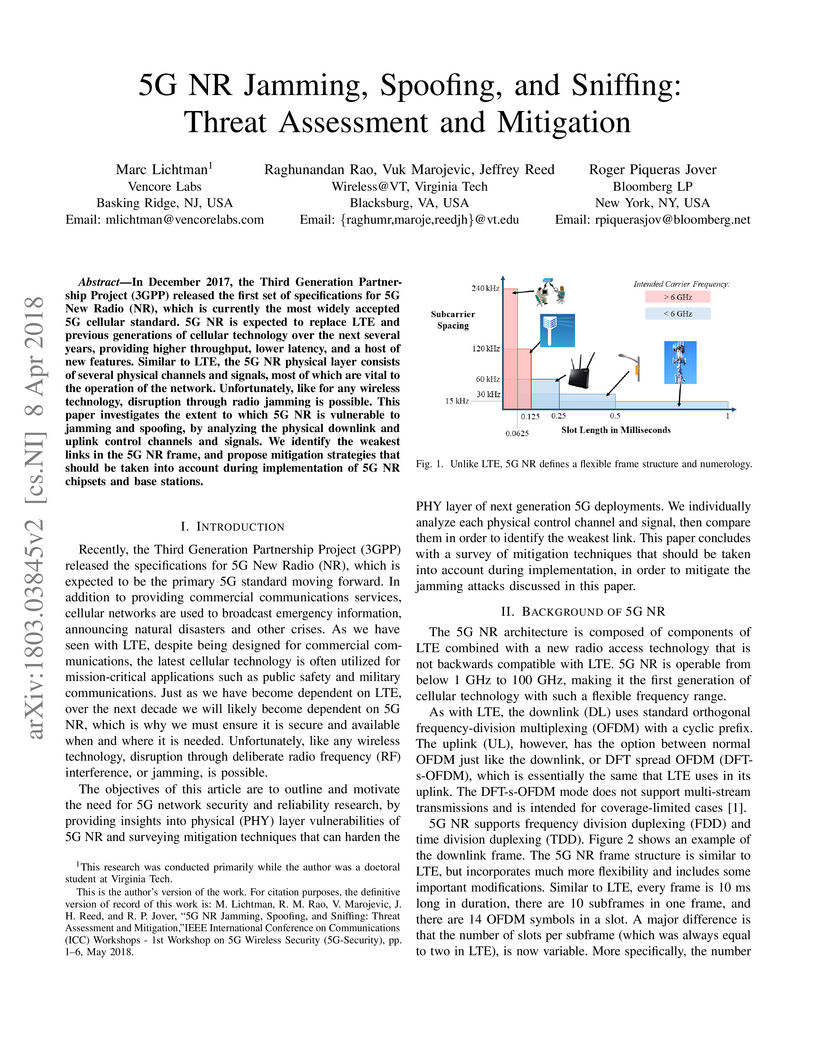

In December 2017, the Third Generation Partnership Project (3GPP) released

the first set of specifications for 5G New Radio (NR), which is currently the

most widely accepted 5G cellular standard. 5G NR is expected to replace LTE and

previous generations of cellular technology over the next several years,

providing higher throughput, lower latency, and a host of new features. Similar

to LTE, the 5G NR physical layer consists of several physical channels and

signals, most of which are vital to the operation of the network.

Unfortunately, like for any wireless technology, disruption through radio

jamming is possible. This paper investigates the extent to which 5G NR is

vulnerable to jamming and spoofing, by analyzing the physical downlink and

uplink control channels and signals. We identify the weakest links in the 5G NR

frame, and propose mitigation strategies that should be taken into account

during implementation of 5G NR chipsets and base stations.

There are no more papers matching your filters at the moment.