CUHK MMLab

Researchers from MMLab, Tsinghua University, Kuaishou Technology, and Shanghai AI Lab developed Flow-GRPO, a framework that integrates online policy gradient reinforcement learning into flow matching models. This method significantly enhances capabilities in compositional image generation, visual text rendering, and human preference alignment, achieving up to 95% GenEval accuracy on SD3.5-M by addressing challenges of determinism and sampling efficiency through an ODE-to-SDE conversion and denoising reduction.

Researchers introduced Video-R1, the first framework to apply a rule-based reinforcement learning paradigm for enhancing temporal reasoning capabilities in Multimodal Large Language Models (MLLMs) for video. Video-R1, leveraging a new temporal-aware RL algorithm and dedicated video reasoning datasets, consistently outperforms previous state-of-the-art models, achieving 37.1% accuracy on VSI-Bench, surpassing GPT-4o's 34.0%.

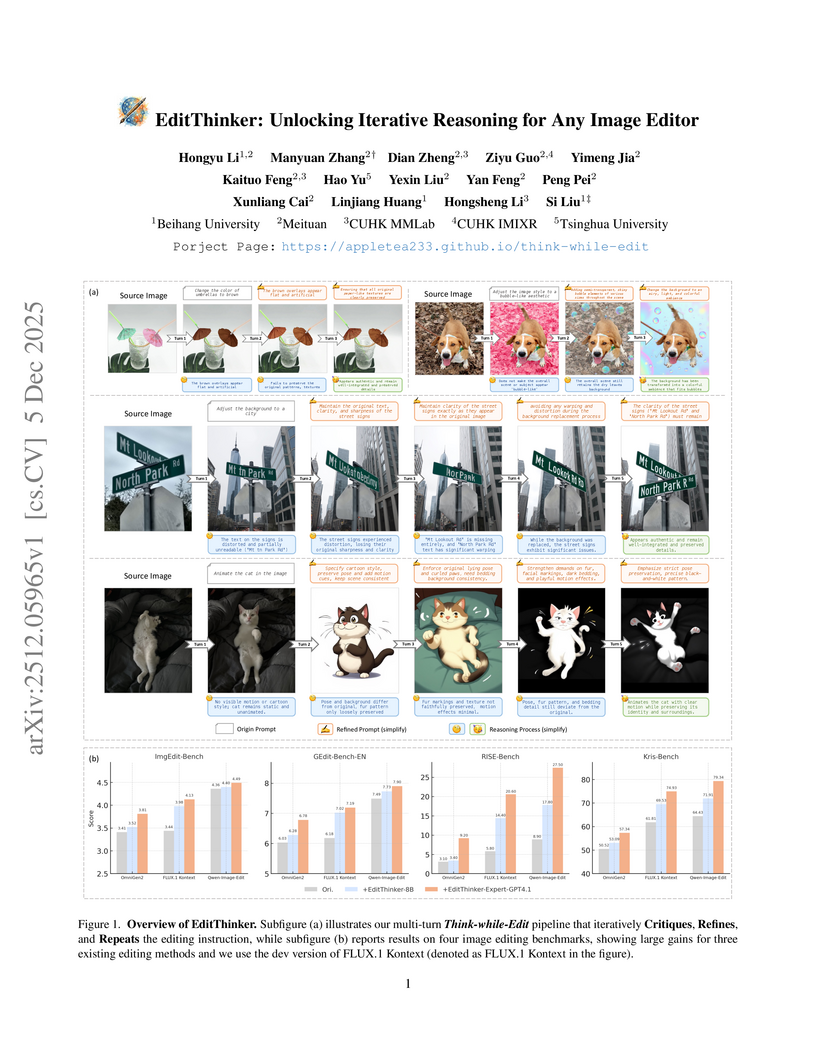

The EditThinker framework enhances instruction-following in any image editor by introducing an iterative reasoning process. It leverages a Multimodal Large Language Model to critique, reflect, and refine editing instructions, leading to consistent performance gains across diverse benchmarks and excelling in complex reasoning tasks.

T2I-R1 introduces a text-to-image generation model that integrates a bi-level Chain-of-Thought process, combining semantic-level planning and token-level generation. Through Reinforcement Learning, the model improves image quality and prompt adherence, particularly for complex and reasoning-intensive prompts, achieving significant gains on T2I-CompBench and the WISE benchmark over its baseline and state-of-the-art diffusion models.

Researchers from Mizzen AI and CUHK MMLab developed HPSv3, a robust human preference score model trained on HPDv3, the first wide-spectrum dataset covering diverse text-to-image models and real images. HPSv3 achieves a Spearman correlation of 0.94 with human judgments and powers Chain-of-Human-Preference (CoHP) for iterative image refinement.

This work introduces VILASR, a Vision-Language Model capable of spatial reasoning by generating and interpreting visual drawing operations directly within its reasoning process. The model, developed by researchers at Institute of Automation, CAS, Ant Group, CUHK MMLab, and Nanjing University, consistently outperforms existing methods by an average of 18.4% across various spatial reasoning benchmarks, including maze navigation and video analysis.

This research presents Tar, a framework that unifies visual understanding and generation within a single autoregressive Multimodal Large Language Model by employing a shared, discrete, and text-aligned visual representation. The approach achieves competitive performance across both understanding and generation benchmarks, demonstrating that a fully discrete semantic representation can match or surpass continuous-token models in understanding while excelling in high-fidelity image generation.

This work uncovers that architectural decoupling in unified multimodal models (UMMs) improves performance by inducing task-specific attention patterns, rather than eliminating task conflicts. Researchers from CUHK MMLab and Meituan introduce an Attention Interaction Alignment (AIA) loss, a regularization technique that guides UMMs' attention toward optimal task-specific behaviors without architectural changes, enhancing both understanding and generation performance for models like Emu3 and Janus-Pro.

Image editing has achieved remarkable progress recently. Modern editing models could already follow complex instructions to manipulate the original content. However, beyond completing the editing instructions, the accompanying physical effects are the key to the generation realism. For example, removing an object should also remove its shadow, reflections, and interactions with nearby objects. Unfortunately, existing models and benchmarks mainly focus on instruction completion but overlook these physical effects. So, at this moment, how far are we from physically realistic image editing? To answer this, we introduce PICABench, which systematically evaluates physical realism across eight sub-dimension (spanning optics, mechanics, and state transitions) for most of the common editing operations (add, remove, attribute change, etc.). We further propose the PICAEval, a reliable evaluation protocol that uses VLM-as-a-judge with per-case, region-level human annotations and questions. Beyond benchmarking, we also explore effective solutions by learning physics from videos and construct a training dataset PICA-100K. After evaluating most of the mainstream models, we observe that physical realism remains a challenging problem with large rooms to explore. We hope that our benchmark and proposed solutions can serve as a foundation for future work moving from naive content editing toward physically consistent realism.

Researchers developed a comprehensive framework for generating and editing structured visuals like charts and diagrams, focusing on factual accuracy over aesthetics. They introduced a large-scale, code-aligned dataset, a rigorous benchmark, and a unified generative model that achieves 55.98% accuracy in structured image editing, highlighting the critical role of explicit reasoning in maintaining factual fidelity.

WMAct introduces a framework for enhancing world model reasoning in LLMs through active, multi-turn interaction, moving away from rigid reasoning structures. The approach achieves superior performance on grid-world tasks like Sokoban (78.57% success), Maze (88.14%), and Taxi (62.16%), outperforming larger proprietary models, and shows improved generalization across diverse reasoning benchmarks.

Researchers from Shanghai AI Lab, Sun Yat-sen University, CUHK MMLab, and Peking University developed Echo-4o, an open-source image generation model that bridges the performance gap with GPT-4o by leveraging a 180K-scale synthetic dataset generated by GPT-4o itself. The model demonstrates superior instruction alignment, imaginative content generation, and multi-reference image composition, setting new benchmarks on challenging evaluation tasks.

Despite the success of deep learning in close-set 3D object detection, existing approaches struggle with zero-shot generalization to novel objects and camera configurations. We introduce DetAny3D, a promptable 3D detection foundation model capable of detecting any novel object under arbitrary camera configurations using only monocular inputs. Training a foundation model for 3D detection is fundamentally constrained by the limited availability of annotated 3D data, which motivates DetAny3D to leverage the rich prior knowledge embedded in extensively pre-trained 2D foundation models to compensate for this scarcity. To effectively transfer 2D knowledge to 3D, DetAny3D incorporates two core modules: the 2D Aggregator, which aligns features from different 2D foundation models, and the 3D Interpreter with Zero-Embedding Mapping, which stabilizes early training in 2D-to-3D knowledge transfer. Experimental results validate the strong generalization of our DetAny3D, which not only achieves state-of-the-art performance on unseen categories and novel camera configurations, but also surpasses most competitors on in-domain data. DetAny3D sheds light on the potential of the 3D foundation model for diverse applications in real-world scenarios, e.g., rare object detection in autonomous driving, and demonstrates promise for further exploration of 3D-centric tasks in open-world settings. More visualization results can be found at our code repository.

Researchers from HKU MMLab, CUHK MMLab, Sensetime, and Beihang University develop GoT-R1, a framework that applies reinforcement learning to Generation Chain-of-Thought reasoning for visual generation, enabling multimodal large language models to autonomously discover effective reasoning strategies beyond predefined templates while achieving state-of-the-art performance on T2I-CompBench through a dual-stage training process that combines supervised fine-tuning with Group Relative Policy Optimization and a comprehensive multi-dimensional reward system evaluating both reasoning chains and generated images.

15 Oct 2025

VR-Thinker, a novel video reward model, utilizes a "Thinking-with-Image" framework and a multi-stage training pipeline to dynamically acquire visual evidence during reasoning. This approach achieves state-of-the-art accuracy on video preference benchmarks with an average increase of up to 11.4% over existing models, demonstrating improved robustness for long videos and complex prompts.

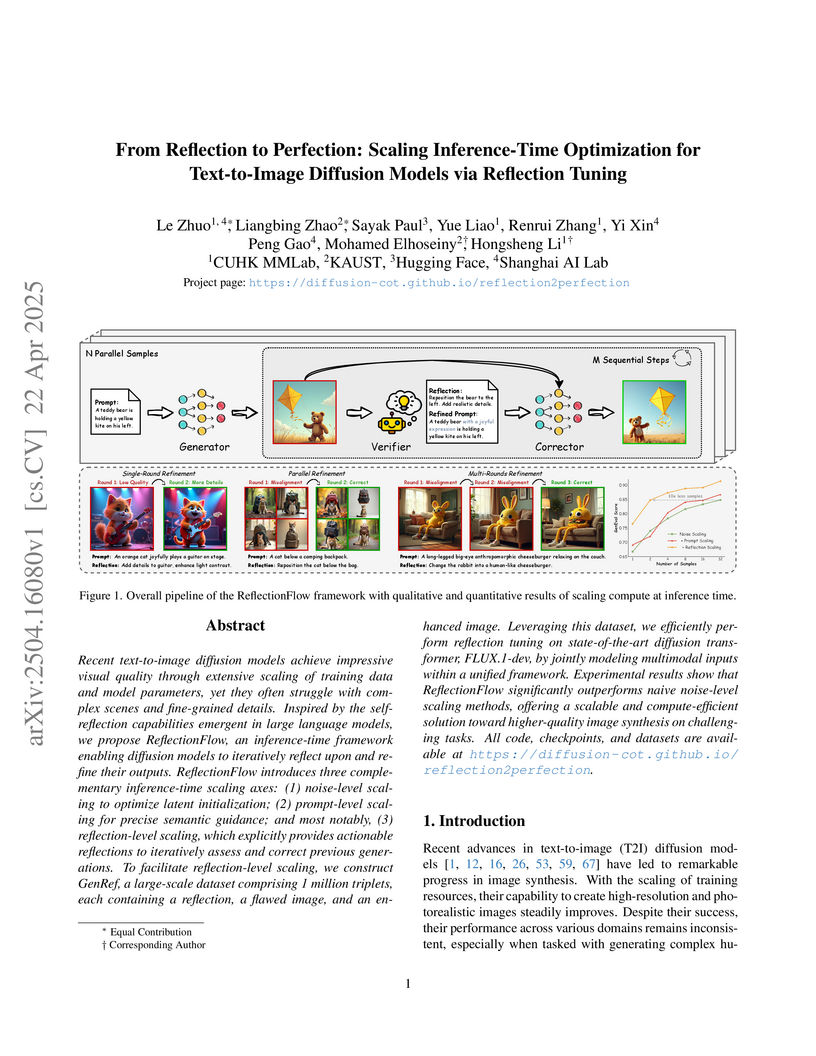

ReflectionFlow introduces an inference-time framework for text-to-image diffusion models, employing iterative self-reflection and textual feedback to refine generated images. This approach improves image generation quality, particularly for complex prompts, and provides interpretable visual reasoning through reflection chains.

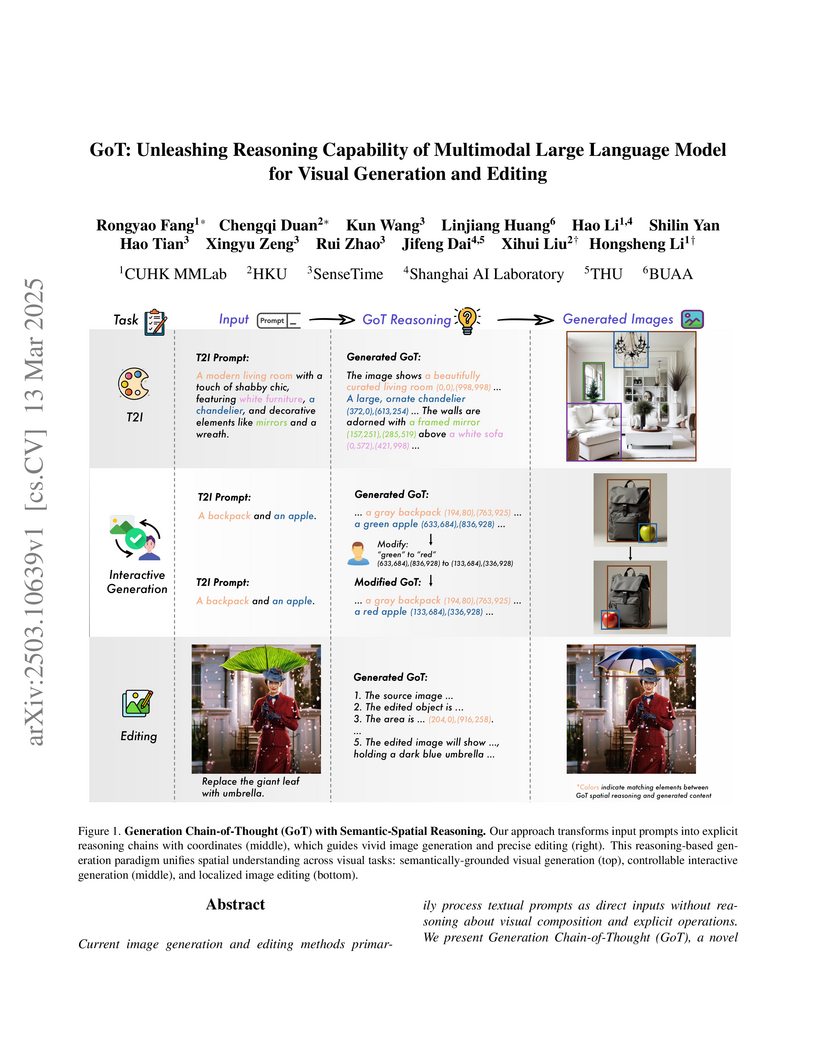

A unified framework integrates reasoning capabilities into visual generation and editing by combining multimodal language models with a semantic-spatial guidance module, demonstrating superior performance on GenEval and Emu-Edit benchmarks while enabling interactive control through explicit reasoning chains.

MATHVERSE, a new benchmark from CUHK MMLab, Shanghai AI Lab, and UCLA, rigorously evaluates Multi-modal Large Language Models' (MLLMs) visual mathematical reasoning by systematically varying information modalities. The benchmark reveals that current MLLMs frequently rely on textual cues over visual diagram interpretation, with some models even showing improved accuracy when visual input is absent.

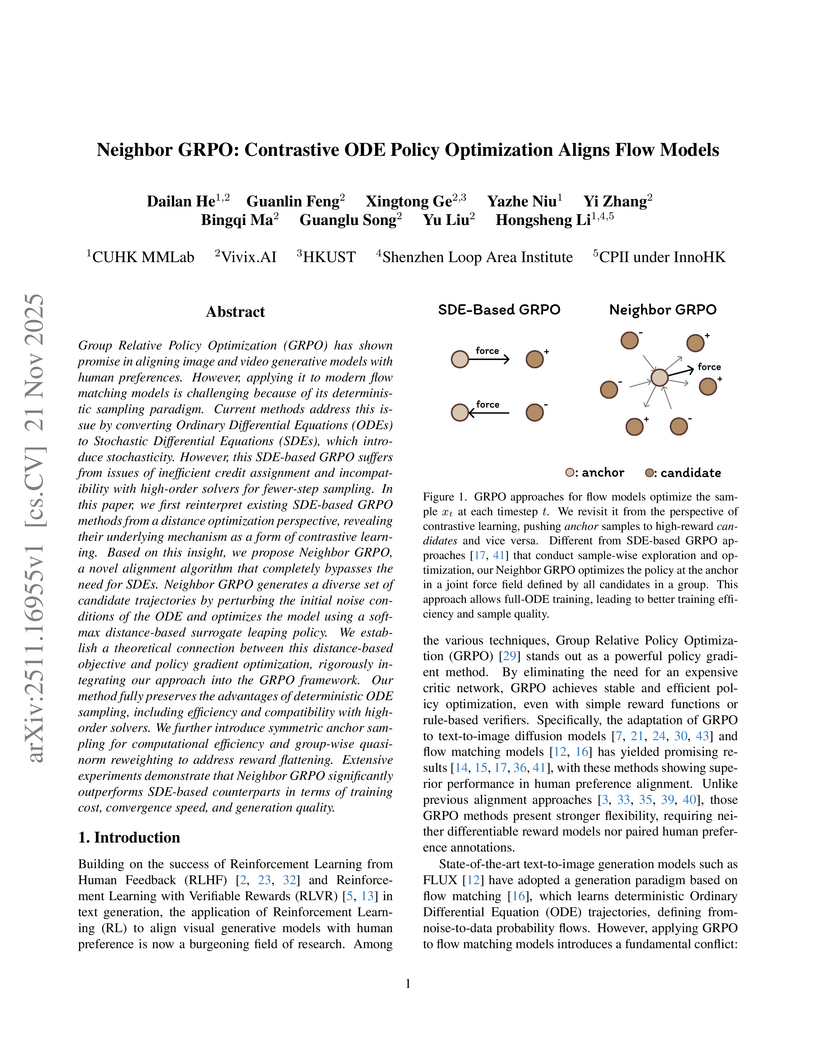

Neighbor GRPO introduces an SDE-free method for aligning flow matching models with human preferences, enabling up to 5x faster training while improving generation quality and robustness against reward hacking. The approach fully leverages efficient high-order ODE solvers for visual generative models.

10 Oct 2025

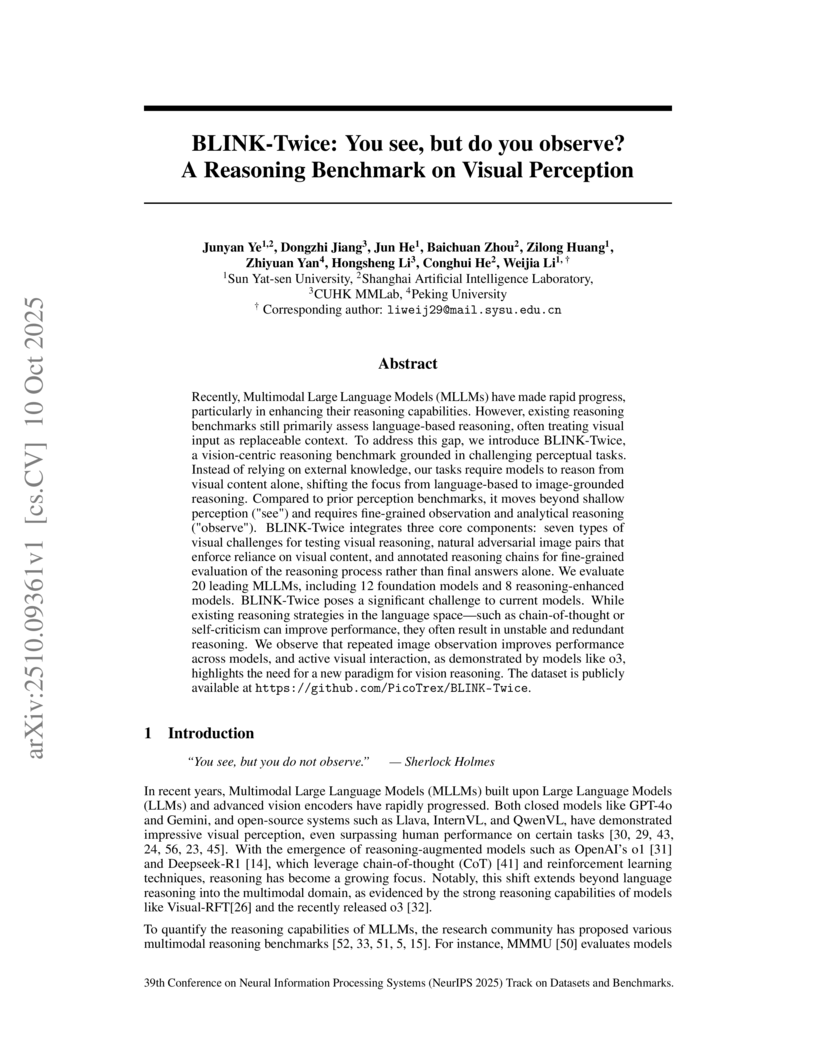

A new benchmark, BLINK-Twice, evaluates Multimodal Large Language Models (MLLMs) on vision-centric reasoning tasks that demand fine-grained observation beyond shallow perception. The evaluation reveals that even state-of-the-art MLLMs struggle with complex visual cues and adversarial samples, indicating a gap in their ability to truly 'observe' visual information.

There are no more papers matching your filters at the moment.