Sensetime

26 May 2025

StepSearch introduces a Step-wise Proximal Policy Optimization (StePPO) framework to improve Large Language Models' (LLMs) multi-hop reasoning and search capabilities through fine-grained, token-level supervision, incorporating information gain and redundancy penalties. This approach yields state-of-the-art performance on various multi-hop question answering benchmarks, showing particular effectiveness for smaller models and robust generalization across diverse knowledge bases.

Researchers from HKU MMLab, CUHK MMLab, Sensetime, and Beihang University develop GoT-R1, a framework that applies reinforcement learning to Generation Chain-of-Thought reasoning for visual generation, enabling multimodal large language models to autonomously discover effective reasoning strategies beyond predefined templates while achieving state-of-the-art performance on T2I-CompBench through a dual-stage training process that combines supervised fine-tuning with Group Relative Policy Optimization and a comprehensive multi-dimensional reward system evaluating both reasoning chains and generated images.

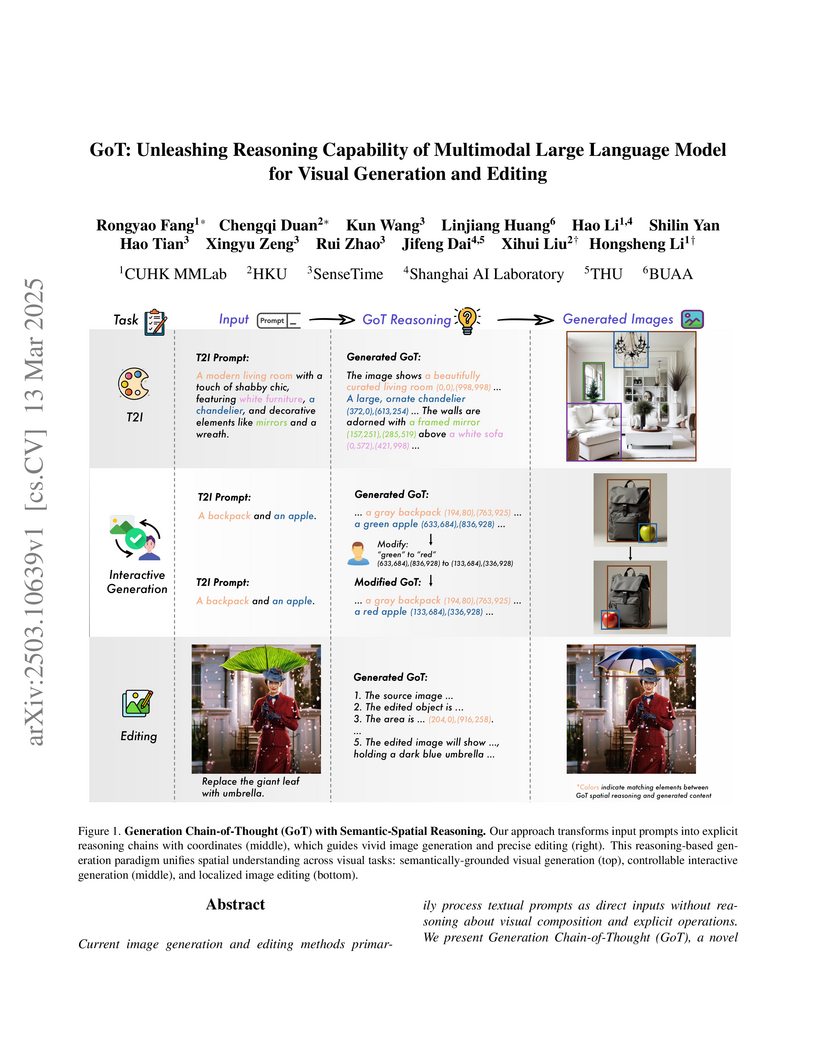

A unified framework integrates reasoning capabilities into visual generation and editing by combining multimodal language models with a semantic-spatial guidance module, demonstrating superior performance on GenEval and Emu-Edit benchmarks while enabling interactive control through explicit reasoning chains.

The MATH-Vision (MATH-V) dataset provides a new benchmark for evaluating multimodal mathematical reasoning in LMMs, revealing a substantial performance gap between leading models (GPT-4V achieving 22.76%) and human performance (75.66%) on diverse, competition-derived problems. This highlights current LMM limitations primarily in reasoning and vision recognition, rather than basic calculation or knowledge.

17 Sep 2025

MOOM, a dual-branch memory system, integrates literary theory to manage long-term memory in ultra-long role-playing dialogues for Large Language Models. It extracts plot development and character portrayal while employing a competition-inhibition forgetting mechanism, outperforming state-of-the-art methods in memory accuracy and efficiency, and utilizing fewer computational resources.

UniAD presents a single, unified framework for multi-class anomaly detection that effectively prevents models from learning trivial identity mappings. It achieved a mean AUROC of 96.5% for anomaly detection and 96.8% for localization on MVTec-AD, outperforming previous state-of-the-art methods by significant margins while maintaining performance across diverse object categories.

Researchers at SenseTime and NTU investigated the impact of data and model scaling for expressive human pose and shape estimation (EHPS), creating generalist foundation models like SMPLest-X. These models, trained on up to 40 diverse datasets and 10 million instances, significantly reduced whole-body mean primary errors from over 110 mm to below 60 mm and hand primary errors from over 62 mm to 31 mm, establishing new state-of-the-art performance and strong generalization across benchmarks.

MotionDiffuse introduces the first framework to apply Denoising Diffusion Probabilistic Models (DDPMs) for text-driven human motion generation. The model achieves state-of-the-art performance in synthesizing high-fidelity, diverse, and semantically relevant motions, while also offering novel fine-grained control over specific body parts and temporal action sequences.

InCharacter: Evaluating Personality Fidelity in Role-Playing Agents through Psychological Interviews

InCharacter: Evaluating Personality Fidelity in Role-Playing Agents through Psychological Interviews

INCHARACTER introduces an interview-based methodology for evaluating the personality fidelity of Role-Playing Agents (RPAs), adapting psychological assessment techniques for AI. This approach demonstrates that state-of-the-art RPAs can reproduce complex character personalities with up to 80.7% accuracy on scales like 16Personalities, offering a more robust and consistent evaluation than self-report methods.

This research provides a practical evaluation of KV cache compression techniques for Large Language Model serving, revealing that reported memory savings often do not translate to real-world throughput gains due to increased output length and compatibility issues with optimized serving frameworks. The work proposes tools to predict throughput, output length, and identify inputs prone to quality degradation, bridging the gap between academic research and production deployment.

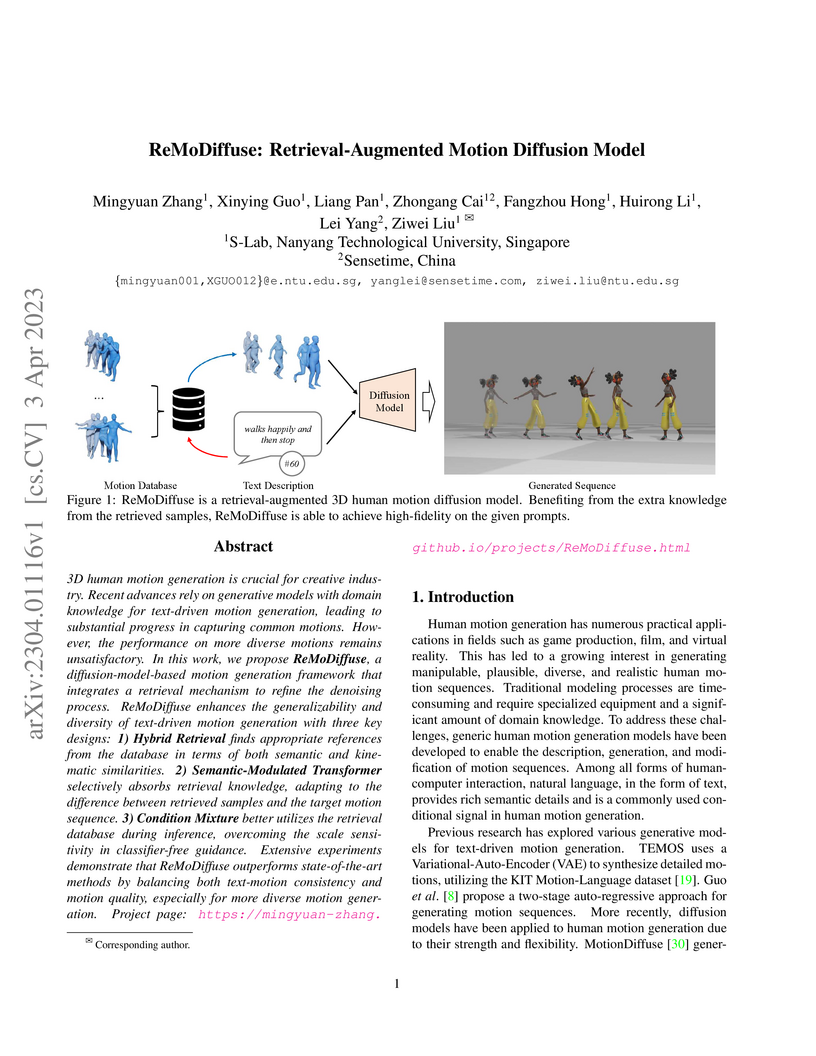

3D human motion generation is crucial for creative industry. Recent advances

rely on generative models with domain knowledge for text-driven motion

generation, leading to substantial progress in capturing common motions.

However, the performance on more diverse motions remains unsatisfactory. In

this work, we propose ReMoDiffuse, a diffusion-model-based motion generation

framework that integrates a retrieval mechanism to refine the denoising

process. ReMoDiffuse enhances the generalizability and diversity of text-driven

motion generation with three key designs: 1) Hybrid Retrieval finds appropriate

references from the database in terms of both semantic and kinematic

similarities. 2) Semantic-Modulated Transformer selectively absorbs retrieval

knowledge, adapting to the difference between retrieved samples and the target

motion sequence. 3) Condition Mixture better utilizes the retrieval database

during inference, overcoming the scale sensitivity in classifier-free guidance.

Extensive experiments demonstrate that ReMoDiffuse outperforms state-of-the-art

methods by balancing both text-motion consistency and motion quality,

especially for more diverse motion generation.

Researchers from CUHK MMLab, HKU MMLab, SenseTime, Shanghai AI Laboratory, and Tsinghua University developed PUMA, a unified Multimodal Large Language Model that handles multi-granular visual generation. PUMA addresses the diversity-controllability trade-off in visual generation by processing and generating visual features at multiple scales, achieving superior image reconstruction and competitive performance across text-to-image, image editing, and understanding tasks compared to existing models.

26 May 2025

The ARISE framework enhances Large Language Model reasoning in complex, knowledge-intensive tasks by integrating dynamic Retrieval-Augmented Generation with Monte Carlo Tree Search guided by Bayesian risk assessment. It consistently outperforms state-of-the-art knowledge-augmented reasoning methods, showing up to 23.10% accuracy (EM) improvement over vanilla RAG on challenging multi-hop question answering benchmarks.

The exploration and application of Large Language Models (LLMs) is thriving. To reduce deployment costs, continuous batching has become an essential feature in current service frameworks. The effectiveness of continuous batching relies on an accurate estimate of the memory requirements of requests. However, due to the diversity in request output lengths, existing frameworks tend to adopt aggressive or conservative schedulers, which often result in significant overestimation or underestimation of memory consumption. Consequently, they suffer from harmful request evictions or prolonged queuing times, failing to achieve satisfactory throughput under strict Service Level Agreement (SLA) guarantees (a.k.a. goodput), across various LLM application scenarios with differing input-output length distributions. To address this issue, we propose a novel Past-Future scheduler that precisely estimates the peak memory resources required by the running batch via considering the historical distribution of request output lengths and calculating memory occupancy at each future time point. It adapts to applications with all types of input-output length distributions, balancing the trade-off between request queuing and harmful evictions, thereby consistently achieving better goodput. Furthermore, to validate the effectiveness of the proposed scheduler, we developed a high-performance LLM serving framework, LightLLM, that implements the Past-Future scheduler. Compared to existing aggressive or conservative schedulers, LightLLM demonstrates superior goodput, achieving up to 2-3× higher goodput than other schedulers under heavy loads. LightLLM is open source to boost the research in such direction (this https URL).

Hi-Drive introduces a hierarchical POMDP planning framework that jointly models and infers high-level behavioral intentions and low-level driving styles of other road users in urban environments. This model-based approach demonstrates superior safety with a 0% collision rate on challenging long-term datasets and competitive real-time performance on benchmarks like nuPlan without requiring extensive training.

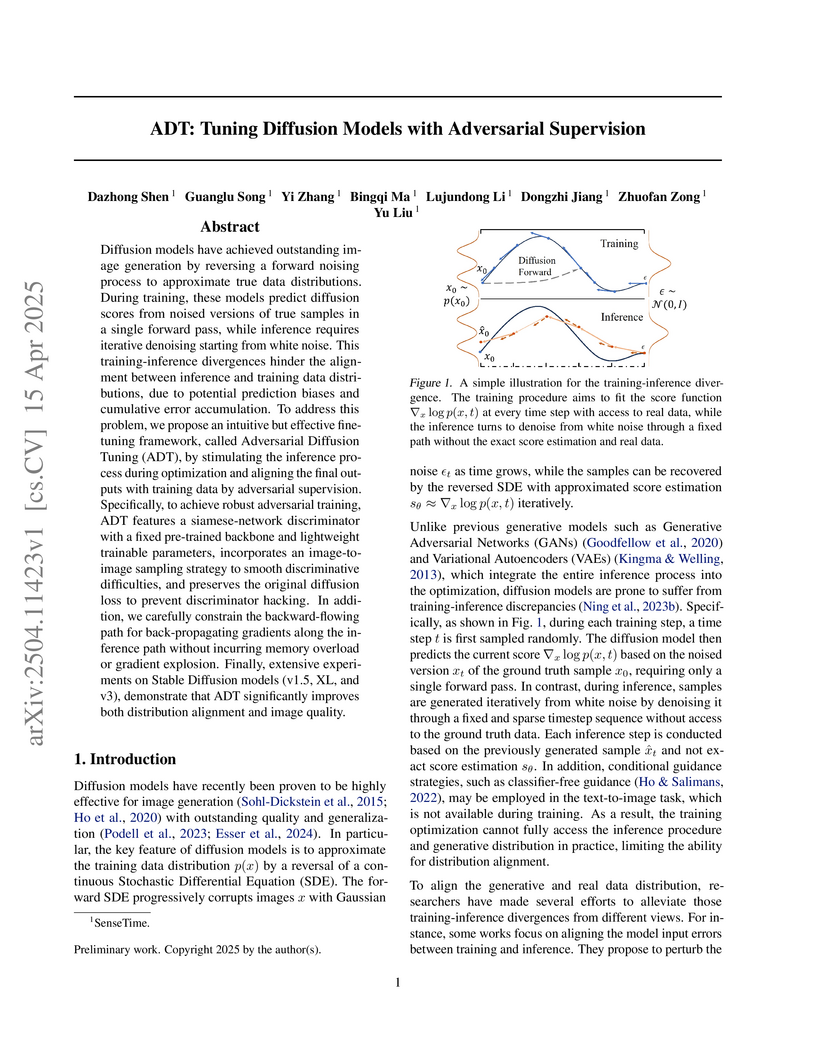

Researchers at SenseTime developed Adversarial Diffusion Tuning (ADT), a unified fine-tuning framework that addresses the training-inference discrepancy in diffusion models by simulating the inference process during training and employing adversarial supervision. This approach leads to higher-quality image generation, demonstrated by improvements in metrics such as FID, HPSv2, and Aesthetics scores across various model architectures.

Sparsity in Deep Neural Networks (DNNs) has been widely studied to compress and accelerate the models on resource-constrained environments. It can be generally categorized into unstructured fine-grained sparsity that zeroes out multiple individual weights distributed across the neural network, and structured coarse-grained sparsity which prunes blocks of sub-networks of a neural network. Fine-grained sparsity can achieve a high compression ratio but is not hardware friendly and hence receives limited speed gains. On the other hand, coarse-grained sparsity cannot concurrently achieve both apparent acceleration on modern GPUs and decent performance. In this paper, we are the first to study training from scratch an N:M fine-grained structured sparse network, which can maintain the advantages of both unstructured fine-grained sparsity and structured coarse-grained sparsity simultaneously on specifically designed GPUs. Specifically, a 2:4 sparse network could achieve 2x speed-up without performance drop on Nvidia A100 GPUs. Furthermore, we propose a novel and effective ingredient, sparse-refined straight-through estimator (SR-STE), to alleviate the negative influence of the approximated gradients computed by vanilla STE during optimization. We also define a metric, Sparse Architecture Divergence (SAD), to measure the sparse network's topology change during the training process. Finally, We justify SR-STE's advantages with SAD and demonstrate the effectiveness of SR-STE by performing comprehensive experiments on various tasks. Source codes and models are available at this https URL.

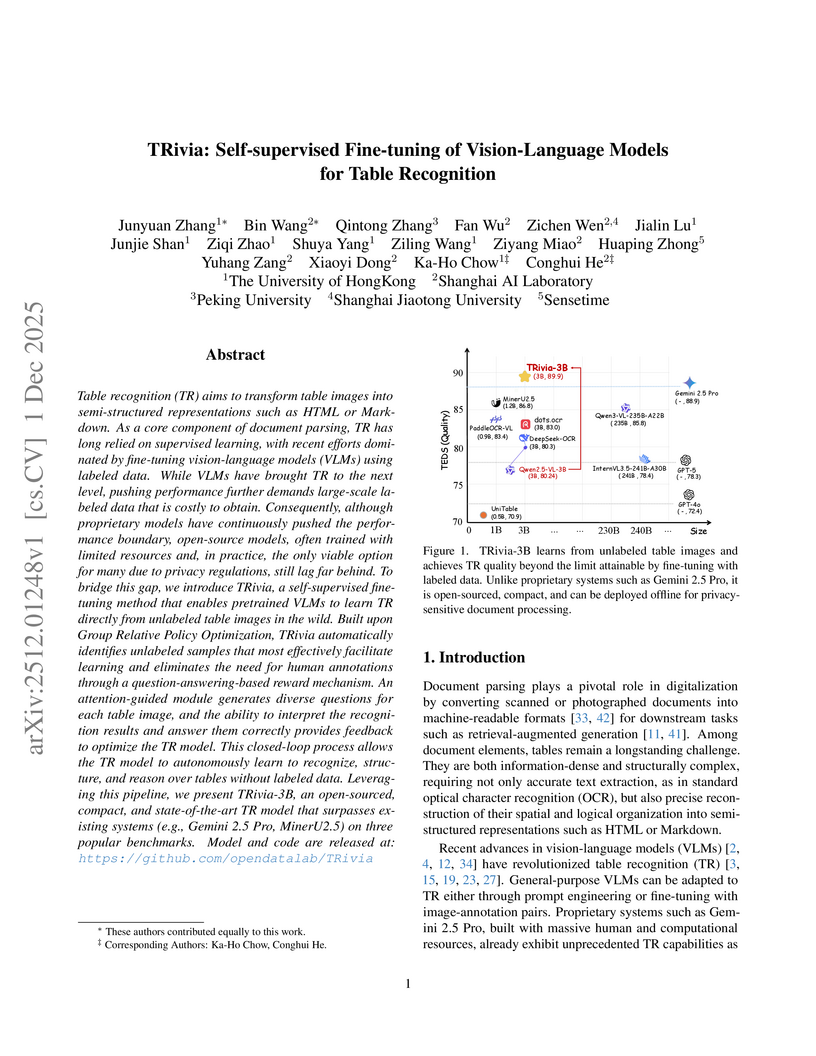

Table recognition (TR) aims to transform table images into semi-structured representations such as HTML or Markdown. As a core component of document parsing, TR has long relied on supervised learning, with recent efforts dominated by fine-tuning vision-language models (VLMs) using labeled data. While VLMs have brought TR to the next level, pushing performance further demands large-scale labeled data that is costly to obtain. Consequently, although proprietary models have continuously pushed the performance boundary, open-source models, often trained with limited resources and, in practice, the only viable option for many due to privacy regulations, still lag far behind. To bridge this gap, we introduce TRivia, a self-supervised fine-tuning method that enables pretrained VLMs to learn TR directly from unlabeled table images in the wild. Built upon Group Relative Policy Optimization, TRivia automatically identifies unlabeled samples that most effectively facilitate learning and eliminates the need for human annotations through a question-answering-based reward mechanism. An attention-guided module generates diverse questions for each table image, and the ability to interpret the recognition results and answer them correctly provides feedback to optimize the TR model. This closed-loop process allows the TR model to autonomously learn to recognize, structure, and reason over tables without labeled data. Leveraging this pipeline, we present TRivia-3B, an open-sourced, compact, and state-of-the-art TR model that surpasses existing systems (e.g., Gemini 2.5 Pro, MinerU2.5) on three popular benchmarks. Model and code are released at: this https URL

Optimizing a text-to-image diffusion model with a given reward function is an important but underexplored research area. In this study, we propose Deep Reward Tuning (DRTune), an algorithm that directly supervises the final output image of a text-to-image diffusion model and back-propagates through the iterative sampling process to the input noise. We find that training earlier steps in the sampling process is crucial for low-level rewards, and deep supervision can be achieved efficiently and effectively by stopping the gradient of the denoising network input. DRTune is extensively evaluated on various reward models. It consistently outperforms other algorithms, particularly for low-level control signals, where all shallow supervision methods fail. Additionally, we fine-tune Stable Diffusion XL 1.0 (SDXL 1.0) model via DRTune to optimize Human Preference Score v2.1, resulting in the Favorable Diffusion XL 1.0 (FDXL 1.0) model. FDXL 1.0 significantly enhances image quality compared to SDXL 1.0 and reaches comparable quality compared with Midjourney v5.2.

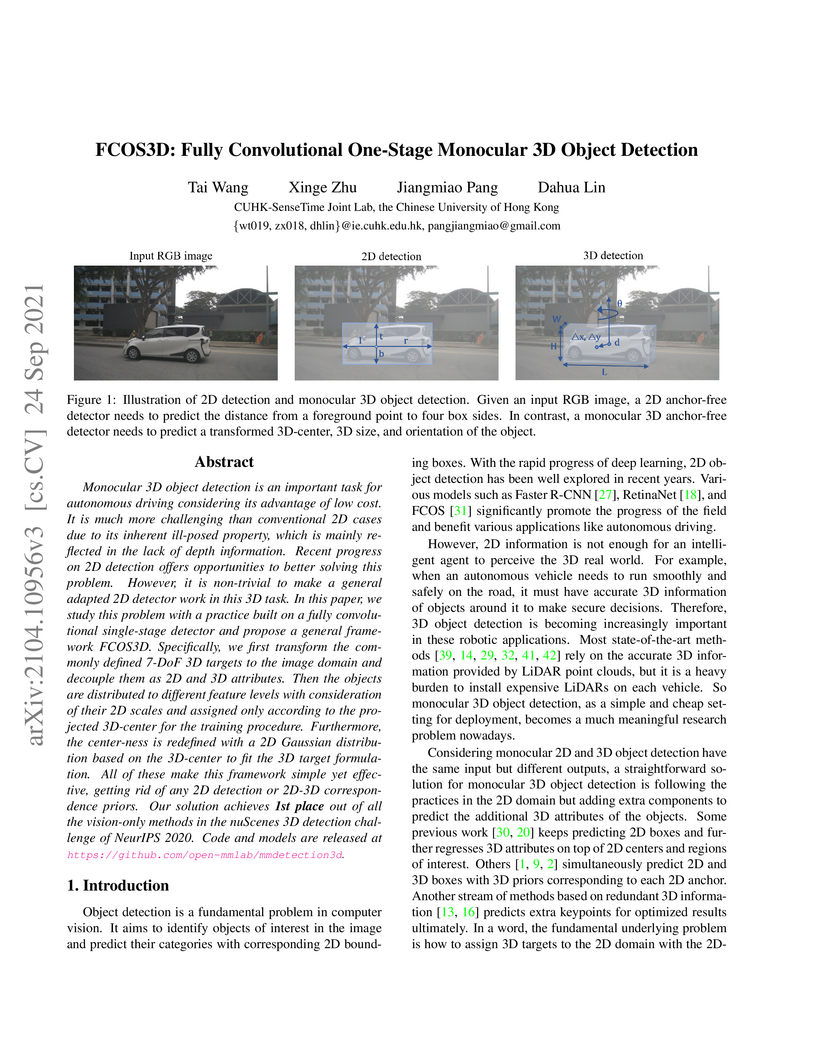

Researchers from the CUHK-SenseTime Joint Lab developed FCOS3D, which adapts a 2D anchor-free detector to monocular 3D object detection, inferring 3D properties solely from RGB images. The framework achieved first place among all vision-only methods on the nuScenes benchmark with an mAP of 0.358 and an NDS of 0.428, offering a cost-effective solution for 3D perception.

There are no more papers matching your filters at the moment.