Carleton College

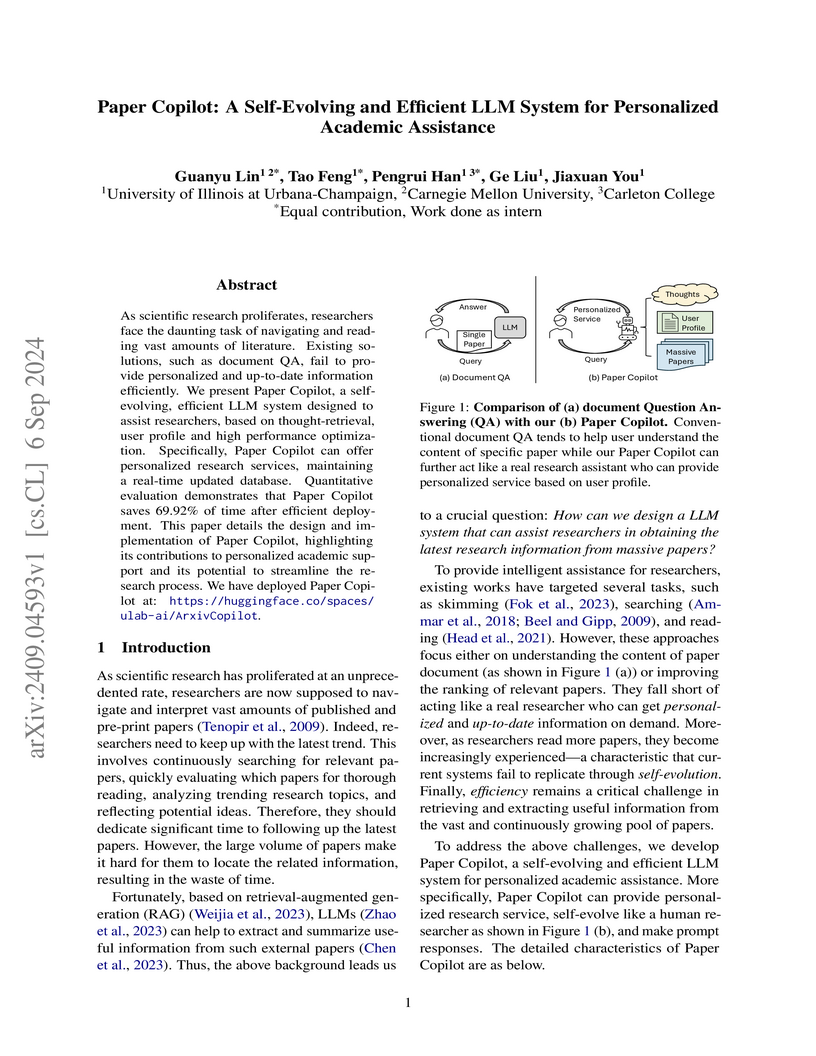

Paper Copilot, developed by researchers at the University of Illinois at Urbana-Champaign, is a self-evolving and efficient LLM system that offers personalized academic assistance by generating user profiles, identifying personalized trends and ideas, and providing advisory chat. Optimizations such as feature pre-computation reduce its response time by 69.92%, and its responses based on 'thought retrieval' show improved user preference.

University of Amsterdam; California Institute of Technology; The University of Texas at Austin; Tata Institute of Fundamental Research; NASA Goddard Space Flight Center; University of Pennsylvania; University of Colorado; Amherst College; Macquarie University; Jet Propulsion Laboratory; Harvard-Smithsonian Center for Astrophysics; Carleton College; The University of Arizona; University of Wyoming; Carnegie Institution for Science; U.S. National Science Foundation National Optical-Infrared Astronomy Research Laboratory; The University of California, Irvine; Penn State; Schmidt Sciences; Carnegie Science; Randolph-Macon College

We present results from a systematic search for transiting short-period Giant Exoplanets around M-dwarf Stars (GEMS; $P < 10$ days, $R_p \gtrsim 8\,R_\oplus$) within a distance-limited 100 pc sample of 149,316 M-dwarfs using TESS-Gaia Light Curve (TGLC) data. This search led to the discovery of one new candidate GEM; we also spectroscopically vetted 12 additional candidates to eliminate astrophysical false positives and refine our occurrence-rate estimates. We describe the development and application of the `TESS-miner` package and the associated vetting procedures used in this analysis. To assess detection completeness, we conducted ~72 million injection-recovery tests across ~26,000 stars with an average of ~3 sectors of data per star, subdivided into early-type (M0–M2.5), mid-type (M2.5–M4), and late-type (M4 or later) M-dwarfs. Our pipeline demonstrates high sensitivity across all M-dwarf subtypes within the injection bounds.

We estimate the occurrence rates of short-period GEMS as a function of stellar mass, combine our measured rates with those derived for FGK stars, and fit an exponential trend with stellar mass that is consistent with core-accretion theory predictions. We find GEMS occurrence rates of 0.067% ± 0.047% for early-type M-dwarfs, 0.139% ± 0.069% for mid-type, and 0.032% ± 0.032% for late-type M-dwarfs, with a mean rate of $0.065^{+0.025}_{-0.027}\%$ across the full M-dwarf sample. Note that while our search spanned $1.0 < P < 10.0$ days, these occurrence rates were calculated using planets with $1.0 < P < 5.0$ days. This work lays the foundation for future occurrence-rate investigations of GEMS.
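As a rough illustration of how injection-recovery completeness can feed into an occurrence rate, the sketch below uses the common inverse-detection-efficiency estimate: the number of detected planets divided by an effective number of searched stars, where each star is weighted by its recovery fraction and its geometric transit probability $R_\star/a$. This is an assumption about the general technique, not the authors' exact estimator; the function names and rounded constants are chosen for illustration.

```python
import math

# Physical constants (SI), rounded; chosen for illustration.
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg
R_SUN = 6.957e8    # solar radius, m
DAY = 86400.0      # seconds per day


def semimajor_axis_m(period_days: float, mstar_msun: float) -> float:
    """Kepler's third law, a = (G M P^2 / 4 pi^2)^(1/3), neglecting the planet mass."""
    period_s = period_days * DAY
    return (G * mstar_msun * M_SUN * period_s**2 / (4 * math.pi**2)) ** (1 / 3)


def transit_probability(period_days: float, mstar_msun: float, rstar_rsun: float) -> float:
    """Geometric transit probability for a circular orbit, approximated as R_*/a."""
    return rstar_rsun * R_SUN / semimajor_axis_m(period_days, mstar_msun)


def occurrence_rate(n_detected: int, stars: list[tuple[float, float]]) -> float:
    """Inverse-detection-efficiency occurrence rate.

    `stars` holds one (completeness, transit_probability) pair per searched star,
    where completeness is that star's injection-recovery fraction. Their weighted
    sum is the effective number of stars in which such a planet could have been found.
    """
    n_eff = sum(completeness * p_tr for completeness, p_tr in stars)
    if n_eff <= 0:
        raise ValueError("effective number of searched stars must be positive")
    return n_detected / n_eff
```

Weighting each star individually (rather than using one sample-wide completeness) lets stars with more TESS sectors, and therefore higher recovery fractions, count for more in the denominator.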

ETH Zurich; University of Waterloo; Université de Montréal; Sichuan University; Beihang University; City University of Hong Kong; University of Alberta; The University of Adelaide; University of Sheffield; KAUST; HKUST; Dartmouth College; Carleton College; China University of Geosciences Beijing; ICT, Chinese Academy of Sciences

Interactive Natural Language Processing (iNLP) has emerged as a novel paradigm within the field of NLP, aimed at addressing limitations in existing frameworks while aligning with the ultimate goals of artificial intelligence. This paradigm considers language models as agents capable of observing, acting, and receiving feedback iteratively from external entities. Specifically, language models in this context can: (1) interact with humans for better understanding and addressing user needs, personalizing responses, aligning with human values, and improving the overall user experience; (2) interact with knowledge bases for enriching language representations with factual knowledge, enhancing the contextual relevance of responses, and dynamically leveraging external information to generate more accurate and informed responses; (3) interact with models and tools for effectively decomposing and addressing complex tasks, leveraging specialized expertise for specific subtasks, and fostering the simulation of social behaviors; and (4) interact with environments for learning grounded representations of language and effectively tackling embodied tasks such as reasoning, planning, and decision-making in response to environmental observations. This paper offers a comprehensive survey of iNLP, starting by proposing a unified definition and framework of the concept. We then provide a systematic classification of iNLP, dissecting its various components, including interactive objects, interaction interfaces, and interaction methods. We proceed to delve into the evaluation methodologies used in the field, explore its diverse applications, scrutinize its ethical and safety issues, and discuss prospective research directions. This survey serves as an entry point for researchers who are interested in this rapidly evolving area and offers a broad view of the current landscape and future trajectory of iNLP.

Legal documents pose unique challenges for text classification due to their domain-specific language and often limited labeled data. This paper proposes a hybrid approach for classifying legal texts by combining unsupervised topic and graph embeddings with a supervised model. We employ Top2Vec to learn semantic document embeddings and automatically discover latent topics, and Node2Vec to capture structural relationships via a bipartite graph of legal documents. The embeddings are combined and clustered using KMeans, yielding coherent groupings of documents. Our computations on a legal document dataset demonstrate that the combined Top2Vec+Node2Vec approach improves clustering quality over text-only or graph-only embeddings. We conduct a sensitivity analysis of hyperparameters, such as the number of clusters and the dimensionality of the embeddings, and demonstrate that our method achieves competitive performance against baseline Latent Dirichlet Allocation (LDA) and Non-Negative Matrix Factorization (NMF) models. Key findings indicate that while the pipeline presents an innovative approach to unsupervised legal document analysis by combining semantic topic modeling with graph embedding techniques, its efficacy is contingent upon the quality of initial topic generation and the representational power of the chosen embedding models for specialized legal language. Strategic recommendations include the exploration of domain-specific embeddings, more comprehensive hyperparameter tuning for Node2Vec, dynamic determination of cluster numbers, and robust human-in-the-loop validation processes to enhance legal relevance and trustworthiness. The pipeline demonstrates potential for exploratory legal data analysis and as a precursor to supervised learning tasks but requires further refinement and domain-specific adaptation for practical legal applications.
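The fusion-and-clustering step can be pictured with a small sketch: each document's text (topic) embedding and graph embedding are L2-normalized so that neither dominates by scale, concatenated with an optional weight, and clustered with Lloyd's k-means. The vectors are toy stand-ins for Top2Vec and Node2Vec outputs; the `graph_weight` parameter and the deterministic farthest-first initialization are illustrative assumptions, not details from the paper.

```python
import math


def l2_normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def fuse(text_vec: list[float], graph_vec: list[float],
         graph_weight: float = 1.0) -> list[float]:
    """Concatenate a normalized text embedding with a weighted, normalized graph embedding."""
    return l2_normalize(text_vec) + [graph_weight * x for x in l2_normalize(graph_vec)]


def sq_dist(a: list[float], b: list[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: list[list[float]], k: int, iters: int = 50) -> list[int]:
    """Lloyd's k-means with deterministic farthest-first seeding; returns one label per point."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from every centroid chosen so far.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    labels: list[int] = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels
```

Changing `graph_weight` is one way to probe the text-only versus graph-only comparison described above: a weight of 0 reduces the fused vectors to the text embeddings alone.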

The rise of large language models (LLMs) offers new opportunities for automatic error detection in education, particularly for math word problems (MWPs). While prior studies demonstrate the promise of LLMs as error detectors, they overlook the presence of multiple valid solutions for a single MWP. Our preliminary analysis reveals a significant performance gap between conventional and alternative solutions in MWPs, a phenomenon we term conformity bias in this work. To mitigate this bias, we introduce the Ask-Before-Detect (AskBD) framework, which generates adaptive reference solutions using LLMs to enhance error detection. Experiments on 200 examples of GSM8K show that AskBD effectively mitigates bias and improves performance, especially when combined with reasoning-enhancing techniques like chain-of-thought prompting.
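The two-stage idea can be sketched as a small prompting pipeline: first ask the model for a reference solution that follows the student's own approach, then ask it to detect errors against that adaptive reference rather than a single canonical answer. The `llm` callable, the prompt wording, and the function name are hypothetical placeholders, not the prompts used in the paper.

```python
from typing import Callable

# Hypothetical type: any function mapping a prompt string to a model reply.
LLM = Callable[[str], str]


def ask_before_detect(problem: str, student_solution: str, llm: LLM) -> tuple[str, str]:
    """Two-stage sketch: build an adaptive reference, then detect errors against it."""
    # Stage 1 ("ask"): solve the problem, following the student's method where valid,
    # so that an unconventional but correct approach still gets a matching reference.
    reference = llm(
        "Solve the following math word problem. Where possible, follow the same "
        "approach as the student's solution.\n"
        f"Problem: {problem}\nStudent solution: {student_solution}"
    )
    # Stage 2 ("detect"): judge the student's solution against that reference,
    # with a chain-of-thought style instruction.
    verdict = llm(
        "Compare the student's solution with the reference solution and state whether "
        "the student's solution contains an error. Think step by step.\n"
        f"Problem: {problem}\nReference: {reference}\nStudent solution: {student_solution}"
    )
    return reference, verdict
```

Any chat-completion client can be wrapped to match the `LLM` signature; the stub used for testing simply records prompts and returns canned replies.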

California Institute of Technology; University of Pennsylvania; University of Arizona; Amherst College; Macquarie University; Jet Propulsion Laboratory; Carleton College; Australian Astronomical Optics; U.S. National Science Foundation National Optical-Infrared Astronomy Research Laboratory; The University of California, Irvine; Penn State; Schmidt Sciences; Steward Observatory

This study from the University of Pennsylvania and Penn State University introduces Order-by-Order (OBO) and Joint Keplerian (JK) modeling paradigms to leverage multi-wavelength information in exoplanet radial velocity data. These methods shrank the uncertainties on exoplanet minimum masses by factors of 1.5 to 6.8, providing tighter constraints than the traditional variance-weighted mean approach.
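For context, the variance-weighted mean baseline collapses the RV measurements from different spectral orders at one epoch into a single value, weighting each by its inverse variance. A minimal sketch of that baseline follows; it does not implement OBO or JK modeling, and the per-order framing and function name are assumptions for illustration.

```python
import math


def variance_weighted_mean(values: list[float], sigmas: list[float]) -> tuple[float, float]:
    """Inverse-variance weighted mean and its formal uncertainty.

    mean  = sum(v_i / s_i^2) / sum(1 / s_i^2)
    sigma = 1 / sqrt(sum(1 / s_i^2))
    """
    if not values or len(values) != len(sigmas):
        raise ValueError("need equal-length, non-empty inputs")
    weights = [1.0 / s**2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total
    return mean, 1.0 / math.sqrt(total)
```

By construction this reduces each epoch to one number, so any wavelength dependence across orders is not carried into the subsequent Keplerian fit.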

29 Sep 2025

The powerful combination of Gaia with other large Milky Way survey data has ushered in a deeper understanding of the assembly history of our Galaxy, which is marked by the accretion of Gaia-Enceladus/Sausage (GES). As a step towards reconstructing this significant merger, we examine the existence and destruction of its stellar metallicity gradient. We investigate 8 GES-like progenitors from the Auriga simulations and find that all have negative metallicity gradients at infall, ranging from $-0.09$ to $-0.03$ dex/kpc against radius and from $-1.99$ to $-0.41$ dex/$10^{-5}$ km$^{2}$ s$^{-2}$ against stellar orbital energy. These gradients are blurred and become shallower when measured at $z=0$ in the Milky Way-like host. The percentage change in the radial metallicity gradient is consistently high (78–98%), while the percentage change in energy space varies much more (9–91%). We also find that the most massive progenitors show the smallest changes in their energy metallicity gradients. At the same present-day galactocentric radius, lower-metallicity stars originate from the outskirts of the GES progenitor. Similarly, at fixed metallicity, stars at larger galactocentric radii tend to originate from the GES outskirts. We find that the GES stellar mass, total mass, and infall time, as well as the present-day Milky Way total mass, are correlated with the percentage change in metallicity gradient, both in radius and in energy space. It is therefore vital to constrain these properties further to pin down the infall metallicity gradient of the GES progenitor and to understand the onset of such ordered chemistry at cosmic noon.
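A metallicity gradient of this kind is typically the least-squares slope of [Fe/H] against radius (or orbital energy), and its percentage change can be expressed relative to the infall value. The sketch below assumes the definition $100\,(1 - g_{z=0}/g_{\mathrm{infall}})$ for gradients of the same sign; the paper's exact fitting procedure and definition may differ.

```python
def ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y against x (e.g. [Fe/H] vs radius in kpc)."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def percent_change(grad_infall: float, grad_today: float) -> float:
    """Percentage by which a gradient has flattened since infall (assumed definition)."""
    return 100.0 * (1.0 - grad_today / grad_infall)
```

Under this definition, a gradient that shallows from $-0.05$ to $-0.005$ dex/kpc corresponds to a 90% change; these numbers are illustrative, not results from the paper.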

25 Aug 2025

University of Cambridge; University of California, Santa Barbara; New York University; Stanford University; University of Houston; University of Colorado Boulder; New Jersey Institute of Technology; University of Bath; University of Vermont; Carleton College; Middlebury College; Hamline University; Harvey Mudd College; Universidad Nacional Autonoma de Mexico; Denison University

Mathematical models of complex social systems can enrich social scientific theory, inform interventions, and shape policy. From voting behavior to economic inequality and urban development, such models influence decisions that affect millions of lives. Thus, it is especially important to formulate and present them with transparency, reproducibility, and humility. Modeling in social domains, however, is often uniquely challenging. Unlike in physics or engineering, researchers often lack controlled experiments or abundant, clean data. Observational data is sparse, noisy, partial, and missing in systematic ways. In such an environment, how can we build models that can inform science and decision-making in transparent and responsible ways?

TIBET introduces a comprehensive framework for identifying and evaluating biases in text-to-image generative models. It leverages Large Language Models to dynamically detect prompt-specific bias axes and generate counterfactuals, while Vision-Language Models provide concept-level explanations and quantitative bias scores, demonstrating improved alignment with human perception of bias compared to previous methods.

Northwestern Polytechnical University; Monash University; University of Utah; Shanghai Jiao Tong University; Nankai University; IBM Research; University of Missouri; University of Minnesota; Cleveland Clinic; The University of Sydney; Mayo Clinic; Brigham Young University; Carleton College; German Cancer Research Center; Rocky Vista College of Osteopathic Medicine


The Kidney and Kidney Tumor Segmentation Challenge 2021 (KiTS21) advanced automatic segmentation of kidneys, renal tumors, and cysts in CT scans. It provided a standardized benchmark with multi-institutional data and achieved near human-level accuracy for tumor segmentation, particularly for larger tumors.

This survey article has grown out of the GAIED (pronounced "guide") workshop organized by the authors at the NeurIPS 2023 conference. We organized the GAIED workshop as part of a community-building effort to bring together researchers, educators, and practitioners to explore the potential of generative AI for enhancing education. This article aims to provide an overview of the workshop activities and highlight several future research directions in the area of GAIED.

When the environment is partially observable (PO), a deep reinforcement learning (RL) agent must learn a suitable temporal representation of the entire history in addition to a control strategy. This problem is not novel, and both model-free and model-based algorithms have been proposed for it. However, inspired by recent success in model-free image-based RL, we noticed the absence of a model-free baseline for history-based RL that (1) uses the full history and (2) incorporates recent advances in off-policy continuous control. Therefore, we implement recurrent versions of DDPG, TD3, and SAC (RDPG, RTD3, and RSAC) in this work, evaluate them on short-term and long-term PO domains, and investigate key design choices. Our experiments show that RDPG and RTD3 can surprisingly fail on some domains and that RSAC is the most reliable, reaching near-optimal performance on nearly all domains. However, one task that requires systematic exploration still proved difficult, even for RSAC. These results show that model-free RL can learn good temporal representations using only reward signals; the primary difficulties seem to be computational cost and exploration. To facilitate future research, we have made our PyTorch implementation publicly available at this https URL

Recent advancements in artificial intelligence have led to the creation of highly capable large language models (LLMs) that can perform tasks in a human-like manner. However, LLMs exhibit only infant-level cognitive abilities in certain areas. One such area is the A-Not-B error, a phenomenon seen in infants in which they repeat a previously rewarded behavior despite clearly observing that the conditions have changed. This highlights their lack of inhibitory control, the ability to stop a habitual or impulsive response. In our work, we design a text-based multiple-choice QA scenario similar to the A-Not-B experimental settings to systematically test the inhibitory control abilities of LLMs. We found that state-of-the-art LLMs (such as Llama3-8b) perform consistently well with in-context learning (ICL) but make errors when the context changes trivially, with performance on reasoning tasks dropping by as much as 83.3%. This suggests that, in this regard, LLMs have inhibitory control abilities only on par with human infants, often failing to suppress the previously established response pattern during ICL.
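To make the setup concrete, here is one hypothetical way to build such a prompt: several in-context examples whose correct answer is always option A, followed by a test question whose correct answer is placed in option B. This mirrors the structure described above, but the question format and helper name are assumptions, not the authors' materials.

```python
def build_a_not_b_prompt(examples: list[tuple[str, str, str]],
                         test: tuple[str, str, str]) -> str:
    """Build a multiple-choice prompt in the spirit of the A-not-B setting.

    Each item is (question, option_a, option_b). In-context examples are shown
    with answer "A" (the previously rewarded response); the caller places the
    test item's correct option in B, so a model that repeats the habitual "A" fails.
    """
    blocks = []
    for question, opt_a, opt_b in examples:
        blocks.append(f"Q: {question}\n(A) {opt_a}\n(B) {opt_b}\nAnswer: A")
    question, opt_a, opt_b = test
    # The test item is left unanswered for the model to complete.
    blocks.append(f"Q: {question}\n(A) {opt_a}\n(B) {opt_b}\nAnswer:")
    return "\n\n".join(blocks)
```

Comparing accuracy on such prompts against prompts where the in-context answers are shuffled between A and B is one simple way to isolate the effect of the habitual pattern.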

Large language models (LLMs), while powerful, exhibit harmful social biases. Debiasing is often challenging due to computational costs, data constraints, and potential degradation of multi-task language capabilities. This work introduces a novel approach that uses ChatGPT to generate synthetic training data for debiasing LLMs. We propose two strategies: Targeted Prompting, which provides effective debiasing for known biases but requires the bias in question to be specified in advance; and General Prompting, which, while slightly less effective, offers debiasing across various categories. We perform resource-efficient LLM debiasing using adapter tuning and compare the effectiveness of our synthetic data to existing debiasing datasets. Our results reveal that: (1) ChatGPT can efficiently produce high-quality training data for debiasing other LLMs; (2) data produced via our approach surpasses existing datasets in debiasing performance while also preserving the internal knowledge of a pre-trained LLM; and (3) synthetic data generalizes across categories, effectively mitigating various biases, including intersectional ones. These findings underscore the potential of synthetic data in advancing the fairness of LLMs with minimal retraining cost.

The merger rate of black hole binaries inferred from the detections in the first Advanced LIGO science run implies that a stochastic background produced by a cosmological population of mergers will likely mask the primordial gravitational-wave background. Here we demonstrate that the next generation of ground-based detectors, such as the Einstein Telescope and Cosmic Explorer, will be able to observe binary black hole mergers throughout the universe with sufficient efficiency that the confusion background can potentially be subtracted to observe the primordial background at the level of $\Omega_{\mathrm{GW}} \simeq 10^{-13}$ after five years of observation.
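For reference, the quantity $\Omega_{\mathrm{GW}}$ quoted above is conventionally the gravitational-wave energy density per logarithmic frequency interval, normalized by the critical energy density of the Universe (the standard definition, stated here for context rather than taken from the paper):

```latex
\Omega_{\mathrm{GW}}(f) = \frac{1}{\rho_c}\,\frac{\mathrm{d}\rho_{\mathrm{GW}}}{\mathrm{d}\ln f},
\qquad
\rho_c = \frac{3 H_0^{2} c^{2}}{8\pi G},
```

where $\rho_{\mathrm{GW}}$ and $\rho_c$ are energy densities and $H_0$ is the Hubble constant.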

21 Dec 2020

Observations of neutron-star mergers based on distinct messengers, including gravitational waves and electromagnetic signals, can be used to study the behavior of matter denser than an atomic nucleus, and to measure the expansion rate of the Universe described by the Hubble constant. We perform a joint analysis of the gravitational-wave signal GW170817 with its electromagnetic counterparts AT2017gfo and GRB170817A, and the gravitational-wave signal GW190425, both originating from neutron-star mergers. We combine these with previous measurements of pulsars using X-ray and radio observations, and nuclear-theory computations using chiral effective field theory to constrain the neutron-star equation of state. We find that the radius of a 1.4 solar mass neutron star is $11.75^{+0.86}_{-0.81}$ km at 90% confidence and the Hubble constant is $66.2^{+4.4}_{-4.2}$ km Mpc$^{-1}$ s$^{-1}$ at $1\sigma$ uncertainty.
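As a reminder of how a standard siren constrains the Hubble constant: the gravitational-wave signal yields a luminosity distance and the electromagnetic counterpart identifies the host galaxy's recession velocity, so at low redshift $H_0 \approx v/d$. The sketch below shows only that low-redshift approximation, with illustrative inputs and hypothetical function names; the full joint analysis described above is considerably more involved and is not reproduced here.

```python
C_KM_S = 299_792.458  # speed of light, km/s


def velocity_from_redshift(z: float) -> float:
    """First-order (z << 1) relation v ~ c z, in km/s."""
    return C_KM_S * z


def hubble_constant(recession_velocity_km_s: float, distance_mpc: float) -> float:
    """Low-redshift Hubble law, H0 = v / d, in km s^-1 Mpc^-1."""
    if distance_mpc <= 0:
        raise ValueError("distance must be positive")
    return recession_velocity_km_s / distance_mpc
```

For example, a recession velocity of 3000 km/s at 45 Mpc gives about 66.7 km s$^{-1}$ Mpc$^{-1}$; these inputs are arbitrary and not measurements from the paper.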

In this paper we try to organize machine teaching as a coherent set of ideas. Each idea is presented as varying along a dimension. The collection of dimensions then forms the problem space of machine teaching, such that existing teaching problems can be characterized in this space. We hope this organization allows us to gain a deeper understanding of individual teaching problems, discover connections among them, and identify gaps in the field.

03 Jun 2016

We present relativistic analyses of 9257 measurements of times-of-arrival from the first binary pulsar, PSR B1913+16, acquired over the last thirty-five years. The determination of the 'Keplerian' orbital elements plus two relativistic terms completely characterizes the binary system, aside from an unknown rotation about the line of sight, leading to a determination of the masses of the pulsar and its companion: 1.438 ± 0.001 solar masses and 1.390 ± 0.001 solar masses, respectively. In addition, the complete system characterization enables tests of relativistic gravitation by comparing the measured and predicted sizes of various relativistic phenomena. We find that the ratio of the observed orbital period decrease due to gravitational-wave damping (corrected by a kinematic term) to the general relativistic prediction is 0.9983 ± 0.0016, thereby confirming the existence and strength of gravitational radiation as predicted by general relativity. For the first time in this system, we have also successfully measured the two parameters characterizing the Shapiro gravitational propagation delay, and find that their values are consistent with general relativistic predictions. We have also measured, for the first time in any system, the relativistic shape correction to the elliptical orbit, $\delta_\theta$, although its intrinsic value is obscured by currently unquantified pulsar emission beam aberration. We have also marginally measured the time derivative of the projected semimajor axis, which, when improved in combination with beam aberration modelling from geodetic precession observations, should ultimately constrain the pulsar's moment of inertia.
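The general-relativistic prediction for the orbital decay is the quadrupole-formula rate of Peters and Mathews. The sketch below evaluates it with the masses quoted above; the orbital period (about 0.323 days) and eccentricity (about 0.617) are published values for PSR B1913+16 that do not appear in this abstract, and the kinematic correction mentioned above is not modeled.

```python
import math

T_SUN = 4.925490947e-6  # G * M_sun / c^3, in seconds


def enhancement_factor(e: float) -> float:
    """Eccentricity enhancement f(e) = (1 + 73/24 e^2 + 37/96 e^4) / (1 - e^2)^(7/2)."""
    return (1 + 73 / 24 * e**2 + 37 / 96 * e**4) / (1 - e**2) ** 3.5


def pbdot_gr(pb_days: float, e: float, mp_msun: float, mc_msun: float) -> float:
    """Dimensionless orbital-period derivative due to gravitational-wave emission.

    Pbdot = -(192 pi / 5) (2 pi T_sun / Pb)^(5/3) f(e) m_p m_c / M^(1/3),
    with masses in solar units and Pb in seconds.
    """
    pb_s = pb_days * 86400.0
    total = mp_msun + mc_msun
    return (-192 * math.pi / 5
            * (2 * math.pi * T_SUN / pb_s) ** (5 / 3)
            * enhancement_factor(e)
            * mp_msun * mc_msun / total ** (1 / 3))


# Published orbital elements (not in the abstract) plus the masses quoted above.
predicted = pbdot_gr(0.322997448918, 0.6171340, 1.438, 1.390)
```

The ratio reported in the abstract compares the kinematically corrected observed $\dot P_b$ with a prediction of this form.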

The development of multicellular organisms entails a deep connection between time-dependent biochemical processes taking place at the subcellular level, and the resulting macroscopic phenotypes that arise in populations of up to trillions of cells. A statistical mechanics of developmental processes would help to understand how microscopic genotypes map onto macroscopic phenotypes, a general goal across biology. Here we follow this approach, hypothesizing that development should be understood as a thermodynamic transition between non-equilibrium states. We test this hypothesis in the context of the fruit fly, Drosophila melanogaster, a model organism used widely in genetics and developmental biology for over a century. Applying a variety of information-theoretic measures to public transcriptomics datasets of whole fly embryos during development, we show that the global temporal dynamics of gene expression can be understood as a process that probabilistically guides embryonic dynamics across macroscopic phenotypic stages. In particular, we demonstrate signatures of irreversibility in the information complexity of transcriptomic dynamics, as measured mainly by the permutation entropy of indexed ensembles (PI entropy). Our results show that the dynamics of PI entropy correlate strongly with developmental stages. Overall, this is a test case in applying information complexity analysis to relate the statistical mechanics of biomarkers to macroscopic developmental dynamics.
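PI entropy is described above as a permutation entropy computed over indexed ensembles. As a point of reference, the sketch below computes the standard Bandt-Pompe permutation entropy of a single time series (ordinal patterns of length `order`, normalized to [0, 1]); it is not the indexed-ensemble variant used in the paper, and the parameter names are illustrative.

```python
import math
from collections import Counter


def permutation_entropy(series: list[float], order: int = 3, delay: int = 1,
                        normalize: bool = True) -> float:
    """Bandt-Pompe permutation entropy of a 1-D series.

    Each window of `order` samples (spaced by `delay`) is mapped to the ordinal
    pattern that sorts it; the Shannon entropy of the pattern frequencies is
    returned, optionally divided by log(order!) so the result lies in [0, 1].
    """
    span = (order - 1) * delay
    if order < 2 or len(series) <= span:
        raise ValueError("series too short for the chosen order and delay")
    patterns: Counter = Counter()
    for start in range(len(series) - span):
        window = [series[start + i * delay] for i in range(order)]
        # Ordinal pattern: window indices listed in ascending order of value.
        patterns[tuple(sorted(range(order), key=window.__getitem__))] += 1
    total = sum(patterns.values())
    h = -sum((n / total) * math.log(n / total) for n in patterns.values())
    return h / math.log(math.factorial(order)) if normalize else h
```

A strictly monotonic series produces a single ordinal pattern and therefore zero entropy, while irregular series approach 1.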

Recent advancements in the collection and analysis of sequential educational data have brought time series analysis to a pivotal position in educational research, highlighting its essential role in facilitating data-driven decision-making. However, there is a lack of comprehensive summaries that consolidate these advancements. To the best of our knowledge, this paper is the first to provide a comprehensive review of time series analysis techniques specifically within the educational context. We begin by exploring the landscape of educational data analytics, categorizing various data sources and types relevant to education. We then review four prominent time series methods (forecasting, classification, clustering, and anomaly detection), illustrating their specific application points in educational settings. Subsequently, we present a range of educational scenarios and applications, focusing on how these methods are employed to address diverse educational tasks, which highlights the practical integration of multiple time series methods to solve complex educational problems. Finally, we conclude with a discussion on future directions, including personalized learning analytics, multimodal data fusion, and the role of large language models (LLMs) in educational time series. The contributions of this paper include a detailed taxonomy of educational data, a synthesis of time series techniques with specific educational applications, and a forward-looking perspective on emerging trends and future research opportunities in educational analysis. The related papers and resources are available and regularly updated at the project page.
