Doshisha University

We propose a novel tensor network representation for two-dimensional

Yang-Mills theories with arbitrary compact gauge groups. In this method, tensor

indices are directly given by group elements with no direct use of the

character expansion. We apply the tensor renormalization group method to this

tensor network for SU(2) and SU(3), and find that the free energy density

and the energy density are accurately evaluated. We also show that the singular

value decomposition of a tensor has a group theoretic structure and can be

associated with the character expansion.

30 Aug 2024

We demonstrate that quantum error correction is realized by the

renormalization group in scalar field theories. We construct q-level states

by using coherent states in the IR region. By acting on them the inverse of the

unitary operator U that describes the renormalization group flow of the

ground state, we encode them into states in the UV region. We find the

situations in which the Knill-Laflamme condition is satisfied for operators

that create coherent states. We verify this to the first order in the

perturbation theory. This result suggests a general relationship between the

renormalization group and quantum error correction and should give insights

into understanding the role played by them in the gauge/gravity correspondence.

04 Jun 2024

M2D-CLAP unifies self-supervised audio representation learning with audio-language alignment, creating a general-purpose model that excels in both transfer learning and zero-shot classification tasks. This approach achieves top average performance in linear evaluation across diverse audio tasks and sets a new state-of-the-art for zero-shot music genre classification on GTZAN.

Transformers are deep architectures that define ``in-context maps'' which enable predicting new tokens based on a given set of tokens (such as a prompt in NLP applications or a set of patches for a vision transformer). In previous work, we studied the ability of these architectures to handle an arbitrarily large number of context tokens. To mathematically, uniformly analyze their expressivity, we considered the case that the mappings are conditioned on a context represented by a probability distribution which becomes discrete for a finite number of tokens. Modeling neural networks as maps on probability measures has multiple applications, such as studying Wasserstein regularity, proving generalization bounds and doing a mean-field limit analysis of the dynamics of interacting particles as they go through the network. In this work, we study the question what kind of maps between measures are transformers. We fully characterize the properties of maps between measures that enable these to be represented in terms of in-context maps via a push forward. On the one hand, these include transformers; on the other hand, transformers universally approximate representations with any continuous in-context map. These properties are preserving the cardinality of support and that the regular part of their Fréchet derivative is uniformly continuous. Moreover, we show that the solution map of the Vlasov equation, which is of nonlocal transport type, for interacting particle systems in the mean-field regime for the Cauchy problem satisfies the conditions on the one hand and, hence, can be approximated by a transformer; on the other hand, we prove that the measure-theoretic self-attention has the properties that ensure that the infinite depth, mean-field measure-theoretic transformer can be identified with a Vlasov flow.

Some datasets with the described content and order of occurrence of sounds

have been released for conversion between environmental sound and text.

However, there are very few texts that include information on the impressions

humans feel, such as "sharp" and "gorgeous," when they hear environmental

sounds. In this study, we constructed a dataset with impression captions for

environmental sounds that describe the impressions humans have when hearing

these sounds. We used ChatGPT to generate impression captions and selected the

most appropriate captions for sound by humans. Our dataset consists of 3,600

impression captions for environmental sounds. To evaluate the appropriateness

of impression captions for environmental sounds, we conducted subjective and

objective evaluations. From our evaluation results, we indicate that

appropriate impression captions for environmental sounds can be generated.

06 Apr 2025

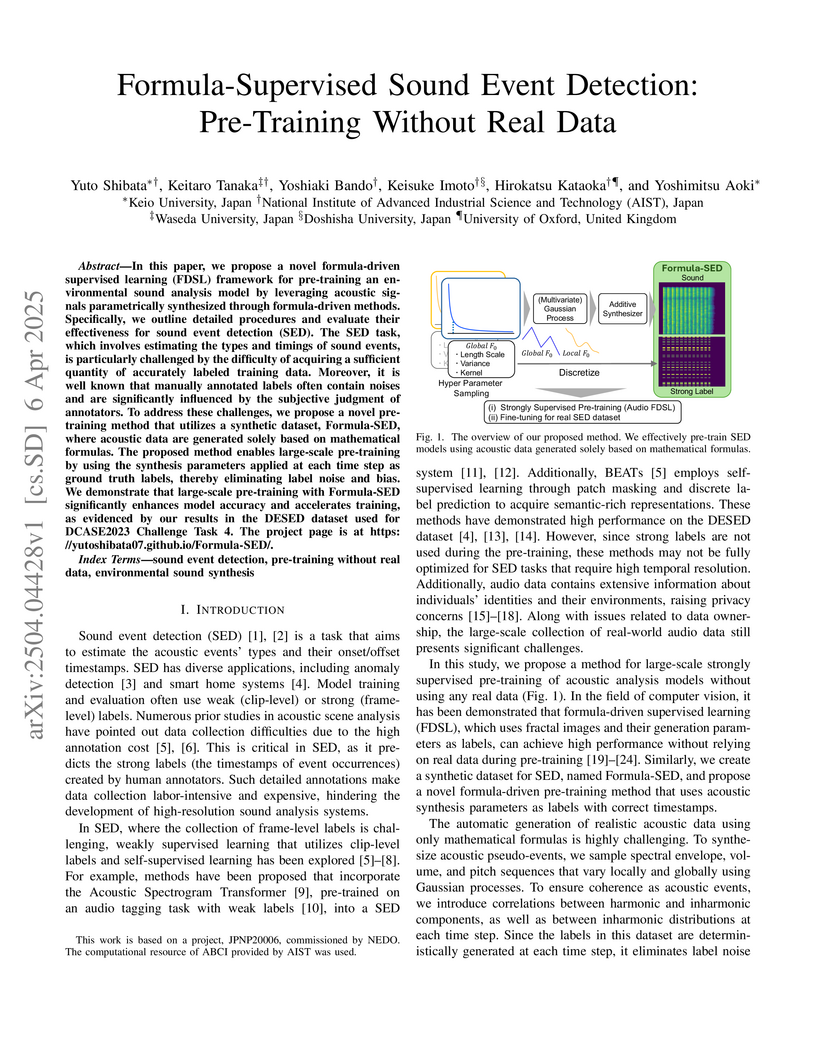

Researchers from AIST, Japan, and the University of Oxford introduce a paradigm for Sound Event Detection (SED) pre-training, called Formula-Supervised Sound Event Detection (FDSL), which utilizes purely mathematically generated synthetic audio data and perfectly precise labels. This approach improves accuracy and accelerates training convergence on real-world SED tasks while eliminating reliance on real-world data for initial model training.

25 Oct 2025

National Astronomical Observatory of Japan Kyoto University

Kyoto University NASA Goddard Space Flight Center

NASA Goddard Space Flight Center Seoul National UniversityDoshisha UniversityUniversity of Colorado BoulderUniversity of HyogoNorthumbria UniversityThe Catholic University of AmericaNational Solar ObservatoryUniversity of OsakaNational Astronomical Observatory of Japan, NINS

Seoul National UniversityDoshisha UniversityUniversity of Colorado BoulderUniversity of HyogoNorthumbria UniversityThe Catholic University of AmericaNational Solar ObservatoryUniversity of OsakaNational Astronomical Observatory of Japan, NINS

Kyoto University

Kyoto University NASA Goddard Space Flight Center

NASA Goddard Space Flight Center Seoul National UniversityDoshisha UniversityUniversity of Colorado BoulderUniversity of HyogoNorthumbria UniversityThe Catholic University of AmericaNational Solar ObservatoryUniversity of OsakaNational Astronomical Observatory of Japan, NINS

Seoul National UniversityDoshisha UniversityUniversity of Colorado BoulderUniversity of HyogoNorthumbria UniversityThe Catholic University of AmericaNational Solar ObservatoryUniversity of OsakaNational Astronomical Observatory of Japan, NINSCoronal mass ejections (CMEs) on the early Sun may have profoundly influenced the planetary atmospheres of early Solar System planets. Flaring young solar analogues serve as excellent proxies for probing the plasma environment of the young Sun, yet their CMEs remain poorly understood. Here we report the detection of multi-wavelength Doppler shifts in Far-Ultraviolet (FUV) and optical lines during a flare on the young solar analog EK Draconis. During and before a Carrington-class (∼1032 erg) flare, warm FUV lines (∼105 K) exhibit blueshifted emission at 300-550 km s−1, indicative of a warm eruption. 10 minutes later, the Hα line shows slow (70 km s−1), long-lasting (≳2 hrs) blueshifted absorptions, suggesting a cool (∼104 K) filament eruption. This provides evidence of multi-temperature and multi-component nature of a stellar CME. If Carrington-class flares/CMEs occurred frequently on the young Sun, they may have cumulatively impacted the early Earth's magnetosphere and atmosphere.

We introduce a mild generative variant of the classical neural operator model, which leverages Kolmogorov--Arnold networks to solve infinite families of second-order backward stochastic differential equations (2BSDEs) on regular bounded Euclidean domains with random terminal time. Our first main result shows that the solution operator associated with a broad range of 2BSDE families is approximable by appropriate neural operator models. We then identify a structured subclass of (infinite) families of 2BSDEs whose neural operator approximation requires only a polynomial number of parameters in the reciprocal approximation rate, as opposed to the exponential requirement in general worst-case neural operator guarantees.

23 Dec 2024

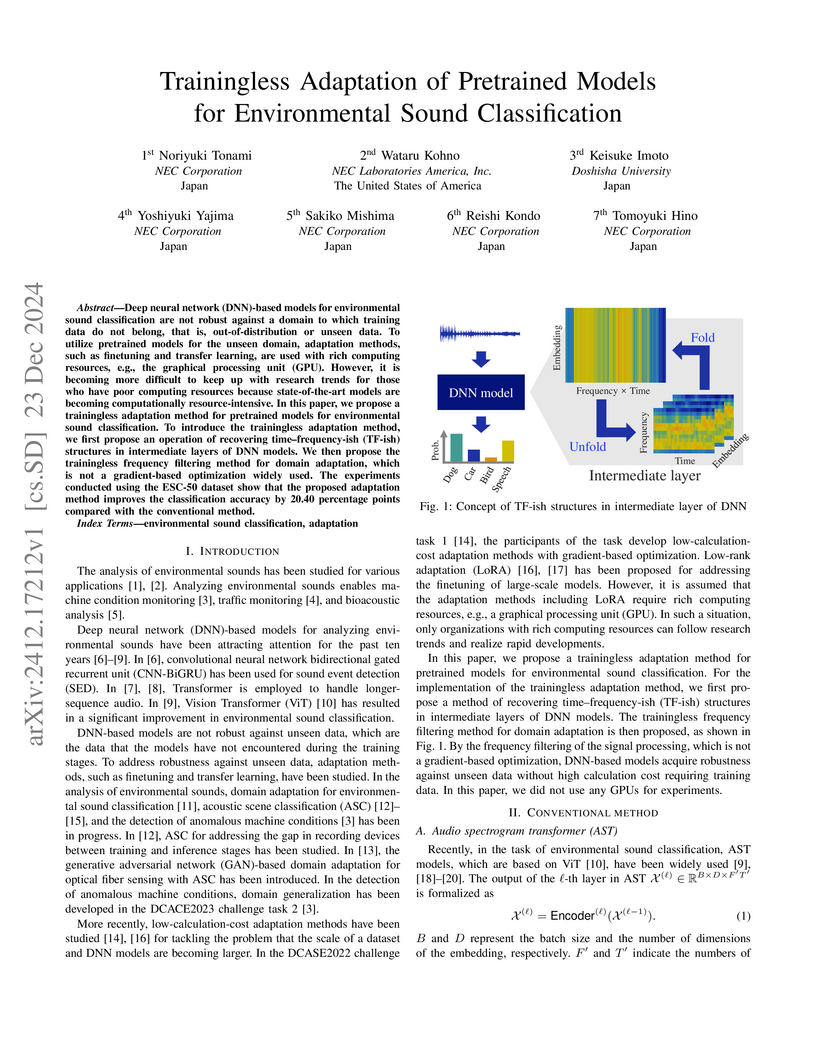

Researchers from NEC Corporation and Doshisha University developed a trainingless method for adapting pre-trained environmental sound classification models, improving their robustness to challenging unseen domains like Distributed Fiber-Optic Sensor (DFOS) data by directly manipulating time-frequency features in intermediate layers without requiring GPU resources or backpropagation.

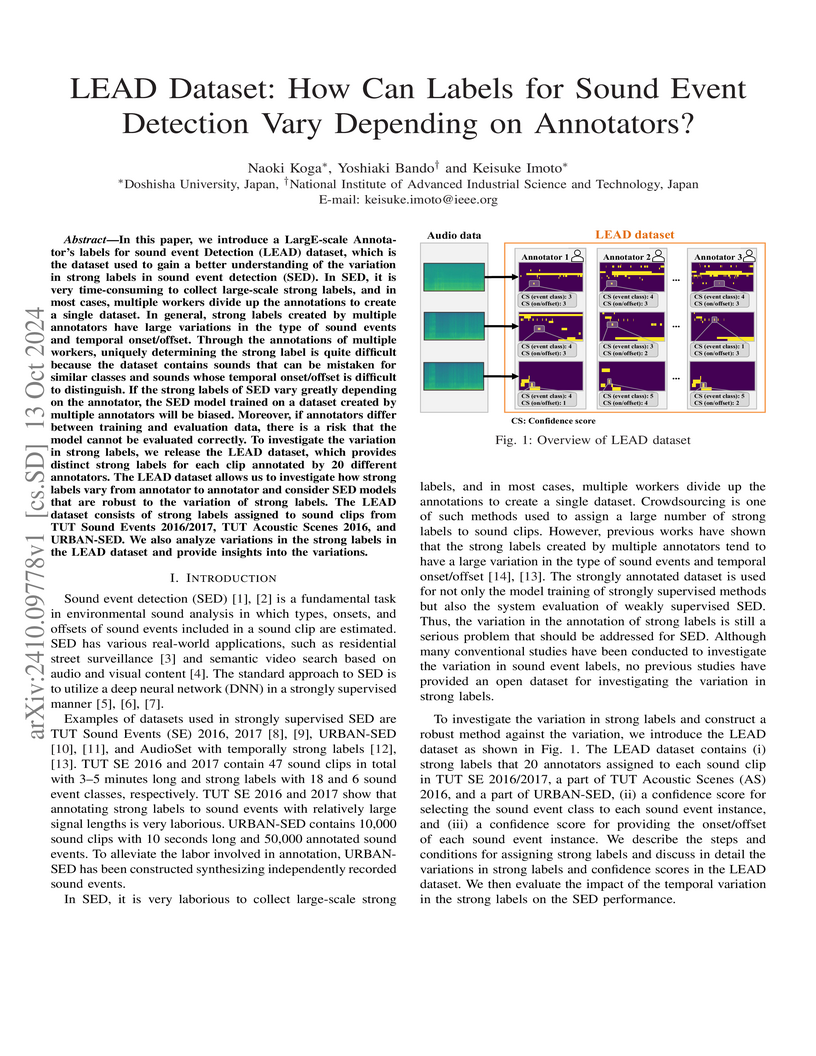

In this paper, we introduce a LargE-scale Annotator's labels for sound event Detection (LEAD) dataset, which is the dataset used to gain a better understanding of the variation in strong labels in sound event detection (SED). In SED, it is very time-consuming to collect large-scale strong labels, and in most cases, multiple workers divide up the annotations to create a single dataset. In general, strong labels created by multiple annotators have large variations in the type of sound events and temporal onset/offset. Through the annotations of multiple workers, uniquely determining the strong label is quite difficult because the dataset contains sounds that can be mistaken for similar classes and sounds whose temporal onset/offset is difficult to distinguish. If the strong labels of SED vary greatly depending on the annotator, the SED model trained on a dataset created by multiple annotators will be biased. Moreover, if annotators differ between training and evaluation data, there is a risk that the model cannot be evaluated correctly. To investigate the variation in strong labels, we release the LEAD dataset, which provides distinct strong labels for each clip annotated by 20 different annotators. The LEAD dataset allows us to investigate how strong labels vary from annotator to annotator and consider SED models that are robust to the variation of strong labels. The LEAD dataset consists of strong labels assigned to sound clips from TUT Sound Events 2016/2017, TUT Acoustic Scenes 2016, and URBAN-SED. We also analyze variations in the strong labels in the LEAD dataset and provide insights into the variations.

In this paper, we propose a framework for environmental sound synthesis from

onomatopoeic words. As one way of expressing an environmental sound, we can use

an onomatopoeic word, which is a character sequence for phonetically imitating

a sound. An onomatopoeic word is effective for describing diverse sound

features. Therefore, using onomatopoeic words for environmental sound synthesis

will enable us to generate diverse environmental sounds. To generate diverse

sounds, we propose a method based on a sequence-to-sequence framework for

synthesizing environmental sounds from onomatopoeic words. We also propose a

method of environmental sound synthesis using onomatopoeic words and sound

event labels. The use of sound event labels in addition to onomatopoeic words

enables us to capture each sound event's feature depending on the input sound

event label. Our subjective experiments show that our proposed methods achieve

higher diversity and naturalness than conventional methods using sound event

labels.

02 Nov 2023

We present the task description of the Detection and Classification of

Acoustic Scenes and Events (DCASE) 2023 Challenge Task 2: ``First-shot

unsupervised anomalous sound detection (ASD) for machine condition

monitoring''. The main goal is to enable rapid deployment of ASD systems for

new kinds of machines without the need for hyperparameter tuning. In the past

ASD tasks, developed methods tuned hyperparameters for each machine type, as

the development and evaluation datasets had the same machine types. However,

collecting normal and anomalous data as the development dataset can be

infeasible in practice. In 2023 Task 2, we focus on solving the first-shot

problem, which is the challenge of training a model on a completely novel

machine type. Specifically, (i) each machine type has only one section (a

subset of machine type) and (ii) machine types in the development and

evaluation datasets are completely different. Analysis of 86 submissions from

23 teams revealed that the keys to outperform baselines were: 1) sampling

techniques for dealing with class imbalances across different domains and

attributes, 2) generation of synthetic samples for robust detection, and 3) use

of multiple large pre-trained models to extract meaningful embeddings for the

anomaly detector.

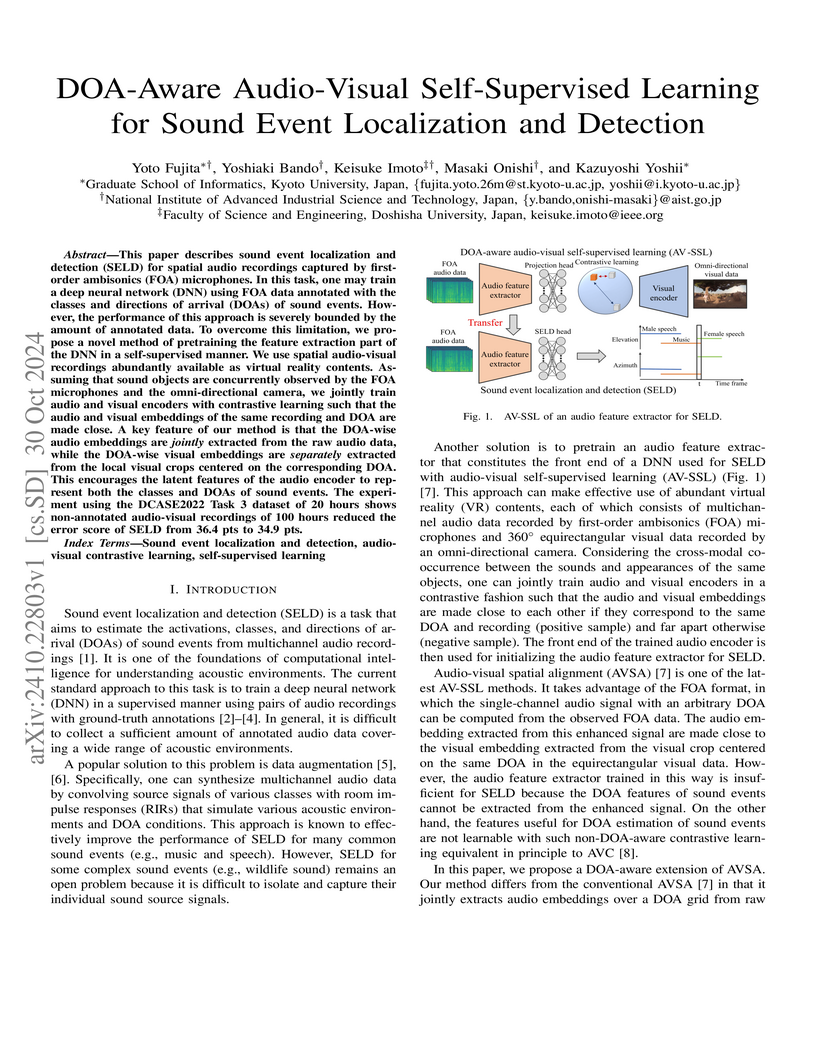

This paper describes sound event localization and detection (SELD) for spatial audio recordings captured by firstorder ambisonics (FOA) microphones. In this task, one may train a deep neural network (DNN) using FOA data annotated with the classes and directions of arrival (DOAs) of sound events. However, the performance of this approach is severely bounded by the amount of annotated data. To overcome this limitation, we propose a novel method of pretraining the feature extraction part of the DNN in a self-supervised manner. We use spatial audio-visual recordings abundantly available as virtual reality contents. Assuming that sound objects are concurrently observed by the FOA microphones and the omni-directional camera, we jointly train audio and visual encoders with contrastive learning such that the audio and visual embeddings of the same recording and DOA are made close. A key feature of our method is that the DOA-wise audio embeddings are jointly extracted from the raw audio data, while the DOA-wise visual embeddings are separately extracted from the local visual crops centered on the corresponding DOA. This encourages the latent features of the audio encoder to represent both the classes and DOAs of sound events. The experiment using the DCASE2022 Task 3 dataset of 20 hours shows non-annotated audio-visual recordings of 100 hours reduced the error score of SELD from 36.4 pts to 34.9 pts.

11 Jun 2024

This DCASE 2024 Challenge Task 2 focuses on first-shot unsupervised anomalous sound detection for machine condition monitoring, aiming to detect anomalies in novel machine types using extremely limited normal training data. The challenge incorporates domain shifts and concealed machine attributes, evaluating systems based on AUC and partial AUC at low false-positive rates for practical industrial relevance.

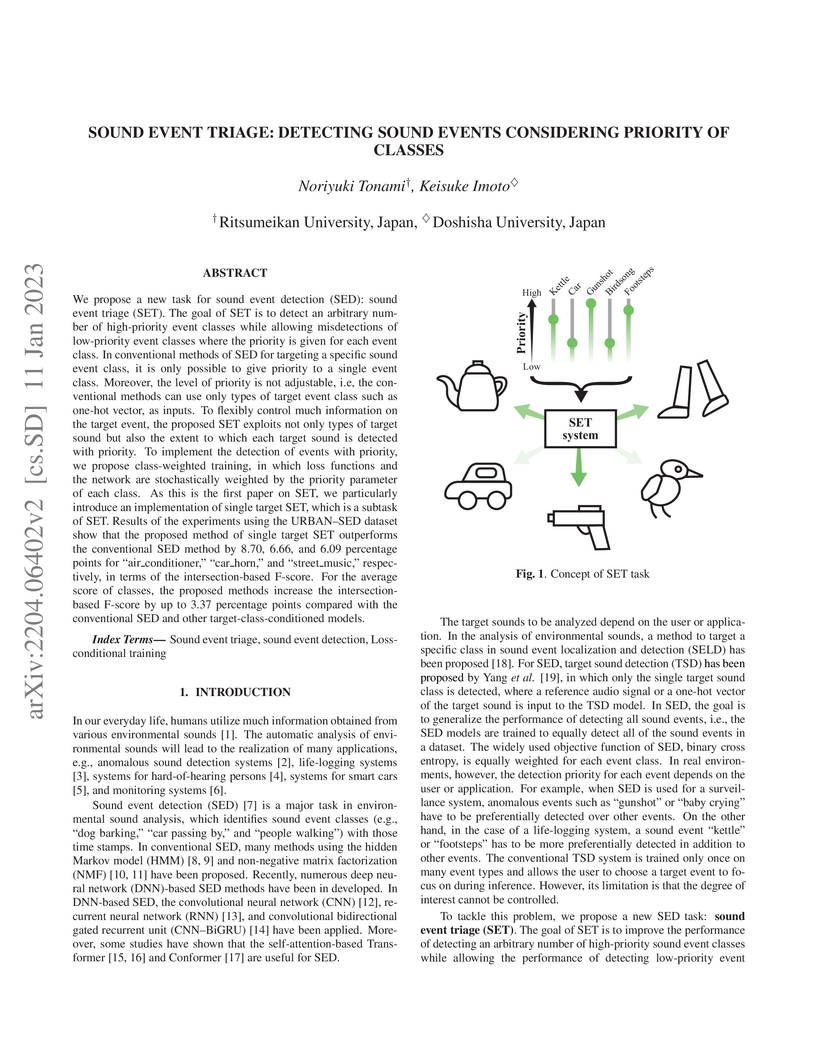

We propose a new task for sound event detection (SED): sound event triage

(SET). The goal of SET is to detect an arbitrary number of high-priority event

classes while allowing misdetections of low-priority event classes where the

priority is given for each event class. In conventional methods of SED for

targeting a specific sound event class, it is only possible to give priority to

a single event class. Moreover, the level of priority is not adjustable, i.e,

the conventional methods can use only types of target event class such as

one-hot vector, as inputs. To flexibly control much information on the target

event, the proposed SET exploits not only types of target sound but also the

extent to which each target sound is detected with priority. To implement the

detection of events with priority, we propose class-weighted training, in which

loss functions and the network are stochastically weighted by the priority

parameter of each class. As this is the first paper on SET, we particularly

introduce an implementation of single target SET, which is a subtask of SET.

Results of the experiments using the URBAN-SED dataset show that the proposed

method of single target SET outperforms the conventional SED method by 8.70,

6.66, and 6.09 percentage points for ``air_conditioner,'' ``car_horn,'' and

``street_music,'' respectively, in terms of the intersection-based F-score. For

the average score of classes, the proposed methods increase the

intersection-based F-score by up to 3.37 percentage points compared with the

conventional SED and other target-class-conditioned models.

It is challenging to improve automatic speech recognition (ASR) performance

in noisy conditions with single-channel speech enhancement (SE). In this paper,

we investigate the causes of ASR performance degradation by decomposing the SE

errors using orthogonal projection-based decomposition (OPD). OPD decomposes

the SE errors into noise and artifact components. The artifact component is

defined as the SE error signal that cannot be represented as a linear

combination of speech and noise sources. We propose manually scaling the error

components to analyze their impact on ASR. We experimentally identify the

artifact component as the main cause of performance degradation, and we find

that mitigating the artifact can greatly improve ASR performance. Furthermore,

we demonstrate that the simple observation adding (OA) technique (i.e., adding

a scaled version of the observed signal to the enhanced speech) can

monotonically increase the signal-to-artifact ratio under a mild condition.

Accordingly, we experimentally confirm that OA improves ASR performance for

both simulated and real recordings. The findings of this paper provide a better

understanding of the influence of SE errors on ASR and open the door to future

research on novel approaches for designing effective single-channel SE

front-ends for ASR.

Heterogeneous treatment effect (HTE) estimation is critical in medical

research. It provides insights into how treatment effects vary among

individuals, which can provide statistical evidence for precision medicine.

While most existing methods focus on binary treatment situations, real-world

applications often involve multiple interventions. However, current HTE

estimation methods are primarily designed for binary comparisons and often rely

on black-box models, which limit their applicability and interpretability in

multi-arm settings. To address these challenges, we propose an interpretable

machine learning framework for HTE estimation in multi-arm trials. Our method

employs a rule-based ensemble approach consisting of rule generation, rule

ensemble, and HTE estimation, ensuring both predictive accuracy and

interpretability. Through extensive simulation studies and real data

applications, the performance of our method was evaluated against

state-of-the-art multi-arm HTE estimation approaches. The results indicate that

our approach achieved lower bias and higher estimation accuracy compared with

those of existing methods. Furthermore, the interpretability of our framework

allows clearer insights into how covariates influence treatment effects,

facilitating clinical decision making. By bridging the gap between accuracy and

interpretability, our study contributes a valuable tool for multi-arm HTE

estimation, supporting precision medicine.

26 Apr 2025

Parameter estimation can result in substantial mean squared error (MSE), even

when consistent estimators are used and the sample size is large. This paper

addresses the longstanding statistical challenge of analyzing the bias and MSE

of ridge-type estimators in nonlinear models, including duration, Poisson, and

multinomial choice models, where theoretical results have been scarce.

Employing a finite-sample approximation technique developed in the econometrics

literature, this study derives new theoretical results showing that the

generalized ridge maximum likelihood estimator (MLE) achieves lower

finite-sample MSE than the conventional MLE across a broad class of nonlinear

models. Importantly, the analysis extends beyond parameter estimation to

model-based prediction, demonstrating that the generalized ridge estimator

improves predictive accuracy relative to the generic MLE for sufficiently small

penalty terms, regardless of the validity of the incorporated hypotheses.

Extensive simulation studies and an empirical application involving the

estimation of marginal mean and quantile treatment effects further support the

superior performance and practical applicability of the proposed method.

28 Sep 2025

We consider fractional stochastic heat equations with space-time Lévy white noise of the form ∂t∂X(t,x)=LαX(t,x)+σ(X(t,x))Λ˙(t,x). Here, the principal part Lα=−(−Δ)α/2 is the d-dimensional fractional Laplacian with α∈(0,2), the noise term Λ˙(t,x) denotes the space-time Lévy white noise, and the function σ:R↦R is Lipschitz continuous. Under suitable assumptions, we obtain bounds for the Lyapunov exponents and the growth indices of exponential type on pth moments of the mild solutions, which are connected with the weakly intermittency properties and the characterizations of the high peaks propagate away from the origin. Unlike the case of the Gaussian noise, the proofs heavily depend on the heavy tail property of heat kernel estimates for the fractional Laplacian. The results complement these in \cite{CD15-1,CK19} for fractional stochastic heat equations driven by space-time white noise and stochastic heat equations with Lévy noise, respectively.

We trace the evolution of research on extreme solar and solar-terrestrial events from the 1859 Carrington event to the rapid development of the last twenty years. Our focus is on the largest observed/inferred/theoretical cases of sunspot groups, flares on the Sun and Sun-like stars, coronal mass ejections, solar proton events, and geomagnetic storms. The reviewed studies are based on modern observations, historical or long-term data including the auroral and cosmogenic radionuclide record, and Kepler observations of Sun-like stars. We compile a table of 100- and 1000-year events based on occurrence frequency distributions for the space weather phenomena listed above. Questions considered include the Sun-like nature of superflare stars and the existence of impactful but unpredictable solar "black swans" and extreme "dragon king" solar phenomena that can involve different physics from that operating in events which are merely large.

There are no more papers matching your filters at the moment.