Facebook AI

Facebook AI researchers introduced DETR (Detection Transformer), an end-to-end object detection model that uses a Transformer encoder-decoder architecture to directly predict a set of objects. This approach eliminates the need for hand-designed components like anchor boxes and Non-Maximum Suppression, achieving competitive performance on the COCO dataset and demonstrating strong capabilities in detecting large objects.

RoBERTa, developed by Facebook AI and the University of Washington, demonstrates that BERT's performance can be substantially improved through careful optimization of its pretraining process, rather than architectural changes. The approach achieved state-of-the-art results on the GLUE benchmark and other NLP tasks by refining aspects like dynamic masking, batch size, and data scale.

A new training methodology and distillation strategy enable Vision Transformers (ViT) to achieve competitive performance on ImageNet-1k, reaching 85.2% top-1 accuracy without requiring massive proprietary datasets. This work introduces a "distillation token" and optimized training to make pure transformer models practical and accessible for image classification.

Dense Passage Retrieval (DPR) introduces a method to learn dense vector representations for questions and passages using a dual-encoder architecture and a straightforward fine-tuning approach. This allows it to significantly outperform traditional sparse retrieval methods like BM25, achieving 78.4% top-20 retrieval accuracy on Natural Questions, an 19.3% absolute improvement, and establishing new state-of-the-art exact match accuracies on several open-domain QA benchmarks.

TimeSformer introduces a convolution-free video understanding architecture that leverages self-attention mechanisms to achieve state-of-the-art action recognition performance on multiple benchmarks with significantly improved training and inference efficiency.

Facebook AI introduced the DeepFake Detection Challenge (DFDC) Dataset, an extensive, ethically-sourced collection of manipulated videos, alongside a benchmark competition. This resource provides compelling evidence that models trained on synthetic Deepfakes can generalize to real-world Deepfake videos, fostering advancements in detection capabilities.

We present VideoCLIP, a contrastive approach to pre-train a unified model for zero-shot video and text understanding, without using any labels on downstream tasks. VideoCLIP trains a transformer for video and text by contrasting temporally overlapping positive video-text pairs with hard negatives from nearest neighbor retrieval. Our experiments on a diverse series of downstream tasks, including sequence-level text-video retrieval, VideoQA, token-level action localization, and action segmentation reveal state-of-the-art performance, surpassing prior work, and in some cases even outperforming supervised approaches. Code is made available at this https URL.

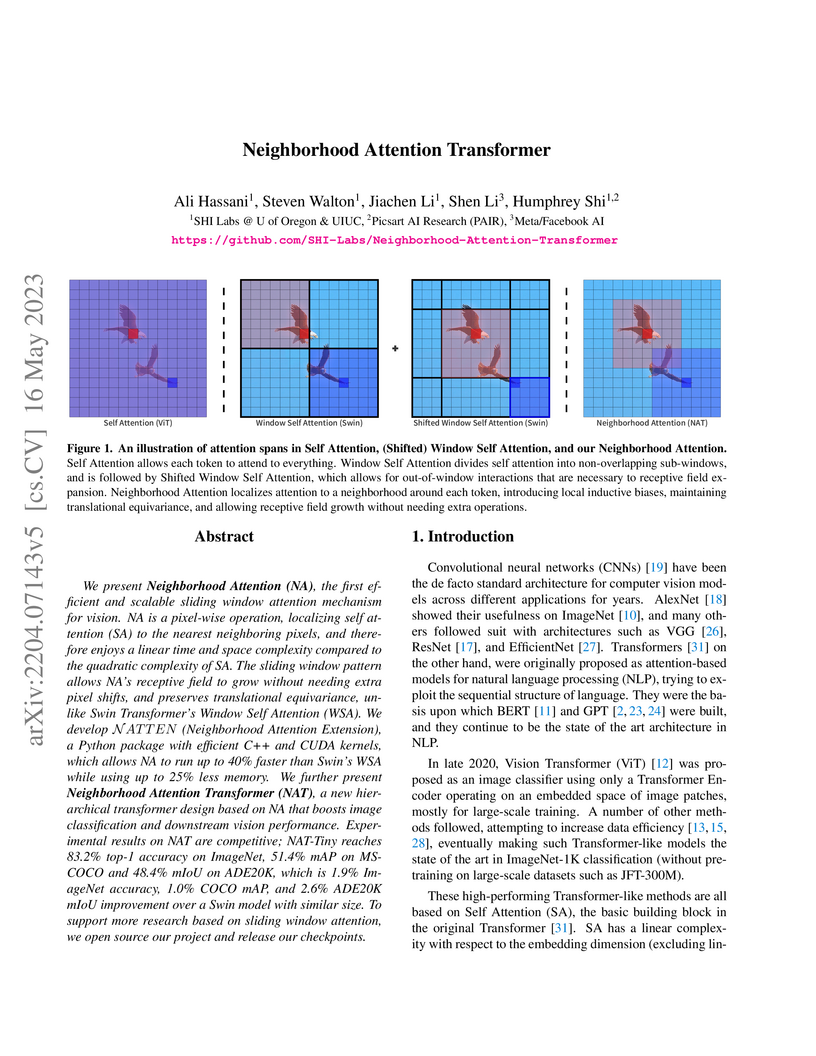

This work introduces the Neighborhood Attention Transformer (NAT), a new hierarchical vision architecture built on Neighborhood Attention (NA), an efficiently implemented pixel-wise sliding window attention mechanism. The research demonstrates that NAT achieves competitive or superior performance across image classification, object detection, and semantic segmentation tasks compared to leading Vision Transformers and CNNs, often with improved computational efficiency.

Transformers have been recently adapted for large scale image classification, achieving high scores shaking up the long supremacy of convolutional neural networks. However the optimization of image transformers has been little studied so far. In this work, we build and optimize deeper transformer networks for image classification. In particular, we investigate the interplay of architecture and optimization of such dedicated transformers. We make two transformers architecture changes that significantly improve the accuracy of deep transformers. This leads us to produce models whose performance does not saturate early with more depth, for instance we obtain 86.5% top-1 accuracy on Imagenet when training with no external data, we thus attain the current SOTA with less FLOPs and parameters. Moreover, our best model establishes the new state of the art on Imagenet with Reassessed labels and Imagenet-V2 / match frequency, in the setting with no additional training data. We share our code and models.

Researchers from Facebook AI and NUS introduce a decoupled training approach for long-tailed recognition, separating representation learning from classifier training. This method achieves state-of-the-art results on ImageNet-LT, Places-LT, and iNaturalist 2018, improving overall accuracy by up to 5% and few-shot accuracy by up to 11% compared to joint training baselines.

Researchers from TIMM, Meta AI, and Sorbonne Université demonstrate that optimizing training procedures for the vanilla ResNet-50 architecture can achieve a top-1 accuracy of 80.4% on ImageNet-val, surpassing previous baselines for this model without architectural changes. Their work provides three training recipes, showing the substantial performance gains possible from advanced training techniques alone.

Deep metric learning papers from the past four years have consistently

claimed great advances in accuracy, often more than doubling the performance of

decade-old methods. In this paper, we take a closer look at the field to see if

this is actually true. We find flaws in the experimental methodology of

numerous metric learning papers, and show that the actual improvements over

time have been marginal at best.

This work introduces a paradigm shift in semantic segmentation by completely replacing the conventional convolution-based encoder with a pure Transformer encoder, formulating the task as sequence-to-sequence prediction. The proposed SETR models achieve new state-of-the-art performance on ADE20K and Pascal Context datasets, demonstrating the viability and effectiveness of a CNN-free architecture for dense prediction tasks.

Facebook AI introduces The Hateful Memes Challenge, a 10,000-meme dataset designed to foster multimodal understanding for hate speech detection. The challenge set, built with 'benign confounders' to prevent unimodal shortcuts, reveals a substantial performance gap between state-of-the-art AI models (highest AUROC 75.44%) and human annotators (84.7% accuracy), indicating a need for more sophisticated multimodal reasoning.

We present ResMLP, an architecture built entirely upon multi-layer perceptrons for image classification. It is a simple residual network that alternates (i) a linear layer in which image patches interact, independently and identically across channels, and (ii) a two-layer feed-forward network in which channels interact independently per patch. When trained with a modern training strategy using heavy data-augmentation and optionally distillation, it attains surprisingly good accuracy/complexity trade-offs on ImageNet. We also train ResMLP models in a self-supervised setup, to further remove priors from employing a labelled dataset. Finally, by adapting our model to machine translation we achieve surprisingly good results.

We share pre-trained models and our code based on the Timm library.

Facebook AI researchers detail their experiences implementing and optimizing the PyTorch Distributed Data Parallel (DDP) module, which handles over 60% of production GPU hours at Facebook. The work provides practical insights into DDP's design, crucial optimizations like gradient bucketing and computation-communication overlap, and real-world considerations, demonstrating near-linear scalability and improved efficiency for training large deep learning models.

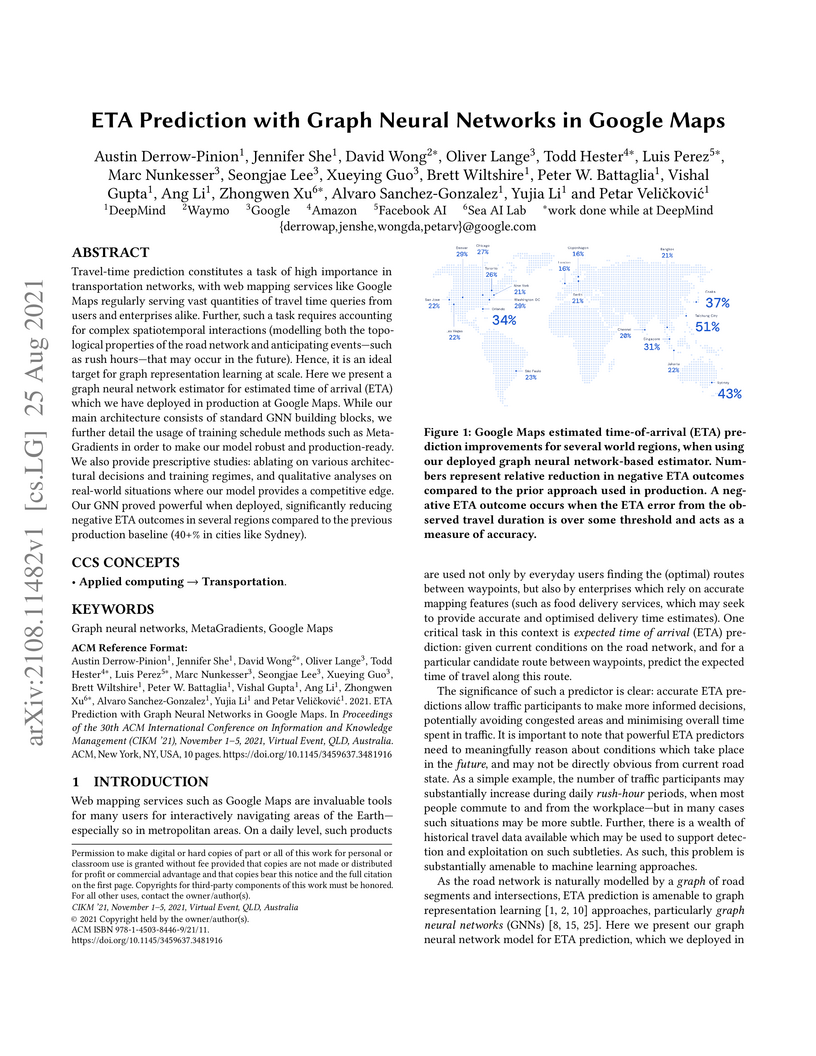

Travel-time prediction constitutes a task of high importance in transportation networks, with web mapping services like Google Maps regularly serving vast quantities of travel time queries from users and enterprises alike. Further, such a task requires accounting for complex spatiotemporal interactions (modelling both the topological properties of the road network and anticipating events -- such as rush hours -- that may occur in the future). Hence, it is an ideal target for graph representation learning at scale. Here we present a graph neural network estimator for estimated time of arrival (ETA) which we have deployed in production at Google Maps. While our main architecture consists of standard GNN building blocks, we further detail the usage of training schedule methods such as MetaGradients in order to make our model robust and production-ready. We also provide prescriptive studies: ablating on various architectural decisions and training regimes, and qualitative analyses on real-world situations where our model provides a competitive edge. Our GNN proved powerful when deployed, significantly reducing negative ETA outcomes in several regions compared to the previous production baseline (40+% in cities like Sydney).

We propose a novel neural representation for videos (NeRV) which encodes videos in neural networks. Unlike conventional representations that treat videos as frame sequences, we represent videos as neural networks taking frame index as input. Given a frame index, NeRV outputs the corresponding RGB image. Video encoding in NeRV is simply fitting a neural network to video frames and decoding process is a simple feedforward operation. As an image-wise implicit representation, NeRV output the whole image and shows great efficiency compared to pixel-wise implicit representation, improving the encoding speed by 25x to 70x, the decoding speed by 38x to 132x, while achieving better video quality. With such a representation, we can treat videos as neural networks, simplifying several video-related tasks. For example, conventional video compression methods are restricted by a long and complex pipeline, specifically designed for the task. In contrast, with NeRV, we can use any neural network compression method as a proxy for video compression, and achieve comparable performance to traditional frame-based video compression approaches (H.264, HEVC \etc). Besides compression, we demonstrate the generalization of NeRV for video denoising. The source code and pre-trained model can be found at this https URL.

State-of-the-art natural language understanding classification models follow two-stages: pre-training a large language model on an auxiliary task, and then fine-tuning the model on a task-specific labeled dataset using cross-entropy loss. However, the cross-entropy loss has several shortcomings that can lead to sub-optimal generalization and instability. Driven by the intuition that good generalization requires capturing the similarity between examples in one class and contrasting them with examples in other classes, we propose a supervised contrastive learning (SCL) objective for the fine-tuning stage. Combined with cross-entropy, our proposed SCL loss obtains significant improvements over a strong RoBERTa-Large baseline on multiple datasets of the GLUE benchmark in few-shot learning settings, without requiring specialized architecture, data augmentations, memory banks, or additional unsupervised data. Our proposed fine-tuning objective leads to models that are more robust to different levels of noise in the fine-tuning training data, and can generalize better to related tasks with limited labeled data.

Following their success in natural language processing, transformers have

recently shown much promise for computer vision. The self-attention operation

underlying transformers yields global interactions between all tokens ,i.e.

words or image patches, and enables flexible modelling of image data beyond the

local interactions of convolutions. This flexibility, however, comes with a

quadratic complexity in time and memory, hindering application to long

sequences and high-resolution images. We propose a "transposed" version of

self-attention that operates across feature channels rather than tokens, where

the interactions are based on the cross-covariance matrix between keys and

queries. The resulting cross-covariance attention (XCA) has linear complexity

in the number of tokens, and allows efficient processing of high-resolution

images. Our cross-covariance image transformer (XCiT) is built upon XCA. It

combines the accuracy of conventional transformers with the scalability of

convolutional architectures. We validate the effectiveness and generality of

XCiT by reporting excellent results on multiple vision benchmarks, including

image classification and self-supervised feature learning on ImageNet-1k,

object detection and instance segmentation on COCO, and semantic segmentation

on ADE20k.

There are no more papers matching your filters at the moment.