Ford Motor Company

Machine Learning (ML) has the potential to revolutionise the field of

automotive aerodynamics, enabling split-second flow predictions early in the

design process. However, the lack of open-source training data for realistic

road cars, using high-fidelity CFD methods, represents a barrier to their

development. To address this, a high-fidelity open-source (CC-BY-SA) public

dataset for automotive aerodynamics has been generated, based on 500

parametrically morphed variants of the widely-used DrivAer notchback generic

vehicle. Mesh generation and scale-resolving CFD was executed using consistent

and validated automatic workflows representative of the industrial

state-of-the-art. Geometries and rich aerodynamic data are published in

open-source formats. To our knowledge, this is the first large, public-domain

dataset for complex automotive configurations generated using high-fidelity

CFD.

Multi-Agent Reinforcement Learning (MARL) has shown promising results across

several domains. Despite this promise, MARL policies often lack robustness and

are therefore sensitive to small changes in their environment. This presents a

serious concern for the real world deployment of MARL algorithms, where the

testing environment may slightly differ from the training environment. In this

work we show that we can gain robustness by controlling a policy's Lipschitz

constant, and under mild conditions, establish the existence of a Lipschitz and

close-to-optimal policy. Based on these insights, we propose a new robust MARL

framework, ERNIE, that promotes the Lipschitz continuity of the policies with

respect to the state observations and actions by adversarial regularization.

The ERNIE framework provides robustness against noisy observations, changing

transition dynamics, and malicious actions of agents. However, ERNIE's

adversarial regularization may introduce some training instability. To reduce

this instability, we reformulate adversarial regularization as a Stackelberg

game. We demonstrate the effectiveness of the proposed framework with extensive

experiments in traffic light control and particle environments. In addition, we

extend ERNIE to mean-field MARL with a formulation based on distributionally

robust optimization that outperforms its non-robust counterpart and is of

independent interest. Our code is available at

this https URL

Automated task planning algorithms have been developed to help robots complete complex tasks that require multiple actions. Most of those algorithms have been developed for "closed worlds" assuming complete world knowledge is provided. However, the real world is generally open, and the robots frequently encounter unforeseen situations that can potentially break the planner's completeness. This paper introduces a novel algorithm (COWP) for open-world task planning and situation handling that dynamically augments the robot's action knowledge with task-oriented common sense. In particular, common sense is extracted from Large Language Models based on the current task at hand and robot skills. For systematic evaluations, we collected a dataset that includes 561 execution-time situations in a dining domain, where each situation corresponds to a state instance of a robot being potentially unable to complete a task using a solution that normally works. Experimental results show that our approach significantly outperforms competitive baselines from the literature in the success rate of service tasks. Additionally, we have demonstrated COWP using a mobile manipulator. The project website is available at: this https URL, where a more detailed version can also be found. This version has been accepted for publication in Autonomous Robots.

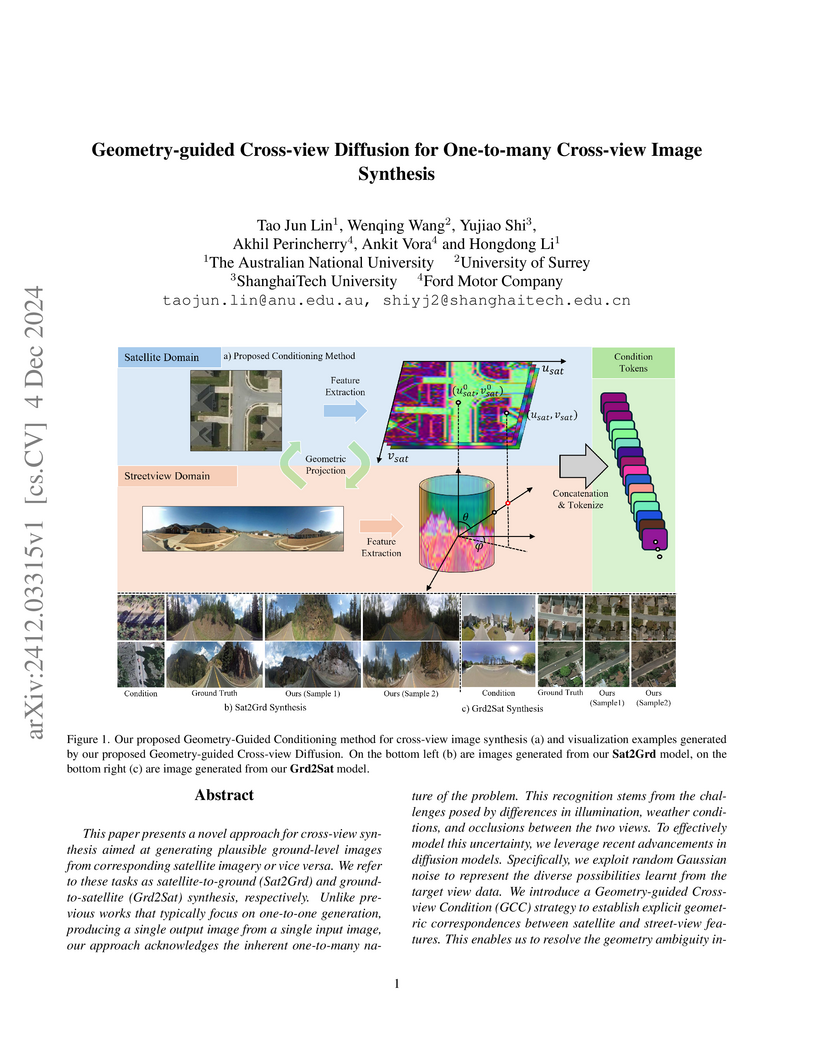

Researchers from The Australian National University and collaborators introduce Geometry-guided Cross-view Diffusion, a framework that generates diverse and geometrically consistent target-view images (e.g., ground to satellite or vice-versa) from a single input view. This approach leverages diffusion models with explicit 3D geometric guidance to capture the inherent one-to-many relationship in cross-view image synthesis, outperforming prior methods in fidelity and diversity.

Physics-informed neural networks (PINNs) are an increasingly powerful way to solve partial differential equations, generate digital twins, and create neural surrogates of physical models. In this manuscript we detail the inner workings of this http URL and show how a formulation structured around numerical quadrature gives rise to new loss functions which allow for adaptivity towards bounded error tolerances. We describe the various ways one can use the tool, detailing mathematical techniques like using extended loss functions for parameter estimation and operator discovery, to help potential users adopt these PINN-based techniques into their workflow. We showcase how NeuralPDE uses a purely symbolic formulation so that all of the underlying training code is generated from an abstract formulation, and show how to make use of GPUs and solve systems of PDEs. Afterwards we give a detailed performance analysis which showcases the trade-off between training techniques on a large set of PDEs. We end by focusing on a complex multiphysics example, the Doyle-Fuller-Newman (DFN) Model, and showcase how this PDE can be formulated and solved with NeuralPDE. Together this manuscript is meant to be a detailed and approachable technical report to help potential users of the technique quickly get a sense of the real-world performance trade-offs and use cases of the PINN techniques.

Researchers from Northwestern University and Ford Motor Company developed Deep DIC, an end-to-end deep learning framework for measuring displacement and strain fields directly from image pairs. This approach demonstrates superior robustness and maintains high spatial resolution in scenarios with large deformations and degraded speckle patterns, while achieving significantly faster computation times compared to commercial Digital Image Correlation software.

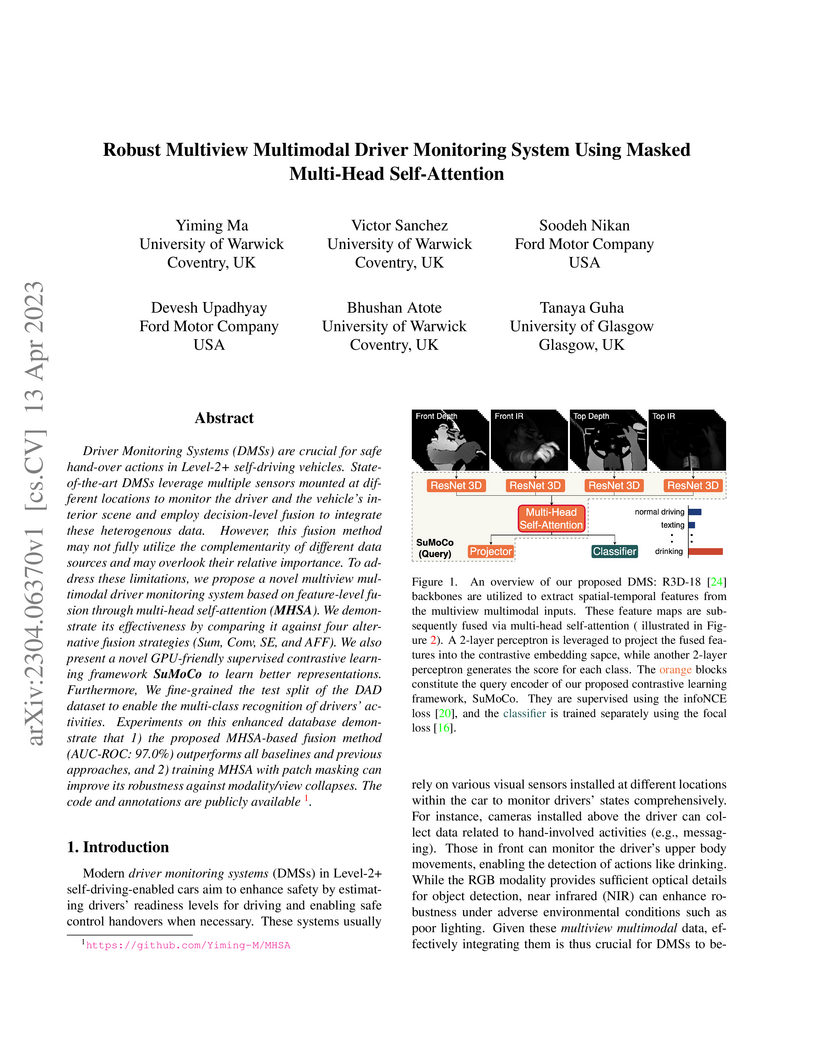

Driver Monitoring Systems (DMSs) are crucial for safe hand-over actions in

Level-2+ self-driving vehicles. State-of-the-art DMSs leverage multiple sensors

mounted at different locations to monitor the driver and the vehicle's interior

scene and employ decision-level fusion to integrate these heterogenous data.

However, this fusion method may not fully utilize the complementarity of

different data sources and may overlook their relative importance. To address

these limitations, we propose a novel multiview multimodal driver monitoring

system based on feature-level fusion through multi-head self-attention (MHSA).

We demonstrate its effectiveness by comparing it against four alternative

fusion strategies (Sum, Conv, SE, and AFF). We also present a novel

GPU-friendly supervised contrastive learning framework SuMoCo to learn better

representations. Furthermore, We fine-grained the test split of the DAD dataset

to enable the multi-class recognition of drivers' activities. Experiments on

this enhanced database demonstrate that 1) the proposed MHSA-based fusion

method (AUC-ROC: 97.0\%) outperforms all baselines and previous approaches, and

2) training MHSA with patch masking can improve its robustness against

modality/view collapses. The code and annotations are publicly available.

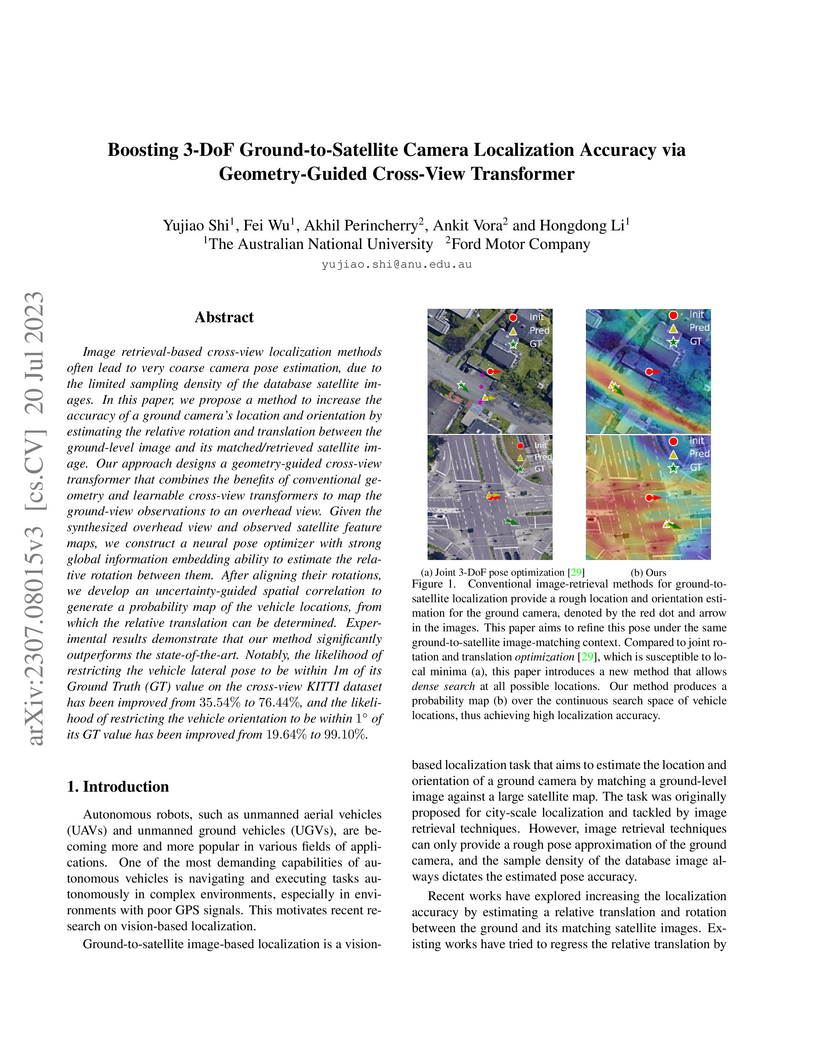

Researchers from The Australian National University and Ford Motor Company developed a geometry-guided cross-view transformer that refines 3-DoF ground-to-satellite camera localization, achieving 99.10% orientation accuracy within 1° and nearly doubling lateral localization accuracy on KITTI Test1 compared to previous methods.

Ensuring safety and meeting temporal specifications are critical challenges for long-term robotic tasks. Signal temporal logic (STL) has been widely used to systematically and rigorously specify these requirements. However, traditional methods of finding the control policy under those STL requirements are computationally complex and not scalable to high-dimensional or systems with complex nonlinear dynamics. Reinforcement learning (RL) methods can learn the policy to satisfy the STL specifications via hand-crafted or STL-inspired rewards, but might encounter unexpected behaviors due to ambiguity and sparsity in the reward. In this paper, we propose a method to directly learn a neural network controller to satisfy the requirements specified in STL. Our controller learns to roll out trajectories to maximize the STL robustness score in training. In testing, similar to Model Predictive Control (MPC), the learned controller predicts a trajectory within a planning horizon to ensure the satisfaction of the STL requirement in deployment. A backup policy is designed to ensure safety when our controller fails. Our approach can adapt to various initial conditions and environmental parameters. We conduct experiments on six tasks, where our method with the backup policy outperforms the classical methods (MPC, STL-solver), model-free and model-based RL methods in STL satisfaction rate, especially on tasks with complex STL specifications while being 10X-100X faster than the classical methods.

A weakly-supervised learning approach for ground-to-satellite camera localization refines 3-DoF pose without requiring precise ground truth labels. The method achieves superior cross-area generalization and competitive performance, surpassing fully supervised state-of-the-art techniques in robustness across diverse geographical regions.

In this paper, we propose an MPC-based precision cooling strategy (PCS) for energy efficient thermal management of automotive air conditioning (A/C) system. The proposed PCS is able to provide precise tracking of the time-varying cooling power trajectory, which is assumed to match the passenger comfort requirements. In addition, by leveraging the emerging connected and automated vehicles (CAVs) technology, vehicle speed preview can be incorporated in our A/C thermal management strategy for further energy efficiency improvement. This proposed A/C thermal management strategy is developed and evaluated based on a physics-based A/C system model (ACSim) from Ford Motor Company for the vehicles with electrified powertrains. In a comparison with Ford benchmark case over SC03 cycle, for tracking the same cooling power trajectory, the proposed PCS provides 4.9% energy saving at the cost of a slight increase in the cabin temperature (less than 1oC). It is also demonstrated that by coordinating with future vehicle speed and shifting the A/C power load, the A/C energy consumption can be further reduced.

This paper describes a physics-based end-to-end software simulation for image

systems. We use the software to explore sensors designed to enhance performance

in high dynamic range (HDR) environments, such as driving through daytime

tunnels and under nighttime conditions. We synthesize physically realistic HDR

spectral radiance images and use them as the input to digital twins that model

the optics and sensors of different systems. This paper makes three main

contributions: (a) We create a labeled (instance segmentation and depth),

synthetic radiance dataset of HDR driving scenes. (b) We describe the

development and validation of the end-to-end simulation framework. (c) We

present a comparative analysis of two single-shot sensors designed for HDR. We

open-source both the dataset and the software.

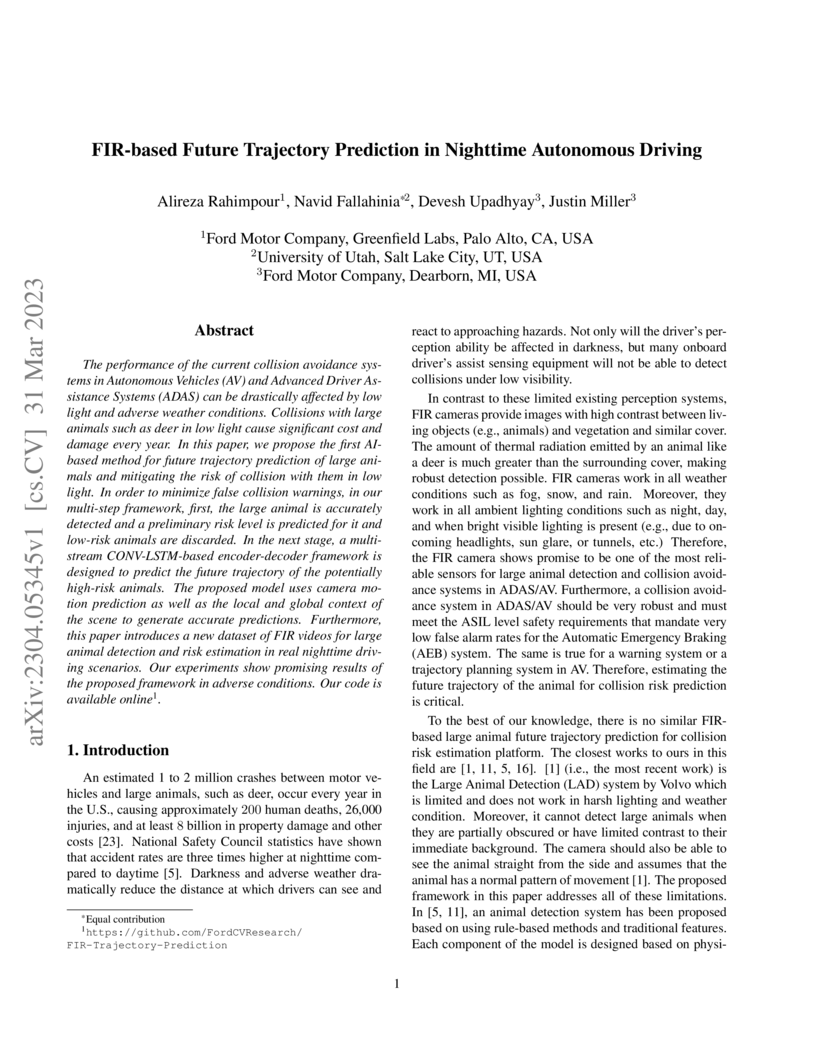

The performance of the current collision avoidance systems in Autonomous Vehicles (AV) and Advanced Driver Assistance Systems (ADAS) can be drastically affected by low light and adverse weather conditions. Collisions with large animals such as deer in low light cause significant cost and damage every year. In this paper, we propose the first AI-based method for future trajectory prediction of large animals and mitigating the risk of collision with them in low light. In order to minimize false collision warnings, in our multi-step framework, first, the large animal is accurately detected and a preliminary risk level is predicted for it and low-risk animals are discarded. In the next stage, a multi-stream CONV-LSTM-based encoder-decoder framework is designed to predict the future trajectory of the potentially high-risk animals. The proposed model uses camera motion prediction as well as the local and global context of the scene to generate accurate predictions. Furthermore, this paper introduces a new dataset of FIR videos for large animal detection and risk estimation in real nighttime driving scenarios. Our experiments show promising results of the proposed framework in adverse conditions. Our code is available online.

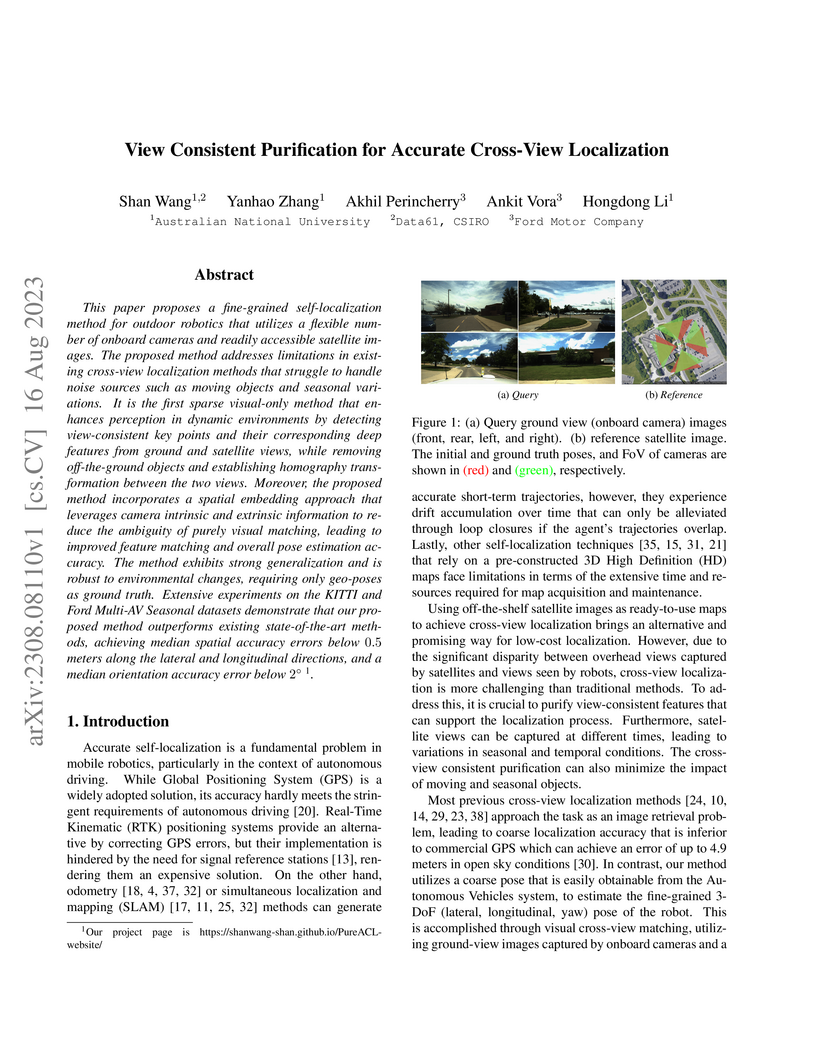

PureACL introduces a sparse visual-only cross-view localization method that achieves sub-meter translation accuracy by purifying view-consistent, on-ground features from ground-level camera images and satellite maps. On the KITTI-CVL dataset, it reduces mean lateral and longitudinal errors by 86% and 94% respectively compared to prior visual methods, while also demonstrating robust generalization to environmental changes and unseen routes.

Depth estimation is a critical topic for robotics and vision-related tasks. In monocular depth estimation, in comparison with supervised learning that requires expensive ground truth labeling, self-supervised methods possess great potential due to no labeling cost. However, self-supervised learning still has a large gap with supervised learning in 3D reconstruction and depth estimation performance. Meanwhile, scaling is also a major issue for monocular unsupervised depth estimation, which commonly still needs ground truth scale from GPS, LiDAR, or existing maps to correct. In the era of deep learning, existing methods primarily rely on exploring image relationships to train unsupervised neural networks, while the physical properties of the camera itself such as intrinsics and extrinsics are often overlooked. These physical properties are not just mathematical parameters; they are embodiments of the camera's interaction with the physical world. By embedding these physical properties into the deep learning model, we can calculate depth priors for ground regions and regions connected to the ground based on physical principles, providing free supervision signals without the need for additional sensors. This approach is not only easy to implement but also enhances the effects of all unsupervised methods by embedding the camera's physical properties into the model, thereby achieving an embodied understanding of the real world.

Collision-free planning is essential for bipedal robots operating within

unstructured environments. This paper presents a real-time Model Predictive

Control (MPC) framework that addresses both body and foot avoidance for dynamic

bipedal robots. Our contribution is two-fold: we introduce (1) a novel

formulation for adjusting step timing to facilitate faster body avoidance and

(2) a novel 3D foot-avoidance formulation that implicitly selects swing

trajectories and footholds that either steps over or navigate around obstacles

with awareness of Center of Mass (COM) dynamics. We achieve body avoidance by

applying a half-space relaxation of the safe region but introduce a switching

heuristic based on tracking error to detect a need to change foot-timing

schedules. To enable foot avoidance and viable landing footholds on all sides

of foot-level obstacles, we decompose the non-convex safe region on the ground

into several convex polygons and use Mixed-Integer Quadratic Programming to

determine the optimal candidate. We found that introducing a soft

minimum-travel-distance constraint is effective in preventing the MPC from

being trapped in local minima that can stall half-space relaxation methods

behind obstacles. We demonstrated the proposed algorithms on multibody

simulations on the bipedal robot platforms, Cassie and Digit, as well as

hardware experiments on Digit.

28 Sep 2021

This work presents a novel target-free extrinsic calibration algorithm for a

3D Lidar and an IMU pair using an Extended Kalman Filter (EKF) which exploits

the \textit{motion based calibration constraint} for state update. The steps

include, data collection by motion excitation of the Lidar Inertial Sensor

suite along all degrees of freedom, determination of the inter sensor rotation

by using rotational component of the aforementioned \textit{motion based

calibration constraint} in a least squares optimization framework, and finally,

the determination of inter sensor translation using the \textit{motion based

calibration constraint} for state update in an Extended Kalman Filter (EKF)

framework. We experimentally validate our method using data collected in our

lab and open-source (this https URL) our

contribution for the robotics research community.

Vehicle-to-everything (V2X) communication enables vehicles, roadside vulnerable users, and infrastructure facilities to communicate in an ad-hoc fashion. Cellular V2X (C-V2X), which was introduced in the 3rd generation partnership project (3GPP) release 14 standard, has recently received significant attention due to its perceived ability to address the scalability and reliability requirements of vehicular safety applications. In this paper, we provide a comprehensive study of the resource allocation of the C-V2X multiple access mechanism for high-density vehicular networks, as it can strongly impact the key performance indicators such as latency and packet delivery rate. Phenomena that can affect the communication performance are investigated and a detailed analysis of the cases that can cause possible performance degradation or system limitations, is provided. The results indicate that a unified system configuration may be necessary for all vehicles, as it is mandated for IEEE 802.11p, in order to obtain the optimum performance. In the end, we show the inter-dependence of different parameters on the resource allocation procedure with the aid of our high fidelity simulator.

The increasing volume of commercially available conversational agents (CAs)

on the market has resulted in users being burdened with learning and adopting

multiple agents to accomplish their tasks. Though prior work has explored

supporting a multitude of domains within the design of a single agent, the

interaction experience suffers due to the large action space of desired

capabilities. To address these problems, we introduce a new task BBAI:

Black-Box Agent Integration, focusing on combining the capabilities of multiple

black-box CAs at scale. We explore two techniques: question agent pairing and

question response pairing aimed at resolving this task. Leveraging these

techniques, we design One For All (OFA), a scalable system that provides a

unified interface to interact with multiple CAs. Additionally, we introduce

MARS: Multi-Agent Response Selection, a new encoder model for question response

pairing that jointly encodes user question and agent response pairs. We

demonstrate that OFA is able to automatically and accurately integrate an

ensemble of commercially available CAs spanning disparate domains.

Specifically, using the MARS encoder we achieve the highest accuracy on our

BBAI task, outperforming strong baselines.

The COWP framework, developed by researchers from SUNY Binghamton and Ford Motor Company, integrates classical task planning with Large Language Models to enable robots to dynamically handle unforeseen situations in open-world environments. It achieved a 67.8% task completion rate and 36.9% situation handling rate in dining-focused tasks, outperforming state-of-the-art baselines in simulations and a real-world mobile manipulator demonstration.

There are no more papers matching your filters at the moment.