Fraunhofer Institute for Industrial Mathematics ITWM

27 Sep 2019

Current training methods for deep neural networks boil down to very high-dimensional, non-convex optimization problems, which are usually solved by a wide range of stochastic gradient descent methods. While these approaches tend to work in practice, there are still many gaps in the theoretical understanding of key aspects such as convergence and generalization guarantees, which are induced by the properties of the optimization surface (loss landscape). In order to gain deeper insights, a number of recent publications have proposed methods to visualize and analyze optimization surfaces. However, the computational cost of these methods is very high, which makes them hardly usable on larger networks.

In this paper, we present the GradVis Toolbox, an open-source library for efficient and scalable visualization and analysis of deep neural network loss landscapes in TensorFlow and PyTorch. By introducing more efficient mathematical formulations and a novel parallelization scheme, GradVis makes it possible to plot 2D and 3D projections of optimization surfaces and trajectories, as well as high-resolution second-order gradient information, for large networks.
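
A common way to produce such 2D projections (assumed here, since the abstract does not spell out GradVis's own formulation) is to evaluate the loss on a grid θ* + α·d1 + β·d2 spanned by two directions d1, d2 around a parameter vector θ*. The minimal sketch below uses a hypothetical three-parameter `toy_loss` in place of a network and random unit-length directions; all function names are illustrative and are not part of the GradVis API.

```python
import math
import random


def toy_loss(theta):
    """Hypothetical stand-in for a network loss: anisotropic quadratic plus a ripple."""
    x, y, z = theta
    return x**2 + 3 * y**2 + 0.5 * z**2 + 0.1 * math.sin(5 * x)


def unit(v):
    """Scale a direction vector to unit Euclidean length."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def loss_surface(loss, theta_star, d1, d2, alphas, betas):
    """Evaluate loss(theta* + a*d1 + b*d2) on the grid alphas x betas."""
    return [
        [loss([t + a * u + b * v for t, u, v in zip(theta_star, d1, d2)]) for b in betas]
        for a in alphas
    ]


rng = random.Random(0)
theta_star = [0.0, 0.0, 0.0]  # e.g. the trained parameters
d1 = unit([rng.gauss(0, 1) for _ in theta_star])
d2 = unit([rng.gauss(0, 1) for _ in theta_star])
steps = [i / 10 for i in range(-10, 11)]  # 21 x 21 grid on [-1, 1]^2
surface = loss_surface(toy_loss, theta_star, d1, d2, steps, steps)
```

Every grid point is an independent loss evaluation, so the grid can be split across workers; how GradVis actually distributes this work is not described in the abstract.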

Multivariate partial fractioning is a powerful tool for simplifying rational function coefficients in scattering amplitude computations. Since current research problems lead to large sets of complicated rational functions, performance of the partial fractioning as well as size of the obtained expressions are a prime concern. We develop a large scale parallel framework for multivariate partial fractioning, which implements and combines an improved version of Leinartas' algorithm and the MultivariateApart algorithm. Our approach relies only on open source software. It combines parallelism over the different rational function coefficients with parallelism for individual expressions. The implementation is based on the Singular/GPI-Space framework for massively parallel computer algebra, which formulates parallel algorithms in terms of Petri nets. The modular nature of this approach allows for easy incorporation of future algorithmic developments into our package. We demonstrate the performance of our framework by simplifying expressions arising from current multiloop scattering amplitude problems.
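
To illustrate the kind of identity that Leinartas-type decompositions exploit (a textbook example, not the package's implementation): if two denominator factors f_1, f_2 have no common zero, Hilbert's weak Nullstellensatz guarantees polynomials h_1, h_2 with h_1 f_1 + h_2 f_2 = 1, so the product in the denominator can be split. The concrete factors below are chosen only for illustration.

```latex
\frac{1}{f_1 f_2} = \frac{h_1 f_1 + h_2 f_2}{f_1 f_2} = \frac{h_1}{f_2} + \frac{h_2}{f_1},
\qquad\text{e.g.}\quad
f_1 = x+y+1,\; f_2 = x+y,\; h_1 = 1,\; h_2 = -1:\quad
\frac{1}{(x+y)(x+y+1)} = \frac{1}{x+y} - \frac{1}{x+y+1}.
```

In general, finding the certificate polynomials h_i requires Gröbner-basis computations, which is one reason why performance becomes a concern for large sets of coefficients.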

Shearography is a non-destructive testing method for detecting subsurface defects, offering high sensitivity and full-field inspection capabilities. However, its industrial adoption remains limited due to the need for expert interpretation. To reduce reliance on labeled data and manual evaluation, this study explores unsupervised learning methods for automated anomaly detection in shearographic images. Three architectures are evaluated: a fully connected autoencoder, a convolutional autoencoder, and a student-teacher feature matching model. All models are trained solely on defect-free data. A controlled dataset was developed using a custom specimen with reproducible defect patterns, enabling systematic acquisition of shearographic measurements under both ideal and realistic deformation conditions. Two training subsets were defined: one containing only undistorted, defect-free samples, and one additionally including globally deformed, yet defect-free, data. The latter simulates practical inspection conditions by incorporating deformation-induced fringe patterns that may obscure localized anomalies. The models are evaluated in terms of binary classification and, for the student-teacher model, spatial defect localization. Results show that the student-teacher approach achieves superior classification robustness and enables precise localization. Compared to the autoencoder-based models, it demonstrates improved separability of feature representations, as visualized through t-SNE embeddings. Additionally, a YOLOv8 model trained on labeled defect data serves as a reference to benchmark localization quality. This study underscores the potential of unsupervised deep learning for scalable, label-efficient shearographic inspection in industrial environments.
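
In student-teacher feature matching, the student is trained to reproduce the teacher's features on defect-free data, so large feature discrepancies at test time hint at anomalies. The sketch below assumes the common choice of a per-location squared Euclidean distance as the anomaly map, its maximum as the image score, and a threshold taken from a quantile of defect-free validation scores; the paper's exact scoring and thresholding may differ, and the feature maps here are tiny hand-made lists rather than CNN outputs.

```python
def anomaly_map(teacher_feats, student_feats):
    """Per-location squared Euclidean distance between teacher and student feature vectors.

    Both inputs are H x W grids (nested lists) of equal-length feature vectors.
    """
    return [
        [sum((t - s) ** 2 for t, s in zip(t_vec, s_vec)) for t_vec, s_vec in zip(t_row, s_row)]
        for t_row, s_row in zip(teacher_feats, student_feats)
    ]


def image_score(amap):
    """Image-level anomaly score: the largest value in the anomaly map."""
    return max(max(row) for row in amap)


def threshold_from_normals(normal_scores, quantile=0.99):
    """Decision threshold taken as a quantile of scores on defect-free validation images."""
    ordered = sorted(normal_scores)
    idx = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[idx]


# Hypothetical 2 x 2 feature maps with 2 channels; the student fails at location (1, 1).
teacher = [[[1.0, 0.0], [0.5, 0.5]], [[0.2, 0.1], [0.0, 1.0]]]
student = [[[1.0, 0.0], [0.5, 0.5]], [[0.2, 0.1], [1.0, 0.0]]]
amap = anomaly_map(teacher, student)  # high values localize the defect
threshold = threshold_from_normals([0.1, 0.2, 0.3, 0.4])
is_defective = image_score(amap) > threshold
```

The anomaly map doubles as a localization output, while the image score supports the binary classification described above.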

03 Feb 2025

We present a photonic ultra-wideband frequency extension for a commercial Vector Network Analyzer (VNA) to perform free-space measurements in a frequency range from 70 GHz up to 520 GHz with Hz-level resolution. The concept is based on the synchronization of continuous-wave (CW) lasers with highly frequency-stable electronic emitter sources as a reference. The use of CW photomixers with bandwidths up to several terahertz allows for the straightforward expansion of the covered frequency range to 1 THz or even beyond. This can be achieved, for instance, by cascading additional lasers within the synchronization scheme. Consequently, the need for additional frequency extender modules, as seen in current state-of-the-art VNAs, is eliminated, thereby reducing the complexity and cost of the system significantly. To showcase the capabilities of the Photonic Vector Network Analyzer (PVNA) extender concept, we conducted S21 transmission measurements of various cross-shaped bandpass filters and waveguide-coupled high-frequency (HF) components. Additionally, the analyzed magnitude and phase data were either compared to electromagnetic (EM) simulations or referenced against data obtained from commercial electronic frequency extender modules.

In image processing, the amount of data to be processed grows rapidly, in particular when imaging methods yield images of more than two dimensions or time series of images. Thus, efficient processing is a challenge, as data sizes may push even supercomputers to their limits. Quantum image processing promises to encode images with logarithmically fewer qubits than the image has classical pixels. In theory, this is huge progress, but so far few experiments have been conducted in practice, in particular on real backends. Often, the precise conversion of classical data to quantum states, the exact implementation, and the interpretation of the measurements in the classical context are challenging. We investigate these practical questions in this paper. In particular, we study the feasibility of the Flexible Representation of Quantum Images (FRQI). Furthermore, we experimentally determine the limit in the current noisy intermediate-scale quantum (NISQ) era, i.e., up to which size an image can be encoded, both on simulators and on real backends. Finally, we propose a method for simplifying the circuits needed for the FRQI. With our alteration, the number of gates needed, especially of the error-prone controlled-NOT gates, can be reduced. As a consequence, the size of manageable images increases.
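
FRQI stores the intensities in the angles θ_i of one colour qubit entangled with 2n position qubits, |I⟩ = 2^(-n) Σ_i (cos θ_i |0⟩ + sin θ_i |1⟩) ⊗ |i⟩ for a 2^n × 2^n image, so 2n + 1 qubits encode 4^n pixels. The sketch below computes this state vector classically (no circuit is built), assuming intensities in [0, 1] mapped linearly to θ_i ∈ [0, π/2] and row-major pixel order; the function names are illustrative.

```python
import math


def frqi_state(pixels):
    """FRQI amplitude vector for a 2^n x 2^n image with intensities in [0, 1].

    Basis index = colour_bit * N + pixel_index, with N = 4^n pixels in row-major order,
    i.e. the colour qubit is the most significant of the 2n + 1 qubits.
    """
    side = len(pixels)
    n = side.bit_length() - 1
    if side != 2**n or any(len(row) != side for row in pixels):
        raise ValueError("image must be 2^n x 2^n")
    flat = [p for row in pixels for p in row]
    N = len(flat)
    norm = 1 / 2**n  # equals 1 / sqrt(N)
    amps = [0.0] * (2 * N)
    for i, p in enumerate(flat):
        theta = math.pi / 2 * p
        amps[i] = norm * math.cos(theta)      # colour qubit in |0>
        amps[N + i] = norm * math.sin(theta)  # colour qubit in |1>
    return amps


def decode(amps):
    """Recover intensities from exact amplitudes (possible on a simulator only)."""
    N = len(amps) // 2
    return [2 / math.pi * math.atan2(amps[N + i], amps[i]) for i in range(N)]


image = [[0.0, 0.5], [1.0, 0.25]]  # hypothetical 2 x 2 image -> 3 qubits
state = frqi_state(image)
```

On hardware the amplitudes are not directly accessible: θ_i must be estimated from measurement counts, for example the relative frequency of colour outcome 1 among shots that landed on position i estimates sin²θ_i, which is one source of the practical difficulties discussed above.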

Exploring the potential of quantum hardware for enhancing classical and real-world applications is an ongoing challenge. This study evaluates the performance of quantum and quantum-inspired methods compared to classical models for crack segmentation. Using annotated gray-scale image patches of concrete samples, we benchmark a classical mean Gaussian mixture technique, a quantum-inspired fermion-based method, Q-Seg (a quantum annealing-based method), and a U-Net deep learning architecture. Our results indicate that quantum-inspired and quantum methods offer a promising alternative for image segmentation, particularly for complex crack patterns, and could be applied in near-future applications.

This paper employs machine learning algorithms to forecast German electricity spot market prices. The forecasts use, in particular, bid and ask order book data from the spot market, but also fundamental market data such as renewable infeed and expected demand. An appropriate feature extraction for the order book data is developed. Using cross-validation to optimize hyperparameters, neural networks and random forests are proposed and compared to statistical reference models. The machine learning models outperform the traditional approaches.

The rich physical properties of multiatomic molecules and crystalline structures are determined, to a significant extent, by the underlying geometry and connectivity of atomic orbitals. This orbital degree of freedom has also been used effectively to introduce structural diversity in a few synthetic materials, including polariton lattices, nonlinear photonic lattices, and ultracold atoms in optical lattices. In particular, the mixing of orbitals with distinct parity representations, such as s and p orbitals, has been shown to be especially useful for generating systems that require alternating phase patterns, as with the sign of couplings within a lattice. Here we show that by further breaking the symmetries of such mixed-orbital lattices, it is possible to generate synthetic magnetic flux threading the lattice. This capability allows the generation of multipole higher-order topological phases in synthetic bosonic platforms, in which π flux threading each plaquette of the lattice is required, and which to date have only been implemented using tailored connectivity patterns. We use this insight to experimentally demonstrate a quadrupole photonic topological insulator in a two-dimensional lattice of waveguides that leverage modes with both s and p orbital-type representations. We confirm the nontrivial quadrupole topology of the system by observing the presence of protected zero-dimensional states, which are spatially confined to the corners, and by confirming that these states lie in the band gap. Our approach is also applicable to a broader range of time-reversal-invariant synthetic materials that do not allow for tailored connectivity, e.g. with nanoscale geometries, and in which synthetic fluxes are essential.
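
In a tight-binding picture, the synthetic flux threading a plaquette is the phase picked up by the product of the hopping amplitudes around it, so a lattice with real couplings and an odd number of negative couplings per plaquette carries π flux. The sketch below computes this phase for hypothetical coupling values; it illustrates the flux bookkeeping only and does not model the waveguide lattice or how the mixed s and p orbitals produce the sign pattern.

```python
import cmath
import math


def plaquette_flux(couplings):
    """Synthetic flux through one closed loop, mapped to [0, 2*pi).

    `couplings` are the (real or complex) hopping amplitudes met when going once
    around the plaquette; a real negative hopping contributes a phase of pi.
    """
    total = sum(cmath.phase(t) for t in couplings)
    return total % (2 * math.pi)


# Hypothetical square plaquette: three positive couplings and one negative one.
pi_flux = plaquette_flux([1.0, 0.5, 1.0, -0.5])
zero_flux = plaquette_flux([1.0, 0.5, 1.0, 0.5])
```

Only the signs (more generally, the phases) of the couplings enter; their magnitudes do not change the flux.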

Shearography is an interferometric technique sensitive to surface displacement gradients, providing high sensitivity for detecting subsurface defects in safety-critical components. A key limitation to industrial adoption is the lack of high-quality annotated datasets, since manual labeling remains labor-intensive, subjective, and difficult to standardize. We introduce an automated workflow that generates defect annotations from shearography measurements using deep learning, producing high-resolution segmentation and bounding-box labels. Evaluation against expert-labeled data demonstrates sufficient accuracy to enable weakly supervised training, reducing manual effort and supporting scalable dataset creation for robust defect detection.
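
One simple way to turn a binary defect segmentation into bounding-box labels, assumed here for illustration since the abstract does not describe how the workflow derives its boxes, is to take the extent of each connected component. The sketch below uses 4-connectivity and a breadth-first search; `bounding_boxes` is a hypothetical helper.

```python
from collections import deque


def bounding_boxes(mask):
    """Return (row_min, col_min, row_max, col_max) for each 4-connected blob of 1s."""
    rows, cols = len(mask), len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill one component and track its extent.
            seen[r][c] = True
            queue = deque([(r, c)])
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0, r1, c1 = min(r0, y), min(c0, x), max(r1, y), max(c1, x)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


# Hypothetical segmentation output with two separate defects.
mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 1, 1],
]
boxes = bounding_boxes(mask)
```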

21 Jul 2025

Since their prediction by Einstein at the dawn of quantum mechanics, Bose-Einstein condensates (BECs), owing to their ability to exhibit quantum phenomena on macroscopic scales, have been drawing increasing attention across various fields of physics. They are the subject of many fascinating observations in various physical systems, from liquid helium to dilute atomic gases. In addition to real particles like atoms and composite bosons such as Cooper pairs or excitons, this phenomenon is also observed in gases of quasiparticles such as polaritons and magnons (quanta of spin-wave excitations in magnetic media). The fundamental property of the BEC state is its coherence, which is represented by a precisely defined phase of the corresponding wave function that arises spontaneously and encompasses all particles gathered at the bottom of their spectrum. Until now, the BEC phase has only been revealed in phenomena that depend on the spatial phase difference, such as interference, second-order coherence, and macroscopic BEC motions (supercurrents, superfluidity, and Josephson oscillations). Here, we present a method for the direct time-domain measurement of the magnon BEC coherent state phase relative to an outside reference signal. We report the emergence of spontaneous coherence from a freely evolving magnon gas, which manifests as the condensation of magnons into a uniform precession state with minimal energy and a well-defined phase. These findings confirm all postulated fundamental properties of quasiparticle condensates, provide access to a new degree of freedom in such systems, and open up the possibility of information processing using microwave-frequency magnon BECs.
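
Measuring a phase relative to an outside reference is, in its simplest form, I/Q (lock-in) demodulation: multiply the signal by the reference and by its quadrature, then average. The sketch below assumes an ideally sampled sinusoid at the reference frequency and a record spanning an integer number of periods; it illustrates the general principle only, not the experimental scheme of the paper, and the frequencies are hypothetical.

```python
import math


def phase_relative_to_reference(signal, f_ref, fs):
    """Phase of `signal` relative to cos(2*pi*f_ref*t), via I/Q demodulation.

    Assumes the record covers an integer number of reference periods, so the
    2*f_ref mixing products average to zero.
    """
    n = len(signal)
    w = 2 * math.pi * f_ref / fs  # reference phase advance per sample
    i_avg = sum(s * math.cos(w * k) for k, s in enumerate(signal)) / n
    q_avg = sum(s * math.sin(w * k) for k, s in enumerate(signal)) / n
    # For s = A*cos(w*k + phi): i_avg = (A/2)*cos(phi) and q_avg = -(A/2)*sin(phi).
    return math.atan2(-q_avg, i_avg)


fs, f_ref = 1000.0, 50.0  # hypothetical sampling and reference frequencies
signal = [2.0 * math.cos(2 * math.pi * f_ref * k / fs + 0.7) for k in range(1000)]
phi = phase_relative_to_reference(signal, f_ref, fs)
```

A well-defined condensate phase would show up as a stable `phi` from shot to shot, whereas an incoherent gas would give phases scattered over the full circle.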

29 Jan 2020

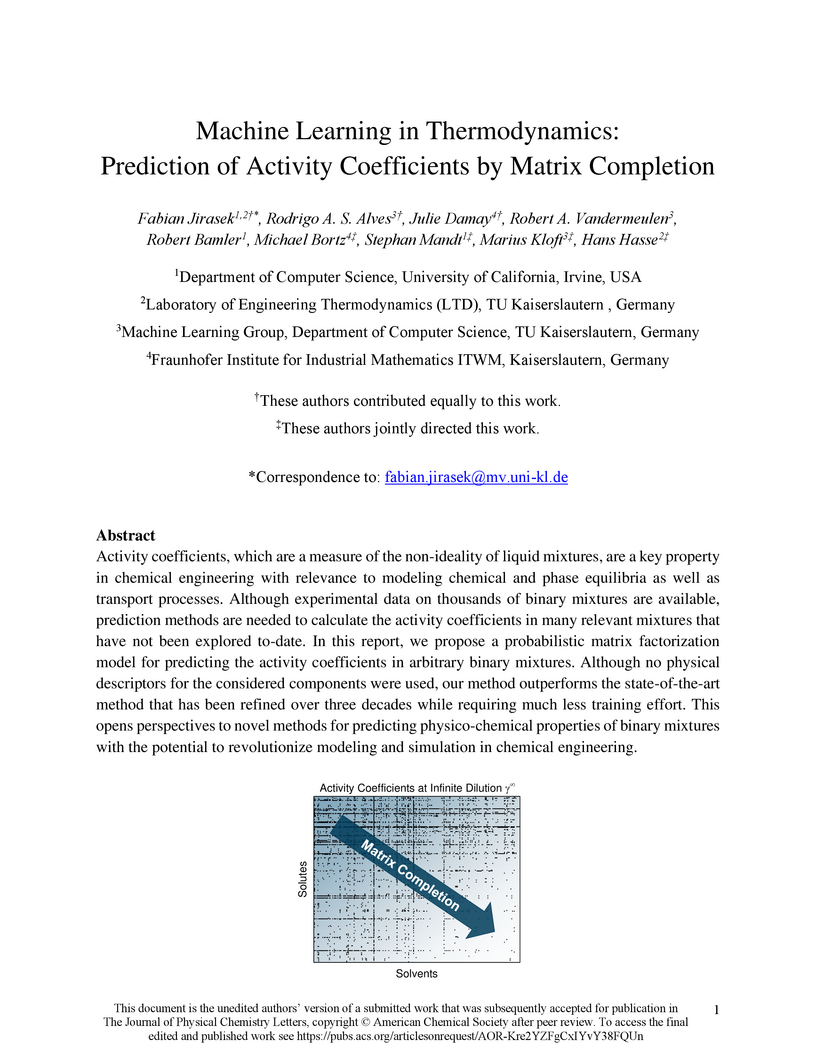

Activity coefficients, which are a measure of the non-ideality of liquid mixtures, are a key property in chemical engineering, with relevance to modeling chemical and phase equilibria as well as transport processes. Although experimental data on thousands of binary mixtures are available, prediction methods are needed to calculate the activity coefficients in the many relevant mixtures that have not been explored to date. In this report, we propose a probabilistic matrix factorization model for predicting the activity coefficients in arbitrary binary mixtures. Although no physical descriptors of the considered components were used, our method outperforms the state-of-the-art method that has been refined over three decades, while requiring much less training effort. This opens perspectives for novel methods of predicting physico-chemical properties of binary mixtures, with the potential to revolutionize modeling and simulation in chemical engineering.
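
In a probabilistic matrix factorization, each observed entry r_ij of a component-by-component matrix is modelled as u_i · v_j plus Gaussian noise, with Gaussian priors on the latent vectors; the maximum a posteriori estimate then minimizes squared error plus an L2 penalty, and unobserved mixtures are predicted from the learned vectors. The sketch below computes such a point estimate by stochastic gradient descent on a hypothetical toy matrix. This is a simplification, since the abstract does not state the paper's inference procedure, and all names and hyperparameters are assumptions.

```python
import random


def predict(U, V, i, j):
    """Predicted entry (i, j): dot product of the latent vectors."""
    return sum(u * v for u, v in zip(U[i], V[j]))


def fit_pmf(observed, n_rows, n_cols, rank=2, lam=0.01, lr=0.05, epochs=2000, seed=0):
    """MAP estimate of a probabilistic matrix factorisation, computed by SGD.

    observed: list of (i, j, value) triples for the known matrix entries.
    Gaussian noise plus zero-mean Gaussian priors on the latent vectors turn the
    MAP problem into squared error plus an L2 penalty of weight `lam`.
    """
    rng = random.Random(seed)
    U = [[rng.gauss(0, 0.1) for _ in range(rank)] for _ in range(n_rows)]
    V = [[rng.gauss(0, 0.1) for _ in range(rank)] for _ in range(n_cols)]
    data = list(observed)  # copy so shuffling does not modify the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for i, j, r in data:
            err = r - predict(U, V, i, j)
            for k in range(rank):
                u, v = U[i][k], V[j][k]
                U[i][k] += lr * (err * v - lam * u)
                V[j][k] += lr * (err * u - lam * v)
    return U, V


# Hypothetical rank-1 toy matrix M[i][j] = a[i] * b[j] with entry (2, 2) held out.
a, b = [1.0, 2.0, -1.0], [0.5, 1.0, 1.5]
obs = [(i, j, a[i] * b[j]) for i in range(3) for j in range(3) if (i, j) != (2, 2)]
U, V = fit_pmf(obs, 3, 3, rank=1, lam=0.001)
held_out_prediction = predict(U, V, 2, 2)  # true value: a[2] * b[2] = -1.5
```

The held-out entry is recovered purely from the pattern of the other entries, which mirrors how the abstract's model needs no physical descriptors of the components.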

17 Jan 2024

In this paper, we consider the topology optimization of a bipolar plate of a hydrogen electrolysis cell. We use the Borrvall-Petersson model to describe the fluid flow and derive a criterion for a uniform flow distribution in the bipolar plate. Furthermore, we introduce a novel deflation approach to compute multiple local minimizers of topology optimization problems. The approach is based on a penalty method that discourages convergence towards previously found solutions. Finally, we demonstrate this technique on the topology optimization of bipolar plates and show that multiple distinct local solutions can be found.
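
The deflation idea can be illustrated on a one-dimensional toy problem: after a minimizer is found, a penalty term centred on it is added, so that a restart from the same initial guess is pushed elsewhere. The sketch below assumes a Gaussian-bump penalty and plain gradient descent, followed by a polish on the unpenalized objective; the paper's actual penalty function and optimizer are not given in the abstract, and `J` is a hypothetical stand-in for the topology optimization objective.

```python
import math


def J(x):
    """Hypothetical 1-D stand-in for a topology-optimisation objective (two local minima)."""
    return (x * x - 1) ** 2 + 0.3 * x


def dJ(x):
    return 4 * x * (x * x - 1) + 0.3


def d_penalty(x, found, gamma=2.0, sigma=0.5):
    """Derivative of sum_k gamma * exp(-(x - x_k)^2 / (2 sigma^2)) over found minimisers x_k."""
    return sum(
        -gamma * (x - xk) / sigma**2 * math.exp(-((x - xk) ** 2) / (2 * sigma**2))
        for xk in found
    )


def descend(grad, x0, lr=0.01, steps=5000):
    """Plain fixed-step gradient descent."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


found = []
x0 = 0.5  # the same initial guess for every restart
for _ in range(2):
    # Penalised run: the bumps push the iterate away from minimisers already found.
    x_pen = descend(lambda x: dJ(x) + d_penalty(x, found), x0)
    # Polish on the unpenalised objective so the bumps do not bias the result.
    found.append(descend(dJ, x_pen))

values = [J(x) for x in found]  # found is roughly [0.96, -1.04]
```

Without the penalty, the second restart from `x0` would simply return to the first minimizer.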

Pore-scale modeling and simulation of reactive flow in porous media has a range of diverse applications and poses a number of research challenges. It is known that the morphology of a porous medium has a significant influence on the local flow rate, which can have a substantial impact on the rate of chemical reactions. While a large number of papers and software tools are dedicated to simulating either fluid flow in 3D computerized tomography (CT) images or reactive flow using pore-network models, little attention has been paid to date to the pore-scale simulation of sorptive transport in 3D CT images, which is the specific focus of this paper. Here, we first present an algorithm for the simulation of such reactive flows directly on images, which is implemented in a sophisticated software package. We then use this software to present numerical results in two resolved geometries, illustrating the importance of pore-scale simulation and the flexibility of our software package.

In p-median location interdiction the aim is to find a subset of edges in a graph, such that the objective value of the p-median problem in the same graph without the selected edges is as large as possible.

We prove that this problem is NP-hard even on acyclic graphs. Restricting the problem to trees with unit edge lengths, unit interdiction costs, and a single edge interdiction, we provide an algorithm that solves the problem in polynomial time. Furthermore, we investigate path graphs with unit and with arbitrary edge lengths. For the former case, we present an algorithm that allows multiple edges to be interdicted. For the latter case, we present a method to compute an optimal solution for one interdiction step, which can also be extended to multiple interdicted edges.

With the growing demand for efficient power electronics, SiC-based devices are progressively becoming more relevant. In contrast to established methods such as the mercury capacitance-voltage technique, terahertz spectroscopy promises a contactless characterization. In this work, we simultaneously determine the charge carrier density of SiC epilayers and their substrates in a single measurement over a wide range of about 8×10^15 cm^-3 to 4×10^18 cm^-3 using time-domain spectroscopy in a reflection geometry. Furthermore, inhomogeneities in the samples are detected by mapping the determined charge carrier densities over the whole wafer. Additional theoretical calculations confirm these results and provide thickness-dependent information on the doping range of 4H-SiC in which terahertz time-domain spectroscopy is capable of determining the charge carrier density.
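
Terahertz determination of carrier densities is commonly based on the Drude model for free carriers. Assuming that model (the abstract does not name the model used, and both the phonon contributions of SiC and the epilayer/substrate layer stack are ignored here), the density n enters the permittivity through the plasma frequency ω_p, and the normal-incidence reflection coefficient of a bulk sample follows from ε:

```latex
\varepsilon(\omega) = \varepsilon_\infty - \frac{\omega_p^2}{\omega^2 + i\gamma\omega},
\qquad
\omega_p^2 = \frac{n e^2}{\varepsilon_0 m^*},
\qquad
r(\omega) = \frac{1 - \sqrt{\varepsilon(\omega)}}{1 + \sqrt{\varepsilon(\omega)}}
```

Here ε_∞ is the high-frequency permittivity, γ the carrier scattering rate, m* the effective mass, and an e^(-iωt) time dependence is assumed. Fitting measured reflection spectra with n and γ as free parameters then yields the carrier density.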

13 May 2024

In the rapidly advancing domain of quantum optimization, combining quantum algorithms such as Quantum Annealing (QA) and the Quantum Approximate Optimization Algorithm (QAOA) with robust optimization methodologies is a cutting-edge frontier. Although applying quantum algorithms to problems under uncertainty seems natural, this direction has barely been explored.

In this paper, we adapt the aforementioned quantum optimization techniques to tackle robust optimization problems. By leveraging the inherent stochasticity of quantum annealing and adjusting the parameters and evaluation functions within QAOA, we present two new methods for obtaining robust optimal solutions. These heuristics are applied to two use cases in the energy sector: the unit commitment problem, which is central to the scheduling of power plant operations, and the optimization of electric vehicle (EV) charging, including electricity from photovoltaics (PV), to minimize costs. These examples highlight not only the potential of quantum optimization methods to enhance decision-making in energy management but also the practical relevance of the young field of quantum computing in general. Through careful adaptation of quantum algorithms, we lay the foundation for exploring ways to achieve more reliable and efficient solutions in complex real-world optimization scenarios.
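
One classical ingredient of such robust heuristics can be sketched independently of the quantum hardware: given a pool of candidate solutions (for instance, the many samples an annealer returns), score each by its worst-case cost over a set of uncertainty scenarios and keep the best. The sketch below does this for a hypothetical three-plant unit commitment toy with uncertain demand; brute-force enumeration stands in for annealer sampling, and all data are invented for illustration, not taken from the paper.

```python
from itertools import product

# Hypothetical toy unit-commitment data for three plants.
CAPACITY = [50, 30, 20]   # MW each plant can supply when switched on
RUN_COST = [100, 50, 30]  # cost of running each plant
SHORTFALL_PENALTY = 10    # cost per MW of unmet demand


def cost(commitment, demand):
    """Running cost plus penalty for unmet demand, for an on/off vector `commitment`."""
    supply = sum(c for c, on in zip(CAPACITY, commitment) if on)
    running = sum(c for c, on in zip(RUN_COST, commitment) if on)
    return running + SHORTFALL_PENALTY * max(0, demand - supply)


def most_robust(candidates, scenarios):
    """Candidate with the smallest worst-case cost over the demand scenarios."""
    return min(candidates, key=lambda x: max(cost(x, d) for d in scenarios))


# Stand-in for a pool of annealer samples: here simply all 2^3 on/off vectors.
candidates = list(product([0, 1], repeat=3))
robust = most_robust(candidates, [40, 60, 75])  # (1, 1, 0), worst-case cost 150
nominal = most_robust(candidates, [60])         # (1, 0, 1), cost 130 at demand 60
```

The nominal optimum (1, 0, 1) would cost 180 if demand reached 75, which is why the robust choice differs.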

Solving cell problems in homogenization is hard, and available deep-learning frameworks fail to match the speed and generality of traditional computational frameworks. More to the point, it is generally unclear what to expect of machine-learning approaches, let alone single out which approaches are promising. In the work at hand, we advocate Fourier Neural Operators (FNOs) for micromechanics, empowering them by insights from computational micromechanics methods based on the fast Fourier transform (FFT). We construct an FNO surrogate mimicking the basic scheme foundational for FFT-based methods and show that the resulting operator predicts solutions to cell problems with arbitrary stiffness distribution only subject to a material-contrast constraint up to a desired accuracy. In particular, there are no restrictions on the material symmetry like isotropy, on the number of phases and on the geometry of the interfaces between materials. Also, the provided fidelity is sharp and uniform, providing explicit guarantees leveraging our physical empowerment of FNOs. To show the desired universal approximation property, we construct an FNO explicitly that requires no training to begin with. Still, the obtained neural operator complies with the same memory requirements as the basic scheme and comes with runtimes proportional to classical FFT solvers. In particular, large-scale problems with more than 100 million voxels are readily handled. The goal of this work is to underline the potential of FNOs for solving micromechanical problems, linking FFT-based methods to FNOs. This connection is expected to provide a fruitful exchange between both worlds.
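
The "basic scheme" referred to here is the fixed-point iteration of Moulinec and Suquet. As a hedged illustration of what the FNO is built to mimic (the classical solver, not the paper's FNO), the sketch below runs it for a periodic one-dimensional bar with a hypothetical stiffness profile. In 1D the Green operator of a homogeneous reference medium with stiffness c0 is Γ̂0(ξ) = 1/c0 for ξ ≠ 0 and 0 at ξ = 0, so applying it amounts to removing the mean (the zero-frequency mode) and dividing by c0, and no FFT is needed. The choice c0 = (c_min + c_max)/2 is the usual one and makes the iteration a contraction with factor (c_max - c_min)/(c_max + c_min).

```python
def basic_scheme_1d(stiffness, mean_strain=1.0, tol=1e-10, max_iter=10_000):
    """Moulinec-Suquet-type fixed-point iteration for a periodic 1-D bar (laminate).

    Returns the strain field and the stress field at convergence.
    """
    c0 = 0.5 * (min(stiffness) + max(stiffness))  # reference stiffness
    n = len(stiffness)
    eps = [mean_strain] * n
    for _ in range(max_iter):
        tau = [(c - c0) * e for c, e in zip(stiffness, eps)]  # polarisation field
        tau_mean = sum(tau) / n
        # Gamma0 removes the zero-frequency mode and divides by c0; the mean strain is imposed.
        new_eps = [mean_strain - (t - tau_mean) / c0 for t in tau]
        converged = max(abs(a - b) for a, b in zip(new_eps, eps)) < tol
        eps = new_eps
        if converged:
            break
    stress = [c * e for c, e in zip(stiffness, eps)]
    return eps, stress


stiffness = [1.0, 1.0, 10.0, 10.0, 10.0, 1.0, 1.0, 1.0]  # hypothetical two-phase bar
eps, stress = basic_scheme_1d(stiffness)
```

At the fixed point the stress is uniform (equilibrium in 1D), and the effective stiffness equals the harmonic mean of the local stiffnesses, here 8/5.3 ≈ 1.509, which gives an easy correctness check.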

For the numerical solution of shape optimization problems, particularly those constrained by partial differential equations (PDEs), the quality of the underlying mesh is of utmost importance. Particularly when investigating complex geometries, the mesh quality tends to deteriorate over the course of a shape optimization, so that either the optimization comes to a halt or an expensive remeshing operation must be performed before the optimization can be continued. In this paper, we present a novel, semi-discrete approach for enforcing a minimum mesh quality in shape optimization. Our approach is based on Rosen's gradient projection method, which incorporates mesh quality constraints into the shape optimization problem. The proposed constraints bound the angles of triangular and the solid angles of tetrahedral mesh cells and, thus, also bound the quality of these mesh cells. The method treats these constraints by projecting the search direction onto the linear subspace defined by the currently active constraints. Additionally, only slight modifications to the usual line search procedure are required to ensure the feasibility of the method. We present our method for two- and three-dimensional simplicial meshes. We investigate the proposed approach numerically for the drag minimization of an obstacle in a two-dimensional Stokes flow, the optimization of the flow in a pipe governed by the Navier-Stokes equations, and the large-scale, three-dimensional optimization of a structured packing used in a distillation column. Our results show that the proposed method is indeed capable of guaranteeing a minimum mesh quality for both academic examples and challenging industrial applications. In particular, our approach allows the shape optimization of complex structures while ensuring that the mesh quality does not deteriorate.
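
Rosen's gradient projection method moves along the search direction projected onto the subspace orthogonal to the gradients of the currently active constraints, P = I - Aᵀ(AAᵀ)⁻¹A, so that, to first order, active constraints stay active. The sketch below implements this projection for generic vectors via Gram-Schmidt, which is equivalent when the rows of A are linearly independent; it does not compute the angle-constraint gradients of the paper, and the function name is hypothetical.

```python
def project_onto_active_null_space(direction, active_grads, tol=1e-12):
    """Project `direction` onto the subspace orthogonal to all active-constraint gradients.

    Equivalent to applying P = I - A^T (A A^T)^{-1} A when the rows of A are linearly
    independent; Gram-Schmidt avoids forming (A A^T)^{-1} explicitly.
    """
    basis = []
    for g in active_grads:
        v = list(g)
        for b in basis:
            coeff = sum(x * y for x, y in zip(v, b))
            v = [x - coeff * y for x, y in zip(v, b)]
        norm = sum(x * x for x in v) ** 0.5
        if norm > tol:  # skip gradients that are linearly dependent on earlier ones
            basis.append([x / norm for x in v])
    d = list(direction)
    for b in basis:
        coeff = sum(x * y for x, y in zip(d, b))
        d = [x - coeff * y for x, y in zip(d, b)]
    return d


# Two active constraints spanning the x-y plane: only the z-component survives.
projected = project_onto_active_null_space([1, 1, 1], [[1, 0, 0], [1, 1, 0]])
```

In the full method, a projected direction of (numerically) zero length signals that the active set must be revised before the line search continues.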

Heidelberg University; University of Stuttgart; University of Heidelberg; TU Dortmund; University of Münster; Fraunhofer Institute for Industrial Mathematics ITWM; TU Clausthal-Zellerfeld; Interdisciplinary Center for Scientific Computing, Heidelberg University; Institute for Analysis and Numerics, University of Münster; Institute for Applied Analysis and Numerical Simulation, University of Stuttgart; Inst. f. Applied Mathematics, TU Dortmund

In the Exa-Dune project, we have developed, implemented and optimised numerical algorithms and software for the scalable solution of partial differential equations (PDEs) on future exascale systems exhibiting a heterogeneous massively parallel architecture. In order to cope with the increased probability of hardware failures, one aim of the project was to add flexible, application-oriented resilience capabilities to the framework. Continuous improvement of the underlying hardware-oriented numerical methods has included GPU-based sparse approximate inverses, matrix-free sum factorisation for high-order discontinuous Galerkin discretisations, and partially matrix-free preconditioners. On top of that, additional scalability is facilitated by exploiting the massive coarse-grained parallelism offered by multiscale and uncertainty quantification methods, where we have focused on the adaptive choice of the coarse/fine scale and the overlap region as well as on the combination of local reduced basis multiscale methods and the multilevel Monte Carlo algorithm. Finally, some of the concepts are applied in a land-surface model including subsurface flow and surface runoff.
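
The multilevel Monte Carlo algorithm rests on the telescoping sum E[Q_L] = E[Q_0] + Σ_{l=1..L} E[Q_l - Q_{l-1}], which combines many cheap coarse samples with few expensive fine ones; every sample is independent, which is the coarse-grained parallelism mentioned above. The sketch below replaces the PDE by a hypothetical toy quantity, the midpoint-rule value of ∫_0^1 exp(ωx) dx with ω uniform on [0, 1]; the sample counts are arbitrary choices.

```python
import math
import random


def q_level(omega, level):
    """Midpoint rule with 2**level cells for the toy quantity Q(omega) = int_0^1 exp(omega*x) dx."""
    m = 2**level
    h = 1.0 / m
    return h * sum(math.exp(omega * (k + 0.5) * h) for k in range(m))


def mlmc_estimate(max_level, samples_per_level, seed=0):
    """Telescoping estimator E[Q_L] = E[Q_0] + sum_{l=1..L} E[Q_l - Q_{l-1}]."""
    if len(samples_per_level) != max_level + 1:
        raise ValueError("need one sample count per level")
    rng = random.Random(seed)
    total = 0.0
    for level, n in enumerate(samples_per_level):
        acc = 0.0
        for _ in range(n):  # independent samples: trivially parallel
            omega = rng.random()  # the same random input is used on both levels
            fine = q_level(omega, level)
            coarse = q_level(omega, level - 1) if level > 0 else 0.0
            acc += fine - coarse
        total += acc / n
    return total


estimate = mlmc_estimate(3, [4000, 1000, 250, 60])  # many coarse, few fine samples
```

For this toy problem the exact expectation is Σ_{k≥1} 1/(k·k!) ≈ 1.3179, so the estimate can be checked directly. Because the level differences have small variance, only a few samples are needed on the expensive fine levels.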

02 Nov 2022

Novel bifunctional cellulose diacetate derivatives were synthesized to obtain bio-based photoresists that can be structured by two-photon absorption via direct laser writing (DLW) without the need for a photoinitiator. To this end, cellulose diacetate is functionalized with thiol moieties and olefinic or methacrylic side groups, enabling thiol-conjugated crosslinking. These cellulose derivatives are also photo-crosslinkable via UV irradiation (λ = 254 nm and 365 nm) without an initiator.
