University of Yamanashi

Purpose: In radiology, large language models (LLMs), including ChatGPT, have recently gained attention, and their utility is being rapidly evaluated. However, concerns have emerged regarding their reliability in clinical applications due to limitations such as hallucinations and insufficient referencing. To address these issues, we focus on the latest technology, retrieval-augmented generation (RAG), which enables LLMs to reference reliable external knowledge (REK). Specifically, this study examines the utility and reliability of a recently released RAG-equipped LLM (RAG-LLM), NotebookLM, for staging lung cancer.

Materials and methods: We summarized the current lung cancer staging guideline in Japan and provided this as REK to NotebookLM. We then tasked NotebookLM with staging 100 fictional lung cancer cases based on CT findings and evaluated its accuracy. For comparison, we performed the same task using a gold-standard LLM, GPT-4 Omni (GPT-4o), both with and without the REK.

Results: NotebookLM achieved 86% diagnostic accuracy in the lung cancer staging experiment, outperforming GPT-4o, which recorded 39% accuracy with the REK and 25% without it. Moreover, NotebookLM demonstrated 95% accuracy in searching reference locations within the REK.

Conclusion: NotebookLM successfully performed lung cancer staging by utilizing the REK, demonstrating superior performance compared to GPT-4o. Additionally, it provided highly accurate reference locations within the REK, allowing radiologists to efficiently evaluate the reliability of NotebookLM's responses and detect possible hallucinations. Overall, this study highlights the potential of NotebookLM, a RAG-LLM, in image diagnosis.

Large language model (LLM)-based recommender models that bridge users and items through textual prompts for effective semantic reasoning have gained considerable attention. However, few methods consider the underlying rationales behind interactions, such as user preferences and item attributes, limiting the reasoning capability of LLMs for recommendations. This paper proposes a rationale distillation recommender (RDRec), a compact model designed to learn rationales generated by a larger language model (LM). By leveraging rationales from reviews related to users and items, RDRec remarkably specifies their profiles for recommendations. Experiments show that RDRec achieves state-of-the-art (SOTA) performance in both top-N and sequential recommendations. Our source code is released at this https URL.

Given a set of ideas collected from crowds with regard to an open-ended

question, how can we organize and prioritize them in order to determine the

preferred ones based on preference comparisons by crowd evaluators? As there

are diverse latent criteria for the value of an idea, multiple ideas can be

considered as "the best". In addition, evaluators can have different preference

criteria, and their comparison results often disagree.

In this paper, we propose an analysis method for obtaining a subset of ideas,

which we call frontier ideas, that are the best in terms of at least one latent

evaluation criterion. We propose an approach, called CrowDEA, which estimates

the embeddings of the ideas in the multiple-criteria preference space, the best

viewpoint for each idea, and preference criterion for each evaluator, to obtain

a set of frontier ideas. Experimental results using real datasets containing

numerous ideas or designs demonstrate that the proposed approach can

effectively prioritize ideas from multiple viewpoints, thereby detecting

frontier ideas. The embeddings of ideas learned by the proposed approach

provide a visualization that facilitates observation of the frontier ideas. In

addition, the proposed approach prioritizes ideas from a wider variety of

viewpoints, whereas the baselines tend to use to the same viewpoints; it can

also handle various viewpoints and prioritize ideas in situations where only a

limited number of evaluators or labels are available.

Crowdsourcing has been used to collect data at scale in numerous fields. Triplet similarity comparison is a type of crowdsourcing task, in which crowd workers are asked the question ``among three given objects, which two are more similar?'', which is relatively easy for humans to answer. However, the comparison can be sometimes based on multiple views, i.e., different independent attributes such as color and shape. Each view may lead to different results for the same three objects. Although an algorithm was proposed in prior work to produce multiview embeddings, it involves at least two problems: (1) the existing algorithm cannot independently predict multiview embeddings for a new sample, and (2) different people may prefer different views. In this study, we propose an end-to-end inductive deep learning framework to solve the multiview representation learning problem. The results show that our proposed method can obtain multiview embeddings of any object, in which each view corresponds to an independent attribute of the object. We collected two datasets from a crowdsourcing platform to experimentally investigate the performance of our proposed approach compared to conventional baseline methods.

04 Sep 2024

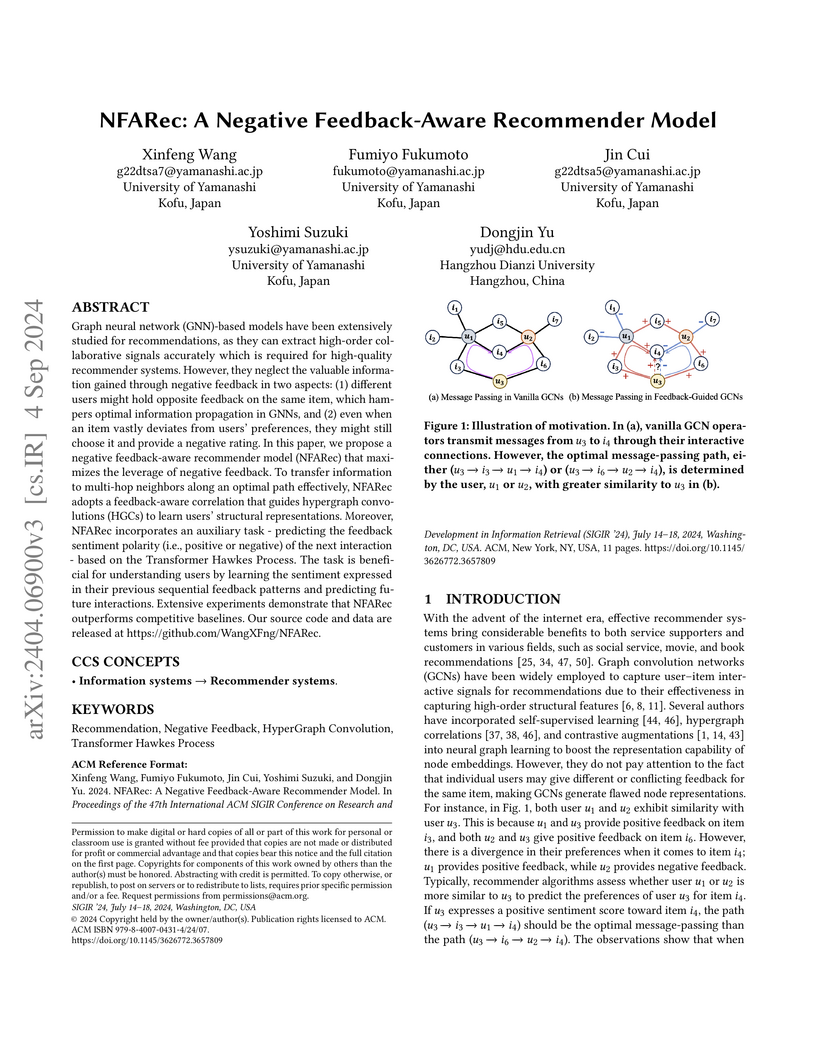

Graph neural network (GNN)-based models have been extensively studied for recommendations, as they can extract high-order collaborative signals accurately which is required for high-quality recommender systems. However, they neglect the valuable information gained through negative feedback in two aspects: (1) different users might hold opposite feedback on the same item, which hampers optimal information propagation in GNNs, and (2) even when an item vastly deviates from users' preferences, they might still choose it and provide a negative rating. In this paper, we propose a negative feedback-aware recommender model (NFARec) that maximizes the leverage of negative feedback. To transfer information to multi-hop neighbors along an optimal path effectively, NFARec adopts a feedback-aware correlation that guides hypergraph convolutions (HGCs) to learn users' structural representations. Moreover, NFARec incorporates an auxiliary task - predicting the feedback sentiment polarity (i.e., positive or negative) of the next interaction - based on the Transformer Hawkes Process. The task is beneficial for understanding users by learning the sentiment expressed in their previous sequential feedback patterns and predicting future interactions. Extensive experiments demonstrate that NFARec outperforms competitive baselines. Our source code and data are released at this https URL.

There has been growing interest in Multimodal Aspect-Based Sentiment Analysis

(MABSA) in recent years. Existing methods predominantly rely on pre-trained

small language models (SLMs) to collect information related to aspects and

sentiments from both image and text, with an aim to align these two modalities.

However, small SLMs possess limited capacity and knowledge, often resulting in

inaccurate identification of meaning, aspects, sentiments, and their

interconnections in textual and visual data. On the other hand, Large language

models (LLMs) have shown exceptional capabilities in various tasks by

effectively exploring fine-grained information in multimodal data. However,

some studies indicate that LLMs still fall short compared to fine-tuned small

models in the field of ABSA. Based on these findings, we propose a novel

framework, termed LRSA, which combines the decision-making capabilities of SLMs

with additional information provided by LLMs for MABSA. Specifically, we inject

explanations generated by LLMs as rationales into SLMs and employ a dual

cross-attention mechanism for enhancing feature interaction and fusion, thereby

augmenting the SLMs' ability to identify aspects and sentiments. We evaluated

our method using two baseline models, numerous experiments highlight the

superiority of our approach on three widely-used benchmarks, indicating its

generalizability and applicability to most pre-trained models for MABSA.

Question answering (QA) tasks have been extensively studied in the field of natural language processing (NLP). Answers to open-ended questions are highly diverse and difficult to quantify, and cannot be simply evaluated as correct or incorrect, unlike close-ended questions with definitive answers. While large language models (LLMs) have demonstrated strong capabilities across various tasks, they exhibit relatively weaker performance in evaluating answers to open-ended questions. In this study, we propose a method that leverages LLMs and the analytic hierarchy process (AHP) to assess answers to open-ended questions. We utilized LLMs to generate multiple evaluation criteria for a question. Subsequently, answers were subjected to pairwise comparisons under each criterion with LLMs, and scores for each answer were calculated in the AHP. We conducted experiments on four datasets using both ChatGPT-3.5-turbo and GPT-4. Our results indicate that our approach more closely aligns with human judgment compared to the four baselines. Additionally, we explored the impact of the number of criteria, variations in models, and differences in datasets on the results.

13 Apr 2025

A unified thermodynamic formalism describing the efficiency of learning is

proposed. First, we derive an inequality, which is more strength than

Clausius's inequality, revealing the lower bound of the entropy-production rate

of a subsystem. Second, the inequality is transformed to determine the general

upper limit for the efficiency of learning. In particular, we exemplify the

bound of the efficiency in nonequilibrium quantum-dot systems and networks of

living cells. The framework provides a fundamental trade-off relationship

between energy and information inheriting in stochastic thermodynamic

processes.

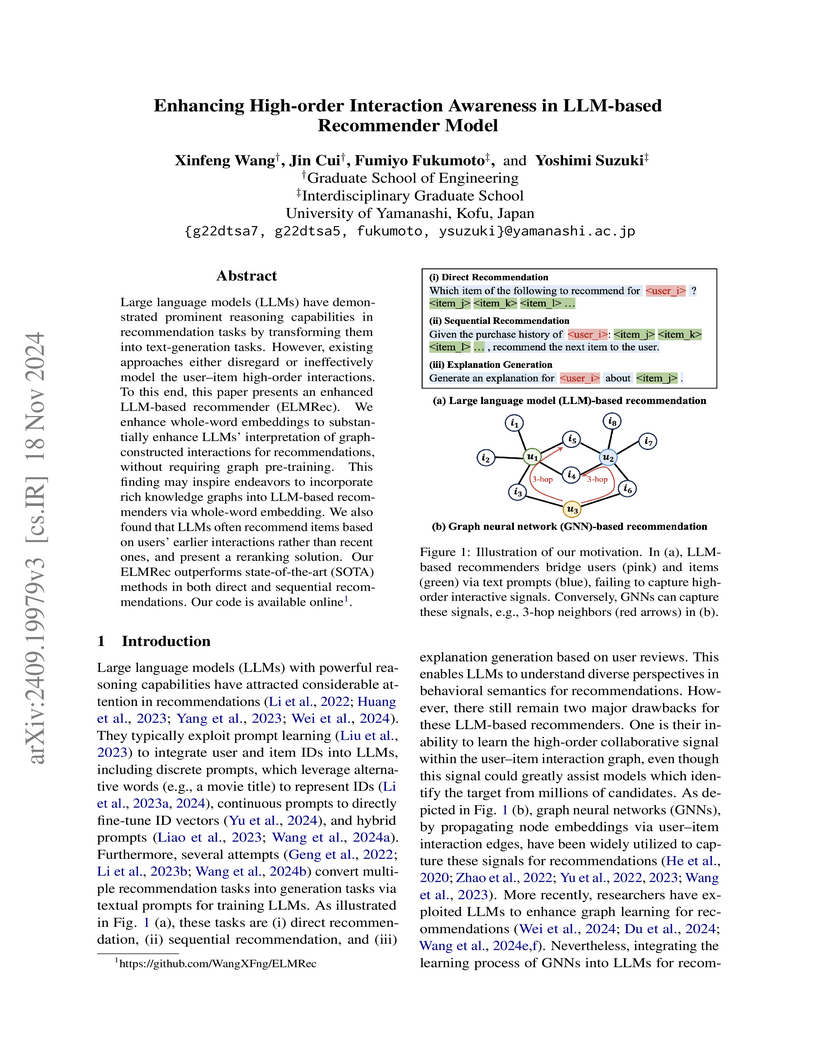

Researchers at the University of Yamanashi developed ELMRec, a large language model-based recommender system that effectively incorporates high-order user-item interaction awareness using graph-derived whole-word embeddings. ELMRec consistently surpassed existing LLM and GNN-based recommenders across multiple datasets, for instance, outperforming previous LLM-based competitors by up to 293.7% in direct recommendation.

This paper presents a novel ECG denoising method using a CNN with integrated wavelet transform layers to better handle overlapping noise frequencies.

26 Mar 2013

University of Utah Kyoto UniversityTokyo University of ScienceYonsei University

Kyoto UniversityTokyo University of ScienceYonsei University RIKENKavli Institute for the Physics and Mathematics of the Universe

RIKENKavli Institute for the Physics and Mathematics of the Universe University of TokyoChiba UniversityHanyang UniversityRitsumeikan UniversityChungnam National UniversityEhime UniversityTokyo Institute of TechnologySaitama University

University of TokyoChiba UniversityHanyang UniversityRitsumeikan UniversityChungnam National UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityKinki UniversityInstitute of Particle and Nuclear Studies, KEKUniversity Libre de BruxellesNational Institute of Radiological ScienceRutgers—The State University of New JerseyOsaka-city University

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityKinki UniversityInstitute of Particle and Nuclear Studies, KEKUniversity Libre de BruxellesNational Institute of Radiological ScienceRutgers—The State University of New JerseyOsaka-city University

Kyoto UniversityTokyo University of ScienceYonsei University

Kyoto UniversityTokyo University of ScienceYonsei University RIKENKavli Institute for the Physics and Mathematics of the Universe

RIKENKavli Institute for the Physics and Mathematics of the Universe University of TokyoChiba UniversityHanyang UniversityRitsumeikan UniversityChungnam National UniversityEhime UniversityTokyo Institute of TechnologySaitama University

University of TokyoChiba UniversityHanyang UniversityRitsumeikan UniversityChungnam National UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityKinki UniversityInstitute of Particle and Nuclear Studies, KEKUniversity Libre de BruxellesNational Institute of Radiological ScienceRutgers—The State University of New JerseyOsaka-city University

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityKinki UniversityInstitute of Particle and Nuclear Studies, KEKUniversity Libre de BruxellesNational Institute of Radiological ScienceRutgers—The State University of New JerseyOsaka-city UniversityThe Telescope Array (TA) collaboration has measured the energy spectrum of ultra-high energy cosmic rays with primary energies above 1.6 x 10^(18) eV. This measurement is based upon four years of observation by the surface detector component of TA. The spectrum shows a dip at an energy of 4.6 x 10^(18) eV and a steepening at 5.4 x 10^(19) eV which is consistent with the expectation from the GZK cutoff. We present the results of a technique, new to the analysis of ultra-high energy cosmic ray surface detector data, that involves generating a complete simulation of ultra-high energy cosmic rays striking the TA surface detector. The procedure starts with shower simulations using the CORSIKA Monte Carlo program where we have solved the problems caused by use of the "thinning" approximation. This simulation method allows us to make an accurate calculation of the acceptance of the detector for the energies concerned.

11 Apr 2018

University of UtahSungkyunkwan UniversityTokyo University of ScienceYonsei University RIKENUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityKindai UniversityTokyo Institute of TechnologySaitama University

RIKENUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityKindai UniversityTokyo Institute of TechnologySaitama University Waseda UniversityShinshu UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical InstituteTokyo City UniversityInstitute for Cosmic Ray ResearchOsaka Electro-Communication UniversityKavli Institute for the Physics and Mathematics of the Universe (WPI),Rutgers University, The State University of New JerseyEarthquake Research InstituteOsaka-city UniversityUniversit

Libre de Bruxelles

Waseda UniversityShinshu UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical InstituteTokyo City UniversityInstitute for Cosmic Ray ResearchOsaka Electro-Communication UniversityKavli Institute for the Physics and Mathematics of the Universe (WPI),Rutgers University, The State University of New JerseyEarthquake Research InstituteOsaka-city UniversityUniversit

Libre de Bruxelles

RIKENUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityKindai UniversityTokyo Institute of TechnologySaitama University

RIKENUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityKindai UniversityTokyo Institute of TechnologySaitama University Waseda UniversityShinshu UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical InstituteTokyo City UniversityInstitute for Cosmic Ray ResearchOsaka Electro-Communication UniversityKavli Institute for the Physics and Mathematics of the Universe (WPI),Rutgers University, The State University of New JerseyEarthquake Research InstituteOsaka-city UniversityUniversit

Libre de Bruxelles

Waseda UniversityShinshu UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical InstituteTokyo City UniversityInstitute for Cosmic Ray ResearchOsaka Electro-Communication UniversityKavli Institute for the Physics and Mathematics of the Universe (WPI),Rutgers University, The State University of New JerseyEarthquake Research InstituteOsaka-city UniversityUniversit

Libre de BruxellesOne of the uncertainties in interpretation of ultra-high energy cosmic ray (UHECR) data comes from the hadronic interaction models used for air shower Monte Carlo (MC) simulations. The number of muons observed at the ground from UHECR-induced air showers is expected to depend upon the hadronic interaction model. One may therefore test the hadronic interaction models by comparing the measured number of muons with the MC prediction. In this paper, we present the results of studies of muon densities in UHE extensive air showers obtained by analyzing the signal of surface detector stations which should have high muonpurity. The muon purity of a station will depend on both the inclination of the shower and the relative position of the station. In 7 years' data from the Telescope Array experiment, we find that the number of particles observed for signals with an expected muon purity of ∼65% at a lateral distance of 2000 m from the shower core is 1.72±0.10(stat.)±0.37(syst.) times larger than the MC prediction value using the QGSJET II-03 model for proton-induced showers. A similar effect is also seen in comparisons with other hadronic models such as QGSJET II-04, which shows a 1.67±0.10±0.36 excess. We also studied the dependence of these excesses on lateral distances and found a slower decrease of the lateral distribution of muons in the data as compared to the MC, causing larger discrepancy at larger lateral distances.

The prediction of odor characters is still impossible based on the odorant molecular structure. We designed a CNN-based regressor for computed parameters in molecular vibrations (CNN\_vib), in order to investigate the ability to predict odor characters of molecular vibrations. In this study, we explored following three approaches for the predictability; (i) CNN with molecular vibrational parameters, (ii) logistic regression based on vibrational spectra, and (iii) logistic regression with molecular fingerprint(FP). Our investigation demonstrates that both (i) and (ii) provide predictablity, and also that the vibrations as an explanatory variable (i and ii) and logistic regression with fingerprints (iii) show nearly identical tendencies. The predictabilities of (i) and (ii), depending on odor descriptors, are comparable to those of (iii). Our research shows that odor is predictable by odorant molecular vibration as well as their shapes alone. Our findings provide insight into the representation of molecular motional features beyond molecular structures.

04 Sep 2024

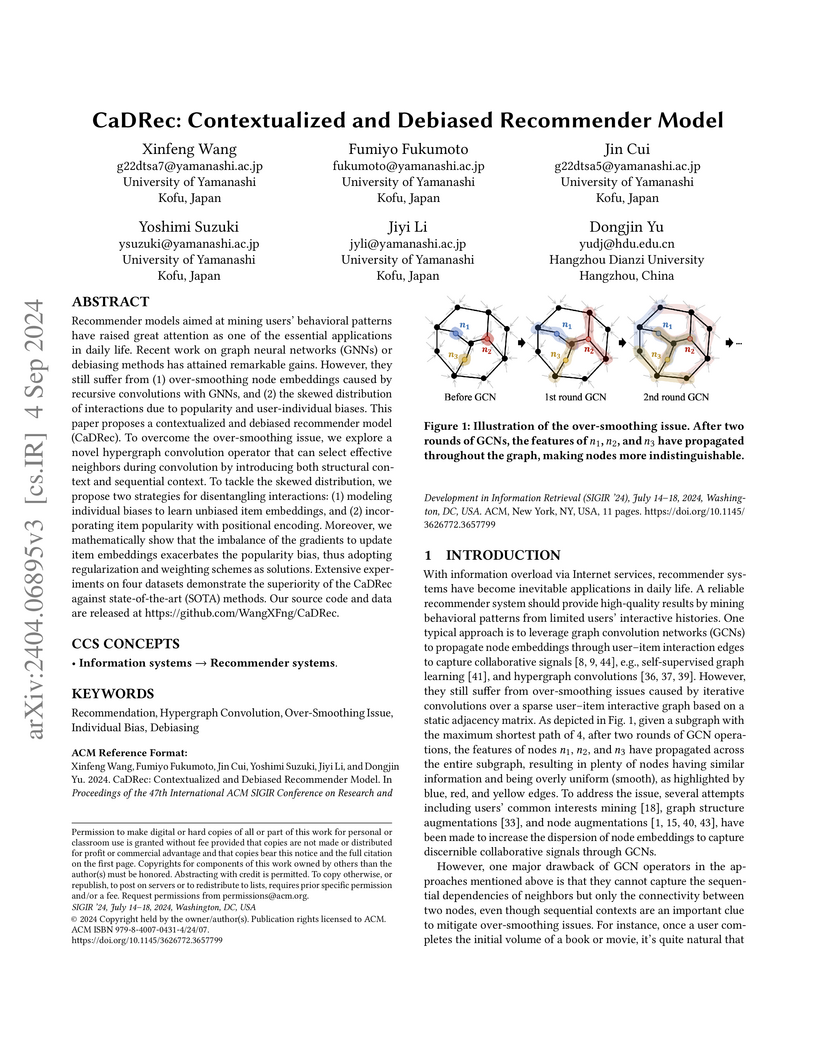

Recommender models aimed at mining users' behavioral patterns have raised

great attention as one of the essential applications in daily life. Recent work

on graph neural networks (GNNs) or debiasing methods has attained remarkable

gains. However, they still suffer from (1) over-smoothing node embeddings

caused by recursive convolutions with GNNs, and (2) the skewed distribution of

interactions due to popularity and user-individual biases. This paper proposes

a contextualized and debiased recommender model (CaDRec). To overcome the

over-smoothing issue, we explore a novel hypergraph convolution operator that

can select effective neighbors during convolution by introducing both

structural context and sequential context. To tackle the skewed distribution,

we propose two strategies for disentangling interactions: (1) modeling

individual biases to learn unbiased item embeddings, and (2) incorporating item

popularity with positional encoding. Moreover, we mathematically show that the

imbalance of the gradients to update item embeddings exacerbates the popularity

bias, thus adopting regularization and weighting schemes as solutions.

Extensive experiments on four datasets demonstrate the superiority of the

CaDRec against state-of-the-art (SOTA) methods. Our source code and data are

released at this https URL

University of Yamanashi researchers developed a Retrieval-Augmented Generation (RAG) enhanced Large Language Model (NotebookLM) to stage pancreatic cancer, achieving 70% accuracy compared to 38% for its base LLM while also providing transparent justification through retrieved guidelines.

University of UtahKEKSungkyunkwan UniversityTokyo University of ScienceYonsei University RIKENKavli Institute for the Physics and Mathematics of the UniverseUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University

RIKENKavli Institute for the Physics and Mathematics of the UniverseUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical Institute, Moscow M.V. Lomonosov State UniversityTokyo City UniversityInstitute for Cosmic Ray Research, University of TokyoHiroshima City UniversityRutgers University, The State University of New JerseyEarthquake Research Institute, University of TokyoKinki UniversityNational Institute of Radiological ScienceOsaka-city UniversityUniversit

Libre de Bruxelles

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical Institute, Moscow M.V. Lomonosov State UniversityTokyo City UniversityInstitute for Cosmic Ray Research, University of TokyoHiroshima City UniversityRutgers University, The State University of New JerseyEarthquake Research Institute, University of TokyoKinki UniversityNational Institute of Radiological ScienceOsaka-city UniversityUniversit

Libre de Bruxelles

RIKENKavli Institute for the Physics and Mathematics of the UniverseUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University

RIKENKavli Institute for the Physics and Mathematics of the UniverseUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical Institute, Moscow M.V. Lomonosov State UniversityTokyo City UniversityInstitute for Cosmic Ray Research, University of TokyoHiroshima City UniversityRutgers University, The State University of New JerseyEarthquake Research Institute, University of TokyoKinki UniversityNational Institute of Radiological ScienceOsaka-city UniversityUniversit

Libre de Bruxelles

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiSternberg Astronomical Institute, Moscow M.V. Lomonosov State UniversityTokyo City UniversityInstitute for Cosmic Ray Research, University of TokyoHiroshima City UniversityRutgers University, The State University of New JerseyEarthquake Research Institute, University of TokyoKinki UniversityNational Institute of Radiological ScienceOsaka-city UniversityUniversit

Libre de BruxellesPrevious measurements of the composition of Ultra-High Energy Cosmic Rays(UHECRs) made by the High Resolution Fly's Eye(HiRes) and Pierre Auger Observatory(PAO) are seemingly contradictory, but utilize different detection methods, as HiRes was a stereo detector and PAO is a hybrid detector. The five year Telescope Array(TA) Middle Drum hybrid composition measurement is similar in some, but not all, respects in methodology to PAO, and good agreement is evident between data and a light, largely protonic, composition when comparing the measurements to predictions obtained with the QGSJetII-03 and QGSJet-01c models. These models are also in agreement with previous HiRes stereo measurements, confirming the equivalence of the stereo and hybrid methods. The data is incompatible with a pure iron composition, for all models examined, over the available range of energies. The elongation rate and mean values of Xmax are in good agreement with Pierre Auger Observatory data. This analysis is presented using two methods: data cuts using simple geometrical variables and a new pattern recognition technique.

16 Jul 2014

University of UtahKEKSungkyunkwan UniversityTokyo University of ScienceYonsei University RIKEN

RIKEN University of TokyoUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University

University of TokyoUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityRutgers University, The State University of New JerseyKinki UniversityUniversit ́eLibre de BruxellesNational Institute of Radiological ScienceOsaka-city University

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityRutgers University, The State University of New JerseyKinki UniversityUniversit ́eLibre de BruxellesNational Institute of Radiological ScienceOsaka-city University

RIKEN

RIKEN University of TokyoUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University

University of TokyoUlsan National Institute of Science and TechnologyChiba UniversityHanyang UniversityRitsumeikan UniversityEhime UniversityTokyo Institute of TechnologySaitama University Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityRutgers University, The State University of New JerseyKinki UniversityUniversit ́eLibre de BruxellesNational Institute of Radiological ScienceOsaka-city University

Waseda UniversityEwha Womans UniversityKanagawa UniversityKochi UniversityInstitute for Nuclear Research of the Russian Academy of SciencesUniversity of YamanashiTokyo City UniversityHiroshima City UniversityRutgers University, The State University of New JerseyKinki UniversityUniversit ́eLibre de BruxellesNational Institute of Radiological ScienceOsaka-city UniversityWe have searched for intermediate-scale anisotropy in the arrival directions

of ultrahigh-energy cosmic rays with energies above 57~EeV in the northern sky

using data collected over a 5 year period by the surface detector of the

Telescope Array experiment. We report on a cluster of events that we call the

hotspot, found by oversampling using 20∘-radius circles. The hotspot has

a Li-Ma statistical significance of 5.1σ, and is centered at

R.A.=146.7∘, Dec.=43.2∘. The position of the hotspot is about

19∘ off of the supergalactic plane. The probability of a cluster of

events of 5.1σ significance, appearing by chance in an isotropic

cosmic-ray sky, is estimated to be 3.7×10−4 (3.4σ).

Finding synthetic artifacts of spoofing data will help the anti-spoofing countermeasures (CMs) system discriminate between spoofed and real speech. The Conformer combines the best of convolutional neural network and the Transformer, allowing it to aggregate global and local information. This may benefit the CM system to capture the synthetic artifacts hidden both locally and globally. In this paper, we present the transfer learning based MFA-Conformer structure for CM systems. By pre-training the Conformer encoder with different tasks, the robustness of the CM system is enhanced. The proposed method is evaluated on both Chinese and English spoofing detection databases. In the FAD clean set, proposed method achieves an EER of 0.04%, which dramatically outperforms the baseline. Our system is also comparable to the pre-training methods base on Wav2Vec 2.0. Moreover, we also provide a detailed analysis of the robustness of different models.

The quality is a crucial issue for crowd annotations. Answer aggregation is an important type of solution. The aggregated answers estimated from multiple crowd answers to the same instance are the eventually collected annotations, rather than the individual crowd answers themselves. Recently, the capability of Large Language Models (LLMs) on data annotation tasks has attracted interest from researchers. Most of the existing studies mainly focus on the average performance of individual crowd workers; several recent works studied the scenarios of aggregation on categorical labels and LLMs used as label creators. However, the scenario of aggregation on text answers and the role of LLMs as aggregators are not yet well-studied. In this paper, we investigate the capability of LLMs as aggregators in the scenario of close-ended crowd text answer aggregation. We propose a human-LLM hybrid text answer aggregation method with a Creator-Aggregator Multi-Stage (CAMS) crowdsourcing framework. We make the experiments based on public crowdsourcing datasets. The results show the effectiveness of our approach based on the collaboration of crowd workers and LLMs.

We have held dialogue robot competitions in 2020 and 2022 to compare the performances of interactive robots using an android that closely resembles a human. In 2023, the third competition DRC2023 was held. The task of DRC2023 was designed to be more challenging than the previous travel agent dialogue tasks. Since anyone can now develop a dialogue system using LLMs, the participating teams are required to develop a system that effectively uses information about the situation on the spot (real-time information), which is not handled by ChatGPT and other systems. DRC2023 has two rounds, a preliminary round and the final round as well as the previous competitions. The preliminary round has held on Oct.27 -- Nov.20, 2023 at real travel agency stores. The final round will be held on December 23, 2023. This paper provides an overview of the task settings and evaluation method of DRC2023 and the preliminary round results.

There are no more papers matching your filters at the moment.