Helmut Schmidt University

03 Dec 2025

A new benchmark evaluates Large Language Model (LLM) agents in planning and real-time execution within a dynamic Blocksworld simulation, integrating them via the Model Context Protocol (MCP). It demonstrates that an LLM agent achieved 80% success on basic tasks and 100% in identifying impossible scenarios, but its performance dropped to 60% with partial observability and increased significantly in execution time and token consumption for more complex challenges.

This expository paper introduces a simplified approach to image-based quality inspection in manufacturing using OpenAI's CLIP (Contrastive Language-Image Pretraining) model adapted for few-shot learning. While CLIP has demonstrated impressive capabilities in general computer vision tasks, its direct application to manufacturing inspection presents challenges due to the domain gap between its training data and industrial applications. We evaluate CLIP's effectiveness through five case studies: metallic pan surface inspection, 3D printing extrusion profile analysis, stochastic textured surface evaluation, automotive assembly inspection, and microstructure image classification. Our results show that CLIP can achieve high classification accuracy with relatively small learning sets (50-100 examples per class) for single-component and texture-based applications. However, the performance degrades with complex multi-component scenes. We provide a practical implementation framework that enables quality engineers to quickly assess CLIP's suitability for their specific applications before pursuing more complex solutions. This work establishes CLIP-based few-shot learning as an effective baseline approach that balances implementation simplicity with robust performance, demonstrated in several manufacturing quality control applications.

Fast and accurate 3D shape generation from point clouds is essential for applications in robotics, AR/VR, and digital content creation. We introduce ConTiCoM-3D, a continuous-time consistency model that synthesizes 3D shapes directly in point space, without discretized diffusion steps, pre-trained teacher models, or latent-space encodings. The method integrates a TrigFlow-inspired continuous noise schedule with a Chamfer Distance-based geometric loss, enabling stable training on high-dimensional point sets while avoiding expensive Jacobian-vector products. This design supports efficient one- to two-step inference with high geometric fidelity. In contrast to previous approaches that rely on iterative denoising or latent decoders, ConTiCoM-3D employs a time-conditioned neural network operating entirely in continuous time, thereby achieving fast generation. Experiments on the ShapeNet benchmark show that ConTiCoM-3D matches or outperforms state-of-the-art diffusion and latent consistency models in both quality and efficiency, establishing it as a practical framework for scalable 3D shape generation.

Cyber-Physical Systems (CPS) in domains such as manufacturing and energy distribution generate complex time series data crucial for Prognostics and Health Management (PHM). While Deep Learning (DL) methods have demonstrated strong forecasting capabilities, their adoption in industrial CPS remains limited due insufficient robustness. Existing robustness evaluations primarily focus on formal verification or adversarial perturbations, inadequately representing the complexities encountered in real-world CPS scenarios. To address this, we introduce a practical robustness definition grounded in distributional robustness, explicitly tailored to industrial CPS, and propose a systematic framework for robustness evaluation. Our framework simulates realistic disturbances, such as sensor drift, noise and irregular sampling, enabling thorough robustness analyses of forecasting models on real-world CPS datasets. The robustness definition provides a standardized score to quantify and compare model performance across diverse datasets, assisting in informed model selection and architecture design. Through extensive empirical studies evaluating prominent DL architectures (including recurrent, convolutional, attention-based, modular, and structured state-space models) we demonstrate the applicability and effectiveness of our approach. We publicly release our robustness benchmark to encourage further research and reproducibility.

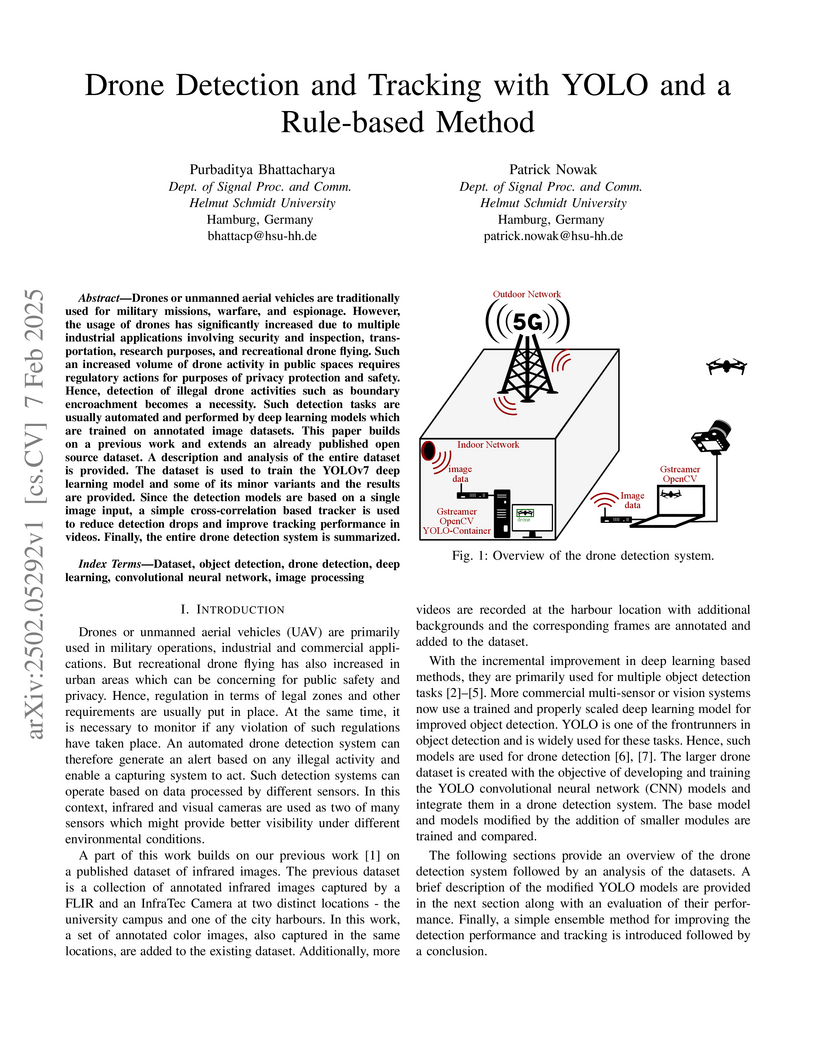

Drones or unmanned aerial vehicles are traditionally used for military

missions, warfare, and espionage. However, the usage of drones has

significantly increased due to multiple industrial applications involving

security and inspection, transportation, research purposes, and recreational

drone flying. Such an increased volume of drone activity in public spaces

requires regulatory actions for purposes of privacy protection and safety.

Hence, detection of illegal drone activities such as boundary encroachment

becomes a necessity. Such detection tasks are usually automated and performed

by deep learning models which are trained on annotated image datasets. This

paper builds on a previous work and extends an already published open source

dataset. A description and analysis of the entire dataset is provided. The

dataset is used to train the YOLOv7 deep learning model and some of its minor

variants and the results are provided. Since the detection models are based on

a single image input, a simple cross-correlation based tracker is used to

reduce detection drops and improve tracking performance in videos. Finally, the

entire drone detection system is summarized.

Capability ontologies are increasingly used to model functionalities of systems or machines. The creation of such ontological models with all properties and constraints of capabilities is very complex and can only be done by ontology experts. However, Large Language Models (LLMs) have shown that they can generate machine-interpretable models from natural language text input and thus support engineers / ontology experts. Therefore, this paper investigates how LLMs can be used to create capability ontologies. We present a study with a series of experiments in which capabilities with varying complexities are generated using different prompting techniques and with different LLMs. Errors in the generated ontologies are recorded and compared. To analyze the quality of the generated ontologies, a semi-automated approach based on RDF syntax checking, OWL reasoning, and SHACL constraints is used. The results of this study are very promising because even for complex capabilities, the generated ontologies are almost free of errors.

This data set descriptor introduces a structured, high-resolution dataset of transient thermal simulations for a vertical axis of a machine tool test rig. The data set includes temperature and heat flux values recorded at 29 probe locations at 1800 time steps, sampled every second over a 30-minute range, across 17 simulation runs derived from a fractional factorial design. First, a computer-aided design model was de-featured, segmented, and optimized, followed by finite element (FE) modelling. Detailed information on material, mesh, and boundary conditions is included. To support research and model development, the dataset provides summary statistics, thermal evolution plots, correlation matrix analyses, and a reproducible Jupyter notebook. The data set is designed to support machine learning and deep learning applications in thermal modelling for prediction, correction, and compensation of thermally induced deviations in mechanical systems, and aims to support researchers without FE expertise by providing ready-to-use simulation data.

14 Aug 2025

Human-Computer Interaction (HCI) is a multi-modal, interdisciplinary field focused on designing, studying, and improving the interactions between people and computer systems. This involves the design of systems that can recognize, interpret, and respond to human emotions or stress. Developing systems to monitor and react to stressful events can help prevent severe health implications caused by long-term stress exposure. Currently, the publicly available datasets and standardized protocols for data collection in this domain are limited. Therefore, we introduce a multi-modal dataset intended for wearable affective computing research, specifically the development of automated stress recognition systems. We systematically review the publicly available datasets recorded in controlled laboratory settings. Based on a proposed framework for the standardization of stress experiments and data collection, we collect physiological and motion signals from wearable devices (e.g., electrodermal activity, photoplethysmography, three-axis accelerometer). During the experimental protocol, we differentiate between the following four affective/activity states: neutral, physical, cognitive stress, and socio-evaluative stress. These different phases are meticulously labeled, allowing for detailed analysis and reconstruction of each experiment. Meta-data such as body positions, locations, and rest phases are included as further annotations. In addition, we collect psychological self-assessments after each stressor to evaluate subjects' affective states. The contributions of this paper are twofold: 1) a novel multi-modal, publicly available dataset for automated stress recognition, and 2) a benchmark for stress detection with 89\% in a binary classification (baseline vs. stress) and 82\% in a multi-class classification (baseline vs. stress vs. physical exercise).

Thermal errors in machine tools significantly impact machining precision and productivity. Traditional thermal error correction/compensation methods rely on measured temperature-deformation fields or on transfer functions. Most existing data-driven compensation strategies employ neural networks (NNs) to directly predict thermal errors or specific compensation values. While effective, these approaches are tightly bound to particular error types, spatial locations, or machine configurations, limiting their generality and adaptability. In this work, we introduce a novel paradigm in which NNs are trained to predict high-fidelity temperature and heat flux fields within the machine tool. The proposed framework enables subsequent computation and correction of a wide range of error types using modular, swappable downstream components. The NN is trained using data obtained with the finite element method under varying initial conditions and incorporates a correlation-based selection strategy that identifies the most informative measurement points, minimising hardware requirements during inference. We further benchmark state-of-the-art time-series NN architectures, namely Recurrent NN, Gated Recurrent Unit, Long-Short Term Memory (LSTM), Bidirectional LSTM, Transformer, and Temporal Convolutional Network, by training both specialised models, tailored for specific initial conditions, and general models, capable of extrapolating to unseen scenarios. The results show accurate and low-cost prediction of temperature and heat flux fields, laying the basis for enabling flexible and generalisable thermal error correction in machine tool environments.

17 Oct 2025

We propose a flexible and robust nonparametric framework for testing spatial dependence in two- and three-dimensional random fields. Our approach involves converting spatial data into one-dimensional time series using space-filling Hilbert curves. We then apply ordinal pattern-based tests for serial dependence to this series. Because Hilbert curves preserve spatial locality, spatial dependence in the original field manifests as serial dependence in the transformed sequence. The approach is easy to implement, accommodates arbitrary grid sizes through generalized Hilbert (``gilbert'') curves, and naturally extends beyond three dimensions. This provides a practical and general alternative to existing methods based on spatial ordinal patterns, which are typically limited to two-dimensional settings.

01 Mar 2024

Several models for count time series have been developed during the last decades, often inspired by traditional autoregressive moving average (ARMA) models for real-valued time series, including integer-valued ARMA (INARMA) and integer-valued generalized autoregressive conditional heteroscedasticity (INGARCH) models. Both INARMA and INGARCH models exhibit an ARMA-like autocorrelation function (ACF). To achieve negative ACF values within the class of INGARCH models, log and softplus link functions are suggested in the literature, where the softplus approach leads to conditional linearity in good approximation. However, the softplus approach is limited to the INGARCH family for unbounded counts, i.e. it can neither be used for bounded counts, nor for count processes from the INARMA family. In this paper, we present an alternative solution, named the Tobit approach, for achieving approximate linearity together with negative ACF values, which is more generally applicable than the softplus approach. A Skellam--Tobit INGARCH model for unbounded counts is studied in detail, including stationarity, approximate computation of moments, maximum likelihood and censored least absolute deviations estimation for unknown parameters and corresponding simulations. Extensions of the Tobit approach to other situations are also discussed, including underlying discrete distributions, INAR models, and bounded counts. Three real-data examples are considered to illustrate the usefulness of the new approach.

New York UniversityUniversity of LjubljanaJD Explore AcademyThe University of SydneyBeijing University of Posts and TelecommunicationsFondazione Bruno KesslerKitwareUniversity of CagliariUniversity of LisbonUniversity of SeoulUniversity of JyvaskylaUniversity of South-Eastern NorwayUniversity of TuebingenFraunhofer IOSBHelmut Schmidt UniversityTU KaiserslauternGranular AI

New York UniversityUniversity of LjubljanaJD Explore AcademyThe University of SydneyBeijing University of Posts and TelecommunicationsFondazione Bruno KesslerKitwareUniversity of CagliariUniversity of LisbonUniversity of SeoulUniversity of JyvaskylaUniversity of South-Eastern NorwayUniversity of TuebingenFraunhofer IOSBHelmut Schmidt UniversityTU KaiserslauternGranular AIThe 1st Workshop on Maritime Computer Vision (MaCVi) 2023 focused on maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicle (USV), and organized several subchallenges in this domain: (i) UAV-based Maritime Object Detection, (ii) UAV-based Maritime Object Tracking, (iii) USV-based Maritime Obstacle Segmentation and (iv) USV-based Maritime Obstacle Detection. The subchallenges were based on the SeaDronesSee and MODS benchmarks. This report summarizes the main findings of the individual subchallenges and introduces a new benchmark, called SeaDronesSee Object Detection v2, which extends the previous benchmark by including more classes and footage. We provide statistical and qualitative analyses, and assess trends in the best-performing methodologies of over 130 submissions. The methods are summarized in the appendix. The datasets, evaluation code and the leaderboard are publicly available at this https URL.

02 Feb 2023

A lot of studies on the summary measures of predictive strength of categorical response models consider the likelihood ratio index (LRI), also known as the McFadden-R2, a better option than many other measures. We propose a simple modification of the LRI that adjusts for the effect of the number of response categories on the measure and that also rescales its values, mimicking an underlying latent measure. The modified measure is applicable to both binary and ordinal response models fitted by maximum likelihood. Results from simulation studies and a real data example on the olfactory perception of boar taint show that the proposed measure outperforms most of the widely used goodness-of-fit measures for binary and ordinal models. The proposed R2 interestingly proves quite invariant to an increasing number of response categories of an ordinal model.

During loading and unloading steps, energy is consumed when cranes lift containers, while energy is often wasted when cranes drop containers. By optimizing the scheduling of cranes, it is possible to reduce energy consumption, thereby lowering operational costs and environmental impacts. In this paper, we introduce a single-crane scheduling problem with energy savings, focusing on reusing the energy from containers that have already been lifted and reducing the total energy consumption of the entire scheduling plan. We establish a basic model considering a one-dimensional storage area and provide a systematic complexity analysis of the problem. First, we investigate the connection between our problem and the semi-Eulerization problem and propose an additive approximation algorithm. Then, we present a polynomial-time Dynamic Programming (DP) algorithm for the case of bounded energy buffer and processing lengths. Next, adopting a Hamiltonian perspective, we address the general case with arbitrary energy buffer and processing lengths. We propose an exact DP algorithm and show that the variation of the problem is polynomially solvable when it can be transformed into a path cover problem on acyclic interval digraphs. We introduce a paradigm that integrates both the Eulerian and Hamiltonian perspectives, providing a robust framework for addressing the problem.

We present a multi-scale computational framework suitable for designing solid lubricant interfaces fully in silico. The approach is based on stochastic thermodynamics founded on the classical thermally activated two-dimensional Prandtl-Tomlinson model, linked with First Principles methods to accurately capture the properties of real materials. It allows investigating the energy dissipation due to friction in materials as it arises directly from their electronic structure, and naturally accessing the time-scale range of a typical friction force microscopy. This opens new possibilities for designing a broad class of material surfaces with atomically tailored properties. We apply the multi-scale framework to a class of two-dimensional layered materials and reveal a delicate interplay between the topology of the energy landscape and dissipation that known static approaches based solely on the energy barriers fail to capture.

30 Sep 2025

In structural health monitoring (SHM), sensor measurements are collected, and damage-sensitive features such as natural frequencies are extracted for damage detection. However, these features depend not only on damage but are also influenced by various confounding factors, including environmental conditions and operational parameters. These factors must be identified, and their effects must be removed before further analysis. However, it has been shown that confounding variables may influence the mean and the covariance of the extracted features. This is particularly significant since the covariance is an essential building block in many damage detection tools. To account for the complex relationships resulting from the confounding factors, a nonparametric kernel approach can be used to estimate a conditional covariance matrix. By doing so, the covariance matrix is allowed to change depending on the identified confounding factor, thus providing a clearer understanding of how, for example, temperature influences the extracted features. This paper presents two bootstrap-based methods for obtaining confidence intervals for the conditional covariances, providing a way to quantify the uncertainty associated with the conditional covariance estimator. A proof-of-concept Monte Carlo study compares the two bootstrap versions proposed and evaluates their effectiveness. Finally, the methods are applied to the natural frequency data of the KW51 railway bridge near Leuven, Belgium. This real-world application highlights the practical implications of the findings. It underscores the importance of accurately accounting for confounding factors to generate more reliable diagnostic values with fewer false alarms.

Type 1 diabetes (T1D) management can be significantly enhanced through the

use of predictive machine learning (ML) algorithms, which can mitigate the risk

of adverse events like hypoglycemia. Hypoglycemia, characterized by blood

glucose levels below 70 mg/dL, is a life-threatening condition typically caused

by excessive insulin administration, missed meals, or physical activity. Its

asymptomatic nature impedes timely intervention, making ML models crucial for

early detection. This study integrates short- (up to 2h) and long-term (up to

24h) prediction horizons (PHs) within a single classification model to enhance

decision support. The predicted times are 5-15 min, 15-30 min, 30 min-1h, 1-2h,

2-4h, 4-8h, 8-12h, and 12-24h before hypoglycemia. In addition, a simplified

model classifying up to 4h before hypoglycemia is compared. We trained ResNet

and LSTM models on glucose levels, insulin doses, and acceleration data. The

results demonstrate the superiority of the LSTM models when classifying nine

classes. In particular, subject-specific models yielded better performance but

achieved high recall only for classes 0, 1, and 2 with 98%, 72%, and 50%,

respectively. A population-based six-class model improved the results with at

least 60% of events detected. In contrast, longer PHs remain challenging with

the current approach and may be considered with different models.

20 Jan 2025

A major limitation of laser interferometers using continuous wave lasers are parasitic light fields, such as ghost beams, scattered or stray light, which can cause non-linear noise. This is especially relevant for laser interferometric ground-based gravitational wave detectors. Increasing their sensitivity, particularly at frequencies below 10 Hz, is threatened by the influence of parasitic photons. These can up-convert low-frequency disturbances into phase and amplitude noise inside the relevant measurement band. By artificially tuning the coherence of the lasers, using pseudo-random-noise (PRN) phase modulations, this influence of parasitic fields can be suppressed. As it relies on these fields traveling different paths, it does not sacrifice the coherence for the intentional interference. We demonstrate the feasibility of this technique experimentally, achieving noise suppression levels of 40 dB in a Michelson interferometer with an artificial coherence length below 30 cm. We probe how the suppression depends on the delay mismatch and length of the PRN sequence. We also prove that optical resonators can be operated in the presence of PRN modulation by measuring the behavior of a linear cavity with and without such a modulation. By matching the resonators round-trip length and the PRN sequence repetition length, the classic response is recovered.

01 Nov 2023

We extend and analyze the deep neural network multigrid solver (DNN-MG) for the Navier-Stokes equations in three dimensions. The idea of the method is to augment a finite element simulation on coarse grids with fine scale information obtained using deep neural networks.

The neural network operates locally on small patches of grid elements. The local approach proves to be highly efficient, since the network can be kept (relatively) small and since it can be applied in parallel on all grid patches. However, the main advantage of the local approach is the inherent generalizability of the method. Since the network only processes data of small sub-areas, it never ``sees'' the global problem and thus does not learn false biases.

We describe the method with a focus on the interplay between the finite element method and deep neural networks. Further, we demonstrate with numerical examples the excellent efficiency of the hybrid approach, which allows us to achieve very high accuracy with a coarse grid and thus reduce the computation time by orders of magnitude.

14 Jun 2021

We investigate scaling and efficiency of the deep neural network multigrid

method (DNN-MG).

DNN-MG is a novel neural network-based technique for the simulation of the

Navier-Stokes equations that combines an adaptive geometric multigrid solver,

i.e. a highly efficient

classical solution scheme, with a recurrent neural network with memory.

The neural network replaces in DNN-MG one or multiple finest multigrid layers

and provides a correction for the classical solve in the next time step.

This leads to little degradation in the solution quality while substantially

reducing the overall computational costs.

At the same time, the use of the multigrid solver at the coarse scales allows

for a compact network that is easy to train, generalizes well, and allows for

the incorporation of physical constraints.

Previous work on DNN-MG focused on the overall scheme and how to enforce

divergence freedom in the solution.

In this work, we investigate how the network size affects training and

solution quality and the overall runtime of the computations.

Our results demonstrate that larger networks are able to capture the

flow behavior better while requiring only little additional training time.

At runtime, the use of the neural network correction can even reduce the

computation time compared to a classical multigrid simulation through a faster

convergence of the nonlinear solve that is required at every time step.

There are no more papers matching your filters at the moment.