Helwan University

Determining the appropriate number of clusters in unsupervised learning is a central problem in statistics and data science. Traditional validity indices such as Calinski-Harabasz, Silhouette, and Davies-Bouldin depend on centroid-based distances and therefore degrade in high-dimensional or contaminated data. This paper proposes a new robust, nonparametric clustering validation framework, the High-Dimensional Between-Within Distance Median (HD-BWDM), which extends the recently introduced BWDM criterion to high-dimensional spaces. HD-BWDM integrates random projection and principal component analysis to mitigate the curse of dimensionality, and it applies trimmed clustering and medoid-based distances to ensure robustness against outliers. We derive theoretical results showing consistency and convergence under Johnson-Lindenstrauss embeddings. Extensive simulations demonstrate that HD-BWDM remains stable and interpretable under high-dimensional projections and contamination, offering a robust alternative to traditional centroid-based validation criteria. The proposed method provides a theoretically grounded, computationally efficient stopping rule for nonparametric clustering in modern high-dimensional applications.
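The abstract does not give the HD-BWDM formula, so the following is only a minimal Python sketch of the ingredients it names: a Gaussian random projection in the Johnson-Lindenstrauss style, medoid-based distances, and trimming of the largest within-cluster distances (a simple stand-in for trimmed clustering). The functions `random_project`, `medoid`, and `bwdm_like_index`, the trimming fraction, and the specific between/within median ratio are illustrative assumptions, not the authors' definitions.

```python
import numpy as np


def random_project(X, m, seed=0):
    """Gaussian random projection of the rows of X to m dimensions."""
    rng = np.random.default_rng(seed)
    # Entries with variance 1/m preserve squared distances in expectation.
    R = rng.normal(0.0, 1.0 / np.sqrt(m), size=(X.shape[1], m))
    return X @ R


def medoid(points):
    """Return the data point with the smallest total distance to the others."""
    D = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    return points[np.argmin(D.sum(axis=1))]


def bwdm_like_index(X, labels, trim=0.1):
    """Hypothetical robust index: median between-medoid distance divided by
    the trimmed median point-to-own-medoid distance (larger is better)."""
    clusters = np.unique(labels)
    if clusters.size < 2:
        raise ValueError("need at least two clusters")
    medoids = np.array([medoid(X[labels == c]) for c in clusters])
    # Within-cluster spread: distance of every point to its own cluster medoid.
    within = np.concatenate([
        np.linalg.norm(X[labels == c] - medoids[i], axis=1)
        for i, c in enumerate(clusters)
    ])
    # Drop the largest `trim` fraction to limit the influence of outliers.
    keep = int(np.ceil((1.0 - trim) * within.size))
    within = np.sort(within)[:keep]
    # Between-cluster separation: pairwise distances among the medoids.
    iu = np.triu_indices(clusters.size, k=1)
    between = np.linalg.norm(medoids[:, None] - medoids[None, :], axis=-1)[iu]
    return np.median(between) / np.median(within)
```

In a stopping rule, such an index would be evaluated on candidate partitions with k = 2, 3, ... clusters (for example from a trimmed k-medoids run, not shown), and the k with the largest value would be selected; how HD-BWDM itself adjusts for k and n is not specified in the abstract.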

University of Illinois at Urbana-Champaign; University of Pittsburgh; University of California, Santa Barbara; SLAC National Accelerator Laboratory; Harvard University; Imperial College London; University of Oklahoma; DESY; University of Manchester; University of Zurich; University of Bern; UC Berkeley; University of Oxford; Nikhef; Indiana University; Pusan National University; Scuola Normale Superiore; Cornell University; University of California, San Diego; Northwestern University; University of Granada; CERN; Argonne National Laboratory; Florida State University; Seoul National University; Huazhong University of Science and Technology; University of Wisconsin-Madison; University of Pisa; Lawrence Berkeley National Laboratory; Politecnico di Milano; University of Liverpool; University of Iowa; Duke University; University of Geneva; University of Glasgow; University of Warwick; Iowa State University; Karlsruhe Institute of Technology; Università di Milano-Bicocca; Technische Universität München; Old Dominion University; Texas Tech University; Durham University; Niels Bohr Institute; Czech Technical University in Prague; University of Oregon; University of Alabama; STFC Rutherford Appleton Laboratory; Lawrence Livermore National Laboratory; University of California, Santa Cruz; University of Sarajevo; Jefferson Lab; TOBB University of Economics and Technology; University of California Riverside; University of Huddersfield; CEA Saclay; Radboud University Nijmegen; Università degli Studi dell’Insubria; Humboldt University Berlin; INFN Milano-Bicocca; Università degli Studi di Brescia; IIT Guwahati; Daresbury Laboratory; INFN - Padova; INFN Milano; Università degli Studi di Bari; Cockcroft Institute; Helwan University; INFN-Torino; INFN Pisa; INFN-Bologna; Brookhaven National Laboratory (BNL); INFN Laboratori Nazionali del Sud; INFN Pavia; Max Planck Institute for Nuclear Physics; INFN Trieste; INFN Roma Tre; INFN Genova; Fermi National Accelerator Laboratory (Fermilab); INFN Bari; INFN-Firenze; INFN Ferrara; Punjab Agricultural University; European Spallation Source (ESS); Fusion for Energy; International Institute of Physics (IIP); INFN-Roma La Sapienza; Università degli Studi di Genova; Università di Ferrara; Università degli Studi di Padova; Università di Roma La Sapienza; RWTH Aachen University; Università di Torino; Sapienza Università di Roma; Università degli Studi di Firenze; Università degli Studi di Torino; Università di Pavia; Università di Bologna; Università degli Studi Roma Tre

This review, by the International Muon Collider Collaboration (IMCC), outlines the scientific case and technological feasibility of a multi-TeV muon collider, demonstrating its potential for unprecedented energy reach and precision measurements in particle physics. It presents a comprehensive conceptual design and R&D roadmap for a collider capable of reaching 10+ TeV center-of-mass energy.

01 Nov 2018

Current performance evaluation for audio source separation depends on comparing the processed or separated signals with reference signals. Therefore, common performance evaluation toolkits are not applicable to real-world situations where the ground-truth audio is unavailable. In this paper, we propose a performance evaluation technique that does not require reference signals to assess separation quality. The proposed technique uses a deep neural network (DNN) to map the processed audio to its quality score. Our experimental results show that the DNN is capable of predicting the sources-to-artifacts ratio (SAR) from the blind source separation evaluation (BSS Eval) toolkit without the need for reference signals.
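For context, the SAR that the DNN learns to predict is itself computed from reference signals. The following Python sketch is a simplified version of the BSS Eval decomposition, assuming a time-invariant projection (no distortion filters) and no separate noise term; `sar_db` is a hypothetical helper, not the BSS Eval toolkit's API.

```python
import numpy as np


def sar_db(estimate, references):
    """Simplified sources-to-artifacts ratio (dB) of one separated signal.

    references: array of shape (n_sources, n_samples) holding all true sources.
    The estimate is split into the part lying in the span of the references
    (target + interference) and the orthogonal remainder (artifacts).
    """
    S = np.asarray(references, dtype=float)
    s_hat = np.asarray(estimate, dtype=float)
    # Least-squares projection of the estimate onto span{references}.
    coeffs, *_ = np.linalg.lstsq(S.T, s_hat, rcond=None)
    in_span = S.T @ coeffs
    e_artif = s_hat - in_span
    return 10.0 * np.log10(np.sum(in_span**2) / np.sum(e_artif**2))
```

The reference-free approach described above replaces this computation with a DNN regression from the processed audio alone, which is what makes it usable when `references` is not available.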

Egyptian hieroglyphs, the writing system of ancient Egypt, are composed entirely of drawings. Translating these glyphs into English poses various challenges, including the fact that a single glyph can have multiple meanings. Deep learning translation applications are evolving rapidly and producing remarkable results that significantly affect our lives. In this research, we propose a method for the automatic recognition and translation of ancient Egyptian hieroglyphs from images into English. This study used two datasets for classification and translation: the Morris Franken dataset and the EgyptianTranslation dataset. Our approach is divided into three stages: segmentation (using contour detection and Detectron2), mapping symbols to Gardiner codes, and translation (using a CNN model). The model achieved a BLEU score of 42.2, a significant result compared with previous research.
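As a hedged illustration of what the reported BLEU score measures, here is a minimal Python implementation of unsmoothed sentence-level BLEU: clipped n-gram precisions up to 4-grams, their geometric mean, and a brevity penalty. The tokenization, smoothing, and corpus-level aggregation used in the paper are not stated, so scores from this sketch are not directly comparable to 42.2; corpus BLEU, for instance, sums clipped counts over all sentences before taking the geometric mean. The function names are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, references, max_n=4):
    """Unsmoothed sentence-level BLEU on a 0-100 scale."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        # Clip each candidate n-gram count by its maximum count in any reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        overlap = sum(min(count, max_ref[gram]) for gram, count in cand.items())
        total = max(sum(cand.values()), 1)
        if overlap == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU = 0
        log_precisions.append(math.log(overlap / total))
    c = len(candidate)
    # Closest reference length, ties broken toward the shorter reference.
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return 100 * brevity_penalty * math.exp(sum(log_precisions) / max_n)
```

For example, `bleu("the cat sat on the mat".split(), ["the cat sat on a mat".split()])` has 1- to 4-gram precisions of 5/6, 3/5, 2/4, and 1/3, giving a score of about 53.7.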

Recently, there have been tremendous research advances in speech recognition and natural language processing. This is due to well-developed multi-layer deep learning paradigms such as wav2vec 2.0, wav2vec-U, WavBERT, and HuBERT, which provide better representation learning and capture more information. Such paradigms are pre-trained on large amounts of unlabeled data and then fine-tuned on small datasets for specific tasks. This paper introduces a deep-learning-based emotion recognition model for Arabic speech dialogues. The model employs state-of-the-art audio representations, including wav2vec 2.0 and HuBERT. Our experimental results outperform previously reported results.

We report a large persistent photoconductivity (PPC) in oxygen-reduced, PLD-grown $\mathrm{YBa_2Cu_3O_{7-\delta}}/\mathrm{La_{2/3}Ca_{1/3}MnO_{3-y}}$ (YBCO/LCMO) superlattices that scales with the oxygen deficiency and is similar to that observed in single-layer $\mathrm{YBa_2Cu_3O_{7-\delta}}$ films. These results contradict previous observations in sputtered bilayer samples, where only a transient photoconductivity (TPC) was found. We argue that the PPC effect in the superlattices is caused by the PPC effect in the $\mathrm{YBa_2Cu_3O_{7-x}}$ layers, with limited charge transfer to $\mathrm{La_{2/3}Ca_{1/3}MnO_{3-y}}$. The discrepancy arises from the different permeability of the interface to charges and sheds light on the sensitivity of oxide interface properties to the details of their preparation.

This dataset includes 6,823 thermal images captured with a UNI-T UTi165A camera for face detection, recognition, and emotion analysis. It consists of 2,485 facial emotion recognition images depicting emotions (happy, sad, angry, neutral, surprised), 2,054 images for face recognition, and 2,284 images for face detection. The dataset covers various conditions, color palettes, shooting angles, and zoom levels, with a temperature range of -10°C to 400°C and a resolution of 19,200 pixels. It serves as a valuable resource for advancing thermal imaging technology, aiding algorithm development, and benchmarking facial recognition across different palettes. It also contributes to facial emotion recognition, fostering interdisciplinary collaboration among computer vision, psychology, and neuroscience. The dataset promotes transparency in thermal face detection and recognition research, with applications in security, healthcare, and human-computer interaction.

Decoding natural language from brain activity using non-invasive electroencephalography (EEG) remains a significant challenge in neuroscience and machine learning, particularly in open-vocabulary scenarios, where traditional methods struggle with noise and variability. Previous studies have achieved high accuracy on small, closed vocabularies but still struggle with open vocabularies. In this study, we propose ETS, a framework that integrates EEG with synchronized eye-tracking data to address two critical tasks: (1) open-vocabulary text generation and (2) sentiment classification of perceived language. Our model achieves superior BLEU and ROUGE scores for EEG-to-text decoding and up to a 10% improvement in F1 score for EEG-based ternary sentiment classification, significantly outperforming supervised baselines. Furthermore, we show that the proposed model can handle data from various subjects and sources, showing great potential for a high-performance open-vocabulary EEG-to-text system.
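The abstract does not say which F1 averaging is used for the ternary sentiment task. Assuming the common macro average (the unweighted mean of per-class F1 scores), a minimal Python version looks like this; `macro_f1` and the three label names are illustrative.

```python
def macro_f1(y_true, y_pred, labels=("negative", "neutral", "positive")):
    """Unweighted mean of per-class F1 scores over the given labels."""
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(labels)
```

Macro averaging weights each sentiment class equally, so a model cannot score well by predicting only the majority class; a weighted average would instead scale each class by its support.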

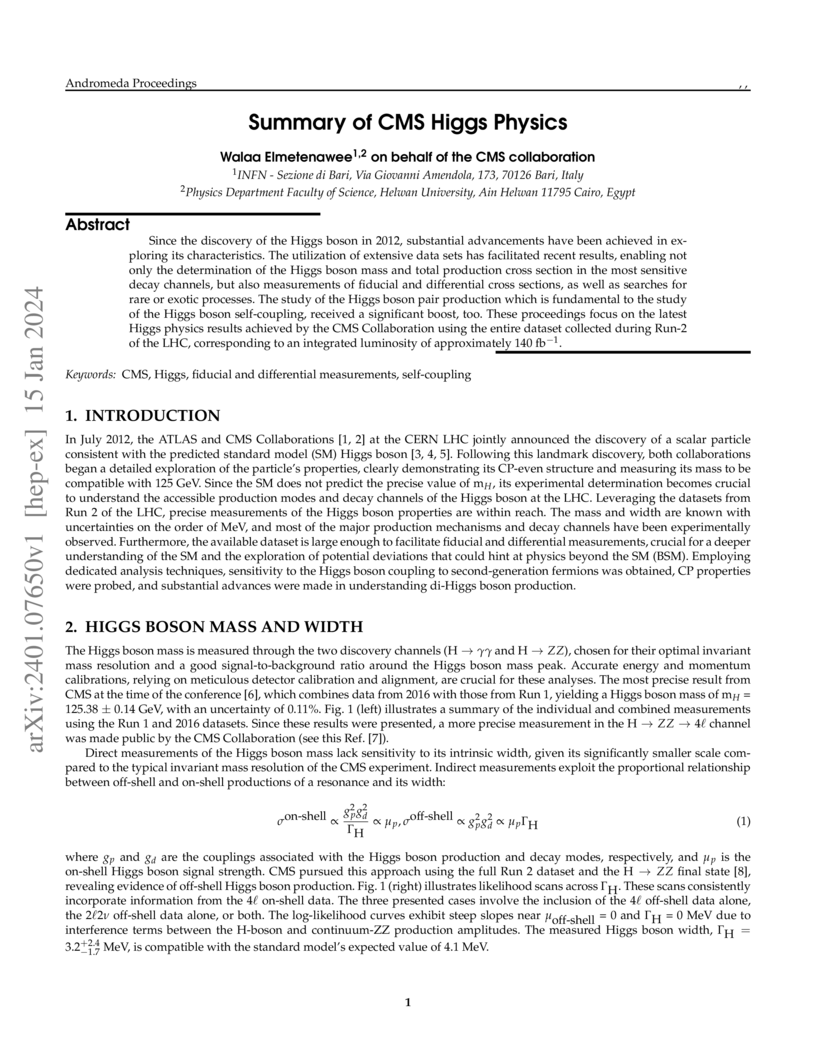

Since the discovery of the Higgs boson in 2012, substantial progress has been made in exploring its characteristics. The use of extensive datasets has enabled recent results, including not only the determination of the Higgs boson mass and total production cross section in the most sensitive decay channels, but also measurements of fiducial and differential cross sections, as well as searches for rare or exotic processes. The study of Higgs boson pair production, which is fundamental to the study of the Higgs boson self-coupling, has also received a significant boost. These proceedings focus on the latest Higgs physics results achieved by the CMS Collaboration using the entire dataset collected during Run 2 of the LHC, corresponding to an integrated luminosity of approximately 140 fb$^{-1}$.

01 Oct 2025

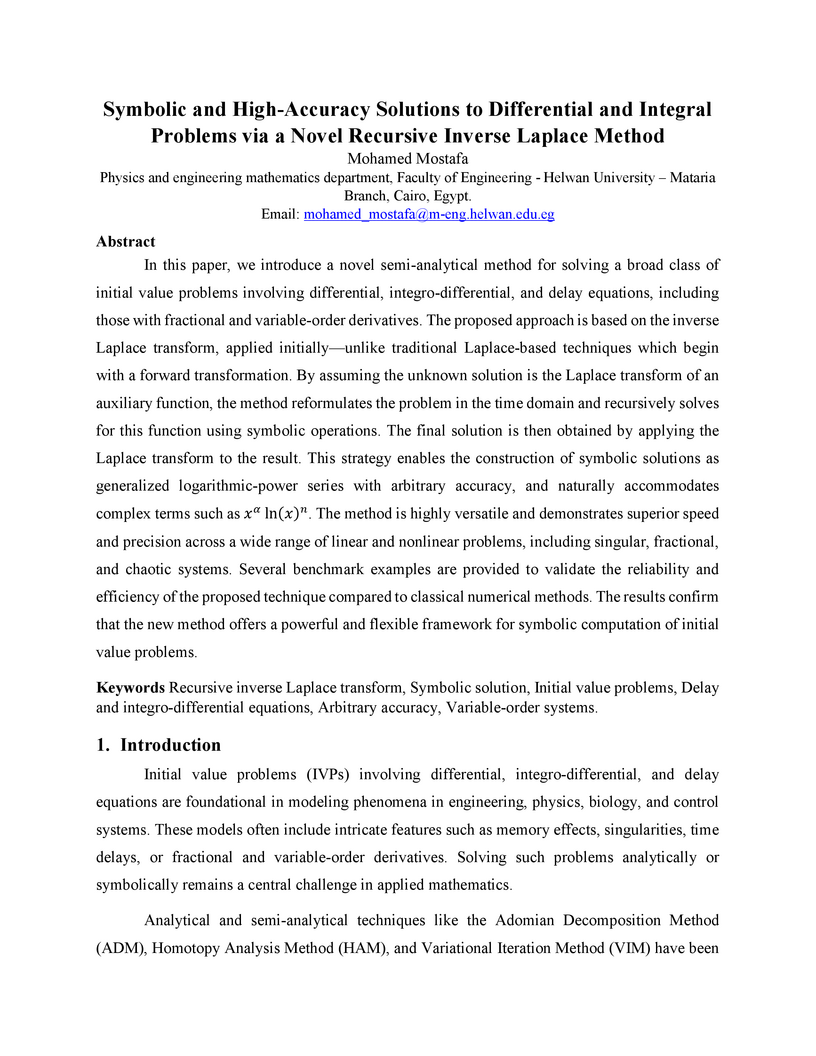

In this paper, we introduce a novel semi-analytical method for solving a broad class of initial value problems involving differential, integro-differential, and delay equations, including those with fractional and variable-order derivatives. The proposed approach is based on the inverse Laplace transform, which is applied first, unlike traditional Laplace-based techniques, which begin with a forward transformation. By assuming that the unknown solution is the Laplace transform of an auxiliary function, the method reformulates the problem in the time domain and recursively solves for this function using symbolic operations. The final solution is then obtained by applying the Laplace transform to the result. This strategy enables the construction of symbolic solutions as generalized logarithmic-power series with arbitrary accuracy and naturally accommodates complex terms. The method is highly versatile and demonstrates superior speed and precision across a wide range of linear and nonlinear problems, including singular, fractional, and chaotic systems. Several benchmark examples are provided to validate the reliability and efficiency of the proposed technique compared with classical numerical methods. The results confirm that the new method offers a powerful and flexible framework for the symbolic computation of initial value problems.

09 Jul 2021

Focus accuracy affects the quality of astronomical observations, so auto-focusing is necessary for imaging systems designed for astronomical observation. An automatic focus system searches for the best focus position using a search algorithm that takes the image's focus level as its objective function. This paper studies the performance of several search algorithms in order to select a suitable one, which will be used to develop an automatic focus system for the Kottamia Astronomical Observatory (KAO). To select the optimal algorithm, several search algorithms are applied to five sequences of star-cluster observations, and their performance is evaluated on two criteria: accuracy and number of steps. The experimental results show that binary search is the optimal search algorithm among those evaluated.
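The abstract does not describe how binary search is adapted to focusing. One common approach, sketched below in Python under the assumption that the focus measure is strictly unimodal in the focuser position, is to bisect on the sign of the discrete slope. `focus_measure` is a hypothetical callback that would move the focuser, take an exposure, and return a sharpness score; the returned count of measurements corresponds to the "number of steps" criterion.

```python
def binary_search_focus(focus_measure, lo, hi):
    """Find the integer position in [lo, hi] that maximises a strictly
    unimodal focus measure. Returns (best_position, n_measurements)."""
    cache = {}

    def f(x):
        # Each new position costs one real measurement; repeats are cached.
        if x not in cache:
            cache[x] = focus_measure(x)
        return cache[x]

    while lo < hi:
        mid = (lo + hi) // 2
        if f(mid) < f(mid + 1):
            lo = mid + 1  # still rising at mid: the peak lies to the right
        else:
            hi = mid      # falling (or at the peak): the peak is at or left of mid
    return lo, len(cache)
```

Each iteration halves the interval at a cost of at most two measurements, so a range of about 1,000 positions needs roughly 20 measurements instead of 1,000 for an exhaustive sweep. Plateaus or noise in real focus curves can break the strict-unimodality assumption.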

INFN Sezione di Napoli; Ghent University; Korea University; CERN; Université Paris-Saclay; MIT; Università degli Studi di Pavia; INFN, Sezione di Pavia; INFN, Sezione di Torino; INFN, Laboratori Nazionali di Frascati; Universidade do Estado do Rio de Janeiro; Università degli Studi di Roma "Tor Vergata"; INFN-Sezione di Bologna; Politecnico di Bari; Università degli Studi di Bari; Universidad Iberoamericana; INFN Sezione di Roma Tor Vergata; Helwan University; Inter-university Institute for High Energies, Vrije Universiteit Brussel; INFN (Sezione di Bari); Inter-University Institute for High Energies; Clermont Université, Université Blaise Pascal, CNRS/IN2P3, Laboratoire de Physique Corpusculaire; Université Claude Bernard Lyon I; Sapienza Università di Roma; Università degli Studi di Torino; Vrije Universiteit Brussel

Resistive Plate Chamber (RPC) detectors are used extensively in several domains of physics. In high-energy physics, they are typically operated in avalanche mode with a high-performance gas mixture based on tetrafluoroethane (C2H2F4), a fluorinated greenhouse gas with a high Global Warming Potential. The RPC EcoGas@GIF++ Collaboration has pursued an intensive R&D program to find new gas mixtures with low environmental impact, fulfilling the performance expected for LHC operation as well as for future and different applications. Here, results obtained with new eco-friendly gas mixtures based on tetrafluoropropene and carbon dioxide, including under high-irradiation conditions, are presented. Long-term aging tests carried out at the CERN Gamma Irradiation Facility are discussed, along with their possible limits and future perspectives.

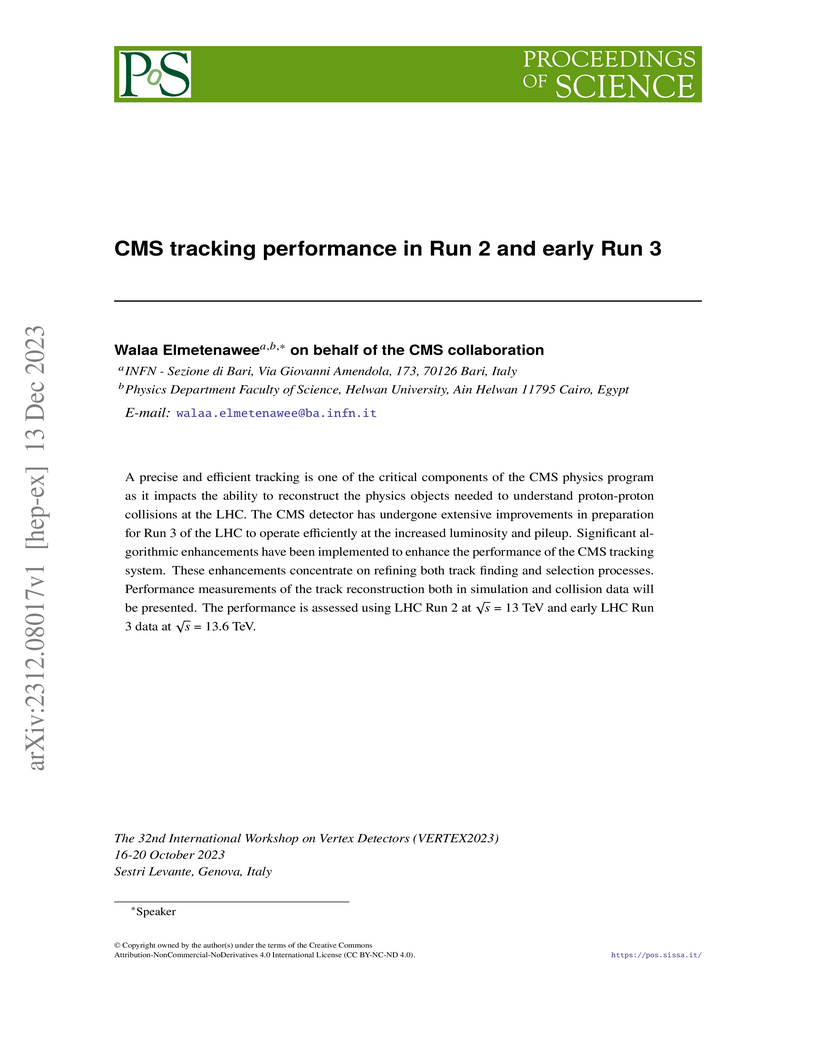

Precise and efficient tracking is one of the critical components of the CMS physics program, as it affects the ability to reconstruct the physics objects needed to understand proton-proton collisions at the LHC. The CMS detector has undergone extensive improvements in preparation for Run 3 of the LHC to operate efficiently at the increased luminosity and pileup. Significant algorithmic enhancements have been implemented to improve the performance of the CMS tracking system, concentrating on refining both the track finding and the track selection processes. Performance measurements of the track reconstruction in both simulation and collision data are presented. The performance is assessed using LHC Run 2 data at $\sqrt{s} = 13$ TeV and early LHC Run 3 data at $\sqrt{s} = 13.6$ TeV.

04 Jun 2019

The validation of design pattern implementations to identify pattern violations has gained relevance as part of re-engineering processes that aim to preserve, extend, and reuse software projects in rapid development environments. A design pattern implementation that does not conform to its definition is considered a violation. Software aging and developers' lack of experience are the origins of design pattern violations. It is important to check the correctness of design pattern implementations against predefined characteristics in order to detect and correct violations, and thus to reduce costs. Several tools have been developed to detect design pattern instances, but little work has been done on automated tools that identify and validate design pattern violations. In this paper, we propose the Design Pattern Violations Identification and Assessment (DPVIA) tool, which identifies software design pattern violations and reports the conformance score of pattern instance implementations against a set of predefined characteristics for any design pattern definition, whether a Gang of Four (GoF) design pattern by Gamma et al. [1] or a custom pattern defined by a software developer. Moreover, we verified the validity of the proposed tool using two evaluation experiments, whose results were checked manually. Finally, to assess the functionality of the proposed tool, we evaluated it on a dataset containing 5,679,964 lines of code among 28,669 in 15 open-source projects, both large and small, that employ design patterns extensively and systematically, in order to determine design pattern violations and suggest refactoring solutions, thus reducing the costs of software evolution. The results can be used by software architects to develop best practices when using design patterns.
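The abstract does not describe DPVIA's internals. As a minimal Python sketch, assuming a conformance score is the fraction of a pattern's predefined characteristics that an instance satisfies, a pattern definition can be modeled as a list of named predicates over facts extracted from the code. `ClassFacts`, `SINGLETON`, and `conformance` are hypothetical names, and the three Singleton characteristics are the textbook GoF ones, not necessarily DPVIA's.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ClassFacts:
    """Hypothetical facts a static analyser might extract from one class."""
    name: str
    constructor_visibility: str = "public"
    static_fields: dict = field(default_factory=dict)   # field name -> type name
    static_methods: dict = field(default_factory=dict)  # method name -> return type


# Textbook GoF Singleton characteristics, expressed as named predicates.
SINGLETON: list[tuple[str, Callable[[ClassFacts], bool]]] = [
    ("private constructor", lambda c: c.constructor_visibility == "private"),
    ("static field of own type", lambda c: c.name in c.static_fields.values()),
    ("static accessor returning own type",
     lambda c: c.name in c.static_methods.values()),
]


def conformance(facts, characteristics):
    """Return (fraction of characteristics satisfied, names of violated ones)."""
    violated = [name for name, check in characteristics if not check(facts)]
    return 1 - len(violated) / len(characteristics), violated
```

Because a pattern definition here is just data, a custom pattern can be added as another list of predicates without changing `conformance`, which mirrors the abstract's claim of supporting both GoF and developer-defined patterns.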

The particle ratios $K^+/\pi^+$, $\pi^-/K^-$, $\bar{p}/\pi^-$, $\Lambda/\pi^-$, $\Omega/\pi^-$, $p/\pi^+$, $\pi^-/\pi^+$, $K^-/K^+$, $\bar{p}/p$, $\bar{\Lambda}/\Lambda$, $\bar{\Sigma}/\Sigma$, and $\bar{\Omega}/\Omega$ measured at AGS, SPS, and RHIC energies are compared with large statistical ensembles of 100,000 events deduced from CRMC EPOS 1.99 and the Ultra-relativistic Quantum Molecular Dynamics (UrQMD) hybrid model. In the UrQMD hybrid model, two types of phase transitions are taken into account. All of these are then confronted with the Hadron Resonance Gas Model. The two types of phase transitions are apparently indistinguishable. Apart from $K^+/\pi^+$, $K^-/\pi^-$, $\Omega/\pi^-$, $\bar{p}/\pi^+$, and $\bar{\Omega}/\Omega$, the UrQMD hybrid model agrees well with CRMC EPOS 1.99. We also conclude that CRMC EPOS 1.99 seems to largely underestimate $K^+/\pi^+$, $K^-/\pi^-$, $\Omega/\pi^-$, and $\bar{p}/\pi^+$.

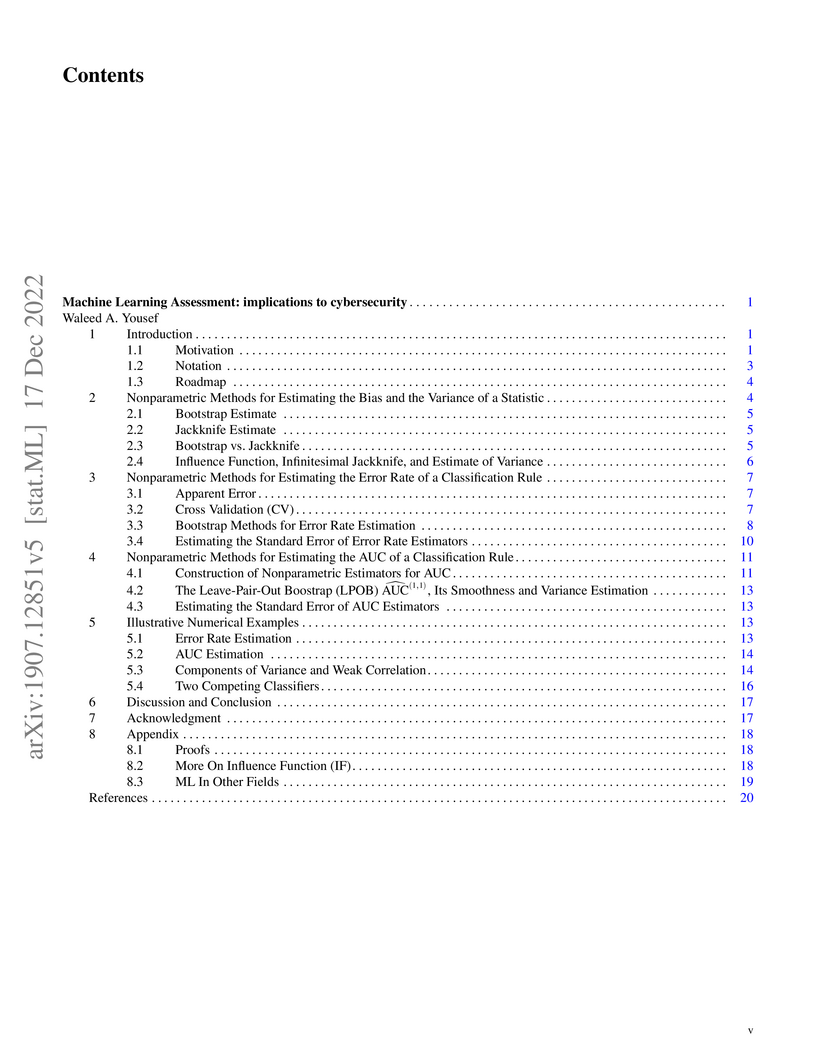

This chapter is dedicated to the assessment and performance estimation of machine learning (ML) algorithms, a topic as important as the construction of these algorithms, in particular in the context of cyberphysical security design. The literature contains many nonparametric methods that estimate a statistic from a single available dataset through resampling techniques, e.g., the jackknife, the bootstrap, and cross-validation (CV). Statistics of particular interest are the error rate and the area under the ROC curve (AUC) of a classification rule. The importance of these resampling methods stems from the fact that they require no knowledge of the probability distribution of the data or of the construction details of the ML algorithm. This chapter provides a concise review of this literature to establish a coherent theoretical framework for methods that can estimate both the error rate (a one-sample statistic) and the AUC (a two-sample statistic). Resampling methods are usually computationally expensive because they rely on repeating the training and testing of an ML algorithm at each resampling iteration. Therefore, the practical applicability of some of these methods may be limited to traditional ML algorithms rather than the very computationally demanding approaches of recent deep neural networks (DNNs). In the field of cyberphysical security, many applications generate structured (tabular) data, which can be fed to all traditional ML approaches. This is in contrast to DNN approaches, which favor unstructured data, e.g., images, text, and voice; hence the relevance of this chapter to this field.
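As a minimal Python sketch of the two-sample statistic discussed above, the AUC can be computed as the Mann-Whitney statistic, and a bootstrap that resamples each class with replacement estimates its standard error. For simplicity this assumes a fixed, already trained classifier whose test scores are resampled; the methods reviewed in the chapter may also retrain the algorithm at every iteration, which is what makes them expensive. The function names are illustrative.

```python
import numpy as np


def auc_mann_whitney(scores_pos, scores_neg):
    """AUC as the two-sample Mann-Whitney statistic (ties count as 1/2)."""
    p = np.asarray(scores_pos, dtype=float)[:, None]
    n = np.asarray(scores_neg, dtype=float)[None, :]
    # Fraction of (positive, negative) pairs ranked correctly.
    return np.mean((p > n) + 0.5 * (p == n))


def bootstrap_se(scores_pos, scores_neg, n_boot=2000, seed=0):
    """Bootstrap standard error of the AUC, resampling each class separately."""
    rng = np.random.default_rng(seed)
    pos = np.asarray(scores_pos, dtype=float)
    neg = np.asarray(scores_neg, dtype=float)
    stats = [
        auc_mann_whitney(rng.choice(pos, pos.size), rng.choice(neg, neg.size))
        for _ in range(n_boot)
    ]
    return np.std(stats, ddof=1)
```

Resampling the two classes separately respects the two-sample nature of the AUC; the error rate, a one-sample statistic, would instead be bootstrapped by resampling individual test cases.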

Scientific computing relies on executing computer algorithms coded in some programming language. Given particular available hardware, algorithm speed is a crucial factor. Many scientific computing environments are used to code such algorithms. Matlab is one of the most successful and widespread scientific computing environments and is rich in toolboxes, libraries, and data visualization tools. OpenCV is a C++-based library written primarily for computer vision and related areas. This paper presents a comparative study using 20 different real datasets to compare the speed of Matlab and OpenCV for some machine learning algorithms. Although Matlab is more convenient for development and data presentation, OpenCV is much faster in execution, with the speed ratio exceeding 80 in some cases. The best of both worlds can be achieved by using Matlab or a similar environment to explore and select the most successful algorithm, and then implementing the selected algorithm in OpenCV or a similar environment to gain speed.

07 Nov 2024

In this work, we introduce a nonparametric clustering stopping rule algorithm based on the spatial median. The proposed method aims to balance homogeneity within clusters against heterogeneity between clusters. The algorithm maximises the ratio of the between-cluster variation to the within-cluster variation while adjusting for the number of clusters and the number of observations. It is robust to departures from distributional assumptions and to the presence of outliers. Simulations were used to validate the algorithm, and we further evaluated its stability and efficacy using three real-world datasets. Moreover, we compared the performance of our model with 13 other traditional algorithms for determining the number of clusters and found that the proposed algorithm outperformed 11 of them in determining the number of clusters. This finding demonstrates that the proposed method provides a reliable alternative for determining the number of clusters in multivariate data.
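The spatial (geometric) median underlying this stopping rule is the point that minimises the sum of Euclidean distances to the observations. A standard way to compute it is Weiszfeld's iteratively reweighted mean, sketched below in Python. The clamping of near-zero distances is a simplification (the Vardi-Zhang modification handles iterates that land exactly on a data point properly), and `spatial_median` is an illustrative name, not the authors' code.

```python
import numpy as np


def spatial_median(X, tol=1e-8, max_iter=500):
    """Weiszfeld iteration for the point minimising sum_i ||x_i - m||."""
    X = np.asarray(X, dtype=float)
    m = X.mean(axis=0)  # start from the coordinate-wise mean
    for _ in range(max_iter):
        d = np.linalg.norm(X - m, axis=1)
        d = np.maximum(d, 1e-12)  # crude guard against division by zero
        w = 1.0 / d               # far points get small weights
        m_new = (w[:, None] * X).sum(axis=0) / w.sum()
        if np.linalg.norm(m_new - m) < tol:
            return m_new
        m = m_new
    return m
```

Because distant points are down-weighted, a single gross outlier barely moves the spatial median, whereas it drags the mean arbitrarily far. Using spatial medians in place of means in a between/within variation ratio is what gives such a rule its robustness; the exact adjustment for the number of clusters and observations is as defined in the paper.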

20 Nov 2024

In the work "Generalized approximation spaces generation from Ij-neighborhoods and ideals with application to Chikungunya disease," published in \emph{AIMS Mathematics}, \textbf{9}(4) (2024), 10050–10077, Al-Shami and Hosny introduced a novel approach for generating generalized neighborhoods through Ij-neighborhoods with ideals, subsequently deriving new methods for generalized approximations based on these neighborhoods. However, several errors have been identified in their results, concepts, and methods, along with significant inaccuracies in the provided examples and comparison tables. This paper aims to address and correct these errors, providing counterexamples that illustrate the inaccuracies in the proposed results. Furthermore, we correct several flawed proofs and present revised formulations of these results and concepts. Additionally, we offer properties and clarifications to enhance the understanding of these concepts.

09 Jul 2025

Nanophotonics, an interdisciplinary field merging nanotechnology and photonics, has enabled transformative advances across diverse sectors, including green energy, biomedicine, and optical computing. This review comprehensively examines recent progress in nanophotonic principles and applications, highlighting key innovations in material design, device engineering, and system integration. In renewable energy, nanophotonics enables light-trapping nanostructures and spectral control in perovskite solar cells, concentrating solar power, and thermophotovoltaics, which have significantly enhanced solar conversion efficiencies, approaching theoretical limits. For biosensing, nanophotonic platforms achieve unprecedented sensitivity in detecting biomolecules, pathogens, and pollutants, enabling real-time diagnostics and environmental monitoring. Medical applications leverage tailored light-matter interactions for precision photothermal therapy, image-guided surgery, and early disease detection. Furthermore, nanophotonics underpins next-generation optical neural networks and neuromorphic computing, offering ultra-fast, energy-efficient alternatives to von Neumann architectures. Despite rapid growth, challenges in scalability, fabrication cost, and material stability persist. Future advances will rely on novel materials, AI-driven design optimization, and multidisciplinary approaches to enable scalable, low-cost deployment. This review summarizes recent progress and highlights future trends, including novel material systems, multidisciplinary approaches, and enhanced computational capabilities, to pave the way for transformative applications in this rapidly evolving field.
