ITIS SoftwareUniversity of Malaga

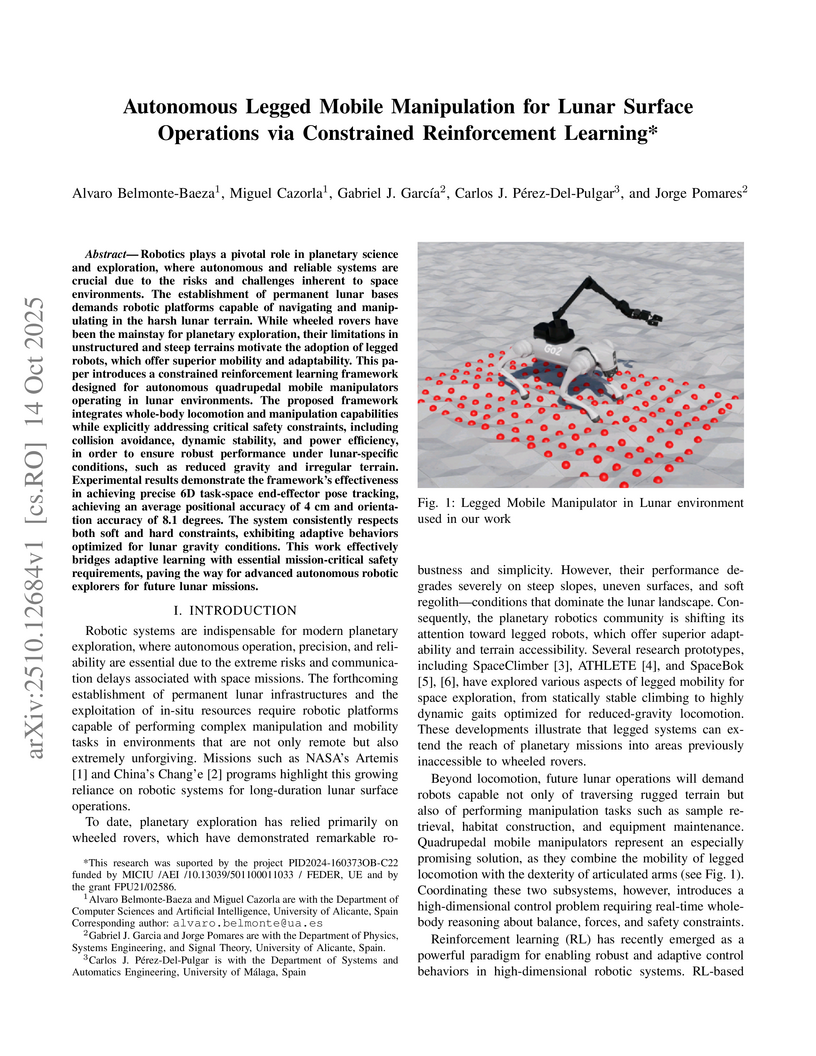

A Constrained Reinforcement Learning framework enables a quadrupedal mobile manipulator to achieve precise 6D end-effector tracking in a simulated lunar environment, consistently adhering to critical safety constraints. The system demonstrates adaptive, energy-efficient gaits by leveraging the unique low-gravity conditions.

17 Oct 2025

The AC Optimal Power Flow (AC-OPF) problem is a non-convex, NP-hard optimization task essential for secure and economic power system operation. Two prominent solution strategies are interior-point methods, valued for computational efficiency, and spatial branching techniques, which provide global optimality guarantees at higher computational cost. In this work, we also explore data-boosted variants that leverage historical operating data to enhance performance by guiding initialization in interior-point methods or constraining the search region in spatial branching. We conduct a comprehensive empirical comparison across networks of varying sizes and under both standard benchmark conditions and modified configurations designed to induce local optima. Our results show that data-boosted strategies can improve convergence and reduce computation times for both approaches. Spatial branching, however, remains computationally demanding, requiring further development for practical application. In contrast, modern interior-point solvers exhibit remarkable robustness, often converging to the global optimum even in challenging instances with multiple local solutions.

07 Nov 2024

Researchers evaluated Force-Torque Sensors (FTSs) on the European Space Agency's MaRTA rover in a planetary analog environment, demonstrating their superior performance over IMUs for terrain classification and analyzing their utility for drawbar pull estimation. The study generated an open-access dataset (BASEPROD) to inform future rover sensor suites.

12 Oct 2025

Improving robotic navigation is critical for extending exploration range and enhancing operational efficiency. Vision-based navigation relying on traditional CCD or CMOS cameras faces major challenges when complex illumination conditions are paired with motion, limiting the range and accessibility of mobile planetary robots. In this study, we propose a novel approach to planetary navigation that leverages the unique imaging capabilities of Single-Photon Avalanche Diode (SPAD) cameras. We present the first comprehensive evaluation of single-photon imaging as an alternative passive sensing technology for robotic exploration missions targeting perceptually challenging locations, with a special emphasis on high-latitude lunar regions. We detail the operating principles and performance characteristics of SPAD cameras, assess their advantages and limitations in addressing key perception challenges of upcoming exploration missions to the Moon, and benchmark their performance under representative illumination conditions.

Many modern workloads, such as neural networks, databases, and graph processing, are fundamentally memory-bound. For such workloads, the data movement between main memory and CPU cores imposes a significant overhead in terms of both latency and energy. A major reason is that this communication happens through a narrow bus with high latency and limited bandwidth, and the low data reuse in memory-bound workloads is insufficient to amortize the cost of main memory access. Fundamentally addressing this data movement bottleneck requires a paradigm where the memory system assumes an active role in computing by integrating processing capabilities. This paradigm is known as processing-in-memory (PIM).

Recent research explores different forms of PIM architectures, motivated by the emergence of new 3D-stacked memory technologies that integrate memory with a logic layer where processing elements can be easily placed. Past works evaluate these architectures in simulation or, at best, with simplified hardware prototypes. In contrast, the UPMEM company has designed and manufactured the first publicly-available real-world PIM architecture.

This paper provides the first comprehensive analysis of the first publicly-available real-world PIM architecture. We make two key contributions. First, we conduct an experimental characterization of the UPMEM-based PIM system using microbenchmarks to assess various architecture limits such as compute throughput and memory bandwidth, yielding new insights. Second, we present PrIM, a benchmark suite of 16 workloads from different application domains (e.g., linear algebra, databases, graph processing, neural networks, bioinformatics).

This paper introduces an open source simulator for packet routing in Low Earth Orbit Satellite Constellations (LSatCs) considering the dynamic system uncertainties. The simulator, implemented in Python, supports traditional Dijkstra's based routing as well as more advanced learning solutions, specifically Q-Routing and Multi-Agent Deep Reinforcement Learning (MA-DRL) from our previous work. It uses an event-based approach with the SimPy module to accurately simulate packet creation, routing and queuing, providing real-time tracking of queues and latency. The simulator is highly configurable, allowing adjustments in routing policies, traffic, ground and space layer topologies, communication parameters, and learning hyperparameters. Key features include the ability to visualize system motion and track packet paths. Results highlight significant improvements in end-to-end (E2E) latency using Reinforcement Learning (RL)-based routing policies compared to traditional methods. The source code, the documentation and a Jupyter notebook with post-processing results and analysis are available on GitHub.

As a technique that can compactly represent complex patterns, machine learning has significant potential for predictive inference. K-fold cross-validation (CV) is the most common approach to ascertaining the likelihood that a machine learning outcome is generated by chance, and it frequently outperforms conventional hypothesis testing. This improvement uses measures directly obtained from machine learning classifications, such as accuracy, that do not have a parametric description. To approach a frequentist analysis within machine learning pipelines, a permutation test or simple statistics from data partitions (i.e., folds) can be added to estimate confidence intervals. Unfortunately, neither parametric nor non-parametric tests solve the inherent problems of partitioning small sample-size datasets and learning from heterogeneous data sources. The fact that machine learning strongly depends on the learning parameters and the distribution of data across folds recapitulates familiar difficulties around excess false positives and replication. A novel statistical test based on K-fold CV and the Upper Bound of the actual risk (K-fold CUBV) is proposed, where uncertain predictions of machine learning with CV are bounded by the worst case through the evaluation of concentration inequalities. Probably Approximately Correct-Bayesian upper bounds for linear classifiers in combination with K-fold CV are derived and used to estimate the actual risk. The performance with simulated and neuroimaging datasets suggests that K-fold CUBV is a robust criterion for detecting effects and validating accuracy values obtained from machine learning and classical CV schemes, while avoiding excess false positives.

OpenTwins is an open-source framework developed by ITIS Software, University of Malaga, for designing and integrating digital twins that combine 3D visualization, IoT data, and AI/ML capabilities. It aims to overcome challenges of domain-specificity and technological orchestration in digital twin development, demonstrated through a petrochemical industry use case for real-time freezing point prediction.

28 Jun 2025

Exploring high-latitude lunar regions presents an extremely challenging visual environment for robots. The low sunlight elevation angle and minimal light scattering result in a visual field dominated by a high dynamic range featuring long, dynamic shadows. Reproducing these conditions on Earth requires sophisticated simulators and specialized facilities. We introduce a unique dataset recorded at the LunaLab from the SnT - University of Luxembourg, an indoor test facility designed to replicate the optical characteristics of multiple lunar latitudes. Our dataset includes images, inertial measurements, and wheel odometry data from robots navigating seven distinct trajectories under multiple illumination scenarios, simulating high-latitude lunar conditions from dawn to night time with and without the aid of headlights, resulting in 88 distinct sequences containing a total of 1.3M images. Data was captured using a stereo RGB-inertial sensor, a monocular monochrome camera, and for the first time, a novel single-photon avalanche diode (SPAD) camera. We recorded both static and dynamic image sequences, with robots navigating at slow (5 cm/s) and fast (50 cm/s) speeds. All data is calibrated, synchronized, and timestamped, providing a valuable resource for validating perception tasks from vision-based autonomous navigation to scientific imaging for future lunar missions targeting high-latitude regions or those intended for robots operating across perceptually degraded environments. The dataset can be downloaded from this https URL, and a visual overview is available at this https URL. All supplementary material can be found at this https URL.

Researchers at the University of Malaga and Aalborg University developed a decentralized multi-agent deep reinforcement learning framework for routing in Low Earth Orbit satellite constellations. This framework enables satellites to make autonomous decisions using local information and continually adapt over time through model anticipation and federated learning, achieving end-to-end latency comparable to centralized shortest path algorithms while better handling congestion.

29 Jun 2023

The use of datasets is getting more relevance in surgical robotics since they can be used to recognise and automate tasks. Also, this allows to use common datasets to compare different algorithms and methods. The objective of this work is to provide a complete dataset of three common training surgical tasks that surgeons perform to improve their skills. For this purpose, 12 subjects teleoperated the da Vinci Research Kit to perform these tasks. The obtained dataset includes all the kinematics and dynamics information provided by the da Vinci robot (both master and slave side) together with the associated video from the camera. All the information has been carefully timestamped and provided in a readable csv format. A MATLAB interface integrated with ROS for using and replicating the data is also provided.

29 Sep 2025

Massive connectivity with ultra-low latency and high reliability necessitates fundamental advances in future communication networks operating under finite-blocklength (FBL) transmission. Fluid antenna systems (FAS) have emerged as a promising enabler, offering superior spectrum and energy efficiency in short-packet/FBL scenarios. In this work, by leveraging the simplicity and accuracy of block-correlation channel modeling, we rigorously bound the performance limits of FBL-FAS from a statistical perspective, focusing on two key performance metrics: block error rate (BLER) and outage probability (OP). Furthermore, we introduce a novel complex-integral simplification method based on Gauss-Laguerre quadrature, which achieves higher approximation accuracy compared to existing Taylor-expansion-based approaches. Numerical results validate the robustness of the proposed analysis and clearly demonstrate the superiority of FBL-FAS over conventional multiple-antenna systems with fixed antenna placement.

22 Nov 2019

This paper proposes a Guidance, Navigation, and Control (GNC) architecture

for planetary rovers targeting the conditions of upcoming Mars exploration

missions such as Mars 2020 and the Sample Fetching Rover (SFR). The navigation

requirements of these missions demand a control architecture featuring

autonomous capabilities to achieve a fast and long traverse. The proposed

solution presents a two-level architecture where the efficient navigation (low)

level is always active and the full navigation (upper) level is enabled

according to the difficulty of the terrain. The first level is an efficient

implementation of the basic functionalities for autonomous navigation based on

hazard detection, local path replanning, and trajectory control with visual

odometry. The second level implements an adaptive SLAM algorithm that improves

the relative localization, evaluates the traversability of the terrain ahead

for a more optimal path planning, and performs global (absolute) localization

that corrects the pose drift during longer traverses. The architecture provides

a solution for long range, low supervision and fast planetary exploration. Both

navigation levels have been validated on planetary analogue field test

campaigns.

This work addresses the reduction of power consumption of the AODV routing

protocol in vehicular networks as an optimization problem. Nowadays, network

designers focus on energy-aware communication protocols, specially to deploy

wireless networks. Here, we introduce an automatic method to search for

energy-efficient AODV configurations by using an evolutionary algorithm and

parallel Monte-Carlo simulations to improve the accuracy of the evaluation of

tentative solutions. The experimental results demonstrate that significant

power consumption improvements over the standard configuration can be attained,

with no noteworthy loss in the quality of service.

02 Jun 2023

End-to-end routing in Low Earth Orbit (LEO) satellite constellations (LSatCs) is a complex and dynamic problem. The topology, of finite size, is dynamic and predictable, the traffic from/to Earth and transiting the space segment is highly imbalanced, and the delay is dominated by the propagation time in non-congested routes and by the queueing time at Inter-Satellite Links (ISLs) in congested routes. Traditional routing algorithms depend on excessive communication with ground or other satellites, and oversimplify the characterization of the path links towards the destination. We model the problem as a multi-agent Partially Observable Markov Decision Problem (POMDP) where the nodes (i.e., the satellites) interact only with nearby nodes. We propose a distributed Q-learning solution that leverages on the knowledge of the neighbours and the correlation of the routing decisions of each node. We compare our results to two centralized algorithms based on the shortest path: one aiming at using the highest data rate links and a second genie algorithm that knows the instantaneous queueing delays at all satellites. The results of our proposal are positive on every front: (1) it experiences delays that are comparable to the benchmarks in steady-state conditions; (2) it increases the supported traffic load without congestion; and (3) it can be easily implemented in a LSatC as it does not depend on the ground segment and minimizes the signaling overhead among satellites.

14 Mar 2025

Quantum computers leverage the principles of quantum mechanics to do

computation with a potential advantage over classical computers. While a single

classical computer transforms one particular binary input into an output after

applying one operator to the input, a quantum computer can apply the operator

to a superposition of binary strings to provide a superposition of binary

outputs, doing computation apparently in parallel. This feature allows quantum

computers to speed up the computation compared to classical algorithms.

Unsurprisingly, quantum algorithms have been proposed to solve optimization

problems in quantum computers. Furthermore, a family of quantum machines called

quantum annealers are specially designed to solve optimization problems. In

this paper, we provide an introduction to quantum optimization from a practical

point of view. We introduce the reader to the use of quantum annealers and

quantum gate-based machines to solve optimization problems.

Generative adversarial networks (GANs) are powerful generative models but remain challenging to train due to pathologies suchas mode collapse and instability. Recent research has explored co-evolutionary approaches, in which populations of generators and discriminators are evolved, as a promising solution. This paper presents an empirical analysis of different coevolutionary GAN training strategies, focusing on the impact of selection and replacement mechanisms. We compare (mu,lambda), (mu+lambda) with elitism, and (mu+lambda) with tournament selection coevolutionary schemes, along with a non-evolutionary population based multi-generator multi-discriminator GAN baseline, across both synthetic low-dimensional datasets (blob and gaussian mixtures) and an image-based benchmark (MNIST). Results show that full generational replacement, i.e., (mu,lambda), consistently outperforms in terms of both sample quality and diversity, particularly when combined with larger offspring sizes. In contrast, elitist approaches tend to converge prematurely and suffer from reduced diversity. These findings highlight the importance of balancing exploration and exploitation dynamics in coevolutionary GAN training and provide guidance for designing more effective population-based generative models.

The integration of Semantic Communications (SemCom) and edge computing in

space networks enables the optimal allocation of the scarce energy, computing,

and communication resources for data-intensive applications. We use Earth

Observation (EO) as a canonical functionality of satellites and review its main

characteristics and challenges. We identify the potential of the space segment,

represented by a low Earth orbit (LEO) satellite constellation, to serve as an

edge layer for distributed intelligence. Based on that, propose a system

architecture that supports semantic and goal-oriented applications for image

reconstruction and object detection and localization. The simulation results

show the intricate trade-offs among energy, time, and task-performance using a

real dataset and State-of-the-Art (SoA) processing and communication

parameters.

The advent of next-generation sequencing (NGS) has revolutionized genomic research by enabling high-throughput data generation through parallel sequencing of a diverse range of organisms at significantly reduced costs. This breakthrough has unleashed a "Cambrian explosion" in genomic data volume and diversity. This volume of workloads places genomics among the top four big data challenges anticipated for this decade. In this context, pairwise sequence alignment represents a very time- and energy-consuming step in common bioinformatics pipelines. Speeding up this step requires the implementation of heuristic approaches, optimized algorithms, and/or hardware acceleration.

Whereas state-of-the-art CPU and GPU implementations have demonstrated significant performance gains, recent field programmable gate array (FPGA) implementations have shown improved energy efficiency. However, the latter often suffer from limited scalability due to constraints on hardware resources when aligning longer sequences. In this work, we present a scalable and flexible FPGA-based accelerator template that implements Myers's algorithm using high-level synthesis and a worker-based architecture. GeneTEK, an instance of this accelerator template in a Xilinx Zynq UltraScale+ FPGA, outperforms state-of-the-art CPU and GPU implementations in both speed and energy efficiency, while overcoming scalability limitations of current FPGA approaches. Specifically, GeneTEK achieves at least a 19.4% increase in execution speed and up to 62x reduction in energy consumption compared to leading CPU and GPU solutions, while fitting comparison matrices up to 72% larger compared to previous FPGA solutions. These results reaffirm the potential of FPGAs as an energy-efficient platform for scalable genomic workloads.

Deep Learning (DL) applications are gaining momentum in the realm of

Artificial Intelligence, particularly after GPUs have demonstrated remarkable

skills for accelerating their challenging computational requirements. Within

this context, Convolutional Neural Network (CNN) models constitute a

representative example of success on a wide set of complex applications,

particularly on datasets where the target can be represented through a

hierarchy of local features of increasing semantic complexity. In most of the

real scenarios, the roadmap to improve results relies on CNN settings involving

brute force computation, and researchers have lately proven Nvidia GPUs to be

one of the best hardware counterparts for acceleration. Our work complements

those findings with an energy study on critical parameters for the deployment

of CNNs on flagship image and video applications: object recognition and people

identification by gait, respectively. We evaluate energy consumption on four

different networks based on the two most popular ones (ResNet/AlexNet): ResNet

(167 layers), a 2D CNN (15 layers), a CaffeNet (25 layers) and a ResNetIm (94

layers) using batch sizes of 64, 128 and 256, and then correlate those with

speed-up and accuracy to determine optimal settings. Experimental results on a

multi-GPU server endowed with twin Maxwell and twin Pascal Titan X GPUs

demonstrate that energy correlates with performance and that Pascal may have up

to 40% gains versus Maxwell. Larger batch sizes extend performance gains and

energy savings, but we have to keep an eye on accuracy, which sometimes shows a

preference for small batches. We expect this work to provide a preliminary

guidance for a wide set of CNN and DL applications in modern HPC times, where

the GFLOPS/w ratio constitutes the primary goal.

There are no more papers matching your filters at the moment.