Idiap Research Institute

This paper introduces linear transformers, which reduce the computational and memory complexity of the Transformer architecture from quadratic to linear by reformulating self-attention using kernel methods and matrix associativity. This approach enables thousands of times faster autoregressive inference and efficient processing of very long sequences while maintaining competitive performance across tasks like image generation and speech recognition.

09 Oct 2025

Researchers at Idiap Research Institute, EPFL, and Harvard University introduce Geometry-aware Policy Imitation (GPI), a method that interprets robotic demonstrations as geometric curves to directly synthesize control policies. It achieves significantly faster inference times and lower memory footprints than diffusion-based policies, maintaining high success rates across various complex robotic tasks in both simulation and real-world settings.

This work introduces σ-GPTs, a class of autoregressive models that relax the fixed-order generation assumption by training on randomly shuffled sequences. The models leverage double positional encodings and a dynamic token-based rejection sampling mechanism to achieve efficient, sub-linear inference and enable natural infilling capabilities, demonstrating comparable or improved performance against traditional GPTs across various tasks.

Diffusion models have emerged as a powerful paradigm for generation, obtaining strong performance in various continuous domains. However, applying continuous diffusion models to natural language remains challenging due to its discrete nature and the need for a large number of diffusion steps to generate text, making diffusion-based generation expensive. In this work, we propose Text-to-text Self-conditioned Simplex Diffusion (TESS), a text diffusion model that is fully non-autoregressive, employs a new form of self-conditioning, and applies the diffusion process on the logit simplex space rather than the learned embedding space. Through extensive experiments on natural language understanding and generation tasks including summarization, text simplification, paraphrase generation, and question generation, we demonstrate that TESS outperforms state-of-the-art non-autoregressive models, requires fewer diffusion steps with minimal drop in performance, and is competitive with pretrained autoregressive sequence-to-sequence models. We publicly release our codebase at this https URL.

HyperMixer introduces an MLP-based neural network architecture that offers a cost-effective alternative to Transformers for natural language understanding. This model achieves competitive or superior performance across several GLUE benchmark tasks while significantly reducing computational expense, data requirements, and tuning effort by using hypernetworks to dynamically generate an attention-like token mixing function.

08 Oct 2025

Neuro-symbolic NLP methods aim to leverage the complementary strengths of large language models and formal logical solvers. However, current approaches are mostly static in nature, i.e., the integration of a target solver is predetermined at design time, hindering the ability to employ diverse formal inference strategies. To address this, we introduce an adaptive, multi-paradigm, neuro-symbolic inference framework that: (1) automatically identifies formal reasoning strategies from problems expressed in natural language; and (2) dynamically selects and applies specialized formal logical solvers via autoformalization interfaces. Extensive experiments on individual and multi-paradigm reasoning tasks support the following conclusions: LLMs are effective at predicting the necessary formal reasoning strategies with an accuracy above 90 percent. This enables flexible integration with formal logical solvers, resulting in our framework outperforming competing baselines by 27 percent and 6 percent compared to GPT-4o and DeepSeek-V3.1, respectively. Moreover, adaptive reasoning can even positively impact pure LLM methods, yielding gains of 10, 5, and 6 percent on zero-shot, CoT, and symbolic CoT settings with GPT-4o. Finally, although smaller models struggle with adaptive neuro-symbolic reasoning, post-training offers a viable path to improvement. Overall, this work establishes the foundations for adaptive LLM-symbolic reasoning, offering a path forward for unifying material and formal inferences on heterogeneous reasoning challenges.

Researchers from IIT, Queen Mary University of London, and Idiap/EPFL developed a unified formulation for visual affordance prediction and introduced the "Affordance Sheet" to promote reporting standards. This work systematically reviews existing methodologies and datasets, critically identifying pervasive reproducibility challenges across the field.

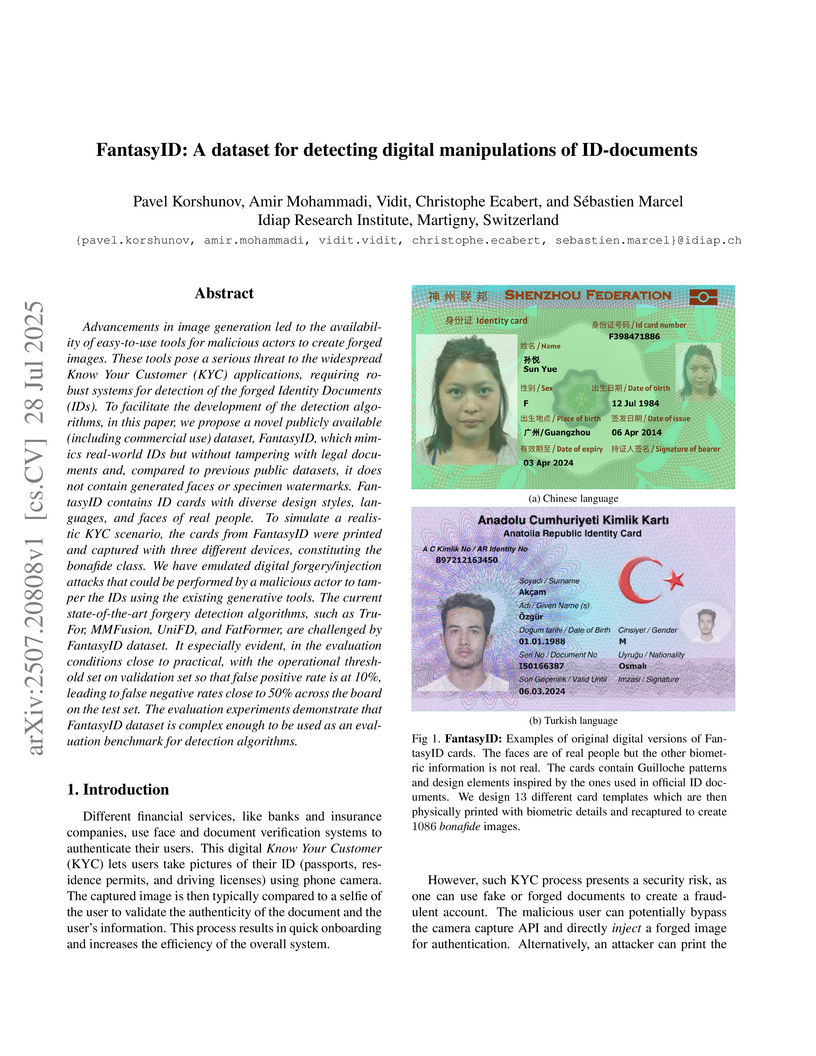

FantasyID introduces a publicly available dataset designed for detecting digital manipulations of ID documents, featuring genuinely pristine bonafide cards, real human faces, and diverse digital forgeries across multiple languages. It provides a robust and unbiased benchmark to develop and evaluate algorithms for combating the growing threat of forged identity documents in applications like KYC.

A study from Idiap, EPFL, and NVIDIA rigorously assessed how well current speech enhancement (SE) models perform on pathological speech, revealing that pre-training on large neurotypical datasets followed by fine-tuning with smaller pathological datasets substantially enhances performance, improving generative model intelligibility (∆E-STOI) from 0.01 to 0.17.

Researchers from the University of Manchester and Idiap Research Institute developed Explanation-Refiner, a neuro-symbolic framework for verifying and refining natural language explanations (NLEs) in Natural Language Inference (NLI) tasks using Large Language Models (LLMs) and a symbolic theorem prover. This approach iteratively improved GPT-4's logical validity on the e-SNLI dataset from 36% to 84% and substantially reduced syntactic errors in formalisations.

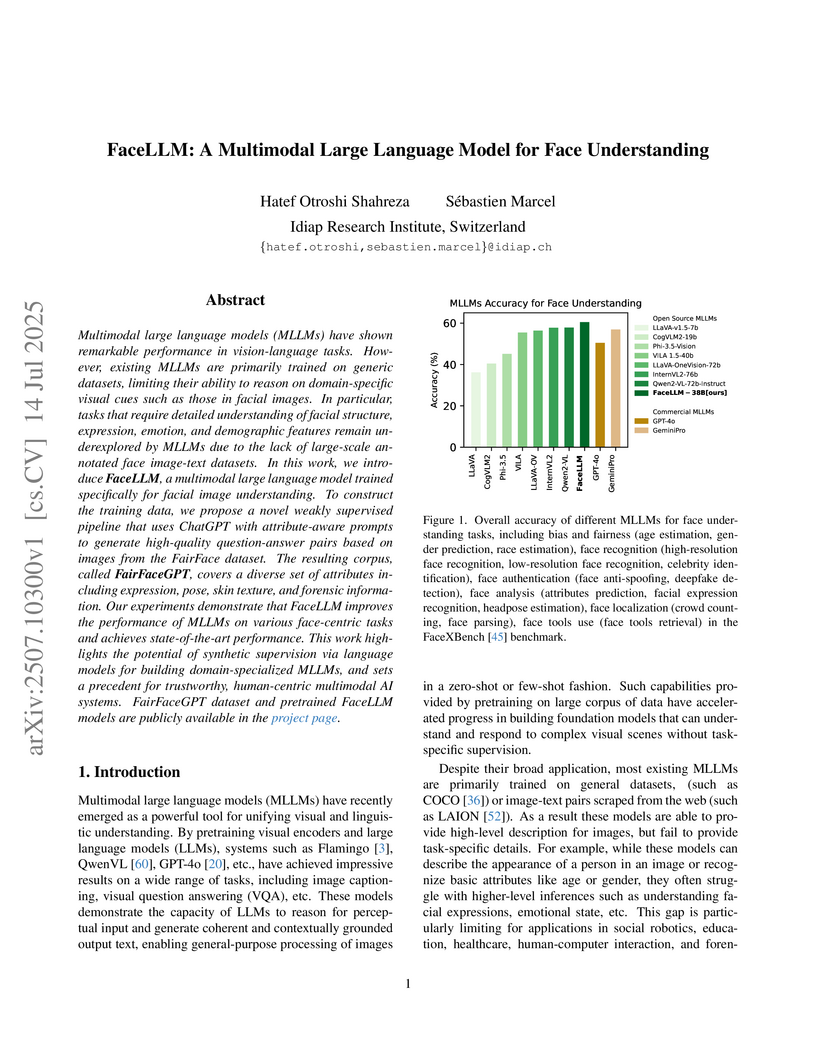

Multimodal large language models (MLLMs) have shown remarkable performance in vision-language tasks. However, existing MLLMs are primarily trained on generic datasets, limiting their ability to reason on domain-specific visual cues such as those in facial images. In particular, tasks that require detailed understanding of facial structure, expression, emotion, and demographic features remain underexplored by MLLMs due to the lack of large-scale annotated face image-text datasets. In this work, we introduce FaceLLM, a multimodal large language model trained specifically for facial image understanding. To construct the training data, we propose a novel weakly supervised pipeline that uses ChatGPT with attribute-aware prompts to generate high-quality question-answer pairs based on images from the FairFace dataset. The resulting corpus, called FairFaceGPT, covers a diverse set of attributes including expression, pose, skin texture, and forensic information. Our experiments demonstrate that FaceLLM improves the performance of MLLMs on various face-centric tasks and achieves state-of-the-art performance. This work highlights the potential of synthetic supervision via language models for building domain-specialized MLLMs, and sets a precedent for trustworthy, human-centric multimodal AI systems. FairFaceGPT dataset and pretrained FaceLLM models are publicly available in the project page.

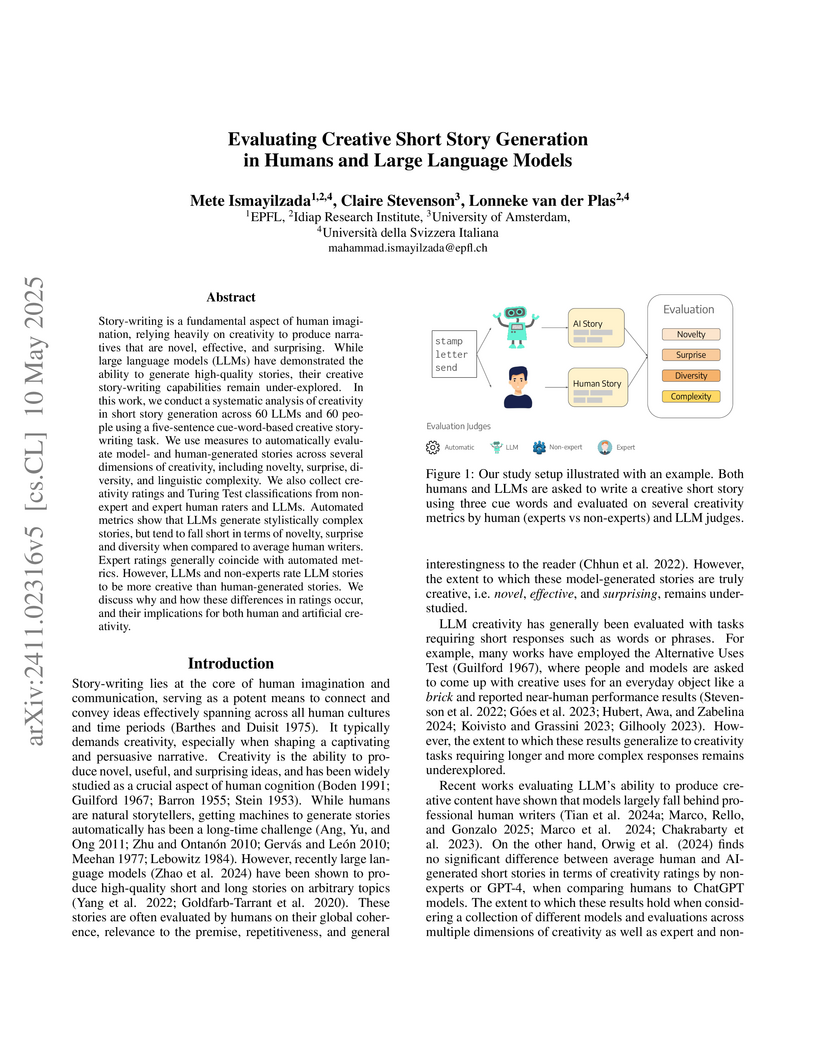

Researchers from EPFL, Idiap, USI, and University of Amsterdam systematically compared creative short story generation between 60 diverse LLMs and 60 average human writers. Their analysis revealed that LLMs generated more linguistically complex but less novel, surprising, and diverse stories than humans, and also exposed biases in non-expert and LLM evaluators who often mistook complexity for creativity.

In this paper, we introduce CoRet, a dense retrieval model designed for

code-editing tasks that integrates code semantics, repository structure, and

call graph dependencies. The model focuses on retrieving relevant portions of a

code repository based on natural language queries such as requests to implement

new features or fix bugs. These retrieved code chunks can then be presented to

a user or to a second code-editing model or agent. To train CoRet, we propose a

loss function explicitly designed for repository-level retrieval. On SWE-bench

and Long Code Arena's bug localisation datasets, we show that our model

substantially improves retrieval recall by at least 15 percentage points over

existing models, and ablate the design choices to show their importance in

achieving these results.

The alignments of reasoning abilities between smaller and larger Language Models are largely conducted via Supervised Fine-Tuning (SFT) using demonstrations generated from robust Large Language Models (LLMs). Although these approaches deliver more performant models, they do not show sufficiently strong generalization ability as the training only relies on the provided demonstrations.

In this paper, we propose the Self-refine Instruction-tuning method that elicits Smaller Language Models to self-refine their abilities. Our approach is based on a two-stage process, where reasoning abilities are first transferred between LLMs and Small Language Models (SLMs) via Instruction-tuning on demonstrations provided by LLMs, and then the instructed models Self-refine their abilities through preference optimization strategies. In particular, the second phase operates refinement heuristics based on the Direct Preference Optimization algorithm, where the SLMs are elicited to deliver a series of reasoning paths by automatically sampling the generated responses and providing rewards using ground truths from the LLMs. Results obtained on commonsense and math reasoning tasks show that this approach significantly outperforms Instruction-tuning in both in-domain and out-domain scenarios, aligning the reasoning abilities of Smaller and Larger Language Models.

Multimodal large language models (MLLMs) have achieved remarkable performance across diverse vision-and-language tasks. However, their potential in face recognition remains underexplored. In particular, the performance of open-source MLLMs needs to be evaluated and compared with existing face recognition models on standard benchmarks with similar protocol. In this work, we present a systematic benchmark of state-of-the-art MLLMs for face recognition on several face recognition datasets, including LFW, CALFW, CPLFW, CFP, AgeDB and RFW. Experimental results reveal that while MLLMs capture rich semantic cues useful for face-related tasks, they lag behind specialized models in high-precision recognition scenarios in zero-shot applications. This benchmark provides a foundation for advancing MLLM-based face recognition, offering insights for the design of next-generation models with higher accuracy and generalization. The source code of our benchmark is publicly available in the project page.

This work proposes an industry-level omni-modal large language model (LLM) pipeline that integrates auditory, visual, and linguistic modalities to overcome challenges such as limited tri-modal datasets, high computational costs, and complex feature alignments. Our pipeline consists of three main components: First, a modular framework enabling flexible configuration of various encoder-LLM-decoder architectures. Second, a lightweight training strategy that pre-trains audio-language alignment on the state-of-the-art vision-language model Qwen2.5-VL, thus avoiding the costly pre-training of vision-specific modalities. Third, an audio synthesis pipeline that generates high-quality audio-text data from diverse real-world scenarios, supporting applications such as Automatic Speech Recognition and Speech-to-Speech chat. To this end, we introduce an industry-level omni-modal LLM, Nexus. Extensive experiments validate the efficacy of our pipeline, yielding the following key findings:(1) In the visual understanding task, Nexus exhibits superior performance compared with its backbone model - Qwen2.5-VL-7B, validating the efficiency of our training strategy. (2) Within the English Spoken Question-Answering task, the model achieves better accuracy than the same-period competitor (i.e, MiniCPM-o2.6-7B) in the LLaMA Q. benchmark. (3) In our real-world ASR testset, Nexus achieves outstanding performance, indicating its robustness in real scenarios. (4) In the Speech-to-Text Translation task, our model outperforms Qwen2-Audio-Instruct-7B. (5) In the Text-to-Speech task, based on pretrained vocoder (e.g., Fishspeech1.4 or CosyVoice2.0), Nexus is comparable to its backbone vocoder on Seed-TTS benchmark. (6) An in-depth analysis of tri-modal alignment reveals that incorporating the audio modality enhances representational alignment between vision and language.

This survey paper from EPFL, USI, and Idiap Research Institute provides a timely overview of the creative capabilities of the latest AI systems, identifying key advancements in linguistic and artistic content generation while highlighting challenges in areas like true originality and abstract reasoning. It systematically reviews machine creativity across diverse domains and advocates for more comprehensive evaluation frameworks.

Our understanding of the generalization capabilities of neural networks (NNs)

is still incomplete. Prevailing explanations are based on implicit biases of

gradient descent (GD) but they cannot account for the capabilities of models

from gradient-free methods nor the simplicity bias recently observed in

untrained networks. This paper seeks other sources of generalization in NNs.

Findings. To understand the inductive biases provided by architectures

independently from GD, we examine untrained, random-weight networks. Even

simple MLPs show strong inductive biases: uniform sampling in weight space

yields a very biased distribution of functions in terms of complexity. But

unlike common wisdom, NNs do not have an inherent "simplicity bias". This

property depends on components such as ReLUs, residual connections, and layer

normalizations. Alternative architectures can be built with a bias for any

level of complexity. Transformers also inherit all these properties from their

building blocks.

Implications. We provide a fresh explanation for the success of deep learning

independent from gradient-based training. It points at promising avenues for

controlling the solutions implemented by trained models.

Recent works have shown the effectiveness of Large Vision Language Models (VLMs or LVLMs) in image manipulation detection. However, text manipulation detection is largely missing in these studies. We bridge this knowledge gap by analyzing closed- and open-source VLMs on different text manipulation datasets. Our results suggest that open-source models are getting closer, but still behind closed-source ones like GPT- 4o. Additionally, we benchmark image manipulation detection-specific VLMs for text manipulation detection and show that they suffer from the generalization problem. We benchmark VLMs for manipulations done on in-the-wild scene texts and on fantasy ID cards, where the latter mimic a challenging real-world misuse.

FVAE-LoRA, developed by researchers at Idiap Research Institute and EPFL, introduces a parameter-efficient fine-tuning method that integrates a Factorized Variational Autoencoder into LoRA, explicitly disentangling task-relevant features from other information. This approach enhances robustness to spurious correlations and improves performance, often surpassing standard LoRA and matching full fine-tuning across diverse image, text, and audio tasks.

There are no more papers matching your filters at the moment.