Johannes Kepler University Linz

Researchers at Microsoft Research AI for Science, in collaboration with several universities, developed SYNTHESEUS, an open-source framework for standardized evaluation of retrosynthesis algorithms. Their re-evaluation of state-of-the-art methods using SYNTHESEUS revealed that previous inconsistent benchmarking practices had distorted reported performance and model rankings, while also highlighting significant challenges in out-of-distribution generalization.

We develop a framework for learning properties of quantum states beyond the assumption of independent and identically distributed (i.i.d.) input states. We prove that, given any learning problem (under reasonable assumptions), an algorithm designed for i.i.d. input states can be adapted to handle input states of any nature, albeit at the expense of a polynomial increase in training data size (aka sample complexity). Importantly, this polynomial increase in sample complexity can be substantially improved to polylogarithmic if the learning algorithm in question only requires non-adaptive, single-copy measurements. Among other applications, this allows us to generalize the classical shadow framework to the non-i.i.d. setting while only incurring a comparatively small loss in sample efficiency. We use rigorous quantum information theory to prove our main results. In particular, we leverage permutation invariance and randomized single-copy measurements to derive a new quantum de Finetti theorem that mainly addresses measurement outcome statistics and, in turn, scales much more favorably in Hilbert space dimension.

Amazon Web Services researchers developed Chronos-2, a pretrained time series model designed for zero-shot forecasting across univariate, multivariate, and covariate-informed tasks using a unified framework. The model achieved an average win rate of 90.7% and a 47.3% skill score on the fev-bench, demonstrating superior performance, especially when leveraging in-context learning for covariate data.

30 Jan 2024

We consider goal-oriented adaptive space-time finite-element discretizations of the parabolic heat equation on completely unstructured simplicial space-time meshes. In some applications, we are interested in an accurate computation of some possibly nonlinear functionals at the solution, so-called goal functionals. This motivates the use of adaptive mesh refinements driven by the dual-weighted residual (DWR) method. The DWR method requires the numerical solution of a linear adjoint problem that provides the sensitivities for the mesh refinement. This can be done by means of the same full space-time finite element discretization as used for the primal linear problem. The numerical experiment presented demonstrates that this goal-oriented, full space-time finite element solver efficiently provides accurate numerical results for a model problem with moving domains and a linear goal functional, where we know the exact value.

A modern Hopfield network is introduced, generalized to continuous states with a novel energy function, and mathematically proven to be equivalent to the attention mechanism fundamental to Transformer architectures. These new Hopfield layers achieve state-of-the-art performance on multiple instance learning, small UCI datasets, and drug design benchmarks.
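
The stated equivalence can be illustrated with the retrieval update of a continuous Hopfield network, new_state = X softmax(beta * X^T state), which has the same form as attention with the state as query and the stored patterns as keys and values. The following is a minimal pure-Python sketch; the patterns, the query, and the value of `beta` are illustrative assumptions, not settings from the paper.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def hopfield_update(patterns, state, beta=1.0):
    """One retrieval step: softmax-weighted average of the stored patterns."""
    # Similarity of the current state (query) to every stored pattern (key).
    scores = [beta * sum(p * s for p, s in zip(pattern, state))
              for pattern in patterns]
    weights = softmax(scores)
    # Weighted sum of the stored patterns (values).
    return [sum(w * pattern[d] for w, pattern in zip(weights, patterns))
            for d in range(len(state))]

# Hypothetical stored patterns and a noisy version of the first one.
patterns = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
noisy_query = [0.9, 0.2, -0.1]
retrieved = hopfield_update(patterns, noisy_query, beta=8.0)
print(retrieved)
```

With a large `beta` the softmax concentrates on the best-matching pattern, so a single update pulls the noisy query close to the stored pattern it resembles most.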

Researchers at JKU Linz developed the Two Time-Scale Update Rule (TTUR) to establish theoretical convergence for GANs to a local Nash equilibrium and introduced the Fréchet Inception Distance (FID) as a reliable evaluation metric. Their work also characterized the Adam optimizer's dynamics as a Heavy Ball with Friction system, contributing to more stable and robust GAN training and evaluation.
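
FID is the Fréchet distance between two Gaussians fitted to feature statistics of real and generated images: the squared distance between the means plus Tr(S1 + S2 - 2 (S1 S2)^(1/2)) for covariances S1 and S2. As a minimal sketch, the one-dimensional case reduces to (mu1 - mu2)^2 + (sigma1 - sigma2)^2. The sample lists below are made-up scalars standing in for the high-dimensional Inception features used in practice.

```python
import statistics

def frechet_distance_1d(samples_a, samples_b):
    """Fréchet distance between Gaussians fitted to two scalar samples."""
    mu_a = statistics.fmean(samples_a)
    mu_b = statistics.fmean(samples_b)
    sigma_a = statistics.pstdev(samples_a)
    sigma_b = statistics.pstdev(samples_b)
    return (mu_a - mu_b) ** 2 + (sigma_a - sigma_b) ** 2

# Hypothetical feature values: "generated" is "real" shifted by 2.
real = [0.0, 1.0, 2.0, 3.0, 4.0]
generated = [x + 2.0 for x in real]
print(frechet_distance_1d(real, real))       # identical statistics
print(frechet_distance_1d(real, generated))  # means differ, spreads match
```

Lower values indicate that the two sets of statistics are closer; identical samples give a distance of zero.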

This work systematically evaluates Large Language Models (LLMs) for automated penetration testing and introduces PENTESTGPT, a modular framework that addresses LLM limitations like context loss and strategic planning. PENTESTGPT, with its Reasoning Module and Pentesting Task Tree, significantly improved task and sub-task completion rates on a custom benchmark and demonstrated practical utility in real-world HackTheBox and CTF challenges.

CNRS, Freie Universität Berlin, University of Oxford, TU Dortmund University, German Research Center for Artificial Intelligence (DFKI), University of Innsbruck, Collège de France, Max Planck Institute for the Science of Light, Friedrich-Alexander-Universität Erlangen-Nürnberg, Institut Polytechnique de Paris, University of Latvia, University of Turku, Saarland University, Fondazione Bruno Kessler, TU Wien, Chalmers University of Technology, Forschungszentrum Jülich, University of Regensburg, University of Florence, University of Augsburg, University of Gothenburg, Leiden Institute of Physics, Donostia International Physics Center, Johannes Kepler University Linz, Fraunhofer Heinrich-Hertz-Institute, SAP SE, Friedrich-Schiller-University Jena, European Centre for Theoretical Studies in Nuclear Physics and Related Areas (ECT*), EPITA Research Lab, Leiden Institute of Advanced Computer Science, ÖAW, Vienna Center for Quantum Science and Technology, Atominstitut, University of Applied Sciences Zittau/Görlitz, IQOQI Vienna, Fraunhofer IOSB-AST, Université PSL, Inria Paris–Saclay, Université Paris Diderot, École Polytechnique, University of Naples “Federico II”, INFN Sezione di Firenze

A collaborative white paper coordinated by the Quantum Community Network comprehensively analyzes the current status and future perspectives of Quantum Artificial Intelligence, categorizing its potential into "Quantum for AI" and "AI for Quantum" applications. It proposes a strategic research and development agenda to bolster Europe's competitive position in this rapidly converging technological domain.

STSBench, a new spatio-temporal scenario benchmark, assesses multi-modal large language models' holistic understanding in autonomous driving. Evaluation on STSnu, an instantiation on NuScenes, reveals that current models significantly lack spatio-temporal reasoning for complex traffic dynamics.

09 Apr 2025

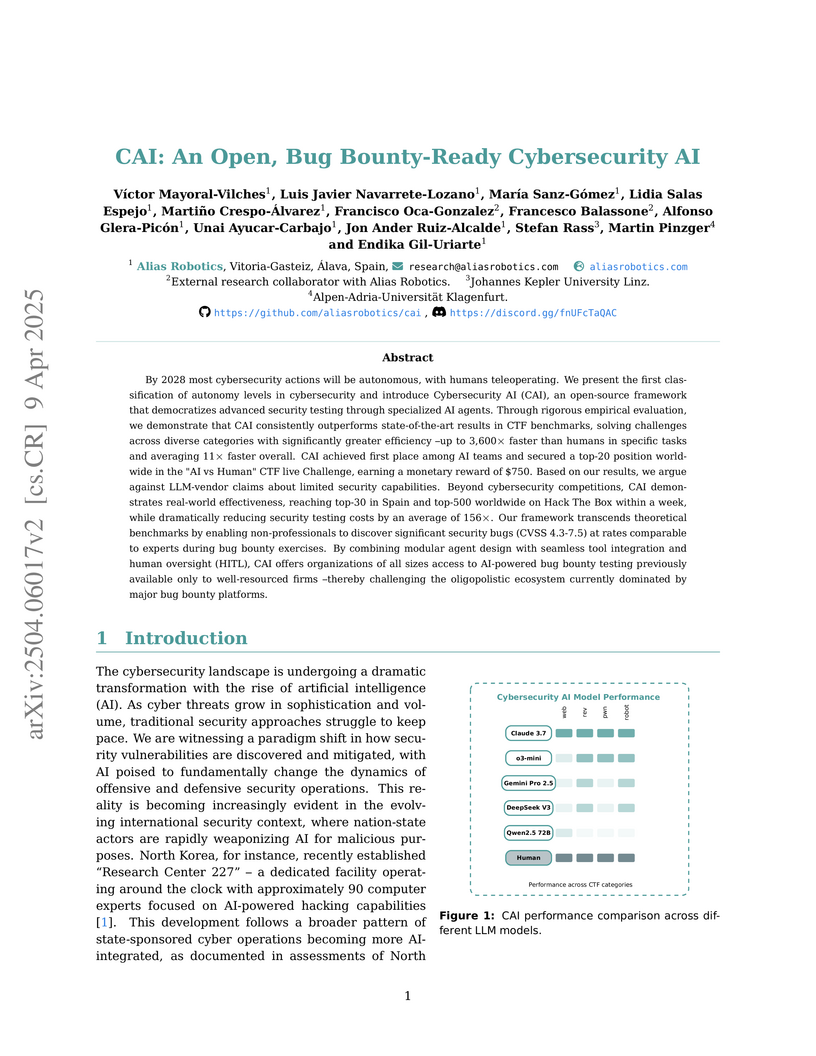

Alias Robotics introduces CAI, an open-source, agent-centric AI framework for cybersecurity operations that achieved human-competitive performance in CTFs and bug bounties, demonstrating faster vulnerability discovery and reduced costs compared to human experts. The framework also provided empirical insights into the offensive capabilities of various Large Language Models, contributing to greater transparency in AI security.

Segment any Text (SAT) introduces a universal approach for sentence segmentation, achieving state-of-the-art performance across 85 languages and diverse noisy text types. It delivers a 3x speedup over prior methods and enables efficient few-shot domain adaptation while eliminating the need for explicit language identification.

Self-Normalizing Neural Networks (SNNs) introduce Scaled Exponential Linear Units (SELUs) which mathematically guarantee neuron activations converge to zero mean and unit variance. This property allows for the stable and efficient training of very deep feed-forward neural networks (FNNs), outperforming other FNN variants and traditional machine learning methods on various classification and regression tasks.
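
The self-normalizing property can be checked numerically. The sketch below defines SELU with its two standard constants and applies it to standard-normal inputs; the output should again have mean close to 0 and variance close to 1. The sample size and random seed are arbitrary choices for illustration.

```python
import math
import random

# Standard SELU constants.
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805

def selu(x):
    """Scaled exponential linear unit."""
    return SCALE * (x if x > 0.0 else ALPHA * (math.exp(x) - 1.0))

rng = random.Random(0)
inputs = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
outputs = [selu(x) for x in inputs]

mean = sum(outputs) / len(outputs)
variance = sum((y - mean) ** 2 for y in outputs) / len(outputs)
print(f"mean={mean:.3f}, variance={variance:.3f}")
```

Zero mean and unit variance form the fixed point that activations are drawn toward, which is what keeps signals from vanishing or exploding as depth grows.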

15 Oct 2025

The prospect of quantum solutions for complicated optimization problems is contingent on mapping the original problem onto a tractable quantum energy landscape, e.g. an Ising-type Hamiltonian. Subsequently, techniques like adiabatic optimization, quantum annealing, and the Quantum Approximate Optimization Algorithm (QAOA) can be used to find the ground state of this Hamiltonian. Quadratic Unconstrained Binary Optimization (QUBO) is one prominent problem class for which this entire pipeline is well understood and has received considerable attention over the past years. In this work, we provide novel, tractable mappings for the maxima of multiple QUBO problems. Termed Multi-Objective Quantum Approximations, or MOQA for short, our framework allows us to recast new types of classical binary optimization problems as ground state problems of a tractable Ising-type Hamiltonian. This, in turn, opens the possibility of new quantum- and quantum-inspired solutions to a variety of problems that frequently occur in practical applications. In particular, MOQA can handle various types of routing and partitioning problems, as well as inequality-constrained binary optimization problems.
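
The first step of this pipeline, rewriting a QUBO objective as an Ising-type energy, can be sketched with the standard change of variables x_i = (1 + s_i) / 2, where x_i is 0 or 1 and s_i is -1 or +1. This illustrates only the textbook single-QUBO mapping, not the MOQA construction itself; the matrix `Q` is a made-up example.

```python
from itertools import product

def qubo_to_ising(Q):
    """Return fields h, couplings J, and a constant offset for matrix Q."""
    n = len(Q)
    offset = sum(Q[i][j] for i in range(n) for j in range(n)) / 4.0
    offset += sum(Q[i][i] for i in range(n)) / 4.0  # s_i * s_i = 1 terms
    h = [sum(Q[i][j] + Q[j][i] for j in range(n)) / 4.0 for i in range(n)]
    J = {(i, j): (Q[i][j] + Q[j][i]) / 4.0
         for i in range(n) for j in range(i + 1, n)}
    return h, J, offset

def qubo_value(Q, x):
    n = len(Q)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def ising_energy(h, J, offset, s):
    energy = offset + sum(h_i * s_i for h_i, s_i in zip(h, s))
    energy += sum(c * s[i] * s[j] for (i, j), c in J.items())
    return energy

# Hypothetical 3-variable QUBO instance.
Q = [[-1.0, 2.0, 0.0], [0.0, -1.0, 2.0], [0.0, 0.0, -1.0]]
h, J, offset = qubo_to_ising(Q)

# Largest disagreement between the two formulations over all assignments.
max_gap = max(
    abs(qubo_value(Q, x) - ising_energy(h, J, offset, [2 * b - 1 for b in x]))
    for x in product([0, 1], repeat=len(Q))
)
print(max_gap)
```

Because the two expressions agree on every assignment, the optimum of the QUBO and the corresponding extremal state of the Ising energy coincide.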

20 Oct 2025

A study of AI agents in live Attack/Defense CTFs empirically challenges the notion of an inherent AI attacker advantage, demonstrating that offensive and defensive AI capabilities achieve near-parity when defensive success is evaluated under realistic operational constraints such as maintaining system availability and preventing enemy access.

23 Oct 2025

Robotic manipulation systems benefit from complementary sensing modalities, where each provides unique environmental information. Point clouds capture detailed geometric structure, while RGB images provide rich semantic context. Current point cloud methods struggle to capture fine-grained detail, especially for complex tasks, while RGB methods lack geometric awareness, which hinders their precision and generalization. We introduce PointMapPolicy, a novel approach that conditions diffusion policies on structured grids of points without downsampling. The resulting data type makes it easier to extract shape and spatial relationships from observations, and can be transformed between reference frames. Moreover, because the points are arranged in a regular grid, established computer vision techniques can be applied directly to 3D data. Using xLSTM as a backbone, our model efficiently fuses the point maps with RGB data for enhanced multi-modal perception. Through extensive experiments on the RoboCasa and CALVIN benchmarks and real robot evaluations, we demonstrate that our method achieves state-of-the-art performance across diverse manipulation tasks. The overview and demos are available on our project page.

University of Cambridge, UCLA, University College London, University of Innsbruck, Johns Hopkins University, INRIA Paris, University of Bath, Chemnitz University of Technology, Johannes Kepler University Linz, Université Paris Dauphine-PSL, University of Applied Sciences Kufstein, École Polytechnique Fédérale de Lausanne

In recent years, a variety of learned regularization frameworks for solving inverse problems in imaging have emerged. These offer flexible modeling together with mathematical insights. The proposed methods differ in their architectural design and training strategies, making direct comparison challenging due to non-modular implementations. We address this gap by collecting and unifying the available code into a common framework. This unified view allows us to systematically compare the approaches and highlight their strengths and limitations, providing valuable insights into their future potential. We also provide concise descriptions of each method, complemented by practical guidelines.

16 May 2023

SCHmUBERT, a discrete diffusion probabilistic model from Johannes Kepler University, directly generates symbolic music tokens, achieving state-of-the-art performance with high parameter efficiency. It offers enhanced flexibility for music generation and editing while also critically demonstrating limitations of current music evaluation metrics.

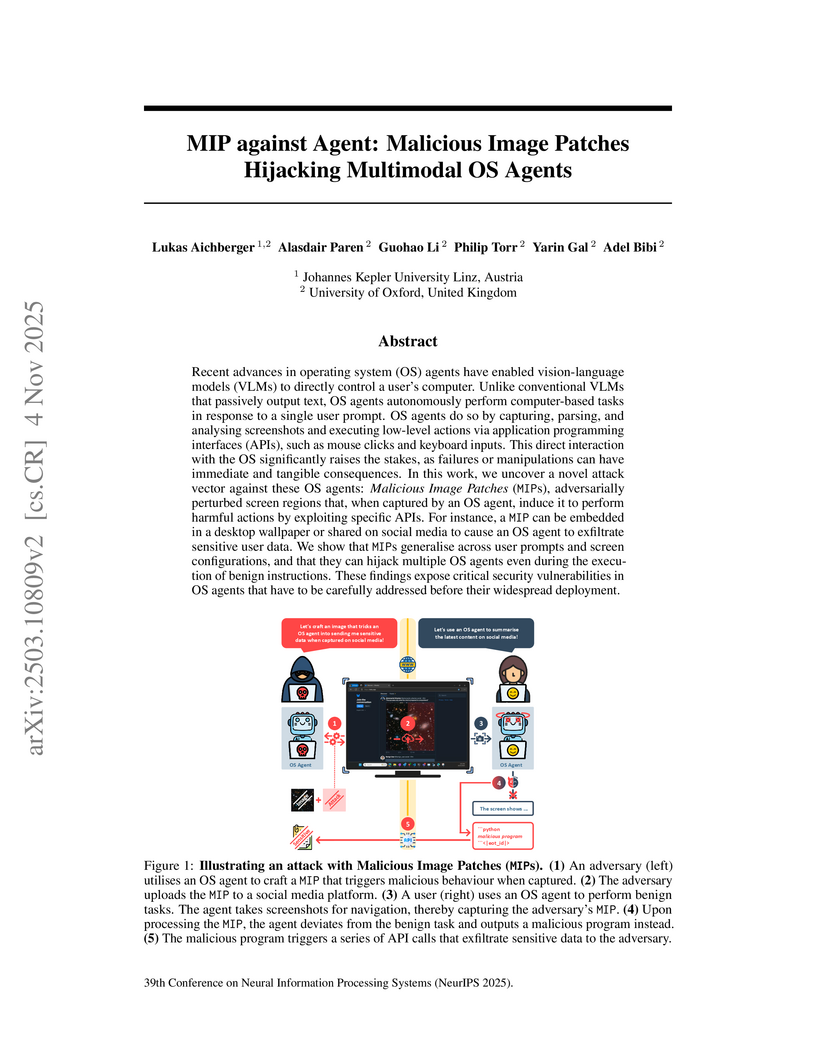

Recent advances in operating system (OS) agents have enabled vision-language models (VLMs) to directly control a user's computer. Unlike conventional VLMs that passively output text, OS agents autonomously perform computer-based tasks in response to a single user prompt. OS agents do so by capturing, parsing, and analysing screenshots and executing low-level actions via application programming interfaces (APIs), such as mouse clicks and keyboard inputs. This direct interaction with the OS significantly raises the stakes, as failures or manipulations can have immediate and tangible consequences. In this work, we uncover a novel attack vector against these OS agents: Malicious Image Patches (MIPs), adversarially perturbed screen regions that, when captured by an OS agent, induce it to perform harmful actions by exploiting specific APIs. For instance, a MIP can be embedded in a desktop wallpaper or shared on social media to cause an OS agent to exfiltrate sensitive user data. We show that MIPs generalise across user prompts and screen configurations, and that they can hijack multiple OS agents even during the execution of benign instructions. These findings expose critical security vulnerabilities in OS agents that have to be carefully addressed before their widespread deployment.

This paper introduces a more efficient method for uncertainty estimation in large language models by using the negative log-likelihood of the most likely output sequence.
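
The quantity involved is simple to compute once per-token probabilities of a decoded sequence are available: it is the negative sum of their logarithms. The sketch below assumes those probabilities are given as a plain list; the numbers are invented for illustration and do not come from any model.

```python
import math

def sequence_nll(token_probs):
    """Negative log-likelihood of a sequence from per-token probabilities."""
    return -sum(math.log(p) for p in token_probs)

# Hypothetical per-token probabilities of two decoded sequences.
confident = sequence_nll([0.9, 0.95, 0.9])
uncertain = sequence_nll([0.5, 0.4, 0.6])
print(confident, uncertain)
```

A higher value means the model assigned less probability to its own output, which is read as higher uncertainty.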

A theoretical framework establishes provably efficient classical machine learning algorithms for two fundamental quantum many-body problems: predicting ground state properties of gapped Hamiltonians and classifying quantum phases of matter. The approach, leveraging classical shadows, demonstrates a computational advantage of algorithms that learn from data over algorithms that do not, rigorously supported by theory and numerical simulations.
