This paper investigates whether current mechanistic interpretability (MI) methods yield unique explanations for neural network behavior. Researchers systematically demonstrate that multiple distinct circuits, interpretations, and algorithms can equally satisfy existing MI validity criteria across various simple tasks and small networks.

20 Oct 2025

We propose a primal heuristic for quadratic mixed-integer problems. Our method extends the Boscia framework -- originally a mixed-integer convex solver leveraging a Frank-Wolfe-based branch-and-bound approach -- to address nonconvex quadratic objective and constraints. We reformulate nonlinear constraints, introduce preprocessing steps, and a suite of heuristics including rounding strategies, gradient-guided selection, and large neighborhood search techniques that exploit integer-feasible vertices generated during the Frank-Wolfe iterations. Computational results demonstrate the effectiveness of our method in solving challenging MIQCQPs, achieving improvements on QPLIB instances within minutes and winning first place in the Land-Doig MIP Computational Competition 2025.

This paper presents an approach for identifying the root causes of collective

anomalies given observational time series and an acyclic summary causal graph

which depicts an abstraction of causal relations present in a dynamic system at

its normal regime. The paper first shows how the problem of root cause

identification can be divided into many independent subproblems by grouping

related anomalies using d-separation. Further, it shows how, under this

setting, some root causes can be found directly from the graph and from the

time of appearance of anomalies. Finally, it shows, how the rest of the root

causes can be found by comparing direct effects in the normal and in the

anomalous regime. To this end, an adjustment set for identifying direct effects

is introduced. Extensive experiments conducted on both simulated and real-world

datasets demonstrate the effectiveness of the proposed method.

This study addresses the problem of learning a summary causal graph on time

series with potentially different sampling rates. To do so, we first propose a

new causal temporal mutual information measure for time series. We then show

how this measure relates to an entropy reduction principle that can be seen as

a special case of the probability raising principle. We finally combine these

two ingredients in PC-like and FCI-like algorithms to construct the summary

causal graph. There algorithm are evaluated on several datasets, which shows

both their efficacy and efficiency.

Researchers at Univ. Grenoble Alpes, Inria, and CNRS developed an LP-update policy based on Model Predictive Control for Restless Multi-Armed Bandits (RMABs), achieving asymptotic optimality with an O(1/√N) convergence rate under the weakest assumptions to date. This approach leverages a novel dissipativity framework, allowing finite-horizon control to be connected to infinite-horizon average reward problems without requiring the Uniform Global Attractor Property (UGAP).

General-purpose multilingual vector representations, used in retrieval,

regression and classification, are traditionally obtained from bidirectional

encoder models. Despite their wide applicability, encoders have been recently

overshadowed by advances in generative decoder-only models. However, many

innovations driving this progress are not inherently tied to decoders. In this

paper, we revisit the development of multilingual encoders through the lens of

these advances, and introduce EuroBERT, a family of multilingual encoders

covering European and widely spoken global languages. Our models outperform

existing alternatives across a diverse range of tasks, spanning multilingual

capabilities, mathematics, and coding, and natively supporting sequences of up

to 8,192 tokens. We also examine the design decisions behind EuroBERT, offering

insights into our dataset composition and training pipeline. We publicly

release the EuroBERT models, including intermediate training checkpoints,

together with our training framework.

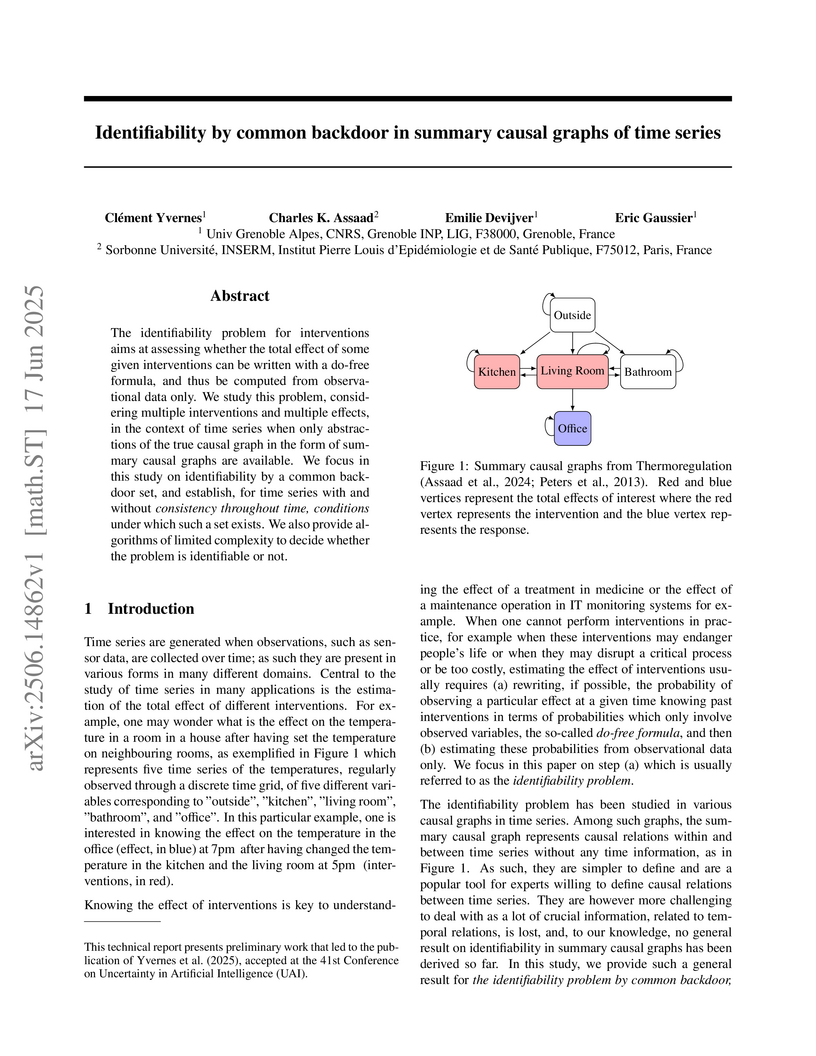

The identifiability problem for interventions aims at assessing whether the total effect of some given interventions can be written with a do-free formula, and thus be computed from observational data only. We study this problem, considering multiple interventions and multiple effects, in the context of time series when only abstractions of the true causal graph in the form of summary causal graphs are available. We focus in this study on identifiability by a common backdoor set, and establish, for time series with and without consistency throughout time, conditions under which such a set exists. We also provide algorithms of limited complexity to decide whether the problem is identifiable or not.

Federated Learning, a new machine learning paradigm enhancing the use of edge devices, is receiving a lot of attention in the pervasive community to support the development of smart services. Nevertheless, this approach still needs to be adapted to the specificity of the pervasive domain. In particular, issues related to continual learning need to be addressed. In this paper, we present a distillation-based approach dealing with catastrophic forgetting in federated learning scenario. Specifically, Human Activity Recognition tasks are used as a demonstration domain.

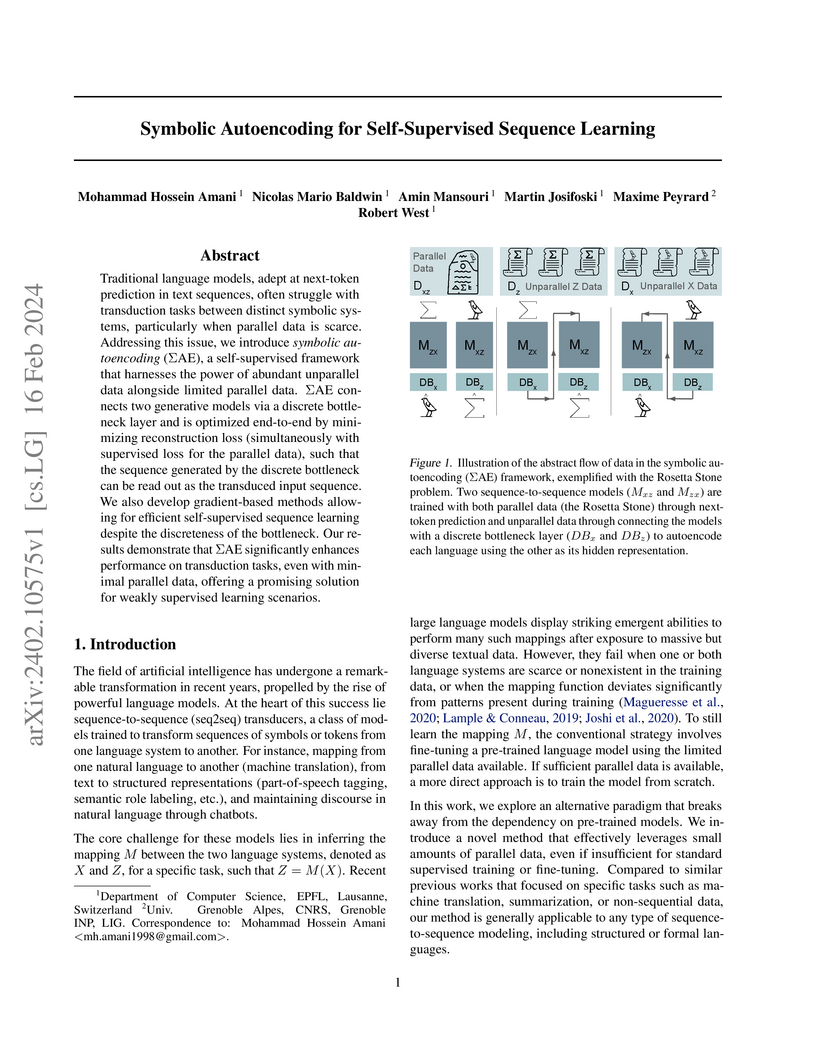

Traditional language models, adept at next-token prediction in text sequences, often struggle with transduction tasks between distinct symbolic systems, particularly when parallel data is scarce. Addressing this issue, we introduce \textit{symbolic autoencoding} (ΣAE), a self-supervised framework that harnesses the power of abundant unparallel data alongside limited parallel data. ΣAE connects two generative models via a discrete bottleneck layer and is optimized end-to-end by minimizing reconstruction loss (simultaneously with supervised loss for the parallel data), such that the sequence generated by the discrete bottleneck can be read out as the transduced input sequence. We also develop gradient-based methods allowing for efficient self-supervised sequence learning despite the discreteness of the bottleneck. Our results demonstrate that ΣAE significantly enhances performance on transduction tasks, even with minimal parallel data, offering a promising solution for weakly supervised learning scenarios.

12 Jul 2021

Adapter modules were recently introduced as an efficient alternative to

fine-tuning in NLP. Adapter tuning consists in freezing pretrained parameters

of a model and injecting lightweight modules between layers, resulting in the

addition of only a small number of task-specific trainable parameters. While

adapter tuning was investigated for multilingual neural machine translation,

this paper proposes a comprehensive analysis of adapters for multilingual

speech translation (ST). Starting from different pre-trained models (a

multilingual ST trained on parallel data or a multilingual BART (mBART) trained

on non-parallel multilingual data), we show that adapters can be used to: (a)

efficiently specialize ST to specific language pairs with a low extra cost in

terms of parameters, and (b) transfer from an automatic speech recognition

(ASR) task and an mBART pre-trained model to a multilingual ST task.

Experiments show that adapter tuning offer competitive results to full

fine-tuning, while being much more parameter-efficient.

The identifiability problem for interventions aims at assessing whether the

total causal effect can be written with a do-free formula, and thus be

estimated from observational data only. We study this problem, considering

multiple interventions, in the context of time series when only an abstraction

of the true causal graph, in the form of a summary causal graph, is available.

We propose in particular both necessary and sufficient conditions for the

adjustment criterion, which we show is complete in this setting, and provide a

pseudo-linear algorithm to decide whether the query is identifiable or not.

18 Jun 2025

We present the data model, design choices, and performance of ProvSQL, a general and easy-to-deploy provenance tracking and probabilistic database system implemented as a PostgreSQL extension. ProvSQL's data and query models closely reflect that of a large core of SQL, including multiset semantics, the full relational algebra, and aggregation. A key part of its implementation relies on generic provenance circuits stored in memory-mapped files. We propose benchmarks to measure the overhead of provenance and probabilistic evaluation and demonstrate its scalability and competitiveness with respect to other state-of-the-art systems.

In recent years, significant attention has been directed towards learning

average-reward Markov Decision Processes (MDPs). However, existing algorithms

either suffer from sub-optimal regret guarantees or computational

inefficiencies. In this paper, we present the first tractable algorithm with

minimax optimal regret of $\widetilde{\mathrm{O}}(\sqrt{\mathrm{sp}(h^*) S A

T}),where\mathrm{sp}(h^*)isthespanoftheoptimalbiasfunctionh^*$,

S×A is the size of the state-action space and T the number of

learning steps. Remarkably, our algorithm does not require prior information on

sp(h∗). Our algorithm relies on a novel subroutine, Projected

Mitigated Extended Value Iteration (PMEVI), to compute bias-constrained optimal

policies efficiently. This subroutine can be applied to various previous

algorithms to improve regret bounds.

To better understand discriminations and the effect of affirmative actions in selection problems (e.g., college admission or hiring), a recent line of research proposed a model based on differential variance. This model assumes that the decision-maker has a noisy estimate of each candidate's quality and puts forward the difference in the noise variances between different demographic groups as a key factor to explain discrimination. The literature on differential variance, however, does not consider the strategic behavior of candidates who can react to the selection procedure to improve their outcome, which is well-known to happen in many domains.

In this paper, we study how the strategic aspect affects fairness in selection problems. We propose to model selection problems with strategic candidates as a contest game: A population of rational candidates compete by choosing an effort level to increase their quality. They incur a cost-of-effort but get a (random) quality whose expectation equals the chosen effort. A Bayesian decision-maker observes a noisy estimate of the quality of each candidate (with differential variance) and selects the fraction α of best candidates based on their posterior expected quality; each selected candidate receives a reward S. We characterize the (unique) equilibrium of this game in the different parameters' regimes, both when the decision-maker is unconstrained and when they are constrained to respect the fairness notion of demographic parity. Our results reveal important impacts of the strategic behavior on the discrimination observed at equilibrium and allow us to understand the effect of imposing demographic parity in this context. In particular, we find that, in many cases, the results contrast with the non-strategic setting.

Gradual Binary Search and Dimension Expansion : A general method for activation quantization in LLMs

Gradual Binary Search and Dimension Expansion : A general method for activation quantization in LLMs

An innovative framework combines Gradual Binary Search and Dimension Expansion with Hadamard matrices to enable accurate 3-bit quantization of LLM weights, activations, and KV caches. This method improves the accuracy of models like Mistral 7B by 40% at 3-bit WAKV compared to existing rotation-based quantization techniques.

This paper documents GETALP's submission to the Third Run of the Automatic Minuting Shared Task at SIGDial 2025. We participated in Task B: question-answering based on meeting transcripts. Our method is based on a retrieval augmented generation (RAG) system and Abstract Meaning Representations (AMR). We propose three systems combining these two approaches. Our results show that incorporating AMR leads to high-quality responses for approximately 35% of the questions and provides notable improvements in answering questions that involve distinguishing between different participants (e.g., who questions).

15 Sep 2025

In this paper, we examine the problem of sampling from log-concave distributions with (possibly) superlinear gradient growth under kinetic (underdamped) Langevin algorithms. Using a carefully tailored taming scheme, we propose two novel discretizations of the kinetic Langevin SDE, and we show that they are both contractive and satisfy a log-Sobolev inequality. Building on this, we establish a series of non-asymptotic bounds in 2-Wasserstein distance between the law reached by each algorithm and the underlying target measure.

Every day, we experience the effects of the global warming: extreme weather events, major forest fires, storms, global warming, this http URL scientific community acknowledges that this crisis is a consequence of human activities where Information and Communications Technologies (ICT) are an increasingly important this http URL scientists need tools for measuring the footprint of the code they produce and for optimizing it. Running Average Power Limit (RAPL) is a low-level interface designed by Intel that provides a measure of the energy consumption of a CPU (and more) without the need for additional hardware. Since 2017, it is available on most computing devices, including non-Intel devices such as AMD this http URL and more people are using RAPL for energy measurement, mostly like a black box without deep knowledge of its this http URL, this causes mistakes when implementing measurement this http URL this paper, we propose to come back to the basic mechanisms that allow to use RAPL measurements and present a critical analysis of their operations. In addition to long-established mechanisms, we explore the suitability of the recent eBPF technology (formerly and abbreviation for extended Berkeley Packet Filter) for working with this http URL each mechanism, we release an implementation in Rust that avoids the pitfalls we detected in existing tools, improving correctness, timing accuracy and performance. These new implementations have desirable properties for monitoring and profiling parallel this http URL also provide an experimental study with multiple benchmarks and processor models (Intel and AMD) in order to evaluate the efficiency of the various mechanisms and their impact on parallel this http URL experiments show that no mechanism provides a significant performance advantage over the others. However, they differ significantly in terms of ease-of-use and this http URL believe that this work will help the community to develop correct, resilient and lightweight measurement tools.

24 Feb 2024

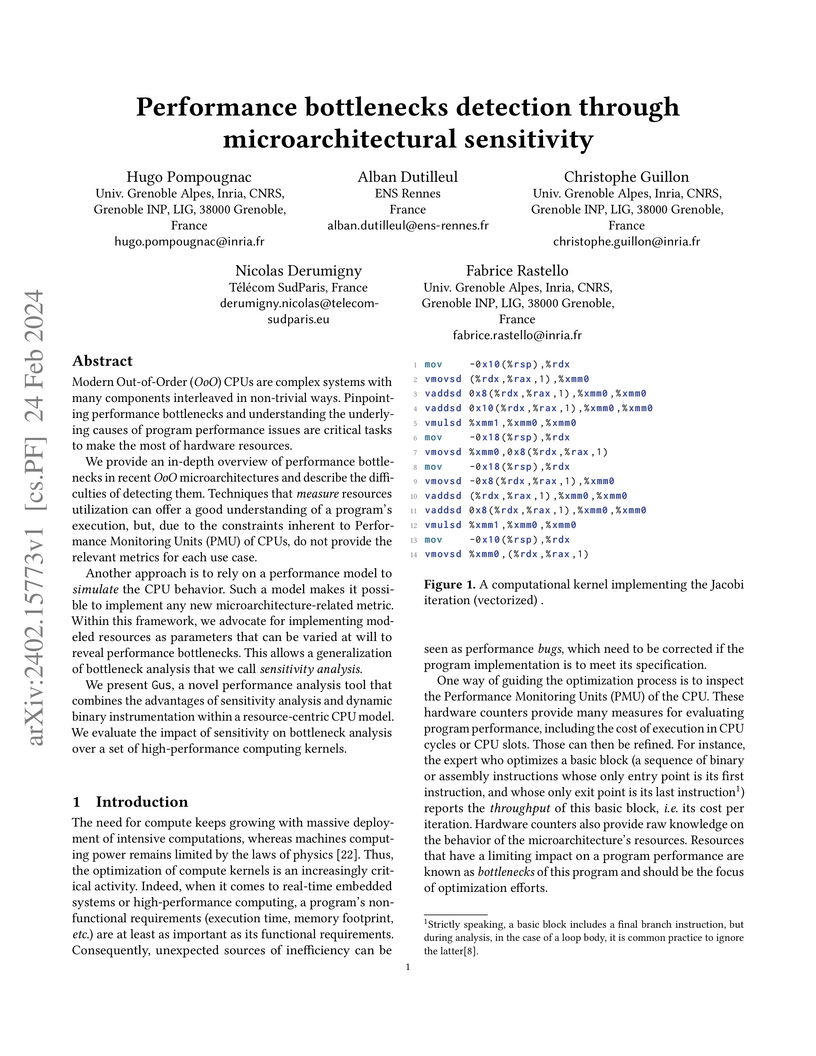

Modern Out-of-Order (OoO) CPUs are complex systems with many components

interleaved in non-trivial ways. Pinpointing performance bottlenecks and

understanding the underlying causes of program performance issues are critical

tasks to make the most of hardware resources.

We provide an in-depth overview of performance bottlenecks in recent OoO

microarchitectures and describe the difficulties of detecting them. Techniques

that measure resources utilization can offer a good understanding of a

program's execution, but, due to the constraints inherent to Performance

Monitoring Units (PMU) of CPUs, do not provide the relevant metrics for each

use case.

Another approach is to rely on a performance model to simulate the CPU

behavior. Such a model makes it possible to implement any new

microarchitecture-related metric. Within this framework, we advocate for

implementing modeled resources as parameters that can be varied at will to

reveal performance bottlenecks. This allows a generalization of bottleneck

analysis that we call sensitivity analysis.

We present Gus, a novel performance analysis tool that combines the

advantages of sensitivity analysis and dynamic binary instrumentation within a

resource-centric CPU model. We evaluate the impact of sensitivity on bottleneck

analysis over a set of high-performance computing kernels.

27 Jun 2025

Understanding the connections between different quantum information protocols has been proven fruitful for both theoretical insights and experimental applications. In this work, we explore the relationship between non-local and prepare-and-measure scenarios, proposing a systematic way to translate bipartite Bell inequalities into dimensionally-bounded prepare-and-measure tasks. We identify sufficient conditions under which the translation preserves the quantum bound and self-testing properties, enabling a wide range of certification protocols originally developed for the non-local setting to be adapted to the sequential framework of prepare-and-measure with a dimensional bound. While the dimensionality bound is not device-independent, it still is a practical and experimentally reasonable assumption in many cases of interest. In some instances, we find new experimentally-friendly certification protocols. In others, we demonstrate equivalences with already known prepare-and-measure protocols, where self-testing results were previously established using alternative mathematical methods. Our results unify different quantum correlation frameworks, and contribute to the ongoing research effort of studying the interplay between parallel and sequential protocols.

There are no more papers matching your filters at the moment.