LIGM

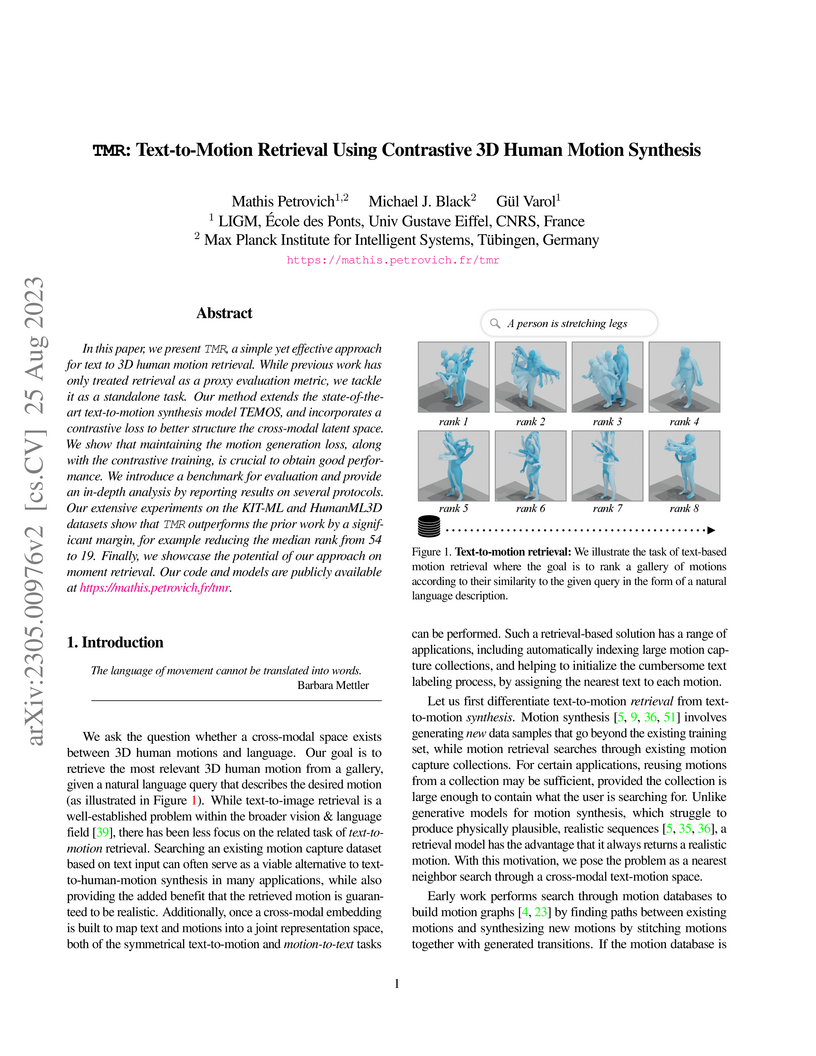

TMR proposes a high-performance system for text-to-3D human motion retrieval, addressing limitations of prior retrieval methods and offering a robust alternative to motion synthesis. It achieves state-of-the-art performance by combining a probabilistic motion synthesis framework with contrastive learning and a novel negative filtering mechanism, while also demonstrating emergent zero-shot moment retrieval capabilities.

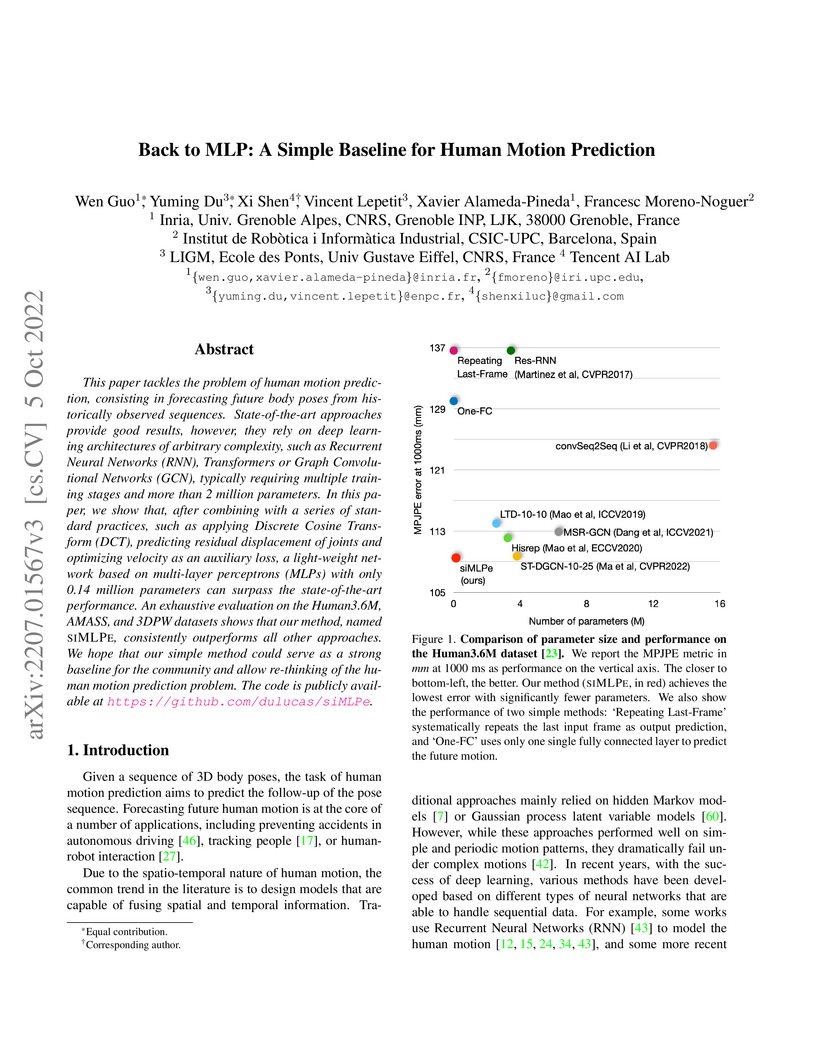

SIMLPE, a lightweight Multi-Layer Perceptron (MLP) network, achieves state-of-the-art performance in human motion prediction by outperforming complex deep learning models with significantly fewer parameters. The research challenges the trend of increasing architectural complexity, demonstrating that simple designs can be highly effective when combined with established practices like DCT and residual learning.

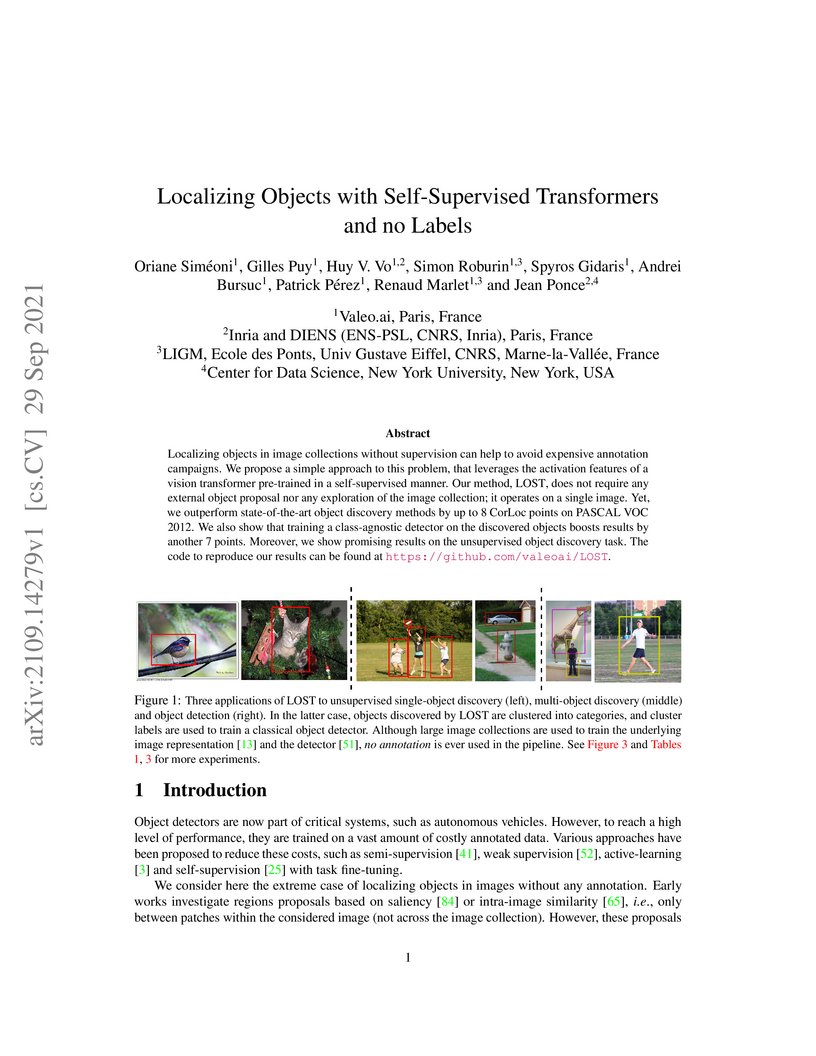

Researchers from Valeo.ai and partner institutions developed LOST, a method that localizes objects in images using self-supervised Vision Transformer features, requiring no human annotations. This approach achieves a CorLoc of 61.9% on PASCAL VOC 2007 `trainval` for single-object discovery and enables training of fully unsupervised class-agnostic and class-aware detectors, outperforming previous unsupervised methods.

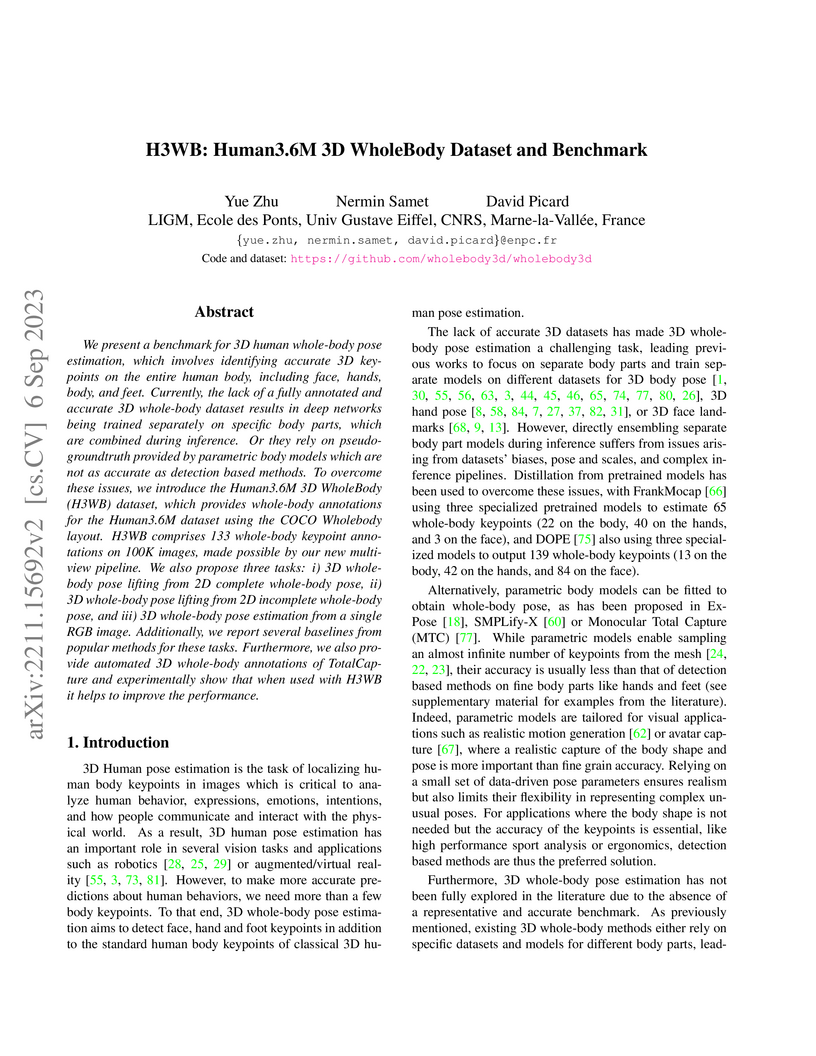

Human3.6M 3D WholeBody (H3WB) is introduced as the first large-scale, high-accuracy benchmark dataset for 3D whole-body pose estimation, featuring 100,000 images with 133 precise 3D keypoints validated at an average 17mm error. It also establishes standardized benchmark tasks and baselines to foster unified model development for comprehensive human understanding.

In this paper, we describe a graph-based algorithm that uses the features obtained by a self-supervised transformer to detect and segment salient objects in images and videos. With this approach, the image patches that compose an image or video are organised into a fully connected graph, where the edge between each pair of patches is labeled with a similarity score between patches using features learned by the transformer. Detection and segmentation of salient objects is then formulated as a graph-cut problem and solved using the classical Normalized Cut algorithm. Despite the simplicity of this approach, it achieves state-of-the-art results on several common image and video detection and segmentation tasks. For unsupervised object discovery, this approach outperforms the competing approaches by a margin of 6.1%, 5.7%, and 2.6%, respectively, when tested with the VOC07, VOC12, and COCO20K datasets. For the unsupervised saliency detection task in images, this method improves the score for Intersection over Union (IoU) by 4.4%, 5.6% and 5.2%. When tested with the ECSSD, DUTS, and DUT-OMRON datasets, respectively, compared to current state-of-the-art techniques. This method also achieves competitive results for unsupervised video object segmentation tasks with the DAVIS, SegTV2, and FBMS datasets.

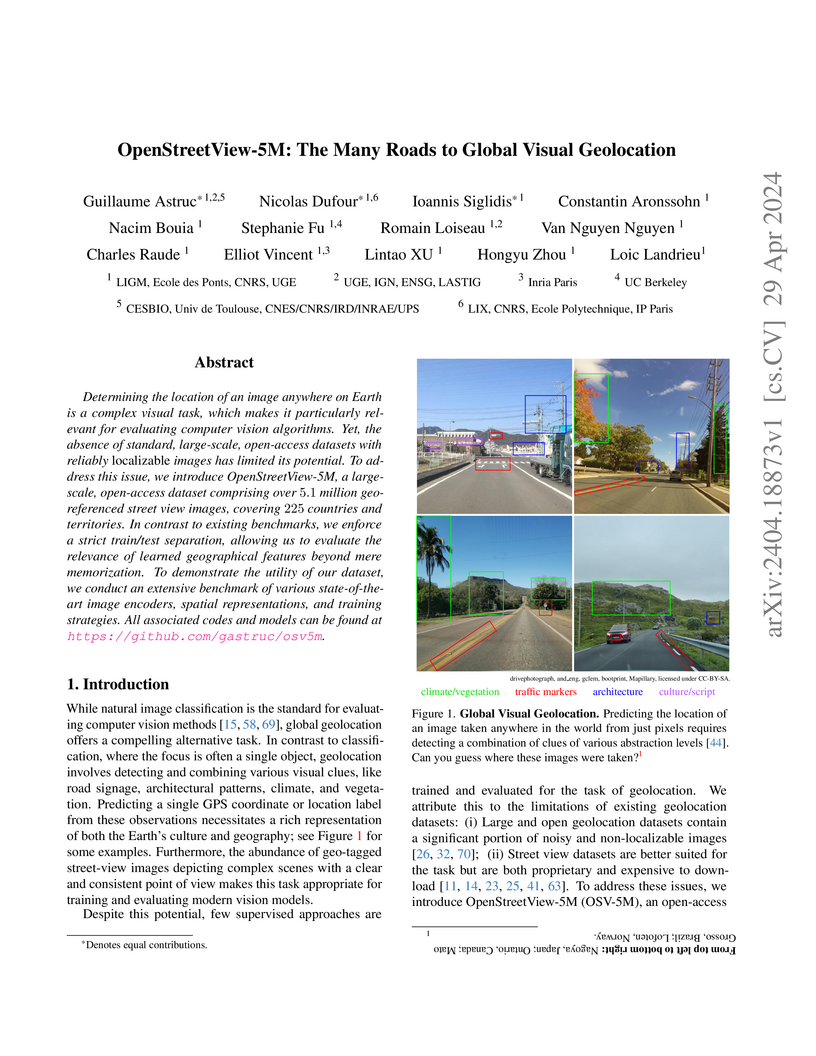

Determining the location of an image anywhere on Earth is a complex visual task, which makes it particularly relevant for evaluating computer vision algorithms. Yet, the absence of standard, large-scale, open-access datasets with reliably localizable images has limited its potential. To address this issue, we introduce OpenStreetView-5M, a large-scale, open-access dataset comprising over 5.1 million geo-referenced street view images, covering 225 countries and territories. In contrast to existing benchmarks, we enforce a strict train/test separation, allowing us to evaluate the relevance of learned geographical features beyond mere memorization. To demonstrate the utility of our dataset, we conduct an extensive benchmark of various state-of-the-art image encoders, spatial representations, and training strategies. All associated codes and models can be found at this https URL.

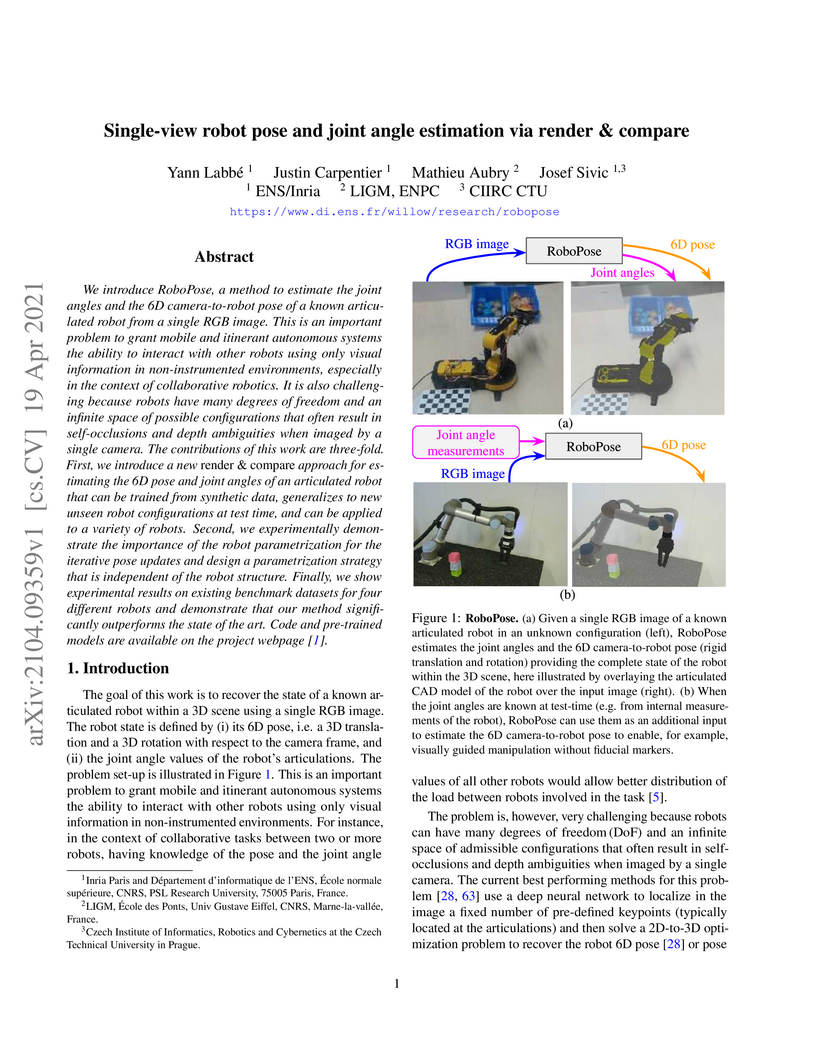

We introduce RoboPose, a method to estimate the joint angles and the 6D

camera-to-robot pose of a known articulated robot from a single RGB image. This

is an important problem to grant mobile and itinerant autonomous systems the

ability to interact with other robots using only visual information in

non-instrumented environments, especially in the context of collaborative

robotics. It is also challenging because robots have many degrees of freedom

and an infinite space of possible configurations that often result in

self-occlusions and depth ambiguities when imaged by a single camera. The

contributions of this work are three-fold. First, we introduce a new render &

compare approach for estimating the 6D pose and joint angles of an articulated

robot that can be trained from synthetic data, generalizes to new unseen robot

configurations at test time, and can be applied to a variety of robots. Second,

we experimentally demonstrate the importance of the robot parametrization for

the iterative pose updates and design a parametrization strategy that is

independent of the robot structure. Finally, we show experimental results on

existing benchmark datasets for four different robots and demonstrate that our

method significantly outperforms the state of the art. Code and pre-trained

models are available on the project webpage

this https URL

UniBench introduces a unified evaluation framework integrating over 50 Vision-Language Model (VLM) benchmarks, providing a standardized tool to assess capabilities and identify limitations. The study evaluates nearly 60 VLMs, revealing that scaling model size or training data offers minimal improvements for visual reasoning and relational understanding, while also exposing surprising underperformance on fundamental tasks like digit recognition.

Our goal is to train a generative model of 3D hand motions, conditioned on natural language descriptions specifying motion characteristics such as handshapes, locations, finger/hand/arm movements. To this end, we automatically build pairs of 3D hand motions and their associated textual labels with unprecedented scale. Specifically, we leverage a large-scale sign language video dataset, along with noisy pseudo-annotated sign categories, which we translate into hand motion descriptions via an LLM that utilizes a dictionary of sign attributes, as well as our complementary motion-script cues. This data enables training a text-conditioned hand motion diffusion model HandMDM, that is robust across domains such as unseen sign categories from the same sign language, but also signs from another sign language and non-sign hand movements. We contribute extensive experimental investigation of these scenarios and will make our trained models and data publicly available to support future research in this relatively new field.

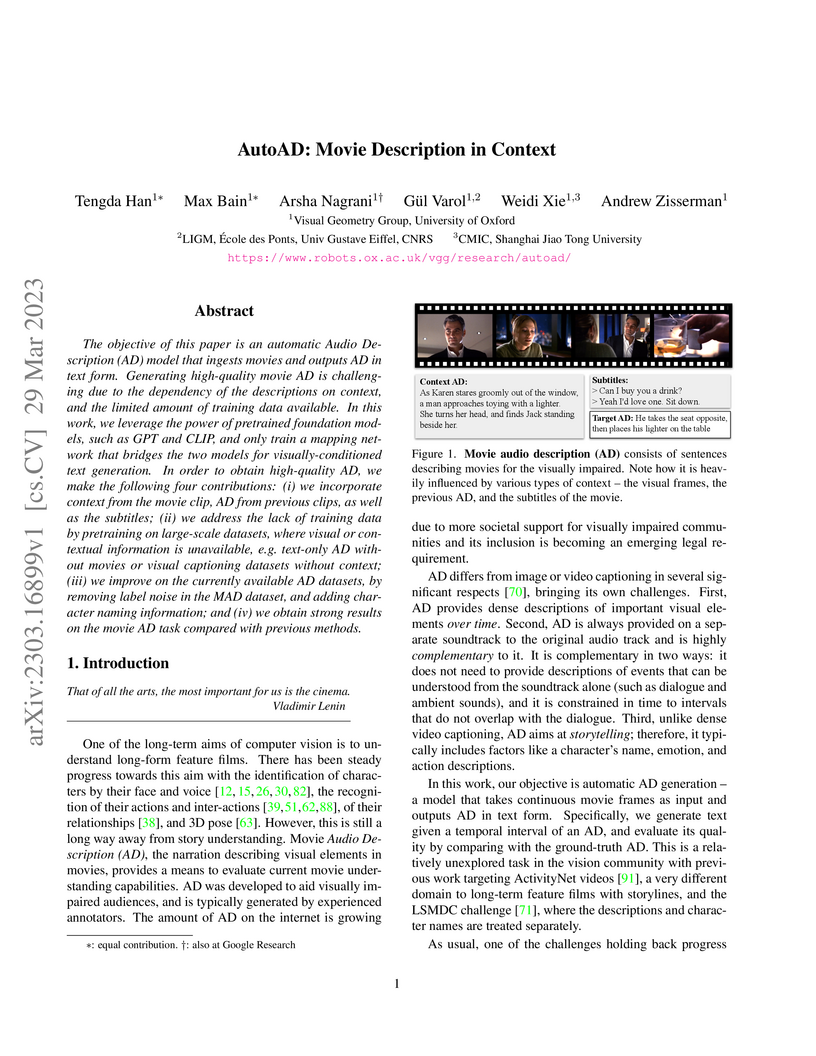

AutoAD, developed by the Visual Geometry Group at the University of Oxford, establishes a system for automatic audio description generation for movies by integrating visual, previous AD, and subtitle context. The model, leveraging frozen foundation models and novel partial-data pretraining, achieves state-of-the-art results on movie AD benchmarks, enhancing accessibility for visually impaired audiences and providing new, denoised datasets.

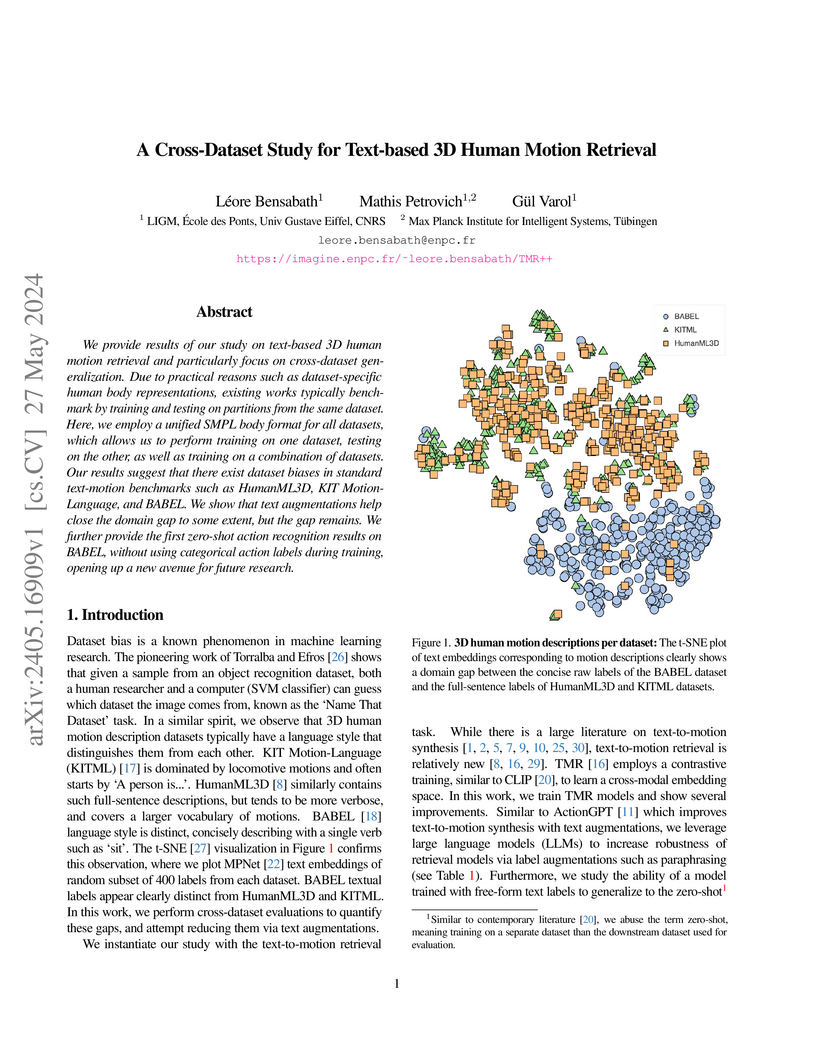

We provide results of our study on text-based 3D human motion retrieval and particularly focus on cross-dataset generalization. Due to practical reasons such as dataset-specific human body representations, existing works typically benchmarkby training and testing on partitions from the same dataset. Here, we employ a unified SMPL body format for all datasets, which allows us to perform training on one dataset, testing on the other, as well as training on a combination of datasets. Our results suggest that there exist dataset biases in standard text-motion benchmarks such as HumanML3D, KIT Motion-Language, and BABEL. We show that text augmentations help close the domain gap to some extent, but the gap remains. We further provide the first zero-shot action recognition results on BABEL, without using categorical action labels during training, opening up a new avenue for future research.

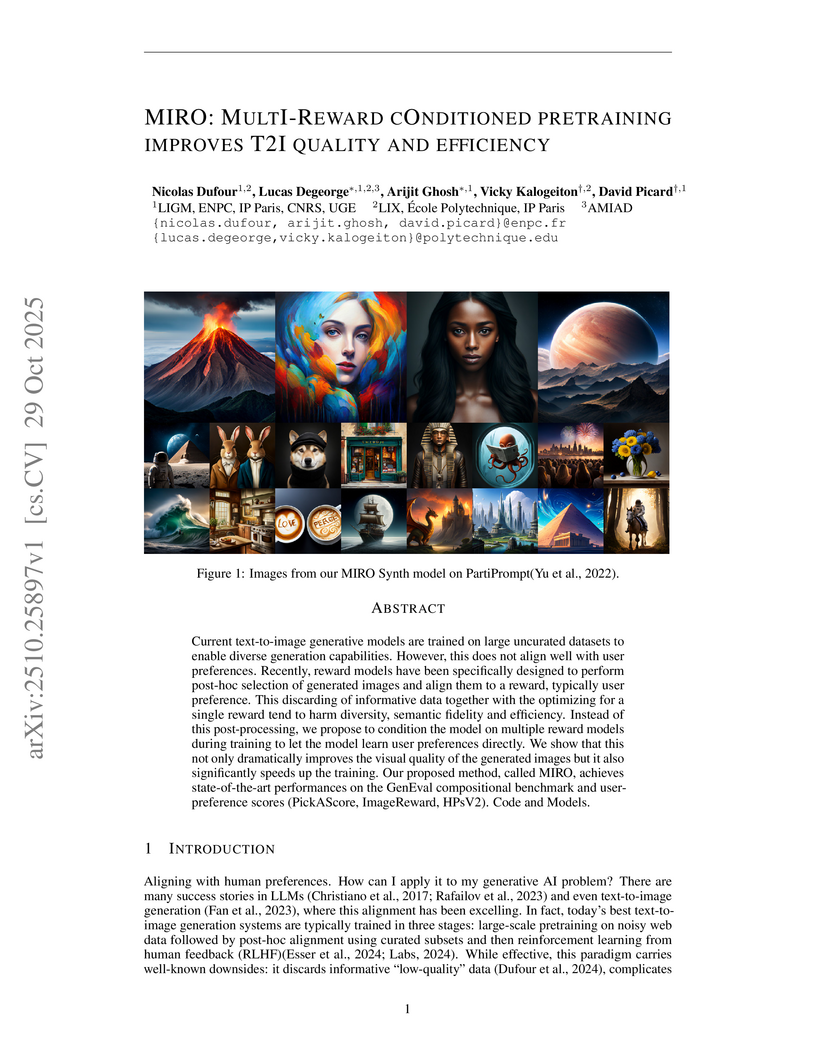

Current text-to-image generative models are trained on large uncurated datasets to enable diverse generation capabilities. However, this does not align well with user preferences. Recently, reward models have been specifically designed to perform post-hoc selection of generated images and align them to a reward, typically user preference. This discarding of informative data together with the optimizing for a single reward tend to harm diversity, semantic fidelity and efficiency. Instead of this post-processing, we propose to condition the model on multiple reward models during training to let the model learn user preferences directly. We show that this not only dramatically improves the visual quality of the generated images but it also significantly speeds up the training. Our proposed method, called MIRO, achieves state-of-the-art performances on the GenEval compositional benchmark and user-preference scores (PickAScore, ImageReward, HPSv2).

sim2art: Accurate Articulated Object Modeling from a Single Video using Synthetic Training Data Only

sim2art: Accurate Articulated Object Modeling from a Single Video using Synthetic Training Data Only

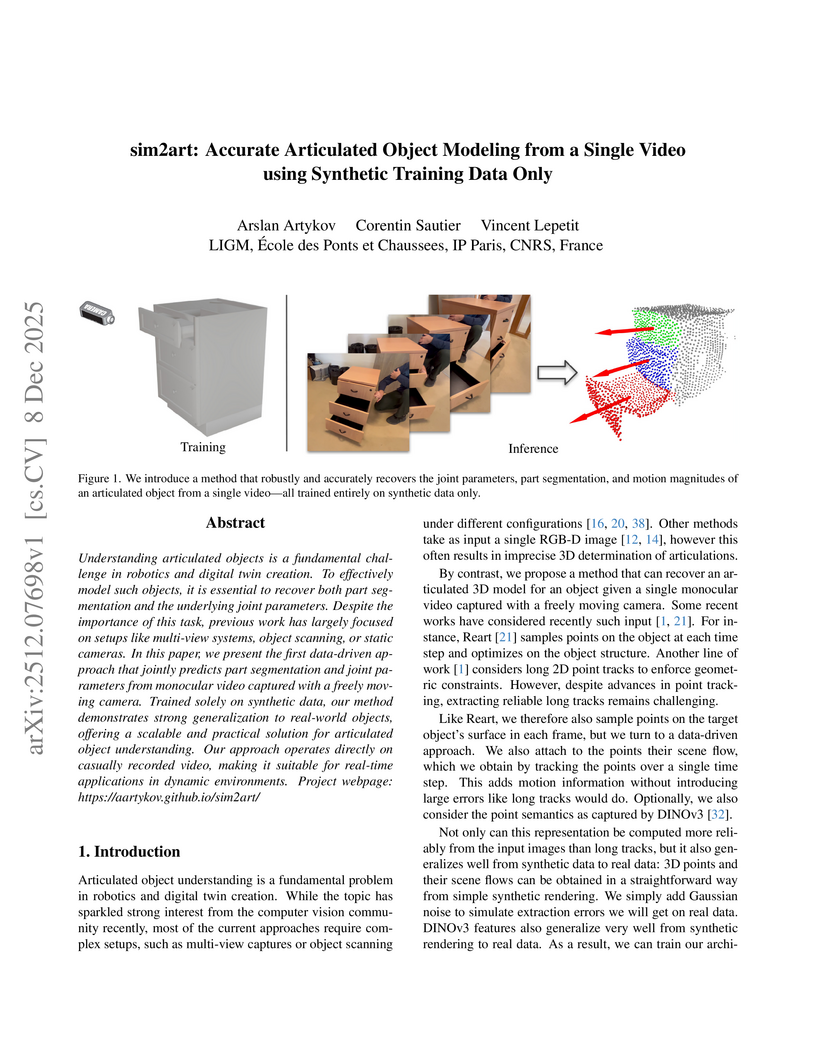

Understanding articulated objects is a fundamental challenge in robotics and digital twin creation. To effectively model such objects, it is essential to recover both part segmentation and the underlying joint parameters. Despite the importance of this task, previous work has largely focused on setups like multi-view systems, object scanning, or static cameras. In this paper, we present the first data-driven approach that jointly predicts part segmentation and joint parameters from monocular video captured with a freely moving camera. Trained solely on synthetic data, our method demonstrates strong generalization to real-world objects, offering a scalable and practical solution for articulated object understanding. Our approach operates directly on casually recorded video, making it suitable for real-time applications in dynamic environments. Project webpage: this https URL

We introduce a general detection-based approach to text line recognition, be

it printed (OCR) or handwritten (HTR), with Latin, Chinese, or ciphered

characters. Detection-based approaches have until now been largely discarded

for HTR because reading characters separately is often challenging, and

character-level annotation is difficult and expensive. We overcome these

challenges thanks to three main insights: (i) synthetic pre-training with

sufficiently diverse data enables learning reasonable character localization

for any script; (ii) modern transformer-based detectors can jointly detect a

large number of instances, and, if trained with an adequate masking strategy,

leverage consistency between the different detections; (iii) once a pre-trained

detection model with approximate character localization is available, it is

possible to fine-tune it with line-level annotation on real data, even with a

different alphabet. Our approach, dubbed DTLR, builds on a completely different

paradigm than state-of-the-art HTR methods, which rely on autoregressive

decoding, predicting character values one by one, while we treat a complete

line in parallel. Remarkably, we demonstrate good performance on a large range

of scripts, usually tackled with specialized approaches. In particular, we

improve state-of-the-art performances for Chinese script recognition on the

CASIA v2 dataset, and for cipher recognition on the Borg and Copiale datasets.

Our code and models are available at this https URL

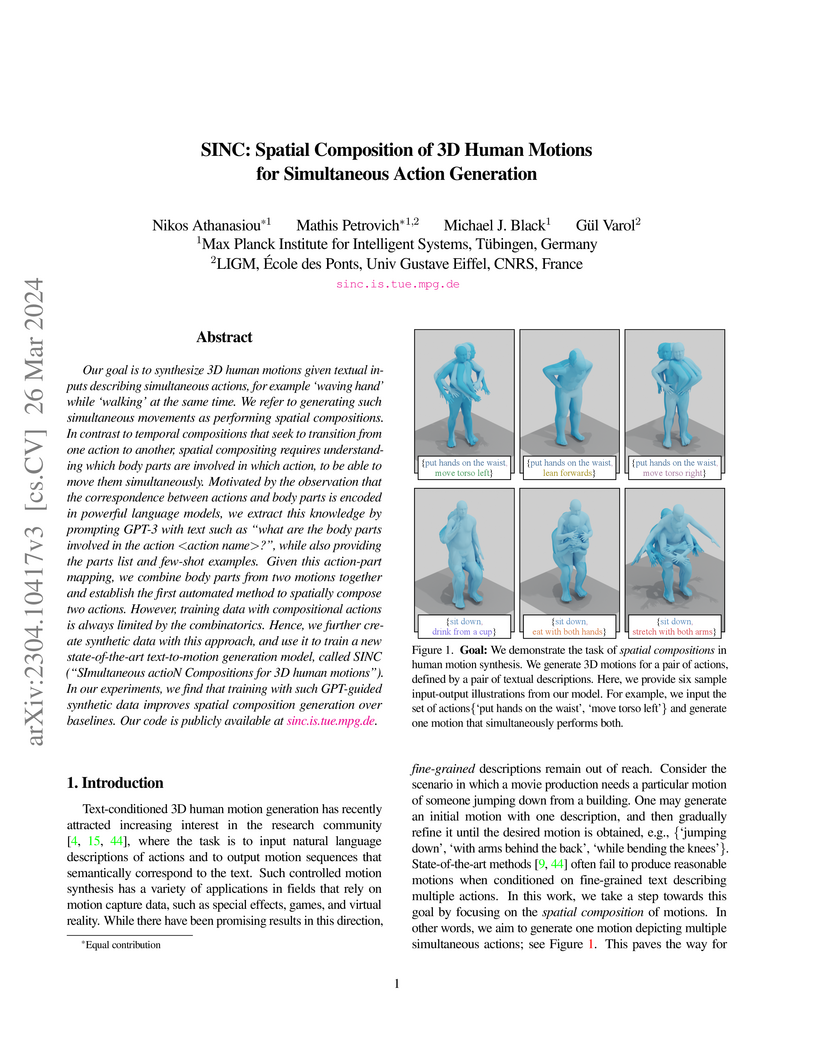

Our goal is to synthesize 3D human motions given textual inputs describing

simultaneous actions, for example 'waving hand' while 'walking' at the same

time. We refer to generating such simultaneous movements as performing 'spatial

compositions'. In contrast to temporal compositions that seek to transition

from one action to another, spatial compositing requires understanding which

body parts are involved in which action, to be able to move them

simultaneously. Motivated by the observation that the correspondence between

actions and body parts is encoded in powerful language models, we extract this

knowledge by prompting GPT-3 with text such as "what are the body parts

involved in the action ?", while also providing the parts list and

few-shot examples. Given this action-part mapping, we combine body parts from

two motions together and establish the first automated method to spatially

compose two actions. However, training data with compositional actions is

always limited by the combinatorics. Hence, we further create synthetic data

with this approach, and use it to train a new state-of-the-art text-to-motion

generation model, called SINC ("SImultaneous actioN Compositions for 3D human

motions"). In our experiments, that training with such GPT-guided synthetic

data improves spatial composition generation over baselines. Our code is

publicly available at this https URL

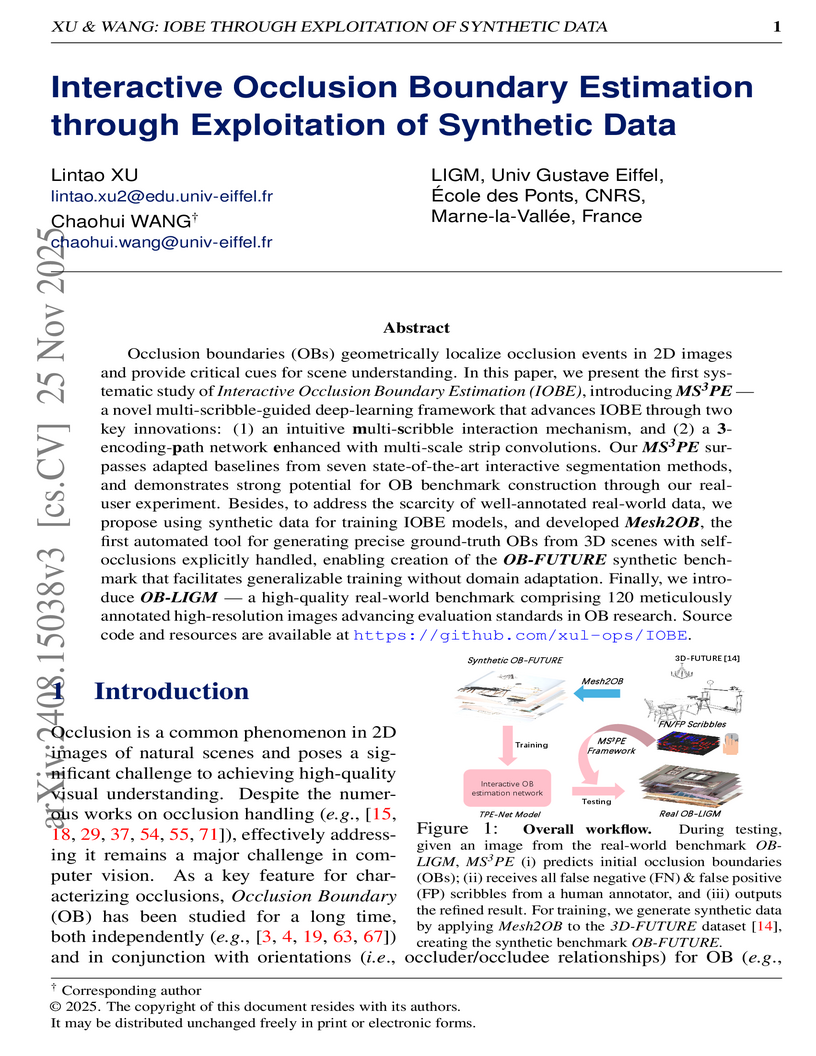

Occlusion boundaries (OBs) geometrically localize occlusion events in 2D images and provide critical cues for scene understanding. In this paper, we present the first systematic study of Interactive Occlusion Boundary Estimation (IOBE), introducing MS\textsuperscript{3}PE, a novel multi-scribble-guided deep-learning framework that advances IOBE through two key innovations: (1) an intuitive multi-scribble interaction mechanism, and (2) a 3-encoding-path network enhanced with multi-scale strip convolutions. Our MS\textsuperscript{3}PE surpasses adapted baselines from seven state-of-the-art interactive segmentation methods, and demonstrates strong potential for OB benchmark construction through our real-user experiment. Besides, to address the scarcity of well-annotated real-world data, we propose using synthetic data for training IOBE models, and developed Mesh2OB, the first automated tool for generating precise ground-truth OBs from 3D scenes with self-occlusions explicitly handled, enabling creation of the OB-FUTURE synthetic benchmark that facilitates generalizable training without domain adaptation. Finally, we introduce OB-LIGM, a high-quality real-world benchmark comprising 120 meticulously annotated high-resolution images advancing evaluation standards in OB research. Source code and resources are available at this https URL.

The generation of LiDAR scans is a growing topic with diverse applications to autonomous driving. However, scan generation remains challenging, especially when compared to the rapid advancement of image and 3D object generation. We consider the task of LiDAR object generation, requiring models to produce 3D objects as viewed by a LiDAR scan. It focuses LiDAR scan generation on a key aspect of scenes, the objects, while also benefiting from advancements in 3D object generative methods. We introduce a novel diffusion-based model to produce LiDAR point clouds of dataset objects, including intensity, and with an extensive control of the generation via conditioning information. Our experiments on nuScenes and KITTI-360 show the quality of our generations measured with new 3D metrics developed to suit LiDAR objects. The code is available at this https URL.

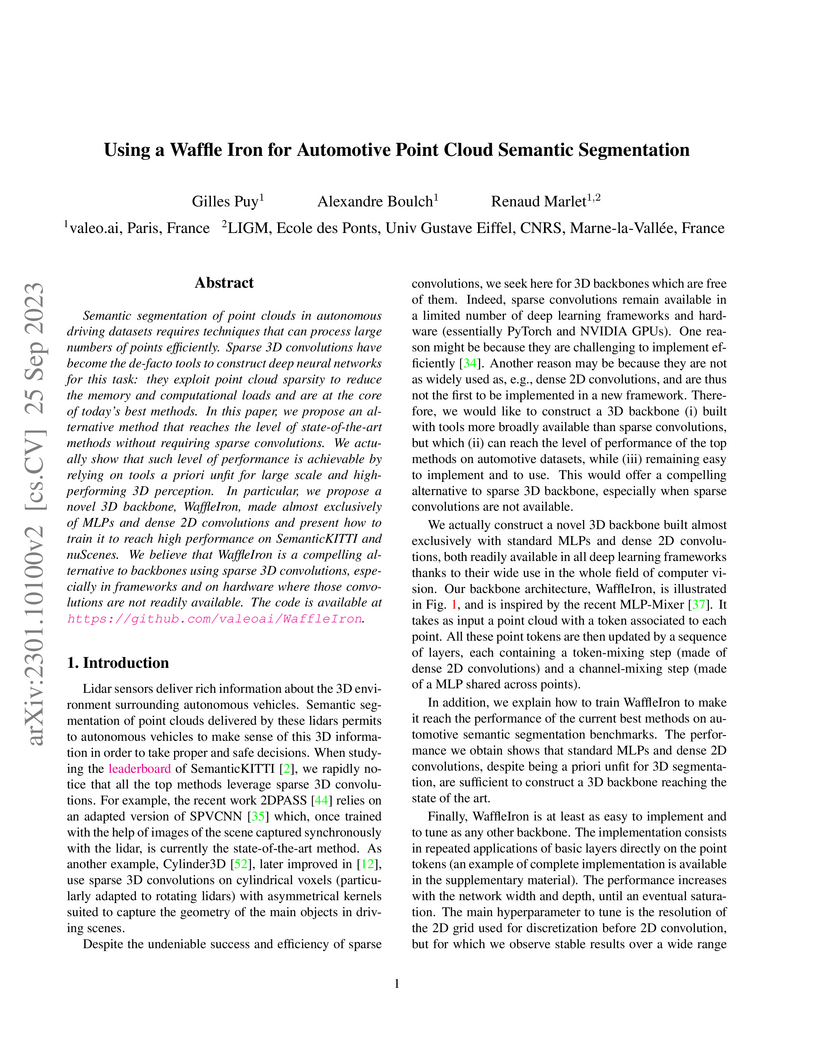

Semantic segmentation of point clouds in autonomous driving datasets requires techniques that can process large numbers of points efficiently. Sparse 3D convolutions have become the de-facto tools to construct deep neural networks for this task: they exploit point cloud sparsity to reduce the memory and computational loads and are at the core of today's best methods. In this paper, we propose an alternative method that reaches the level of state-of-the-art methods without requiring sparse convolutions. We actually show that such level of performance is achievable by relying on tools a priori unfit for large scale and high-performing 3D perception. In particular, we propose a novel 3D backbone, WaffleIron, made almost exclusively of MLPs and dense 2D convolutions and present how to train it to reach high performance on SemanticKITTI and nuScenes. We believe that WaffleIron is a compelling alternative to backbones using sparse 3D convolutions, especially in frameworks and on hardware where those convolutions are not readily available.

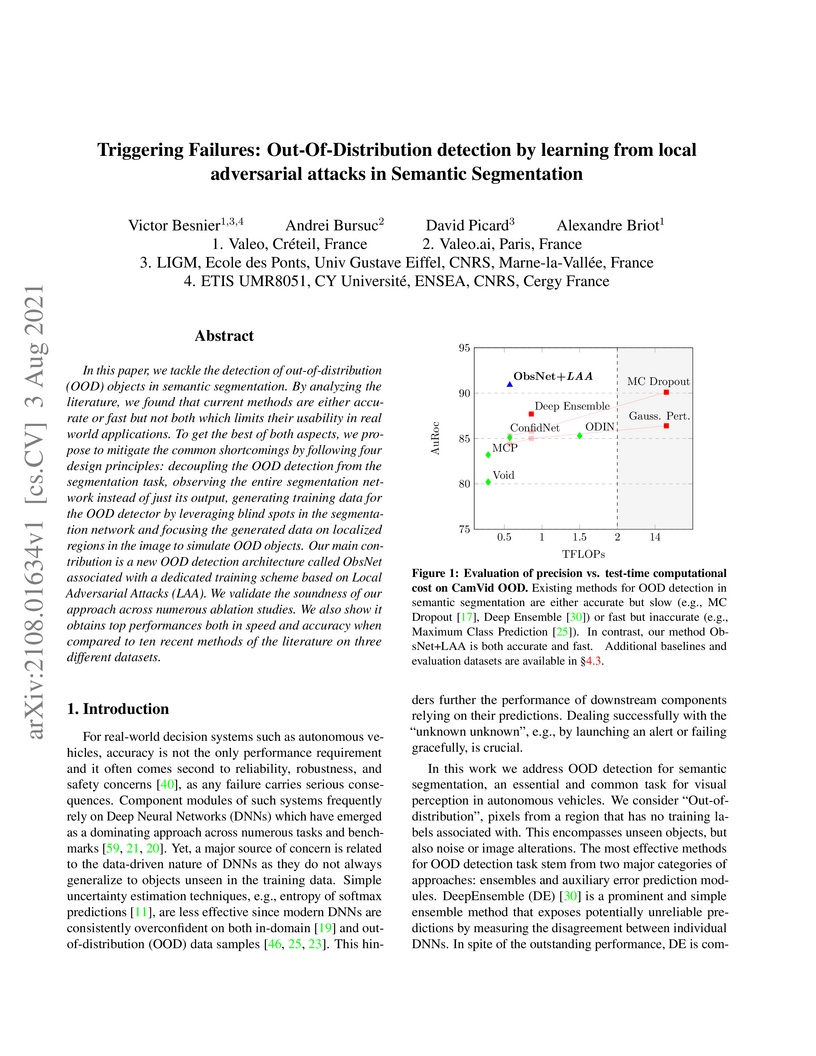

Researchers from Valeo and academic institutions developed ObsNet with Local Adversarial Attacks for accurate and fast Out-of-Distribution (OOD) detection in semantic segmentation, addressing the challenge of unknown objects in real-time safety-critical applications. This method significantly outperforms existing baselines in OOD detection metrics while being up to 21 times faster than ensemble methods.

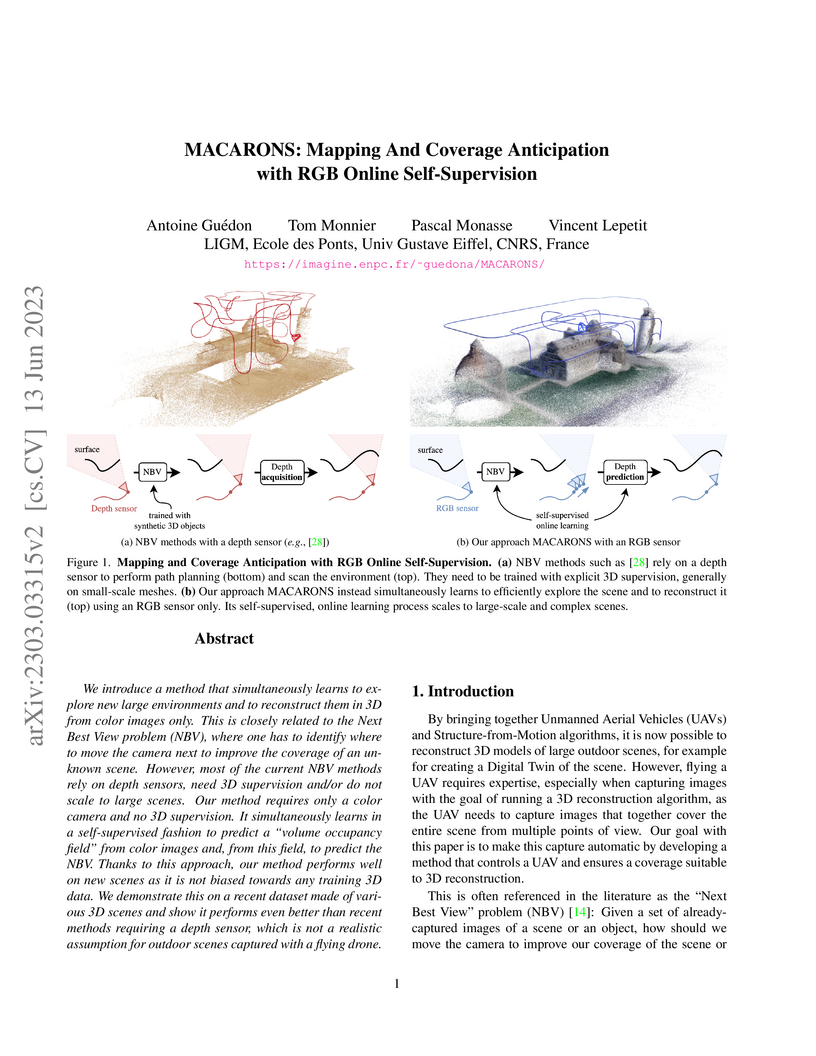

We introduce a method that simultaneously learns to explore new large

environments and to reconstruct them in 3D from color images only. This is

closely related to the Next Best View problem (NBV), where one has to identify

where to move the camera next to improve the coverage of an unknown scene.

However, most of the current NBV methods rely on depth sensors, need 3D

supervision and/or do not scale to large scenes. Our method requires only a

color camera and no 3D supervision. It simultaneously learns in a

self-supervised fashion to predict a "volume occupancy field" from color images

and, from this field, to predict the NBV. Thanks to this approach, our method

performs well on new scenes as it is not biased towards any training 3D data.

We demonstrate this on a recent dataset made of various 3D scenes and show it

performs even better than recent methods requiring a depth sensor, which is not

a realistic assumption for outdoor scenes captured with a flying drone.

There are no more papers matching your filters at the moment.