NASA Jet Propulsion Laboratory

01 Sep 2025

CNRS, California Institute of Technology, University of Cambridge, SLAC National Accelerator Laboratory, Chinese Academy of Sciences, University of Oxford, the University of Tokyo, Stanford University, Scuola Normale Superiore, University of Copenhagen, University of Arizona, Sorbonne Université, Princeton University, University of Geneva, NASA Jet Propulsion Laboratory, KTH Royal Institute of Technology, CNES, Centro de Astrobiología (CAB), University of California, Santa Cruz, INAF – Osservatorio Astronomico di Roma, UPS, Università di Firenze, European Space Agency (ESA), Cosmic Dawn Center (DAWN), Université de Toulouse, Max Planck Institut für Astronomie, Sapienza Università di Roma, INAF – Osservatorio Astrofisico di Arcetri, University of Texas, Austin

Researchers performed the first direct, dynamical measurement of a black hole mass in a 'Little Red Dot' (Abell2744-QSO1) at z=7.04 using JWST NIRSpec IFS data, confirming the validity of single-epoch virial mass estimates for these early-universe objects and revealing a black hole significantly overmassive relative to its host galaxy's stellar mass.

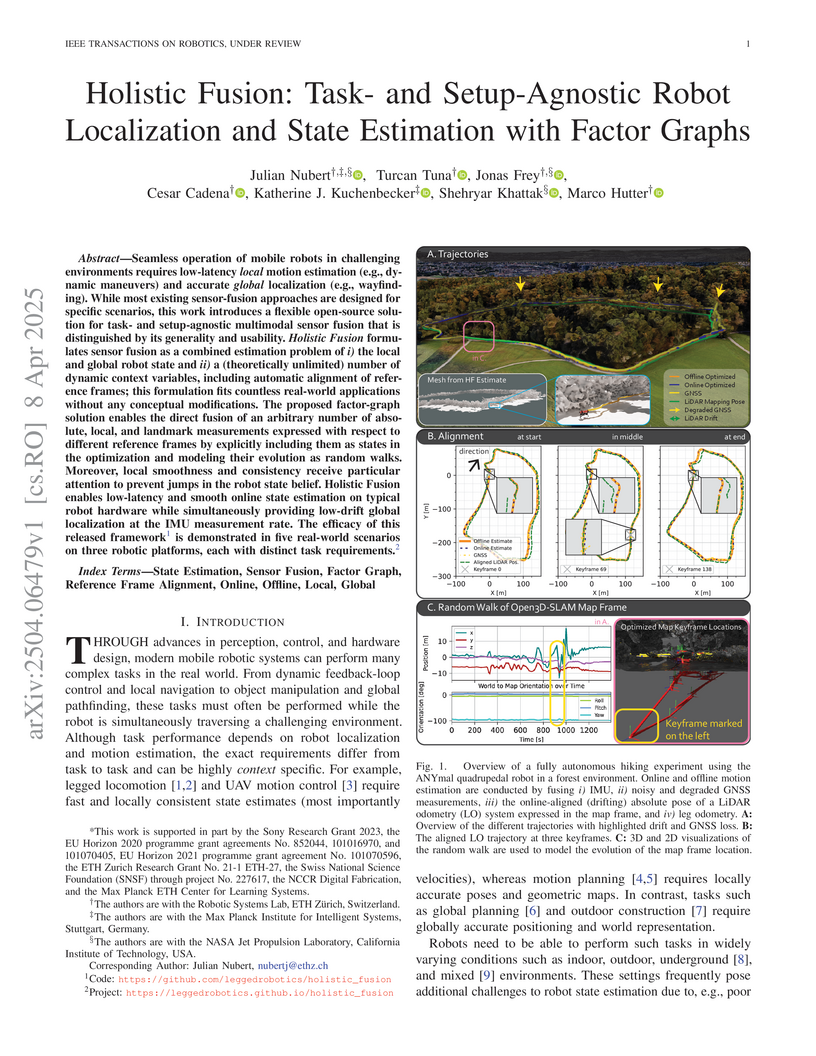

Holistic Fusion: Task- and Setup-Agnostic Robot Localization and State Estimation with Factor Graphs

A factor graph-based framework enables task-agnostic robot state estimation by jointly optimizing local and global states while automatically aligning multiple reference frames, demonstrating real-time performance across diverse platforms (ANYmal, RACER, HEAP) and environments while handling sensor drift through random walk modeling.
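As a minimal sketch of jointly estimating a local state together with a drifting offset modeled as a random walk, the toy below stacks odometry, global-position, random-walk, and prior factors into one whitened 1-D linear least-squares problem. The function name, noise values, and 1-D setup are illustrative assumptions, not the framework's formulation, which handles full poses, multiple reference frames, and nonlinear factors.

```python
import numpy as np

def solve_drift_graph(odom, gnss, sigma_odom=0.1, sigma_gnss=1.0, sigma_rw=0.01):
    """Jointly estimate 1-D positions x_0..x_{n-1} and a drifting offset b_0..b_{n-1}.

    Hypothetical toy model (not the paper's formulation):
      odometry factor     x_{k+1} - x_k   = odom[k]
      global factor       x_k + b_k       = gnss[k]
      random-walk factor  b_{k+1} - b_k   = 0   (drift modeled as a random walk)
      prior factor        x_0             = 0   (removes the x/b gauge ambiguity)
    """
    n = len(gnss)
    rows, rhs = [], []

    def add(coeffs, value, sigma):
        row = np.zeros(2 * n)          # unknowns: [x_0..x_{n-1}, b_0..b_{n-1}]
        for idx, c in coeffs:
            row[idx] = c
        rows.append(row / sigma)       # whiten each factor by its standard deviation
        rhs.append(value / sigma)

    add([(0, 1.0)], 0.0, 1e-3)
    for k in range(n - 1):
        add([(k + 1, 1.0), (k, -1.0)], odom[k], sigma_odom)
        add([(n + k + 1, 1.0), (n + k, -1.0)], 0.0, sigma_rw)
    for k in range(n):
        add([(k, 1.0), (n + k, 1.0)], gnss[k], sigma_gnss)

    sol, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return sol[:n], sol[n:]
```

With noise-free inputs (positions 0 to 4 and a constant 0.5 offset on the global measurements), the solver should recover both the trajectory and the offset.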

We present the status and goals of the readout electronics system we are developing to support the detector arrays in the coronagraph instrument on the NASA Habitable Worlds Observatory (HWO) mission, currently in development. HWO aims to revolutionize exoplanet exploration by performing direct imaging and spectroscopy of 25 or more habitable exoplanets, and to address a broad range of astrophysics science questions as well. As we show in this paper, exoplanet yield depends critically on the detector dark count rate, so the ambitious goals of HWO require arrays of single-photon, energy-resolving detectors. We argue that Kinetic Inductance Detectors (KIDs) are best suited to meet these requirements. To support the detectors required for HWO and future far-IR missions at the required power consumption and detector count, we are developing a radiation-tolerant, reconfigurable readout system for both imaging and energy-resolving single-photon KID arrays. We leverage an existing RFSoC-based system we built for NASA balloons, which consumes 30 W and reads out 2000-4000 detectors (i.e., about 7.5-15 mW/pixel), and move to a radiation-tolerant Kintex UltraScale FPGA to bring low-power, wide-bandwidth readout to a space-qualified platform for the first time. This is a significant improvement over previous spaceflight systems and delivers what NASA's future needs require: ~100,000 pixels with less than 1 kW total power consumption. Overall, the system we are developing is a significant step forward in capability and retires many key risks for the Habitable Worlds Observatory mission.
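The per-pixel power figures follow from simple division, and the same arithmetic shows what the stated HWO target implies. The snippet below is only a back-of-the-envelope check using the numbers quoted in the abstract; the helper name is hypothetical.

```python
def mw_per_pixel(total_watts, n_pixels):
    """Readout power per detector, in milliwatts."""
    return 1000.0 * total_watts / n_pixels

balloon_low = mw_per_pixel(30, 4000)          # 30 W shared by 4000 detectors
balloon_high = mw_per_pixel(30, 2000)         # 30 W shared by 2000 detectors
target_ceiling = mw_per_pixel(1000, 100_000)  # < 1 kW for ~100,000 pixels
print(balloon_low, balloon_high, target_ceiling)  # 7.5 15.0 10.0
```

The target of about 100,000 pixels under 1 kW works out to at most 10 mW/pixel, which lies inside the 7.5-15 mW/pixel range of the balloon system, toward its lower end.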

06 Feb 2023

We present a method for solving the coverage problem with the objective of autonomously exploring an unknown environment under mission time constraints. Here, the robot is tasked with planning a path over a horizon such that the accumulated area swept out by its sensor footprint is maximized. Because this problem exhibits a diminishing returns property known as submodularity, we choose to formulate it as a tree-based sequential decision making process. This formulation allows us to evaluate the effects of the robot's actions on future world coverage states, while simultaneously accounting for traversability risk and the dynamic constraints of the robot. To quickly find near-optimal solutions, we propose an effective approximation to the coverage sensor model which adapts to the local environment. Our method was extensively tested across various complex environments and served as the local exploration algorithm for a competing entry in the DARPA Subterranean Challenge.
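Submodularity here means that the extra area a sensor footprint adds can only shrink as more area is already covered. The toy check below illustrates that diminishing-returns property with footprints modeled as sets of grid cells; the grid, the footprints, and the helper names are hypothetical and not part of the method's sensor model.

```python
def coverage(footprints):
    """Covered area = number of distinct grid cells swept by the chosen footprints."""
    covered = set()
    for fp in footprints:
        covered |= fp
    return len(covered)

def marginal_gain(chosen, new_fp):
    """Extra area gained by adding new_fp to an already chosen set of footprints."""
    return coverage(chosen + [new_fp]) - coverage(chosen)

# Hypothetical 1-D grid footprints (cell indices), for illustration only.
a = {0, 1, 2}
b = {2, 3, 4}
c = {3, 4, 5}

small = [a]
large = [a, b]                        # a superset of `small`
gain_small = marginal_gain(small, c)  # c adds cells {3, 4, 5}
gain_large = marginal_gain(large, c)  # c adds only cell {5}
print(gain_small, gain_large)         # 3 1
```

Because the gain from footprint `c` drops once `b` is already covered, a planner that reasons over future coverage states (as in the tree-based formulation) avoids double-counting overlapping sweeps.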

21 Aug 2025

California Institute of Technology, NVIDIA, NASA Goddard Space Flight Center, IBM Research, Princeton University, NASA Jet Propulsion Laboratory, University of Colorado Boulder, University of Alabama in Huntsville, Georgia State University, NASA Marshall Space Flight Center, Southwest Research Institute, SETI Institute, Universities Space Research Association, NASA Science Mission Directorate

Heliophysics is central to understanding and forecasting space weather events and solar activity. Despite decades of high-resolution observations from the Solar Dynamics Observatory (SDO), most models remain task-specific and constrained by scarce labeled data, limiting their capacity to generalize across solar phenomena. We introduce Surya, a 366M parameter foundation model for heliophysics designed to learn general-purpose solar representations from multi-instrument SDO observations, including eight Atmospheric Imaging Assembly (AIA) channels and five Helioseismic and Magnetic Imager (HMI) products. Surya employs a spatiotemporal transformer architecture with spectral gating and long-short range attention, pretrained on high-resolution solar image forecasting tasks and further optimized through autoregressive rollout tuning. Zero-shot evaluations demonstrate its ability to forecast solar dynamics and flare events, while downstream fine-tuning with parameter-efficient Low-Rank Adaptation (LoRA) shows strong performance on solar wind forecasting, active region segmentation, solar flare forecasting, and EUV spectra. Surya is the first foundation model in heliophysics that uses time advancement as a pretext task on full-resolution SDO data. Its novel architecture and performance suggest that the model is able to learn the underlying physics behind solar evolution.
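Low-Rank Adaptation keeps a pretrained weight matrix W frozen and learns a low-rank update, so the adapted layer computes W x + (alpha/r) B A x, with B of shape d_out × r and A of shape r × d_in. A minimal NumPy sketch of a single adapted linear layer follows; it uses the generic LoRA recipe (B initialized to zero so fine-tuning starts from the pretrained model) and is not Surya's implementation. The class name and initialization scale are assumptions.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                                # frozen pretrained weights
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, rank))                    # trainable up-projection, zero-init
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def n_trainable(self):
        """Only A and B are updated during fine-tuning."""
        return self.A.size + self.B.size
```

For a 64 × 128 layer with rank 4, the adapter has 4·128 + 64·4 = 768 trainable parameters versus 8,192 in W, which is what makes this style of fine-tuning parameter-efficient.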

NASA JPL researchers developed ROSA, an AI agent that enables natural language interaction with complex robotic systems by translating conversational commands into executable actions within the Robot Operating System. ROSA successfully demonstrated its ability to abstract ROS complexities, handle errors gracefully, and execute multi-step tasks across diverse robotic platforms including the NeBula-Spot, EELS, and Nova Carter robots, making advanced robotics more accessible for users.

Modeling dynamics is often the first step to making a vehicle autonomous. While on-road autonomous vehicles have been extensively studied, off-road vehicles pose many challenging modeling problems. An off-road vehicle encounters highly complex and difficult-to-model terrain/vehicle interactions, and has complex vehicle dynamics of its own. These complexities can create challenges for effective high-speed control and planning. In this paper, we introduce a framework for multistep dynamics prediction that explicitly handles the accumulation of modeling error and remains scalable for sampling-based controllers. Our method uses a specially initialized Long Short-Term Memory (LSTM) network over a limited time horizon as the learned component in a hybrid model to predict the dynamics of a four-seat all-terrain vehicle (Polaris S4 1000 RZR) in two distinct environments. By having the LSTM predict only over a fixed time horizon, we avoid the need for long-term stability, which is often a challenge when training recurrent neural networks. Our framework is flexible, as it only requires odometry information for labels. Through extensive experimentation, we show that our method can predict millions of possible trajectories in real time, with a time horizon of five seconds, in challenging off-road driving scenarios.
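Rolling out a hybrid (physics plus learned) model over a fixed horizon for many candidate control sequences at once can be sketched as a batched loop. The version below assumes a kinematic bicycle model as the physics part and a hypothetical `residual` callable standing in for the learned LSTM correction; the state layout, the `wheelbase` parameter, and the choice of physics model are illustrative assumptions, not the paper's vehicle model.

```python
import numpy as np

def rollout(state0, controls, dt, wheelbase, residual=None):
    """Batched fixed-horizon rollout: kinematic bicycle step plus optional learned correction.

    state0:   (B, 4) array of [x, y, yaw, speed]
    controls: (B, H, 2) array of [accel, steer] for each of H steps
    residual: hypothetical callable (state, control) -> (B, 4) correction, e.g. a learned model
    Returns a (B, H + 1, 4) array of predicted states.
    """
    B, H, _ = controls.shape
    traj = np.empty((B, H + 1, 4))
    traj[:, 0] = state0
    s = state0.astype(float).copy()
    for k in range(H):
        accel, steer = controls[:, k, 0], controls[:, k, 1]
        x, y, yaw, v = s.T                       # each has shape (B,)
        nominal = np.stack([
            x + v * np.cos(yaw) * dt,
            y + v * np.sin(yaw) * dt,
            yaw + v / wheelbase * np.tan(steer) * dt,
            v + accel * dt,
        ], axis=1)
        s = nominal if residual is None else nominal + residual(s, controls[:, k])
        traj[:, k + 1] = s
    return traj
```

Because every step is vectorized over the batch dimension, the cost grows linearly in the number of sampled trajectories, and the fixed horizon H bounds how far modeling error can accumulate. For instance, 3 vehicles at 1 m/s with zero controls for 5 steps of 1 s should each advance 5 m along x.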

02 Oct 2025

The presence of NH3-bearing components on icy planetary bodies has important implications for their geology and potential habitability. Here, I report the detection of a characteristic NH3 absorption feature at 2.20 ± 0.02 μm on Europa, identified in an observation from the Galileo Near Infrared Mapping Spectrometer. Spectral modeling and band position indicate that NH3-hydrate and NH4-chloride are the most plausible candidates. Spatial correlation between the detected ammonia signatures and Europa's microchaos, linear, and band geologic units suggests emplacement from the deep or shallow subsurface. I posit that NH3-bearing materials were transported to the surface via effusive cryovolcanism or similar mechanisms during Europa's recent geological past. The presence of ammoniated compounds implies a thinner ice shell (Spohn & Schubert, 2003) and a thicker, chemically reduced, high-pH subsurface ocean on Europa (Hand et al., 2009). With the detection of NH3-bearing components, this study presents the first evidence of a nitrogen-bearing species on Europa, an observation of astrobiological significance given nitrogen's essential role in the chemistry of life.

Robust Verification of Controllers under State Uncertainty via Hamilton-Jacobi Reachability Analysis

As perception-based controllers for autonomous systems become increasingly popular in the real world, it is important that we can formally verify their safety and performance despite perceptual uncertainty. Unfortunately, the verification of such systems remains challenging, largely due to the complexity of the controllers, which are often nonlinear, nonconvex, learning-based, and/or black-box. Prior works propose verification algorithms that are based on approximate reachability methods, but they often restrict the class of controllers and systems that can be handled or result in overly conservative analyses. Hamilton-Jacobi (HJ) reachability analysis is a popular formal verification tool for general nonlinear systems that can compute optimal reachable sets under worst-case system uncertainties; however, its application to perception-based systems is currently underexplored. In this work, we propose RoVer-CoRe, a framework for the Robust Verification of Controllers via HJ Reachability. To the best of our knowledge, RoVer-CoRe is the first HJ reachability-based framework for the verification of perception-based systems under perceptual uncertainty. Our key insight is to concatenate the system controller, observation function, and the state estimation modules to obtain an equivalent closed-loop system that is readily compatible with existing reachability frameworks. Within RoVer-CoRe, we propose novel methods for formal safety verification and robust controller design. We demonstrate the efficacy of the framework in case studies involving aircraft taxiing and NN-based rover navigation. Code is available at the link in the footnote.
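The key step described above, folding the controller, observation function, and state estimator into one closed-loop system, amounts to function composition. The sketch below is an illustration under stated assumptions, not RoVer-CoRe's code: `f`, `h`, `estimator`, and `controller` are hypothetical callables, and the perceptual error `d` becomes the only external input, which is the form an HJ reachability solver can treat as a worst-case disturbance.

```python
from typing import Callable

def make_closed_loop(
    f: Callable,           # plant dynamics f(x, u) -> dx/dt
    h: Callable,           # observation h(x, d) with perceptual error d
    estimator: Callable,   # state estimate from the observation, estimator(y) -> x_hat
    controller: Callable,  # control law controller(x_hat) -> u
) -> Callable:
    """Return F(x, d): closed-loop dynamics driven only by the true state and the error d."""
    def closed_loop(x, d):
        y = h(x, d)              # what the perception stack sees
        x_hat = estimator(y)     # what the controller believes
        u = controller(x_hat)    # what the controller commands
        return f(x, u)           # how the true state actually evolves
    return closed_loop

# Scalar example: integrator plant, additive perception error, proportional control.
F = make_closed_loop(
    f=lambda x, u: u,
    h=lambda x, d: x + d,
    estimator=lambda y: y,
    controller=lambda x_hat: -2.0 * x_hat,
)
```

In this scalar example the composed system is F(x, d) = -2(x + d), so a bounded perception error d directly shifts the closed-loop equilibrium; a reachability analysis would explore the worst-case d within its bound.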

06 Oct 2025

Contraction theory is an analytical tool to study differential dynamics of a non-autonomous (i.e., time-varying) nonlinear system under a contraction metric defined with a uniformly positive definite matrix, the existence of which results in a necessary and sufficient characterization of incremental exponential stability of multiple solution trajectories with respect to each other. By using a squared differential length as a Lyapunov-like function, its nonlinear stability analysis boils down to finding a suitable contraction metric that satisfies a stability condition expressed as a linear matrix inequality, indicating that many parallels can be drawn between well-known linear systems theory and contraction theory for nonlinear systems. Furthermore, contraction theory takes advantage of a superior robustness property of exponential stability used in conjunction with the comparison lemma. This yields much-needed safety and stability guarantees for neural network-based control and estimation schemes, without resorting to a more involved method of using uniform asymptotic stability for input-to-state stability. Such distinctive features permit the systematic construction of a contraction metric via convex optimization, thereby obtaining an explicit exponential bound on the distance between a time-varying target trajectory and solution trajectories perturbed externally due to disturbances and learning errors. The objective of this paper is, therefore, to present a tutorial overview of contraction theory and its advantages in nonlinear stability analysis of deterministic and stochastic systems, with an emphasis on deriving formal robustness and stability guarantees for various learning-based and data-driven automatic control methods. In particular, we provide a detailed review of techniques for finding contraction metrics and associated control and estimation laws using deep neural networks.
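In the simplest case, a linear time-invariant system dx/dt = A x with a constant metric M ≻ 0, the contraction condition reduces to the linear matrix inequality AᵀM + MA + 2αM ⪯ 0, which guarantees that the squared differential length δxᵀMδx decays at rate 2α. The check below tests that inequality numerically through eigenvalues. It is an illustrative special case only (the general condition involves the Jacobian ∂f/∂x and the time derivative of M), and the function name is hypothetical.

```python
import numpy as np

def satisfies_contraction_lmi(A, M, alpha):
    """Check A^T M + M A + 2*alpha*M <= 0 (negative semidefinite) for a constant metric M."""
    if np.any(np.linalg.eigvalsh(M) <= 0):
        raise ValueError("M must be positive definite")
    lhs = A.T @ M + M @ A + 2.0 * alpha * M
    lhs = 0.5 * (lhs + lhs.T)            # symmetrize against round-off
    return bool(np.max(np.linalg.eigvalsh(lhs)) <= 1e-12)

A = np.array([[-1.0, 2.0],
              [0.0, -3.0]])
M = np.eye(2)
```

For this A and M = I, the eigenvalues of A + Aᵀ are -4 ± 2√2, so the inequality holds with α = 0.5 but fails with α = 1: trajectories contract toward each other in the identity metric at a guaranteed rate of 0.5, but not 1.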

20 Oct 2025

California Institute of Technology, Aristotle University of Thessaloniki, NASA Jet Propulsion Laboratory, University of Virginia, Chalmers University of Technology, University of Groningen, Instituto de Astrofísica de Andalucía, IAA-CSIC, Universidad de La Laguna (ULL), Instituto de Astrofísica de Canarias (IAC), INAF – Palermo Astronomical Observatory

Unlocking the atmospheres of sub-Neptunes is among JWST's major achievements, yet such observations demand complex analyses that strongly affect interpretations. We present an independent reanalysis of the original JWST transmission spectrum of K2-18 b to assess the robustness of previously claimed detections, explore the parameter space, and examine the implications for the planet's formation. The observations were reduced using a combination of public and customized pipelines, producing a total of 12 different versions of the transmission spectrum by varying spectral binning, limb darkening, and a novel correction for the occulted stellar spot. We then performed atmospheric retrievals using TauREx 3, comparing models of varying complexity, and robustly detected CH4 (3-4σ) across all configurations. The evidence for CO2 is weaker and highly model-dependent. The tentative detection of dimethyl sulphide (DMS) vanishes in our most comprehensive retrieval models. We find that correcting the stellar spot in the NIRISS transit is a critical step, introducing a uniform offset that primarily drives the inference of a lower mean molecular weight atmosphere. Furthermore, the assumed complexity of the retrieval model itself introduces significant biases: including more molecules systematically increases the retrieved CH4 abundance and atmospheric mean molecular weight, even for species without spectral features. The data are consistent with a hydrogen-rich atmosphere with an elevated O abundance and an even more elevated C abundance, leading to a super-solar C/O ratio.
We show that the physical properties of the planets K2-18 c and K2-18 b are consistent with those expected from the in situ formation theory of Inside-Out Planet Formation (IOPF), interior to the carbon "soot" line, where an elevated C/O ratio of a primordial atmosphere is expected to be inherited from the protoplanetary disk.

19 Sep 2025

California Institute of Technology, University of Illinois at Urbana-Champaign, UCLA, UC Berkeley, NASA Goddard Space Flight Center, Arizona State University, MIT, NASA Jet Propulsion Laboratory, Dartmouth College, Georgia State University, Harvard-Smithsonian Center for Astrophysics, University of New Hampshire, Southwest Research Institute, Instituto Tecnológico de Aeronáutica, The Catholic University of America, Aerospace Corporation, Remcon Inc.

The Compression and Reconnection Investigations of the Magnetopause (CRIMP) mission is a Heliophysics Medium-Class Explorer (MIDEX) Announcement of Opportunity (AO) mission concept designed to study mesoscale structures and particle outflow along Earth's magnetopause using two identical spacecraft. CRIMP would uncover the impact of magnetosheath mesoscale drivers, dayside magnetopause mesoscale phenomenological processes and structures, and localized plasma outflows on magnetic reconnection and the energy transfer process in the dayside magnetosphere. CRIMP accomplishes this through uniquely phased spacecraft configurations that allow multipoint, contemporaneous measurements at the magnetopause. This enables an unparalleled look at mesoscale spatial differences along the dayside magnetopause on scales of 1-3 Earth radii (Re). Through these measurements, CRIMP will uncover how local mass density enhancements affect global reconnection, how mesoscale structures drive magnetopause dynamics, and whether the magnetopause acts as a perfectly absorbing boundary for radiation belt electrons. This allows CRIMP to determine the spatial scale, extent, and temporal evolution of energy and mass transfer processes at the magnetopause, crucial measurements for determining how the solar wind energy input into the magnetosphere is transmitted between regions and across scales. This concept was conceived as part of the 2024 NASA Heliophysics Mission Design School.

20 Jun 2025

Researchers from Stanford University and NASA JPL introduce ARNA, an Agentic Robotic Navigation Architecture that enables general-purpose navigation in unknown environments by allowing a Large Vision-Language Model to dynamically orchestrate perception, reasoning, and acting tools. ARNA achieves 77% accuracy on the HM-EQA embodied question-answering benchmark, outperforming prior methods, and demonstrates significantly improved exploration efficiency with a mean path length of 16.55 meters.

For decades, the terahertz (THz) frequency band had been primarily explored in the context of radar, imaging, and spectroscopy, where multi-gigahertz (GHz) and even THz-wide channels and the properties of terahertz photons offered attractive target accuracy, resolution, and classification capabilities. Meanwhile, the exploitation of the terahertz band for wireless communication had originally been limited for several reasons, including (i) no immediate need for the high data rates available in terahertz bands and (ii) challenges in designing sufficiently high-power terahertz systems at reasonable cost and efficiency, leading to what was often referred to as "the terahertz gap". This roadmap paper first reviews the evolution of the hardware design approaches for terahertz systems, including electronic, photonic, and plasmonic approaches, and the understanding of the terahertz channel itself in diverse scenarios, ranging from common indoor and outdoor scenarios to intra-body and outer-space environments. The article then summarizes the lessons learned during this multi-decade process and the cutting-edge state-of-the-art findings, including novel methods to quantify power efficiency, which will become more important in making design choices. Finally, the manuscript presents the authors' perspective and insights on how the evolution of terahertz systems design will continue toward enabling efficient terahertz communications and sensing solutions as an integral part of next-generation wireless systems.

California's Central Valley is the nation's agricultural center, producing 1/4 of the nation's food. However, land in the Central Valley is sinking at a rapid rate (as much as 20 cm per year) due to continued groundwater pumping. Land subsidence has a significant impact on infrastructure resilience and groundwater sustainability. In this study, we aim to identify specific regions with different temporal dynamics of land displacement and relate them to the underlying geologic composition. We then aim to estimate geologic composition remotely from interferometric synthetic aperture radar (InSAR)-based temporal changes in land deformation using machine learning techniques. We identified regions with different temporal characteristics of land displacement: some areas with coarser-grained geologic composition (e.g., Helm) exhibited potentially reversible land deformation (elastic land compaction). We found a significant correlation between InSAR-based land deformation and geologic composition using random forest and deep neural network regression models. We also achieved significant accuracy with 1/4 sparse sampling, used to reduce spatial correlations among the data, suggesting that the model has the potential to generalize to other regions for indirect estimation of geologic composition. Our results indicate that geologic composition can be estimated using InSAR-based land deformation data. In-situ measurements of geologic composition can be expensive and time consuming and may be impractical in some areas. The generalizability of the model points toward high-spatial-resolution geologic composition estimation from existing measurements.

07 Jan 2022

Field robotics in perceptually challenging environments requires fast and accurate state estimation, but modern LiDAR sensors quickly overwhelm current odometry algorithms. To this end, this paper presents a lightweight front-end LiDAR odometry solution with consistent and accurate localization for computationally limited robotic platforms. Our Direct LiDAR Odometry (DLO) method includes several key algorithmic innovations that prioritize computational efficiency and enable the use of dense, minimally preprocessed point clouds to provide accurate pose estimates in real time. This is achieved through a novel keyframing system which efficiently manages historical map information, in addition to a custom iterative closest point solver for fast point cloud registration with data structure recycling. Our method is more accurate, with lower computational overhead, than the current state of the art and has been extensively evaluated in multiple perceptually challenging environments on aerial and legged robots as part of NASA JPL Team CoSTAR's research and development efforts for the DARPA Subterranean Challenge.
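For readers unfamiliar with iterative closest point registration, a plain point-to-point ICP in NumPy is sketched below: nearest-neighbour matching followed by the SVD-based (Kabsch) rigid fit, repeated for a fixed number of iterations. This is a generic textbook variant under simplifying assumptions (brute-force matching, no outlier rejection); it does not reproduce DLO's custom solver, keyframing, or data-structure recycling.

```python
import numpy as np

def best_fit_transform(src, dst):
    """Least-squares rigid transform (R, t) mapping matched points src onto dst (Kabsch, no scale)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)     # cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                # correct an improper rotation (reflection)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, iters=30):
    """Point-to-point ICP with brute-force nearest neighbours (adequate for small clouds)."""
    dim = src.shape[1]
    R_total, t_total = np.eye(dim), np.zeros(dim)
    cur = src.astype(float).copy()
    for _ in range(iters):
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[np.argmin(d2, axis=1)]          # closest dst point for each src point
        R, t = best_fit_transform(cur, matched)
        cur = cur @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t   # accumulate the overall transform
    return R_total, t_total
```

The brute-force distance matrix is quadratic in the number of points; real-time LiDAR pipelines replace it with spatial data structures such as k-d trees, which is where reusing those structures across scans pays off.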

09 Jul 2022

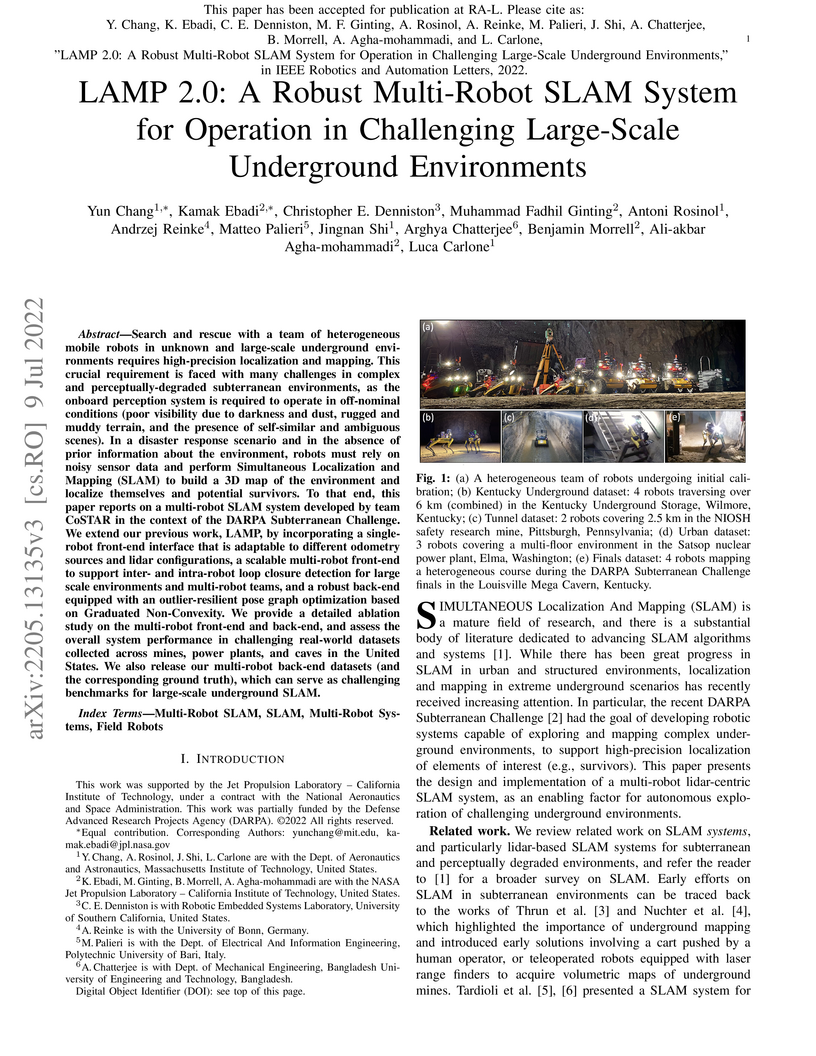

Search and rescue with a team of heterogeneous mobile robots in unknown and large-scale underground environments requires high-precision localization and mapping. This crucial requirement is faced with many challenges in complex and perceptually-degraded subterranean environments, as the onboard perception system is required to operate in off-nominal conditions (poor visibility due to darkness and dust, rugged and muddy terrain, and the presence of self-similar and ambiguous scenes). In a disaster response scenario and in the absence of prior information about the environment, robots must rely on noisy sensor data and perform Simultaneous Localization and Mapping (SLAM) to build a 3D map of the environment and localize themselves and potential survivors. To that end, this paper reports on a multi-robot SLAM system developed by team CoSTAR in the context of the DARPA Subterranean Challenge. We extend our previous work, LAMP, by incorporating a single-robot front-end interface that is adaptable to different odometry sources and lidar configurations, a scalable multi-robot front-end to support inter- and intra-robot loop closure detection for large scale environments and multi-robot teams, and a robust back-end equipped with an outlier-resilient pose graph optimization based on Graduated Non-Convexity. We provide a detailed ablation study on the multi-robot front-end and back-end, and assess the overall system performance in challenging real-world datasets collected across mines, power plants, and caves in the United States. We also release our multi-robot back-end datasets (and the corresponding ground truth), which can serve as challenging benchmarks for large-scale underground SLAM.
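Graduated Non-Convexity (GNC) first replaces a robust loss with a convex surrogate and then makes it progressively non-convex, alternating weighted least squares with weight updates. The sketch below applies GNC with the Geman-McClure loss to the simplest possible problem, a robust scalar mean, using the weight update w_i = (μc̄² / (r_i² + μc̄²))² and the schedule μ ← μ/1.4 from the GNC literature. It is a hypothetical toy, not the LAMP pose-graph back-end; `c_bar` (the inlier threshold) and the function name are assumptions.

```python
import numpy as np

def gnc_gm_mean(z, c_bar, mu_step=1.4, max_iters=100):
    """Robust scalar location estimate via GNC with the Geman-McClure loss."""
    z = np.asarray(z, dtype=float)
    x = z.mean()                                  # non-robust least-squares initial guess
    r2 = (z - x) ** 2
    mu = max(2.0 * r2.max() / c_bar**2, 1.0)      # large mu: surrogate is nearly quadratic (convex)
    w = np.ones_like(z)
    for _ in range(max_iters):
        # Weight update for the GNC-GM surrogate at the current mu.
        w = (mu * c_bar**2 / (r2 + mu * c_bar**2)) ** 2
        # Variable update: weighted least squares (here, a weighted mean).
        x = np.average(z, weights=w)
        r2 = (z - x) ** 2
        if mu <= 1.0:                             # mu = 1 recovers the original Geman-McClure loss
            break
        mu = max(mu / mu_step, 1.0)               # gradually increase non-convexity
    return x, w
```

With five inliers near 0 and outliers at 10 and 12, the estimate should settle near the inlier mean while the outlier weights shrink toward zero; in a pose graph the same loop runs with each residual being a loop-closure or odometry factor.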

This paper presents and discusses algorithms, hardware, and software architecture developed by team CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g., wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

27 Jul 2023

Non-linear model predictive control (nMPC) is a powerful approach to controlling complex robots (such as humanoids, quadrupeds, or unmanned aerial manipulators (UAMs)), as it brings important advantages over other existing techniques. The full-body dynamics, along with the prediction capability of the optimal control problem (OCP) solved at the core of the controller, allow the robot to be actuated in line with its dynamics. This enhances the robot's capabilities and allows it, e.g., to perform intricate maneuvers at high dynamics while optimizing the amount of energy used. Despite the many similarities between humanoids or quadrupeds and UAMs, full-body torque-level nMPC has rarely been applied to UAMs.

This paper provides a thorough description of how to use such techniques in the field of aerial manipulation. We give a detailed explanation of the different parts involved in the OCP, from the UAM dynamical model to the residuals in the cost function. We develop and compare three different nMPC controllers: Weighted MPC, Rail MPC, and Carrot MPC, which differ in the structure of their OCPs and in how these are updated at every time step. To validate the proposed framework, we present a wide variety of simulated case studies. First, we evaluate the trajectory generation problem, i.e., optimal control problems solved offline, involving different kinds of motions (e.g., aggressive maneuvers or contact locomotion) for different types of UAMs. Then, we assess the performance of the three nMPC controllers, i.e., closed-loop controllers solved online, through a variety of realistic simulations. For the benefit of the community, we have made the source code related to this work available.

This work introduces Transformer-based Successive Convexification (T-SCvx), an extension of Transformer-based Powered Descent Guidance (T-PDG) that generalizes to efficient six-degree-of-freedom (6-DoF) fuel-optimal powered descent trajectory generation. Our approach significantly enhances the sample efficiency and solution quality for nonconvex powered descent guidance by employing a rotation-invariant transformation of the sampled dataset. T-PDG was previously applied to the 3-DoF minimum-fuel powered descent guidance problem, improving solution times by up to an order of magnitude compared to lossless convexification (LCvx). By learning to predict the set of tight (active) constraints at the optimal control problem's solution, T-SCvx creates the minimal reduced-size problem initialized with only the tight constraints, then uses the solution of this reduced problem to warm-start the direct optimization solver. The 6-DoF powered descent guidance problem is known to be challenging to solve quickly and reliably because it is nonlinear and nonconvex, because the discretization scheme heavily influences solution validity, and because the reference trajectory initialization determines whether the algorithm converges or diverges. Our contributions address these challenges by extending T-PDG to learn the set of tight constraints for the successive convexification (SCvx) formulation of the 6-DoF powered descent guidance problem. In addition to reducing the problem size, feasible and locally optimal reference trajectories are also learned to facilitate convergence from the initial guess. T-SCvx enables onboard computation of real-time guidance trajectories, as demonstrated on a 6-DoF Mars powered landing application problem.
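Solving a reduced problem built only from predicted tight constraints, then checking whether the guess was right, can be illustrated on a small linear program. The sketch below assumes min cᵀx subject to Ax ≤ b, with exactly as many linearly independent predicted-active constraints as variables, and certifies a guess through primal feasibility and nonnegative KKT multipliers. It is a hypothetical toy, far simpler than the nonconvex 6-DoF SCvx problem in the abstract, and contains no transformer; the function name is an assumption.

```python
import numpy as np

def check_predicted_active_set(A, b, c, active, tol=1e-9):
    """Solve the reduced problem on a guessed active set for min c^T x s.t. A x <= b.

    Assumes len(active) == number of variables and the active rows are linearly independent.
    Returns (x, is_optimal): x solves A[active] x = b[active]; is_optimal is True only if x is
    feasible for every constraint and the KKT multipliers of the active rows are nonnegative.
    """
    A_act, b_act = A[active], b[active]
    x = np.linalg.solve(A_act, b_act)          # reduced problem: only the predicted tight rows
    lam = np.linalg.solve(A_act.T, -c)         # stationarity: c + A_act^T lam = 0
    primal_ok = bool(np.all(A @ x <= b + tol))
    dual_ok = bool(np.all(lam >= -tol))
    return x, primal_ok and dual_ok

# Maximize 2*x1 + x2 (i.e. minimize c^T x with c = (-2, -1)) subject to
# x1 <= 1, x2 <= 2, x1 + x2 <= 2.5, x1 >= 0, x2 >= 0.
A = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
b = np.array([1.0, 2.0, 2.5, 0.0, 0.0])
c = np.array([-2.0, -1.0])
```

Here the guess {x₁ ≤ 1, x₁ + x₂ ≤ 2.5} is certified at x = (1, 1.5), whereas the guess {x₂ ≤ 2, x₁ + x₂ ≤ 2.5} yields the feasible point (0.5, 2) but a negative multiplier for x₂ ≤ 2, so it would be rejected and a full solve used instead.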
