NAVER LABS

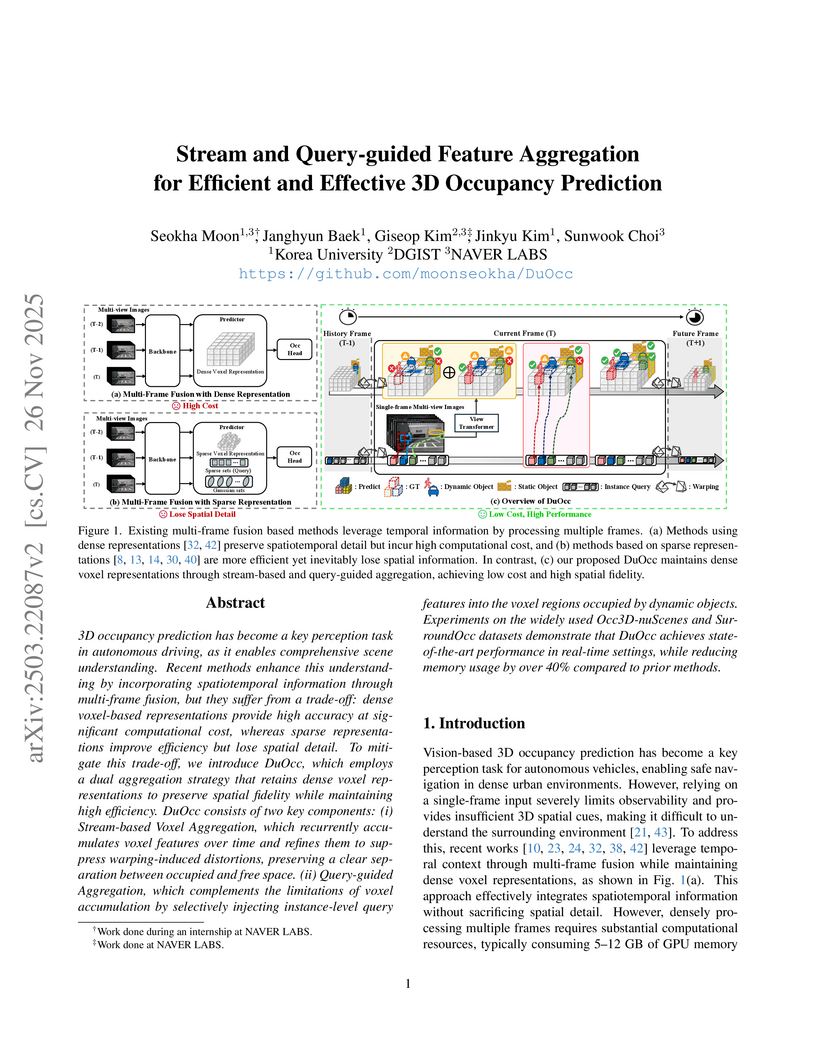

DuOcc, developed by researchers from Korea University, DGIST, and NAVER LABS, introduces a dual aggregation strategy for 3D occupancy prediction that achieves state-of-the-art accuracy while meeting real-time efficiency and low memory consumption requirements. The model attained 41.9 mIoU on Occ3D-nuScenes with 83.3 ms inference latency and 2,788 MB memory usage, outperforming prior real-time methods and performing comparably to slower dense voxel approaches.

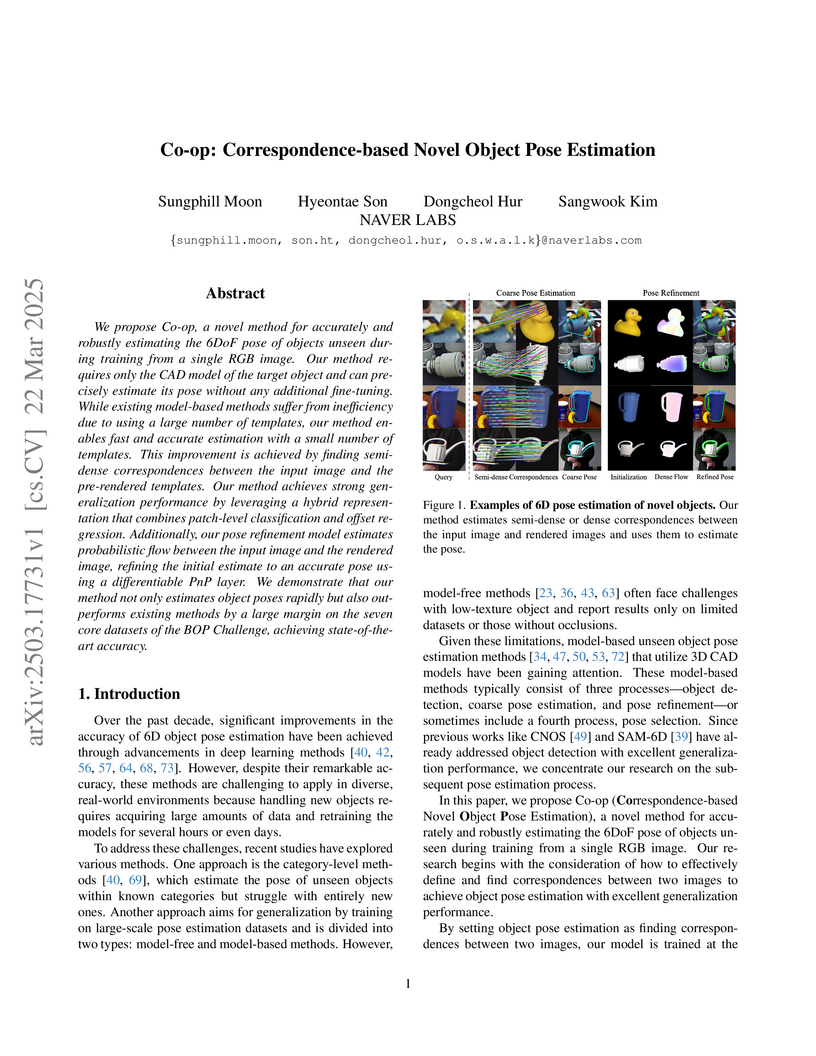

NAVER LABS's Co-op proposes a correspondence-based framework for 6D object pose estimation of entirely novel objects using only their 3D CAD models. This two-stage approach, leveraging hybrid representations and probabilistic flow, achieves state-of-the-art performance on the BOP Challenge by outperforming previous methods and effectively handling challenging scenarios.

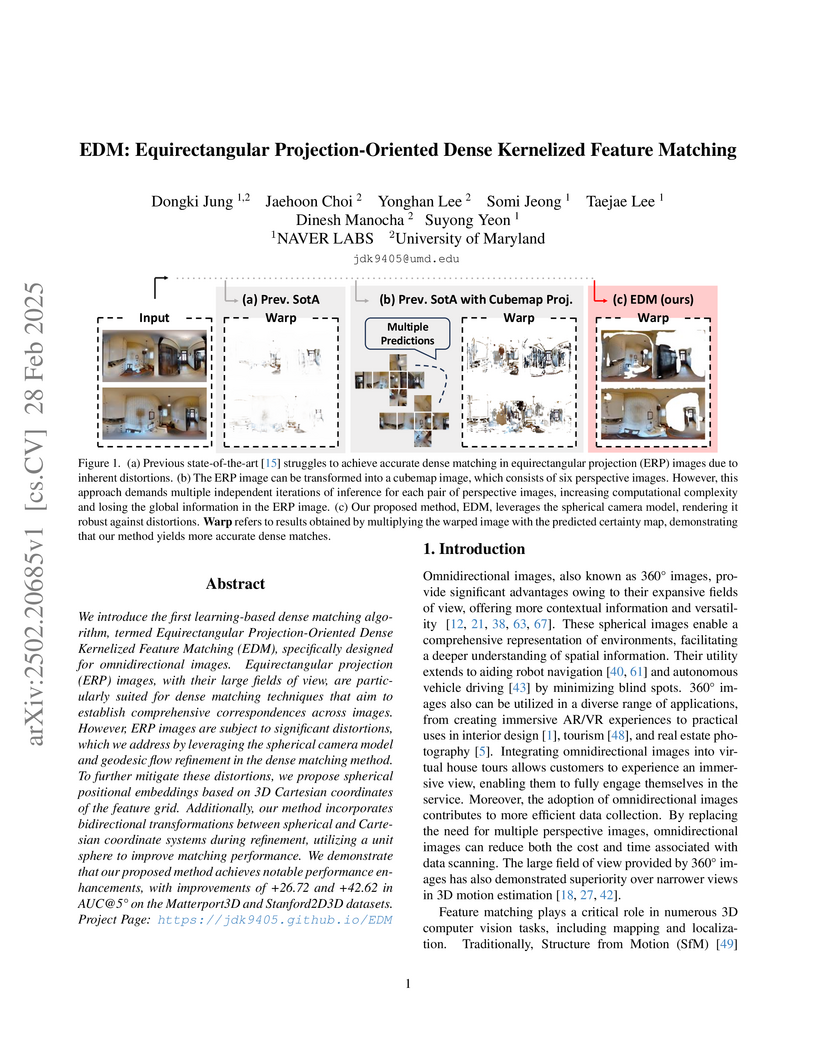

We introduce the first learning-based dense matching algorithm, termed

Equirectangular Projection-Oriented Dense Kernelized Feature Matching (EDM),

specifically designed for omnidirectional images. Equirectangular projection

(ERP) images, with their large fields of view, are particularly suited for

dense matching techniques that aim to establish comprehensive correspondences

across images. However, ERP images are subject to significant distortions,

which we address by leveraging the spherical camera model and geodesic flow

refinement in the dense matching method. To further mitigate these distortions,

we propose spherical positional embeddings based on 3D Cartesian coordinates of

the feature grid. Additionally, our method incorporates bidirectional

transformations between spherical and Cartesian coordinate systems during

refinement, utilizing a unit sphere to improve matching performance. We

demonstrate that our proposed method achieves notable performance enhancements,

with improvements of +26.72 and +42.62 in AUC@5{\deg} on the Matterport3D and

Stanford2D3D datasets.

02 Oct 2024

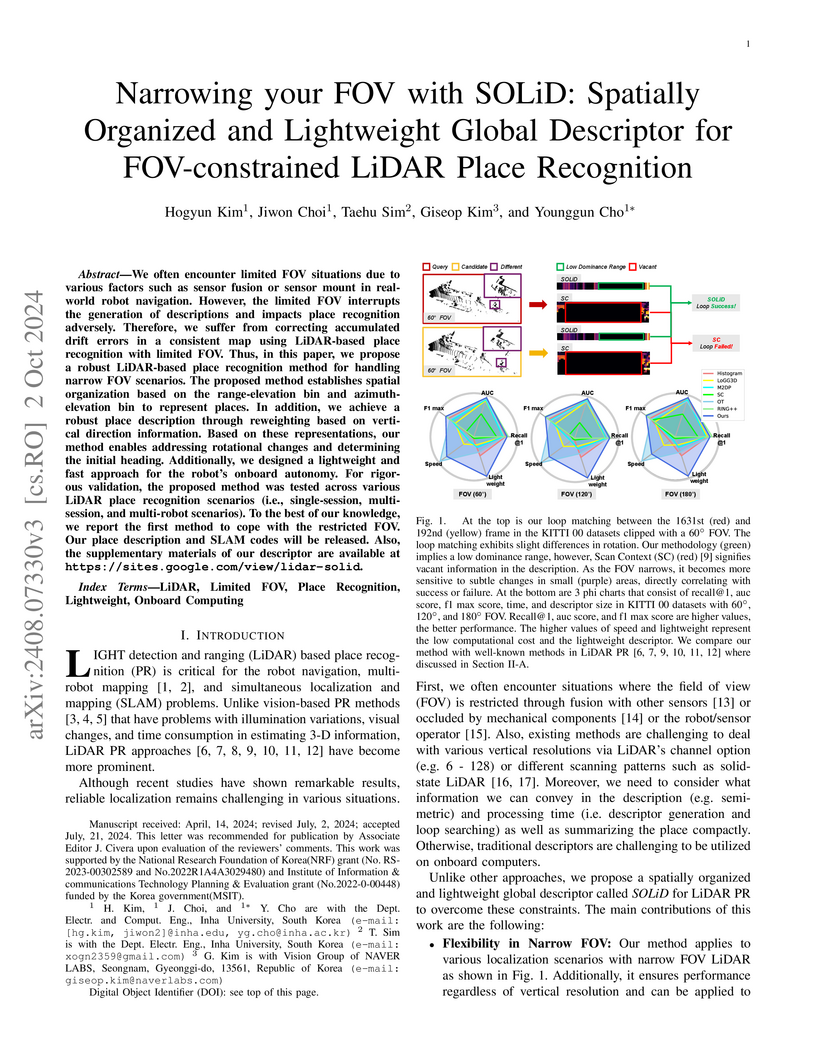

We often encounter limited FOV situations due to various factors such as sensor fusion or sensor mount in real-world robot navigation. However, the limited FOV interrupts the generation of descriptions and impacts place recognition adversely. Therefore, we suffer from correcting accumulated drift errors in a consistent map using LiDAR-based place recognition with limited FOV. Thus, in this paper, we propose a robust LiDAR-based place recognition method for handling narrow FOV scenarios. The proposed method establishes spatial organization based on the range-elevation bin and azimuth-elevation bin to represent places. In addition, we achieve a robust place description through reweighting based on vertical direction information. Based on these representations, our method enables addressing rotational changes and determining the initial heading. Additionally, we designed a lightweight and fast approach for the robot's onboard autonomy. For rigorous validation, the proposed method was tested across various LiDAR place recognition scenarios (i.e., single-session, multi-session, and multi-robot scenarios). To the best of our knowledge, we report the first method to cope with the restricted FOV. Our place description and SLAM codes will be released. Also, the supplementary materials of our descriptor are available at \texttt{\url{this https URL}}.

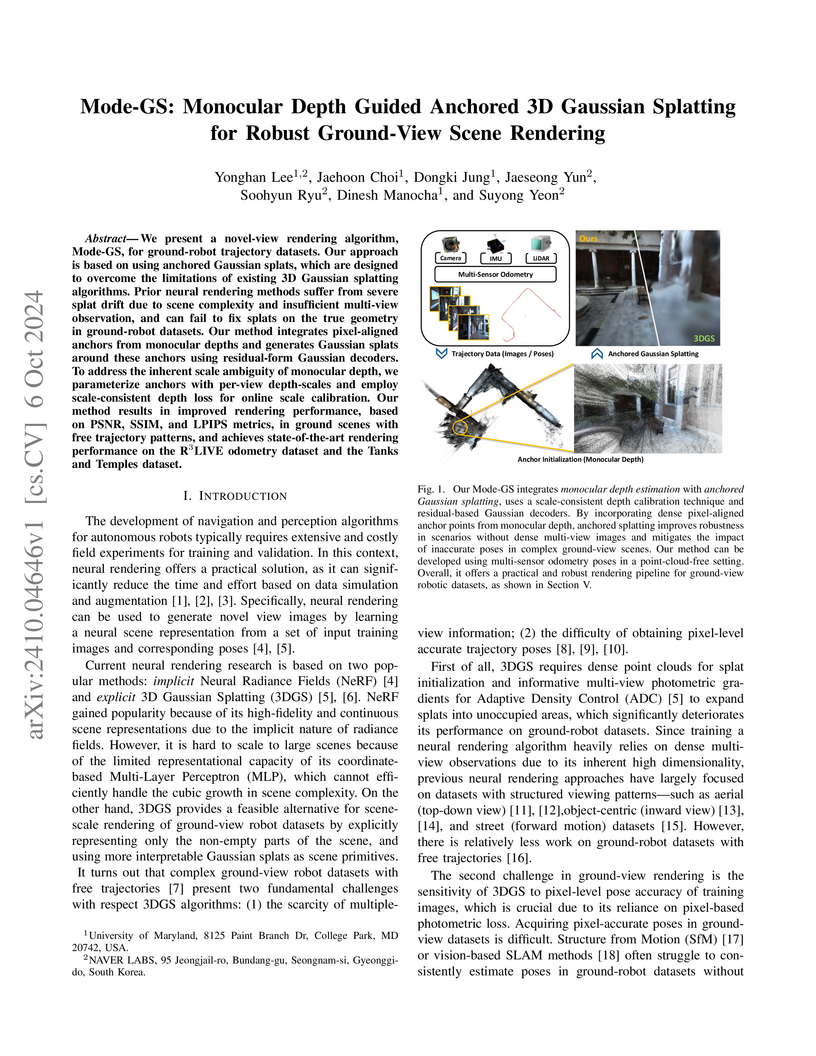

We present a novel-view rendering algorithm, Mode-GS, for ground-robot trajectory datasets. Our approach is based on using anchored Gaussian splats, which are designed to overcome the limitations of existing 3D Gaussian splatting algorithms. Prior neural rendering methods suffer from severe splat drift due to scene complexity and insufficient multi-view observation, and can fail to fix splats on the true geometry in ground-robot datasets. Our method integrates pixel-aligned anchors from monocular depths and generates Gaussian splats around these anchors using residual-form Gaussian decoders. To address the inherent scale ambiguity of monocular depth, we parameterize anchors with per-view depth-scales and employ scale-consistent depth loss for online scale calibration. Our method results in improved rendering performance, based on PSNR, SSIM, and LPIPS metrics, in ground scenes with free trajectory patterns, and achieves state-of-the-art rendering performance on the R3LIVE odometry dataset and the Tanks and Temples dataset.

We present the evaluation methodology, datasets and results of the BOP

Challenge 2024, the 6th in a series of public competitions organized to capture

the state of the art in 6D object pose estimation and related tasks. In 2024,

our goal was to transition BOP from lab-like setups to real-world scenarios.

First, we introduced new model-free tasks, where no 3D object models are

available and methods need to onboard objects just from provided reference

videos. Second, we defined a new, more practical 6D object detection task where

identities of objects visible in a test image are not provided as input. Third,

we introduced new BOP-H3 datasets recorded with high-resolution sensors and

AR/VR headsets, closely resembling real-world scenarios. BOP-H3 include 3D

models and onboarding videos to support both model-based and model-free tasks.

Participants competed on seven challenge tracks. Notably, the best 2024 method

for model-based 6D localization of unseen objects (FreeZeV2.1) achieves 22%

higher accuracy on BOP-Classic-Core than the best 2023 method (GenFlow), and is

only 4% behind the best 2023 method for seen objects (GPose2023) although being

significantly slower (24.9 vs 2.7s per image). A more practical 2024 method for

this task is Co-op which takes only 0.8s per image and is 13% more accurate

than GenFlow. Methods have similar rankings on 6D detection as on 6D

localization but higher run time. On model-based 2D detection of unseen

objects, the best 2024 method (MUSE) achieves 21--29% relative improvement

compared to the best 2023 method (CNOS). However, the 2D detection accuracy for

unseen objects is still -35% behind the accuracy for seen objects (GDet2023),

and the 2D detection stage is consequently the main bottleneck of existing

pipelines for 6D localization/detection of unseen objects. The online

evaluation system stays open and is available at this http URL

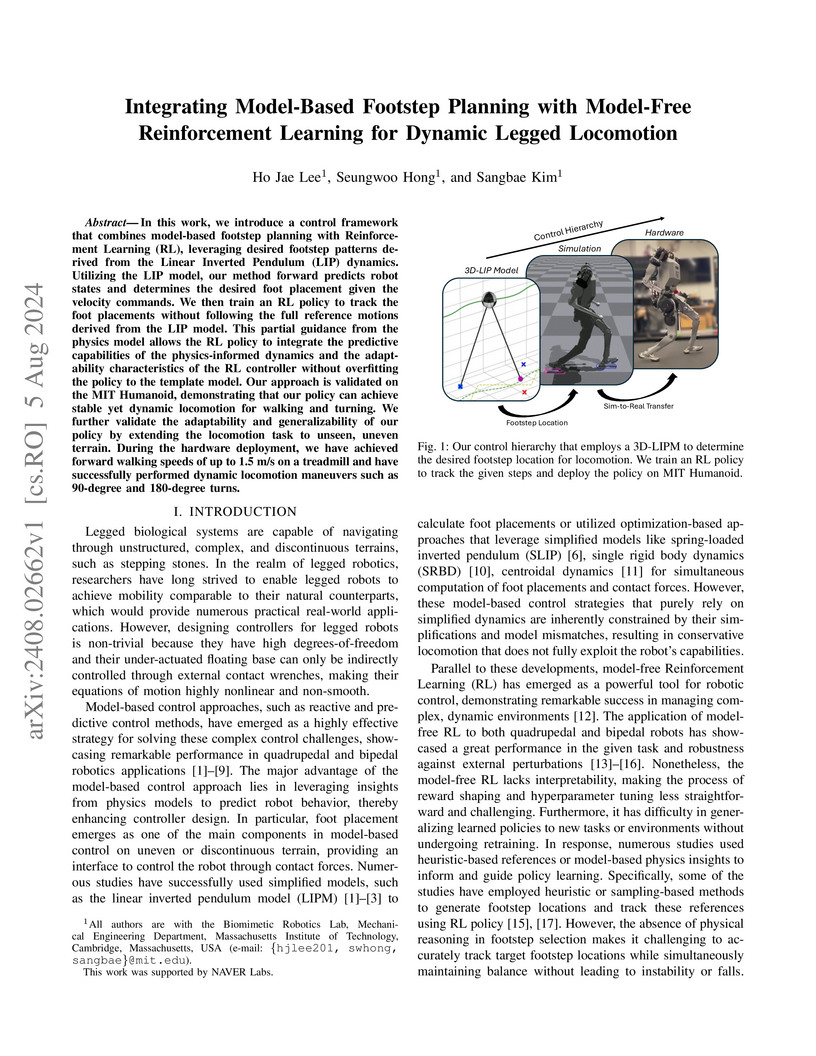

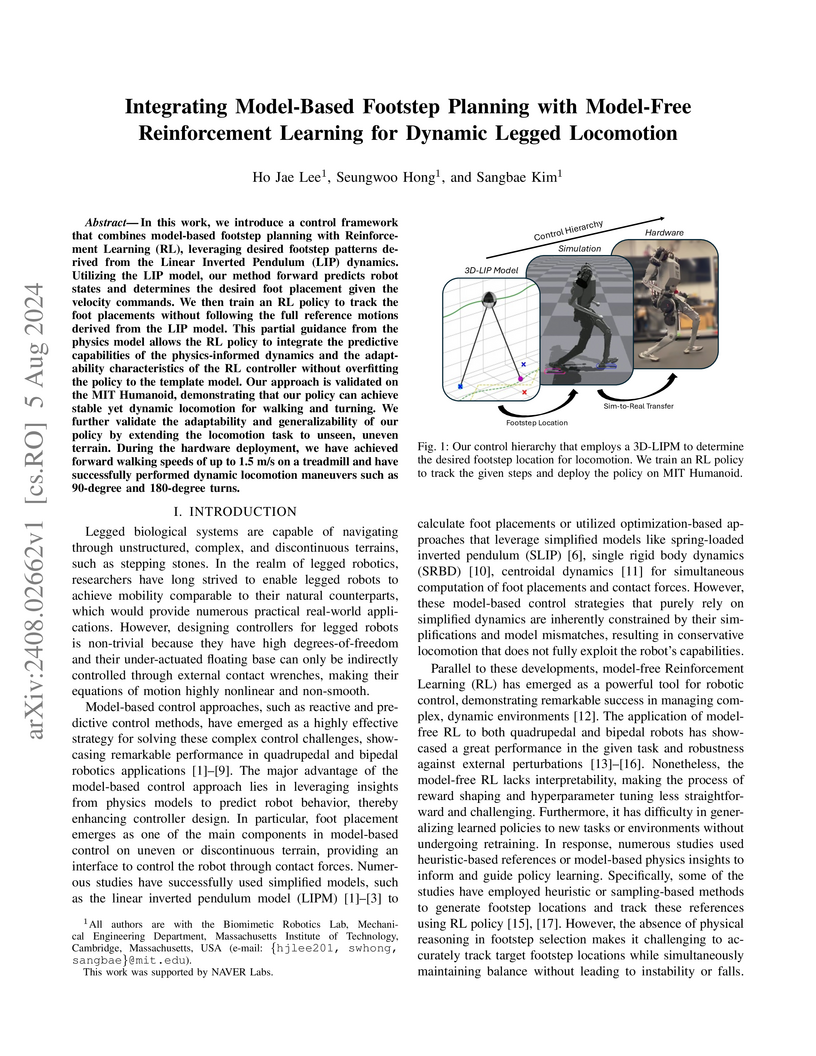

In this work, we introduce a control framework that combines model-based

footstep planning with Reinforcement Learning (RL), leveraging desired footstep

patterns derived from the Linear Inverted Pendulum (LIP) dynamics. Utilizing

the LIP model, our method forward predicts robot states and determines the

desired foot placement given the velocity commands. We then train an RL policy

to track the foot placements without following the full reference motions

derived from the LIP model. This partial guidance from the physics model allows

the RL policy to integrate the predictive capabilities of the physics-informed

dynamics and the adaptability characteristics of the RL controller without

overfitting the policy to the template model. Our approach is validated on the

MIT Humanoid, demonstrating that our policy can achieve stable yet dynamic

locomotion for walking and turning. We further validate the adaptability and

generalizability of our policy by extending the locomotion task to unseen,

uneven terrain. During the hardware deployment, we have achieved forward

walking speeds of up to 1.5 m/s on a treadmill and have successfully performed

dynamic locomotion maneuvers such as 90-degree and 180-degree turns.

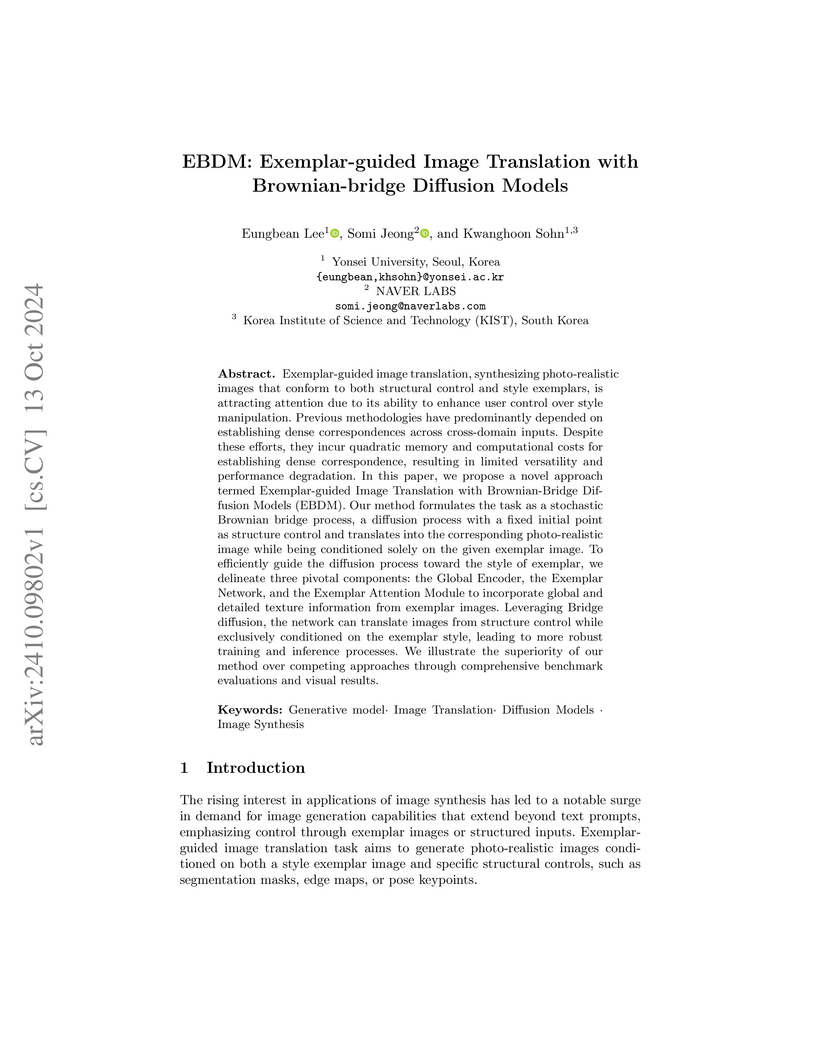

EBDM introduces an exemplar-guided image translation framework using Brownian-bridge diffusion models, which generates photo-realistic images by efficiently transferring both global and fine-grained style from an exemplar while preserving structural control inputs. The framework demonstrates superior detail transfer and improved computational efficiency over prior methods and other diffusion-based approaches.

12 Sep 2024

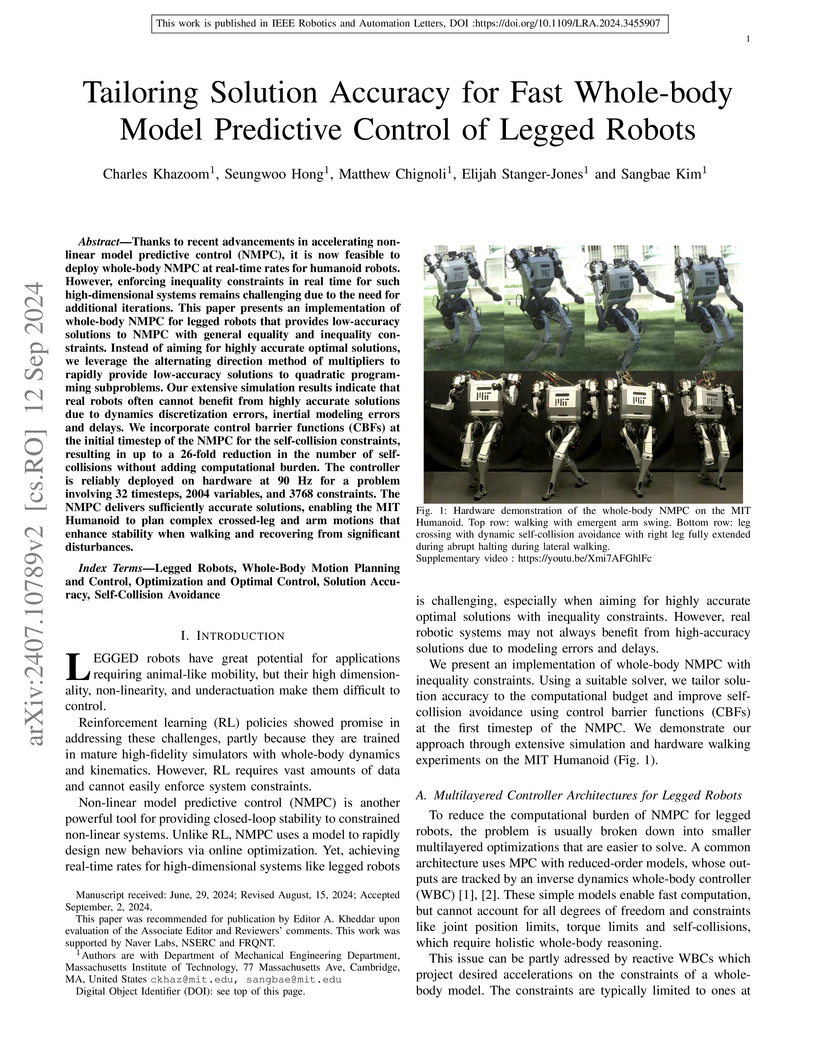

Thanks to recent advancements in accelerating non-linear model predictive

control (NMPC), it is now feasible to deploy whole-body NMPC at real-time rates

for humanoid robots. However, enforcing inequality constraints in real time for

such high-dimensional systems remains challenging due to the need for

additional iterations. This paper presents an implementation of whole-body NMPC

for legged robots that provides low-accuracy solutions to NMPC with general

equality and inequality constraints. Instead of aiming for highly accurate

optimal solutions, we leverage the alternating direction method of multipliers

to rapidly provide low-accuracy solutions to quadratic programming subproblems.

Our extensive simulation results indicate that real robots often cannot benefit

from highly accurate solutions due to dynamics discretization errors, inertial

modeling errors and delays. We incorporate control barrier functions (CBFs) at

the initial timestep of the NMPC for the self-collision constraints, resulting

in up to a 26-fold reduction in the number of self-collisions without adding

computational burden. The controller is reliably deployed on hardware at 90 Hz

for a problem involving 32 timesteps, 2004 variables, and 3768 constraints. The

NMPC delivers sufficiently accurate solutions, enabling the MIT Humanoid to

plan complex crossed-leg and arm motions that enhance stability when walking

and recovering from significant disturbances.

This paper proposes a paradigm for vehicle trajectory prediction that models future relationships between vehicles based on their anticipated future positions, moving beyond reliance on observed past behavior. The approach incorporates a Future Relationship Module and waypoint occupancy prediction, achieving state-of-the-art performance on the nuScenes and Argoverse benchmarks, particularly for long-horizon predictions.

03 Mar 2025

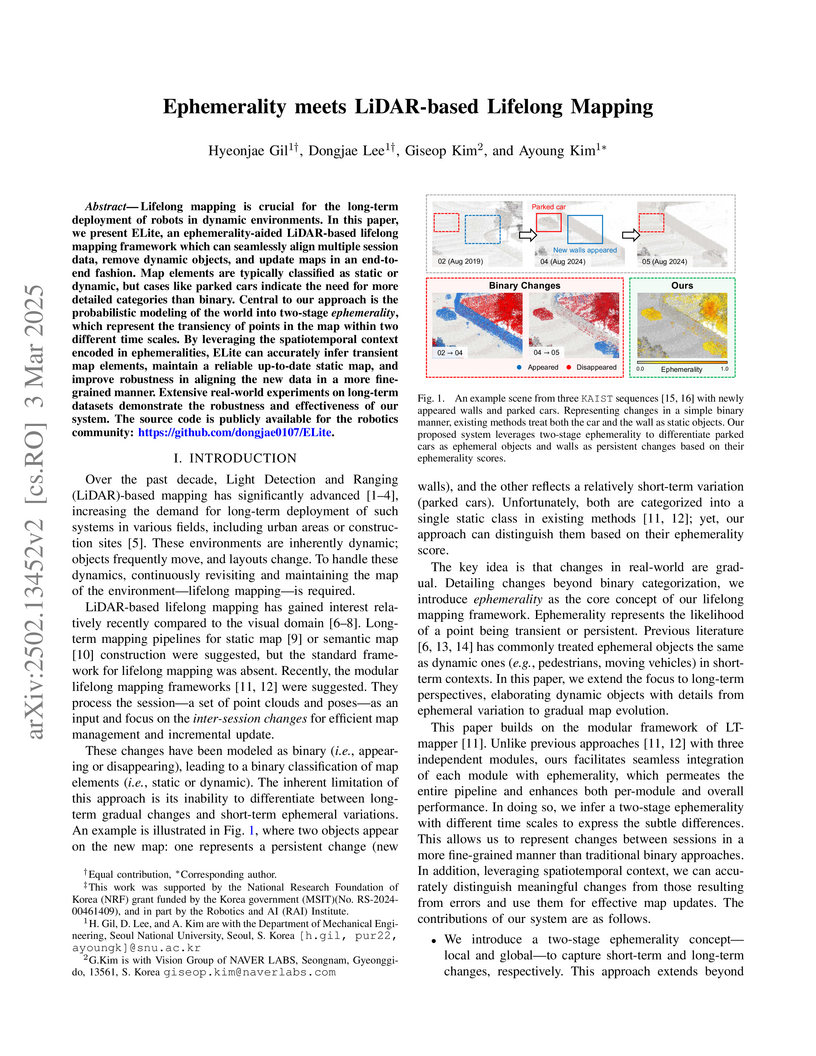

Lifelong mapping is crucial for the long-term deployment of robots in dynamic

environments. In this paper, we present ELite, an ephemerality-aided

LiDAR-based lifelong mapping framework which can seamlessly align multiple

session data, remove dynamic objects, and update maps in an end-to-end fashion.

Map elements are typically classified as static or dynamic, but cases like

parked cars indicate the need for more detailed categories than binary. Central

to our approach is the probabilistic modeling of the world into two-stage

ephemerality, which represent the transiency of points in the map

within two different time scales. By leveraging the spatiotemporal context

encoded in ephemeralities, ELite can accurately infer transient map elements,

maintain a reliable up-to-date static map, and improve robustness in aligning

the new data in a more fine-grained manner. Extensive real-world experiments on

long-term datasets demonstrate the robustness and effectiveness of our system.

The source code is publicly available for the robotics community:

this https URL

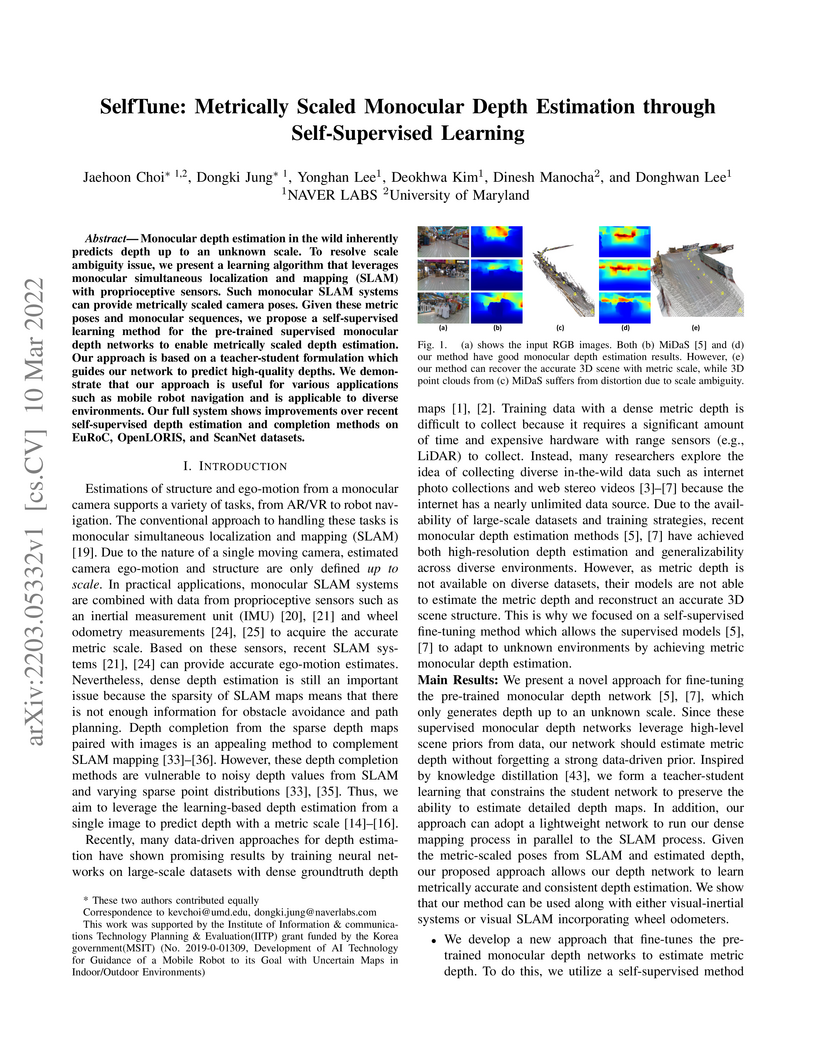

Monocular depth estimation in the wild inherently predicts depth up to an

unknown scale. To resolve scale ambiguity issue, we present a learning

algorithm that leverages monocular simultaneous localization and mapping (SLAM)

with proprioceptive sensors. Such monocular SLAM systems can provide metrically

scaled camera poses. Given these metric poses and monocular sequences, we

propose a self-supervised learning method for the pre-trained supervised

monocular depth networks to enable metrically scaled depth estimation. Our

approach is based on a teacher-student formulation which guides our network to

predict high-quality depths. We demonstrate that our approach is useful for

various applications such as mobile robot navigation and is applicable to

diverse environments. Our full system shows improvements over recent

self-supervised depth estimation and completion methods on EuRoC, OpenLORIS,

and ScanNet datasets.

Over the past few years, image-to-image (I2I) translation methods have been

proposed to translate a given image into diverse outputs. Despite the

impressive results, they mainly focus on the I2I translation between two

domains, so the multi-domain I2I translation still remains a challenge. To

address this problem, we propose a novel multi-domain unsupervised

image-to-image translation (MDUIT) framework that leverages the decomposed

content feature and appearance adaptive convolution to translate an image into

a target appearance while preserving the given geometric content. We also

exploit a contrast learning objective, which improves the disentanglement

ability and effectively utilizes multi-domain image data in the training

process by pairing the semantically similar images. This allows our method to

learn the diverse mappings between multiple visual domains with only a single

framework. We show that the proposed method produces visually diverse and

plausible results in multiple domains compared to the state-of-the-art methods.

Typical LiDAR-based 3D object detection models are trained in a supervised manner with real-world data collection, which is often imbalanced over classes (or long-tailed). To deal with it, augmenting minority-class examples by sampling ground truth (GT) LiDAR points from a database and pasting them into a scene of interest is often used, but challenges still remain: inflexibility in locating GT samples and limited sample diversity. In this work, we propose to leverage pseudo-LiDAR point clouds generated (at a low cost) from videos capturing a surround view of miniatures or real-world objects of minor classes. Our method, called Pseudo Ground Truth Augmentation (PGT-Aug), consists of three main steps: (i) volumetric 3D instance reconstruction using a 2D-to-3D view synthesis model, (ii) object-level domain alignment with LiDAR intensity estimation and (iii) a hybrid context-aware placement method from ground and map information. We demonstrate the superiority and generality of our method through performance improvements in extensive experiments conducted on three popular benchmarks, i.e., nuScenes, KITTI, and Lyft, especially for the datasets with large domain gaps captured by different LiDAR configurations. Our code and data will be publicly available upon publication.

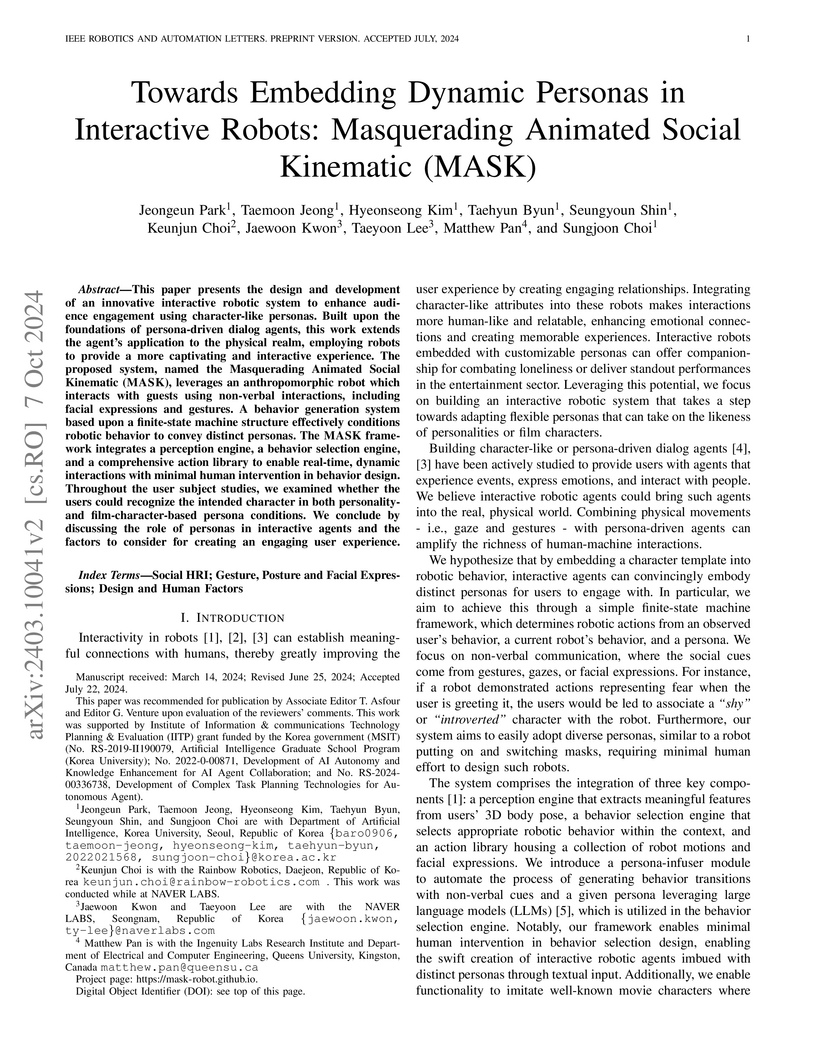

This paper presents the design and development of an innovative interactive

robotic system to enhance audience engagement using character-like personas.

Built upon the foundations of persona-driven dialog agents, this work extends

the agent's application to the physical realm, employing robots to provide a

more captivating and interactive experience. The proposed system, named the

Masquerading Animated Social Kinematic (MASK), leverages an anthropomorphic

robot which interacts with guests using non-verbal interactions, including

facial expressions and gestures. A behavior generation system based upon a

finite-state machine structure effectively conditions robotic behavior to

convey distinct personas. The MASK framework integrates a perception engine, a

behavior selection engine, and a comprehensive action library to enable

real-time, dynamic interactions with minimal human intervention in behavior

design. Throughout the user subject studies, we examined whether the users

could recognize the intended character in both personality- and

film-character-based persona conditions. We conclude by discussing the role of

personas in interactive agents and the factors to consider for creating an

engaging user experience.

NAVER LABS developed PANDAS, a prototype-based method for Novel Class Discovery and Detection that enables object detectors to automatically identify and incorporate new object categories. It achieves superior performance and significantly higher computational efficiency compared to prior state-of-the-art methods by using a class-agnostic regressor and memory-efficient prototype storage.

In this work, we introduce a control framework that combines model-based footstep planning with Reinforcement Learning (RL), leveraging desired footstep patterns derived from the Linear Inverted Pendulum (LIP) dynamics. Utilizing the LIP model, our method forward predicts robot states and determines the desired foot placement given the velocity commands. We then train an RL policy to track the foot placements without following the full reference motions derived from the LIP model. This partial guidance from the physics model allows the RL policy to integrate the predictive capabilities of the physics-informed dynamics and the adaptability characteristics of the RL controller without overfitting the policy to the template model. Our approach is validated on the MIT Humanoid, demonstrating that our policy can achieve stable yet dynamic locomotion for walking and turning. We further validate the adaptability and generalizability of our policy by extending the locomotion task to unseen, uneven terrain. During the hardware deployment, we have achieved forward walking speeds of up to 1.5 m/s on a treadmill and have successfully performed dynamic locomotion maneuvers such as 90-degree and 180-degree turns.

Bilinear models provide rich representations compared with linear models. They have been applied in various visual tasks, such as object recognition, segmentation, and visual question-answering, to get state-of-the-art performances taking advantage of the expanded representations. However, bilinear representations tend to be high-dimensional, limiting the applicability to computationally complex tasks. We propose low-rank bilinear pooling using Hadamard product for an efficient attention mechanism of multimodal learning. We show that our model outperforms compact bilinear pooling in visual question-answering tasks with the state-of-the-art results on the VQA dataset, having a better parsimonious property.

03 Mar 2023

Radar sensors are emerging as solutions for perceiving surroundings and

estimating ego-motion in extreme weather conditions. Unfortunately, radar

measurements are noisy and suffer from mutual interference, which degrades the

performance of feature extraction and matching, triggering imprecise matching

pairs, which are referred to as outliers. To tackle the effect of outliers on

radar odometry, a novel outlier-robust method called \textit{ORORA} is

proposed, which is an abbreviation of \textit{Outlier-RObust RAdar odometry}.

To this end, a novel decoupling-based method is proposed, which consists of

graduated non-convexity~(GNC)-based rotation estimation and anisotropic

component-wise translation estimation~(A-COTE). Furthermore, our method

leverages the anisotropic characteristics of radar measurements, each of whose

uncertainty along the azimuthal direction is somewhat larger than that along

the radial direction. As verified in the public dataset, it was demonstrated

that our proposed method yields robust ego-motion estimation performance

compared with other state-of-the-art methods. Our code is available at

this https URL

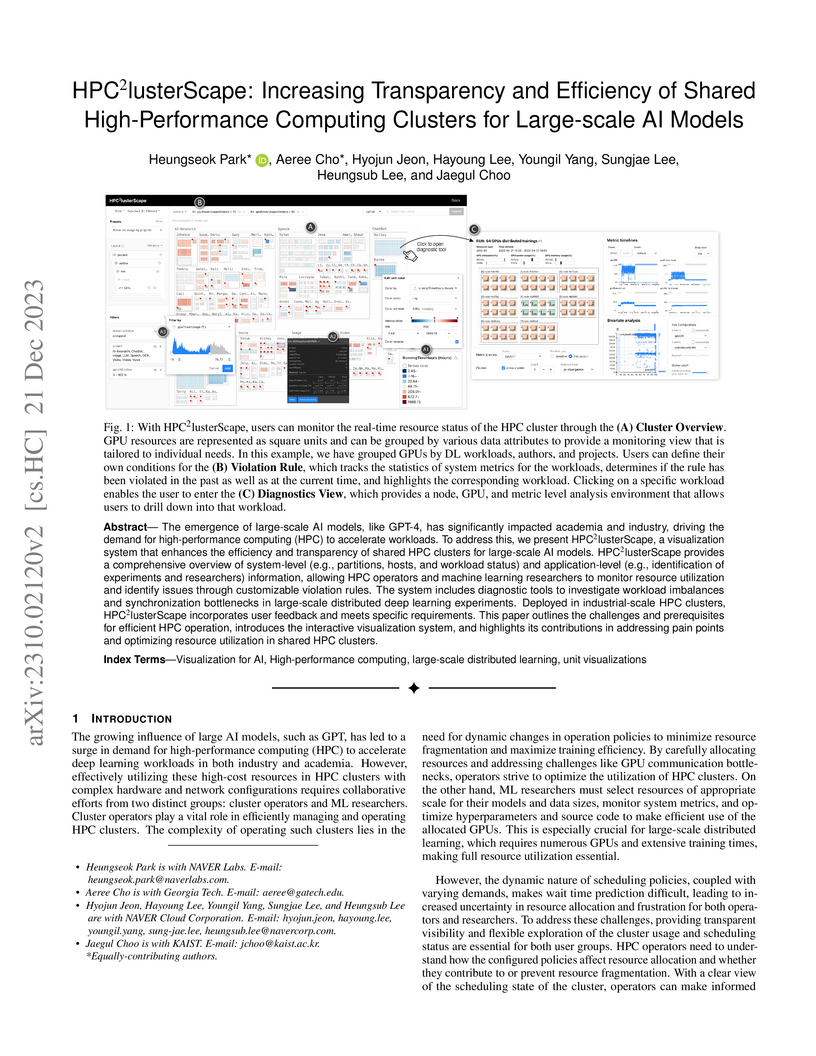

The emergence of large-scale AI models, like GPT-4, has significantly impacted academia and industry, driving the demand for high-performance computing (HPC) to accelerate workloads. To address this, we present HPCClusterScape, a visualization system that enhances the efficiency and transparency of shared HPC clusters for large-scale AI models. HPCClusterScape provides a comprehensive overview of system-level (e.g., partitions, hosts, and workload status) and application-level (e.g., identification of experiments and researchers) information, allowing HPC operators and machine learning researchers to monitor resource utilization and identify issues through customizable violation rules. The system includes diagnostic tools to investigate workload imbalances and synchronization bottlenecks in large-scale distributed deep learning experiments. Deployed in industrial-scale HPC clusters, HPCClusterScape incorporates user feedback and meets specific requirements. This paper outlines the challenges and prerequisites for efficient HPC operation, introduces the interactive visualization system, and highlights its contributions in addressing pain points and optimizing resource utilization in shared HPC clusters.

There are no more papers matching your filters at the moment.