Oden Institute for Computational Engineering and Sciences

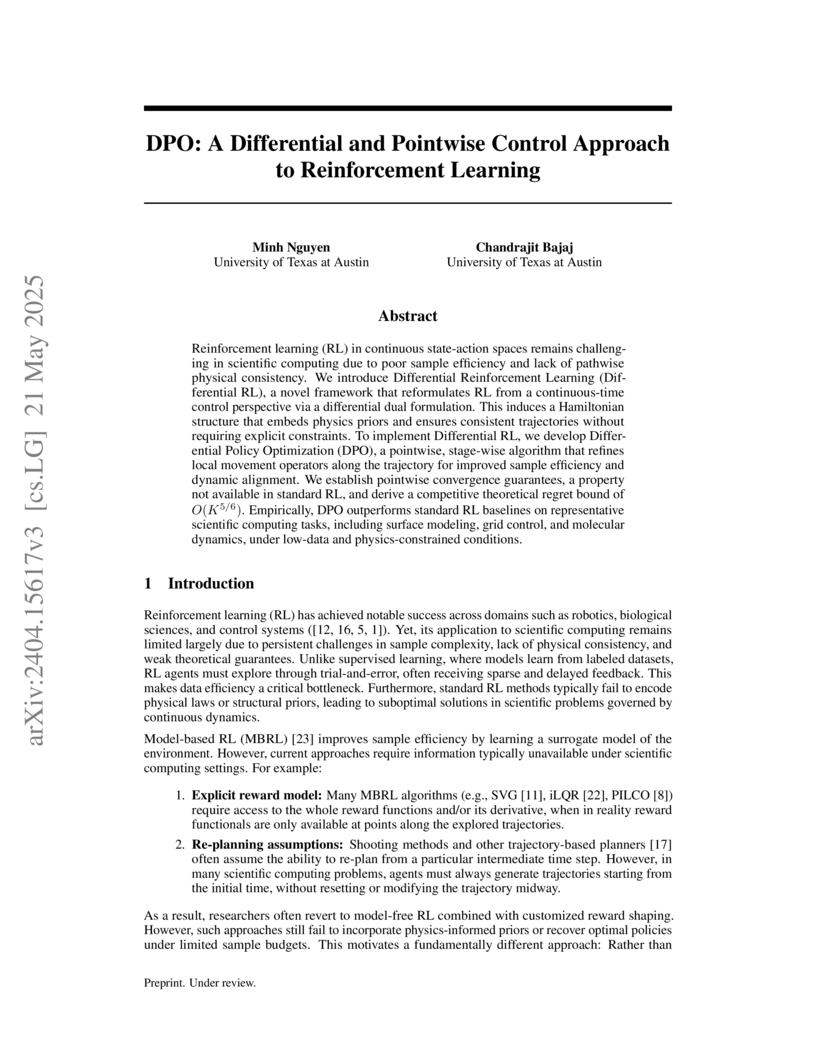

Differential Policy Optimization (DPO) re-frames reinforcement learning for continuous-time optimal control problems, leveraging Hamiltonian mechanics to inherently embed physical priors. This approach demonstrates superior performance and sample efficiency in scientific computing tasks with implicit objectives and limited data.

26 Aug 2025

In recent years, the ascendance of diffusion modeling as a state-of-the-art generative modeling approach has spurred significant interest in their use as priors in Bayesian inverse problems. However, it is unclear how to optimally integrate a diffusion model trained on the prior distribution with a given likelihood function to obtain posterior samples. While algorithms developed for this purpose can produce high-quality, diverse point estimates of the unknown parameters of interest, they are often tested on problems where the prior distribution is analytically unknown, making it difficult to assess their performance in providing rigorous uncertainty quantification. Motivated by this challenge, this work introduces three benchmark problems for evaluating the performance of diffusion model based samplers. The benchmark problems, which are inspired by problems in image inpainting, x-ray tomography, and phase retrieval, have a posterior density that is analytically known. In this setting, approximate ground-truth posterior samples can be obtained, enabling principled evaluation of the performance of posterior sampling algorithms. This work also introduces a general framework for diffusion model based posterior sampling, Bayesian Inverse Problem Solvers through Diffusion Annealing (BIPSDA). This framework unifies several recently proposed diffusion-model-based posterior sampling algorithms and contains novel algorithms that can be realized through flexible combinations of design choices. We tested the performance of a set of BIPSDA algorithms, including previously proposed state-of-the-art approaches, on the proposed benchmark problems. The results provide insight into the strengths and limitations of existing diffusion-model based posterior samplers, while the benchmark problems provide a testing ground for future algorithmic developments.

Neural operators, particularly the Deep Operator Network (DeepONet), have shown promise in learning mappings between function spaces for solving differential equations. However, standard DeepONet requires input functions to be sampled at fixed locations, limiting its applicability when sensor configurations vary or inputs exist on irregular grids. We introduce the Set Operator Network (SetONet), which modifies DeepONet's branch network to process input functions as unordered sets of location-value pairs. By incorporating Deep Sets principles, SetONet ensures permutation invariance while maintaining the same parameter count as the baseline. On classical operator-learning benchmarks, SetONet achieves parity with DeepONet on fixed layouts while sustaining accuracy under variable sensor configurations or sensor drop-off - conditions for which standard DeepONet is not applicable. More significantly, SetONet natively handles problems where inputs are naturally represented as unstructured point clouds (such as point sources or density samples) rather than values on fixed grids, a capability standard DeepONet lacks. On heat conduction with point sources, advection-diffusion modeling chemical plumes, and optimal transport between density samples, SetONet learns operators end-to-end without rasterization or multi-stage pipelines. These problems feature inputs that are naturally discrete point sets (point sources or density samples) rather than functions on fixed grids. SetONet is a DeepONet-class architecture that addresses such problems with a lightweight design, significantly broadening the applicability of operator learning to problems with variable, incomplete, or unstructured input data.

Solving stiff ordinary differential equations (StODEs) requires sophisticated numerical solvers, which are often computationally expensive. In particular, StODE's often cannot be solved with traditional explicit time integration schemes and one must resort to costly implicit methods to compute solutions. On the other hand, state-of-the-art machine learning (ML) based methods such as Neural ODE (NODE) poorly handle the timescale separation of various elements of the solutions to StODEs and require expensive implicit solvers for integration at inference time. In this work, we embark on a different path which involves learning a latent dynamics for StODEs, in which one completely avoids numerical integration. To that end, we consider a constant velocity latent dynamical system whose solution is a sequence of straight lines. Given the initial condition and parameters of the ODE, the encoder networks learn the slope (i.e the constant velocity) and the initial condition for the latent dynamics. In other words, the solution of the original dynamics is encoded into a sequence of straight lines which can be decoded back to retrieve the actual solution as and when required. Another key idea in our approach is a nonlinear transformation of time, which allows for the "stretching/squeezing" of time in the latent space, thereby allowing for varying levels of attention to different temporal regions in the solution. Additionally, we provide a simple universal-approximation-type proof showing that our approach can approximate the solution of stiff nonlinear system on a compact set to any degree of accuracy, {\epsilon}. We show that the dimension of the latent dynamical system in our approach is independent of {\epsilon}. Numerical investigation on prototype StODEs suggest that our method outperforms state-of-the art machine learning approaches for handling StODEs.

29 Jun 2024

The purpose of this technical note is to summarize the relationship between the marginal variance and correlation length of a Gaussian random field with Matérn covariance and the coefficients of the corresponding partial-differential-equation (PDE)-based precision operator.

The University of Texas at AustinIndian Institute of Technology, BombayUniversity of Colorado BoulderOden Institute for Computational Engineering and SciencesDefence Institute of Advanced TechnologyIndian Institute of Tropical MeteorologyMinistry of Earth SciencesNOAA/Physical Sciences LaboratoryCIRESJackson School of Geosciences

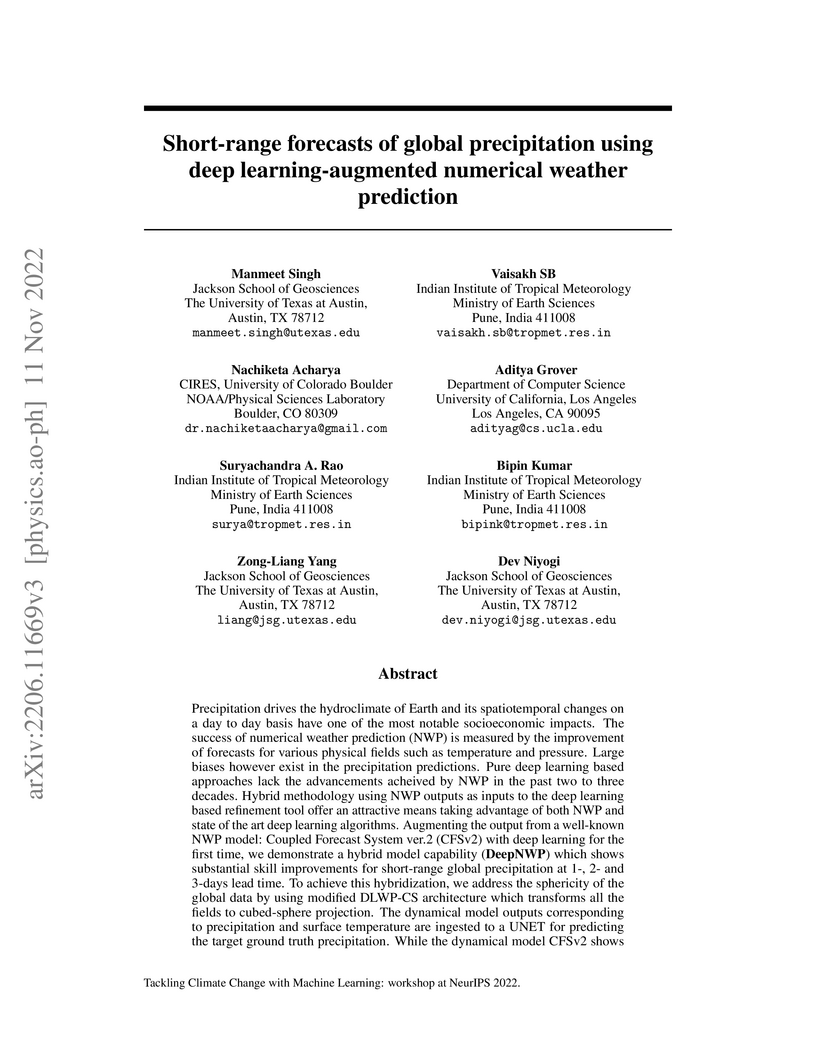

The University of Texas at AustinIndian Institute of Technology, BombayUniversity of Colorado BoulderOden Institute for Computational Engineering and SciencesDefence Institute of Advanced TechnologyIndian Institute of Tropical MeteorologyMinistry of Earth SciencesNOAA/Physical Sciences LaboratoryCIRESJackson School of GeosciencesPrecipitation governs Earth's hydroclimate, and its daily spatiotemporal fluctuations have major socioeconomic effects. Advances in Numerical weather prediction (NWP) have been measured by the improvement of forecasts for various physical fields such as temperature and pressure; however, large biases exist in precipitation prediction. We augment the output of the well-known NWP model CFSv2 with deep learning to create a hybrid model that improves short-range global precipitation at 1-, 2-, and 3-day lead times. To hybridise, we address the sphericity of the global data by using modified DLWP-CS architecture which transforms all the fields to cubed-sphere projection. Dynamical model precipitation and surface temperature outputs are fed into a modified DLWP-CS (UNET) to forecast ground truth precipitation. While CFSv2's average bias is +5 to +7 mm/day over land, the multivariate deep learning model decreases it to within -1 to +1 mm/day. Hurricane Katrina in 2005, Hurricane Ivan in 2004, China floods in 2010, India floods in 2005, and Myanmar storm Nargis in 2008 are used to confirm the substantial enhancement in the skill for the hybrid dynamical-deep learning model. CFSv2 typically shows a moderate to large bias in the spatial pattern and overestimates the precipitation at short-range time scales. The proposed deep learning augmented NWP model can address these biases and vastly improve the spatial pattern and magnitude of predicted precipitation. Deep learning enhanced CFSv2 reduces mean bias by 8x over important land regions for 1 day lead compared to CFSv2. The spatio-temporal deep learning system opens pathways to further the precision and accuracy in global short-range precipitation forecasts.

Clustering is a fundamental task in machine learning. One of the most successful and broadly used algorithms is DBSCAN, a density-based clustering algorithm. DBSCAN requires ϵ-nearest neighbor graphs of the input dataset, which are computed with range-search algorithms and spatial data structures like KD-trees. Despite many efforts to design scalable implementations for DBSCAN, existing work is limited to low-dimensional datasets, as constructing ϵ-nearest neighbor graphs is expensive in high-dimensions. In this paper, we modify DBSCAN to enable use of κ-nearest neighbor graphs of the input dataset. The κ-nearest neighbor graphs are constructed using approximate algorithms based on randomized projections. Although these algorithms can become inaccurate or expensive in high-dimensions, they possess a much lower memory overhead than constructing ϵ-nearest neighbor graphs. We delineate the conditions under which kNN-DBSCAN produces the same clustering as DBSCAN. We also present an efficient parallel implementation of the overall algorithm using OpenMP for shared memory and MPI for distributed memory parallelism. We present results on up to 16 billion points in 20 dimensions, and perform weak and strong scaling studies using synthetic data. Our code is efficient in both low and high dimensions. We can cluster one billion points in 3D in less than one second on 28K cores on the Frontera system at the Texas Advanced Computing Center (TACC). In our largest run, we cluster 65 billion points in 20 dimensions in less than 40 seconds using 114,688 x86 cores on TACC's Frontera system. Also, we compare with a state of the art parallel DBSCAN code; on 20d/4M point dataset, our code is up to 37× faster.

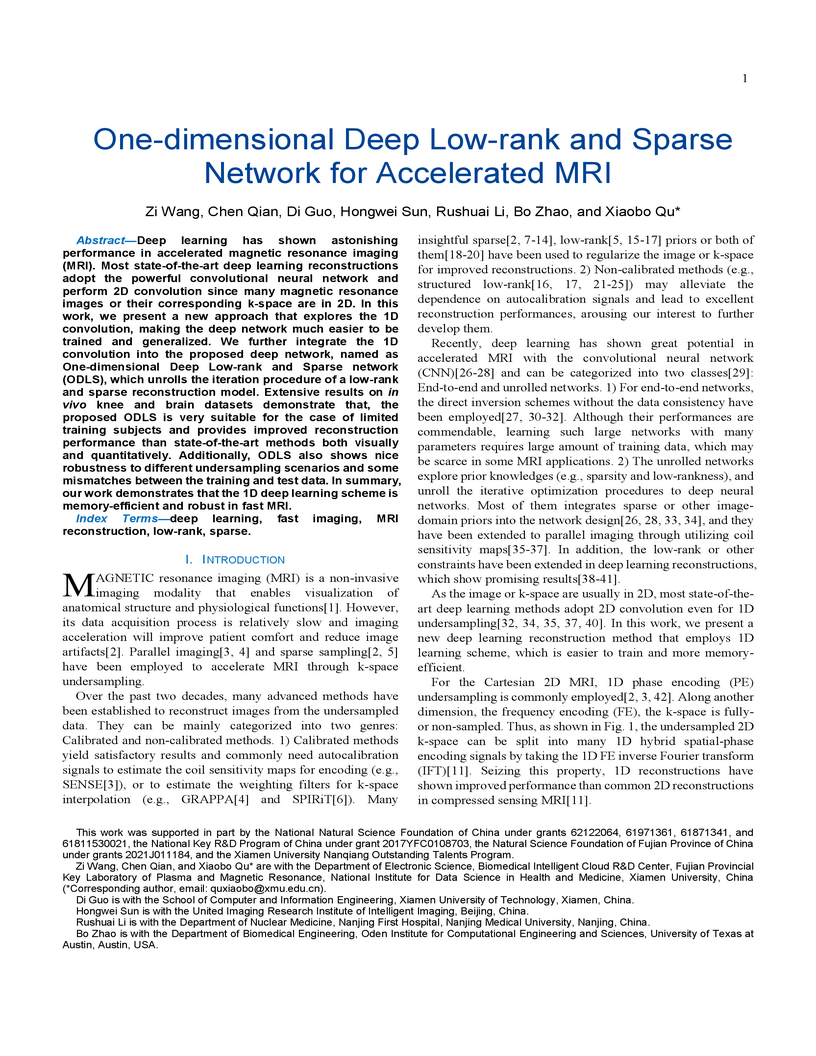

Deep learning has shown astonishing performance in accelerated magnetic resonance imaging (MRI). Most state-of-the-art deep learning reconstructions adopt the powerful convolutional neural network and perform 2D convolution since many magnetic resonance images or their corresponding k-space are in 2D. In this work, we present a new approach that explores the 1D convolution, making the deep network much easier to be trained and generalized. We further integrate the 1D convolution into the proposed deep network, named as One-dimensional Deep Low-rank and Sparse network (ODLS), which unrolls the iteration procedure of a low-rank and sparse reconstruction model. Extensive results on in vivo knee and brain datasets demonstrate that, the proposed ODLS is very suitable for the case of limited training subjects and provides improved reconstruction performance than state-of-the-art methods both visually and quantitatively. Additionally, ODLS also shows nice robustness to different undersampling scenarios and some mismatches between the training and test data. In summary, our work demonstrates that the 1D deep learning scheme is memory-efficient and robust in fast MRI.

04 Aug 2020

We present a method for converting tensors into tensor train format based on

actions of the tensor as a vector-valued multilinear function. Existing methods

for constructing tensor trains require access to "array entries" of the tensor

and are therefore inefficient or computationally prohibitive if the tensor is

accessible only through its action, especially for high order tensors. Our

method permits efficient tensor train compression of large high order

derivative tensors for nonlinear mappings that are implicitly defined through

the solution of a system of equations. Array entries of these derivative

tensors are not directly accessible, but actions of these tensors can be

computed efficiently via a procedure that we discuss. Such tensors are often

amenable to tensor train compression in theory, but until now no efficient

algorithm existed to convert them into tensor train format. We demonstrate our

method by compressing a Hilbert tensor of size $41 \times 42 \times 43 \times

44 \times 45,andbyforminghighorder(upto5^\text{th}$ order

derivatives/6th order tensors) Taylor series surrogates of the

noise-whitened parameter-to-output map for a stochastic partial differential

equation with boundary output.

30 Jul 2020

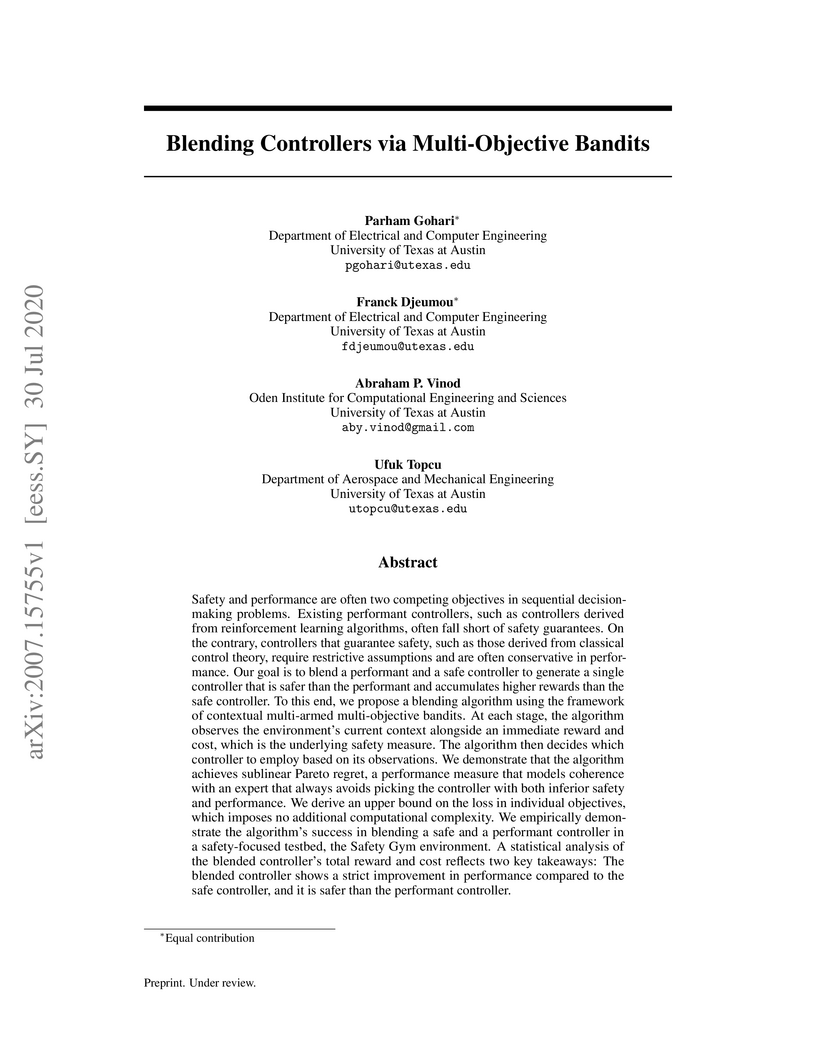

Safety and performance are often two competing objectives in sequential

decision-making problems. Existing performant controllers, such as controllers

derived from reinforcement learning algorithms, often fall short of safety

guarantees. On the contrary, controllers that guarantee safety, such as those

derived from classical control theory, require restrictive assumptions and are

often conservative in performance. Our goal is to blend a performant and a safe

controller to generate a single controller that is safer than the performant

and accumulates higher rewards than the safe controller. To this end, we

propose a blending algorithm using the framework of contextual multi-armed

multi-objective bandits. At each stage, the algorithm observes the

environment's current context alongside an immediate reward and cost, which is

the underlying safety measure. The algorithm then decides which controller to

employ based on its observations. We demonstrate that the algorithm achieves

sublinear Pareto regret, a performance measure that models coherence with an

expert that always avoids picking the controller with both inferior safety and

performance. We derive an upper bound on the loss in individual objectives,

which imposes no additional computational complexity. We empirically

demonstrate the algorithm's success in blending a safe and a performant

controller in a safety-focused testbed, the Safety Gym environment. A

statistical analysis of the blended controller's total reward and cost reflects

two key takeaways: The blended controller shows a strict improvement in

performance compared to the safe controller, and it is safer than the

performant controller.

We explore using neural operators, or neural network representations of

nonlinear maps between function spaces, to accelerate infinite-dimensional

Bayesian inverse problems (BIPs) with models governed by nonlinear parametric

partial differential equations (PDEs). Neural operators have gained significant

attention in recent years for their ability to approximate the

parameter-to-solution maps defined by PDEs using as training data solutions of

PDEs at a limited number of parameter samples. The computational cost of BIPs

can be drastically reduced if the large number of PDE solves required for

posterior characterization are replaced with evaluations of trained neural

operators. However, reducing error in the resulting BIP solutions via reducing

the approximation error of the neural operators in training can be challenging

and unreliable. We provide an a priori error bound result that implies certain

BIPs can be ill-conditioned to the approximation error of neural operators,

thus leading to inaccessible accuracy requirements in training. To reliably

deploy neural operators in BIPs, we consider a strategy for enhancing the

performance of neural operators, which is to correct the prediction of a

trained neural operator by solving a linear variational problem based on the

PDE residual. We show that a trained neural operator with error correction can

achieve a quadratic reduction of its approximation error, all while retaining

substantial computational speedups of posterior sampling when models are

governed by highly nonlinear PDEs. The strategy is applied to two numerical

examples of BIPs based on a nonlinear reaction--diffusion problem and

deformation of hyperelastic materials. We demonstrate that posterior

representations of the two BIPs produced using trained neural operators are

greatly and consistently enhanced by error correction.

Unmeasured causal forces influence diverse experimental time series, such as

the transcription factors that regulate genes, or the descending neurons that

steer motor circuits. Combining the theory of skew-product dynamical systems

with topological data analysis, we show that simultaneous recurrence events

across multiple time series reveal the structure of their shared unobserved

driving signal. We introduce a physics-based unsupervised learning algorithm

that reconstructs causal drivers by iteratively building a recurrence graph

with glass-like structure. As the amount of data increases, a percolation

transition on this graph leads to weak ergodicity breaking for random walks --

revealing the shared driver's dynamics, even from strongly-corrupted

measurements. We relate reconstruction accuracy to the rate of information

transfer from a chaotic driver to the response systems, and we find that

effective reconstruction proceeds through gradual approximation of the driver's

dynamical attractor. Through extensive benchmarks against classical signal

processing and machine learning techniques, we demonstrate our method's ability

to extract causal drivers from diverse experimental datasets spanning ecology,

genomics, fluid dynamics, and physiology.

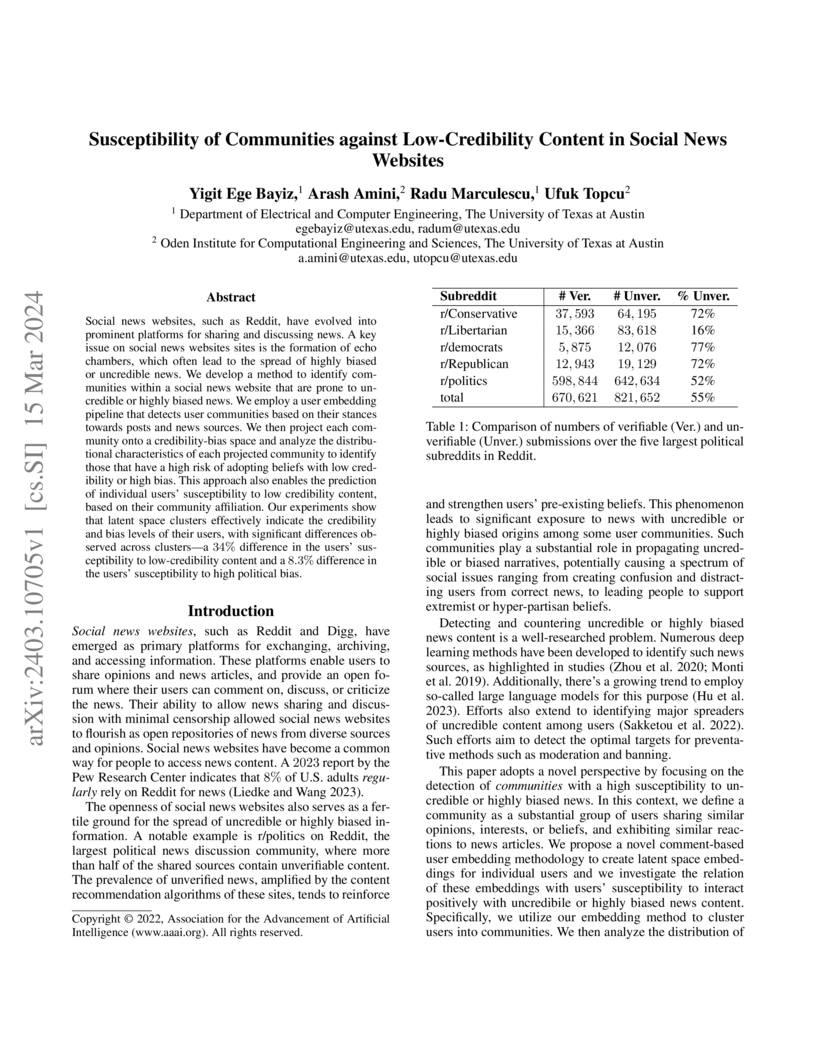

Social news websites, such as Reddit, have evolved into prominent platforms for sharing and discussing news. A key issue on social news websites sites is the formation of echo chambers, which often lead to the spread of highly biased or uncredible news. We develop a method to identify communities within a social news website that are prone to uncredible or highly biased news. We employ a user embedding pipeline that detects user communities based on their stances towards posts and news sources. We then project each community onto a credibility-bias space and analyze the distributional characteristics of each projected community to identify those that have a high risk of adopting beliefs with low credibility or high bias. This approach also enables the prediction of individual users' susceptibility to low credibility content, based on their community affiliation. Our experiments show that latent space clusters effectively indicate the credibility and bias levels of their users, with significant differences observed across clusters -- a 34% difference in the users' susceptibility to low-credibility content and a 8.3% difference in the users' susceptibility to high political bias.

User Manual for the hp3D Finite Element Software, available on GitHub at this https URL

The curse of dimensionality is a longstanding challenge in Bayesian inference in high dimensions. In this work, we propose a projected Stein variational gradient descent (pSVGD) method to overcome this challenge by exploiting the fundamental property of intrinsic low dimensionality of the data informed subspace stemming from ill-posedness of such problems. We adaptively construct the subspace using a gradient information matrix of the log-likelihood, and apply pSVGD to the much lower-dimensional coefficients of the parameter projection. The method is demonstrated to be more accurate and efficient than SVGD. It is also shown to be more scalable with respect to the number of parameters, samples, data points, and processor cores via experiments with parameters dimensions ranging from the hundreds to the tens of thousands.

We develop a data-driven framework for learning and correcting non-autonomous vehicle dynamics. Physics-based vehicle models are often simplified for tractability and therefore exhibit inherent model-form uncertainty, motivating the need for data-driven correction. Moreover, non-autonomous dynamics are governed by time-dependent control inputs, which pose challenges in learning predictive models directly from temporal snapshot data. To address these, we reformulate the vehicle dynamics via a local parameterization of the time-dependent inputs, yielding a modified system composed of a sequence of local parametric dynamical systems. We approximate these parametric systems using two complementary approaches. First, we employ the DRIPS (dimension reduction and interpolation in parameter space) methodology to construct efficient linear surrogate models, equipped with lifted observable spaces and manifold-based operator interpolation. This enables data-efficient learning of vehicle models whose dynamics admit accurate linear representations in the lifted spaces. Second, for more strongly nonlinear systems, we employ FML (Flow Map Learning), a deep neural network approach that approximates the parametric evolution map without requiring special treatment of nonlinearities. We further extend FML with a transfer-learning-based model correction procedure, enabling the correction of misspecified prior models using only a sparse set of high-fidelity or experimental measurements, without assuming a prescribed form for the correction term. Through a suite of numerical experiments on unicycle, simplified bicycle, and slip-based bicycle models, we demonstrate that DRIPS offers robust and highly data-efficient learning of non-autonomous vehicle dynamics, while FML provides expressive nonlinear modeling and effective correction of model-form errors under severe data scarcity.

14 Jun 2024

Richards equation is often used to represent two-phase fluid flow in an

unsaturated porous medium when one phase is much heavier and more viscous than

the other. However, it cannot describe the fully saturated flow for some

capillary functions without specialized treatment due to degeneracy in the

capillary pressure term. Mathematically, gravity-dominated variably saturated

flows are interesting because their governing partial differential equation

switches from hyperbolic in the unsaturated region to elliptic in the saturated

region. Moreover, the presence of wetting fronts introduces strong spatial

gradients often leading to numerical instability. In this work, we develop a

robust, multidimensional mathematical model and implement a well-known

efficient and conservative numerical method for such variably saturated flow in

the limit of negligible capillary forces. The elliptic problem in saturated

regions is integrated efficiently into our framework by solving a reduced

system corresponding only to the saturated cells using fixed head boundary

conditions in the unsaturated cells. In summary, this coupled

hyperbolic-elliptic PDE framework provides an efficient, physics-based

extension of the hyperbolic Richards equation to simulate fully saturated

regions. Finally, we provide a suite of easy-to-implement yet challenging

benchmark test problems involving saturated flows in one and two dimensions.

These simple problems, accompanied by their corresponding analytical solutions,

can prove to be pivotal for the code verification, model validation (V&V) and

performance comparison of simulators for variably saturated flow. Our numerical

solutions show an excellent comparison with the analytical results for the

proposed problems. The last test problem on two-dimensional infiltration in a

stratified, heterogeneous soil shows the formation and evolution of multiple

disconnected saturated regions.

21 May 2024

Halide perovskites emerged as a revolutionary family of high-quality

semiconductors for solar energy harvesting and energy-efficient lighting. There

is mounting evidence that the exceptional optoelectronic properties of these

materials could stem from unconventional electron-phonon couplings, and it has

been suggested that the formation of polarons and self-trapped excitons could

be key to understanding such properties. By performing first-principles

simulations with unprecedented detail across the length scales, here we show

that halide perovskites harbor a uniquely rich variety of polaronic species,

including small polarons, large polarons, and charge density waves, and we

explain a variety of experimental observations. We find that these emergent

quasiparticles support topologically nontrivial phonon fields with quantized

topological charge, making them the first non-magnetic analog of the helical

Bloch points found in magnetic skyrmion lattices.

06 Sep 2022

We address the solution of large-scale Bayesian optimal experimental design

(OED) problems governed by partial differential equations (PDEs) with

infinite-dimensional parameter fields. The OED problem seeks to find sensor

locations that maximize the expected information gain (EIG) in the solution of

the underlying Bayesian inverse problem. Computation of the EIG is usually

prohibitive for PDE-based OED problems. To make the evaluation of the EIG

tractable, we approximate the (PDE-based) parameter-to-observable map with a

derivative-informed projected neural network (DIPNet) surrogate, which exploits

the geometry, smoothness, and intrinsic low-dimensionality of the map using a

small and dimension-independent number of PDE solves. The surrogate is then

deployed within a greedy algorithm-based solution of the OED problem such that

no further PDE solves are required. We analyze the EIG approximation error in

terms of the generalization error of the DIPNet and show they are of the same

order. Finally, the efficiency and accuracy of the method are demonstrated via

numerical experiments on OED problems governed by inverse scattering and

inverse reactive transport with up to 16,641 uncertain parameters and 100

experimental design variables, where we observe up to three orders of magnitude

speedup relative to a reference double loop Monte Carlo method.

22 Jul 2024

We present a scalable and efficient framework for the inference of

spatially-varying parameters of continuum materials from image observations of

their deformations. Our goal is the nondestructive identification of arbitrary

damage, defects, anomalies and inclusions without knowledge of their morphology

or strength. Since these effects cannot be directly observed, we pose their

identification as an inverse problem. Our approach builds on integrated digital

image correlation (IDIC, Besnard Hild, Roux, 2006), which poses the image

registration and material inference as a monolithic inverse problem, thereby

enforcing physical consistency of the image registration using the governing

PDE. Existing work on IDIC has focused on low-dimensional parameterizations of

materials. In order to accommodate the inference of heterogeneous material

propertes that are formally infinite dimensional, we present ∞-IDIC, a

general formulation of the PDE-constrained coupled image registration and

inversion posed directly in the function space setting. This leads to several

mathematical and algorithmic challenges arising from the ill-posedness and high

dimensionality of the inverse problem. To address ill-posedness, we consider

various regularization schemes, namely H1 and total variation for the

inference of smooth and sharp features, respectively. To address the

computational costs associated with the discretized problem, we use an

efficient inexact-Newton CG framework for solving the regularized inverse

problem. In numerical experiments, we demonstrate the ability of ∞-IDIC

to characterize complex, spatially varying Lam\'e parameter fields of linear

elastic and hyperelastic materials. Our method exhibits (i) the ability to

recover fine-scale and sharp material features, (ii) mesh-independent

convergence performance and hyperparameter selection, (iii) robustness to

observational noise.

There are no more papers matching your filters at the moment.