Ask or search anything...

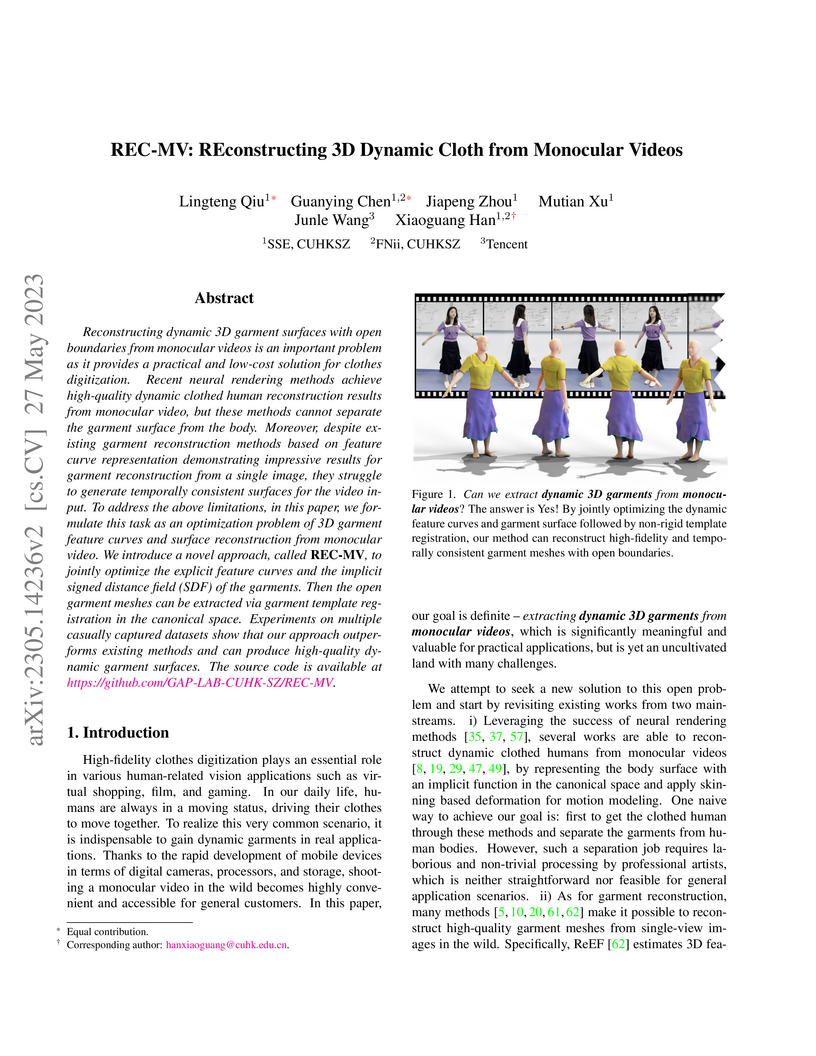

GSFixer enhances 3D Gaussian Splatting reconstruction quality from sparse input views by introducing a reference-guided video diffusion model. This method leverages both 2D semantic and 3D geometric features to achieve superior visual fidelity and 3D consistency, quantitatively improving PSNR by 2.16 dB on artifact restoration and 3.55 dB for 3-view input over prior generative approaches.

View blogResearchers at The Chinese University of Hong Kong, Shenzhen, developed TASTE-Rob, a large-scale dataset, and a three-stage pose-refinement pipeline to generate high-fidelity, task-oriented hand-object interaction videos. This approach achieved state-of-the-art video generation quality with FVD 9.43, reduced grasping pose classification error from 67.8% to 9.7%, and improved robotic manipulation success rates from 84% to 96%.

View blogA new benchmark, MME-VideoOCR, evaluates Multimodal Large Language Models (MLLMs) on their OCR-based capabilities within dynamic video scenarios. This benchmark, comprising 1,464 videos and 2,000 human-annotated QA pairs, reveals that state-of-the-art MLLMs achieve only up to 73.7% accuracy, particularly struggling with cross-frame information integration and exhibiting a strong language prior bias.

View blogScene-R1 introduces a video-grounded large language model capable of 3D scene reasoning by leveraging 2D foundation models and reinforcement learning, thereby eliminating the need for costly 3D annotations. The model generates explicit chain-of-thought rationales, enhancing interpretability, and outperforms label-free baselines on 3D visual grounding and Visual Question Answering tasks.

View blogVideoMV, developed by researchers from Alibaba Group's Institute for Intelligent Computing and collaborators, presents a framework for consistent multi-view image generation by leveraging pre-trained video generative models. The method fine-tunes these models and integrates a novel 3D-aware denoising sampling strategy, drastically reducing training time to 4 GPU hours while producing high-quality, consistent 24-view images that surpass prior techniques.

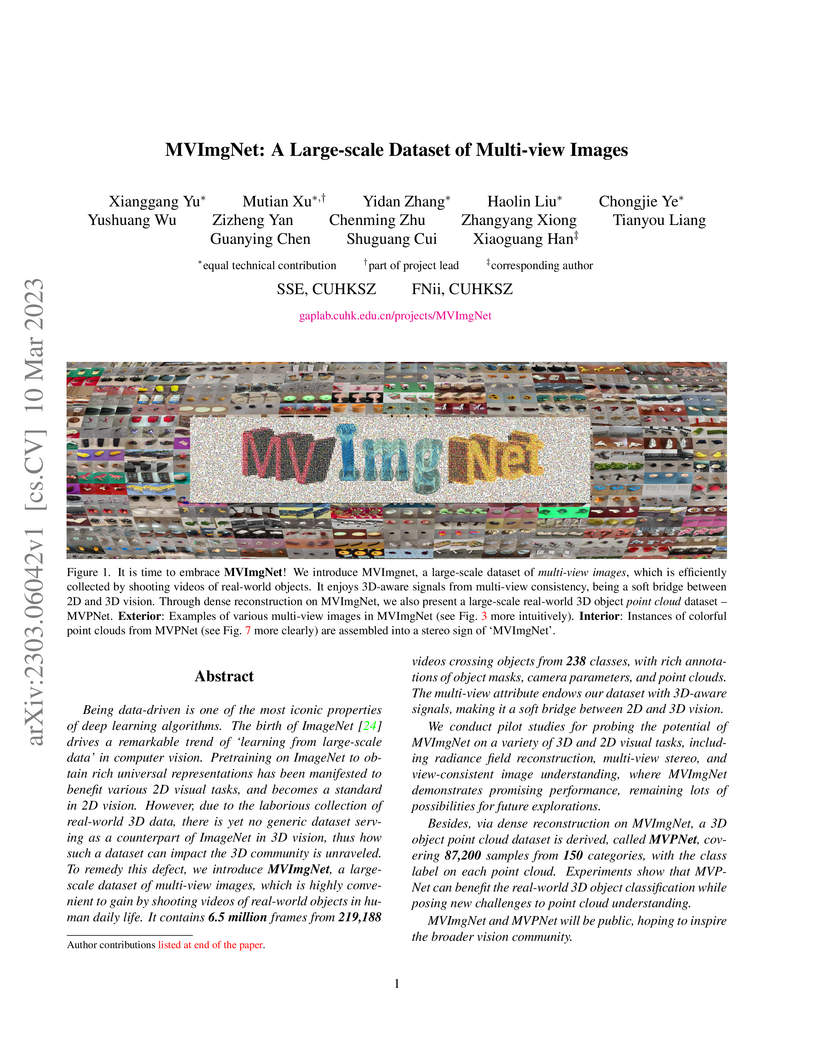

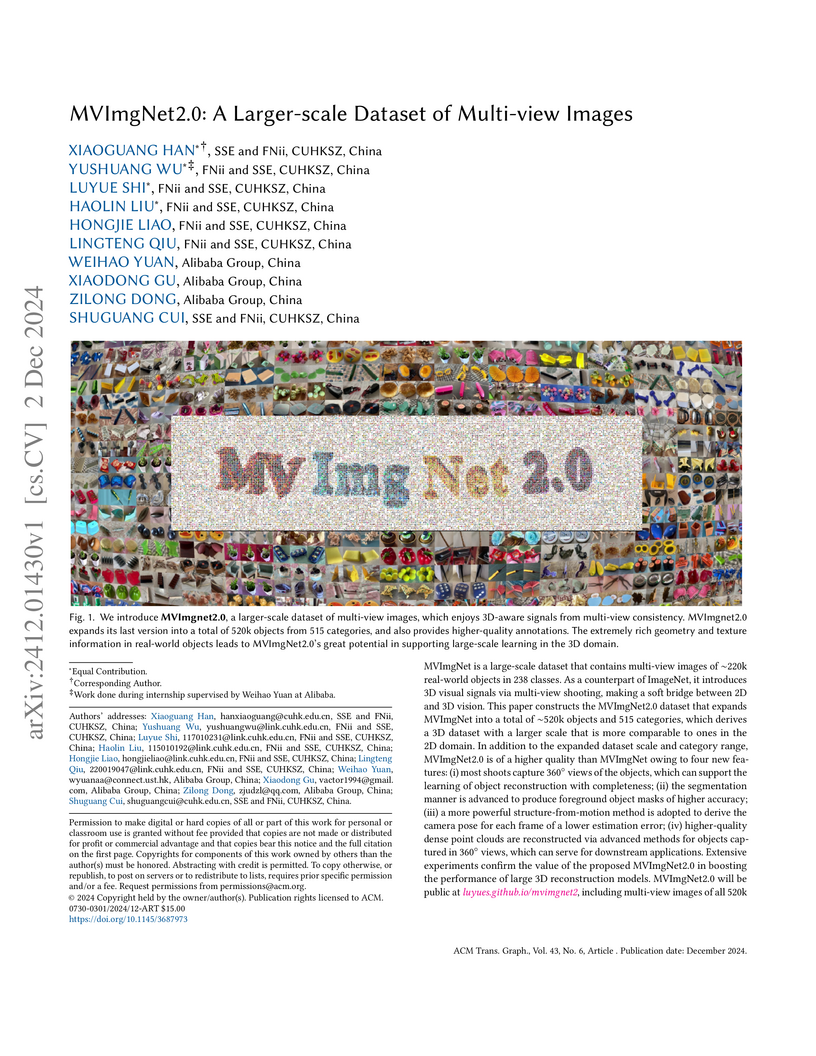

View blogThis work introduces MVImgNet, a large-scale dataset of 6.5 million multi-view images extracted from 219,188 videos covering 238 object classes, and MVPNet, a derived 3D point cloud dataset. It provides rich annotations including camera parameters and 3D information, demonstrating improvements in novel view synthesis, multi-view stereo, and view-consistent image understanding through pretraining.

View blogRichDreamer introduces a generalizable Normal-Depth diffusion model and a depth-conditioned albedo diffusion model to produce detail-rich 3D content from text prompts. This approach achieves superior geometric fidelity and disentangled appearance properties for accurate relighting, establishing a new state-of-the-art in text-to-3D generation.

View blogThis research introduces the Heterogeneous Value Alignment Evaluation (HVAE) system, an automated framework to assess how large language models (LLMs) align with diverse human values using the Social Value Orientation (SVO) framework. The evaluation of mainstream LLMs revealed a general propensity towards neutral values and varying performance based on model architecture and fine-tuning methods.

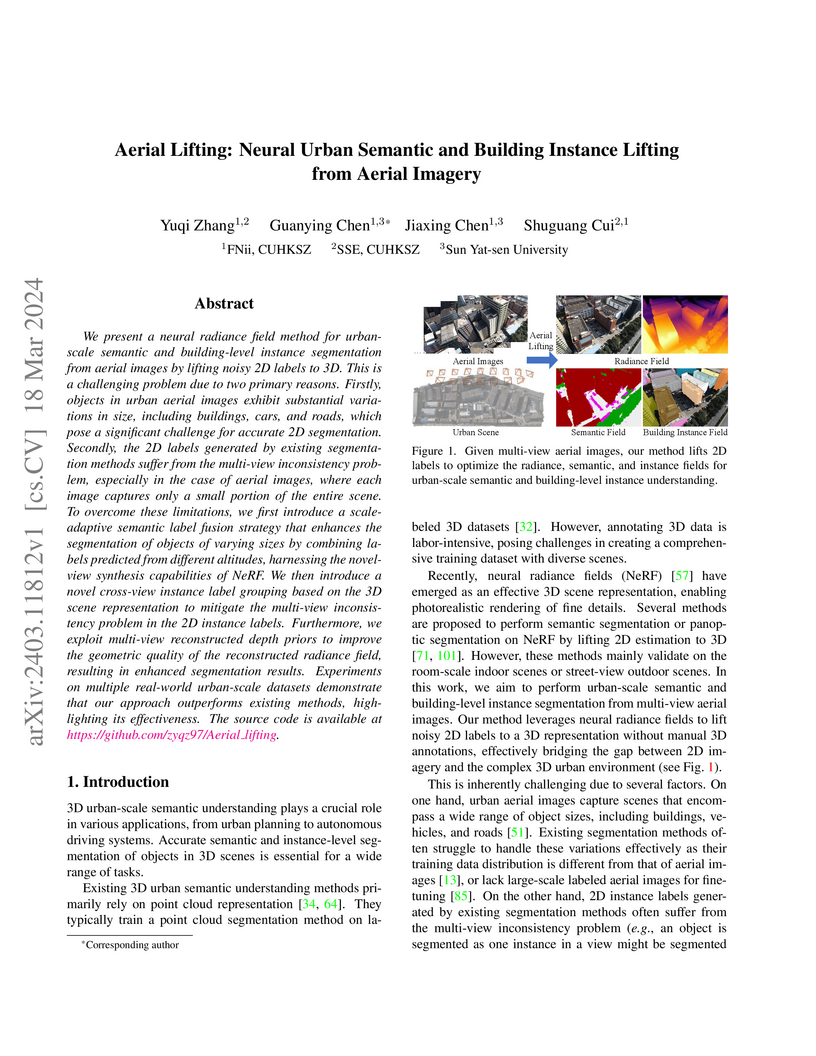

View blogThis paper presents "Aerial Lifting," a method for achieving urban-scale semantic and building-level instance segmentation directly from multi-view aerial images by lifting noisy 2D labels to a 3D neural radiance field. The approach employs scale-adaptive semantic label fusion and a cross-view instance label grouping strategy to address the challenges of scale variation and multi-view inconsistencies in 2D labels, demonstrating improved 3D semantic and instance segmentation accuracy on urban datasets.

View blog

Alibaba Group

Alibaba Group Fudan University

Fudan University

International Digital Economy Academy (IDEA)

International Digital Economy Academy (IDEA)

Sun Yat-Sen University

Sun Yat-Sen University

Nanjing University

Nanjing University

University of Michigan

University of Michigan Peking University

Peking University

Tencent

Tencent