San Jose State University

T2VWorldBench introduces a framework for evaluating how well text-to-video models integrate world knowledge into generated content. The evaluation of ten state-of-the-art models reveals a common struggle to embed factual accuracy, commonsense reasoning, and causal relationships into videos, particularly for abstract knowledge domains.

A theoretical foundation is established for Second-Order MeanFlow, a generative model that integrates average acceleration into its dynamics while preserving efficient single-step sampling. The work, from Wyoming Seminary, San Jose State University, and UC Berkeley, also classifies the model's expressivity within the TC0 circuit complexity class and demonstrates how inference can be accelerated to O(n^(2+o(1))) with bounded approximation error.

We analyze pole skipping in two-dimensional field theories perturbed away from conformality by a relevant deformation. The leading-order correction to the retarded Green's function can be formally computed in conformal perturbation theory, though it results in singular expressions. We suggest a natural interpretation of these expressions and compute the resulting Green's function to leading nontrivial order in the deformation. As a check of our results, we compare the skipped poles we find to the butterfly velocity obtained from a holographic gravitational dual perturbed by a massive scalar field; we find precise agreement. We comment on extensions to subleading order, where agreement with holographic expectations would no longer be expected.

15 Oct 2025

Large Language Models (LLMs) suffer significant performance degradation in multi-turn conversations when information is presented incrementally. Given that multi-turn conversations characterize everyday interactions with LLMs, this degradation poses a severe challenge to real world usability. We hypothesize that abrupt increases in model uncertainty signal misalignment in multi-turn LLM interactions, and we exploit this insight to dynamically realign conversational context. We introduce ERGO (Entropy-guided Resetting for Generation Optimization), which continuously quantifies internal uncertainty via Shannon entropy over next token distributions and triggers adaptive prompt consolidation when a sharp spike in entropy is detected. By treating uncertainty as a first class signal rather than a nuisance to eliminate, ERGO embraces variability in language and modeling, representing and responding to uncertainty. In multi-turn tasks with incrementally revealed instructions, ERGO yields a 56.6% average performance gain over standard baselines, increases aptitude (peak performance capability) by 24.7%, and decreases unreliability (variability in performance) by 35.3%, demonstrating that uncertainty aware interventions can improve both accuracy and reliability in conversational AI.

06 Oct 2025

Recently, Liao and Qin [J. Fluid Mech. 1008, R2 (2025)] claimed that numerical noise in direct numerical simulation of turbulence using the deterministic Navier-Stokes equations is "approximately equivalent" to the physical noise arising from random molecular motion (thermal fluctuations). We show here that it this claim not supported by their results and that it contradicts other results in the literature. Furthermore, we demonstrate that the numerical implementation of thermal fluctuations in their so-called "clean numerical simulations" is incorrect.

25 Jan 2024

The web serves as a global repository of knowledge, used by billions of people to search for information. Ensuring that users receive the most relevant and up-to-date information, especially in the presence of multiple versions of web content from different time points remains a critical challenge for information retrieval. This challenge has recently been compounded by the increased use of question answering tools trained on Wikipedia or web content and powered by large language models (LLMs) which have been found to make up information (or hallucinate), and in addition have been shown to struggle with the temporal dimensions of information. Even Retriever Augmented Language Models (RALMs) which incorporate a document database to reduce LLM hallucination are unable to handle temporal queries correctly. This leads to instances where RALMs respond to queries such as "Who won the Wimbledon Championship?", by retrieving document passages related to Wimbledon but without the ability to differentiate between them based on how recent they are.

In this paper, we propose and evaluate, TempRALM, a temporally-aware Retriever Augmented Language Model (RALM) with few-shot learning extensions, which takes into account both semantically and temporally relevant documents relative to a given query, rather than relying on semantic similarity alone. We show that our approach results in up to 74% improvement in performance over the baseline RALM model, without requiring model pre-training, recalculating or replacing the RALM document index, or adding other computationally intensive elements.

05 Aug 2025

This educational book outlines the fundamental scientific and engineering principles behind trapped ion quantum computers, focusing on how these systems meet the essential criteria for practical quantum computation. It details the physical mechanisms of qubit realization, trapping, cooling, and gate operations using the Ytterbium-171 ion as a concrete example.

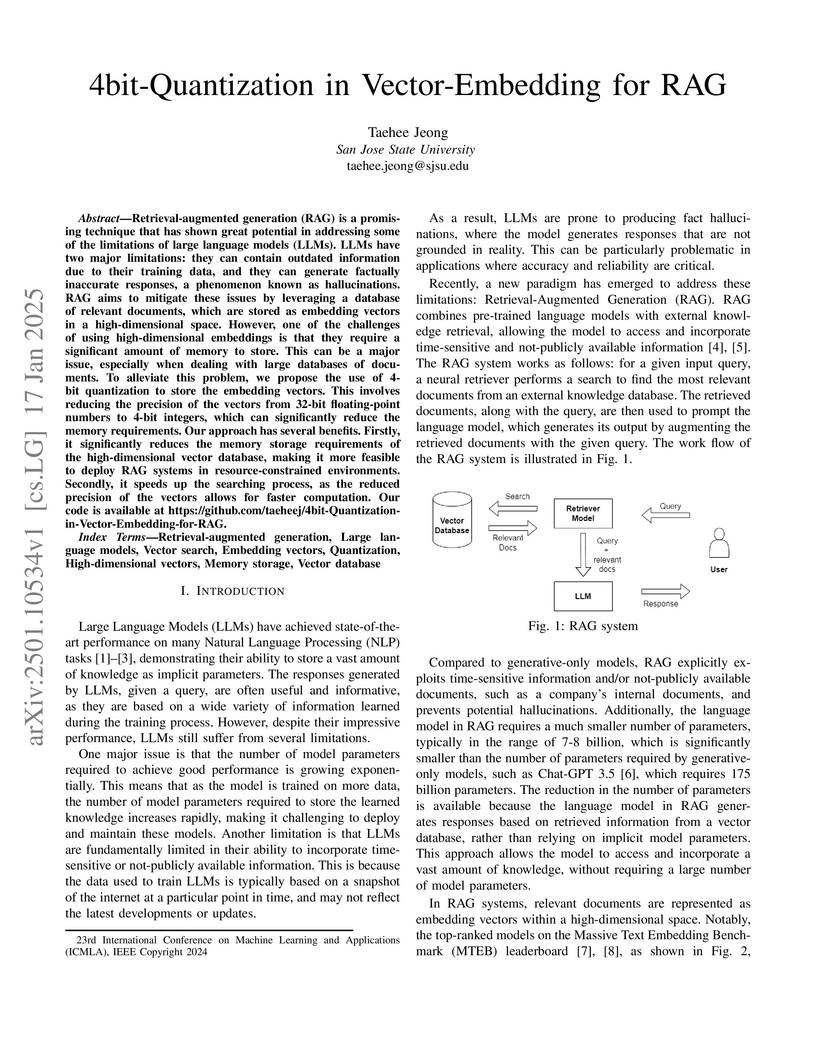

This paper investigates 4-bit quantization for vector embeddings in Retrieval-Augmented Generation (RAG) systems to reduce memory consumption. The research demonstrates that group-wise 4-bit quantization can decrease memory requirements by eight times compared to 32-bit floating-point while maintaining acceptable information retrieval accuracy and outperforming Product Quantization.

11 Jan 2025

Molecular fluctuations profoundly inhibit spatio-temporal intermittency and modify the energy spectrum in compressible turbulence. The study demonstrates that these microscopic effects lead to more Gaussian-like statistics and introduce a thermal energy crossover scale at wavelengths approximately three times larger than the Kolmogorov length scale.

The goal of this expository paper is to present the basics of geometric control theory suitable for advanced undergraduate or beginning graduate students with a solid background in advanced calculus and ordinary differential equations.

Logging is a critical function in modern distributed applications, but the lack of standardization in log query languages and formats creates significant challenges. Developers currently must write ad hoc queries in platform-specific languages, requiring expertise in both the query language and application-specific log details -- an impractical expectation given the variety of platforms and volume of logs and applications. While generating these queries with large language models (LLMs) seems intuitive, we show that current LLMs struggle with log-specific query generation due to the lack of exposure to domain-specific knowledge. We propose a novel natural language (NL) interface to address these inconsistencies and aide log query generation, enabling developers to create queries in a target log query language by providing NL inputs. We further introduce ~\textbf{NL2QL}, a manually annotated, real-world dataset of natural language questions paired with corresponding LogQL queries spread across three log formats, to promote the training and evaluation of NL-to-loq query systems. Using NL2QL, we subsequently fine-tune and evaluate several state of the art LLMs, and demonstrate their improved capability to generate accurate LogQL queries. We perform further ablation studies to demonstrate the effect of additional training data, and the transferability across different log formats. In our experiments, we find up to 75\% improvement of finetuned models to generate LogQL queries compared to non finetuned models.

This research compares large language model (LLM) fine-tuning methods, including Quantized Low Rank Adapter (QLoRA), Retrieval Augmented fine-tuning (RAFT), and Reinforcement Learning from Human Feedback (RLHF), and additionally compared LLM evaluation methods including End to End (E2E) benchmark method of "Golden Answers", traditional natural language processing (NLP) metrics, RAG Assessment (Ragas), OpenAI GPT-4 evaluation metrics, and human evaluation, using the travel chatbot use case. The travel dataset was sourced from the the Reddit API by requesting posts from travel-related subreddits to get travel-related conversation prompts and personalized travel experiences, and augmented for each fine-tuning method. We used two pretrained LLMs utilized for fine-tuning research: LLaMa 2 7B, and Mistral 7B. QLoRA and RAFT are applied to the two pretrained models. The inferences from these models are extensively evaluated against the aforementioned metrics. The best model according to human evaluation and some GPT-4 metrics was Mistral RAFT, so this underwent a Reinforcement Learning from Human Feedback (RLHF) training pipeline, and ultimately was evaluated as the best model. Our main findings are that: 1) quantitative and Ragas metrics do not align with human evaluation, 2) Open AI GPT-4 evaluation most aligns with human evaluation, 3) it is essential to keep humans in the loop for evaluation because, 4) traditional NLP metrics insufficient, 5) Mistral generally outperformed LLaMa, 6) RAFT outperforms QLoRA, but still needs postprocessing, 7) RLHF improves model performance significantly. Next steps include improving data quality, increasing data quantity, exploring RAG methods, and focusing data collection on a specific city, which would improve data quality by narrowing the focus, while creating a useful product.

UCLA

UCLA MetaWashington State UniversitySan Jose State UniversityNational Institute of Technology SilcharIndian Institute of Information Technology GuwahatiIndraprastha Institute of Information Technology DelhiVishwakarma Institute of Information TechnologyAmazon AIBITS Pilani Hyderabad CampusKalyani Government Engineering CollegeAI Institute University of South CarolinaGandhi Institute for Technological AdvancementBirla Institute of Technology and Science Pilani Goa

MetaWashington State UniversitySan Jose State UniversityNational Institute of Technology SilcharIndian Institute of Information Technology GuwahatiIndraprastha Institute of Information Technology DelhiVishwakarma Institute of Information TechnologyAmazon AIBITS Pilani Hyderabad CampusKalyani Government Engineering CollegeAI Institute University of South CarolinaGandhi Institute for Technological AdvancementBirla Institute of Technology and Science Pilani GoaThe rapid advancement of large language models (LLMs) has led to increasingly human-like AI-generated text, raising concerns about content authenticity, misinformation, and trustworthiness. Addressing the challenge of reliably detecting AI-generated text and attributing it to specific models requires large-scale, diverse, and well-annotated datasets. In this work, we present a comprehensive dataset comprising over 58,000 text samples that combine authentic New York Times articles with synthetic versions generated by multiple state-of-the-art LLMs including Gemma-2-9b, Mistral-7B, Qwen-2-72B, LLaMA-8B, Yi-Large, and GPT-4-o. The dataset provides original article abstracts as prompts, full human-authored narratives. We establish baseline results for two key tasks: distinguishing human-written from AI-generated text, achieving an accuracy of 58.35\%, and attributing AI texts to their generating models with an accuracy of 8.92\%. By bridging real-world journalistic content with modern generative models, the dataset aims to catalyze the development of robust detection and attribution methods, fostering trust and transparency in the era of generative AI. Our dataset is available at: this https URL.

Recently, a considerable amount of malware research has focused on the use of powerful image-based machine learning techniques, which generally yield impressive results. However, before image-based techniques can be applied to malware, the samples must be converted to images, and there is no generally-accepted approach for doing so. The malware-to-image conversion strategies found in the literature often appear to be ad hoc, with little or no effort made to take into account properties of executable files. In this paper, we experiment with eight distinct malware-to-image conversion techniques, and for each, we test a variety of learning models. We find that several of these image conversion techniques perform similarly across a range of learning models, in spite of the image conversion processes being quite different. These results suggest that the effectiveness of image-based malware classification techniques may depend more on the inherent strengths of image analysis techniques, as opposed to the precise details of the image conversion strategy.

Managing the threat posed by malware requires accurate detection and

classification techniques. Traditional detection strategies, such as signature

scanning, rely on manual analysis of malware to extract relevant features,

which is labor intensive and requires expert knowledge. Function call graphs

consist of a set of program functions and their inter-procedural calls,

providing a rich source of information that can be leveraged to classify

malware without the labor intensive feature extraction step of traditional

techniques. In this research, we treat malware classification as a graph

classification problem. Based on Local Degree Profile features, we train a wide

range of Graph Neural Network (GNN) architectures to generate embeddings which

we then classify. We find that our best GNN models outperform previous

comparable research involving the well-known MalNet-Tiny Android malware

dataset. In addition, our GNN models do not suffer from the overfitting issues

that commonly afflict non-GNN techniques, although GNN models require longer

training times.

30 Apr 2025

These notes are an introduction to fluctuating hydrodynamics (FHD) and the

formulation of numerical schemes for the resulting stochastic partial

differential equations (PDEs). Fluctuating hydrodynamics was originally

introduced by Landau and Lifshitz as a way to put thermal fluctuations into a

continuum framework by including a stochastic forcing to each dissipative

transport process (e.g., heat flux). While FHD has been useful in modeling

transport and fluid dynamics at the mesoscopic scale, theoretical calculations

have been feasible only with simplifying assumptions. As such there is great

interest in numerical schemes for Computational Fluctuating Hydrodynamics

(CFHD). There are a variety of algorithms (e.g., spectral, finite element,

lattice Boltzmann) but in this introduction we focus on finite volume schemes.

Accompanying these notes is a demonstration program in Python available on

GitHub (this https URL).

As an intriguing case is the goodness of the machine and deep learning models generated by these LLMs in conducting automated scientific data analysis, where a data analyst may not have enough expertise in manually coding and optimizing complex deep learning models and codes and thus may opt to leverage LLMs to generate the required models. This paper investigates and compares the performance of the mainstream LLMs, such as ChatGPT, PaLM, LLama, and Falcon, in generating deep learning models for analyzing time series data, an important and popular data type with its prevalent applications in many application domains including financial and stock market. This research conducts a set of controlled experiments where the prompts for generating deep learning-based models are controlled with respect to sensitivity levels of four criteria including 1) Clarify and Specificity, 2) Objective and Intent, 3) Contextual Information, and 4) Format and Style. While the results are relatively mix, we observe some distinct patterns. We notice that using LLMs, we are able to generate deep learning-based models with executable codes for each dataset seperatly whose performance are comparable with the manually crafted and optimized LSTM models for predicting the whole time series dataset. We also noticed that ChatGPT outperforms the other LLMs in generating more accurate models. Furthermore, we observed that the goodness of the generated models vary with respect to the ``temperature'' parameter used in configuring LLMS. The results can be beneficial for data analysts and practitioners who would like to leverage generative AIs to produce good prediction models with acceptable goodness.

A new framework, STEAD, combines an X3D feature extractor, (2+1)D convolutions, and linear attention with a triplet loss to provide efficient, high-accuracy video anomaly detection for real-time applications. On the UCF-Crime dataset, STEAD-Base achieved 91.34% AUC, while STEAD-Fast reduced parameters by 99.70% over prior methods while maintaining competitive performance.

This work addresses the challenge of malware classification using machine learning by developing a novel dataset labeled at both the malware type and family levels. Raw binaries were collected from sources such as VirusShare, VX Underground, and MalwareBazaar, and subsequently labeled with family information parsed from binary names and type-level labels integrated from ClarAVy. The dataset includes 14 malware types and 17 malware families, and was processed using a unified feature extraction pipeline based on static analysis, particularly extracting features from Portable Executable headers, to support advanced classification tasks. The evaluation was focused on three key classification tasks. In the binary classification of malware versus benign samples, Random Forest and XGBoost achieved high accuracy on the full datasets, reaching 98.5% for type-based detection and 98.98% for family-based detection. When using truncated datasets of 1,000 samples to assess performance under limited data conditions, both models still performed strongly, achieving 97.6% for type-based detection and 98.66% for family-based detection. For interclass classification, which distinguishes between malware types or families, the models reached up to 97.5% accuracy on type-level tasks and up to 93.7% on family-level tasks. In the multiclass classification setting, which assigns samples to the correct type or family, SVM achieved 81.1% accuracy on type labels, while Random Forest and XGBoost reached approximately 73.4% on family labels. The results highlight practical trade-offs between accuracy and computational cost, and demonstrate that labeling at both the type and family levels enables more fine-grained and insightful malware classification. The work establishes a robust foundation for future research on advanced malware detection and classification.

There have been many recent advances in the fields of generative Artificial Intelligence (AI) and Large Language Models (LLM), with the Generative Pre-trained Transformer (GPT) model being a leading "chatbot." LLM-based chatbots have become so powerful that it may seem difficult to differentiate between human-written and machine-generated text. To analyze this problem, we have developed a new dataset consisting of more than 750,000 human-written paragraphs, with a corresponding chatbot-generated paragraph for each. Based on this dataset, we apply Machine Learning (ML) techniques to determine the origin of text (human or chatbot). Specifically, we consider two methodologies for tackling this issue: feature analysis and embeddings. Our feature analysis approach involves extracting a collection of features from the text for classification. We also explore the use of contextual embeddings and transformer-based architectures to train classification models. Our proposed solutions offer high classification accuracy and serve as useful tools for textual analysis, resulting in a better understanding of chatbot-generated text in this era of advanced AI technology.

There are no more papers matching your filters at the moment.