Shiraz University of Technology

10 Nov 2022

Requirement engineering (RE) is the first and the most important step in software production and development. The RE is aimed to specify software requirements. One of the tasks in RE is the categorization of software requirements as functional and non-functional requirements. The functional requirements (FR) show the responsibilities of the system while non-functional requirements represent the quality factors of software. Discrimination between FR and NFR is a challenging task. Nowadays Deep Learning (DL) has entered all fields of engineering and has increased accuracy and reduced time in their implementation process. In this paper, we use deep learning for the classification of software requirements. Five prominent DL algorithms are trained for classifying requirements. Also, two voting classification algorithms are utilized for creating ensemble classifiers based on five DL methods. The PURE, a repository of Software Requirement Specification (SRS) documents, is selected for our experiments. We created a dataset from PURE which contains 4661 requirements where 2617 requirements are functional and the remaining are non-functional. Our methods are applied to the dataset and their performance analysis is reported. The results show that the performance of deep learning models is satisfactory and the voting mechanisms provide better results.

31 May 2025

AI copilots represent a new generation of AI-powered systems designed to

assist users, particularly knowledge workers and developers, in complex,

context-rich tasks. As these systems become more embedded in daily workflows,

personalization has emerged as a critical factor for improving usability,

effectiveness, and user satisfaction. Central to this personalization is

preference optimization: the system's ability to detect, interpret, and align

with individual user preferences. While prior work in intelligent assistants

and optimization algorithms is extensive, their intersection within AI copilots

remains underexplored. This survey addresses that gap by examining how user

preferences are operationalized in AI copilots. We investigate how preference

signals are sourced, modeled across different interaction stages, and refined

through feedback loops. Building on a comprehensive literature review, we

define the concept of an AI copilot and introduce a taxonomy of preference

optimization techniques across pre-, mid-, and post-interaction phases. Each

technique is evaluated in terms of advantages, limitations, and design

implications. By consolidating fragmented efforts across AI personalization,

human-AI interaction, and language model adaptation, this work offers both a

unified conceptual foundation and a practical design perspective for building

user-aligned, persona-aware AI copilots that support end-to-end adaptability

and deployment.

In user-centric design, persona development plays a vital role in understanding user behaviour, capturing needs, segmenting audiences, and guiding design decisions. However, the growing complexity of user interactions calls for a more contextualized approach to ensure designs align with real user needs. While earlier studies have advanced persona classification by modelling user behaviour, capturing contextual information, especially by integrating textual and tabular data, remains a key challenge. These models also often lack explainability, leaving their predictions difficult to interpret or justify. To address these limitations, we present ExBigBang (Explainable BigBang), a hybrid text-tabular approach that uses transformer-based architectures to model rich contextual features for persona classification. ExBigBang incorporates metadata, domain knowledge, and user profiling to embed deeper context into predictions. Through a cyclical process of user profiling and classification, our approach dynamically updates to reflect evolving user behaviours. Experiments on a benchmark persona classification dataset demonstrate the robustness of our model. An ablation study confirms the benefits of combining text and tabular data, while Explainable AI techniques shed light on the rationale behind the model's predictions.

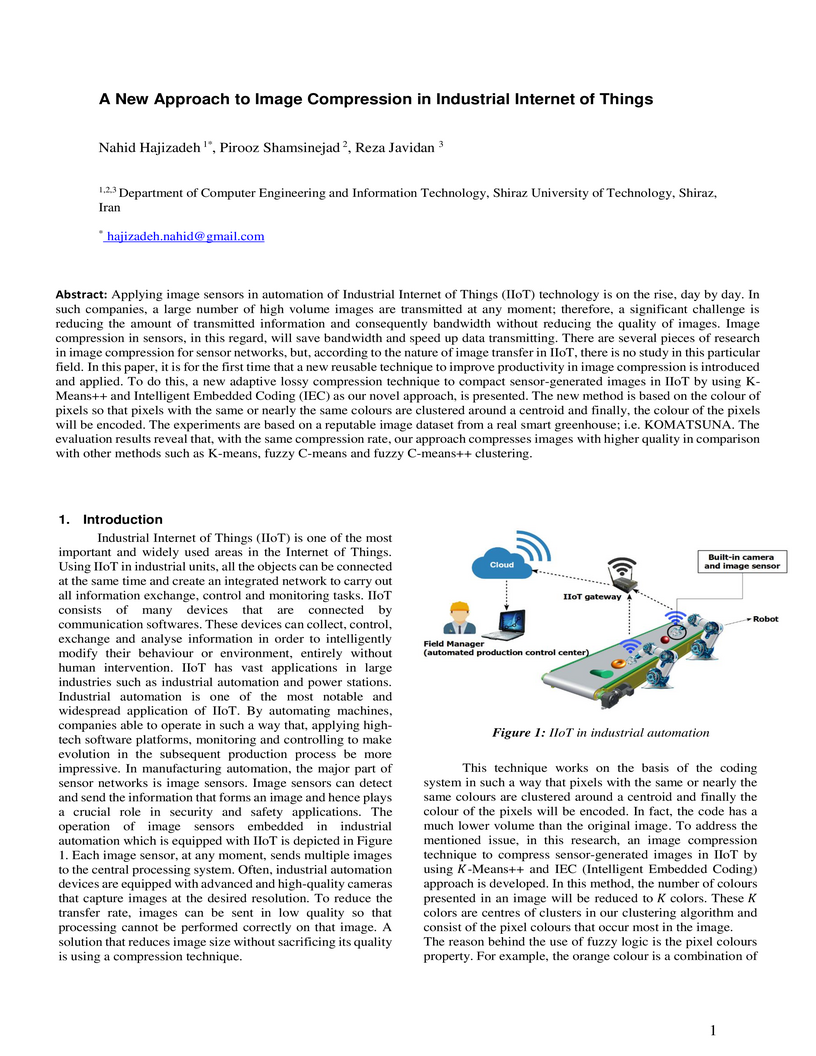

Applying image sensors in automation of Industrial Internet of Things (IIoT) technology is on the rise, day by day. In such companies, a large number of high volume images are transmitted at any moment; therefore, a significant challenge is reducing the amount of transmitted information and consequently bandwidth without reducing the quality of images. Image compression in sensors, in this regard, will save bandwidth and speed up data transmitting. There are several pieces of research in image compression for sensor networks, but, according to the nature of image transfer in IIoT, there is no study in this particular field. In this paper, it is for the first time that a new reusable technique to improve productivity in image compression is introduced and applied. To do this, a new adaptive lossy compression technique to compact sensor-generated images in IIoT by using K- Means++ and Intelligent Embedded Coding (IEC) as our novel approach, is presented. The new method is based on the colour of pixels so that pixels with the same or nearly the same colours are clustered around a centroid and finally, the colour of the pixels will be encoded. The experiments are based on a reputable image dataset from a real smart greenhouse; i.e. KOMATSUNA. The evaluation results reveal that, with the same compression rate, our approach compresses images with higher quality in comparison with other methods such as K-means, fuzzy C-means and fuzzy C-means++ clustering.

16 Dec 2024

Abstract: The rising global temperatures caused by climate change significantly impact energy consumption and electricity generation. Fluctuating temperatures and frequent extreme weather events disrupt energy production and consumption patterns. Addressing these challenges has become a priority, prompting governments, industries, and societies to pursue sustainable development and embrace eco-friendly economies. This strategy aims to decouple economic growth from environmental harm, ensuring a sustainable future for generations. Understanding the link between climate change, energy resources, and sustainable development is crucial. Techno-economic analysis provides a framework for evaluating energy-related projects and policies, guiding decision-makers toward sustainable solutions. A case study highlights the interaction between hydroponic unit energy needs, electricity pricing from wind farms, and product sales prices. Findings suggest that smaller 2-megawatt investments are more efficient and adaptable than larger 18-megawatt projects, proving economically viable and technologically flexible. However, such investments must also consider their social and environmental impacts on local communities. Sustainable development seeks to ensure that progress benefits all stakeholders while protecting the environment. Achieving this requires collaboration among governments, businesses, researchers, and individuals. By fostering innovation, adopting eco-friendly practices, and creating supportive policies, society can transition to a green economy, mitigating climate change and promoting a sustainable, resilient future.

09 Apr 2024

Despite many advantages of direction-of-arrivals (DOAs) in sparse representation domain, they have high computational complexity. This paper presents a new method for real-valued 2-D DOAs estimation of sources in a uniform circular array configuration. This method uses a transformation based on phase mode excitation in uniform circular arrays which called real beamspace L1-SVD (RB-L1SVD). This unitary transformation converts complex manifold matrix to real one, so that the computational complexity is decreased with respect to complex valued computations,its computation, at least, is one,fourth of the complex-valued case; moreover, some benefits from using this transformation are robustness to array imperfections, a better noise suppression because of exploiting an additional real structure, and etc. Numerical results demonstrate the better performance of the proposed approach over previous techniques such as C-L1SVD, RB-ESPRIT, and RB-MUSIC, especially in low signal-to-noise ratios.

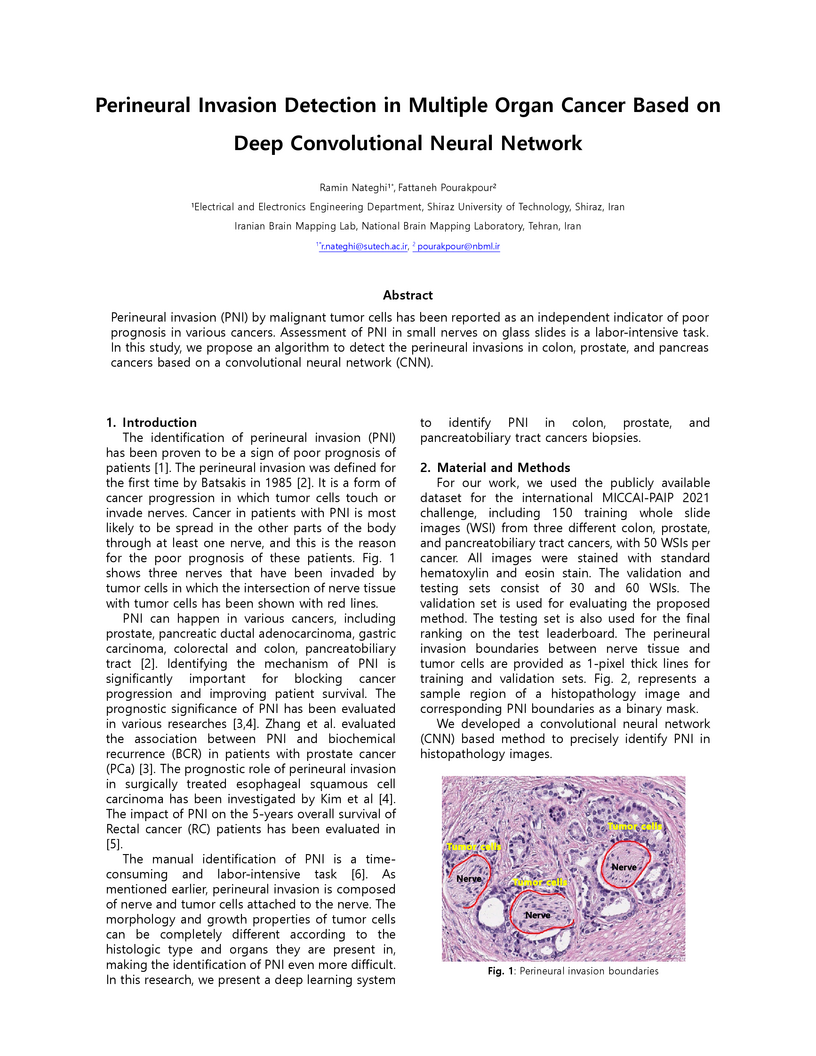

Perineural invasion (PNI) by malignant tumor cells has been reported as an independent indicator of poor prognosis in various cancers. Assessment of PNI in small nerves on glass slides is a labor-intensive task. In this study, we propose an algorithm to detect the perineural invasions in colon, prostate, and pancreas cancers based on a convolutional neural network (CNN).

11 Sep 2021

We develop spectral methods for ODEs and operator eigenvalue problems that are based on a least-squares formulation of the problem. The key tool is a method for rectangular generalized eigenvalue problems, which we extend to quasimatrices and objects combining quasimatrices and matrices. The strength of the approach is its flexibility that lies in the quasimatrix formulation allowing the basis functions to be chosen arbitrarily (e.g. those obtained by solving nearby problems), and often giving high accuracy. We also show how our algorithm can easily be modified to solve problems with eigenvalue-dependent boundary conditions, and discuss reformulations as an integral equation, which often improves the accuracy.

This exhaustive investigation is dedicated to delving into the intricate legal aspects that underlie the inefficiency in the advancement and utilization of sustainable energies, with a primary focus on the dynamic landscape of China and carefully selected representative nations. In an era where the global community increasingly acknowledges the pressing need for environmentally-friendly alternatives to traditional fossil fuels, renewable energy sources have rightfully garnered substantial attention as encouraging solutions. Nevertheless, notwithstanding their potential to revolutionize the energy sector and counteract climate change, a multitude of legal and regulatory barriers may present formidable hindrances that impede their seamless integration into the energy landscape. With a resolute and concentrated aim, the research sets forth on a painstaking exploration and analysis of the intricate legal frameworks, policies, and institutional arrangements in place within China and the chosen representative nations. The ultimate objective is to discern and identify potential challenges and inefficiencies that could hinder the progress of renewable energy projects and initiatives.

05 Jul 2016

We study cyclic codes with arbitrary length over Fp+vFp where theta(v)=av, a in Fp and v^2=0. We characterize all existing codes in case of O(theta)|n by using certain projections from (Fp+vFp)[x;theta] to Fp[x]. We provide an explicit expression for the ensemble of all possible codes. We also prove useful properties of these codes in the case where O(theta)|n does not hold. We provide results and examples to illustrate how the codes are constructed and how their encoding and decoding are realized.

24 Feb 2019

Unidirectional surface plasmon polaritons (SPPs) at the interface between a gyrotropic medium and a simple medium are studied in a newly-recognized frequency regime wherein the SPPs form narrow, beam-like patterns due to hyperbolic dispersion. The SPP beams are steerable by controlling parameters such as the cyclotron frequency (external bias) or the frequency of operation. The bulk band structure along different propagation directions is examined to ascertain a common bandgap, valid for all propagation directions, which the SPPs cross. The case of a finite-thickness gyrotropic slab is also considered, for which we present the Green function and examine the thickness and loss level required to maintain a unidirectional SPP.

24 Jan 2025

Communication reliability, as defined by 3GPP, refers to the probability of providing a desired quality of service (QoS). This metric is typically quantified for wireless networks by averaging the QoS success indicator over spatial and temporal random variables. Recently, the meta distribution (MD) has emerged as a two-level performance analysis tool for wireless networks, offering a detailed examination of the outer level (i.e., system-level) reliability assessment versus the inner level (i.e., link-level) reliability thresholds. Most existing studies focus on first-order spatiotemporal MD reliability analyses, and the benefits of leveraging MD reliability for applications beyond this structure remain unexplored, a gap addressed in this paper. We present wireless application examples that can benefit the higher-order MD reliability analysis. Specifically, we provide the analysis and numerical results for a second-order spatial-spectral-temporal MD reliability of ultra-wideband THz communication. The results demonstrate the value of the hierarchical representation of MD reliability across three domains and the impact of the inner-layer target reliability on the overall MD reliability measure.

10 Feb 2023

Researchers applied the LIME Explainable AI technique to a Random Forest classifier for software requirement analysis, demonstrating how explanations in terms of supportive and distractive words can clarify model decisions for functional and non-functional requirement classification. This approach builds trust in AI tools and informs data preprocessing by enabling feature reduction without impacting classification performance.

In recent years, malware detection has become an active research topic in the area of Internet of Things (IoT) security. The principle is to exploit knowledge from large quantities of continuously generated malware. Existing algorithms practice available malware features for IoT devices and lack real-time prediction behaviors. More research is thus required on malware detection to cope with real-time misclassification of the input IoT data. Motivated by this, in this paper we propose an adversarial self-supervised architecture for detecting malware in IoT networks, SETTI, considering samples of IoT network traffic that may not be labeled. In the SETTI architecture, we design three self-supervised attack techniques, namely Self-MDS, GSelf-MDS and ASelf-MDS. The Self-MDS method considers the IoT input data and the adversarial sample generation in real-time. The GSelf-MDS builds a generative adversarial network model to generate adversarial samples in the self-supervised structure. Finally, ASelf-MDS utilizes three well-known perturbation sample techniques to develop adversarial malware and inject it over the self-supervised architecture. Also, we apply a defence method to mitigate these attacks, namely adversarial self-supervised training to protect the malware detection architecture against injecting the malicious samples. To validate the attack and defence algorithms, we conduct experiments on two recent IoT datasets: IoT23 and NBIoT. Comparison of the results shows that in the IoT23 dataset, the Self-MDS method has the most damaging consequences from the attacker's point of view by reducing the accuracy rate from 98% to 74%. In the NBIoT dataset, the ASelf-MDS method is the most devastating algorithm that can plunge the accuracy rate from 98% to 77%.

03 Oct 2024

In this paper, we investigate Jordan left α-centralizer on algebras. We show that every Jordan left α-centralizer on an algebra with a right identity is a left α-centralizer. We also investigate this result for Banach algebras with a bounded approximate identity. Finally, we study Jordan left α-centralizer on group algebra L1(G).

03 Oct 2024

Let θ be an isomorphism on L0∞(w)∗. In this paper, we investigate θ-generalized derivations on L0∞(w)∗. We show that every θ-centralizing θ-generalized derivation on L0∞(w)∗ is a θ-right centralizer. We also prove that this result is true for θ-skew centralizing θ-generalized derivations.

10 Dec 2021

Software product line represents software engineering methods, tools and techniques for creating a group of related software systems from a shared set of software assets. Each product is a combination of multiple features. These features are known as software assets. So, the task of production can be mapped to a feature subset selection problem which is an NP-hard combinatorial optimization problem. This issue is much significant when the number of features in a software product line is huge. In this paper, a new method based on Multi Objective Bee Swarm Optimization algorithm (called MOBAFS) is presented. The MOBAFS is a population based optimization algorithm which is inspired by foraging behavior of honey bees. The is used to solve a SBSE problem. This technique is evaluated on five large scale real world software product lines in the range of 1,244 to 6,888 features. The proposed method is compared with the state-of-the-art, SATIBEA. According to results of three solution quality indicators and two diversity metrics, the proposed method, in most cases, surpasses the other algorithm.

The volume of malware and the number of attacks in IoT devices are rising

everyday, which encourages security professionals to continually enhance their

malware analysis tools. Researchers in the field of cyber security have

extensively explored the usage of sophisticated analytics and the efficiency of

malware detection. With the introduction of new malware kinds and attack

routes, security experts confront considerable challenges in developing

efficient malware detection and analysis solutions. In this paper, a different

view of malware analysis is considered and the risk level of each sample

feature is computed, and based on that the risk level of that sample is

calculated. In this way, a criterion is introduced that is used together with

accuracy and FPR criteria for malware analysis in IoT environment. In this

paper, three malware detection methods based on visualization techniques called

the clustering approach, the probabilistic approach, and the deep learning

approach are proposed. Then, in addition to the usual machine learning criteria

namely accuracy and FPR, a proposed criterion based on the risk of samples has

also been used for comparison, with the results showing that the deep learning

approach performed better in detecting malware

Image-guided interventions are saving the lives of a large number of patients

where the image registration problem should indeed be considered as the most

complex and complicated issue to be tackled. On the other hand, the recently

huge progress in the field of machine learning made by the possibility of

implementing deep neural networks on the contemporary many-core GPUs opened up

a promising window to challenge with many medical applications, where the

registration is not an exception. In this paper, a comprehensive review on the

state-of-the-art literature known as medical image registration using deep

neural networks is presented. The review is systematic and encompasses all the

related works previously published in the field. Key concepts, statistical

analysis from different points of view, confiding challenges, novelties and

main contributions, key-enabling techniques, future directions and prospective

trends all are discussed and surveyed in details in this comprehensive review.

This review allows a deep understanding and insight for the readers active in

the field who are investigating the state-of-the-art and seeking to contribute

the future literature.

In this paper, we develop four malware detection methods using Hamming

distance to find similarity between samples which are first nearest neighbors

(FNN), all nearest neighbors (ANN), weighted all nearest neighbors (WANN), and

k-medoid based nearest neighbors (KMNN). In our proposed methods, we can

trigger the alarm if we detect an Android app is malicious. Hence, our

solutions help us to avoid the spread of detected malware on a broader scale.

We provide a detailed description of the proposed detection methods and related

algorithms. We include an extensive analysis to asses the suitability of our

proposed similarity-based detection methods. In this way, we perform our

experiments on three datasets, including benign and malware Android apps like

Drebin, Contagio, and Genome. Thus, to corroborate the actual effectiveness of

our classifier, we carry out performance comparisons with some state-of-the-art

classification and malware detection algorithms, namely Mixed and Separated

solutions, the program dissimilarity measure based on entropy (PDME) and the

FalDroid algorithms. We test our experiments in a different type of features:

API, intent, and permission features on these three datasets. The results

confirm that accuracy rates of proposed algorithms are more than 90% and in

some cases (i.e., considering API features) are more than 99%, and are

comparable with existing state-of-the-art solutions.

There are no more papers matching your filters at the moment.