St. Pölten University of Applied Sciences

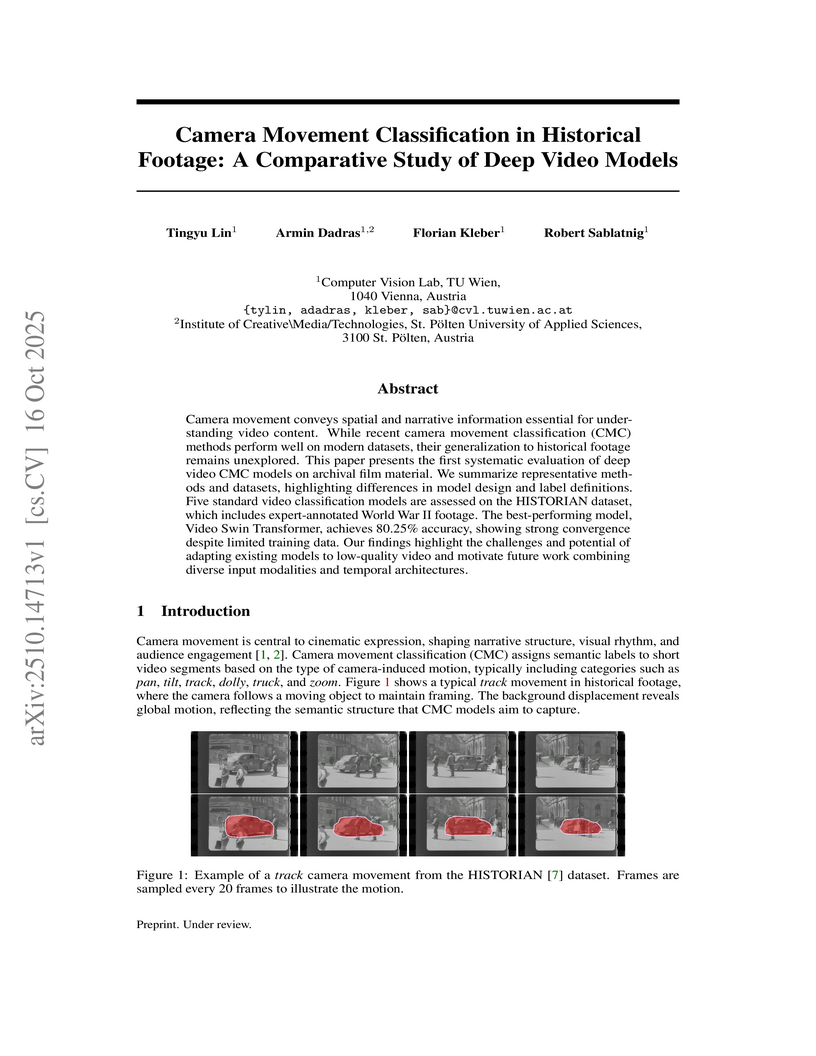

Camera movement conveys spatial and narrative information essential for understanding video content. While recent camera movement classification (CMC) methods perform well on modern datasets, their generalization to historical footage remains unexplored. This paper presents the first systematic evaluation of deep video CMC models on archival film material. We summarize representative methods and datasets, highlighting differences in model design and label definitions. Five standard video classification models are assessed on the HISTORIAN dataset, which includes expert-annotated World War II footage. The best-performing model, Video Swin Transformer, achieves 80.25% accuracy, showing strong convergence despite limited training data. Our findings highlight the challenges and potential of adapting existing models to low-quality video and motivate future work combining diverse input modalities and temporal architectures.

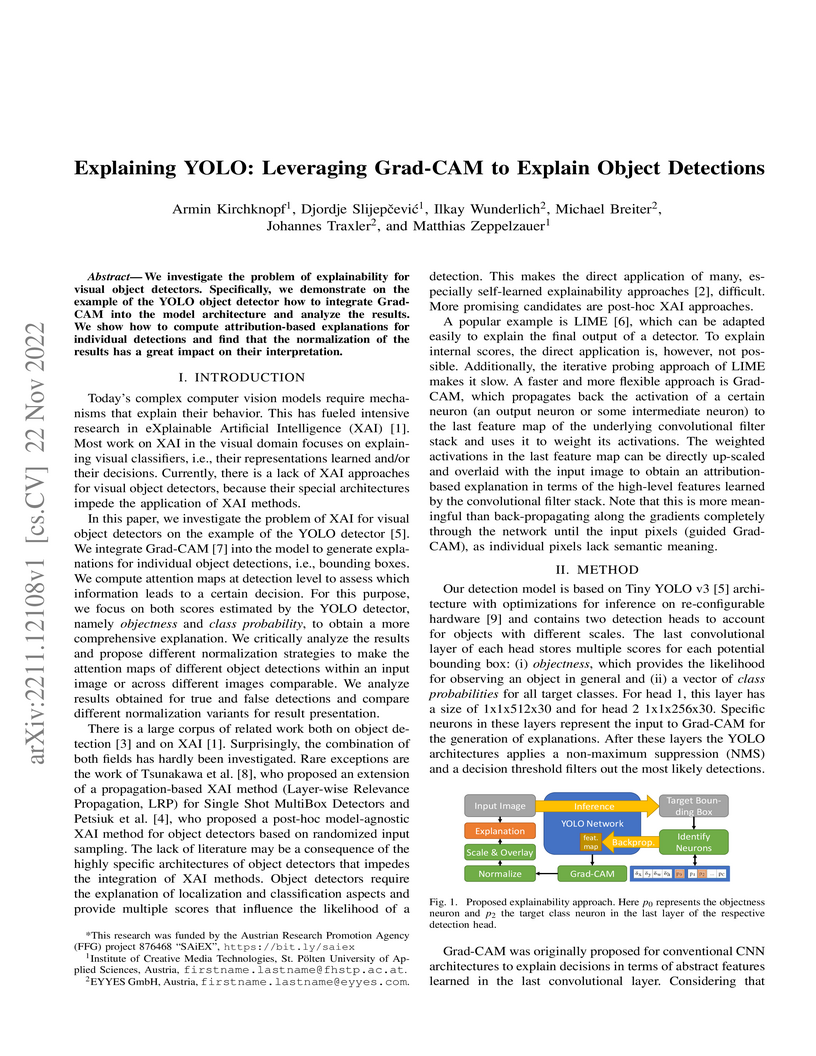

We investigate the problem of explainability for visual object detectors. Specifically, we demonstrate on the example of the YOLO object detector how to integrate Grad-CAM into the model architecture and analyze the results. We show how to compute attribution-based explanations for individual detections and find that the normalization of the results has a great impact on their interpretation.

05 Mar 2025

This chapter provides an overview of recent and promising Machine Learning

applications, i.e. pose estimation, feature estimation, event detection, data

exploration & clustering, and automated classification, in gait (walking and

running) and sports biomechanics. It explores the potential of Machine Learning

methods to address challenges in biomechanical workflows, highlights central

limitations, i.e. data and annotation availability and explainability, that

need to be addressed, and emphasises the importance of interdisciplinary

approaches for fully harnessing the potential of Machine Learning in gait and

sports biomechanics.

The development of powerful 3D scanning hardware and reconstruction algorithms has strongly promoted the generation of 3D surface reconstructions in different domains. An area of special interest for such 3D reconstructions is the cultural heritage domain, where surface reconstructions are generated to digitally preserve historical artifacts. While reconstruction quality nowadays is sufficient in many cases, the robust analysis (e.g. segmentation, matching, and classification) of reconstructed 3D data is still an open topic. In this paper, we target the automatic and interactive segmentation of high-resolution 3D surface reconstructions from the archaeological domain. To foster research in this field, we introduce a fully annotated and publicly available large-scale 3D surface dataset including high-resolution meshes, depth maps and point clouds as a novel benchmark dataset to the community. We provide baseline results for our existing random forest-based approach and for the first time investigate segmentation with convolutional neural networks (CNNs) on the data. Results show that both approaches have complementary strengths and weaknesses and that the provided dataset represents a challenge for future research.

The research communities studying visualization and sonification for data

display and analysis share exceptionally similar goals, essentially making data

of any kind interpretable to humans. One community does so by using visual

representations of data, and the other community employs auditory (non-speech)

representations of data. While the two communities have a lot in common, they

developed mostly in parallel over the course of the last few decades. With this

STAR, we discuss a collection of work that bridges the borders of the two

communities, hence a collection of work that aims to integrate the two

techniques into one form of audiovisual display, which we argue to be "more

than the sum of the two."

We introduce and motivate a classification system applicable to such

audiovisual displays and categorize a corpus of 57 academic publications that

appeared between 2011 and 2023 in categories such as reading level, dataset

type, or evaluation system, to mention a few. The corpus also enables a

meta-analysis of the field, including regularly occurring design patterns such

as type of visualization and sonification techniques, or the use of visual and

auditory channels, showing an overall diverse field with different designs. An

analysis of a co-author network of the field shows individual teams without

many interconnections. The body of work covered in this STAR also relates to

three adjacent topics: audiovisual monitoring, accessibility, and audiovisual

data art. These three topics are discussed individually in addition to the

systematically conducted part of this research. The findings of this report may

be used by researchers from both fields to understand the potentials and

challenges of such integrated designs while hopefully inspiring them to

collaborate with experts from the respective other field.

24 Sep 2025

Social workers need visual tools to collect information about their client's life situation, so that they can reflect it together and choose tailored interventions. easyNWK and easyBiograph are two visual tools for the client's social network and life history. We recently redesigned both tools in a participatory design project with social work faculty and professionals. In this short paper we discuss these tools from perspective of input visualization systems.

11 Jan 2022

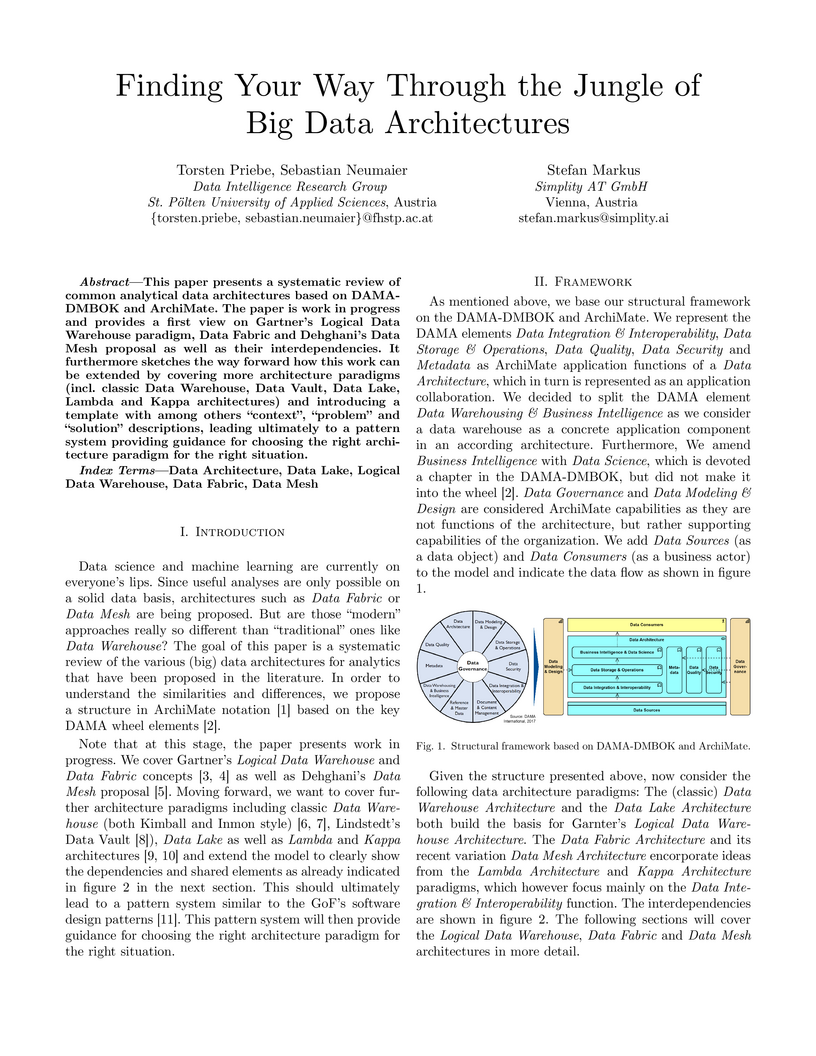

This paper presents a systematic review of common analytical data architectures based on DAMA-DMBOK and ArchiMate. The paper is work in progress and provides a first view on Gartner's Logical Data Warehouse paradigm, Data Fabric and Dehghani's Data Mesh proposal as well as their interdependencies. It furthermore sketches the way forward how this work can be extended by covering more architecture paradigms (incl. classic Data Warehouse, Data Vault, Data Lake, Lambda and Kappa architectures) and introducing a template with among others "context", "problem" and "solution" descriptions, leading ultimately to a pattern system providing guidance for choosing the right architecture paradigm for the right situation.

Addressing a critical aspect of cybersecurity in online gaming, this paper

systematically evaluates the extent to which kernel-level anti-cheat systems

mirror the properties of rootkits, highlighting the importance of

distinguishing between protective and potentially invasive software. After

establishing a definition for rootkits (making distinctions between rootkits

and simple kernel-level applications) and defining metrics to evaluate such

software, we introduce four widespread kernel-level anti-cheat solutions. We

lay out the inner workings of these types of software, assess them according to

our previously established definitions, and discuss ethical considerations and

the possible privacy infringements introduced by such programs. Our analysis

shows two of the four anti-cheat solutions exhibiting rootkit-like behaviour,

threatening the privacy and the integrity of the system. This paper thus

provides crucial insights for researchers and developers in the field of gaming

security and software engineering, highlighting the need for informed

development practices that carefully consider the intersection of effective

anti-cheat mechanisms and user privacy.

08 Jul 2020

Recently enacted legislation grants individuals certain rights to decide in

what fashion their personal data may be used, and in particular a "right to be

forgotten". This poses a challenge to machine learning: how to proceed when an

individual retracts permission to use data which has been part of the training

process of a model? From this question emerges the field of machine unlearning,

which could be broadly described as the investigation of how to "delete

training data from models". Our work complements this direction of research for

the specific setting of class-wide deletion requests for classification models

(e.g. deep neural networks). As a first step, we propose linear filtration as a

intuitive, computationally efficient sanitization method. Our experiments

demonstrate benefits in an adversarial setting over naive deletion schemes.

We present an approach for the analysis of hybrid visual compositions in animation in the domain of ephemeral film. We combine ideas from semi-supervised and weakly supervised learning to train a model that can segment hybrid compositions without requiring pre-labeled segmentation masks. We evaluate our approach on a set of ephemeral films from 13 film archives. Results demonstrate that the proposed learning strategy yields a performance close to a fully supervised baseline. On a qualitative level the performed analysis provides interesting insights on hybrid compositions in animation film.

03 Dec 2018

This paper presents two stochastic optimization approaches for simultaneous project scheduling and personnel planning, extending a deterministic model previously developed by Heimerl and Kolisch. For the problem of assigning work packages to multi-skilled human resources with heterogeneous skills, the uncertainty on work package processing times is addressed. In the case where the required capacity exceeds the available capacity of internal resources, external human resources are used. The objective is to minimize the expected external costs. The first solution approach is a 'matheuristic' based on a decomposition of the problem into a project scheduling subproblem and a staffing subproblem. An iterated local search procedure determines the project schedules, while the staffing subproblem is solved by means of the Frank-Wolfe algorithm for convex optimization. The second solution approach is Sample Average Approximation where, based on sampled scenarios, the deterministic equivalent problem is solved through mixed integer programming. Experimental results for synthetically generated test instances inspired by a real-world situation are provided, and some managerial insights are derived.

Persistent homology (PH) is a rigorous mathematical theory that provides a robust descriptor of data in the form of persistence diagrams (PDs) which are 2D multisets of points. Their variable size makes them, however, difficult to combine with typical machine learning workflows. In this paper we introduce persistence codebooks, a novel expressive and discriminative fixed-size vectorized representation of PDs. To this end, we adapt bag-of-words (BoW), vectors of locally aggregated descriptors (VLAD) and Fischer vectors (FV) for the quantization of PDs. Persistence codebooks represent PDs in a convenient way for machine learning and statistical analysis and have a number of favorable practical and theoretical properties including 1-Wasserstein stability. We evaluate the presented representations on several heterogeneous datasets and show their (high) discriminative power. Our approach achieves state-of-the-art performance and beyond in much less time than alternative approaches.

Persistent homology (PH) is a rigorous mathematical theory that provides a robust descriptor of data in the form of persistence diagrams (PDs). PDs exhibit, however, complex structure and are difficult to integrate in today's machine learning workflows. This paper introduces persistence bag-of-words: a novel and stable vectorized representation of PDs that enables the seamless integration with machine learning. Comprehensive experiments show that the new representation achieves state-of-the-art performance and beyond in much less time than alternative approaches.

25 Jun 2019

Today, many different types of scams can be found on the internet. Online

criminals are always finding new creative ways to trick internet users, be it

in the form of lottery scams, downloading scam apps for smartphones or fake

gambling websites. This paper presents a large-scale study on one particular

delivery method of online scam: pop-up scam on typosquatting domains.

Typosquatting describes the concept of registering domains which are very

similar to existing ones while deliberately containing common typing errors;

these domains are then used to trick online users while under the belief of

browsing the intended website. Pop-up scam uses JavaScript alert boxes to

present a message which attracts the user's attention very effectively, as they

are a blocking user interface element.

Our study among typosquatting domains derived from the Alexa Top 1 Million

list revealed on 8255 distinct typosquatting URLs a total of 9857 pop-up

messages, out of which 8828 were malicious. The vast majority of those distinct

URLs (7176) were targeted and displayed pop-up messages to one specific HTTP

user agent only. Based on our scans, we present an in-depth analysis as well as

a detailed classification of different targeting parameters (user agent and

language) which triggered varying kinds of pop-up scams.

02 Apr 2020

Today, many different types of scams can be found on the internet. Online

criminals are always finding new creative ways to trick internet users, be it

in the form of lottery scams, downloading scam apps for smartphones or fake

gambling websites. This paper presents a large-scale study on one particular

delivery method of online scam: pop-up scam on typosquatting domains.

Typosquatting describes the concept of registering domains which are very

similar to existing ones while deliberately containing common typing errors;

these domains are then used to trick online users while under the belief of

browsing the intended website. Pop-up scam uses JavaScript alert boxes to

present a message which attracts the user's attention very effectively, as they

are a blocking user interface element.

Our study among typosquatting domains derived from the Majestic Million list

utilising an Austrian IP address revealed on 1219 distinct typosquatting URLs a

total of 2577 pop-up messages, out of which 1538 were malicious. Approximately

a third of those distinct URLs (403) were targeted and displayed pop-up

messages to one specific HTTP user agent only. Based on our scans, we present

an in-depth analysis as well as a detailed classification of different

targeting parameters (user agent and language) which triggered varying kinds of

pop-up scams. Furthermore, we expound the differences of current pop-up scam

characteristics in comparison with a previous scan performed in late 2018 and

examine the use of IDN homograph attacks as well as the application of message

localisation using additional scans with IP addresses from the United States

and Japan.

Explainable artificial intelligence is the attempt to elucidate the workings

of systems too complex to be directly accessible to human cognition through

suitable side-information referred to as "explanations". We present a trainable

explanation module for convolutional image classifiers we call bounded logit

attention (BLA). The BLA module learns to select a subset of the convolutional

feature map for each input instance, which then serves as an explanation for

the classifier's prediction. BLA overcomes several limitations of the

instancewise feature selection method "learning to explain" (L2X) introduced by

Chen et al. (2018): 1) BLA scales to real-world sized image classification

problems, and 2) BLA offers a canonical way to learn explanations of variable

size. Due to its modularity BLA lends itself to transfer learning setups and

can also be employed as a post-hoc add-on to trained classifiers. Beyond

explainability, BLA may serve as a general purpose method for differentiable

approximation of subset selection. In a user study we find that BLA

explanations are preferred over explanations generated by the popular

(Grad-)CAM method.

Distributed ledger systems have become more prominent and successful in recent years, with a focus on blockchains and cryptocurrency. This has led to various misunderstandings about both the technology itself and its capabilities, as in many cases blockchain and cryptocurrency is used synonymously and other applications are often overlooked. Therefore, as a whole, the view of distributed ledger technology beyond blockchains and cryptocurrencies is very limited. Existing vocabularies and ontologies often focus on single aspects of the technology, or in some cases even just on one product. This potentially leads to other types of distributed ledgers and their possible use cases being neglected. In this paper, we present a knowledge graph and an ontology for distributed ledger technologies, which includes security considerations to model aspects such as threats and vulnerabilities, application domains, as well as relevant standards and regulations. Such a knowledge graph improves the overall understanding of distributed ledgers, reveals their strengths, and supports the work of security personnel, i.e. analysts and system architects. We discuss potential uses and follow semantic web best practices to evaluate and publish the ontology and knowledge graph.

The use of multiple camera technologies in a combined multimodal monitoring

system for plant phenotyping offers promising benefits. Compared to

configurations that only utilize a single camera technology, cross-modal

patterns can be recorded that allow a more comprehensive assessment of plant

phenotypes. However, the effective utilization of cross-modal patterns is

dependent on precise image registration to achieve pixel-accurate alignment, a

challenge often complicated by parallax and occlusion effects inherent in plant

canopy imaging.

In this study, we propose a novel multimodal 3D image registration method

that addresses these challenges by integrating depth information from a

time-of-flight camera into the registration process. By leveraging depth data,

our method mitigates parallax effects and thus facilitates more accurate pixel

alignment across camera modalities. Additionally, we introduce an automated

mechanism to identify and differentiate different types of occlusions, thereby

minimizing the introduction of registration errors.

To evaluate the efficacy of our approach, we conduct experiments on a diverse

image dataset comprising six distinct plant species with varying leaf

geometries. Our results demonstrate the robustness of the proposed registration

algorithm, showcasing its ability to achieve accurate alignment across

different plant types and camera compositions. Compared to previous methods it

is not reliant on detecting plant specific image features and can thereby be

utilized for a wide variety of applications in plant sciences. The registration

approach principally scales to arbitrary numbers of cameras with different

resolutions and wavelengths. Overall, our study contributes to advancing the

field of plant phenotyping by offering a robust and reliable solution for

multimodal image registration.

Artificial Intelligence has rapidly become a cornerstone technology, significantly influencing Europe's societal and economic landscapes. However, the proliferation of AI also raises critical ethical, legal, and regulatory challenges. The CERTAIN (Certification for Ethical and Regulatory Transparency in Artificial Intelligence) project addresses these issues by developing a comprehensive framework that integrates regulatory compliance, ethical standards, and transparency into AI systems. In this position paper, we outline the methodological steps for building the core components of this framework. Specifically, we present: (i) semantic Machine Learning Operations (MLOps) for structured AI lifecycle management, (ii) ontology-driven data lineage tracking to ensure traceability and accountability, and (iii) regulatory operations (RegOps) workflows to operationalize compliance requirements. By implementing and validating its solutions across diverse pilots, CERTAIN aims to advance regulatory compliance and to promote responsible AI innovation aligned with European standards.

The concept of dataspaces aims to facilitate secure and sovereign data exchange among multiple stakeholders. Technical implementations known as "connectors" support the definition of usage control policies and the verifiable enforcement of such policies. This paper provides an overview of existing literature and reviews current open-source dataspace connector implementations that are compliant with the International Data Spaces (IDS) standard. To assess maturity and readiness, we review four implementations with regard to their architecture, underlying data model and usage control language.

There are no more papers matching your filters at the moment.