The University of Maryland

My3DGen introduces a parameter-efficient framework for personalized 3D generative face models, leveraging Low-Rank Adaptation (LoRA) to preserve individual identity from a few images. The approach reduces trainable parameters by 127x compared to full fine-tuning while enabling realistic novel view synthesis, semantic editing, and image enhancement for specific individuals.
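LoRA's savings come from freezing each pretrained weight matrix W (d × k) and training only a low-rank update BA, with B of size d × r and A of size r × k. Each adapted layer therefore costs r(d + k) trainable parameters instead of dk. The minimal Python sketch below counts both for hypothetical layer shapes and rank. These are assumptions, not My3DGen's architecture, so the sketch does not reproduce the 127x figure. It also shows the adapted forward pass with the commonly used alpha/r scaling.

```python
from typing import List, Tuple

def lora_trainable_params(shapes: List[Tuple[int, int]], rank: int) -> int:
    """Trainable parameters when each d x k weight gets a rank-r update B @ A."""
    return sum(rank * (d + k) for d, k in shapes)

def full_trainable_params(shapes: List[Tuple[int, int]]) -> int:
    """Trainable parameters when every d x k weight is fine-tuned directly."""
    return sum(d * k for d, k in shapes)

def lora_forward(W, A, B, x, alpha: float, rank: int):
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    def matvec(M, v):
        return [sum(m * vi for m, vi in zip(row, v)) for row in M]
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

# Hypothetical layer shapes (not taken from My3DGen): four 512 x 512 projections, rank 4.
shapes = [(512, 512)] * 4
full = full_trainable_params(shapes)
lora = lora_trainable_params(shapes, 4)
print(full, lora, full / lora)
```

Initializing B to zero, as is common for LoRA, makes the adapted layer start out identical to the frozen one.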

03 May 2025

Generating entanglement deterministically at a capacity-approaching rate is critical for next-generation quantum networks. We propose weak-coherent-state-assisted protocols that can generate entanglement near-deterministically between reflective-cavity-based quantum memories at a success rate that exceeds the 50% limit associated with single-photon-mediated schemes. The benefit is most pronounced in the low-channel-loss regime and persists even with moderate noise. We extend our protocols to entangle an array of memories in a GHZ state, and infer that this yields an exponential speed-up compared to previous single-photon-based protocols.
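The exponential gain for GHZ states can be seen in a deliberately simplified model. This is an assumption, not the paper's protocol. Suppose an N-memory GHZ state requires N − 1 independent heralded links to succeed in the same round, each with probability p. The expected number of rounds is then 1/p^(N−1). Raising p from the 50% single-photon limit to some higher value p' shortens the wait by (p'/0.5)^(N−1). The value 0.9 below is a placeholder, not a result from the paper.

```python
def expected_rounds(p_link: float, n_memories: int) -> float:
    """Expected rounds until all n-1 links herald success together.

    Simplified model (assumption): independent links that must all succeed
    in one round, so the per-round success probability is p**(n-1).
    """
    if not 0.0 < p_link <= 1.0:
        raise ValueError("p_link must be in (0, 1]")
    return 1.0 / p_link ** (n_memories - 1)

def speedup(p_new: float, p_old: float, n_memories: int) -> float:
    """Ratio of expected rounds: old scheme divided by new scheme."""
    return expected_rounds(p_old, n_memories) / expected_rounds(p_new, n_memories)

for n in (2, 5, 10):
    # Grows geometrically in n, as (0.9 / 0.5) ** (n - 1).
    print(n, speedup(0.9, 0.5, n))
```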

22 Nov 2024

We propose the first Bayesian methods for detecting change points in high-dimensional mean and covariance structures. These methods are constructed using pairwise Bayes factors, leveraging modularization to identify significant changes in individual components efficiently. We establish that the proposed methods consistently detect and estimate change points under much milder conditions than existing approaches in the literature. Additionally, we demonstrate that their localization rates are nearly optimal. The practical performance of the proposed methods is evaluated through extensive simulation studies comparing them with state-of-the-art techniques, which show comparable or superior performance in most scenarios. Notably, the methods effectively detect change points whenever signals of sufficient magnitude are present, irrespective of the number of signals. Finally, we apply the proposed methods to genetic and financial datasets, illustrating their practical utility in real-world applications.
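The following is a deliberately simplified, univariate illustration of scanning pairwise Bayes factors. It is not the paper's high-dimensional, modularized construction. It compares two adjacent windows of one coordinate, assuming known noise variance σ², a flat prior on the common mean, and a N(0, τ²) prior on a mean shift. Let d be the difference of window means and s² = σ²(1/n₁ + 1/n₂). Then d ~ N(0, s²) under no change and d ~ N(0, s² + τ²) under a change, so log BF₁₀ is a difference of two normal log densities. The window width, σ², τ², and function names are assumptions.

```python
import math
from typing import List, Tuple

def log_normal_pdf(x: float, var: float) -> float:
    """Log density of N(0, var) at x."""
    return -0.5 * (math.log(2 * math.pi * var) + x * x / var)

def log_bf_mean_shift(left: List[float], right: List[float],
                      sigma2: float, tau2: float) -> float:
    """log Bayes factor for H1 (shift delta ~ N(0, tau2)) versus H0 (no shift).

    Assumes known noise variance sigma2 and a flat prior on the common mean,
    which integrates out identically under both hypotheses.
    """
    n1, n2 = len(left), len(right)
    d = sum(right) / n2 - sum(left) / n1
    s2 = sigma2 * (1.0 / n1 + 1.0 / n2)
    return log_normal_pdf(d, s2 + tau2) - log_normal_pdf(d, s2)

def scan(series: List[float], width: int, sigma2: float = 1.0,
         tau2: float = 1.0) -> List[Tuple[int, float]]:
    """Pairwise log BF at each split t: series[t-width:t] versus series[t:t+width]."""
    return [(t, log_bf_mean_shift(series[t - width:t], series[t:t + width], sigma2, tau2))
            for t in range(width, len(series) - width + 1)]

# Noise-free toy series whose mean jumps from 0 to 3 at index 20.
series = [0.0] * 20 + [3.0] * 20
best_t, best_lbf = max(scan(series, width=10), key=lambda p: p[1])
print(best_t, best_lbf)
```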

To solve inverse problems, plug-and-play (PnP) methods replace the proximal step in a convex optimization algorithm with a call to an application-specific denoiser, often implemented using a deep neural network (DNN). Although such methods yield accurate solutions, they can be improved. For example, denoisers are usually designed/trained to remove white Gaussian noise, but the denoiser input error in PnP algorithms is usually far from white or Gaussian. Approximate message passing (AMP) methods provide white and Gaussian denoiser input error, but only when the forward operator is sufficiently random. In this work, for Fourier-based forward operators, we propose a PnP algorithm based on generalized expectation-consistent (GEC) approximation -- a close cousin of AMP -- that offers predictable error statistics at each iteration, as well as a new DNN denoiser that leverages those statistics. We apply our approach to magnetic resonance (MR) image recovery and demonstrate its advantages over existing PnP and AMP methods.
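The PnP idea can be sketched with proximal gradient descent: a gradient step on the data-fidelity term, followed by a denoiser call in place of the proximal operator. This minimal Python sketch makes several simplifying assumptions. The forward operator is a sampling mask rather than a Fourier operator. A 3-tap moving average stands in for the DNN denoiser. The iteration is plain PnP proximal gradient, not the GEC-based algorithm proposed here. `pnp_pgd` and `moving_average` are hypothetical names.

```python
import math
from typing import Callable, List

Vector = List[float]

def moving_average(v: Vector) -> Vector:
    """3-tap smoother standing in for a learned denoiser (averages available neighbors)."""
    n = len(v)
    out = []
    for i in range(n):
        window = v[max(0, i - 1):min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out

def pnp_pgd(y: Vector, mask: Vector, denoiser: Callable[[Vector], Vector],
            step: float = 1.0, iters: int = 50) -> Vector:
    """Plug-and-play proximal gradient for y = mask * x.

    The data-fidelity gradient is A^T (A x - y) with A = diag(mask); the
    regularizer's proximal step is replaced by a call to `denoiser`.
    """
    x = [0.0] * len(y)
    for _ in range(iters):
        grad = [m * (m * xi - yi) for m, xi, yi in zip(mask, x, y)]
        z = [xi - step * g for xi, g in zip(x, grad)]
        x = denoiser(z)
    return x

def rmse(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))

# Toy problem: observe every other sample of a smooth signal.
truth = [math.sin(2 * math.pi * i / 32) for i in range(64)]
mask = [1.0 if i % 2 == 0 else 0.0 for i in range(64)]
y = [m * t for m, t in zip(mask, truth)]
x_hat = pnp_pgd(y, mask, moving_average)
print(rmse(y, truth), rmse(x_hat, truth))
```

The input to `moving_average` mixes observed samples with current estimates of the missing ones. Its error is therefore structured rather than white Gaussian, which is the kind of mismatch the abstract points out.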

10 Jan 2022

We propose improving the cross-target and cross-scene generalization of visual navigation by learning an agent that is guided by conceiving the next observations it expects to see. This is achieved by learning a variational Bayesian model, called NeoNav, which generates the next expected observations (NEO) conditioned on the agent's current observations and the target view. Our generative model is learned by optimizing a variational objective with two key designs. First, the latent distribution is conditioned on the current observations and the target view, leading to model-based, target-driven navigation. Second, the latent space is modeled with a Mixture of Gaussians conditioned on the current observation and the next best action. Our use of a mixture-of-posteriors prior effectively alleviates the issue of an over-regularized latent space, thus significantly boosting the model's generalization to new targets and novel scenes. Moreover, NEO generation models the forward dynamics of agent-environment interaction, which improves the quality of approximate inference and hence benefits data efficiency. We have conducted extensive evaluations on both real-world and synthetic benchmarks, and show that our model consistently outperforms state-of-the-art models in terms of success rate, data efficiency, and generalization.

04 Oct 2024

Neutron scattering characterization of materials allows for the study of entanglement and microscopic structure, but is inefficient to simulate classically for comparison to theoretical models and predictions. However, quantum processors, notably analog quantum simulators, have the potential to offer an unprecedented, efficient method of Hamiltonian simulation by evolving a state in real time to compute phase transitions, dynamical properties, and entanglement witnesses. Here, we present a method for simulating neutron scattering on QuEra's Aquila processor by measuring the dynamic structure factor (DSF) for the prototypical example of the critical transverse field Ising chain, and propose a method for error mitigation. We provide numerical simulations and experimental results for the performance of the procedure on the hardware, up to a chain of length L=25. Additionally, the DSF result is used to compute the quantum Fisher information (QFI) density, where we confirm bipartite entanglement in the system experimentally.
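The step from the DSF to a QFI density can be illustrated numerically. This minimal sketch assumes the fluctuation-dissipation relation of Hauke et al. (2016): F_Q(k, T) = (4/π) ∫_0^∞ dω tanh(ω/2T)(1 − e^(−ω/T)) S(k, ω), with ħ = k_B = 1. It evaluates the integral by the trapezoidal rule on a sampled frequency grid. The function name and the synthetic Gaussian S(k, ω) are illustrative assumptions. The entanglement threshold for F_Q divided by system size depends on how S(k, ω) and the spin operators are normalized, so no threshold is hard-coded.

```python
import math
from typing import Sequence

def qfi_from_dsf(omega: Sequence[float], s_kw: Sequence[float],
                 temperature: float = 0.0) -> float:
    """F_Q(k) = (4/pi) * int_0^inf tanh(w/2T) (1 - exp(-w/T)) S(k, w) dw, hbar = k_B = 1.

    Trapezoidal rule over a sampled grid of non-negative frequencies.
    temperature = 0 uses the T -> 0 limit, where the thermal factor is 1 for w > 0.
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")

    def thermal_factor(w: float) -> float:
        if temperature == 0.0:
            return 1.0 if w > 0 else 0.0
        return math.tanh(w / (2 * temperature)) * (1 - math.exp(-w / temperature))

    integrand = [thermal_factor(w) * s for w, s in zip(omega, s_kw)]
    total = 0.0
    for i in range(1, len(omega)):
        total += 0.5 * (integrand[i] + integrand[i - 1]) * (omega[i] - omega[i - 1])
    return 4.0 / math.pi * total

# Synthetic check (not data from the paper): a Gaussian peak of total spectral weight 1.5.
omega = [i * 0.001 for i in range(4001)]
weight, center, width = 1.5, 2.0, 0.1
s = [weight * math.exp(-0.5 * ((w - center) / width) ** 2) / (width * math.sqrt(2 * math.pi))
     for w in omega]
print(qfi_from_dsf(omega, s), 4 * weight / math.pi)
```

At low temperature the result approaches (4/π) times the spectral weight; raising the temperature suppresses it through the thermal factor.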


18 Feb 2019

The tidal disruption of stars by (super-)massive black holes in galactic nuclei has been discussed in theoretical terms for about 30 years, but only in the past decade have we been able to detect such events in substantial numbers. Thus, we are now starting to carry out observational tests of models for the disruption. We are also formulating expectations for the inspiral and disruption of white dwarfs by intermediate-mass black holes with masses < 10^5 M⊙. Such events are rich in information and open a new window on intermediate-mass black holes, which are thought to live in dwarf galaxies and star clusters. They can inform us about the demographics of intermediate-mass black holes, the stellar populations and dynamics in their immediate vicinity, and the physics of accretion of hydrogen-deficient material. The combination of upcoming transient surveys using ground-based electromagnetic observatories and low-frequency gravitational-wave observations is ideal for exploiting tidal disruptions of white dwarfs. Optimistically, the detection rate of gravitational-wave signals may reach a few dozen per year within a volume out to z ≈ 0.1. Gravitational-wave observations are particularly useful because they yield the masses of the objects involved and allow determination of the black hole spin, affording tests of physical models for black hole formation and growth. They also give advance warning of the electromagnetic flares by weeks or more. Adequate computing infrastructure for modern models of the disruption process and of event rates will allow us to make the most of the upcoming observing facilities.
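A simple comparison shows where a mass bound of this order comes from. A white dwarf is disrupted only if its tidal radius, r_t ≈ R_*(M_BH/M_*)^(1/3), lies outside the black hole's horizon. Setting r_t equal to the Schwarzschild radius r_s = 2GM_BH/c² gives a maximum mass M_max = (R_* c²/2G)^(3/2) M_*^(−1/2). This rough Newtonian sketch assumes a non-spinning black hole and a 0.6 M⊙ white dwarf with radius ≈ 0.0125 R⊙. Under those assumptions the crossover is of order 10^5 M⊙. Order-unity factors and black hole spin shift the exact value.

```python
import math

G = 6.674e-8        # gravitational constant, cm^3 g^-1 s^-2
C = 2.998e10        # speed of light, cm s^-1
M_SUN = 1.989e33    # solar mass, g
R_SUN = 6.957e10    # solar radius, cm

def tidal_radius(m_bh: float, m_star: float, r_star: float) -> float:
    """Order-of-magnitude tidal radius r_t ~ R_* (M_BH / M_*)^(1/3)."""
    return r_star * (m_bh / m_star) ** (1.0 / 3.0)

def schwarzschild_radius(m_bh: float) -> float:
    """Horizon radius of a non-spinning black hole, 2 G M / c^2."""
    return 2 * G * m_bh / C ** 2

def max_disrupting_mass(m_star: float, r_star: float) -> float:
    """Black hole mass at which r_t = r_s; heavier holes swallow the star whole."""
    return (r_star * C ** 2 / (2 * G)) ** 1.5 / math.sqrt(m_star)

# Assumed white dwarf: 0.6 M_sun with radius ~0.0125 R_sun (about 8,700 km).
m_wd, r_wd = 0.6 * M_SUN, 0.0125 * R_SUN
for m_bh_solar in (1e3, 1e4, 1e5, 1e6):
    m_bh = m_bh_solar * M_SUN
    ratio = tidal_radius(m_bh, m_wd, r_wd) / schwarzschild_radius(m_bh)
    print(f"{m_bh_solar:.0e} M_sun: r_t / r_s = {ratio:.2f}")
print(f"crossover ~ {max_disrupting_mass(m_wd, r_wd) / M_SUN:.1e} M_sun")
```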

Vanderbilt University; University of Zurich; Yale University; NASA Goddard Space Flight Center; The Pennsylvania State University; City University of New York; Princeton University; The University of Maryland; American Museum of Natural History; Fisk University; Rutgers, The State University of New Jersey; Montana State University; University of Milano-Bicocca

Observational evidence has been mounting for the existence of intermediate-mass black holes (IMBHs, 10^2-10^5 M⊙), but observing them at all, much less constraining their masses, is very challenging. In one theorized formation channel, IMBHs are the seeds for supermassive black holes in the early universe. As a result, IMBHs are predicted to exist in the local universe in dwarf galaxies, as well as wandering in more massive galaxy halos. However, these environments are not conducive to the accretion events or dynamical signatures that allow us to detect IMBHs. The Laser Interferometer Space Antenna (LISA) will demystify IMBHs by detecting the mergers of these objects out to extremely high redshifts, while measuring their masses with extremely high precision. These observations of merging IMBHs will allow us to constrain the formation mechanism and subsequent evolution of massive black holes, from the 'dark ages' to the present day, and reveal the role that IMBHs play in hierarchical galaxy evolution.
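A back-of-the-envelope frequency estimate shows why IMBH mergers are LISA sources. This sketch rests on two simplifications. It uses the test-particle innermost-stable-circular-orbit frequency, f_GW ≈ c³/(6^(3/2) π G M (1 + z)), as a proxy for the merger frequency. It also assumes an approximate LISA band of 10^(−4) to 10^(−1) Hz. Under these assumptions, 10^4 to 10^5 M⊙ binaries merge in or near the band, and cosmological redshift moves lighter systems into it. The sketch also includes the chirp mass, the mass combination that the inspiral phase constrains most precisely.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m s^-1
M_SUN = 1.989e30    # solar mass, kg

def f_isco_gw(total_mass_solar: float, z: float = 0.0) -> float:
    """Observed GW frequency (Hz) at the test-particle ISCO: c^3 / (6^1.5 pi G M (1+z))."""
    m = total_mass_solar * M_SUN
    return C ** 3 / (6 ** 1.5 * math.pi * G * m) / (1 + z)

def chirp_mass(m1: float, m2: float) -> float:
    """Chirp mass (m1 m2)^(3/5) / (m1 + m2)^(1/5), in the same units as the inputs."""
    return (m1 * m2) ** 0.6 / (m1 + m2) ** 0.2

# Rough LISA sensitivity band (an assumption for illustration): ~1e-4 Hz to ~1e-1 Hz.
LISA_BAND = (1e-4, 1e-1)
for mass, z in ((1e2, 0.0), (1e4, 0.0), (1e4, 5.0), (1e5, 0.0)):
    f = f_isco_gw(mass, z)
    in_band = LISA_BAND[0] <= f <= LISA_BAND[1]
    print(f"M = {mass:.0e} M_sun, z = {z}: f_ISCO ~ {f:.3g} Hz, in LISA band: {in_band}")
```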

09 May 2022

To enhance the cross-target and cross-scene generalization of target-driven visual navigation based on deep reinforcement learning (RL), we introduce an information-theoretic regularization term into the RL objective. The regularization maximizes the mutual information between navigation actions and visual observation transforms of an agent, thus promoting more informed navigation decisions. In this way, the agent models the action-observation dynamics by learning a variational generative model. Based on this model, the agent generates (imagines) the next observation from its current observation and navigation target. The agent thus learns the causal relationship between navigation actions and the resulting changes in its observations, which allows it to predict the next navigation action by comparing the current and the imagined next observations. Cross-target and cross-scene evaluations on the AI2-THOR framework show that our method improves the average success rate by at least 10% over some state-of-the-art models. We further evaluate our model in two real-world settings: navigation in unseen indoor scenes from the discrete Active Vision Dataset (AVD), and navigation in continuous real-world environments with a TurtleBot. We demonstrate that our navigation model successfully achieves navigation tasks in these scenarios. Videos and models can be found in the supplementary material.
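The regularizer maximizes I(A; T), the mutual information between actions A and observation transforms T. The paper optimizes this quantity through a variational generative model. Purely to illustrate the quantity itself, this sketch computes a plug-in estimate of I(A; T) in nats from paired discrete samples. The action and transform labels are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence

def mutual_information(actions: Sequence[Hashable],
                       transforms: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(A; T) in nats from paired discrete samples."""
    n = len(actions)
    joint = Counter(zip(actions, transforms))
    pa = Counter(actions)
    pt = Counter(transforms)
    mi = 0.0
    for (a, t), c in joint.items():
        # p(a,t) / (p(a) p(t)) = (c/n) / ((pa/n)(pt/n)) = c n / (pa pt)
        mi += (c / n) * math.log(c * n / (pa[a] * pt[t]))
    return mi

# Hypothetical labels: each action always produces the same observation transform.
acts = ["left", "right", "forward"] * 10
obs = ["rot_l", "rot_r", "zoom"] * 10
print(mutual_information(acts, obs), math.log(3))
```

When actions fully determine the observation change, the estimate equals the action entropy; when they are unrelated, it is zero.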

08 Oct 2020

Recent stellar population analyses of early-type galaxy spectra have demonstrated that low-mass galaxies in cluster centers have high [α/Fe] and old ages, characteristic of massive galaxies and unlike the low-mass galaxy population in cluster outskirts and the field. This phenomenon has been termed "coordinated assembly" to highlight the fact that the building blocks of massive cluster central galaxies are drawn from a special subset of the overall low-mass galaxy population. Here we explore this idea in the IllustrisTNG simulations, particularly the TNG300 run, in order to understand how environment, especially cluster centers, shapes the star formation histories of quiescent satellite galaxies in groups and clusters (M_200c,z=0 ≥ 10^13 M⊙). Tracing the histories of quenched satellite galaxies with M⋆,z=0 ≥ 10^10 M⊙, we find that those in more massive dark matter halos, and located closer to the primary galaxies, are quenched earlier, have shorter star formation timescales, and have older stellar ages. The star formation timescale-M⋆ and stellar age-M⋆ scaling relations are in good agreement with observations, and are predicted to vary with halo mass and cluster-centric distance. The dependence on environment arises from the infall histories of satellite galaxies: galaxies located closer to cluster centers in more massive dark matter halos at z=0 were, on average, accreted earlier. The delay between infall time and quenching time is shorter for galaxies in more massive halos and depends on the halo mass at first accretion, showing that group pre-processing is a crucial aspect of satellite quenching.

16 Oct 2020

Trajectories represent the mobility of moving objects and are thus of great value in data mining applications. However, trajectory data are enormous in volume, so storing and processing the raw data directly is expensive. Trajectories are also redundant, so data compression techniques can be applied. In this paper, we propose effective algorithms to simplify trajectories. We first extend existing algorithms by replacing the commonly used L2 metric with the L∞ metric so that they generalize to high-dimensional space (e.g., 3-space in practice). Next, we propose a novel approach, L-infinity Multidimensional Interpolation Trajectory Simplification (LiMITS). LiMITS is a weak simplification method that takes advantage of the L∞ metric: it generates simplified trajectories by multidimensional interpolation. It also supports a new format, called compact representation, that further improves the compression ratio. Finally, we demonstrate the performance of LiMITS through experiments on real-world datasets, which show that it is more effective than other existing methods.
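The L∞ error criterion can be made concrete with a greedy opening-window simplifier. It keeps a point only when some skipped point would deviate by more than ε, in any coordinate, from the time-synchronized linear interpolation. This is an assumption-laden baseline in the spirit of the L2-to-L∞ extension described above, not LiMITS. It performs strong simplification (it keeps only original points), whereas LiMITS is a weak simplification method that may generate new interpolated points. Function names are hypothetical, and timestamps are assumed strictly increasing.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]  # (t, x1, x2, ..., xd)

def linf_dev(a: Point, b: Point, p: Point) -> float:
    """L-infinity distance from p to the point interpolated between a and b at time p[0]."""
    w = (p[0] - a[0]) / (b[0] - a[0])
    return max(abs(pa + w * (pb - pa) - pp) for pa, pb, pp in zip(a[1:], b[1:], p[1:]))

def simplify_linf(traj: Sequence[Point], eps: float) -> List[Point]:
    """Greedy opening-window simplification under an L-infinity error bound.

    Keeps the first and last points and extends each segment while every
    skipped point stays within eps (per coordinate) of the interpolation.
    """
    if len(traj) <= 2:
        return list(traj)
    kept = [traj[0]]
    anchor, end = 0, 2
    while end < len(traj):
        ok = all(linf_dev(traj[anchor], traj[end], traj[k]) <= eps
                 for k in range(anchor + 1, end))
        if ok:
            end += 1
        else:
            # The segment anchor..end-1 was still valid; start a new one there.
            kept.append(traj[end - 1])
            anchor = end - 1
            end = anchor + 2
    kept.append(traj[-1])
    return kept

# A 2-D path (t, x, y) that turns a corner at t = 2.
corner = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0), (3.0, 2.0, 1.0), (4.0, 2.0, 2.0)]
print(simplify_linf(corner, 0.1))
```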

We report the discovery of three new cases of QSOs acting as strong gravitational lenses on background emission-line galaxies: SDSS J0827+5224 (z_QSO = 0.293, z_s = 0.412), SDSS J0919+2720 (z_QSO = 0.209, z_s = 0.558), and SDSS J1005+4016 (z_QSO = 0.230, z_s = 0.441). The selection was carried out using a sample of 22,298 SDSS spectra displaying at least four emission lines at a redshift beyond that of the foreground QSO. The lensing nature is confirmed from Keck imaging and spectroscopy, as well as from HST/WFC3 imaging in the F475W and F814W filters. Two of the QSOs have face-on spiral host galaxies, and the third is a QSO+galaxy pair. The velocity dispersions of the host galaxies, inferred from simple lens modeling, lie between σ_v = 210 and 285 km/s, making these host galaxies comparable in mass to the SLACS sample of early-type strong lenses.
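"Simple lens modeling" of a galaxy-scale lens often means a singular isothermal sphere (SIS), whose Einstein radius is θ_E = 4π(σ_v/c)² D_ls/D_s. The abstract does not state which model was used, so this is an assumption. Under it, the velocity dispersion follows from the measured θ_E and the distance ratio D_ls/D_s. The sketch implements the relation and its inverse. Function names are hypothetical, and no values from the paper are used.

```python
import math

C_KM_S = 299792.458                 # speed of light, km/s
ARCSEC = math.pi / (180 * 3600)     # one arcsecond in radians

def einstein_radius_sis(sigma_km_s: float, dls_over_ds: float) -> float:
    """Einstein radius (arcsec) of a singular isothermal sphere: 4 pi (sigma/c)^2 D_ls/D_s."""
    return 4 * math.pi * (sigma_km_s / C_KM_S) ** 2 * dls_over_ds / ARCSEC

def sigma_from_einstein_radius(theta_e_arcsec: float, dls_over_ds: float) -> float:
    """Invert the SIS relation to get the velocity dispersion in km/s."""
    return C_KM_S * math.sqrt(theta_e_arcsec * ARCSEC / (4 * math.pi * dls_over_ds))

# Round trip with arbitrary illustrative inputs.
theta = einstein_radius_sis(250.0, 0.4)
print(theta, sigma_from_einstein_radius(theta, 0.4))
```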

Range-dependent clutter suppression poses significant challenges in airborne frequency diverse array (FDA) radar, where resolving range ambiguity is particularly difficult. Traditional space-time adaptive processing (STAP) techniques used for clutter mitigation in FDA radars operate in the physical domain defined by first-order statistics. In this paper, unlike conventional airborne uniform FDA, we introduce a space-time-range adaptive processing (STRAP) method that exploits second-order statistics for clutter suppression in the newly proposed co-pulsing FDA radar. This approach uses co-prime frequency offsets (FOs) across the elements of a co-prime array, with each element transmitting at a non-uniform co-prime pulse repetition interval (C-Cube). By incorporating second-order statistics from the co-array domain, the co-pulsing STRAP, or CoSTAP, benefits from increased degrees of freedom (DoFs) and low computational cost while maintaining strong clutter suppression capabilities. However, this approach also introduces significant computational burdens in the co-array domain. To address this, we propose an approximate method for three-dimensional (3-D) clutter subspace estimation using discrete prolate spheroidal sequences (DPSS) to balance clutter suppression performance and computational cost. We first develop a 3-D clutter rank evaluation criterion to exploit the geometry of 3-D clutter in a general scenario. Following this, we present a clutter subspace rejection method to mitigate the effects of interference such as jammers. Compared to existing FDA-STAP algorithms, our proposed CoSTAP method offers superior clutter suppression performance, lower computational complexity, and enhanced robustness to interference. Numerical experiments validate the effectiveness and advantages of our method.
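The extra degrees of freedom come from working with lags p_i − p_j rather than with physical sensor positions. This minimal sketch covers only the spatial part. It assumes the common extended co-prime geometry: N sensors at spacing M and 2M sensors at spacing N, with M and N co-prime. The abstract does not give the exact layout, and the co-prime frequency offsets and pulse repetition intervals of C-Cube are not modeled. For M = 3 and N = 5, 10 physical sensors yield a contiguous set of lags from −17 to 17. Function names are hypothetical.

```python
from typing import List, Set

def coprime_positions(m: int, n: int) -> List[int]:
    """Extended co-prime array: n sensors at spacing m and 2m sensors at spacing n (unit = d)."""
    return sorted({m * i for i in range(n)} | {n * j for j in range(2 * m)})

def difference_coarray(pos: List[int]) -> Set[int]:
    """All pairwise lags p_i - p_j; these index the co-array covariance entries."""
    return {a - b for a in pos for b in pos}

def contiguous_extent(lags: Set[int]) -> int:
    """Largest L such that every lag in [-L, L] is present."""
    extent = 0
    while (extent + 1) in lags and -(extent + 1) in lags:
        extent += 1
    return extent

pos = coprime_positions(3, 5)
lags = difference_coarray(pos)
print(len(pos), len(lags), contiguous_extent(lags))
```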

23 Oct 2012

Recall, the proportion of relevant documents retrieved, is an important measure of effectiveness in information retrieval, particularly in the legal, patent, and medical domains. Where document sets are too large for exhaustive relevance assessment, recall can be estimated by assessing a random sample of documents; but an indication of the reliability of this estimate is also required. In this article, we examine several methods for estimating two-tailed recall confidence intervals. We find that the normal approximation in current use provides poor coverage in many circumstances, even when adjusted to correct its inappropriate symmetry. Analytic and Bayesian methods based on the ratio of binomials are generally more accurate, but are inaccurate on small populations. The method we recommend derives beta-binomial posteriors on retrieved and unretrieved yield, with fixed hyperparameters, and a Monte Carlo estimate of the posterior distribution of recall. We demonstrate that this method gives mean coverage at or near the nominal level, across several scenarios, while being balanced and stable. We offer advice on sampling design, including the allocation of assessments to the retrieved and unretrieved segments, and compare the proposed beta-binomial with the officially reported normal intervals for recent TREC Legal Track iterations.
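The recommended estimator can be sketched in three steps. First, sample each segment (retrieved and unretrieved) at random. Second, draw the number of unsampled relevant documents in each segment from its beta-binomial posterior predictive. Third, read the interval off the Monte Carlo distribution of recall, defined as relevant retrieved divided by total relevant. The Beta(0.5, 0.5) hyperparameters, the number of draws, and the function names are assumptions for illustration; the abstract states only that the hyperparameters are fixed.

```python
import random
from typing import Tuple

def beta_binomial_draw(rng: random.Random, n: int, a: float, b: float) -> int:
    """One draw from BetaBinomial(n, a, b): p ~ Beta(a, b), then Binomial(n, p)."""
    p = rng.betavariate(a, b)
    return sum(rng.random() < p for _ in range(n))

def recall_interval(n_ret: int, s_ret: int, k_ret: int,
                    n_unret: int, s_unret: int, k_unret: int,
                    alpha: float = 0.05, a: float = 0.5, b: float = 0.5,
                    draws: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Monte Carlo two-tailed interval for recall.

    n_*: segment sizes; s_*: documents sampled and assessed; k_*: relevant among them.
    """
    rng = random.Random(seed)
    recalls = []
    for _ in range(draws):
        r_ret = k_ret + beta_binomial_draw(rng, n_ret - s_ret, a + k_ret, b + s_ret - k_ret)
        r_unret = k_unret + beta_binomial_draw(rng, n_unret - s_unret,
                                               a + k_unret, b + s_unret - k_unret)
        total = r_ret + r_unret
        # Recall is undefined with no relevant documents; 1.0 is an arbitrary convention here.
        recalls.append(r_ret / total if total > 0 else 1.0)
    recalls.sort()
    lo = recalls[int(alpha / 2 * draws)]
    hi = recalls[int((1 - alpha / 2) * draws) - 1]
    return lo, hi

# Hypothetical assessment: 60 of 100 sampled retrieved docs relevant, 4 of 200 unretrieved.
print(recall_interval(500, 100, 60, 2000, 200, 4))
```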

Many-body localization (MBL) lends remarkable robustness to nonequilibrium phases of matter. Such phases can show topological and symmetry-breaking order in their ground and excited states, but they may also belong to an anomalous localized topological phase (ALT phase). All eigenstates in an ALT phase are trivial, in that they can be deformed to product states, but the entire Hamiltonian cannot be deformed to a trivial localized model without going through a delocalization transition. Using a correspondence between MBL phases with short-ranged entanglement and locality-preserving unitaries, called quantum cellular automata (QCA), we reduce the classification of ALT phases to that of QCA. This method extends to periodically (Floquet) and quasiperiodically driven ALT phases, and captures anomalous Floquet phases within the same framework as static phases. We considerably develop the study of the topology of QCA, allowing us to classify static and driven ALT phases in low dimensions. The QCA framework further generalizes to include symmetry-enriched ALT phases (SALT phases), which we also classify in low dimensions, and provides a large class of soluble models suitable for realization in quantum simulators. In systematizing the study of ALT phases, we both greatly extend the classification of interacting nonequilibrium systems and clarify a confusion in the literature which implicitly equates nontrivial Hamiltonians with nontrivial ground states.

26 Apr 2018

Laser-cooled lanthanide atoms are ideal candidates with which to study strong and unconventional quantum magnetism with exotic phases. Here, we use state-of-the-art close-coupling simulations to model quantum magnetism for pairs of ultracold spin-6 erbium lanthanide atoms placed in a deep optical lattice. In contrast to the widely used single-channel Hubbard model description of atoms and molecules in an optical lattice, we focus on the single-site multi-channel spin evolution due to spin-dependent contact, anisotropic van der Waals, and dipolar forces. This has allowed us to identify the leading mechanism, orbital anisotropy, that governs molecular spin dynamics among erbium atoms. The large magnetic moment and combined orbital angular momentum of the 4f-shell electrons are responsible for these strong anisotropic interactions and unconventional quantum magnetism. Multi-channel simulations of magnetic Cr atoms under similar trapping conditions show that their spin evolution is controlled by spin-dependent contact interactions that are distinct in nature from the orbital anisotropy in Er. The roles of an external magnetic field and of the aspect ratio of the lattice site in spin dynamics are also investigated.

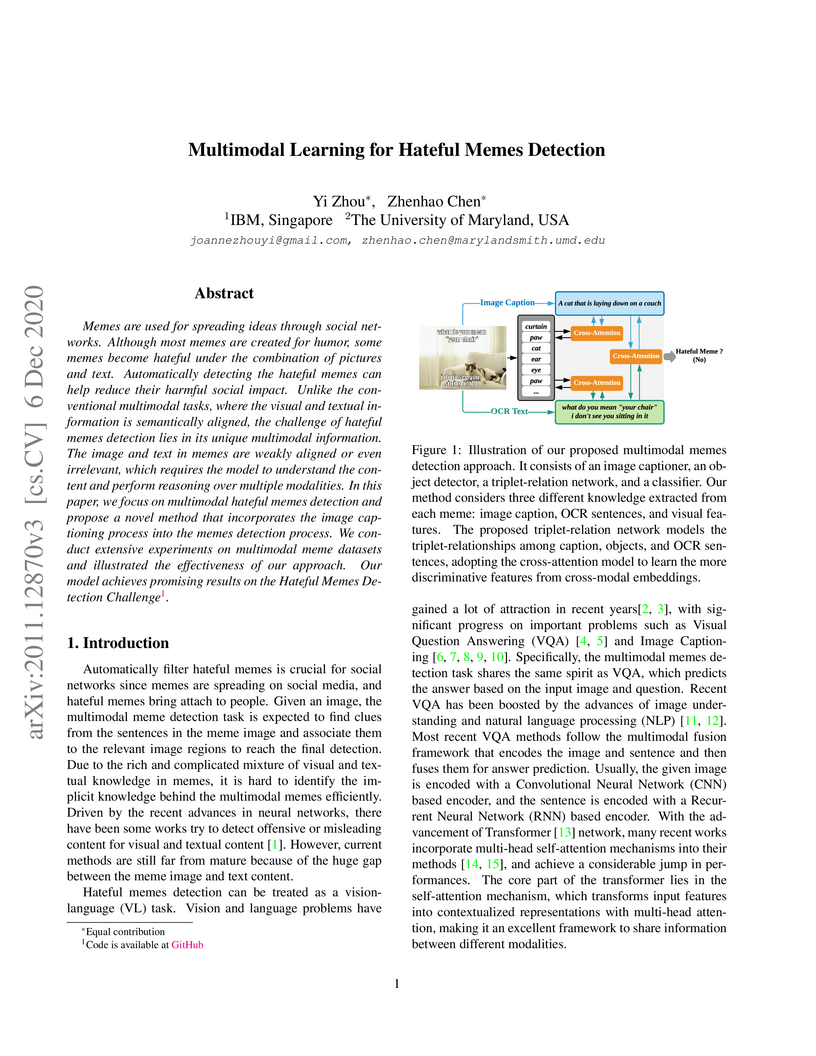

Memes are used to spread ideas through social networks. Although most memes are created for humor, some become hateful through the combination of image and text. Automatically detecting hateful memes can help reduce their harmful social impact. Unlike conventional multimodal tasks, where the visual and textual information is semantically aligned, hateful meme detection is challenging because of its distinctive multimodal information: the image and text in memes are weakly aligned or even unrelated, which requires the model to understand the content and reason over multiple modalities. In this paper, we focus on multimodal hateful meme detection and propose a novel method that incorporates the image captioning process into the meme detection process. We conduct extensive experiments on multimodal meme datasets and illustrate the effectiveness of our approach. Our model achieves promising results on the Hateful Memes Detection Challenge.

With the advent of JWST, humanity is close to characterizing the atmospheres of rocky exoplanets. These astronomical observations motivate us to understand exoplanetary atmospheres in order to constrain habitability. We study the influence of greenhouse gas supplementation on the atmospheres of TRAPPIST-1e, an Earth-like exoplanet, and of Earth itself by analyzing ExoCAM and CMIP6 model simulations. We find an analogous relationship between CO2 supplementation and amplified warming in non-irradiated regions (the night side and the poles); this spatial heterogeneity results in significant changes in global circulation. A dynamical systems framework provides additional insight into the vertical dynamics of the atmospheres. Indeed, we demonstrate that adding CO2 increases temporal stability near the surface and decreases stability at low pressures. Although Earth and TRAPPIST-1e occupy entirely different climate states, they share a similar relative response of climate dynamics to greenhouse gas supplementation.

We introduce the nested stochastic block model (NSBM) to cluster a collection of networks while simultaneously detecting communities within each network. NSBM has several appealing features, including the ability to work on unlabeled networks with potentially different node sets, the flexibility to model heterogeneous communities, and the means to automatically select the number of classes for the networks and the number of communities within each network. This is accomplished via a Bayesian model, with a novel application of the nested Dirichlet process (NDP) as a prior to jointly model the between-network and within-network clusters. The dependency introduced by the network data creates nontrivial challenges for the NDP, especially in the development of efficient samplers. For posterior inference, we propose several Markov chain Monte Carlo algorithms, including a standard Gibbs sampler, a collapsed Gibbs sampler, and two blocked Gibbs samplers, that ultimately return two levels of clustering labels from both within and across the networks. Extensive simulation studies demonstrate that the model provides very accurate estimates of both levels of the clustering structure. We also apply our model to two social network datasets that cannot be analyzed using any previous method in the literature due to the anonymity of the nodes and the varying number of nodes in each network.
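The nested Dirichlet process prior can be read generatively at two levels. Networks are assigned to classes through one set of stick-breaking weights. Each class then carries its own stick-breaking distribution over communities, from which the nodes of its networks draw their labels. This minimal sketch of that two-level prior uses a truncated stick-breaking approximation with assumed truncation levels and concentration parameters. It omits the stochastic-block-model edge likelihood and the Gibbs samplers, and the function names are hypothetical.

```python
import random
from typing import List, Tuple

def stick_breaking(rng: random.Random, concentration: float, k: int) -> List[float]:
    """Truncated GEM(concentration) weights; the last stick takes the remainder."""
    weights, remaining = [], 1.0
    for _ in range(k - 1):
        v = rng.betavariate(1.0, concentration)
        weights.append(remaining * v)
        remaining *= 1.0 - v
    weights.append(remaining)
    return weights

def sample_ndp_labels(network_sizes: List[int], alpha: float, beta: float,
                      k_classes: int = 10, l_comms: int = 10,
                      seed: int = 0) -> List[Tuple[int, List[int]]]:
    """Draw (network class, node community labels) from a truncated nested DP prior.

    Networks in the same class share that class's community distribution.
    """
    rng = random.Random(seed)
    class_w = stick_breaking(rng, alpha, k_classes)
    comm_w = [stick_breaking(rng, beta, l_comms) for _ in range(k_classes)]
    out = []
    for n_nodes in network_sizes:
        c = rng.choices(range(k_classes), weights=class_w)[0]
        nodes = rng.choices(range(l_comms), weights=comm_w[c], k=n_nodes)
        out.append((c, nodes))
    return out

print(sample_ndp_labels([5, 8, 3], alpha=1.0, beta=1.0))
```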
