UMass Amherst

Researchers analyzed sequential and parallel asynchronous protocols for entanglement distribution in quantum networks, quantifying their performance under realistic conditions including classical communication delays and memory decoherence. The study found that while parallel protocols offer a marginal rate improvement, sequential schemes are often more practical, and a memory cutoff strategy significantly boosts secret key rates by mitigating decoherence.

MindJourney enhances Vision-Language Models (VLMs) in spatial reasoning tasks by enabling them to interactively explore imagined 3D environments using controllable video diffusion models. The framework yields an average 7.7% top-1 accuracy gain on the SAT benchmark, pushing models like OpenAI o1 from 74.6% to 84.7% accuracy on SAT-Real.

QServe introduces a W4A8KV4 quantization scheme and an optimized inference system to enhance large language model serving efficiency. It achieves 1.2-3.5x higher throughput than TensorRT-LLM and enables a 3x reduction in GPU dollar cost for LLM serving on L40S GPUs.

The 3D-LLM framework from a collaboration including MIT and UMass Amherst enables large language models to understand and reason about the 3D physical world. It achieves this by generating large-scale 3D-language data and deriving 3D features from multi-view 2D images, demonstrating improved performance across tasks like 3D question answering and object grounding compared to prior methods.

Researchers at the University of Maryland and Microsoft introduced ONERULER, a multilingual benchmark with 26 languages for evaluating long-context understanding in large language models up to 128K tokens. The benchmark reveals that performance disparities between high and low-resource languages widen with context length, and models struggle with aggregation tasks and correctly identifying unanswerable questions.

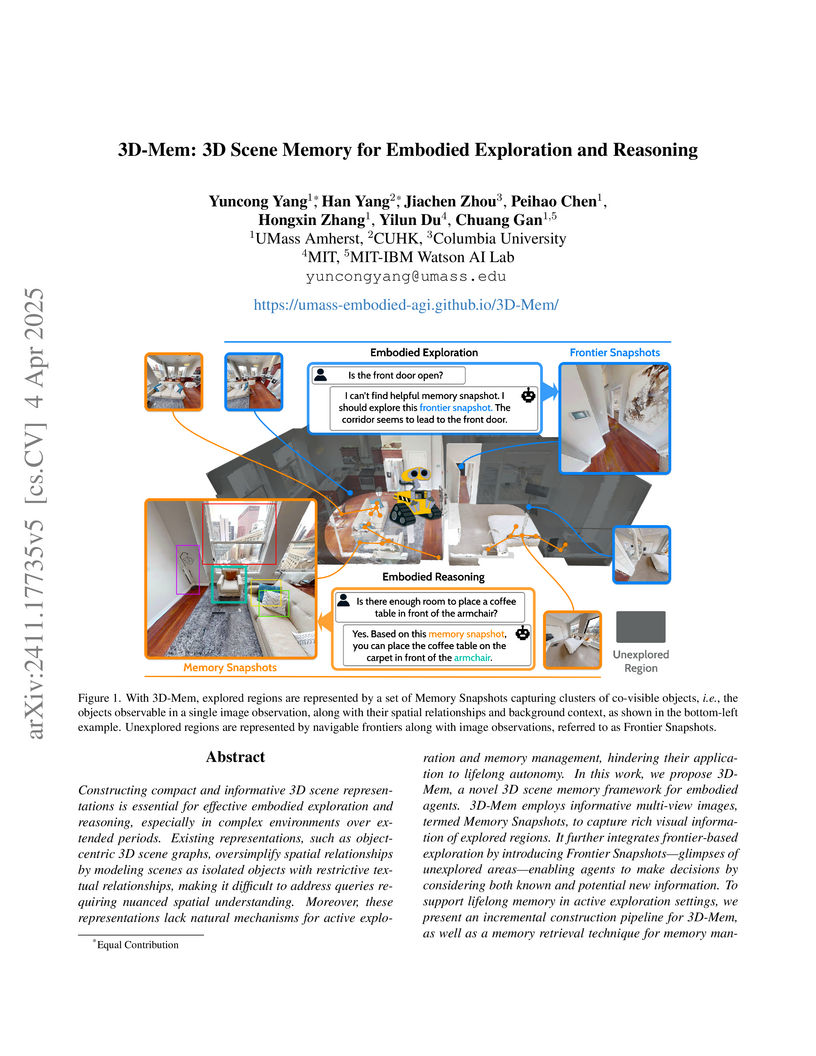

3D-Mem introduces a scalable 3D scene memory framework for embodied agents, leveraging multi-view image "Memory Snapshots" for explored regions and "Frontier Snapshots" for unexplored areas. This enables efficient lifelong exploration and enhanced spatial reasoning, outperforming baselines in various embodied question answering and navigation tasks by effectively integrating with Vision-Language Models.

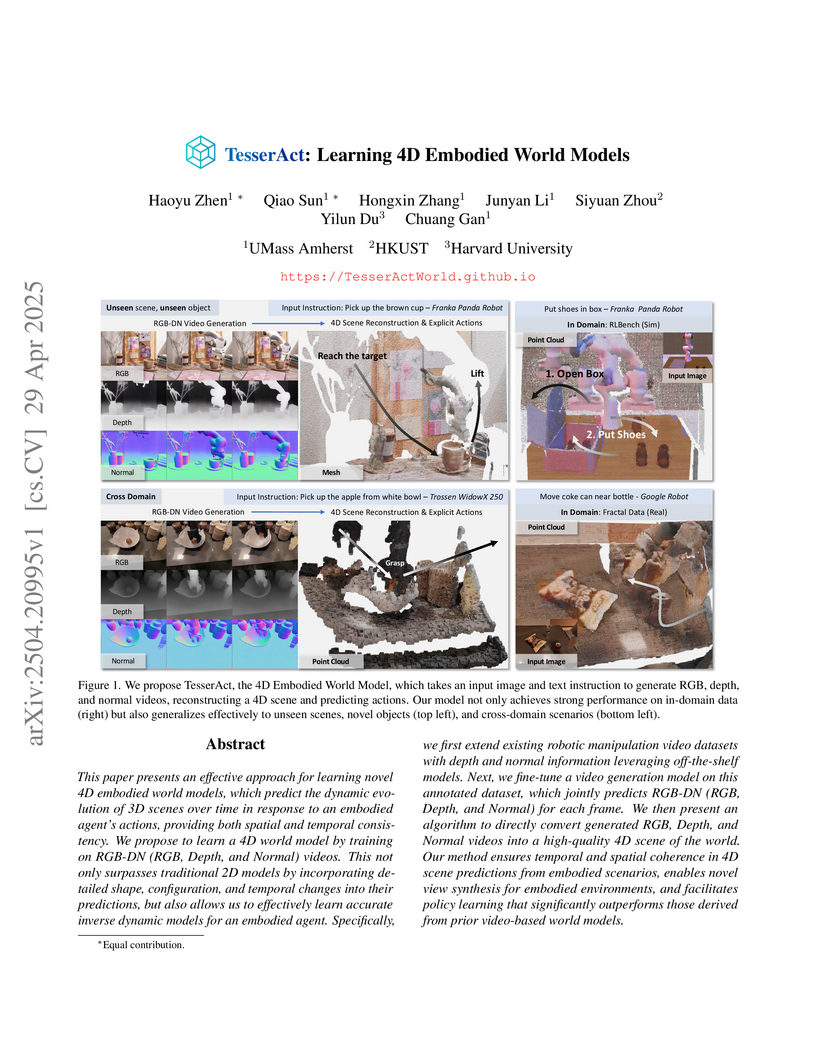

TesserAct introduces a 4D embodied world model that predicts sequences of RGB, depth, and normal maps, enabling robust reconstruction of dynamic 3D scenes over time. This approach allows robots to achieve higher success rates in various manipulation tasks by providing enhanced spatial and temporal understanding.

The TALE framework introduces a method for large language models to dynamically adjust reasoning token usage, addressing the token overhead of Chain-of-Thought prompting. This approach, implemented through prompt-based estimation or model fine-tuning, achieves an average 67% reduction in output tokens and 59% reduction in monetary expense while largely preserving reasoning accuracy across various models and tasks.

Researchers from UMass Amherst, Zhejiang University, and MIT-IBM Watson AI Lab developed Budget Guidance, a fine-tuning-free method for controlling the reasoning length of large language models. The approach modulates an LLM’s generation probabilities using a lightweight auxiliary predictor to ensure reasoning stays within a specified budget, improving token efficiency and reducing inference costs while maintaining high task performance, outperforming previous hard-cutoff methods by up to 26% accuracy on math reasoning benchmarks.

RoboGen is a framework that leverages foundation models to automatically generate diverse robotic tasks, construct corresponding simulation environments, and create training supervisions for robot skill learning. The system enables the continuous, scalable generation of training data, demonstrating an average skill learning success rate of 0.774 across 69 varied tasks including manipulation and locomotion.

CameraBench, a new benchmark and dataset developed in collaboration with cinematographers, is introduced to evaluate and improve computational models' understanding of camera motions in videos. Fine-tuning large vision-language models on this high-quality dataset significantly boosts their performance in classifying camera movements and answering related questions, often matching or exceeding geometric methods.

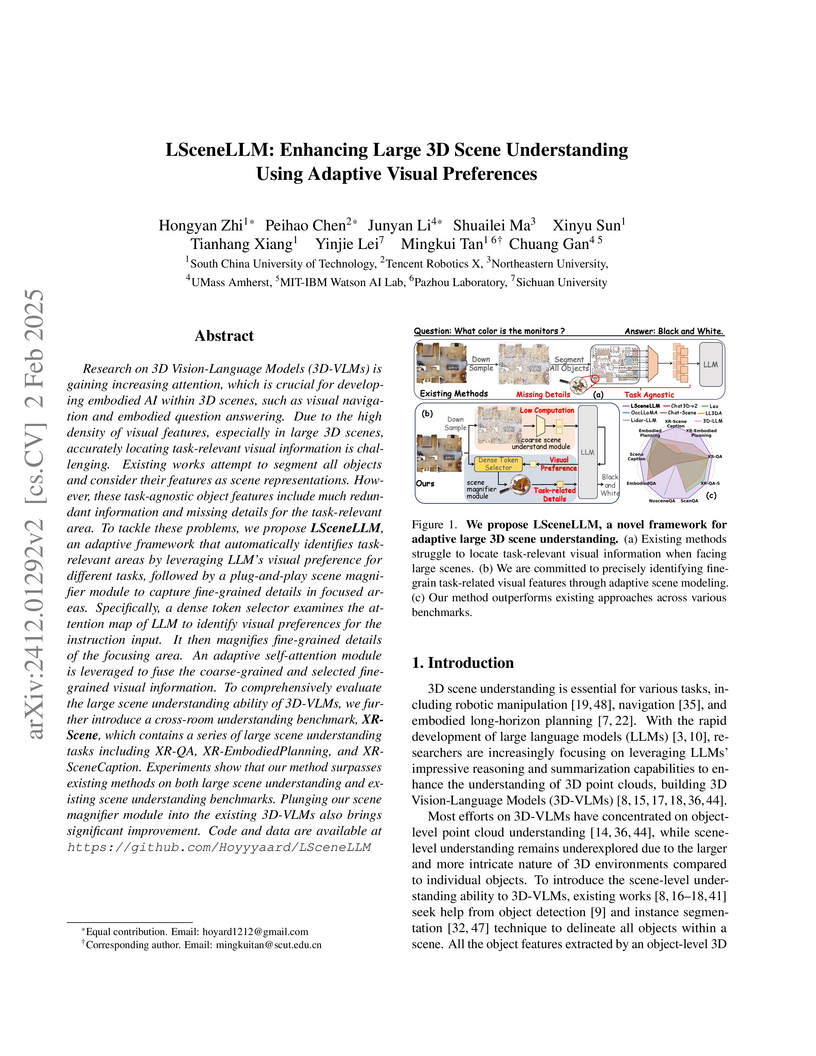

LSceneLLM, developed by researchers from South China University of Technology, Tencent Robotics X, and others, presents an adaptive framework for large 3D scene understanding that mimics human visual processing by focusing on task-relevant regions. The approach achieves state-of-the-art performance across large indoor (XR-Scene), single-room indoor (ScanQA), and large outdoor (NuscenesQA) benchmarks, significantly improving fine-grained detail recognition and setting a new standard for embodied AI applications.

Researchers from UC Berkeley, CMU, UW-Madison, and other institutions successfully adapt Reinforcement Learning from Human Feedback (RLHF) to large multimodal models (LMMs), introducing Factually Augmented RLHF (Fact-RLHF) to mitigate hallucinations and improve alignment with human preferences. The resulting LLaVA-RLHF model shows a 60% improvement in hallucination reduction on a new benchmark, MMHAL-BENCH, and achieves 95.6% of GPT-4's performance on LLaVA-Bench for general alignment.

In this work, we present TalkCuts, a large-scale dataset designed to facilitate the study of multi-shot human speech video generation. Unlike existing datasets that focus on single-shot, static viewpoints, TalkCuts offers 164k clips totaling over 500 hours of high-quality human speech videos with diverse camera shots, including close-up, half-body, and full-body views. The dataset includes detailed textual descriptions, 2D keypoints and 3D SMPL-X motion annotations, covering over 10k identities, enabling multimodal learning and evaluation. As a first attempt to showcase the value of the dataset, we present Orator, an LLM-guided multi-modal generation framework as a simple baseline, where the language model functions as a multi-faceted director, orchestrating detailed specifications for camera transitions, speaker gesticulations, and vocal modulation. This architecture enables the synthesis of coherent long-form videos through our integrated multi-modal video generation module. Extensive experiments in both pose-guided and audio-driven settings show that training on TalkCuts significantly enhances the cinematographic coherence and visual appeal of generated multi-shot speech videos. We believe TalkCuts provides a strong foundation for future work in controllable, multi-shot speech video generation and broader multimodal learning.

08 Oct 2025

LangSplatV2 presents an optimized architecture for high-dimensional 3D language Gaussian Splatting, achieving real-time open-vocabulary text querying at 384.6 FPS by eliminating the decoding bottleneck, while also demonstrating improved 3D semantic segmentation accuracy across multiple benchmarks.

Satori trains a single Large Language Model (LLM) to perform autoregressive search internally using a Chain-of-Action-Thought format and a two-stage reinforcement learning pipeline. The approach achieves state-of-the-art performance among small models on mathematical reasoning benchmarks and demonstrates strong generalization across diverse out-of-domain tasks by internalizing self-reflection and self-exploration capabilities.

The VERISCORE framework, developed by researchers at the University of Massachusetts Amherst, introduces an automated metric for evaluating the factual accuracy of long-form text generated by large language models. It specifically focuses on "verifiable claims" to prevent unfair penalties and incorporates context-aware claim extraction, with human evaluators preferring its extracted claims 93% of the time over a previous method.

This work introduces fast adaptation strategies for Behavioral Foundation Models (BFMs) that rapidly improve upon their zero-shot performance. The proposed methods, ReLA and LoLA, achieve up to 50% performance gains by optimizing within the BFM's low-dimensional latent policy space, with LoLA notably demonstrating monotonic improvement and computational efficiency 157 times faster than actor-critic alternatives.

SALMON introduces a novel Reinforcement Learning from AI Feedback (RLAIF) paradigm, utilizing an instructable reward model conditioned by natural language principles to align large language models. This approach enabled the Dromedary-2-70b model to outperform LLaMA-2-Chat-70b on various benchmarks, including MT-Bench, while requiring significantly less human supervision.

Autoregressive models (ARMs), which predict subsequent tokens one-by-one ``from left to right,'' have achieved significant success across a wide range of sequence generation tasks. However, they struggle to accurately represent sequences that require satisfying sophisticated constraints or whose sequential dependencies are better addressed by out-of-order generation. Masked Diffusion Models (MDMs) address some of these limitations, but the process of unmasking multiple tokens simultaneously in MDMs can introduce incoherences, and MDMs cannot handle arbitrary infilling constraints when the number of tokens to be filled in is not known in advance. In this work, we introduce Insertion Language Models (ILMs), which learn to insert tokens at arbitrary positions in a sequence -- that is, they select jointly both the position and the vocabulary element to be inserted. By inserting tokens one at a time, ILMs can represent strong dependencies between tokens, and their ability to generate sequences in arbitrary order allows them to accurately model sequences where token dependencies do not follow a left-to-right sequential structure. To train ILMs, we propose a tailored network parameterization and use a simple denoising objective. Our empirical evaluation demonstrates that ILMs outperform both ARMs and MDMs on common planning tasks. Furthermore, we show that ILMs outperform MDMs and perform on par with ARMs in an unconditional text generation task while offering greater flexibility than MDMs in arbitrary-length text infilling. The code is available at: this https URL .

There are no more papers matching your filters at the moment.