University of Bucharest

This survey by Soviany, Ionescu, Rota, and Sebe offers a comprehensive review of Curriculum Learning (CL), formalizing its methods, proposing a new multi-perspective taxonomy, and validating it through automated hierarchical clustering of nearly 200 papers. It demonstrates CL's consistent benefits across diverse machine learning domains and identifies critical areas for future research.

Denoising diffusion models represent a recent emerging topic in computer vision, demonstrating remarkable results in the area of generative modeling. A diffusion model is a deep generative model that is based on two stages, a forward diffusion stage and a reverse diffusion stage. In the forward diffusion stage, the input data is gradually perturbed over several steps by adding Gaussian noise. In the reverse stage, a model is tasked at recovering the original input data by learning to gradually reverse the diffusion process, step by step. Diffusion models are widely appreciated for the quality and diversity of the generated samples, despite their known computational burdens, i.e. low speeds due to the high number of steps involved during sampling. In this survey, we provide a comprehensive review of articles on denoising diffusion models applied in vision, comprising both theoretical and practical contributions in the field. First, we identify and present three generic diffusion modeling frameworks, which are based on denoising diffusion probabilistic models, noise conditioned score networks, and stochastic differential equations. We further discuss the relations between diffusion models and other deep generative models, including variational auto-encoders, generative adversarial networks, energy-based models, autoregressive models and normalizing flows. Then, we introduce a multi-perspective categorization of diffusion models applied in computer vision. Finally, we illustrate the current limitations of diffusion models and envision some interesting directions for future research.

The ever growing realism and quality of generated videos makes it

increasingly harder for humans to spot deepfake content, who need to rely more

and more on automatic deepfake detectors. However, deepfake detectors are also

prone to errors, and their decisions are not explainable, leaving humans

vulnerable to deepfake-based fraud and misinformation. To this end, we

introduce ExDDV, the first dataset and benchmark for Explainable Deepfake

Detection in Video. ExDDV comprises around 5.4K real and deepfake videos that

are manually annotated with text descriptions (to explain the artifacts) and

clicks (to point out the artifacts). We evaluate a number of vision-language

models on ExDDV, performing experiments with various fine-tuning and in-context

learning strategies. Our results show that text and click supervision are both

required to develop robust explainable models for deepfake videos, which are

able to localize and describe the observed artifacts. Our novel dataset and

code to reproduce the results are available at

this https URL

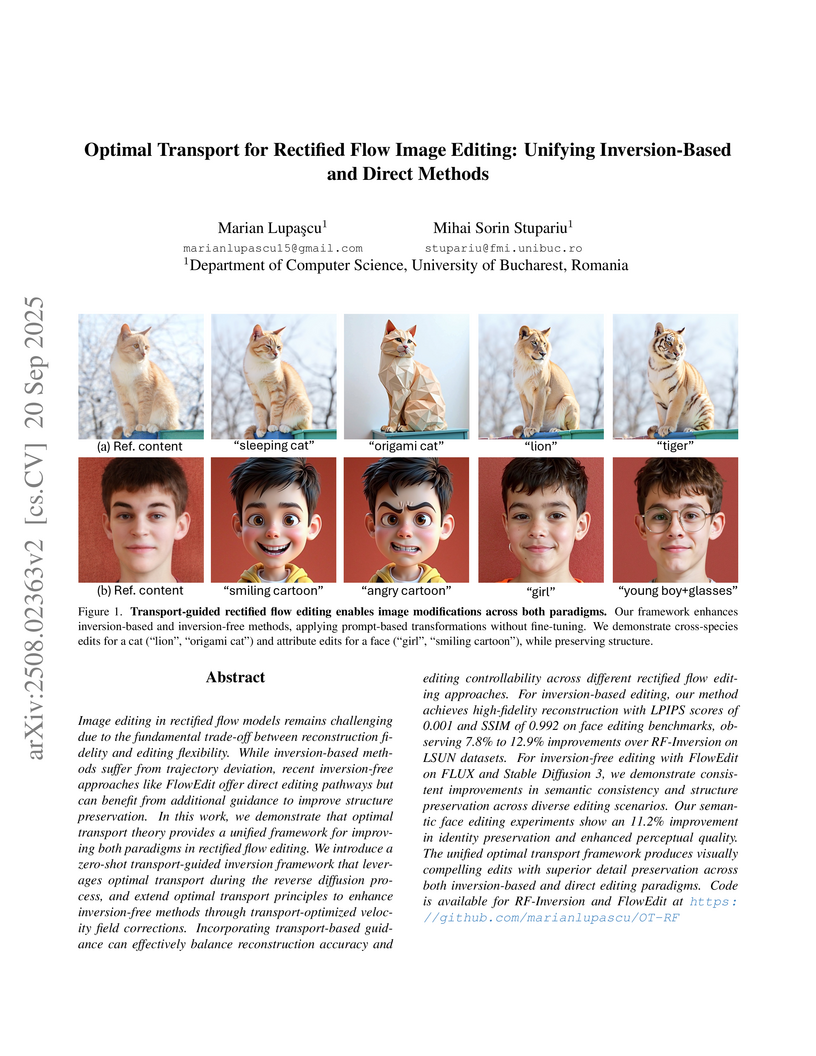

Image editing in rectified flow models remains challenging due to the fundamental trade-off between reconstruction fidelity and editing flexibility. While inversion-based methods suffer from trajectory deviation, recent inversion-free approaches like FlowEdit offer direct editing pathways but can benefit from additional guidance to improve structure preservation. In this work, we demonstrate that optimal transport theory provides a unified framework for improving both paradigms in rectified flow editing. We introduce a zero-shot transport-guided inversion framework that leverages optimal transport during the reverse diffusion process, and extend optimal transport principles to enhance inversion-free methods through transport-optimized velocity field corrections. Incorporating transport-based guidance can effectively balance reconstruction accuracy and editing controllability across different rectified flow editing approaches. For inversion-based editing, our method achieves high-fidelity reconstruction with LPIPS scores of 0.001 and SSIM of 0.992 on face editing benchmarks, observing 7.8% to 12.9% improvements over RF-Inversion on LSUN datasets. For inversion-free editing with FlowEdit on FLUX and Stable Diffusion 3, we demonstrate consistent improvements in semantic consistency and structure preservation across diverse editing scenarios. Our semantic face editing experiments show an 11.2% improvement in identity preservation and enhanced perceptual quality. The unified optimal transport framework produces visually compelling edits with superior detail preservation across both inversion-based and direct editing paradigms. Code is available for RF-Inversion and FlowEdit at: this https URL

This survey provides a comprehensive analysis of Masked Image Modeling (MIM) in computer vision, presenting a structured taxonomy of methods, formalizing two dominant paradigms, and aggregating performance benchmarks. It systematically organizes the rapidly expanding field to clarify concepts, compare approaches, and identify future research directions.

With the recent advancements in generative modeling, the realism of deepfake content has been increasing at a steady pace, even reaching the point where people often fail to detect manipulated media content online, thus being deceived into various kinds of scams. In this paper, we survey deepfake generation and detection techniques, including the most recent developments in the field, such as diffusion models and Neural Radiance Fields. Our literature review covers all deepfake media types, comprising image, video, audio and multimodal (audio-visual) content. We identify various kinds of deepfakes, according to the procedure used to alter or generate the fake content. We further construct a taxonomy of deepfake generation and detection methods, illustrating the important groups of methods and the domains where these methods are applied. Next, we gather datasets used for deepfake detection and provide updated rankings of the best performing deepfake detectors on the most popular datasets. In addition, we develop a novel multimodal benchmark to evaluate deepfake detectors on out-of-distribution content. The results indicate that state-of-the-art detectors fail to generalize to deepfake content generated by unseen deepfake generators. Finally, we propose future directions to obtain robust and powerful deepfake detectors. Our project page and new benchmark are available at this https URL.

The intersection of AI and legal systems presents a growing need for tools that support legal education, particularly in under-resourced languages such as Romanian. In this work, we aim to evaluate the capabilities of Large Language Models (LLMs) and Vision-Language Models (VLMs) in understanding and reasoning about Romanian driving law through textual and visual question-answering tasks. To facilitate this, we introduce RoD-TAL, a novel multimodal dataset comprising Romanian driving test questions, text-based and image-based, alongside annotated legal references and human explanations. We implement and assess retrieval-augmented generation (RAG) pipelines, dense retrievers, and reasoning-optimized models across tasks including Information Retrieval (IR), Question Answering (QA), Visual IR, and Visual QA. Our experiments demonstrate that domain-specific fine-tuning significantly enhances retrieval performance. At the same time, chain-of-thought prompting and specialized reasoning models improve QA accuracy, surpassing the minimum grades required to pass driving exams. However, visual reasoning remains challenging, highlighting the potential and the limitations of applying LLMs and VLMs to legal education.

08 Oct 2025

In this paper we derive quantitative boundary Hölder estimates, with explicit constants, for the inhomogeneous Poisson problem in a bounded open set D⊂Rd.

Our approach has two main steps: firstly, we consider an arbitrary D as above and prove that the boundary α-Hölder regularity of the solution the Poisson equation is controlled, with explicit constants, by the Hölder seminorm of the boundary data, the Lγ-norm of the forcing term with γ>d/2, and the α/2-moment of the exit time from D of the Brownian motion.

Secondly, we derive explicit estimates for the α/2-moment of the exit time in terms of the distance to the boundary, the regularity of the domain D, and α. Using this approach, we derive explicit estimates for the same problem in domains satisfying exterior ball conditions, respectively exterior cone/wedge conditions, in terms of simple geometric features.

As a consequence we also obtain explicit constants for pointwise estimates for the Green function and for the gradient of the solution.

The obtained estimates can be employed to bypass the curse of high dimensions when aiming to approximate the solution of the Poisson problem using neural networks, obtaining polynomial scaling with dimension, which in some cases can be shown to be optimal.

Researchers from the University of Bucharest and collaborating institutions introduce MAVOS-DD, a multilingual audio-video benchmark for deepfake detection that evaluates model performance across eight languages and multiple generation methods, revealing significant performance degradation when models encounter unseen deepfake techniques or languages despite strong in-domain results.

The increasing prevalence of mental health disorders globally highlights the

urgent need for effective digital screening methods that can be used in

multilingual contexts. Most existing studies, however, focus on English data,

overlooking critical mental health signals that may be present in non-English

texts. To address this important gap, we present the first survey on the

detection of mental health disorders using multilingual social media data. We

investigate the cultural nuances that influence online language patterns and

self-disclosure behaviors, and how these factors can impact the performance of

NLP tools. Additionally, we provide a comprehensive list of multilingual data

collections that can be used for developing NLP models for mental health

screening. Our findings can inform the design of effective multilingual mental

health screening tools that can meet the needs of diverse populations,

ultimately improving mental health outcomes on a global scale.

This study investigates the Lagrangian properties of ion turbulent transport driven by drift-type turbulence in tokamak plasmas. Despite the compressible and inhomogeneous nature of Eulerian gyrocenter drifts, numerical simulations with the T3ST code reveal approximate ergodicity, stationarity, and time-symmetry. These characteristics are attributed to broad initial phase-space distributions that support ergodic mixing. Moreover, relatively minor constraints on the initial distributions are found to have negligible effects on transport levels.

04 Aug 2025

We present the first fixed-length elementary closed-form expressions for the prime-counting function, π(n), and the n-th prime number, p(n). These expressions are arithmetic terms, requiring only a finite and fixed number of elementary arithmetic operations from the set: addition, subtraction, multiplication, integer division, and exponentiation. Mazzanti proved that every Kalmar function can be represented as an arithmetic term. We develop an arithmetic term representing the prime omega function, ω(n), which counts the number of distinct prime divisors of a positive integer n. From this term, we find immediately an arithmetic term for the prime-counting function, π(n). Combining these results with a new arithmetic term for binomial coefficients and novel prime-related exponential Diophantine equations, we manage to develop an arithmetic term for the n-th prime number, p(n), thereby providing a constructive solution to the fundamental question: Is there an order to the primes?

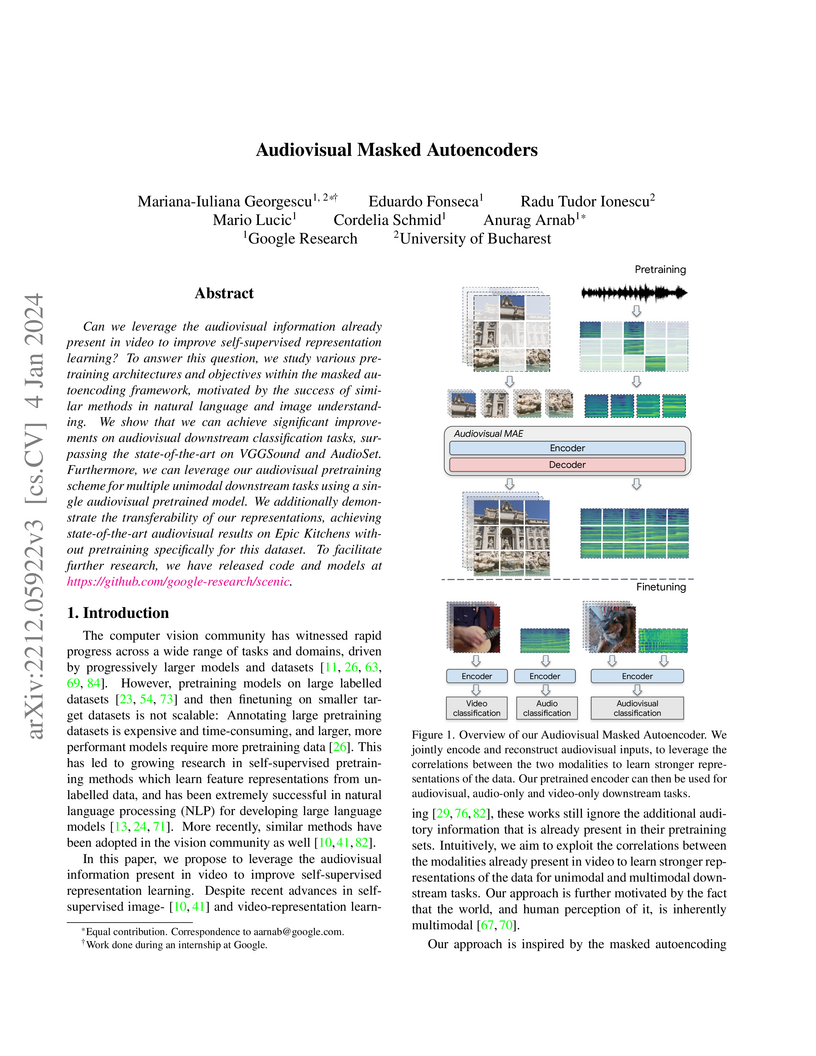

Can we leverage the audiovisual information already present in video to improve self-supervised representation learning? To answer this question, we study various pretraining architectures and objectives within the masked autoencoding framework, motivated by the success of similar methods in natural language and image understanding. We show that we can achieve significant improvements on audiovisual downstream classification tasks, surpassing the state-of-the-art on VGGSound and AudioSet. Furthermore, we can leverage our audiovisual pretraining scheme for multiple unimodal downstream tasks using a single audiovisual pretrained model. We additionally demonstrate the transferability of our representations, achieving state-of-the-art audiovisual results on Epic Kitchens without pretraining specifically for this dataset.

Anomaly detection in video is a challenging computer vision problem. Due to the lack of anomalous events at training time, anomaly detection requires the design of learning methods without full supervision. In this paper, we approach anomalous event detection in video through self-supervised and multi-task learning at the object level. We first utilize a pre-trained detector to detect objects. Then, we train a 3D convolutional neural network to produce discriminative anomaly-specific information by jointly learning multiple proxy tasks: three self-supervised and one based on knowledge distillation. The self-supervised tasks are: (i) discrimination of forward/backward moving objects (arrow of time), (ii) discrimination of objects in consecutive/intermittent frames (motion irregularity) and (iii) reconstruction of object-specific appearance information. The knowledge distillation task takes into account both classification and detection information, generating large prediction discrepancies between teacher and student models when anomalies occur. To the best of our knowledge, we are the first to approach anomalous event detection in video as a multi-task learning problem, integrating multiple self-supervised and knowledge distillation proxy tasks in a single architecture. Our lightweight architecture outperforms the state-of-the-art methods on three benchmarks: Avenue, ShanghaiTech and UCSD Ped2. Additionally, we perform an ablation study demonstrating the importance of integrating self-supervised learning and normality-specific distillation in a multi-task learning setting.

Distributed Denial of Service attacks represent an active cybersecurity

research problem. Recent research shifted from static rule-based defenses

towards AI-based detection and mitigation. This comprehensive survey covers

several key topics. Preeminently, state-of-the-art AI detection methods are

discussed. An in-depth taxonomy based on manual expert hierarchies and an

AI-generated dendrogram are provided, thus settling DDoS categorization

ambiguities. An important discussion on available datasets follows, covering

data format options and their role in training AI detection methods together

with adversarial training and examples augmentation. Beyond detection, AI based

mitigation techniques are surveyed as well. Finally, multiple open research

directions are proposed.

We propose an efficient abnormal event detection model based on a lightweight masked auto-encoder (AE) applied at the video frame level. The novelty of the proposed model is threefold. First, we introduce an approach to weight tokens based on motion gradients, thus shifting the focus from the static background scene to the foreground objects. Second, we integrate a teacher decoder and a student decoder into our architecture, leveraging the discrepancy between the outputs given by the two decoders to improve anomaly detection. Third, we generate synthetic abnormal events to augment the training videos, and task the masked AE model to jointly reconstruct the original frames (without anomalies) and the corresponding pixel-level anomaly maps. Our design leads to an efficient and effective model, as demonstrated by the extensive experiments carried out on four benchmarks: Avenue, ShanghaiTech, UBnormal and UCSD Ped2. The empirical results show that our model achieves an excellent trade-off between speed and accuracy, obtaining competitive AUC scores, while processing 1655 FPS. Hence, our model is between 8 and 70 times faster than competing methods. We also conduct an ablation study to justify our design. Our code is freely available at: this https URL.

Masked image modeling has been demonstrated as a powerful pretext task for generating robust representations that can be effectively generalized across multiple downstream tasks. Typically, this approach involves randomly masking patches (tokens) in input images, with the masking strategy remaining unchanged during training. In this paper, we propose a curriculum learning approach that updates the masking strategy to continually increase the complexity of the self-supervised reconstruction task. We conjecture that, by gradually increasing the task complexity, the model can learn more sophisticated and transferable representations. To facilitate this, we introduce a novel learnable masking module that possesses the capability to generate masks of different complexities, and integrate the proposed module into masked autoencoders (MAE). Our module is jointly trained with the MAE, while adjusting its behavior during training, transitioning from a partner to the MAE (optimizing the same reconstruction loss) to an adversary (optimizing the opposite loss), while passing through a neutral state. The transition between these behaviors is smooth, being regulated by a factor that is multiplied with the reconstruction loss of the masking module. The resulting training procedure generates an easy-to-hard curriculum. We train our Curriculum-Learned Masked Autoencoder (CL-MAE) on ImageNet and show that it exhibits superior representation learning capabilities compared to MAE. The empirical results on five downstream tasks confirm our conjecture, demonstrating that curriculum learning can be successfully used to self-supervise masked autoencoders. We release our code at this https URL.

Mariana-Iuliana Georgescu from the University of Bucharest developed a framework for unsupervised anomaly detection in medical images, combining Masked Autoencoders with a pseudo-supervised anomaly classifier. The method achieved an AUROC of 0.899 on BRATS2020 and 0.634 on LUNA16, outperforming several state-of-the-art methods.

Google DeepMind

Google DeepMind University of Cambridge

University of Cambridge Harvard UniversityUniversity of Zurich

Harvard UniversityUniversity of Zurich National University of Singapore

National University of Singapore University of Oxford

University of Oxford Zhejiang University

Zhejiang University University of Copenhagen

University of Copenhagen City University of Hong KongUniversity of GranadaUniversity of Exeter

City University of Hong KongUniversity of GranadaUniversity of Exeter King’s College LondonUtrecht UniversityUniversity of Vienna

King’s College LondonUtrecht UniversityUniversity of Vienna University of WarwickLondon School of Economics and Political ScienceUniversity of LeedsJagiellonian UniversityUniversity of KentMax Planck Institute for Human DevelopmentXian Jiaotong UniversityUniversity of KwaZulu-NatalNortheastern University LondonVilnius universityUniversity of BucharestFernUniversität in HagenCity, University of LondonLudwigs-Maximilian-Universität München

University of WarwickLondon School of Economics and Political ScienceUniversity of LeedsJagiellonian UniversityUniversity of KentMax Planck Institute for Human DevelopmentXian Jiaotong UniversityUniversity of KwaZulu-NatalNortheastern University LondonVilnius universityUniversity of BucharestFernUniversität in HagenCity, University of LondonLudwigs-Maximilian-Universität MünchenHow we should design and interact with social artificial intelligence depends

on the socio-relational role the AI is meant to emulate or occupy. In human

society, relationships such as teacher-student, parent-child, neighbors,

siblings, or employer-employee are governed by specific norms that prescribe or

proscribe cooperative functions including hierarchy, care, transaction, and

mating. These norms shape our judgments of what is appropriate for each

partner. For example, workplace norms may allow a boss to give orders to an

employee, but not vice versa, reflecting hierarchical and transactional

expectations. As AI agents and chatbots powered by large language models are

increasingly designed to serve roles analogous to human positions - such as

assistant, mental health provider, tutor, or romantic partner - it is

imperative to examine whether and how human relational norms should extend to

human-AI interactions. Our analysis explores how differences between AI systems

and humans, such as the absence of conscious experience and immunity to

fatigue, may affect an AI's capacity to fulfill relationship-specific functions

and adhere to corresponding norms. This analysis, which is a collaborative

effort by philosophers, psychologists, relationship scientists, ethicists,

legal experts, and AI researchers, carries important implications for AI

systems design, user behavior, and regulation. While we accept that AI systems

can offer significant benefits such as increased availability and consistency

in certain socio-relational roles, they also risk fostering unhealthy

dependencies or unrealistic expectations that could spill over into human-human

relationships. We propose that understanding and thoughtfully shaping (or

implementing) suitable human-AI relational norms will be crucial for ensuring

that human-AI interactions are ethical, trustworthy, and favorable to human

well-being.

Northeastern University

Northeastern University Imperial College LondonUniversity of Melbourne

Imperial College LondonUniversity of Melbourne McGill UniversityLancaster UniversityMBZUAI

McGill UniversityLancaster UniversityMBZUAI University of AlbertaUppsala UniversityUniversity of LiverpoolNational Research Council Canada

University of AlbertaUppsala UniversityUniversity of LiverpoolNational Research Council Canada Technical University of MunichCardiff UniversityUniversity of Santiago de CompostelaSkoltechSanta Clara UniversityIIIT Hyderabad

Technical University of MunichCardiff UniversityUniversity of Santiago de CompostelaSkoltechSanta Clara UniversityIIIT Hyderabad University of GöttingenHamburg UniversityUniversity of PretoriaUniversity of YorkUniversitas IndonesiaNational University of Science and Technology POLITEHNICA BucharestUniversity of PortoUniversitat Politécnica de ValénciaInstitut Teknologi BandungSailplane AIUniversity of BucharestAl Akhawayn UniversityMaseno UniversityBayero University KanoCentro Universitário FEIKaduna State UniversityUniversidad Politécnica Salesiana

University of GöttingenHamburg UniversityUniversity of PretoriaUniversity of YorkUniversitas IndonesiaNational University of Science and Technology POLITEHNICA BucharestUniversity of PortoUniversitat Politécnica de ValénciaInstitut Teknologi BandungSailplane AIUniversity of BucharestAl Akhawayn UniversityMaseno UniversityBayero University KanoCentro Universitário FEIKaduna State UniversityUniversidad Politécnica SalesianaThe BRIGHTER project introduces a human-annotated, multi-labeled dataset for textual emotion recognition across 28 languages, emphasizing under-resourced ones from Africa, Asia, Eastern Europe, and Latin America, which includes emotion intensity for a subset. This resource provides benchmarks for multilingual and large language models, revealing their capabilities and limitations in diverse linguistic and cultural contexts.

There are no more papers matching your filters at the moment.