University of Ghent

As large language models (LLMs) are increasingly used in human-AI interactions, their social reasoning capabilities in interpersonal contexts are critical. We introduce SCRIPTS, a 1k-dialogue dataset in English and Korean, sourced from movie scripts. The task involves evaluating models' social reasoning capability to infer the interpersonal relationships (e.g., friends, sisters, lovers) between speakers in each dialogue. Each dialogue is annotated with probabilistic relational labels (Highly Likely, Less Likely, Unlikely) by native (or equivalent) Korean and English speakers from Korea and the U.S. Evaluating nine models on our task, current proprietary LLMs achieve around 75-80% on the English dataset, whereas their performance on Korean drops to 58-69%. More strikingly, models select Unlikely relationships in 10-25% of their responses. Furthermore, we find that thinking models and chain-of-thought prompting, effective for general reasoning, provide minimal benefits for social reasoning and occasionally amplify social biases. Our findings reveal significant limitations in current LLMs' social reasoning capabilities, highlighting the need for efforts to develop socially-aware language models.

Quantum many-body scars (QMBS) serve as important examples of ergodicity-breaking phenomena in quantum many-body systems. Despite recent extensive studies, exact QMBS are rare in dimensions higher than one. In this paper, we study a two-dimensional quantum Z2 gauge model that is dual to a two-dimensional spin-1/2 XY model defined on bipartite graphs. We identify the exact eigenstates of the XY model with a tower structure as exact QMBS. Exploiting the duality transformation, we show that the exact QMBS of the XY model (and XXZ model) after the transformation are the exact QMBS of the dual Z2 gauge model. This construction is versatile and has potential applications for finding new QMBS in other higher-dimensional models.

This Letter presents the result of a 3+1 sterile neutrino search using 10.7 years of IceCube data. We analyze atmospheric muon neutrinos that traverse the Earth with energies ranging from 0.5 to 100 TeV, incorporating significant improvements in modeling neutrino flux and detector response compared to earlier studies. Notably, for the first time, we categorize data into starting and through-going events, distinguishing neutrino interactions with vertices inside or outside the instrumented volume, to improve energy resolution. The best-fit point for a 3+1 model is found to be at $\sin^2(2\theta_{24}) = 0.16$ and $\Delta m^2_{41} = 3.5\,\mathrm{eV}^2$, which agrees with previous iterations of this study. The result is consistent with the null hypothesis of no sterile neutrinos with a p-value of 3.1%.
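To give a feel for what the two fitted parameters control, the sketch below evaluates the textbook two-flavour vacuum approximation of muon-neutrino survival, $P \approx 1 - \sin^2(2\theta_{24})\,\sin^2(1.267\,\Delta m^2_{41} L/E)$ with $L$ in km and $E$ in GeV. This is an illustrative assumption, not the analysis described in the Letter: it ignores matter effects, which a real treatment of Earth-crossing neutrinos would need to include, and the function name and the choice of the Earth's diameter as baseline are hypothetical.

```python
import math


def survival_probability(sin2_2theta: float, dm2_ev2: float,
                         baseline_km: float, energy_gev: float) -> float:
    """Two-flavour vacuum approximation of muon-neutrino survival.

    Assumption: vacuum oscillations only (no Earth matter effects).
    """
    phase = 1.267 * dm2_ev2 * baseline_km / energy_gev
    return 1.0 - sin2_2theta * math.sin(phase) ** 2


# Best-fit values quoted in the abstract.
SIN2_2THETA24 = 0.16
DM2_41_EV2 = 3.5
# Hypothetical baseline: a vertically up-going neutrino crossing the whole Earth.
EARTH_DIAMETER_KM = 12742.0

for energy_tev in (0.5, 1.0, 10.0, 100.0):
    p = survival_probability(SIN2_2THETA24, DM2_41_EV2,
                             EARTH_DIAMETER_KM, energy_tev * 1e3)
    print(f"E = {energy_tev:6.1f} TeV   P(survival) = {p:.3f}")
```

In this approximation the survival probability can never fall below $1 - \sin^2(2\theta_{24})$, so the mixing angle sets the depth of the disappearance signal while $\Delta m^2_{41}$ sets where in $L/E$ it appears.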

We revisit the question of describing critical spin systems and field theories using matrix product states, and formulate a scaling hypothesis in terms of operators, eigenvalues of the transfer matrix, and lattice spacing in the case of field theories. Critical exponents and central charge are determined by optimizing the exponents such as to obtain a data collapse. We benchmark this method by studying critical Ising and Potts models, where we also obtain a scaling ansatz for the correlation length and entanglement entropy. The formulation of those scaling functions turns out to be crucial for studying critical quantum field theories on the lattice. For the case of $\lambda\phi^4$ with mass $\mu^2$ and lattice spacing $a$, we demonstrate a double data collapse for the correlation length $\delta\,\xi(\mu,\lambda,D) = \tilde{\xi}\big((\alpha-\alpha_c)(\delta/a)^{-1/\nu}\big)$ with $D$ the bond dimension, $\delta$ the gap between eigenvalues of the transfer matrix, and $\alpha_c = \mu_R^2/\lambda$ the parameter which fixes the critical quantum field theory.

The densification of Wi-Fi deployments means that fully distributed random channel access is no longer sufficient for high and predictable performance. Therefore, the upcoming IEEE 802.11bn amendment introduces multi-access point coordination (MAPC) methods. This paper addresses a variant of MAPC called coordinated spatial reuse (C-SR), where devices transmit simultaneously on the same channel, with the power adjusted to minimize interference. The C-SR scheduling problem is selecting which devices transmit concurrently and with what settings. We provide a theoretical upper bound model, optimized for either throughput or fairness, which finds the best possible transmission schedule using mixed-integer linear programming. Then, a practical, probing-based approach is proposed which uses multi-armed bandits (MABs), a type of reinforcement learning, to solve the C-SR scheduling problem. We validate both classical (flat) MAB and hierarchical MAB (H-MAB) schemes with simulations and in a testbed. Using H-MABs for C-SR improves aggregate throughput over legacy IEEE 802.11 (on average by 80% in random scenarios), without reducing the number of transmission opportunities per station. Finally, our framework is lightweight and ready for implementation in Wi-Fi devices.
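The flat bandit idea mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the scheme evaluated in the paper: each arm stands for one hypothetical C-SR configuration (a set of concurrently transmitting devices plus power settings), the reward is a simulated 0/1 transmission success with a fixed hidden success rate, and the standard UCB1 rule is used as a stand-in for whichever flat MAB algorithm the authors chose. The hierarchical (H-MAB) variant is not shown.

```python
import math
import random


def ucb1(success_rates, rounds, seed=0):
    """Run UCB1 against simulated Bernoulli arms and return pull counts.

    success_rates: hidden per-arm success probabilities (hypothetical
    stand-ins for how well each C-SR configuration performs).
    """
    rng = random.Random(seed)
    n_arms = len(success_rates)
    counts = [0] * n_arms    # how often each configuration was probed
    totals = [0.0] * n_arms  # accumulated reward per configuration

    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # probe every configuration once first
        else:
            # Pick the arm with the highest optimistic estimate:
            # empirical mean plus an exploration bonus.
            arm = max(
                range(n_arms),
                key=lambda a: totals[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < success_rates[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
    return counts


pulls = ucb1([0.2, 0.5, 0.8], rounds=5000)
print("pulls per configuration:", pulls)
```

The exploration bonus shrinks as a configuration is probed more often, so the scheduler gradually concentrates its transmission opportunities on the configuration that has performed best so far.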

The proliferation of IoT devices and advancements in network technologies have intensified the demand for real-time data processing at the network edge. To address these demands, low-power AI accelerators, particularly GPUs, are increasingly deployed for inference tasks, enabling efficient computation while mitigating cloud-based systems' latency and bandwidth limitations. Despite their growing deployment, GPUs remain underutilised even in computationally intensive workloads. This underutilisation stems from the limited understanding of GPU resource sharing, particularly in edge computing scenarios. In this work, we conduct a detailed analysis of both high- and low-level metrics, including GPU utilisation, memory usage, streaming multiprocessor (SM) utilisation, and tensor core usage, to identify bottlenecks and guide hardware-aware optimisations. By integrating traces from multiple profiling tools, we provide a comprehensive view of resource behaviour on NVIDIA Jetson edge devices under concurrent vision inference workloads. Our findings indicate that while GPU utilisation can reach 100% under specific optimisations, critical low-level resources, such as SMs and tensor cores, often operate only at 15% to 30% utilisation. Moreover, we observe that certain CPU-side events, such as thread scheduling, context switching, etc., frequently emerge as bottlenecks, further constraining overall GPU performance. We provide several key observations for users of vision inference workloads on NVIDIA edge devices.

We construct a Hamiltonian lattice regularisation of the N-flavour Gross-Neveu model that manifestly respects the full O(2N) symmetry, preventing the appearance of any unwanted marginal perturbations to the quantum field theory. In the context of this lattice model, the dynamical mass generation is intimately related to the Coleman-Mermin-Wagner and Lieb-Schultz-Mattis theorem. In particular, the model can be interpreted as lying at the first order phase transition line between a trivial and symmetry-protected topological (SPT) phase, which explains the degeneracy of the elementary kink excitations. We show that our Hamiltonian model can be solved analytically in the large N limit, producing the correct expression for the mass gap. Furthermore, we perform extensive numerical matrix product state simulations for N=2, thereby recovering the emergent Lorentz symmetry and the proper non-perturbative mass gap scaling in the continuum limit. Finally, our simulations also reveal how the continuum limit manifests itself in the entanglement spectrum. As expected from conformal field theory we find two conformal towers, one tower spanned by the linear representations of O(4), corresponding to the trivial phase, and the other by the projective (i.e. spinor) representations, corresponding to the SPT phase.

The recent discovery of a large number of massive black holes within the first two billion years after the Big Bang, as well as their peculiar properties, have been largely unexpected based on the extrapolation of the properties of luminous quasars. These findings have prompted the development of several theoretical models for the early formation and growth of black holes, which are, however, difficult to differentiate. We report the metallicity measurement around a gravitationally lensed massive black hole at redshift 7.04 (classified as a Little Red Dot), hosted in a galaxy with very low dynamical mass. The weakness of the [OIII]5007 emission line relative to the narrow Hβ emission indicates extremely low metallicity, about $4\times10^{-2}$ solar, and even more metal poor in the surrounding few 100 pc. We argue that such properties cannot be uncommon among accreting black holes around this early cosmic epoch. Explaining such a low chemical enrichment in a system that has developed a massive black hole is challenging for most theories. Models assuming heavy black hole seeds (such as Direct Collapse Black Holes) or super-Eddington accretion scenarios struggle to explain the observations, although they can potentially reproduce the observed properties in some cases. Models invoking "primordial black holes" (i.e. putative black holes formed shortly after the Big Bang) may potentially explain the low chemical enrichment associated with this black hole, although this class of models also requires further developments for proper testing.

Noninvertible symmetry generalizes traditional group symmetries, advancing our understanding of quantum matter, especially one-dimensional gapped quantum systems. In critical lattice models, it is usually realized as an emergent symmetry of the corresponding low-energy conformal field theory. In this work, we study critical lattice models with the noninvertible Rep($D_8$) symmetry in one dimension. This leads us to a new class of quantum critical points (QCPs), noninvertible symmetry-enriched QCPs, as a generalization of known group symmetry-enriched QCPs. They are realized as phase transitions between one noninvertible symmetry-protected topological (SPT) phase and either a different SPT phase or a spontaneous symmetry breaking (SSB) phase. We identify their low-energy properties and topological features through the Kennedy-Tasaki (KT) duality transformation. We argue that distinct noninvertible symmetry-enriched QCPs cannot be smoothly connected without a phase transition or a multi-critical point.

We revisit quantum false vacuum decay for the one-dimensional Ising model, focusing on the real-time nucleation and growth of true vacuum bubbles. Via matrix product state simulations, we demonstrate that for a wide range of parameters, the full time-dependent quantum state is well described by a Gaussian ansatz in terms of domain wall operators, with the associated vacuum bubble wave function evolving according to the linearized time-dependent variational principle. The emerging picture shows three different stages of evolution: an initial nucleation of small bubbles, followed by semi-classical bubble growth, which in turn is halted by the lattice phenomenon of Bloch oscillations. Furthermore, we find that the resonant bubble only plays a significant role in a certain region of parameter-space. However, when significant, it does lead to an approximately constant decay rate during the intermediate stage. Moreover, this rate is in quantitative agreement with the analytical result of Rutkevich (Phys. Rev. B 60, 14525) for which we provide an independent derivation based on the Gaussian ansatz.

Recent advancements in generalized symmetries have drawn significant attention to gapped phases of matter exhibiting novel symmetries, such as noninvertible symmetries. By leveraging the duality transformations, the classification and construction of gapped phases with noninvertible symmetry can be mapped to those involving conventional group symmetries. We demonstrate this approach by classifying symmetry-protected topological phases with a broad class of noninvertible symmetries in arbitrary spacetime dimensions. Our results reveal new classifications that extend beyond those based on group symmetries. Additionally, we construct lattice models in (1+1)d and (2+1)d that realize these new phases and explore their anomalous interfaces.

11 Jul 2024

Digital twins promise to revolutionize engineering by offering new avenues for optimization, control, and predictive maintenance. We propose a novel framework for simultaneously training the digital twin of an engineering system and an associated control agent. The twin's training combines adjoint-based data assimilation and system identification methods, while the control agent's training merges model-based optimal control with model-free reinforcement learning. The control agent evolves along two independent paths: one driven by model-based optimal control and the other by reinforcement learning. The digital twin serves as a virtual environment for confrontation and indirect interaction, functioning as an "expert demonstrator." The best policy is selected for real-world interaction and cloned to the other path if training stagnates. We call this framework Reinforcement Twinning (RT). The framework is tested on three diverse engineering systems and control tasks: (1) controlling a wind turbine under varying wind speeds, (2) trajectory control of flapping-wing micro air vehicles (FWMAVs) facing wind gusts, and (3) mitigating thermal loads in managing cryogenic storage tanks. These test cases use simplified models with known ground truth closure laws. Results show that the adjoint-based digital twin training is highly sample-efficient, completing within a few iterations. For the control agent training, both model-based and model-free approaches benefit from their complementary learning experiences. The promising results pave the way for implementing the RT framework on real systems.

31 May 2016

Digital quantum simulation of fermionic systems is important in the context of chemistry and physics. Simulating fermionic models on general purpose quantum computers requires imposing a fermionic algebra on spins. The previously studied Jordan-Wigner and Bravyi-Kitaev transformations are two techniques for accomplishing this task. Here we re-examine an auxiliary fermion construction which maps fermionic operators to local operators on spins. The local simulation is performed by relaxing the requirement that the number of spins should match the number of fermionic modes. Instead, auxiliary modes are introduced to enable non-consecutive fermionic couplings to be simulated with constant low-rank tensor products on spins. We connect the auxiliary fermion construction to other topological models and give examples of the construction.
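As background for what "imposing a fermionic algebra on spins" means, the sketch below builds the standard Jordan-Wigner operators $a_j = Z_0 \cdots Z_{j-1}\,\sigma^-_j$ for three modes and checks the canonical anticommutation relations numerically. It illustrates only the Jordan-Wigner mapping named in the abstract, not the auxiliary fermion construction itself; the helper names are hypothetical, and plain Python lists are used so that nothing beyond the standard library is assumed.

```python
# Single-site building blocks (real matrices, so dagger = transpose).
I2 = [[1, 0], [0, 1]]
Z = [[1, 0], [0, -1]]
LOWER = [[0, 1], [0, 0]]  # sigma^-: maps the occupied state |1> to |0>


def kron(a, b):
    """Kronecker product of two matrices given as nested lists."""
    return [[x * y for x in row_a for y in row_b]
            for row_a in a for row_b in b]


def matmul(a, b):
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a]


def transpose(a):
    return [list(row) for row in zip(*a)]


def annihilator(j, n_modes):
    """Jordan-Wigner a_j: a string of Z on sites < j, sigma^- on site j."""
    op = [[1]]
    for factor in [Z] * j + [LOWER] + [I2] * (n_modes - j - 1):
        op = kron(op, factor)
    return op


def anticommutator(a, b):
    ab, ba = matmul(a, b), matmul(b, a)
    return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(ab, ba)]


N_MODES = 3
DIM = 2 ** N_MODES
IDENTITY = [[1 if r == c else 0 for c in range(DIM)] for r in range(DIM)]
ZERO = [[0] * DIM for _ in range(DIM)]
ops = [annihilator(j, N_MODES) for j in range(N_MODES)]

for i in range(N_MODES):
    for j in range(N_MODES):
        # {a_i, a_j^dagger} = delta_ij and {a_i, a_j} = 0
        expected = IDENTITY if i == j else ZERO
        assert anticommutator(ops[i], transpose(ops[j])) == expected
        assert anticommutator(ops[i], ops[j]) == ZERO
print("Jordan-Wigner operators satisfy the fermionic algebra")
```

Note that $a_j$ acts non-trivially on $j+1$ spins, so couplings between distant modes become long operator strings. The construction described above introduces auxiliary modes precisely to avoid this growth.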

Tensor network states provide an efficient class of states that faithfully capture strongly correlated quantum models and systems in classical statistical mechanics. While tensor networks can now be seen as becoming standard tools in the description of such complex many-body systems, close to optimal variational principles based on such states are less obvious to come by. In this work, we generalize a recently proposed variational uniform matrix product state algorithm for capturing one-dimensional quantum lattices in the thermodynamic limit, to the study of regular two-dimensional tensor networks with a non-trivial unit cell. A key property of the algorithm is a computational effort that scales linearly rather than exponentially in the size of the unit cell. We demonstrate the performance of our approach on the computation of the classical partition functions of the antiferromagnetic Ising model and interacting dimers on the square lattice, as well as of a quantum doped resonating valence bond state.

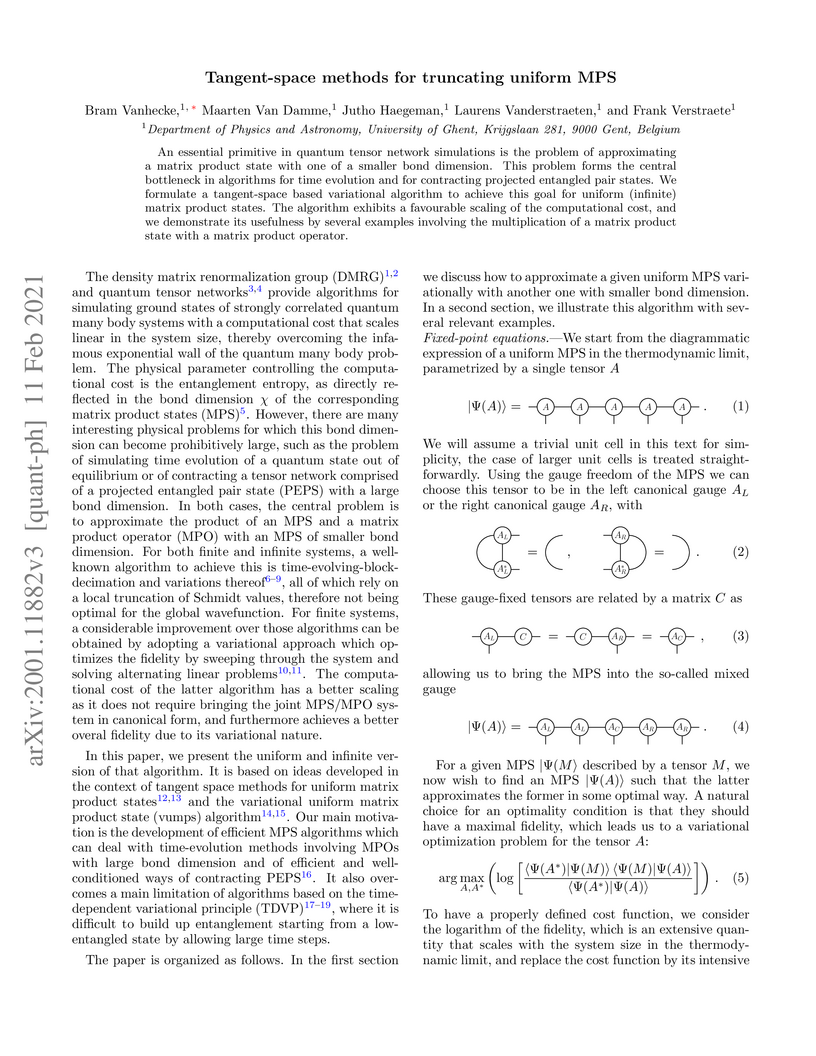

A central primitive in quantum tensor network simulations is the problem of approximating a matrix product state with one of a lower bond dimension. This problem forms the central bottleneck in algorithms for time evolution and for contracting projected entangled pair states. We formulate a tangent-space based variational algorithm to achieve this for uniform (infinite) matrix product states. The algorithm exhibits a favourable scaling of the computational cost, and we demonstrate its usefulness by several examples involving the multiplication of a matrix product state with a matrix product operator.

Cluster expansions for the exponential of local operators are constructed using tensor networks. In contrast to other approaches, the cluster expansion does not break any spatial or internal symmetries and exhibits a very favourable prefactor to the error scaling versus bond dimension. This is illustrated by time evolving a matrix product state using very large time steps, and by constructing a novel robust algorithm for finding ground states of 2-dimensional Hamiltonians using projected entangled pair states as fixed points of 2-dimensional transfer matrices.

09 Dec 2020

Motivated by the recent success of tensor networks to calculate the residual entropy of spin ice and kagome Ising models, we develop a general framework to study frustrated Ising models in terms of infinite tensor networks, i.e. tensor networks that can be contracted using standard algorithms for infinite systems. This is achieved by reformulating the problem as local rules for configurations on overlapping clusters chosen in such a way that they relieve the frustration, i.e. that the energy can be minimized independently on each cluster. We show that optimizing the choice of clusters, including the weight on shared bonds, is crucial for the contractibility of the tensor networks, and we derive some basic rules and a linear program to implement them. We illustrate the power of the method by computing the residual entropy of a frustrated Ising spin system on the kagome lattice with next-next-nearest neighbour interactions, vastly outperforming Monte Carlo methods in speed and accuracy. The extension to finite-temperature is briefly discussed.

12 Feb 2025

Two-dimensional electron systems in both magnetic fields and periodic potentials are described by the Hofstadter butterfly, a fundamental problem of solid-state physics. While moiré systems provide a powerful method to realize this spectrum, previous experiments have been limited to the fractional flux quanta regime due to the difficulty of building ~50 nm periodic modulations. Here, we demonstrate a super-moiré strategy to overcome this challenge. By aligning monolayer graphene (G) with 1.0° twisted hexagonal boron nitride (t-hBN), a 63.2 nm bichromatic G/t-hBN super-moiré is constructed, made possible by exploiting the electrostatic nature of the t-hBN potential. Under magnetic field B, magnetic Bloch states at integer flux quanta (1-9) are achieved and observed as integer Brown-Zak oscillations, expanding the flux quanta from fractions to integers. Theoretical analysis reproduces these experimental findings. This work opens new avenues to study the unexplored Hofstadter butterfly, explore emergent topological order at integer flux quanta and engineer long-wavelength periodic modulations.
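The link between the superlattice period and the accessible flux regime can be made concrete with a short calculation. The sketch below estimates the magnetic field at which $n$ flux quanta $\phi_0 = h/e$ thread one superlattice unit cell, $B_n = n\,\phi_0 / A$. It assumes a triangular superlattice with unit-cell area $A = (\sqrt{3}/2)\,\lambda^2$, which is an assumption made here for illustration and is not stated in the abstract; the function name is hypothetical.

```python
import math

PLANCK_H = 6.62607015e-34             # J s, exact SI value
ELEMENTARY_CHARGE = 1.602176634e-19   # C, exact SI value
FLUX_QUANTUM = PLANCK_H / ELEMENTARY_CHARGE  # h/e in Wb


def field_for_flux_quanta(n_quanta: int, period_m: float) -> float:
    """Field (tesla) putting n flux quanta through one superlattice cell.

    Assumption: triangular superlattice, cell area = sqrt(3)/2 * period^2.
    """
    cell_area = math.sqrt(3.0) / 2.0 * period_m ** 2
    return n_quanta * FLUX_QUANTUM / cell_area


SUPER_MOIRE_PERIOD_M = 63.2e-9  # period quoted in the abstract

for n in (1, 5, 9):
    b = field_for_flux_quanta(n, SUPER_MOIRE_PERIOD_M)
    print(f"{n} flux quanta per cell at B = {b:.2f} T")
```

Under this assumption one flux quantum per cell corresponds to roughly 1.2 T. Because the required field scales as $1/\lambda^2$, a shorter period pushes the integer regime to much higher fields, which is why a long-wavelength modulation is needed to reach it.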

Reliable prediction of river floods in the first 72 hours can reduce harm because emergency agencies have sufficient time to prepare and deploy for help at the scene. Such river flood prediction models already exist and perform relatively well in most high-income countries. But, due to the limited availability of data, these models are lacking in low-income countries. Here, we offer the first global river flood prediction framework based on the newly published Caravan dataset. Our framework aims to serve as a benchmark for future global river flood prediction research. To support generalizability claims we include custom data evaluation splits. Further, we propose and evaluate a novel two-path LSTM architecture (2P-LSTM) against three baseline models. Finally, we evaluate the generated models on different locations in Africa and Asia that were not part of the Caravan dataset.

On Metrics to Assess the Transferability of Machine Learning Models in Non-Intrusive Load Monitoring

To assess the performance of load disaggregation algorithms it is common practice to train a candidate algorithm on data from one or multiple households and subsequently apply cross-validation by evaluating the classification and energy estimation performance on unseen portions of the dataset derived from the same households. With an emerging discussion of transferability in Non-Intrusive Load Monitoring (NILM), there is a need for domain-specific metrics to assess the performance of NILM algorithms on new test scenarios such as unseen buildings. In this paper, we discuss several metrics to assess the generalisation ability of NILM algorithms. These metrics target different aspects of performance evaluation in NILM and are meant to complement the traditional performance evaluation approach. We demonstrate how our metrics can be utilised to evaluate NILM algorithms by means of two case studies. We conduct our studies on several energy consumption datasets and take into consideration five state-of-the-art as well as four baseline NILM solutions. Finally, we formulate research challenges for future work.
