University of Illinois Springfield

This research delved into GPT-4 and Kimi, two Large Language Models (LLMs),

for systematic reviews. We evaluated their performance by comparing

LLM-generated codes with human-generated codes from a peer-reviewed systematic

review on assessment. Our findings suggested that the performance of LLMs

fluctuates by data volume and question complexity for systematic reviews.

21 Apr 2025

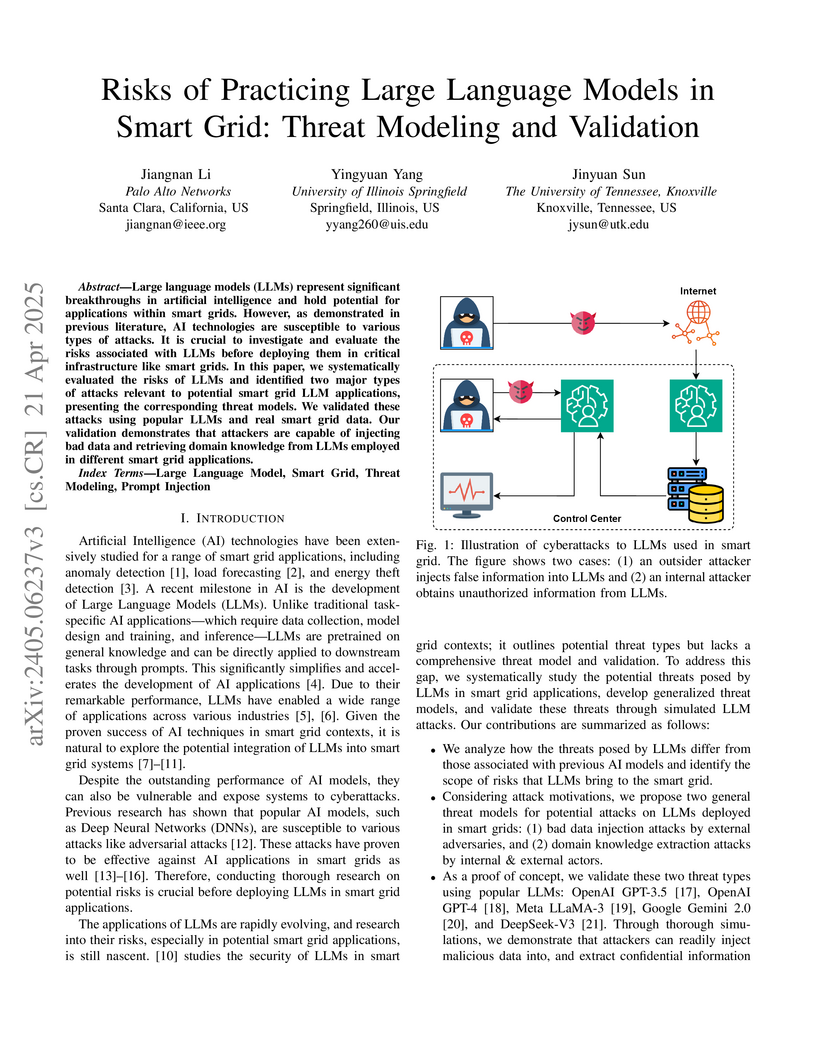

Large language models (LLMs) represent significant breakthroughs in

artificial intelligence and hold potential for applications within smart grids.

However, as demonstrated in previous literature, AI technologies are

susceptible to various types of attacks. It is crucial to investigate and

evaluate the risks associated with LLMs before deploying them in critical

infrastructure like smart grids. In this paper, we systematically evaluated the

risks of LLMs and identified two major types of attacks relevant to potential

smart grid LLM applications, presenting the corresponding threat models. We

validated these attacks using popular LLMs and real smart grid data. Our

validation demonstrates that attackers are capable of injecting bad data and

retrieving domain knowledge from LLMs employed in different smart grid

applications.

14 Jun 2022

Bournez, Fraigniaud, and Koegler defined a number in [0,1] as computable by

their Large-Population Protocol (LPP) model, if the proportion of agents in a

set of marked states converges to said number over time as the population grows

to infinity. The notion, however, restricts the ordinary differential equations

(ODEs) associated with an LPP to have only finitely many equilibria. This

restriction places an intrinsic limitation on the model. As a result, a number

is computable by an LPP if and only if it is algebraic, namely, not a single

transcendental number can be computed under this notion.

In this paper, we lift the finitary requirement on equilibria. That is, we

consider systems with a continuum of equilibria. We show that essentially all

numbers in [0,1] that are computable by bounded general-purpose analog

computers (GPACs) or chemical reaction networks (CRNs) can also be computed by

LPPs under this new definition. This implies a rich series of numbers (e.g.,

the reciprocal of Euler's constant, π/4, Euler's γ, Catalan's

constant, and Dottie number) are all computable by LPPs. Our proof is

constructive: We develop an algorithm that transfers bounded GPACs/CRNs into

LPPs. Our algorithm also fixes a gap in Bournez et al.'s construction of LPPs

designed to compute any arbitrary algebraic number in [0,1].

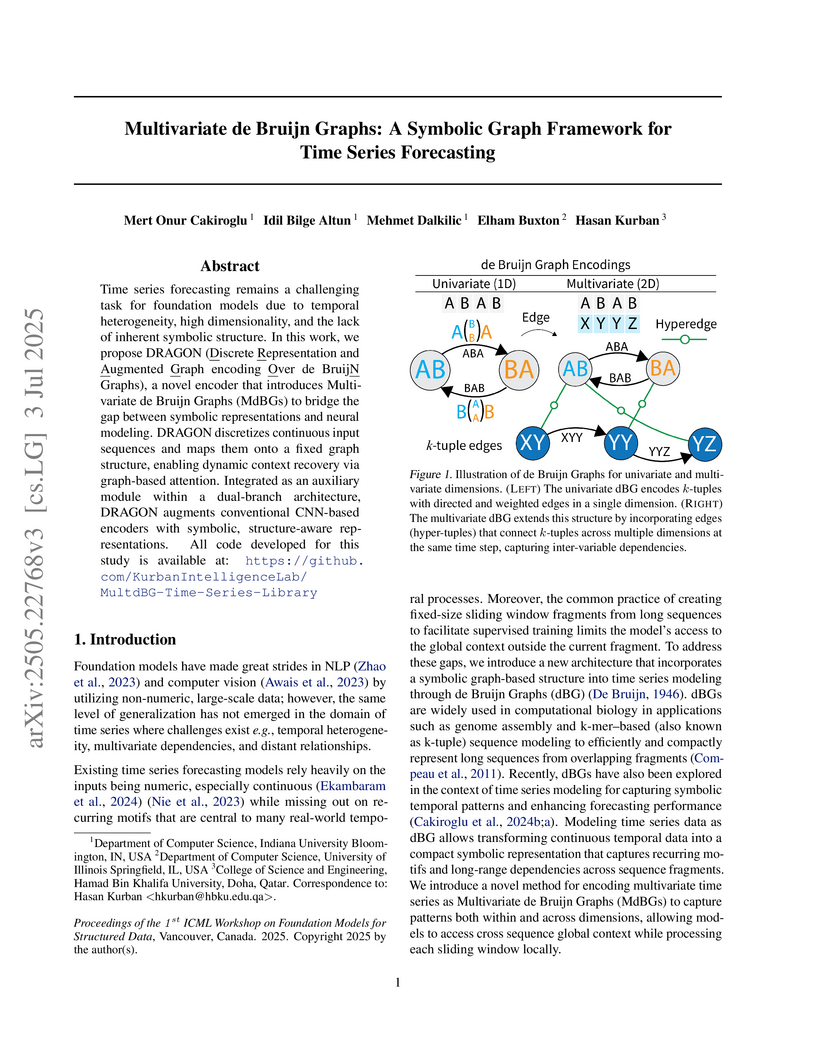

Time series forecasting remains a challenging task for foundation models due to temporal heterogeneity, high dimensionality, and the lack of inherent symbolic structure. In this work, we propose DRAGON (Discrete Representation and Augmented Graph encoding Over de BruijN Graphs), a novel encoder that introduces Multivariate de Bruijn Graphs (MdBGs) to bridge the gap between symbolic representations and neural modeling. DRAGON discretizes continuous input sequences and maps them onto a fixed graph structure, enabling dynamic context recovery via graph-based attention. Integrated as an auxiliary module within a dual-branch architecture, DRAGON augments conventional CNN-based encoders with symbolic, structure-aware representations. All code developed for this study is available at: this https URL

We examine the spatial distributions of LBV's, B[e] supergiants, and W-R stars in the LMC, to clarify their relative ages, evolutionary states, and relationships. This survey employs a reference catalog that was not available for previous work, comprising more than 3900 of the LMC's most luminous stars. Our analysis shows that LBV's, B[e] supergiants, and WR's have spatial distributions like normal stars with the same spectral types and luminosities. Most LBV's are not isolated, nor do they require binary or multiple status to explain their spatial relationship to other populations. There are two likely exceptions: one lower-luminosity LBV and one LBV candidate are relatively isolated and may have velocities that require additional acceleration. The B[e] supergiants are spatially and kinematically more dispersed than LBV's, suggesting that they belong to an older population. The most luminous early-type WN's are most closely associated with the evolved late O-type supergiants. The high luminosity late WN's, however, are highly concentrated in the 30 Dor region which biases the analysis. The less luminous WN's and WC's are associated with a mix of evolved late B, A-type, and yellow supergiants which may be in a post-red-supergiant phase. Spatial distributions of the less luminous WN, WC, and WN3/O3 stars reinforce proposed evolutionary links among those subtypes. Our analysis also demonstrates the importance of using a comprehensive census, with reference populations clearly defined by spectral type and luminosity, and how small number statistics, especially combined with spatial clustering, can invalidate some commonly-cited statistical tests.

We introduce multihead finite-state dimension, a generalization of finite-state dimension in which a group of finite-state agents (the heads) with oblivious, one-way movement rules, each reporting only one symbol at a time, enable their leader to bet on subsequent symbols in an infinite data stream. In aggregate, such a scheme constitutes an h-head finite state gambler whose maximum achievable growth rate of capital in this task, quantified using betting strategies called gales, determines the multihead finite-state dimension of the sequence. The 1-head case is equivalent to finite-state dimension as defined by Dai, Lathrop, Lutz and Mayordomo (2004). In our main theorem, we prove a strict hierarchy as the number of heads increases, giving an explicit sequence family that separates, for each positive integer h, the earning power of h-head finite-state gamblers from that of (h+1)-head finite-state gamblers. We prove that multihead finite-state dimension is stable under finite unions but that the corresponding quantity for any fixed number h>1 of heads--the h-head finite-state predimension--lacks this stability property.

Data certainty is one of the issues in the real-world applications which is caused by unwanted noise in data. Recently, more attentions have been paid to overcome this problem. We proposed a new method based on neutrosophic set (NS) theory to detect boundary and outlier points as challenging points in clustering methods. Generally, firstly, a certainty value is assigned to data points based on the proposed definition in NS. Then, certainty set is presented for the proposed cost function in NS domain by considering a set of main clusters and noise cluster. After that, the proposed cost function is minimized by gradient descent method. Data points are clustered based on their membership degrees. Outlier points are assigned to noise cluster and boundary points are assigned to main clusters with almost same membership degrees. To show the effectiveness of the proposed method, two types of datasets including 3 datasets in Scatter type and 4 datasets in UCI type are used. Results demonstrate that the proposed cost function handles boundary and outlier points with more accurate membership degrees and outperforms existing state of the art clustering methods.

05 Oct 2021

Implicit authentication (IA) transparently authenticates users by utilizing

their behavioral data sampled from various sensors. Identifying the

illegitimate user through constantly analyzing current users' behavior, IA adds

another layer of protection to the smart device. Due to the diversity of human

behavior, the existing research works tend to simultaneously utilize many

different features to identify users, which is less efficient. Irrelevant

features may increase system delay and reduce the authentication accuracy.

However, dynamically choosing the best suitable features for each user

(personal features) requires a massive calculation, especially in the real

environment. In this paper, we proposed EchoIA to find personal features with a

small amount of calculation by utilizing user feedback. In the authentication

phase, our approach maintains the transparency, which is the major advantage of

IA. In the past two years, we conducted a comprehensive experiment to evaluate

EchoIA. We compared it with other state-of-the-art IA schemes in the aspect of

authentication accuracy and efficiency. The experiment results show that EchoIA

has better authentication accuracy (93\%) and less energy consumption (23-hour

battery lifetimes) than other IA schemes.

Energy theft causes large economic losses to utility companies around the world. In recent years, energy theft detection approaches based on machine learning (ML) techniques, especially neural networks, become popular in the research literature and achieve state-of-the-art detection performance. However, in this work, we demonstrate that the well-perform ML models for energy theft detection are highly vulnerable to adversarial attacks. In particular, we design an adversarial measurement generation algorithm that enables the attacker to report extremely low power consumption measurements to the utilities while bypassing the ML energy theft detection. We evaluate our approach with three kinds of neural networks based on a real-world smart meter dataset. The evaluation result demonstrates that our approach can significantly decrease the ML models' detection accuracy, even for black-box attackers.

Background: The learning of genotype-phenotype associations and history of

human disease by doing detailed and precise analysis of phenotypic

abnormalities can be defined as deep phenotyping. To understand and detect this

interaction between phenotype and genotype is a fundamental step when

translating precision medicine to clinical practice. The recent advances in the

field of machine learning is efficient to predict these interactions between

abnormal human phenotypes and genes.

Methods: In this study, we developed a framework to predict links between

human phenotype ontology (HPO) and genes. The annotation data from the

heterogeneous knowledge resources i.e., orphanet, is used to parse human

phenotype-gene associations. To generate the embeddings for the nodes (HPO &

genes), an algorithm called node2vec was used. It performs node sampling on

this graph based on random walks, then learns features over these sampled nodes

to generate embeddings. These embeddings were used to perform the downstream

task to predict the presence of the link between these nodes using 5 different

supervised machine learning algorithms.

Results: The downstream link prediction task shows that the Gradient Boosting

Decision Tree based model (LightGBM) achieved an optimal AUROC 0.904 and AUCPR

0.784. In addition, LightGBM achieved an optimal weighted F1 score of 0.87.

Compared to the other 4 methods LightGBM is able to find more accurate

interaction/link between human phenotype & gene pairs.

There are no more papers matching your filters at the moment.