University of Patras

09 Oct 2025

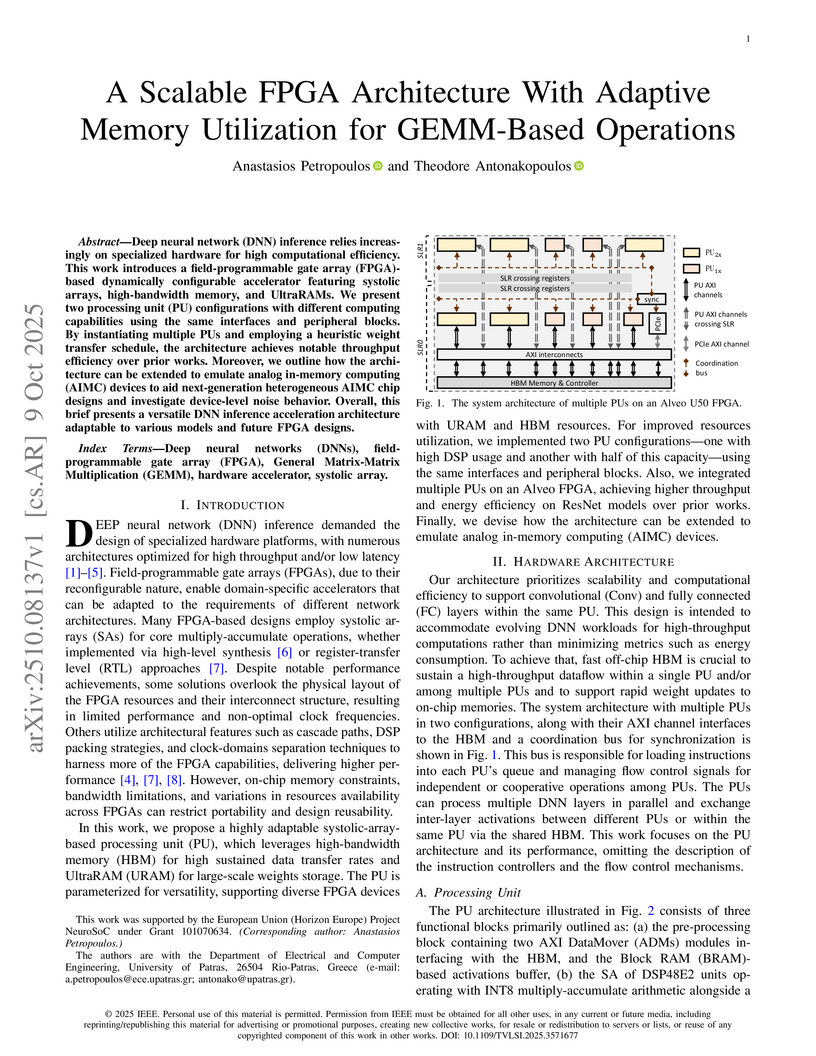

Deep neural network (DNN) inference relies increasingly on specialized hardware for high computational efficiency. This work introduces a field-programmable gate array (FPGA)-based dynamically configurable accelerator featuring systolic arrays, high-bandwidth memory, and UltraRAMs. We present two processing unit (PU) configurations with different computing capabilities using the same interfaces and peripheral blocks. By instantiating multiple PUs and employing a heuristic weight transfer schedule, the architecture achieves notable throughput efficiency over prior works. Moreover, we outline how the architecture can be extended to emulate analog in-memory computing (AIMC) devices to aid next-generation heterogeneous AIMC chip designs and investigate device-level noise behavior. Overall, this brief presents a versatile DNN inference acceleration architecture adaptable to various models and future FPGA designs.

21 Sep 2025

This research provides a unified theoretical and empirical analysis of 3D orientation representations, benchmarking their performance across direct optimization, imitation learning, reinforcement learning, and trajectory optimization tasks in robotics. It offers practical guidelines based on observed strengths and weaknesses for each representation in specific application contexts.

03 Sep 2025

We confirm the direct connection between entanglement entropy and the notion of irreversibility in the renormalization-group flow in the context of a simple theory for which a calculation from first principles is feasible. The change of the entanglement entropy for a spherical entangling surface as its radius grows from zero to infinity corresponds to the flow from the UV to the IR. Through analytical and numerical means, we compute the entanglement entropy for a free massive scalar theory, making use of the method of correlation functions. We deduce a c-function in 1+1 dimensions and an a-function in 3+1 dimensions. Both functions are monotonic and vary continuously between one and zero, as expected for this simple theory.

02 Sep 2025

In quantum mechanics separable states can be characterized as convex combinations of product states whereas non-separable states exhibit entanglement. Quantum entanglement has played a pivotal role in both theoretical investigations and practical applications within quantum information science. In this study, we explore the connection between product states and geometric structures, specifically manifolds and their associated geometric properties such as the first fundamental form (metric). We focus on the manifolds formed by the product states of M systems of N levels, examining the induced metric derived from the Euclidean metric. For elementary cases we will compute the Levi-Civita connection, and, where computationally tractable, the scalar curvature.

We propose a novel point cloud U-Net diffusion architecture for 3D generative modeling capable of generating high-quality and diverse 3D shapes while maintaining fast generation times. Our network employs a dual-branch architecture, combining the high-resolution representations of points with the computational efficiency of sparse voxels. Our fastest variant outperforms all non-diffusion generative approaches on unconditional shape generation, the most popular benchmark for evaluating point cloud generative models, while our largest model achieves state-of-the-art results among diffusion methods, with a runtime approximately 70% of the previously state-of-the-art PVD. Beyond unconditional generation, we perform extensive evaluations, including conditional generation on all categories of ShapeNet, demonstrating the scalability of our model to larger datasets, and implicit generation which allows our network to produce high quality point clouds on fewer timesteps, further decreasing the generation time. Finally, we evaluate the architecture's performance in point cloud completion and super-resolution. Our model excels in all tasks, establishing it as a state-of-the-art diffusion U-Net for point cloud generative modeling. The code is publicly available at this https URL.

29 Aug 2025

The CoRoT (Convection, Rotation, and planetary Transits) mission still holds a large trove of high-quality, underused light curves with excellent signal-to-noise and continuous coverage. This paper, the first in a series, identifies and classifies variable stars in CoRoT fields whose variability has not been analyzed in the main repositories. We combine simulations and real data to test a moving-average scheme that mitigates instrumental jumps and enhances the recovery of short-period signals (<1 day) in roughly 20-day time series. For classification, we adopt a supervised selection built on features extracted from folded light curves using the double period, and we construct template-based models that also act as a new classifier for well-sampled light curves. We report 9,272 variables, of which 6,249 are not listed in SIMBAD or VSX. Our preliminary classes include 309 Beta Cephei, 3,105 Delta Scuti, 599 Algol-type eclipsing binaries, 844 Beta Lyrae eclipsing binaries, 497 W Ursae Majoris eclipsing binaries, 1,443 Gamma Doradus, 63 RR Lyrae, and 32 T Tauri stars. The resulting catalog inserts CoRoT variables into widely used astronomical repositories. Comparing sources in the inner and outer Milky Way, we find significant differences in the occurrence of several classes, consistent with metallicity and age gradients. The ability to recover sub-day periods also points to automated strategies for detecting longer-period variability, which we will develop in subsequent papers of this series.

19 Aug 2025

KAISTUniversity of Bonn

KAISTUniversity of Bonn University of British Columbia

University of British Columbia Columbia UniversityInstitute for Basic Science (IBS)

Columbia UniversityInstitute for Basic Science (IBS) Université Paris-SaclayUniversitat de BarcelonaUniversity of PatrasTechnical University of DenmarkUniversität HamburgNCSR DemokritosLawrence Livermore National LaboratoryUniversity of TriesteAlbert-Ludwigs-Universität FreiburgEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)University of RijekaXian Jiaotong UniversityMax-Planck Institut für extraterrestrische PhysikFriedrich-Schiller-University JenaIstinye UniversityBoğaziçi UniversityMax-Planck-Institut für SonnensystemforschungRudjer Bošković InstituteTechnical University of CartagenaIstanbul University-CerrahpasaInstituto Nazionale di Fisica Nucleare (INFN)University de Zaragoza

Université Paris-SaclayUniversitat de BarcelonaUniversity of PatrasTechnical University of DenmarkUniversität HamburgNCSR DemokritosLawrence Livermore National LaboratoryUniversity of TriesteAlbert-Ludwigs-Universität FreiburgEUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH (CERN)University of RijekaXian Jiaotong UniversityMax-Planck Institut für extraterrestrische PhysikFriedrich-Schiller-University JenaIstinye UniversityBoğaziçi UniversityMax-Planck-Institut für SonnensystemforschungRudjer Bošković InstituteTechnical University of CartagenaIstanbul University-CerrahpasaInstituto Nazionale di Fisica Nucleare (INFN)University de ZaragozaWe present a search for solar axions produced through the axion-electron coupling

(gae) using data from a novel 7-GridPix detector installed at

the CERN Axion Solar Telescope (CAST). The detector, featuring

ultra-thin silicon nitride windows and multiple veto systems,

collected approximately 160 hours of solar tracking data between

2017-2018. Using machine learning techniques and the veto systems,

we achieved a background rate of

1.06×10−5keV−1cm−2s−1 at a signal efficiency of

about 80% in the 0.2-8keV range. Analysis

of the data yielded no significant excess above background, allowing

us to set a new upper limit on the product of the axion-electron and

axion-photon couplings of

g_{ae}\cdot g_{a\gamma} < 7.35\times 10^{-23}\,\text{GeV}^{-1} at 95%

confidence level. This result improves upon the previous best

helioscope limit and demonstrates the potential of GridPix

technology for rare event searches. Additionally, we derived a limit

on the axion-photon coupling of

g_{a\gamma} < 9.0\times 10^{-11}\,\text{GeV}^{-1} at 95% CL, which,

while not surpassing CAST's best limit, provides complementary

constraints on axion models.

The advent of digital streaming platforms have recently revolutionized the landscape of music industry, with the ensuing digitalization providing structured data collections that open new research avenues for investigating popularity dynamics and mainstream success. The present work explored which determinants hold the strongest predictive influence for a track's inclusion in the Billboard Hot 100 charts, including streaming popularity, measurable audio signal attributes, and probabilistic indicators of human listening. The analysis revealed that popularity was by far the most decisive predictor of Billboard Hot 100 inclusion, with considerable contribution from instrumentalness, valence, duration and speechiness. Logistic Regression achieved 90.0% accuracy, with very high recall for charting singles (0.986) but lower recall for non-charting ones (0.813), yielding balanced F1-scores around 0.90. Random Forest slightly improved performance to 90.4% accuracy, maintaining near-perfect precision for non-charting singles (0.990) and high recall for charting ones (0.992), with F1-scores up to 0.91. Gradient Boosting (XGBoost) reached 90.3% accuracy, delivering a more balanced trade-off by improving recall for non-charting singles (0.837) while sustaining high recall for charting ones (0.969), resulting in F1-scores comparable to the other models.

Code optimization is a challenging task requiring a substantial level of

expertise from developers. Nonetheless, this level of human capacity is not

sufficient considering the rapid evolution of new hardware architectures and

software environments. In light of this, recent research proposes adopting

machine learning and artificial intelligence techniques to automate the code

optimization process. In this paper, we introduce PerfRL, an innovative

framework designed to tackle the problem of code optimization. Our framework

leverages the capabilities of small language models (SLMs) and reinforcement

learning (RL), facilitating a system where SLMs can assimilate feedback from

their environment during the fine-tuning phase, notably through unit tests.

When benchmarked against existing models, PerfRL demonstrates superior

efficiency in terms of speed and computational resource usage, attributed to

its reduced need for training steps and its compatibility with SLMs.

Furthermore, it substantially diminishes the risk of logical and syntactical

errors. To evaluate our framework, we conduct experiments on the PIE dataset

using a lightweight large language model (i.e., CodeT5) and a new reinforcement

learning algorithm, namely RRHF. For evaluation purposes, we use a list of

evaluation metrics related to optimization quality and speedup. The evaluation

results show that our approach achieves similar or better results compared to

state-of-the-art models using shorter training times and smaller pre-trained

models.

The paper presents a cradle-to-gate sustainability assessment methodology specifically designed to evaluate aircraft components in a robust and systematic manner. This methodology integrates multi-criteria decision-making (MCDM) analysis across ten criteria, categorized under environmental impact, cost, and performance. Environmental impact is analyzed through life cycle assessment and cost through life cycle costing, with both analyses facilitated by SimaPro software. Performance is measured in terms of component mass and specific stiffness. The robustness of this methodology is tested through various MCDM techniques, normalization approaches, and objective weighting methods. To demonstrate the methodology, the paper assesses the sustainability of a fuselage panel, comparing nine variants that differ in materials, joining techniques, and part thicknesses. All approaches consistently identify thermoplastic CFRP panels as the most sustainable option, with the geometric mean aggregation of weights providing balanced criteria consideration across environmental, cost, and performance aspects. The adaptability of this proposed methodology is illustrated, showing its applicability to any aircraft component with the requisite data. This structured approach offers critical insights to support sustainable decision-making in aircraft component design and procurement.

Vector Quantized-Elites (VQ-Elites) is an unsupervised Quality-Diversity optimization algorithm that leverages Vector Quantized Variational Autoencoders to autonomously learn and structure behavior spaces. It consistently outperformed existing unsupervised methods, demonstrating robust adaptation to unknown task constraints and yielding higher true diversity of discovered solutions across various robotics and exploration tasks.

Flexible electronics offer unique advantages for conformable, lightweight, and disposable healthcare wearables. However, their limited gate count, large feature sizes, and high static power consumption make on-body machine learning classification highly challenging. While existing bendable RISC-V systems provide compact solutions, they lack the energy efficiency required. We present a mechanically flexible RISC-V that integrates a bespoke multiply-accumulate co-processor with fixed coefficients to maximize energy efficiency and minimize latency. Our approach formulates a constrained programming problem to jointly determine co-processor constants and optimally map Multi-Layer Perceptron (MLP) inference operations, enabling compact, model-specific hardware by leveraging the low fabrication and non-recurring engineering costs of flexible technologies. Post-layout results demonstrate near-real-time performance across several healthcare datasets, with our circuits operating within the power budget of existing flexible batteries and occupying only 2.42 mm^2, offering a promising path toward accessible, sustainable, and conformable healthcare wearables. Our microprocessors achieve an average 2.35x speedup and 2.15x lower energy consumption compared to the state of the art.

The advent of Generative AI (GenAI) in education presents a transformative approach to traditional teaching methodologies, which often overlook the diverse needs of individual students. This study introduces a GenAI tool, based on advanced natural language processing, designed as a digital assistant for educators, enabling the creation of customized lesson plans. The tool utilizes an innovative feature termed 'interactive mega-prompt,' a comprehensive query system that allows educators to input detailed classroom specifics such as student demographics, learning objectives, and preferred teaching styles. This input is then processed by the GenAI to generate tailored lesson plans. To evaluate the tool's effectiveness, a comprehensive methodology incorporating both quantitative (i.e., % of time savings) and qualitative (i.e., user satisfaction) criteria was implemented, spanning various subjects and educational levels, with continuous feedback collected from educators through a structured evaluation form. Preliminary results show that educators find the GenAI-generated lesson plans effective, significantly reducing lesson planning time and enhancing the learning experience by accommodating diverse student needs. This AI-driven approach signifies a paradigm shift in education, suggesting its potential applicability in broader educational contexts, including special education needs (SEN), where individualized attention and specific learning aids are paramount

14 Mar 2025

It is well known that alternative theories to the Standard Model allow -- and

sometimes require -- fundamental constants, such as the fine-structure

constant, α, to vary in spacetime. We demonstrate that one way to

investigate these variations is through the Mass-Radius relation of compact

astrophysical objects, which is inherently affected by α variations. We

start by considering the model of a polytropic white dwarf, which we perturb by

adding the α variations for a generic class of Grand Unified Theories.

We then extend our analysis to neutron stars, building upon the polytropic

approach to consider more realistic equations of state, discussing the impact

of such variations on mass-radius measurements in neutron stars. We present

some constraints on these models based on current data and also outline how

future observations might distinguish between extensions of the Standard Model.

31 Oct 2013

California Institute of TechnologyUniversity of VictoriaSLAC National Accelerator Laboratory

California Institute of TechnologyUniversity of VictoriaSLAC National Accelerator Laboratory Carnegie Mellon University

Carnegie Mellon University New York University

New York University University of Chicago

University of Chicago Stanford University

Stanford University Yale University

Yale University Northwestern University

Northwestern University University of Florida

University of Florida CERN

CERN Argonne National LaboratoryThe University of Alabama

Argonne National LaboratoryThe University of Alabama Stony Brook University

Stony Brook University Brookhaven National Laboratory

Brookhaven National Laboratory Rutgers UniversityLos Alamos National LaboratoryDeutsches Elektronen-Synchrotron DESYUniversity of Western AustraliaFermi National Accelerator LaboratoryUniversidad de ZaragozaUniversitat de BarcelonaUniversity of Patras

Rutgers UniversityLos Alamos National LaboratoryDeutsches Elektronen-Synchrotron DESYUniversity of Western AustraliaFermi National Accelerator LaboratoryUniversidad de ZaragozaUniversitat de BarcelonaUniversity of Patras University of VirginiaUniversity of TennesseeIstituto Nazionale di Fisica NucleareUniversity of Cape TownLawrence Livermore National LaboratoryUniversity of New HampshireJohannes Gutenberg University MainzInstitut d'Astrophysique de ParisThomas Jefferson National Accelerator FacilityLudwig Maximilians UniversityCollege of William and MaryHampton UniversityLaboratori Nazionali di Frascati dell’INFNNorth Carolina Central UniversityKasetsart UniversityUniversità di MessinaMax-Planck-Institute f ̈ur PhysikMassachusetts Inst. of TechnologyEwha UniversityPerimeter Inst. for Theoretical PhysicsMax-Planck-Institut f

ür RadioastronomieUniversit at HamburgUniversită di GenovaUniversit

at HeidelbergNorth Carolina AT State University

University of VirginiaUniversity of TennesseeIstituto Nazionale di Fisica NucleareUniversity of Cape TownLawrence Livermore National LaboratoryUniversity of New HampshireJohannes Gutenberg University MainzInstitut d'Astrophysique de ParisThomas Jefferson National Accelerator FacilityLudwig Maximilians UniversityCollege of William and MaryHampton UniversityLaboratori Nazionali di Frascati dell’INFNNorth Carolina Central UniversityKasetsart UniversityUniversità di MessinaMax-Planck-Institute f ̈ur PhysikMassachusetts Inst. of TechnologyEwha UniversityPerimeter Inst. for Theoretical PhysicsMax-Planck-Institut f

ür RadioastronomieUniversit at HamburgUniversită di GenovaUniversit

at HeidelbergNorth Carolina AT State UniversityDark sectors, consisting of new, light, weakly-coupled particles that do not

interact with the known strong, weak, or electromagnetic forces, are a

particularly compelling possibility for new physics. Nature may contain

numerous dark sectors, each with their own beautiful structure, distinct

particles, and forces. This review summarizes the physics motivation for dark

sectors and the exciting opportunities for experimental exploration. It is the

summary of the Intensity Frontier subgroup "New, Light, Weakly-coupled

Particles" of the Community Summer Study 2013 (Snowmass). We discuss axions,

which solve the strong CP problem and are an excellent dark matter candidate,

and their generalization to axion-like particles. We also review dark photons

and other dark-sector particles, including sub-GeV dark matter, which are

theoretically natural, provide for dark matter candidates or new dark matter

interactions, and could resolve outstanding puzzles in particle and

astro-particle physics. In many cases, the exploration of dark sectors can

proceed with existing facilities and comparatively modest experiments. A rich,

diverse, and low-cost experimental program has been identified that has the

potential for one or more game-changing discoveries. These physics

opportunities should be vigorously pursued in the US and elsewhere.

06 Dec 2022

The need to repeatedly shuttle around synaptic weight values from memory to processing units has been a key source of energy inefficiency associated with hardware implementation of artificial neural networks. Analog in-memory computing (AIMC) with spatially instantiated synaptic weights holds high promise to overcome this challenge, by performing matrix-vector multiplications (MVMs) directly within the network weights stored on a chip to execute an inference workload. However, to achieve end-to-end improvements in latency and energy consumption, AIMC must be combined with on-chip digital operations and communication to move towards configurations in which a full inference workload is realized entirely on-chip. Moreover, it is highly desirable to achieve high MVM and inference accuracy without application-wise re-tuning of the chip. Here, we present a multi-core AIMC chip designed and fabricated in 14-nm complementary metal-oxide-semiconductor (CMOS) technology with backend-integrated phase-change memory (PCM). The fully-integrated chip features 64 256x256 AIMC cores interconnected via an on-chip communication network. It also implements the digital activation functions and processing involved in ResNet convolutional neural networks and long short-term memory (LSTM) networks. We demonstrate near software-equivalent inference accuracy with ResNet and LSTM networks while implementing all the computations associated with the weight layers and the activation functions on-chip. The chip can achieve a maximal throughput of 63.1 TOPS at an energy efficiency of 9.76 TOPS/W for 8-bit input/output matrix-vector multiplications.

16 Sep 2024

INFN

INFN ETH Zürich

ETH Zürich CERNYork UniversityUniversity of PatrasUniversität BonnUniversit`a degli Studi di GenovaUniversidad Andres BelloUniversidad de La SerenaInstituto de Fisica CorpuscularRheinische Friedrich-Wilhelms-UniversitätJohannes Gutenberg-Universitaet MainzUniversidad T ecnica Federico Santa Mar a

CERNYork UniversityUniversity of PatrasUniversität BonnUniversit`a degli Studi di GenovaUniversidad Andres BelloUniversidad de La SerenaInstituto de Fisica CorpuscularRheinische Friedrich-Wilhelms-UniversitätJohannes Gutenberg-Universitaet MainzUniversidad T ecnica Federico Santa Mar aA search for Dark Sectors is performed using the unique M2 beam line at the

CERN Super Proton Synchrotron. New particles (X) could be produced in the

bremsstrahlung-like reaction of high energy 160 GeV muons impinging on an

active target, μN→μNX, followed by their decays,

X→invisible. The experimental signature would be a scattered

single muon from the target, with about less than half of its initial energy

and no activity in the sub-detectors located downstream the interaction point.

The full sample of the 2022 run is analyzed through the missing energy/momentum

channel, with a total statistics of (1.98±0.02)×1010 muons on

target. We demonstrate that various muon-philic scenarios involving different

types of mediators, such as scalar or vector particles, can be probed

simultaneously with such a technique. For the vector-case, besides a

Lμ−Lτ Z′ vector boson, we also consider an invisibly decaying dark

photon (A′→invisible). This search is complementary to NA64

running with electrons and positrons, thus, opening the possibility to expand

the exploration of the thermal light dark matter parameter space by combining

the results obtained with the three beams.

Cryo-electron tomography (cryo-ET) is an imaging technique that allows

three-dimensional visualization of macro-molecular assemblies under near-native

conditions. Cryo-ET comes with a number of challenges, mainly low

signal-to-noise and inability to obtain images from all angles. Computational

methods are key to analyze cryo-electron tomograms.

To promote innovation in computational methods, we generate a novel simulated

dataset to benchmark different methods of localization and classification of

biological macromolecules in tomograms. Our publicly available dataset contains

ten tomographic reconstructions of simulated cell-like volumes. Each volume

contains twelve different types of complexes, varying in size, function and

structure.

In this paper, we have evaluated seven different methods of finding and

classifying proteins. Seven research groups present results obtained with

learning-based methods and trained on the simulated dataset, as well as a

baseline template matching (TM), a traditional method widely used in cryo-ET

research. We show that learning-based approaches can achieve notably better

localization and classification performance than TM. We also experimentally

confirm that there is a negative relationship between particle size and

performance for all methods.

31 Aug 2025

The entanglement entropy of a massless scalar field in de Sitter space depends on multiple scales, such as the radius of the entangling surface, the Hubble constant and the UV cutoff. We perform a high-precision numerical calculation using a lattice model in order to determine the dependence on these scales in the Bunch-Davies vacuum. We derive the leading de Sitter corrections to the flat-space entanglement entropy for subhorizon entangling radii. We analyze the structure of the finite-size effects and we show that the contribution to the entanglement entropy of the sector of the theory with vanishing angular momentum depends logarithmically on the size of the overall system, which extends beyond the horizon.

31 May 2025

This paper presents an innovative pedagogical framework employing tangible interactive games to enhance artificial intelligence (AI) knowledge and literacy among elementary education students. Recognizing the growing importance of AI competencies in the 21st century, this study addresses the critical need for age-appropriate, experiential learning tools that demystify core AI concepts for young learners. The proposed approach integrates physical role-playing activities that embody fundamental AI principles, including neural networks, decision-making, machine learning, and pattern recognition. Through carefully designed game mechanics, students actively engage in collaborative problem solving, fostering deeper conceptual understanding and critical thinking skills. The framework further supports educators by providing detailed guidance on implementation and pedagogical objectives, thus facilitating effective AI education in early childhood settings. Empirical insights and theoretical grounding demonstrate the potential of tangible interactive games to bridge the gap between abstract AI theories and practical comprehension, ultimately promoting AI literacy at foundational educational levels. The study contributes to the growing discourse on AI education by offering scalable and adaptable strategies that align with contemporary curricular demands and prepare young learners for a technologically driven future.

There are no more papers matching your filters at the moment.